Effective Use of Tables and Figures in Research Papers

Research papers are often based on copious amounts of data that can be summarized and easily read through tables and graphs. When writing a research paper , it is important for data to be presented to the reader in a visually appealing way. The data in figures and tables, however, should not be a repetition of the data found in the text. There are many ways of presenting data in tables and figures, governed by a few simple rules. An APA research paper and MLA research paper both require tables and figures, but the rules around them are different. When writing a research paper, the importance of tables and figures cannot be underestimated. How do you know if you need a table or figure? The rule of thumb is that if you cannot present your data in one or two sentences, then you need a table .

Using Tables

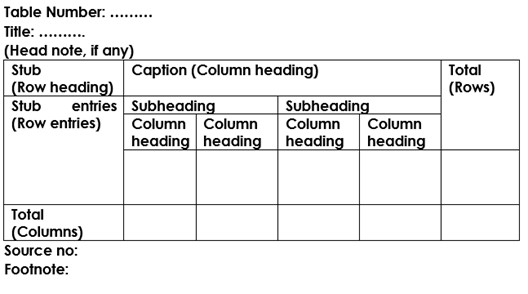

Tables are easily created using programs such as Excel. Tables and figures in scientific papers are wonderful ways of presenting data. Effective data presentation in research papers requires understanding your reader and the elements that comprise a table. Tables have several elements, including the legend, column titles, and body. As with academic writing, it is also just as important to structure tables so that readers can easily understand them. Tables that are disorganized or otherwise confusing will make the reader lose interest in your work.

- Title: Tables should have a clear, descriptive title, which functions as the “topic sentence” of the table. The titles can be lengthy or short, depending on the discipline.

- Column Titles: The goal of these title headings is to simplify the table. The reader’s attention moves from the title to the column title sequentially. A good set of column titles will allow the reader to quickly grasp what the table is about.

- Table Body: This is the main area of the table where numerical or textual data is located. Construct your table so that elements read from up to down, and not across.

Related: Done organizing your research data effectively in tables? Check out this post on tips for citing tables in your manuscript now!

The placement of figures and tables should be at the center of the page. It should be properly referenced and ordered in the number that it appears in the text. In addition, tables should be set apart from the text. Text wrapping should not be used. Sometimes, tables and figures are presented after the references in selected journals.

Using Figures

Figures can take many forms, such as bar graphs, frequency histograms, scatterplots, drawings, maps, etc. When using figures in a research paper, always think of your reader. What is the easiest figure for your reader to understand? How can you present the data in the simplest and most effective way? For instance, a photograph may be the best choice if you want your reader to understand spatial relationships.

- Figure Captions: Figures should be numbered and have descriptive titles or captions. The captions should be succinct enough to understand at the first glance. Captions are placed under the figure and are left justified.

- Image: Choose an image that is simple and easily understandable. Consider the size, resolution, and the image’s overall visual attractiveness.

- Additional Information: Illustrations in manuscripts are numbered separately from tables. Include any information that the reader needs to understand your figure, such as legends.

Common Errors in Research Papers

Effective data presentation in research papers requires understanding the common errors that make data presentation ineffective. These common mistakes include using the wrong type of figure for the data. For instance, using a scatterplot instead of a bar graph for showing levels of hydration is a mistake. Another common mistake is that some authors tend to italicize the table number. Remember, only the table title should be italicized . Another common mistake is failing to attribute the table. If the table/figure is from another source, simply put “ Note. Adapted from…” underneath the table. This should help avoid any issues with plagiarism.

Using tables and figures in research papers is essential for the paper’s readability. The reader is given a chance to understand data through visual content. When writing a research paper, these elements should be considered as part of good research writing. APA research papers, MLA research papers, and other manuscripts require visual content if the data is too complex or voluminous. The importance of tables and graphs is underscored by the main purpose of writing, and that is to be understood.

Frequently Asked Questions

"Consider the following points when creating figures for research papers: Determine purpose: Clarify the message or information to be conveyed. Choose figure type: Select the appropriate type for data representation. Prepare and organize data: Collect and arrange accurate and relevant data. Select software: Use suitable software for figure creation and editing. Design figure: Focus on clarity, labeling, and visual elements. Create the figure: Plot data or generate the figure using the chosen software. Label and annotate: Clearly identify and explain all elements in the figure. Review and revise: Verify accuracy, coherence, and alignment with the paper. Format and export: Adjust format to meet publication guidelines and export as suitable file."

"To create tables for a research paper, follow these steps: 1) Determine the purpose and information to be conveyed. 2) Plan the layout, including rows, columns, and headings. 3) Use spreadsheet software like Excel to design and format the table. 4) Input accurate data into cells, aligning it logically. 5) Include column and row headers for context. 6) Format the table for readability using consistent styles. 7) Add a descriptive title and caption to summarize and provide context. 8) Number and reference the table in the paper. 9) Review and revise for accuracy and clarity before finalizing."

"Including figures in a research paper enhances clarity and visual appeal. Follow these steps: Determine the need for figures based on data trends or to explain complex processes. Choose the right type of figure, such as graphs, charts, or images, to convey your message effectively. Create or obtain the figure, properly citing the source if needed. Number and caption each figure, providing concise and informative descriptions. Place figures logically in the paper and reference them in the text. Format and label figures clearly for better understanding. Provide detailed figure captions to aid comprehension. Cite the source for non-original figures or images. Review and revise figures for accuracy and consistency."

"Research papers use various types of tables to present data: Descriptive tables: Summarize main data characteristics, often presenting demographic information. Frequency tables: Display distribution of categorical variables, showing counts or percentages in different categories. Cross-tabulation tables: Explore relationships between categorical variables by presenting joint frequencies or percentages. Summary statistics tables: Present key statistics (mean, standard deviation, etc.) for numerical variables. Comparative tables: Compare different groups or conditions, displaying key statistics side by side. Correlation or regression tables: Display results of statistical analyses, such as coefficients and p-values. Longitudinal or time-series tables: Show data collected over multiple time points with columns for periods and rows for variables/subjects. Data matrix tables: Present raw data or matrices, common in experimental psychology or biology. Label tables clearly, include titles, and use footnotes or captions for explanations."

Enago is a very useful site. It covers nearly all topics of research writing and publishing in a simple, clear, attractive way. Though I’m a journal editor having much knowledge and training in these issues, I always find something new in this site. Thank you

“Thank You, your contents really help me :)”

Rate this article Cancel Reply

Your email address will not be published.

Enago Academy's Most Popular Articles

- Reporting Research

Explanatory & Response Variable in Statistics — A quick guide for early career researchers!

Often researchers have a difficult time choosing the parameters and variables (like explanatory and response…

- Manuscript Preparation

- Publishing Research

How to Use Creative Data Visualization Techniques for Easy Comprehension of Qualitative Research

“A picture is worth a thousand words!”—an adage used so often stands true even whilst…

- Figures & Tables

Effective Use of Statistics in Research – Methods and Tools for Data Analysis

Remember that impending feeling you get when you are asked to analyze your data! Now…

- Old Webinars

- Webinar Mobile App

SCI中稿技巧: 提升研究数据的说服力

如何寻找原创研究课题 快速定位目标文献的有效搜索策略 如何根据期刊指南准备手稿的对应部分 论文手稿语言润色实用技巧分享,快速提高论文质量

Distill: A Journal With Interactive Images for Machine Learning Research

Research is a wide and extensive field of study. This field has welcomed a plethora…

Explanatory & Response Variable in Statistics — A quick guide for early career…

How to Create and Use Gantt Charts

Sign-up to read more

Subscribe for free to get unrestricted access to all our resources on research writing and academic publishing including:

- 2000+ blog articles

- 50+ Webinars

- 10+ Expert podcasts

- 50+ Infographics

- 10+ Checklists

- Research Guides

We hate spam too. We promise to protect your privacy and never spam you.

I am looking for Editing/ Proofreading services for my manuscript Tentative date of next journal submission:

What should universities' stance be on AI tools in research and academic writing?

- Manuscript Preparation

How to Use Tables and Figures effectively in Research Papers

- 3 minute read

- 42.9K views

Table of Contents

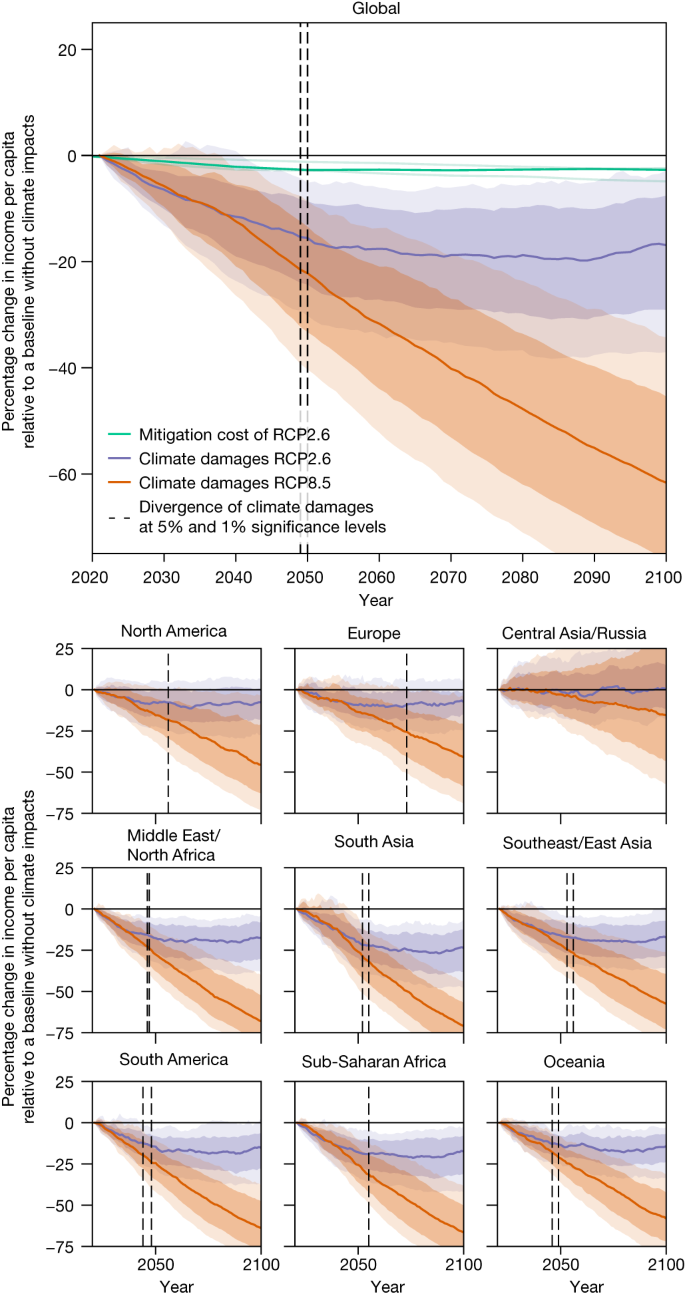

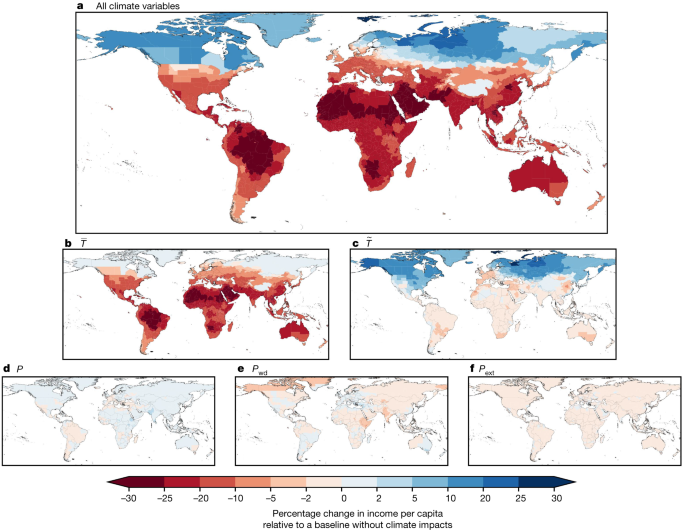

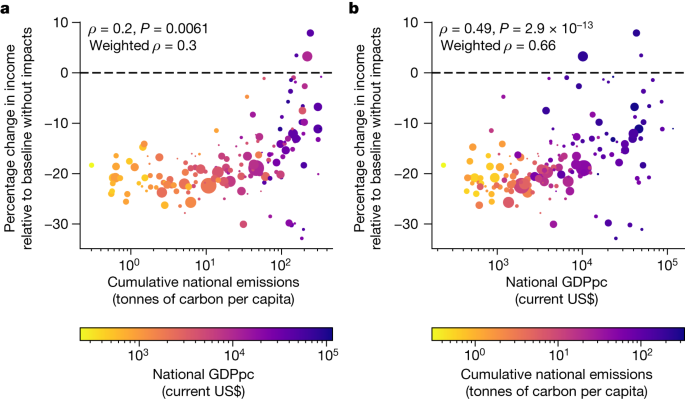

Data is the most important component of any research. It needs to be presented effectively in a paper to ensure that readers understand the key message in the paper. Figures and tables act as concise tools for clear presentation . Tables display information arranged in rows and columns in a grid-like format, while figures convey information visually, and take the form of a graph, diagram, chart, or image. Be it to compare the rise and fall of GDPs among countries over the years or to understand how COVID-19 has impacted incomes all over the world, tables and figures are imperative to convey vital findings accurately.

So, what are some of the best practices to follow when creating meaningful and attractive tables and figures? Here are some tips on how best to present tables and figures in a research paper.

Guidelines for including tables and figures meaningfully in a paper:

- Self-explanatory display items: Sometimes, readers, reviewers and journal editors directly go to the tables and figures before reading the entire text. So, the tables need to be well organized and self-explanatory.

- Avoidance of repetition: Tables and figures add clarity to the research. They complement the research text and draw attention to key points. They can be used to highlight the main points of the paper, but values should not be repeated as it defeats the very purpose of these elements.

- Consistency: There should be consistency in the values and figures in the tables and figures and the main text of the research paper.

- Informative titles: Titles should be concise and describe the purpose and content of the table. It should draw the reader’s attention towards the key findings of the research. Column heads, axis labels, figure labels, etc., should also be appropriately labelled.

- Adherence to journal guidelines: It is important to follow the instructions given in the target journal regarding the preparation and presentation of figures and tables, style of numbering, titles, image resolution, file formats, etc.

Now that we know how to go about including tables and figures in the manuscript, let’s take a look at what makes tables and figures stand out and create impact.

How to present data in a table?

For effective and concise presentation of data in a table, make sure to:

- Combine repetitive tables: If the tables have similar content, they should be organized into one.

- Divide the data: If there are large amounts of information, the data should be divided into categories for more clarity and better presentation. It is necessary to clearly demarcate the categories into well-structured columns and sub-columns.

- Keep only relevant data: The tables should not look cluttered. Ensure enough spacing.

Example of table presentation in a research paper

For comprehensible and engaging presentation of figures:

- Ensure clarity: All the parts of the figure should be clear. Ensure the use of a standard font, legible labels, and sharp images.

- Use appropriate legends: They make figures effective and draw attention towards the key message.

- Make it precise: There should be correct use of scale bars in images and maps, appropriate units wherever required, and adequate labels and legends.

It is important to get tables and figures correct and precise for your research paper to convey your findings accurately and clearly. If you are confused about how to suitably present your data through tables and figures, do not worry. Elsevier Author Services are well-equipped to guide you through every step to ensure that your manuscript is of top-notch quality.

- Research Process

What is a Problem Statement? [with examples]

What is the Background of a Study and How Should it be Written?

You may also like.

Make Hook, Line, and Sinker: The Art of Crafting Engaging Introductions

Can Describing Study Limitations Improve the Quality of Your Paper?

A Guide to Crafting Shorter, Impactful Sentences in Academic Writing

6 Steps to Write an Excellent Discussion in Your Manuscript

How to Write Clear and Crisp Civil Engineering Papers? Here are 5 Key Tips to Consider

The Clear Path to An Impactful Paper: ②

The Essentials of Writing to Communicate Research in Medicine

Changing Lines: Sentence Patterns in Academic Writing

Input your search keywords and press Enter.

How to Present Results in a Research Paper

- First Online: 01 October 2023

Cite this chapter

- Aparna Mukherjee 4 ,

- Gunjan Kumar 4 &

- Rakesh Lodha 5

689 Accesses

The results section is the core of a research manuscript where the study data and analyses are presented in an organized, uncluttered manner such that the reader can easily understand and interpret the findings. This section is completely factual; there is no place for opinions or explanations from the authors. The results should correspond to the objectives of the study in an orderly manner. Self-explanatory tables and figures add value to this section and make data presentation more convenient and appealing. The results presented in this section should have a link with both the preceding methods section and the following discussion section. A well-written, articulate results section lends clarity and credibility to the research paper and the study as a whole. This chapter provides an overview and important pointers to effective drafting of the results section in a research manuscript and also in theses.

This is a preview of subscription content, log in via an institution to check access.

Access this chapter

- Available as PDF

- Read on any device

- Instant download

- Own it forever

- Available as EPUB and PDF

- Durable hardcover edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

Tax calculation will be finalised at checkout

Purchases are for personal use only

Institutional subscriptions

Kallestinova ED (2011) How to write your first research paper. Yale J Biol Med 84(3):181–190

PubMed PubMed Central Google Scholar

STROBE. STROBE. [cited 2022 Nov 10]. https://www.strobe-statement.org/

Consort—Welcome to the CONSORT Website. http://www.consort-statement.org/ . Accessed 10 Nov 2022

Korevaar DA, Cohen JF, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou PP et al (2016) Updating standards for reporting diagnostic accuracy: the development of STARD 2015. Res Integr Peer Rev 1(1):7

Article PubMed PubMed Central Google Scholar

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD et al (2021) The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372:n71

Page MJ, Moher D, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD et al (2021) PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews. BMJ 372:n160

Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups | EQUATOR Network. https://www.equator-network.org/reporting-guidelines/coreq/ . Accessed 10 Nov 2022

Aggarwal R, Sahni P (2015) The results section. In: Aggarwal R, Sahni P (eds) Reporting and publishing research in the biomedical sciences, 1st edn. National Medical Journal of India, Delhi, pp 24–44

Google Scholar

Mukherjee A, Lodha R (2016) Writing the results. Indian Pediatr 53(5):409–415

Article PubMed Google Scholar

Lodha R, Randev S, Kabra SK (2016) Oral antibiotics for community acquired pneumonia with chest indrawing in children aged below five years: a systematic review. Indian Pediatr 53(6):489–495

Anderson C (2010) Presenting and evaluating qualitative research. Am J Pharm Educ 74(8):141

Roberts C, Kumar K, Finn G (2020) Navigating the qualitative manuscript writing process: some tips for authors and reviewers. BMC Med Educ 20:439

Bigby C (2015) Preparing manuscripts that report qualitative research: avoiding common pitfalls and illegitimate questions. Aust Soc Work 68(3):384–391

Article Google Scholar

Vincent BP, Kumar G, Parameswaran S, Kar SS (2019) Barriers and suggestions towards deceased organ donation in a government tertiary care teaching hospital: qualitative study using socio-ecological model framework. Indian J Transplant 13(3):194

McCormick JB, Hopkins MA (2021) Exploring public concerns for sharing and governance of personal health information: a focus group study. JAMIA Open 4(4):ooab098

Groenland -emeritus professor E. Employing the matrix method as a tool for the analysis of qualitative research data in the business domain. Rochester, NY; 2014. https://papers.ssrn.com/abstract=2495330 . Accessed 10 Nov 2022

Download references

Acknowledgments

The book chapter is derived in part from our article “Mukherjee A, Lodha R. Writing the Results. Indian Pediatr. 2016 May 8;53(5):409-15.” We thank the Editor-in-Chief of the journal “Indian Pediatrics” for the permission for the same.

Author information

Authors and affiliations.

Clinical Studies, Trials and Projection Unit, Indian Council of Medical Research, New Delhi, India

Aparna Mukherjee & Gunjan Kumar

Department of Pediatrics, All India Institute of Medical Sciences, New Delhi, India

Rakesh Lodha

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Rakesh Lodha .

Editor information

Editors and affiliations.

Retired Senior Expert Pharmacologist at the Office of Cardiology, Hematology, Endocrinology, and Nephrology, Center for Drug Evaluation and Research, US Food and Drug Administration, Silver Spring, MD, USA

Gowraganahalli Jagadeesh

Professor & Director, Research Training and Publications, The Office of Research and Development, Periyar Maniammai Institute of Science & Technology (Deemed to be University), Vallam, Tamil Nadu, India

Pitchai Balakumar

Division Cardiology & Nephrology, Office of Cardiology, Hematology, Endocrinology and Nephrology, Center for Drug Evaluation and Research, US Food and Drug Administration, Silver Spring, MD, USA

Fortunato Senatore

Ethics declarations

Rights and permissions.

Reprints and permissions

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Mukherjee, A., Kumar, G., Lodha, R. (2023). How to Present Results in a Research Paper. In: Jagadeesh, G., Balakumar, P., Senatore, F. (eds) The Quintessence of Basic and Clinical Research and Scientific Publishing. Springer, Singapore. https://doi.org/10.1007/978-981-99-1284-1_44

Download citation

DOI : https://doi.org/10.1007/978-981-99-1284-1_44

Published : 01 October 2023

Publisher Name : Springer, Singapore

Print ISBN : 978-981-99-1283-4

Online ISBN : 978-981-99-1284-1

eBook Packages : Biomedical and Life Sciences Biomedical and Life Sciences (R0)

Share this chapter

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Publish with us

Policies and ethics

- Find a journal

- Track your research

Get science-backed answers as you write with Paperpal's Research feature

How to Present Data and Statistics in Your Research Paper: Language Matters

Statistics is an inexact science as it is based on probabilities rather than certainties. However, the language used to present data and statistics in your thesis or research paper needs to be accurate to avoid misunderstandings when your work is read by others. If the written descriptions of your data and statistics are not clear and accurate, experienced researchers may lose confidence in your entire study and dismiss your results, no matter how compelling they may be.

The presentation of data in research and effective communication of statistical results requires writers to be very careful in their word choices. You must be confident that you understand the analysis you performed and the meaning of the results to really know how to present the data and statistics in your research paper effectively. Here are some terms and concepts that are often misused and may be confusing to early career researchers.

Averages, the measures of the central tendency of a dataset, can be calculated in several different ways. The word “average” in non-scholarly writings typically refers to the arithmetic mean. However, the median and mode are two other frequently used measures. In your research paper, it is critical to state exactly what measure you are using. Therefore, don’t report an average but a mean, median, or mode.

Percentages

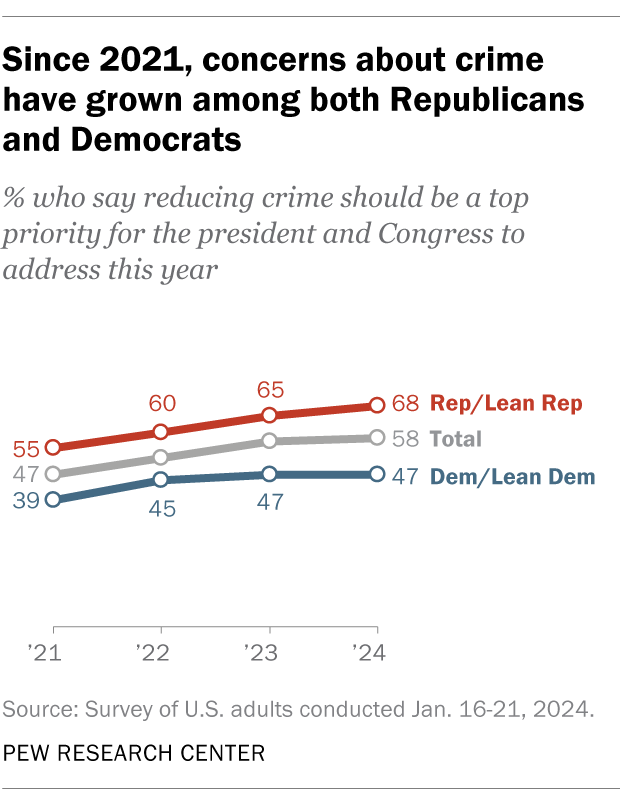

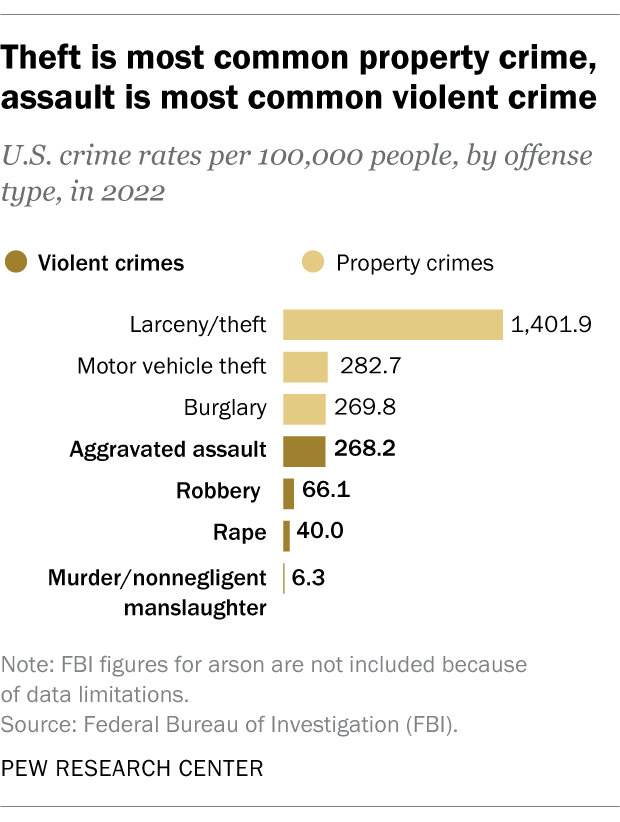

Percentages are commonly used in presentations of data in research. They can indicate concentrations, probabilities, or comparisons, and they are frequently used to report changes in values. For example, the annual crime rate increased by 25%. However, unless you have a basis for this number, it’s difficult to judge the meaningfulness of this increase 1 . Did the number of crimes increase from 4 incidents to 5 or from 4,000 incidents to 5,000? Be sure to include enough information for the reader to understand the context.

In addition, when used for comparison, make sure your comparison is complete. For instance, if the temperature was 17% higher in 2022, be sure to include that it was 17% higher than the temperature in 2017.

Descriptive vs. inferential statistics

Descriptive statistics deal with populations, while inferential statistics deal with samples. A population is a group of objects or measurements that includes all possible instances, and a sample is a subset of that population. For example, you measure the mass of all the 1.1 kg jars of peanut butter at your favorite grocery store and report the mean and standard deviation. These are descriptive statistics for this population of peanut butter jars. However, if you then say that this is the mean of all such jars of peanut butter produced, you are engaging in inferential statistics because you now have measured only a sample of jars. You are inferring a characteristic of a population based on a sample. Inferential statistics are usually reported with a margin of error or confidence interval, such as 1.1 ± .02 kg.

A hypothesis is a testable statement about the relationship between two or more groups or variables that forms the basis of the scientific method. The appropriate language around the topic of hypotheses and hypothesis testing can be confusing for even seasoned researchers.

The alternative hypothesis is generally the researcher’s prediction for the study, and the null hypothesis is the negation of the alternative hypothesis. The aim of the study is to find evidence to reject the null hypothesis, which supports the truth of the alternative hypothesis.

When writing up the results of your hypothesis test, it is important to understand exactly what the results mean. Remember, hypothesis testing can never “prove” anything – it merely provides evidence for either rejecting or not rejecting the null hypothesis. Also, be careful that you don’t overgeneralize the meaning of the results. Just because you find evidence that the null hypothesis can be rejected in this case does not mean the same is true under all conditions.

Tips for effectively presenting statistics in academic writing

Presenting your data and statistical results can be very challenging. For researchers without extensive experience or statistical training, writing this part of the study report can be especially daunting. Here are some things to keep in mind when presenting your data and statistical results 1 .

- If you don’t completely understand a statistical procedure, do not attempt to write it up without guidance from an expert. This is the most important thing you can do.

- Keep your audience in mind. When you present your data and statistical results, think about how familiar your readers may be with the analysis and include the amount of detail needed for them to be comfortable 2 .

- Use tables and graphics to illustrate your results more clearly and make your writing more understandable.

We hope the points above help answer the question of how to present data and statistics in your research paper correctly. All the best!

- The University of North Carolina at Chapel Hill Writing Center. Statistics. https://writingcenter.unc.edu/tips-and-tools/statistics/ [Accessed October 10, 2022]

- Purdue University Online Writing Lab. Writing with statistics. https://owl.purdue.edu/owl/research_and_citation/using_research/writing_with_statistics/index.html [Accessed October 10, 2022]

Paperpal is a comprehensive AI writing toolkit that helps students and researchers achieve 2x the writing in half the time. It leverages 21+ years of STM experience and insights from millions of research articles to provide in-depth academic writing, language editing, and submission readiness support to help you write better, faster.

Get accurate academic translations, rewriting support, grammar checks, vocabulary suggestions, and generative AI assistance that delivers human precision at machine speed. Try for free or upgrade to Paperpal Prime starting at US$19 a month to access premium features, including consistency, plagiarism, and 30+ submission readiness checks to help you succeed.

Experience the future of academic writing – Sign up to Paperpal and start writing for free!

Related Reads:

- How to Write a Research Paper Outline: Simple Steps for Researchers

- Manuscript Withdrawal: Reasons, Consequences, and How to Withdraw Submitted Manuscripts

- Supplementary Materials in Research: 5 Tips for Authors

- What is an Expository Essay and How to Write It

Confusing Elements of a Research Paper That Trip Up Most Academics

How to fix sentence fragments in your writing , you may also like, measuring academic success: definition & strategies for excellence, phd qualifying exam: tips for success , ai in education: it’s time to change the..., is it ethical to use ai-generated abstracts without..., what are journal guidelines on using generative ai..., should you use ai tools like chatgpt for..., 9 steps to publish a research paper, how to make translating academic papers less challenging, self-plagiarism in research: what it is and how..., 6 tips for post-doc researchers to take their....

How To Present Data Successfully in Academic & Scientific Research

How To Present Data Successfully in Academic and Scientific Research The main point of most academic and scientific writing is to report the findings of advanced research. Doing so necessarily involves the successful presentation of research data, but communicating data can be surprisingly challenging, even when the study is a small one and the results are relatively straightforward. For large or collaborative projects that generate enormous and complicated data sets, the task can be truly daunting. Clarity is essential, as are accuracy and precision, and a style that is as concise as possible yet also conveys all the information necessary for readers to assess and understand the findings is required. Choosing appropriate formats for organising and presenting data is an essential aspect of reporting research results effectively. Data can be presented in running text, in framed boxes, in lists, in tables or in figures, with each of these having a marked effect not only on how readers perceive and understand the research results, but also on how authors analyse and interpret those results in the first place. Making the right choice for each piece of information can be among the most difficult aspects of deciding how to present data in research papers and other documents. Text is the primary format for reporting research to an academic or scientific community as well as other readers. Running text is used to relate the overall story of a research project, from introductory and background material to final conclusions and implications, so text will play a central role in presenting data in the section of a research document dedicated to results or findings. The main body of text will be particularly useful for conveying information about the research findings that is relatively straightforward and neither too complex nor too convoluted. For example, comparative presentations of the discoveries about two historical objects or the results associated with two groups of participants may prove effective in the running text of a paper, but if comparison of five or ten objects or groups is necessary, one of the more visual formats described below will usually convey the information to readers more quickly and more successfully. Text is also the right format for explaining and interpreting research data that is presented in more visual forms, such as the tables and figures discussed below. Regardless of content, the text in an academic or scientific document intended for publication or degree credit should always be written in a formal and authoritative style in keeping with the standards and conventions of the relevant discipline(s). Careful proofreading are also necessary to remove all errors and inappropriate inconsistencies in data, grammar, spelling, punctuation and paragraph structure in order to ensure clear and precise presentation of research data. PhD Thesis Editing Services It is important to remember when considering how to present data in research that text can itself be offered in a more visual format than the normal running sentences and paragraphs in the main body of a document. The headings and subheadings within an academic or scientific paper or report are a simple example: spacing as well as font style and size make these short bits of text stand out and provide a clear structure and logical transitions for presenting data in an accessible fashion. Effective headings guide readers successfully through long and complex reports of research findings, and they also divide the presentation of data according to chronology, research methods, thematic categories or other organisational principles, rendering the information more comprehensible. Longer chunks of textual material that offer necessary or helpful information for the reader, such as examples of key findings, summaries of case studies, descriptions of data analysis or insightful authorial reflections on results can be separated from the main text and framed in a box to attract the attention of readers. The font in such boxes might be slightly different than that in the running text and the background may be shaded, perhaps with colour if the publication allows it, but neither is necessary to achieve the meaningful and lasting impact that makes framed boxes so common in textbooks and other publications intended for an audience of learners. Indeed, using chunks of text in this visual way can even increase the use of a document and the number of times it is cited. Lists of research data can have a similar effect whether they are framed or simply laid out down a normal page, but a parallel grammatical structure should always be used for all the items in a list, and accuracy is paramount because readers are likely to return to lists as well as framed boxes to refresh their memories about important data. Tables tend to be the format of choice for presenting data in research documents. The experimental results of quantitative research are often collected and analysed as well as shared with readers in carefully designed tables that offer a column and row structure to enable efficient presentation, consultation, comparison and evaluation of precise data or data sets. Numerical information fills most tables, so authors should take extra care to specify units of measure, round off long numbers, limit decimal places and otherwise make the data clear, consistent and useful and the table as a whole effective and uncluttered. The information must be grouped and arranged in the columns and rows in such a way that reading down from top to bottom and across from left to right to compare, contrast and establish relationships is an easy and intuitive process. Textual data can also be presented in a table, which might alternately be referred to as a matrix, particularly in qualitative as opposed to quantitative research. Like tables, matrices are useful for presenting and comparing data about two or more variables or concepts of relevance. Whether table or matrix, this kind of visual display of tabulated information requires a concise title or heading that usually appears at the top of the table, describes the purpose or content of the table and directs readers to whatever the author wants them to observe. Columns and rows within tables also require clear headings, and footnotes at the bottom of the table (and usually in a smaller font than the rest of the table) should define any nonstandard abbreviations, unusual symbols, specialised terms or other potentially confusing elements so that the table can function meaningfully on its own without the reader referring to the main text. If aspects of a table or matrix have been borrowed from a published source, that source must be acknowledged, usually in the table footnotes or sometimes in its heading. When a document contains more than one table or matrix, a consistent format and style across all of them is advisable, and they should also be numbered according to the order in which they are first mentioned in the main text (e.g., ‘Table 1,’ ‘Table 2’ etc.) . These numbers can then be used, alone or along with the relevant titles, to refer readers to the tables or matrices at appropriate points in the main text. PhD Thesis Editing Services Figures are also frequently used tools for presenting data in research, and they too should be numbered according to the order in which they are mentioned in the main body of a paper or other document. They are usually distinguished from tables (e.g., ‘Figure 1,’ ‘Figure 2’ etc.) and since several different kinds of figures are used in academic and scientific writing, the figures might also be divided into separate groups for numbering (e.g., ‘Chart 1,’ ‘Map 1’ etc.). The type of figure used to present a specific kind or cluster of research data will depend on both the nature of the data and the way in which the author is using the data to address research problems or questions. Bar charts or bar graphs are particularly common for revealing patterns and trends in research variables and they are especially effective when presenting discrete data in groups or categories for comparison and assessment. Line graphs or line charts also reveal trends and patterns and can successfully represent the changing values of several continuous variables over time, highlighting significant changes and turning points and enabling effective comparison. These types of visual displays can be combined in a single figure using both bars and lines along with careful shading and colour to expand comparisons among variables or categories and save valuable space in a document. Each figure in a research document should be given a concise but descriptive caption or heading, which might appear just above or just beneath the figure. The footnotes that explain elements of a table or matrix usually do not feature in a figure, but a figure legend can be used to define abbreviations, explain symbols, acknowledge sources (though that sometimes appears in the caption instead) and clarify any aspect of a figure for readers. Consistency in formatting and style across all the figures used in a research document is desirable, particularly when it comes to key features for understanding the information such as the x and y axes and scale bars in charts and graphs, but if different types of figures are used, each type may have its own format or style. Clear labelling should be provided for all important or potentially confusing parts of a figure (for those axes and scale bars, for instance) and if a photograph is used to illustrate or present data, consent must be obtained from any participants who appear in the photo and identities should usually be obscured. When a list, table or figure used for presenting data proves to be particularly large or complicated, it is often better to divide it into two or more lists, tables or figures in order to simplify and clarify the intended messages or purposes. This will be especially important for deciding how to present data in research when speaking to listeners instead of writing for readers. Lists, tables and figures offered via slides as a presenter speaks are viewed by the audience for a very short period of time, so simple is best, but longer lists, tables and figures can be distributed as handouts if necessary. When presenting data in research-based writing, the inclusion of even extremely complex lists, tables or figures may be acceptable, but keeping the needs of readers in mind is imperative and so is observing the relevant instructions or guidelines. Course instructors will often have specific requirements that must be met by students, university departments will usually offer formatting specifications for theses and dissertations, and scholarly journals will always have some kind of author guidelines that must be followed. There may be limits on the number of tables and figures allowed, specific requirements for the use of lines or rules within tables, or detailed instructions for ensuring appropriate resolution in photographs. PhD Thesis Editing Services All such requirements must be met, but many academic and scientific journals permit authors to submit appendices or supplementary materials with a manuscript, offering an opportunity to include, for instance, a detailed table of precise research data as an appendix or supplementary document while using simpler graphs in the paper itself to show important trends that are discussed and interpreted by the author. Relegating detailed data to supplementary files can also help with shortening a manuscript, and proofreaders, reviewers and researchers will appreciate the extra data while general readers will be able to encounter the main argument of the paper without the distraction that too much information might introduce. You may even want to include a list of tables, figures or both to draw attention to the presence of those elements whether the guidelines indicate the need for such a list or not. Do be sure, however, to exercise consistency with any information repeated across different formats, including supplementary materials (the same terminology should be used for an important concept or group, for instance, every time it is mentioned), and remember to use the specified file formats for those supplementary materials as well as for the tables and figures in the main document. Along with the relevant guidelines, authors should be prepared to take a close look at successful models of how other authors have presented research data. A published research article with especially clear and effective tables and figures will prove helpful if you are preparing a manuscript for submission to the journal that published that research article. A successful thesis recently written by a candidate in your university department who made particularly good use of lists and matrices will provide creative ideas as you design your own thesis. A conference presentation with excellent slides that significantly increased the impact of the spoken message might effectively be emulated as you plan your own presentation. Whatever sort of research document you are writing, it is always essential to plan carefully and give the formats in which you will present your research data considerable thought before you begin writing in order to avoid overlaps, repetitions and wasted time. You may also find that organising data into clear and appealing formats such as tables and charts will reveal or highlight details and patterns that you had not detected or considered important when assessing the raw data from your research. Using a variety of formats throughout a study and ensuring that the best format is chosen and designed for each bit of information as you determine how to present data in research documents means creating an effective comprehension tool not only for your readers, but also for yourself as you draft, revise and perfect your work.

Why Our Editing and Proofreading Services? At Proof-Reading-Service.com we offer the highest quality journal article editing , phd thesis editing and proofreading services via our large and extremely dedicated team of academic and scientific professionals. All of our proofreaders are native speakers of English who have earned their own postgraduate degrees, and their areas of specialisation cover such a wide range of disciplines that we are able to help our international clientele with research editing to improve and perfect all kinds of academic manuscripts for successful publication. Many of the carefully trained members of our expert editing and proofreading team work predominantly on articles intended for publication in scholarly journals, applying painstaking journal editing standards to ensure that the references and formatting used in each paper are in conformity with the journal’s instructions for authors and to correct any grammar, spelling, punctuation or simple typing errors. In this way, we enable our clients to report their research in the clear and accurate ways required to impress acquisitions proofreaders and achieve publication.

Our scientific proofreading services for the authors of a wide variety of scientific journal papers are especially popular, but we also offer manuscript proofreading services and have the experience and expertise to proofread and edit manuscripts in all scholarly disciplines, as well as beyond them. We have team members who specialise in medical proofreading services , and some of our experts dedicate their time exclusively to PhD proofreading and master’s proofreading , offering research students the opportunity to improve their use of formatting and language through the most exacting PhD thesis editing and dissertation proofreading practices. Whether you are preparing a conference paper for presentation, polishing a progress report to share with colleagues, or facing the daunting task of editing and perfecting any kind of scholarly document for publication, a qualified member of our professional team can provide invaluable assistance and give you greater confidence in your written work.

If you are in the process of preparing an article for an academic or scientific journal, or planning one for the near future, you may well be interested in a new book, Guide to Journal Publication , which is available on our Tips and Advice on Publishing Research in Journals website.

Guide to Academic and Scientific Publication

How to get your writing published in scholarly journals.

It provides practical advice on planning, preparing and submitting articles for publication in scholarly journals.

PhD Success

How to write a doctoral thesis.

If you are in the process of preparing a PhD thesis for submission, or planning one for the near future, you may well be interested in the book, How to Write a Doctoral Thesis , which is available on our thesis proofreading website.

PhD Success: How to Write a Doctoral Thesis provides guidance for students familiar with English and the procedures of English universities, but it also acknowledges that many theses in the English language are now written by candidates whose first language is not English, so it carefully explains the scholarly styles, conventions and standards expected of a successful doctoral thesis in the English language.

Why Is Proofreading Important?

To improve the quality of papers.

Effective proofreading is absolutely vital to the production of high-quality scholarly and professional documents. When done carefully, correctly and thoroughly, proofreading can make the difference between writing that communicates successfully with its intended readers and writing that does not. No author creates a perfect text without reviewing, reflecting on and revising what he or she has written, and proofreading is an extremely important part of this process.

Princeton Correspondents on Undergraduate Research

How to Make a Successful Research Presentation

Turning a research paper into a visual presentation is difficult; there are pitfalls, and navigating the path to a brief, informative presentation takes time and practice. As a TA for GEO/WRI 201: Methods in Data Analysis & Scientific Writing this past fall, I saw how this process works from an instructor’s standpoint. I’ve presented my own research before, but helping others present theirs taught me a bit more about the process. Here are some tips I learned that may help you with your next research presentation:

More is more

In general, your presentation will always benefit from more practice, more feedback, and more revision. By practicing in front of friends, you can get comfortable with presenting your work while receiving feedback. It is hard to know how to revise your presentation if you never practice. If you are presenting to a general audience, getting feedback from someone outside of your discipline is crucial. Terms and ideas that seem intuitive to you may be completely foreign to someone else, and your well-crafted presentation could fall flat.

Less is more

Limit the scope of your presentation, the number of slides, and the text on each slide. In my experience, text works well for organizing slides, orienting the audience to key terms, and annotating important figures–not for explaining complex ideas. Having fewer slides is usually better as well. In general, about one slide per minute of presentation is an appropriate budget. Too many slides is usually a sign that your topic is too broad.

Limit the scope of your presentation

Don’t present your paper. Presentations are usually around 10 min long. You will not have time to explain all of the research you did in a semester (or a year!) in such a short span of time. Instead, focus on the highlight(s). Identify a single compelling research question which your work addressed, and craft a succinct but complete narrative around it.

You will not have time to explain all of the research you did. Instead, focus on the highlights. Identify a single compelling research question which your work addressed, and craft a succinct but complete narrative around it.

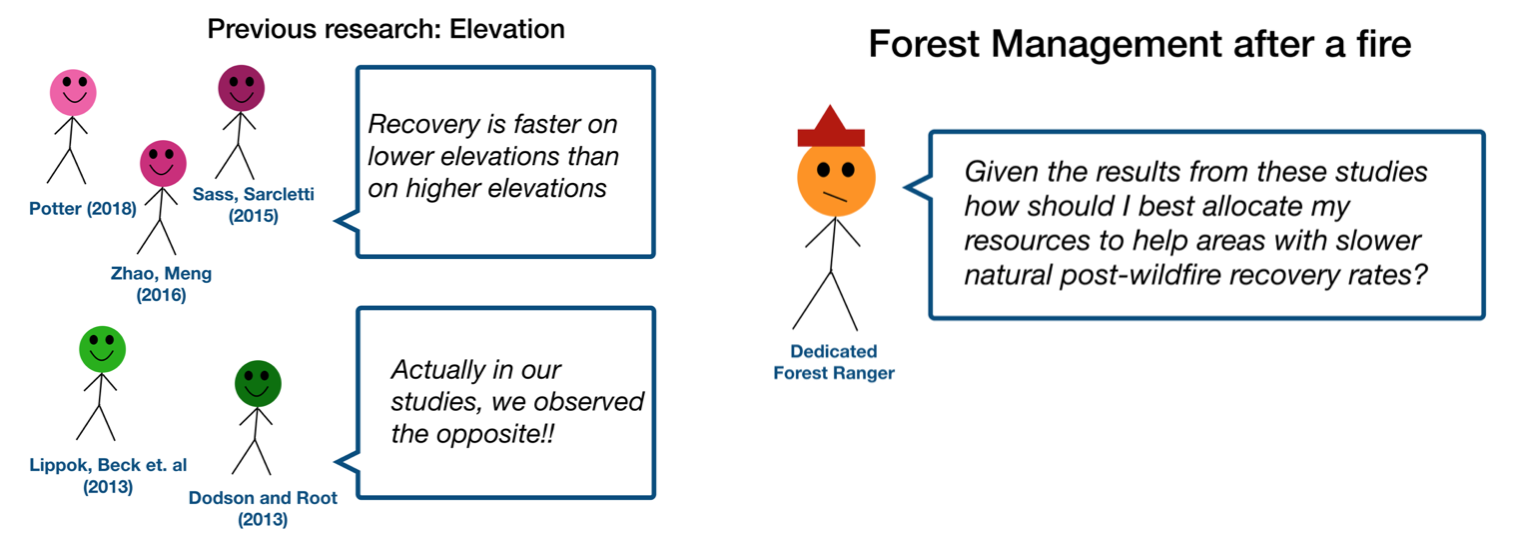

Craft a compelling research narrative

After identifying the focused research question, walk your audience through your research as if it were a story. Presentations with strong narrative arcs are clear, captivating, and compelling.

- Introduction (exposition — rising action)

Orient the audience and draw them in by demonstrating the relevance and importance of your research story with strong global motive. Provide them with the necessary vocabulary and background knowledge to understand the plot of your story. Introduce the key studies (characters) relevant in your story and build tension and conflict with scholarly and data motive. By the end of your introduction, your audience should clearly understand your research question and be dying to know how you resolve the tension built through motive.

- Methods (rising action)

The methods section should transition smoothly and logically from the introduction. Beware of presenting your methods in a boring, arc-killing, ‘this is what I did.’ Focus on the details that set your story apart from the stories other people have already told. Keep the audience interested by clearly motivating your decisions based on your original research question or the tension built in your introduction.

- Results (climax)

Less is usually more here. Only present results which are clearly related to the focused research question you are presenting. Make sure you explain the results clearly so that your audience understands what your research found. This is the peak of tension in your narrative arc, so don’t undercut it by quickly clicking through to your discussion.

- Discussion (falling action)

By now your audience should be dying for a satisfying resolution. Here is where you contextualize your results and begin resolving the tension between past research. Be thorough. If you have too many conflicts left unresolved, or you don’t have enough time to present all of the resolutions, you probably need to further narrow the scope of your presentation.

- Conclusion (denouement)

Return back to your initial research question and motive, resolving any final conflicts and tying up loose ends. Leave the audience with a clear resolution of your focus research question, and use unresolved tension to set up potential sequels (i.e. further research).

Use your medium to enhance the narrative

Visual presentations should be dominated by clear, intentional graphics. Subtle animation in key moments (usually during the results or discussion) can add drama to the narrative arc and make conflict resolutions more satisfying. You are narrating a story written in images, videos, cartoons, and graphs. While your paper is mostly text, with graphics to highlight crucial points, your slides should be the opposite. Adapting to the new medium may require you to create or acquire far more graphics than you included in your paper, but it is necessary to create an engaging presentation.

The most important thing you can do for your presentation is to practice and revise. Bother your friends, your roommates, TAs–anybody who will sit down and listen to your work. Beyond that, think about presentations you have found compelling and try to incorporate some of those elements into your own. Remember you want your work to be comprehensible; you aren’t creating experts in 10 minutes. Above all, try to stay passionate about what you did and why. You put the time in, so show your audience that it’s worth it.

For more insight into research presentations, check out these past PCUR posts written by Emma and Ellie .

— Alec Getraer, Natural Sciences Correspondent

Share this:

- Share on Tumblr

The Ultimate Guide to Qualitative Research - Part 3: Presenting Qualitative Data

- Introduction

How do you present qualitative data?

Data visualization.

- Research paper writing

- Transparency and rigor in research

- How to publish a research paper

Table of contents

- Transparency and rigor

Navigate to other guide parts:

Part 1: The Basics or Part 2: Handling Qualitative Data

- Presenting qualitative data

In the end, presenting qualitative research findings is just as important a skill as mastery of qualitative research methods for the data collection and data analysis process . Simply uncovering insights is insufficient to the research process; presenting a qualitative analysis holds the challenge of persuading your audience of the value of your research. As a result, it's worth spending some time considering how best to report your research to facilitate its contribution to scientific knowledge.

When it comes to research, presenting data in a meaningful and accessible way is as important as gathering it. This is particularly true for qualitative research , where the richness and complexity of the data demand careful and thoughtful presentation. Poorly written research is taken less seriously and left undiscussed by the greater scholarly community; quality research reporting that persuades its audience stands a greater chance of being incorporated in discussions of scientific knowledge.

Qualitative data presentation differs fundamentally from that found in quantitative research. While quantitative data tend to be numerical and easily lend themselves to statistical analysis and graphical representation, qualitative data are often textual and unstructured, requiring an interpretive approach to bring out their inherent meanings. Regardless of the methodological approach , the ultimate goal of data presentation is to communicate research findings effectively to an audience so they can incorporate the generated knowledge into their research inquiry.

As the section on research rigor will suggest, an effective presentation of your research depends on a thorough scientific process that organizes raw data into a structure that allows for a thorough analysis for scientific understanding.

Preparing the data

The first step in presenting qualitative data is preparing the data. This preparation process often begins with cleaning and organizing the data. Cleaning involves checking the data for accuracy and completeness, removing any irrelevant information, and making corrections as needed. Organizing the data often entails arranging the data into categories or groups that make sense for your research framework.

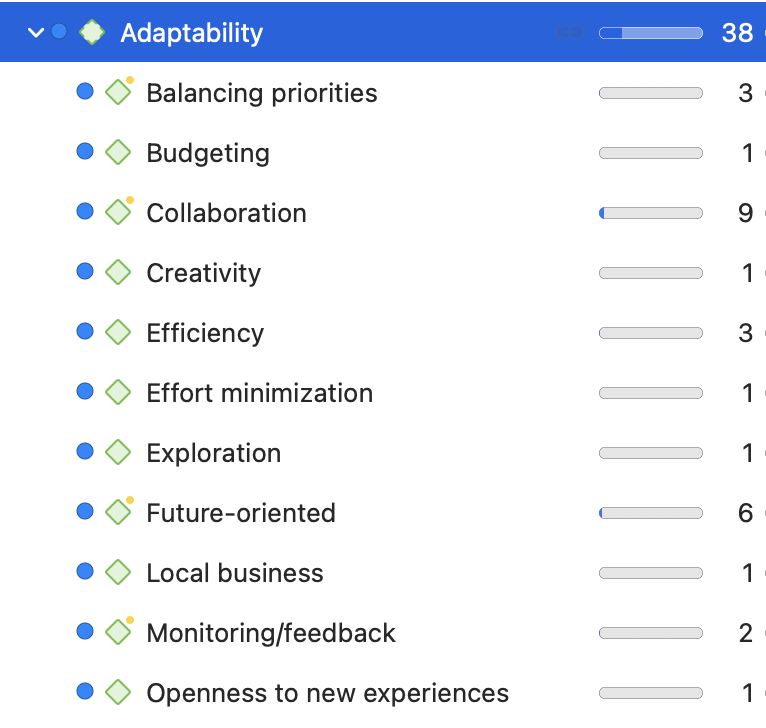

Coding the data

Once the data are cleaned and organized, the next step is coding , a crucial part of qualitative data analysis. Coding involves assigning labels to segments of the data to summarize or categorize them. This process helps to identify patterns and themes in the data, laying the groundwork for subsequent data interpretation and presentation. Qualitative research often involves multiple iterations of coding, creating new and meaningful codes while discarding unnecessary ones , to generate a rich structure through which data analysis can occur.

Uncovering insights

As you navigate through these initial steps, keep in mind the broader aim of qualitative research, which is to provide rich, detailed, and nuanced understandings of people's experiences, behaviors, and social realities. These guiding principles will help to ensure that your data presentation is not only accurate and comprehensive but also meaningful and impactful.

While this process might seem intimidating at first, it's an essential part of any qualitative research project. It's also a skill that can be learned and refined over time, so don't be discouraged if you find it challenging at first. Remember, the goal of presenting qualitative data is to make your research findings accessible and understandable to others. This requires careful preparation, a clear understanding of your data, and a commitment to presenting your findings in a way that respects and honors the complexity of the phenomena you're studying.

In the following sections, we'll delve deeper into how to create a comprehensive narrative from your data, the visualization of qualitative data , and the writing and publication processes . Let's briefly excerpt some of the content in the articles in this part of the guide.

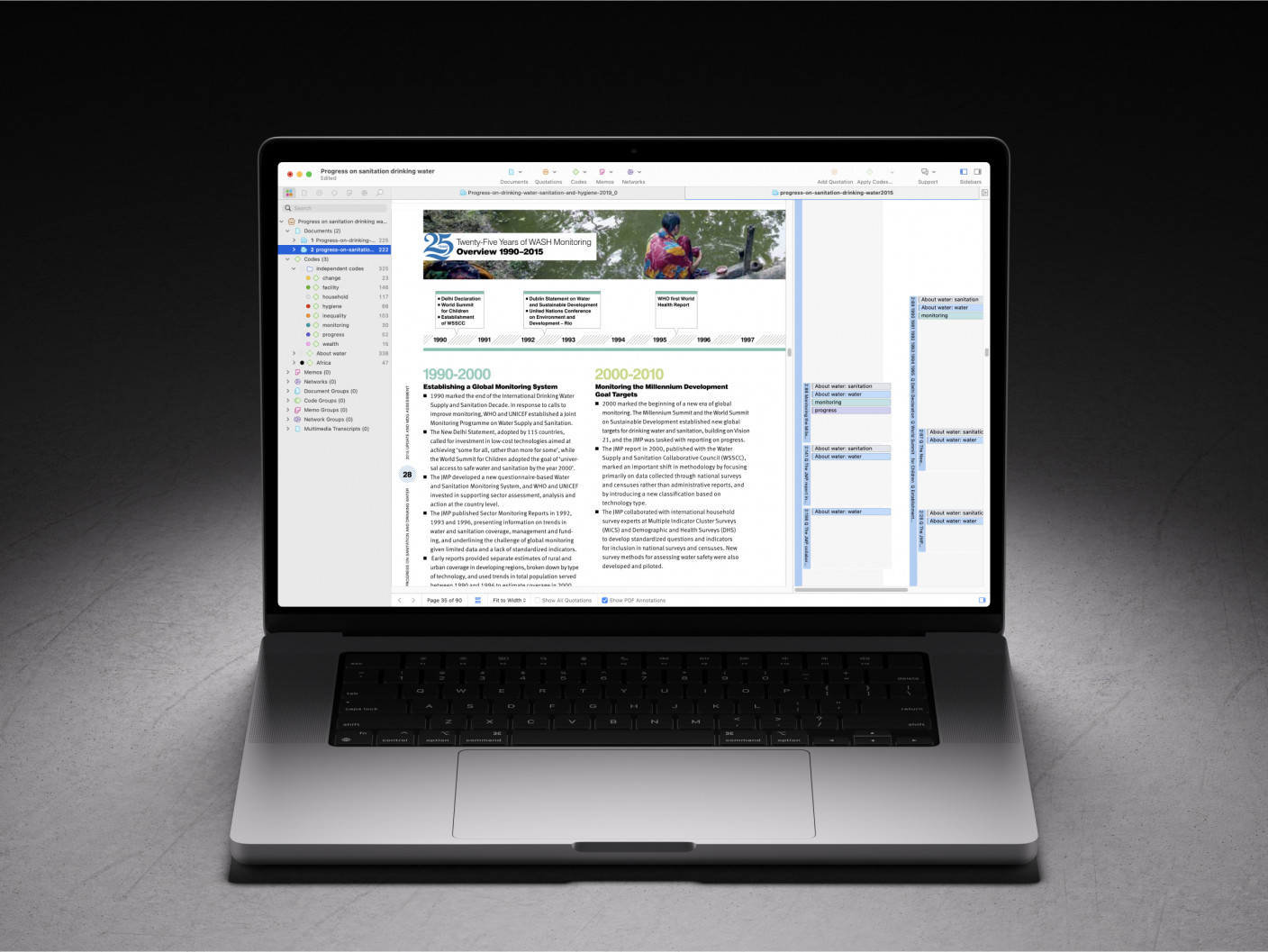

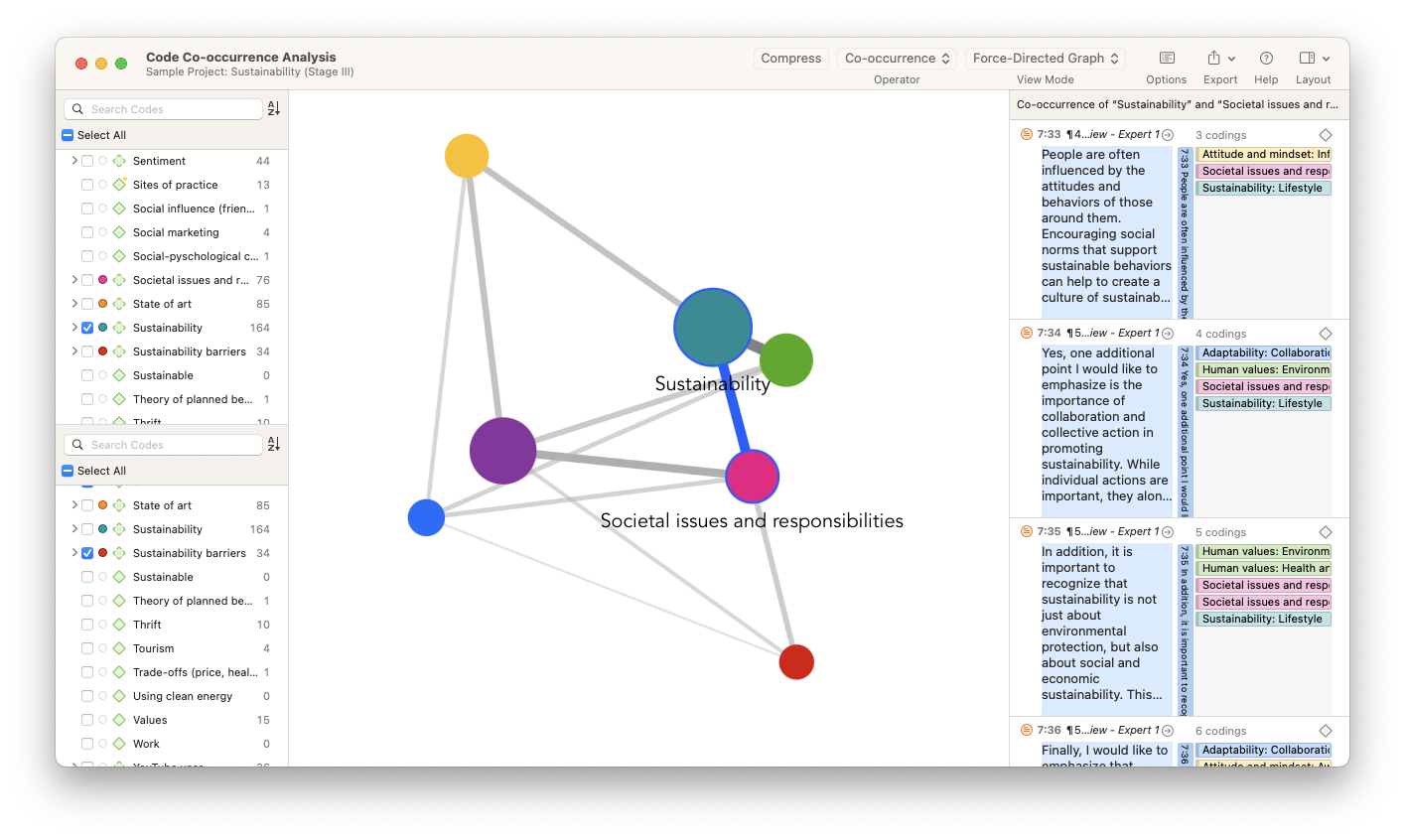

ATLAS.ti helps you make sense of your data

Find out how with a free trial of our powerful data analysis interface.

How often do you read a research article and skip straight to the tables and figures? That's because data visualizations representing qualitative and quantitative data have the power to make large and complex research projects with thousands of data points comprehensible when authors present data to research audiences. Researchers create visual representations to help summarize the data generated from their study and make clear the pathways for actionable insights.

In everyday situations, a picture is always worth a thousand words. Illustrations, figures, and charts convey messages that words alone cannot. In research, data visualization can help explain scientific knowledge, evidence for data insights, and key performance indicators in an orderly manner based on data that is otherwise unstructured.

For all of the various data formats available to researchers, a significant portion of qualitative and social science research is still text-based. Essays, reports, and research articles still rely on writing practices aimed at repackaging research in prose form. This can create the impression that simply writing more will persuade research audiences. Instead, framing research in terms that are easy for your target readers to understand makes it easier for your research to become published in peer-reviewed scholarly journals or find engagement at scholarly conferences. Even in market or professional settings, data visualization is an essential concept when you need to convince others about the insights of your research and the recommendations you make based on the data.

Importance of data visualization

Data visualization is important because it makes it easy for your research audience to understand your data sets and your findings. Also, data visualization helps you organize your data more efficiently. As the explanation of ATLAS.ti's tools will illustrate in this section, data visualization might point you to research inquiries that you might not even be aware of, helping you get the most out of your data. Strictly speaking, the primary role of data visualization is to make the analysis of your data , if not the data itself, clear. Especially in social science research, data visualization makes it easy to see how data scientists collect and analyze data.

Prerequisites for generating data visualizations

Data visualization is effective in explaining research to others only if the researcher or data scientist can make sense of the data in front of them. Traditional research with unstructured data usually calls for coding the data with short, descriptive codes that can be analyzed later, whether statistically or thematically. These codes form the basic data points of a meaningful qualitative analysis. They represent the structure of qualitative data sets, without which a scientific visualization with research rigor would be extremely difficult to achieve. In most respects, data visualization of a qualitative research project requires coding the entire data set so that the codes adequately represent the collected data.

A successfully crafted research study culminates in the writing of the research paper . While a pilot study or preliminary research might guide the research design , a full research study leads to discussion that highlights avenues for further research. As such, the importance of the research paper cannot be overestimated in the overall generation of scientific knowledge.

The physical and natural sciences tend to have a clinical structure for a research paper that mirrors the scientific method: outline the background research, explain the materials and methods of the study, outline the research findings generated from data analysis, and discuss the implications. Qualitative research tends to preserve much of this structure, but there are notable and numerous variations from a traditional research paper that it's worth emphasizing the flexibility in the social sciences with respect to the writing process.

Requirements for research writing

While there aren't any hard and fast rules regarding what belongs in a qualitative research paper , readers expect to find a number of pieces of relevant information in a rigorously-written report. The best way to know what belongs in a full research paper is to look at articles in your target journal or articles that share a particular topic similar to yours and examine how successfully published papers are written.

It's important to emphasize the more mundane but equally important concerns of proofreading and formatting guidelines commonly found when you write a research paper. Research publication shouldn't strictly be a test of one's writing skills, but acknowledging the importance of convincing peer reviewers of the credibility of your research means accepting the responsibility of preparing your research manuscript to commonly accepted standards in research.

As a result, seemingly insignificant things such as spelling mistakes, page numbers, and proper grammar can make a difference with a particularly strict reviewer. Even when you expect to develop a paper through reviewer comments and peer feedback, your manuscript should be as close to a polished final draft as you can make it prior to submission.

Qualitative researchers face particular challenges in convincing their target audience of the value and credibility of their subsequent analysis. Numbers and quantifiable concepts in quantitative studies are relatively easier to understand than their counterparts associated with qualitative methods . Think about how easy it is to make conclusions about the value of items at a store based on their prices, then imagine trying to compare those items based on their design, function, and effectiveness.

Qualitative research involves and requires these sorts of discussions. The goal of qualitative data analysis is to allow a qualitative researcher and their audience to make such determinations, but before the audience can accept these determinations, the process of conducting research that produces the qualitative analysis must first be seen as trustworthy. As a result, it is on the researcher to persuade their audience that their data collection process and subsequent analysis is rigorous.

Qualitative rigor refers to the meticulousness, consistency, and transparency of the research. It is the application of systematic, disciplined, and stringent methods to ensure the credibility, dependability, confirmability, and transferability of research findings. In qualitative inquiry, these attributes ensure the research accurately reflects the phenomenon it is intended to represent, that its findings can be understood or used by others, and that its processes and results are open to scrutiny and validation.

Transparency

It is easier to believe the information presented to you if there is a rigorous analysis process behind that information, and if that process is explicitly detailed. The same is true for qualitative research results, making transparency a key element in qualitative research methodologies. Transparency is a fundamental aspect of rigor in qualitative research. It involves the clear, detailed, and explicit documentation of all stages of the research process. This allows other researchers to understand, evaluate, replicate, and build upon the study. Transparency in qualitative research is essential for maintaining rigor, trustworthiness, and ethical integrity. By being transparent, researchers allow their work to be scrutinized, critiqued, and improved upon, contributing to the ongoing development and refinement of knowledge in their field.

Research papers are only as useful as their audience in the scientific community is wide. To reach that audience, a paper needs to pass the peer review process of an academic journal. However, the idea of having research published in peer-reviewed journals may seem daunting to newer researchers, so it's important to provide a guide on how an academic journal looks at your research paper as well as how to determine what is the right journal for your research.

In simple terms, a research article is good if it is accepted as credible and rigorous by the scientific community. A study that isn't seen as a valid contribution to scientific knowledge shouldn't be published; ultimately, it is up to peers within the field in which the study is being considered to determine the study's value. In established academic research, this determination is manifest in the peer review process. Journal editors at a peer-reviewed journal assign papers to reviewers who will determine the credibility of the research. A peer-reviewed article that completed this process and is published in a reputable journal can be seen as credible with novel research that can make a profound contribution to scientific knowledge.

The process of research publication

The process has been codified and standardized within the scholarly community to include three main stages. These stages include the initial submission stage where the editor reviews the relevance of the paper, the review stage where experts in your field offer feedback, and, if reviewers approve your paper, the copyediting stage where you work with the journal to prepare the paper for inclusion in their journal.

Publishing a research paper may seem like an opaque process where those involved with academic journals make arbitrary decisions about the worthiness of research manuscripts. In reality, reputable publications assign a rubric or a set of guidelines that reviewers need to keep in mind when they review a submission. These guidelines will most likely differ depending on the journal, but they fall into a number of typical categories that are applicable regardless of the research area or the type of methods employed in a research study, including the strength of the literature review , rigor in research methodology , and novelty of findings.

Choosing the right journal isn't simply a matter of which journal is the most famous or has the broadest reach. Many universities keep lists of prominent journals where graduate students and faculty members should publish a research paper , but oftentimes this list is determined by a journal's impact factor and their inclusion in major academic databases.

Guide your research to publication with ATLAS.ti

Turn insights into visualizations with our easy-to-use interface. Download a free trial today.

This section is part of an entire guide. Use this table of contents to jump to any page in the guide.

Part 1: The Basics

- What is qualitative data?

- 10 examples of qualitative data

- Qualitative vs. quantitative research

- What is mixed methods research?

- Theoretical perspective

- Theoretical framework

- Literature reviews

- Research questions

- Conceptual framework

- Conceptual vs. theoretical framework

- Focus groups

- Observational research

- Case studies

- Survey research

- What is ethnographic research?

- Confidentiality and privacy in research

- Bias in research

- Power dynamics in research

- Reflexivity

Part 2: Handling Qualitative Data

- Research transcripts

- Field notes in research

- Research memos

- Survey data

- Images, audio, and video in qualitative research

- Coding qualitative data

- Coding frame

- Auto-coding and smart coding

- Organizing codes

- Content analysis

- Thematic analysis

- Thematic analysis vs. content analysis

- Narrative research

- Phenomenological research

- Discourse analysis

- Grounded theory

- Deductive reasoning

- What is inductive reasoning?

- Inductive vs. deductive reasoning

- What is data interpretation?

- Qualitative analysis software

Part 3: Presenting Qualitative Data

- Data visualization - What is it and why is it important?

Skills for Learning : Research Skills

Data analysis is an ongoing process that should occur throughout your research project. Suitable data-analysis methods must be selected when you write your research proposal. The nature of your data (i.e. quantitative or qualitative) will be influenced by your research design and purpose. The data will also influence the analysis methods selected.

We run interactive workshops to help you develop skills related to doing research, such as data analysis, writing literature reviews and preparing for dissertations. Find out more on the Skills for Learning Workshops page.

We have online academic skills modules within MyBeckett for all levels of university study. These modules will help your academic development and support your success at LBU. You can work through the modules at your own pace, revisiting them as required. Find out more from our FAQ What academic skills modules are available?

Quantitative data analysis

Broadly speaking, 'statistics' refers to methods, tools and techniques used to collect, organise and interpret data. The goal of statistics is to gain understanding from data. Therefore, you need to know how to:

- Produce data – for example, by handing out a questionnaire or doing an experiment.

- Organise, summarise, present and analyse data.

- Draw valid conclusions from findings.

There are a number of statistical methods you can use to analyse data. Choosing an appropriate statistical method should follow naturally, however, from your research design. Therefore, you should think about data analysis at the early stages of your study design. You may need to consult a statistician for help with this.

Tips for working with statistical data

- Plan so that the data you get has a good chance of successfully tackling the research problem. This will involve reading literature on your subject, as well as on what makes a good study.

- To reach useful conclusions, you need to reduce uncertainties or 'noise'. Thus, you will need a sufficiently large data sample. A large sample will improve precision. However, this must be balanced against the 'costs' (time and money) of collection.

- Consider the logistics. Will there be problems in obtaining sufficient high-quality data? Think about accuracy, trustworthiness and completeness.

- Statistics are based on random samples. Consider whether your sample will be suited to this sort of analysis. Might there be biases to think about?

- How will you deal with missing values (any data that is not recorded for some reason)? These can result from gaps in a record or whole records being missed out.

- When analysing data, start by looking at each variable separately. Conduct initial/exploratory data analysis using graphical displays. Do this before looking at variables in conjunction or anything more complicated. This process can help locate errors in the data and also gives you a 'feel' for the data.

- Look out for patterns of 'missingness'. They are likely to alert you if there’s a problem. If the 'missingness' is not random, then it will have an impact on the results.

- Be vigilant and think through what you are doing at all times. Think critically. Statistics are not just mathematical tricks that a computer sorts out. Rather, analysing statistical data is a process that the human mind must interpret!

Top tips! Try inventing or generating the sort of data you might get and see if you can analyse it. Make sure that your process works before gathering actual data. Think what the output of an analytic procedure will look like before doing it for real.

(Note: it is actually difficult to generate realistic data. There are fraud-detection methods in place to identify data that has been fabricated. So, remember to get rid of your practice data before analysing the real stuff!)

Statistical software packages

Software packages can be used to analyse and present data. The most widely used ones are SPSS and NVivo.

SPSS is a statistical-analysis and data-management package for quantitative data analysis. Click on ‘ How do I install SPSS? ’ to learn how to download SPSS to your personal device. SPSS can perform a wide variety of statistical procedures. Some examples are:

- Data management (i.e. creating subsets of data or transforming data).

- Summarising, describing or presenting data (i.e. mean, median and frequency).

- Looking at the distribution of data (i.e. standard deviation).

- Comparing groups for significant differences using parametric (i.e. t-test) and non-parametric (i.e. Chi-square) tests.

- Identifying significant relationships between variables (i.e. correlation).

NVivo can be used for qualitative data analysis. It is suitable for use with a wide range of methodologies. Click on ‘ How do I access NVivo ’ to learn how to download NVivo to your personal device. NVivo supports grounded theory, survey data, case studies, focus groups, phenomenology, field research and action research.

- Process data such as interview transcripts, literature or media extracts, and historical documents.

- Code data on screen and explore all coding and documents interactively.

- Rearrange, restructure, extend and edit text, coding and coding relationships.

- Search imported text for words, phrases or patterns, and automatically code the results.

Qualitative data analysis

Miles and Huberman (1994) point out that there are diverse approaches to qualitative research and analysis. They suggest, however, that it is possible to identify 'a fairly classic set of analytic moves arranged in sequence'. This involves:

- Affixing codes to a set of field notes drawn from observation or interviews.

- Noting reflections or other remarks in the margins.

- Sorting/sifting through these materials to identify: a) similar phrases, relationships between variables, patterns and themes and b) distinct differences between subgroups and common sequences.

- Isolating these patterns/processes and commonalties/differences. Then, taking them out to the field in the next wave of data collection.

- Highlighting generalisations and relating them to your original research themes.

- Taking the generalisations and analysing them in relation to theoretical perspectives.

(Miles and Huberman, 1994.)

Patterns and generalisations are usually arrived at through a process of analytic induction (see above points 5 and 6). Qualitative analysis rarely involves statistical analysis of relationships between variables. Qualitative analysis aims to gain in-depth understanding of concepts, opinions or experiences.

Presenting information

There are a number of different ways of presenting and communicating information. The particular format you use is dependent upon the type of data generated from the methods you have employed.

Here are some appropriate ways of presenting information for different types of data:

Bar charts: These may be useful for comparing relative sizes. However, they tend to use a large amount of ink to display a relatively small amount of information. Consider a simple line chart as an alternative.

Pie charts: These have the benefit of indicating that the data must add up to 100%. However, they make it difficult for viewers to distinguish relative sizes, especially if two slices have a difference of less than 10%.

Other examples of presenting data in graphical form include line charts and scatter plots .

Qualitative data is more likely to be presented in text form. For example, using quotations from interviews or field diaries.

- Plan ahead, thinking carefully about how you will analyse and present your data.

- Think through possible restrictions to resources you may encounter and plan accordingly.

- Find out about the different IT packages available for analysing your data and select the most appropriate.

- If necessary, allow time to attend an introductory course on a particular computer package. You can book SPSS and NVivo workshops via MyHub .

- Code your data appropriately, assigning conceptual or numerical codes as suitable.

- Organise your data so it can be analysed and presented easily.

- Choose the most suitable way of presenting your information, according to the type of data collected. This will allow your information to be understood and interpreted better.

Primary, secondary and tertiary sources

Information sources are sometimes categorised as primary, secondary or tertiary sources depending on whether or not they are ‘original’ materials or data. For some research projects, you may need to use primary sources as well as secondary or tertiary sources. However the distinction between primary and secondary sources is not always clear and depends on the context. For example, a newspaper article might usually be categorised as a secondary source. But it could also be regarded as a primary source if it were an article giving a first-hand account of a historical event written close to the time it occurred.

- Primary sources

- Secondary sources

- Tertiary sources

- Grey literature

Primary sources are original sources of information that provide first-hand accounts of what is being experienced or researched. They enable you to get as close to the actual event or research as possible. They are useful for getting the most contemporary information about a topic.