You’re Not Powerless in the Face of Online Harassment

- Viktorya Vilk

Eight steps to take.

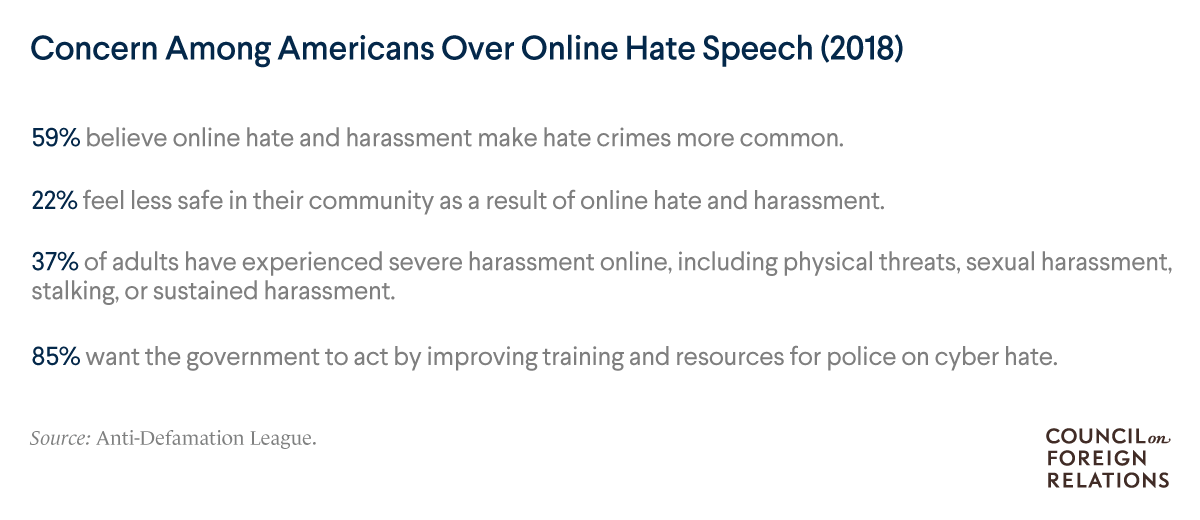

If you or someone you know is experiencing online harassment, remember that you are not powerless. There are concrete steps you can take to defend yourself and others. First, understand what’s happening to you. If you’re being critiqued or insulted, you can choose to refute it or let it go. But if you’re being abused, naming what you’re experiencing not only signals that it’s a tangible problem, but can also help you communicate with allies, employers, and law enforcement. Next, be sure to document. If you report online abuse and succeed in getting it taken down, you could lose valuable evidence. Save emails, voicemails, and texts. Take screenshots on social media and copy direct links whenever possible. Finally, assess your safety. If you’re being made to feel physically unsafe in any way, trust your instincts. While police may not always be able to stop the abuse (and not all authorities are equally well-trained in dealing with it), reporting it at the very least creates a record that could be useful later.

Online abuse — from impersonation accounts to hateful slurs and death threats — began with the advent of the internet itself, but the problem is pervasive and growing. A 2017 study from the Pew Research Center found that more than 40% of Americans have experienced online abuse, and more than 60% have witnessed it. People of color and LGBTQ+ people are disproportionately targeted, and women are twice as likely as men to experience sexual harassment online.

- Viktorya Vilk is Program Director for Digital Safety and Free Expression at PEN America, where she develops resources, including the Online Harassment Field Manual, and conducts trainings on online abuse, self-defense, and best practices for offering support.

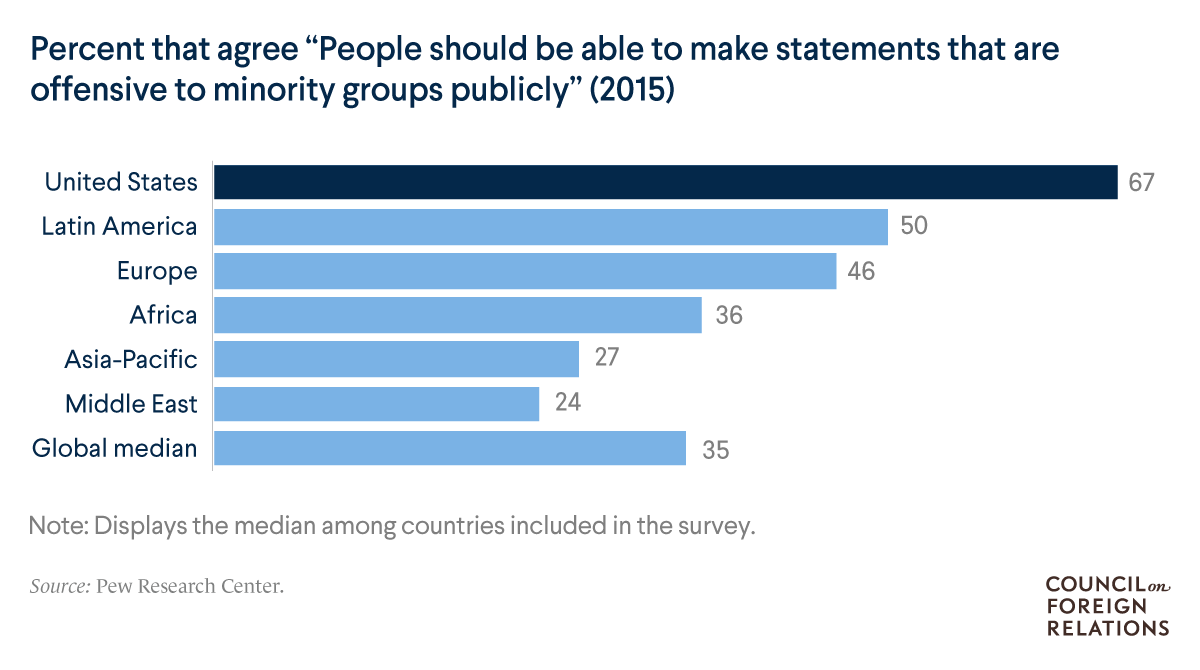

Hate Speech on Social Media: Global Comparisons

- Hate speech online has been linked to a global increase in violence toward minorities, including mass shootings, lynchings, and ethnic cleansing.

- Policies used to curb hate speech risk limiting free speech and are inconsistently enforced.

- Countries such as the United States grant social media companies broad powers in managing their content and enforcing hate speech rules. Others, including Germany, can force companies to remove posts within certain time periods.

Introduction

A mounting number of attacks on immigrants and other minorities has raised new concerns about the connection between inflammatory speech online and violent acts, as well as the role of corporations and the state in policing speech. Analysts say trends in hate crimes around the world echo changes in the political climate, and that social media can magnify discord. At their most extreme, rumors and invective disseminated online have contributed to violence ranging from lynchings to ethnic cleansing.

The response has been uneven, and the task of deciding what to censor, and how, has largely fallen to the handful of corporations that control the platforms on which much of the world now communicates. But these companies are constrained by domestic laws. In liberal democracies, these laws can serve to defuse discrimination and head off violence against minorities. But such laws can also be used to suppress minorities and dissidents.

How widespread is the problem?

Incidents have been reported on nearly every continent. Much of the world now communicates on social media, with nearly a third of the world’s population active on Facebook alone. As more and more people have moved online, experts say, individuals inclined toward racism, misogyny, or homophobia have found niches that can reinforce their views and goad them to violence. Social media platforms also offer violent actors the opportunity to publicize their acts.

Social scientists and others have observed how social media posts, and other online speech, can inspire acts of violence:

- In Germany, a correlation was found between anti-refugee Facebook posts by the far-right Alternative for Germany party and attacks on refugees. Scholars Karsten Müller and Carlo Schwarz observed that upticks in attacks, such as arson and assault, followed spikes in hate-mongering posts.

- In the United States, perpetrators of recent white supremacist attacks have circulated among racist communities online, and also embraced social media to publicize their acts. Prosecutors said the Charleston church shooter, who killed nine black clergy and worshippers in June 2015, engaged in a “self-learning process” online that led him to believe that the goal of white supremacy required violent action.

- The 2018 Pittsburgh synagogue shooter was a participant in the social media network Gab, whose lax rules have attracted extremists banned by larger platforms. There, he espoused the conspiracy that Jews sought to bring immigrants into the United States, and render whites a minority, before killing eleven worshippers at a refugee-themed Shabbat service. This “great replacement” trope, which was heard at the white supremacist rally in Charlottesville, Virginia, a year prior and originates with the French far right, expresses demographic anxieties about nonwhite immigration and birth rates.

- The great replacement trope was in turn espoused by the perpetrator of the 2019 New Zealand mosque shootings, who killed forty-nine Muslims at prayer and sought to broadcast the attack on YouTube.

- In Myanmar, military leaders and Buddhist nationalists used social media to slur and demonize the Rohingya Muslim minority ahead of and during a campaign of ethnic cleansing. Though Rohingya comprised perhaps 2 percent of the population, ethnonationalists claimed that Rohingya would soon supplant the Buddhist majority. The UN fact-finding mission said, “Facebook has been a useful instrument for those seeking to spread hate, in a context where, for most users, Facebook is the Internet.”

- In India, lynch mobs and other types of communal violence, in many cases originating with rumors on WhatsApp groups, have been on the rise since the Hindu-nationalist Bharatiya Janata Party (BJP) came to power in 2014.

- Sri Lanka has similarly seen vigilantism inspired by rumors spread online, targeting the Tamil Muslim minority. During a spate of violence in March 2018, the government blocked access to Facebook and WhatsApp, as well as the messaging app Viber, for a week, saying that Facebook had not been sufficiently responsive during the emergency.

Does social media catalyze hate crimes?

The same technology that allows social media to galvanize democracy activists can be used by hate groups seeking to organize and recruit. It also allows fringe sites, including peddlers of conspiracies, to reach audiences far broader than their core readership. Online platforms’ business models depend on maximizing reading or viewing times. Since Facebook and similar platforms make their money by enabling advertisers to target audiences with extreme precision, it is in their interests to let people find the communities where they will spend the most time.

Users’ experiences online are mediated by algorithms designed to maximize their engagement, which often inadvertently promote extreme content. Some web watchdog groups say YouTube’s autoplay function, in which the player, at the end of one video, tees up a related one, can be especially pernicious. The algorithm drives people to videos that promote conspiracy theories or are otherwise “divisive, misleading or false,” according to a Wall Street Journal investigative report. “YouTube may be one of the most powerful radicalizing instruments of the 21st century,” writes sociologist Zeynep Tufekci.

YouTube said in June 2019 that changes to its recommendation algorithm made in January had halved views of videos deemed “borderline content” for spreading misinformation. At that time, the company also announced that it would remove neo-Nazi and white supremacist videos from its site. Yet the platform faced criticism that its efforts to curb hate speech did not go far enough. For instance, critics noted that rather than removing videos that provoked homophobic harassment of a journalist, YouTube instead cut off the offending user from sharing in advertising revenue.

How do platforms enforce their rules?

Social media platforms rely on a combination of artificial intelligence, user reporting, and staff known as content moderators to enforce their rules regarding appropriate content. Moderators, however, are burdened by the sheer volume of content and the trauma that comes from sifting through disturbing posts, and social media companies don’t evenly devote resources across the many markets they serve.

A ProPublica investigation found that Facebook’s rules are opaque to users and inconsistently applied by its thousands of contractors charged with content moderation. (Facebook says there are fifteen thousand.) In many countries and disputed territories, such as the Palestinian territories, Kashmir, and Crimea, activists and journalists have found themselves censored, as Facebook has sought to maintain access to national markets or to insulate itself from legal liability. “The company’s hate-speech rules tend to favor elites and governments over grassroots activists and racial minorities,” ProPublica found.

Addressing the challenges of navigating varying legal systems and standards around the world—and facing investigations by several governments—Facebook CEO Mark Zuckerberg called for global regulations to establish baseline content, electoral integrity, privacy, and data standards.

Problems also arise when platforms’ artificial intelligence is poorly adapted to local languages and companies have invested little in staff fluent in them. This was particularly acute in Myanmar, where, Reuters reported, Facebook employed just two Burmese speakers as of early 2015. After a wave of anti-Muslim violence began in 2012, experts warned of the fertile environment ultranationalist Buddhist monks found on Facebook for disseminating hate speech to an audience newly connected to the internet after decades under a closed autocratic system.

Facebook admitted it had done too little after seven hundred thousand Rohingya were driven to Bangladesh and a UN human rights panel singled out the company in a report saying Myanmar’s security forces should be investigated for genocidal intent. In August 2018, it banned military officials from the platform and pledged to increase the number of moderators fluent in the local language.

How do countries regulate hate speech online?

In many ways, the debates confronting courts, legislatures, and publics about how to reconcile the competing values of free expression and nondiscrimination have been around for a century or longer. Democracies have varied in their philosophical approaches to these questions, as rapidly changing communications technologies have raised technical challenges of monitoring and responding to incitement and dangerous disinformation.

United States. Social media platforms have broad latitude, each establishing its own standards for content and methods of enforcement. Their broad discretion stems from the Communications Decency Act. The 1996 law exempts tech platforms from liability for actionable speech by their users. Magazines and television networks, for example, can be sued for publishing defamatory information they know to be false; social media platforms cannot be found similarly liable for content they host.

Recent congressional hearings have highlighted the chasm between Democrats and Republicans on the issue. House Judiciary Committee Chairman Jerry Nadler convened a hearing in the aftermath of the New Zealand attack, saying the internet has aided white nationalism’s international proliferation. “The President’s rhetoric fans the flames with language that—whether intentional or not—may motivate and embolden white supremacist movements,” he said, a charge Republicans on the panel disputed. A Senate Judiciary subcommittee, led by Ted Cruz, held a nearly simultaneous hearing in which he alleged that major social media companies’ rules disproportionately censor conservative speech, threatening the platforms with federal regulation. Democrats on that panel said Republicans seek to weaken policies dealing with hate speech and disinformation that instead ought to be strengthened.

European Union. The bloc’s twenty-eight members all legislate the issue of hate speech on social media differently, but they adhere to some common principles. Unlike in the United States, it is not only speech that directly incites violence that comes under scrutiny; so too does speech that incites hatred or denies or minimizes genocide and crimes against humanity. Backlash against the millions of predominantly Muslim migrants and refugees who have arrived in Europe in recent years has made this a particularly salient issue, as has an uptick in anti-Semitic incidents in countries including France, Germany, and the United Kingdom.

In a bid to preempt bloc-wide legislation, major tech companies agreed to a code of conduct with the European Union in which they pledged to review posts flagged by users and take down those that violate EU standards within twenty-four hours. In a February 2019 review, the European Commission found that social media platforms were meeting this requirement in three-quarters of cases.

The Nazi legacy has made Germany especially sensitive to hate speech. A 2018 law requires large social media platforms to take down posts that are “manifestly illegal” under criteria set out in German law within twenty-four hours. Human Rights Watch raised concerns that the threat of hefty fines would encourage the social media platforms to be “overzealous censors.”

New regulations under consideration by the bloc’s executive arm would extend a model similar to Germany’s across the EU, with the intent of “preventing the dissemination of terrorist content online.” Civil libertarians have warned against the measure for its “vague and broad” definitions of prohibited content, as well as for making private corporations, rather than public authorities, the arbiters of censorship.

India. Under new social media rules, the government can order platforms to take down posts within twenty-four hours based on a wide range of offenses, as well as to obtain the identity of the user. As social media platforms have made efforts to stanch the sort of speech that has led to vigilante violence, lawmakers from the ruling BJP have accused them of censoring content in a politically discriminatory manner, disproportionately suspending right-wing accounts, and thus undermining Indian democracy. Critics of the BJP accuse it of deflecting blame from party elites to the platforms hosting them. As of April 2018, the New Delhi–based Association for Democratic Reforms had identified fifty-eight lawmakers facing hate speech cases, including twenty-seven from the ruling BJP. The opposition has expressed unease with potential government intrusions into privacy.

Japan. Hate speech has become a subject of legislation and jurisprudence in Japan in the past decade, as anti-racism activists have challenged ultranationalist agitation against ethnic Koreans. This attention to the issue attracted a rebuke from the UN Committee on the Elimination of Racial Discrimination in 2014 and inspired a national ban on hate speech in 2016, with the government adopting a model similar to Europe’s. Rather than specify criminal penalties, however, it delegates to municipal governments the responsibility “to eliminate unjust discriminatory words and deeds against People from Outside Japan.” A handful of recent cases concerning ethnic Koreans could pose a test: in one, the Osaka government ordered a website containing videos deemed hateful taken down, and in Kanagawa and Okinawa Prefectures courts have fined individuals convicted of defaming ethnic Koreans in anonymous online posts.

What are the prospects for international prosecution?

Cases of genocide and crimes against humanity could be the next frontier of social media jurisprudence, drawing on precedents set in Nuremberg and Rwanda. The Nuremberg trials in post-Nazi Germany convicted the publisher of the newspaper Der Stürmer; the 1948 Genocide Convention subsequently included “direct and public incitement to commit genocide” as a crime. During the UN International Criminal Tribunal for Rwanda, two media executives were convicted on those grounds. As prosecutors look ahead to potential genocide and war crimes tribunals for cases such as Myanmar, social media users with mass followings could be found similarly criminally liable.

Recommended Resources

Andrew Sellars sorts through attempts to define hate speech.

Columbia University compiles relevant case law from around the world.

The U.S. Holocaust Memorial Museum lays out the legal history of incitement to genocide.

Kate Klonick describes how private platforms have come to govern public speech.

Timothy McLaughlin chronicles Facebook’s role in atrocities against Rohingya in Myanmar.

Adrian Chen reports on the psychological toll of content moderation on contract workers.

Tarleton Gillespie discusses the politics of content moderation.

Social Media Surveillance by the U.S. Government

A growing and unregulated trend of online surveillance raises concerns for civil rights and liberties.

Social media has become a significant source of information for U.S. law enforcement and intelligence agencies. The Department of Homeland Security, the FBI, and the State Department are among the many federal agencies that routinely monitor social platforms, for purposes ranging from conducting investigations to identifying threats to screening travelers and immigrants. This is not surprising; as the U.S. Supreme Court has said, social media platforms have become “for many . . . the principal sources for knowing current events, . . . speaking and listening in the modern public square, and otherwise exploring the vast realms of human thought and knowledge.” In other words, they are an essential means for participating in public life and communicating with others.

At the same time, this growing — and mostly unregulated — use of social media raises a host of civil rights and civil liberties concerns. Because social media can reveal a wealth of personal information — including about political and religious views, personal and professional connections, and health and sexuality — its use by the government is rife with risks for freedom of speech, assembly, and faith, particularly for the Black, Latino, and Muslim communities that are historically targeted by law enforcement and intelligence efforts. These risks are far from theoretical: many agencies have a track record of using these programs to target minority communities and social movements. For all that, there is little evidence that this type of monitoring advances security objectives; agencies rarely measure the usefulness of social media monitoring and DHS’s own pilot programs showed that they were not helpful in identifying threats. Nevertheless, the use of social media for a range of purposes continues to grow.

In this Q&A, we survey the ways in which federal law enforcement and intelligence agencies use social media monitoring and the risks posed by its thinly regulated and growing use in various contexts.

Which federal agencies use social media monitoring?

Many federal agencies use social media, including the Department of Homeland Security (DHS), Federal Bureau of Investigation (FBI), Department of State (State Department), Drug Enforcement Administration (DEA), Bureau of Alcohol, Tobacco, Firearms and Explosives (ATF), U.S. Postal Service (USPS), Internal Revenue Service (IRS), U.S. Marshals Service, and Social Security Administration (SSA). This document focuses primarily on the activities of DHS, FBI, and the State Department, as the agencies that make the most extensive use of social media for monitoring, targeting, and information collection.

Why do federal agencies monitor social media?

Publicly available information shows that federal agencies use social media for four main — and sometimes overlapping — purposes. The examples below are illustrative and do not capture the full spectrum of social media surveillance by federal agencies.

Investigations: Law enforcement agencies, such as the FBI and some components of DHS, use social media monitoring to assist with criminal and civil investigations. Some of these investigations may not even require a showing of criminal activity. For example, FBI agents can open an “assessment” simply on the basis of an “authorized purpose,” such as preventing crime or terrorism, and without a factual basis. During assessments, FBI agents can carry out searches of publicly available online information. Subsequent investigative stages, which require some factual basis, open the door for more invasive surveillance tactics, such as the monitoring and recording of chats, direct messages, and other private online communications in real time.

At DHS, Homeland Security Investigations (HSI), which is part of Immigration and Customs Enforcement (ICE), is the Department’s “principal investigative arm.” HSI asserts in its training materials that it has the authority to enforce any federal law, and relies on social media when conducting investigations on matters ranging from civil immigration violations to terrorism. ICE agents can look at publicly available social media content for purposes ranging from finding fugitives to gathering evidence in support of investigations to probing “potential criminal activity,” a “threat detection” function discussed below. Agents can also operate undercover online and monitor private online communications, but the circumstances under which they are permitted to do so are not publicly known.

Monitoring to detect threats: Even without opening an assessment or other investigation, FBI agents can monitor public social media postings. DHS components from ICE to its intelligence arm, the Office of Intelligence & Analysis, also monitor social media — including specific individuals — with the goal of identifying potential threats of violence or terrorism. In addition, the FBI and DHS both engage private companies to conduct online monitoring of this type on their behalf. One firm, for example, was awarded a contract with the FBI in December 2020 to scour social media to proactively identify “national security and public safety-related events” — including various unspecified threats, as well as crimes — which have not yet been reported to law enforcement.

Situational awareness: Social media may provide an “ear to the ground” to help the federal government coordinate a response to breaking events. For example, a range of DHS components, from Customs and Border Protection (CBP) to the National Operations Center (NOC) to the Federal Emergency Management Agency (FEMA), monitor the internet, including by keeping tabs on a broad list of websites and keywords being discussed on social media platforms and tracking information from sources like news services and local government agencies. Privacy impact assessments (PIAs) suggest there are few limits on the content that can be reviewed; for instance, the PIAs list a sweeping range of keywords that are monitored (ranging, for example, from “attack,” “public health,” and “power outage,” to “jihad”). The purposes of such monitoring include helping keep the public, private sector, and governmental partners informed about developments during a crisis such as a natural disaster or terrorist attack; identifying people needing help during an emergency; and knowing about “threats or dangers” to DHS facilities.

“Situational awareness” and “threat detection” overlap because they both involve broad monitoring of social media, but situational awareness has a wider focus and is generally not intended to monitor or preemptively identify specific people who are thought to pose a threat.

Immigration and travel screening: Social media is used to screen and vet travelers and immigrants coming into the United States and even to monitor them while they live here. People applying for a range of immigration benefits also undergo social media checks to verify information in their application and determine whether they pose a security risk.

How can the government’s use of social media harm people?

Government monitoring of social media can work to people’s detriment in at least four ways: (1) wrongly implicating an individual or group in criminal behavior based on their activity on social media; (2) misinterpreting the meaning of social media activity, sometimes with severe consequences; (3) suppressing people’s willingness to talk or connect openly online; and (4) invading individuals’ privacy. These are explained in further detail below.

Assumed criminality: The government may use information from social media to label an individual or group as a threat, including characterizing ordinary activity (like wearing a particular sneaker brand or making common hand signs) or social media connections as evidence of criminal or threatening behavior. This kind of assumption can have high-stakes consequences. For example, the NYPD wrongly arrested 19-year-old Jelani Henry for attempted murder, after which he was denied bail and jailed for over a year and a half, in large part because prosecutors thought his “likes” and photos on social media proved he was a member of a violent gang. In another case of guilt by association, DHS officials barred a Palestinian student arriving to study at Harvard from entering the country based on the content of his friends’ social media posts. The student had neither written nor engaged with the posts, which were critical of the U.S. government. Black, Latino, and Muslim people are especially vulnerable to being falsely labeled threats based on social media activity, given that it is used to inform government decisions that are often already tainted by bias such as gang determinations and travel screening decisions.

Mistaken judgments: It can be difficult to accurately interpret online activity, and the repercussions can be severe. In 2020, police in Wichita, Kansas arrested a teenager on suspicion of inciting a riot based on a mistaken interpretation of his Snapchat post, in which he was actually denouncing violence. British travelers were interrogated at Los Angeles International Airport and sent back to the U.K. due to a border agent’s misinterpretation of a joking tweet. And DHS and the FBI disseminated reports to a Maine-area intelligence-sharing hub warning of potential violence at anti-police brutality demonstrations based on fake social media posts by right-wing provocateurs, which were distributed as a warning to local police.

Chilling effects: People are highly likely to censor themselves when they think they are being watched by the government, and this undermines everything from political speech to creativity to other forms of self-expression. The Brennan Center’s lawsuit against the State Department and DHS documents how the collection of social media identifiers on visa forms — which are then stored indefinitely and shared across the U.S. government, and sometimes with state, local, and foreign governments — led a number of international filmmakers to stop talking about politics and promoting their work on social media. They self-censored because they were concerned that what they said online would prevent them from getting a U.S. visa or be used to retaliate against them because it could be misinterpreted or reflect controversial viewpoints.

Loss of privacy: A person’s social media presence — their posts, comments, photos, likes, group memberships, and so on — can collectively reveal their ethnicity, political views, religious practices, gender identity, sexual orientation, personality traits, and vices. Further, social media can reveal more about a person than they intend. Platforms’ privacy settings frequently change and can be difficult to navigate, and even when individuals keep information private it can be disclosed through the activity or identity of their connections on social media. DHS at least has recognized this risk, categorizing social media handles as “sensitive personally identifiable information” that could “result in substantial harm, embarrassment, inconvenience, or unfairness to an individual.” Yet the agency has failed to place robust safeguards on social media monitoring.

Who is harmed by social media monitoring?

While all Americans may be harmed by untrammeled social media monitoring, people from historically marginalized communities and those who protest government policies typically bear the brunt of suspicionless surveillance. Social media monitoring is no different.

Echoing the transgressions of the civil rights era, there are myriad examples of the FBI and DHS using social media to surveil people speaking out on issues from racial justice to the treatment of immigrants. Both agencies have monitored Black Lives Matter activists. In 2017, the FBI created a specious terrorism threat category called “Black Identity Extremism” (BIE), which can be read to include protests against police violence. This category has been used to rationalize continued surveillance of black activists, including monitoring of social media activity. In 2020, DHS’s Office of Intelligence & Analysis (I&A) used social media and other tools to target and monitor racial justice protestors in Portland, OR, justifying this surveillance by pointing to the threat of vandalism to Confederate monuments. I&A then disseminated intelligence reports on journalists reporting on this overreach.

DHS especially has focused social media surveillance on immigration activists, including those engaged in peaceful protests against the Trump administration’s family separation policy and others characterized as “anti-Trump protests.” From 2017 through 2020, ICE kept tabs on immigrant rights groups’ social media activity, and in late 2018 and early 2019, CBP and HSI used information gleaned from social media in compiling dossiers and putting out travel alerts on advocates, journalists, and lawyers — including U.S. citizens — whom the government suspected of helping migrants south of the U.S. border.

Muslim, Arab, Middle Eastern, and South Asian communities have often been particular targets of the U.S. government’s discriminatory travel and immigration screening practices, including social media screening. The State Department’s collection of social media identifiers on visa forms, for instance, came out of President Trump’s Muslim ban, while earlier social media monitoring and collection programs focused disproportionately on people from predominantly Muslim countries and Arabic speakers.

Is social media surveillance an effective way of getting information about potential threats?

Not particularly. Broad social media monitoring for threat detection purposes untethered from suspicion of wrongdoing generates reams of useless information, crowding out information on — and resources for — real public safety concerns.

Social media conversations are difficult to interpret because they are often highly context-specific and can be riddled with slang, jokes, memes, sarcasm, and references to popular culture; heated rhetoric is also common. Government officials and assessments have repeatedly recognized that this dynamic makes it difficult to distinguish a sliver of genuine threats from the millions of everyday communications that do not warrant law enforcement attention. As the former acting chief of DHS I&A said, “actual intent to carry out violence can be difficult to discern from the angry, hyperbolic — and constitutionally protected — speech and information commonly found on social media.” Likewise, a 2021 internal review of DHS’s Office of Intelligence & Analysis noted: “[s]earching for true threats of violence before they happen is a difficult task filled with ambiguity.” The review observed that personnel trying to anticipate future threats ended up collecting information on a “broad range of general threats that did not meet the threshold of intelligence collection” and provided I&A’s law enforcement and intelligence customers with “information of limited value,” including “memes, hyperbole, statements on political organizations and other protected First Amendment speech.” Similar concerns cropped up with the DHS’s pilot programs to use social media to vet refugees.

The result is a high volume of false alarms, distracting law enforcement from investigating and preparing for genuine threats: as the FBI bluntly put it, for example, I&A’s reporting practices resulted in “crap” being sent through one of its threat notification systems.

What rules govern federal agencies’ use of social media?

Some agencies, like the FBI, DHS, State Department and IRS, have released information on the rules governing their use of social media in certain contexts. Other agencies — such as the ATF, DEA, Postal Service, and Social Security Administration — have not made any information public; what is known about their use of social media has emerged from media coverage, some of which has attracted congressional scrutiny. Below we describe some of what is known about the rules governing the use of social media by the FBI, DHS, and State Department.

FBI: The main document governing the FBI’s social media surveillance practices is its Domestic Investigations and Operations Guide (DIOG), last made public in redacted form in 2016. Under the DIOG, FBI agents may review publicly available social media information prior to initiating any form of inquiry. During the lowest-level investigative stage, called an assessment (which requires an “authorized purpose” such as stopping terrorism, but no factual basis), agents may also log public, real-time communications (such as public chat room conversations) and work with informants to gain access to private online spaces, though they may not record private communications in real-time.

Beginning with “preliminary investigations” (which require that there be “information or an allegation” of wrongdoing but not that it be credible), FBI agents may monitor and record private online communications in real-time using informants and may even use false social media identities with the approval of a supervisor. While conducting full investigations (which require a reasonable indication of criminal activity), FBI agents may use all of these methods and can also get probable cause warrants to conduct wiretapping, including to collect private social media communications.

The DIOG does restrict the FBI from probing social media based solely on “an individual’s legal exercise of his or her First Amendment rights,” though such activity can be a substantial motivating factor. It also requires that the collection of online information about First Amendment-protected activity be connected to an “authorized investigative purpose” and be as minimally intrusive as reasonable under the circumstances, although it is not clear how adherence to these standards is evaluated.

DHS: DHS policies can be pieced together using a combination of legally mandated disclosures — such as privacy impact assessments and data mining reports — and publicly available policy guidelines, though the amount of information available varies. In 2012, DHS published a policy requiring that components collecting personally identifiable information from social media for “operational uses,” such as investigations (but not intelligence functions), implement basic guidelines and training for employees engaged in such uses and ensure compliance with relevant laws and privacy rules. Whether this policy has been holistically implemented for “operational uses” of social media across DHS remains unclear. However, the Brennan Center has obtained a number of templates describing how DHS components use social media, created pursuant to the 2012 policy, through the Freedom of Information Act.

In practice, DHS policies are generally permissive. The examples below illustrate the ways in which various parts of the Department use social media.

- ICE agents monitor social media for purposes ranging from situational awareness and criminal intelligence gathering to support for investigations. In addition to engaging private companies to monitor social media, ICE agents may collect public social media data whenever they determine it is “relevant for developing a viable case” and “supports the investigative process.”

- Parts of DHS, including the National Operations Center (NOC) (part of the Office of Operations Coordination and Planning (OPS)), Federal Emergency Management Agency (FEMA), and Customs and Border Protection (CBP), use social media monitoring for situational awareness. The goal is generally not to “seek or collect” personally identifiable information. DHS may do so in “in extremis situations,” however, such as when serious harm to a person may be imminent or there is a “credible threat[] to [DHS] facilities or systems.” NOC’s situational awareness operations are not covered by the 2012 policy; other components carrying out situational awareness monitoring must create a template but may receive an exception from the broader policy with the approval of DHS’s Chief Privacy Officer.

- DHS’s U.S. Citizenship and Immigration Services (USCIS) uses social media to verify the accuracy of materials provided by applicants for immigration benefits (such as applications for refugee status or to become a U.S. citizen) and to identify fraud and threats to public safety. USCIS says it only looks at publicly available information and that it will respect account holders’ privacy settings and refrain from direct dialogue with subjects, though staff may use fictitious accounts in certain cases, including when “overt research would compromise the integrity of an investigation.”

- DHS’s Office of Intelligence & Analysis (I&A), as a member of the Intelligence Community, is not covered by the 2012 policy. Instead it operates under a separate set of guidelines — pursuant to Executive Order 12,333, issued by the Secretary of Homeland Security and approved by the Attorney General — that govern its management of information collected about U.S. persons, including via social media. The office incorporates social media into the open-source intelligence reports it produces for federal, state, and local law enforcement; these reports provide threat warnings, investigative leads, and referrals. I&A personnel may collect and retain social media information on U.S. citizens and green card holders so long as they reasonably believe that doing so supports a national or departmental mission; these missions are broadly defined to include addressing homeland security concerns. And they may disseminate the information further if they believe it would help the recipient with “lawful intelligence, counterterrorism, law enforcement, or other homeland security-related functions.”

State Department: The Department’s policies covering social media monitoring for visa vetting purposes are not publicly available. However, public disclosures shed some light on the rules consular officers are supposed to follow when vetting visa applicants using social media. For example, consular officers are not supposed to interact with applicants on social media, request their passwords, or try to get around their privacy settings — and if they create an account to view social media information, they “must abide by the contractual rules of that service or platform provider,” such as Facebook’s real name policy. Further, information gleaned from social media must not be used to deny visas based on protected characteristics (i.e., race, religion, ethnicity, national origin, political views, gender or sexual orientation). It is supposed to be used only to confirm an applicant’s identity and visa eligibility under criteria set forth in U.S. law.

Are there constitutional limits on social media surveillance?

Yes. Social media monitoring may violate the First or Fourteenth Amendments. It is well established that public posts receive constitutional protection: as the investigations guide of the Federal Bureau of Investigation recognizes, “[o]nline information, even if publicly available, may still be protected by the First Amendment.” Surveillance is clearly unconstitutional when a person is specifically targeted for the exercise of constitutional rights protected by the First Amendment (speech, expression, association, religious practice) or on the basis of a characteristic protected by the Fourteenth Amendment (including race, ethnicity, and religion). Social media monitoring may also violate the First Amendment when it burdens constitutionally protected activity and does not contribute to a legitimate government objective. Our lawsuit against the State Department and DHS (Doc Society v. Blinken), for instance, challenges the collection, retention, and dissemination of social media identifiers from millions of people — almost none of whom have engaged in any wrongdoing — because the government has not adequately justified the screening program and it imposes a substantial burden on speech for little demonstrated value. The White House office that reviews federal regulations noted the latter point — which a DHS Inspector General report and internal reviews have also underscored — when it rejected, in April 2021, DHS’s proposal to collect social media identifiers on travel and immigration forms.

Additionally, the Fourth Amendment protects people from “unreasonable searches and seizures” by the government, including searches of data in which people have a “reasonable expectation of privacy.” Judges have generally concluded that content posted publicly online cannot be reasonably expected to be private, and that police therefore do not need a warrant to view or collect it. Courts are increasingly recognizing, however, that when the government can collect far more information — especially information revealing sensitive or intimate details — at a far lower cost than traditional surveillance, the Fourth Amendment may protect that data. The same is true of social media monitoring and the use of powerful social media monitoring tools, even if they are employed to review publicly available information.

Are there statutory limits on social media surveillance?

Yes. Most notably, the Privacy Act limits the collection, storage, and sharing of personally identifiable information about U.S. citizens and permanent residents (green card holders), including social media data. It also bars, under most circumstances, maintaining records that describe the exercise of a person’s First Amendment rights. However, the statute contains an exception for such records “within the scope of an authorized law enforcement activity.” Its coverage is limited to databases from which personal information can be retrieved by an individual identifier like a name, social security number, address, or phone number.

Additionally, federal agencies’ collection of social media handles must be authorized by law and, in some cases, be subject to public notice and comment and justified by a reasoned explanation that accounts for contrary evidence. Doc Society v. Blinken, for example, alleges that the State Department’s collection of social media identifiers on visa forms violates the Administrative Procedure Act (APA) because it exceeds the Secretary of State’s statutory authority and did not consider that prior social media screening pilot programs had failed to demonstrate efficacy.

Is the government’s use of social media consistent with platform rules?

Not always. Companies do not bar government officials from making accounts and looking at what is happening on their platforms. However, after the ACLU exposed in 2016 that third-party social media monitoring companies were pitching their services to California law enforcement agencies as a way to monitor protestors against racial injustice, Twitter, Facebook, and Instagram changed or clarified their rules to prohibit the use of their data for surveillance (though the actual application of those rules can be murky).

Additionally, Facebook has a policy requiring that users identify themselves by their “real names,” with no exception for law enforcement. The FBI and other federal law enforcement agencies permit their agents to use false identities notwithstanding this rule, and there have been documented instances of other law enforcement departments violating this policy as well.

How do federal agencies share information collected from social media, and why is it a problem?

Federal agencies may share information they collect from social media across all levels of government and the private sector and will sometimes even disclose data to foreign governments (for instance, identifiers on travel and immigration forms). In particular, information is shared domestically with state and local law enforcement, including through fusion centers, which are post-9/11 surveillance and intelligence hubs that were intended to facilitate coordination among federal, state, and local law enforcement and private industry. Such unfettered data sharing magnifies the risks of abusive practices.

Part of the risk stems from the dissemination of data to actors with a documented history of discriminatory surveillance, such as fusion centers. A 2012 bipartisan Senate investigation concluded that fusion centers have “yielded little, if any, benefit to federal counterterrorism intelligence efforts,” instead producing reams of low-quality information while labeling Muslim Americans engaging in innocuous activities, such as voter registration, as potential threats. More recently, fusion centers have been caught monitoring racial and social justice organizers and protests and promoting fake social media posts by right-wing provocateurs as credible intelligence regarding potential violence at anti-police brutality protests. Further, many police departments that get information from social media through fusion centers (or from federal agencies like the FBI and DHS directly) have a history of targeting and surveilling minority communities and activists, but lack basic policies that govern their use of social media. Finally, existing agreements permit the U.S. government to share social media data — collected from U.S. visa applicants, for example — with repressive foreign governments that are known to retaliate against online critics.

The broad dissemination of social media data amplifies some of the harms of social media monitoring by eliminating context and safeguards. Under some circumstances, a government official who initially reviews and collects information from social media may better understand — from witness interviews, notes of observations from the field, or other material obtained during an investigation, for example — its meaning and relevance than a downstream recipient lacking this background. And any safeguards the initial agency places upon its monitoring and collection — use and retention limitations, data security protocols, etc. — cannot be guaranteed after it disseminates what has been gathered. Once social media is disseminated, the originating agency has little control over how such information is used, how long it is kept, whether it could be misinterpreted, or how it might spur overreach.

Together, these dynamics amplify the harms to free expression and privacy that social media monitoring generates. A qualified and potentially unreliable assessment based on social media that a protest could turn violent or that a particular person poses a threat might easily turn into a justification for policing that protest aggressively or arresting the person, as illustrated by the examples above. Similarly, a person who has applied for a U.S. visa or been investigated by federal authorities, even if they are cleared, is likely to be wary of what they say on social media well into the future if they know that there is no endpoint to potential scrutiny or disclosure of their online activity. Formerly, one branch of DHS I&A had a practice of redacting publicly available U.S. person information contained in open-source intelligence reports disseminated to partners because of the “risk of civil rights and liberties issues.” This practice was an apparent justification for removing pre-publication oversight to identify such issues, which implies that DHS recognized that information identifying a person could be used to target them without a legitimate law enforcement reason.

What role do private companies play, and what is the harm in using them?

Both the FBI and DHS have reportedly hired private firms to help conduct social media surveillance, including to help identify threats online. This raises concerns around transparency and accountability as well as effectiveness.

Transparency and accountability: Outsourcing surveillance to private industry obscures how monitoring is being carried out; limited information is available about relationships between the federal government and social media surveillance contractors, and the contractors, unlike the government, are not subject to freedom of information laws. Outsourcing also weakens safeguards because private vendors may not be subject to the same legal or institutional constraints as public agencies.

Efficacy: The most ambitious tools use artificial intelligence with the goal of making judgments about which threats, calls for violence, or individuals pose the highest risk. But doing so reliably is beyond the capacity of both humans and existing technology, as more than 50 technologists wrote in opposing an ICE proposal aimed at predicting whether a given person would commit terrorism or crime. The more rudimentary of these tools look for specific words and then flag posts containing those words. Such flags are overinclusive, and garden-variety content will regularly be elevated. Consider how the word “extremism,” for instance, could appear in a range of news articles, be used in reference to a friend’s strict dietary standards, or arise in connection with discussion about U.S. politics. Even the best Natural Language Processing tools, which attempt to ascertain the meaning of text, are prone to error, and fare particularly poorly on speakers of non-standard English, who may more frequently be from minority communities, as well as speakers of languages other than English. Similar concerns apply to mechanisms used to flag images and videos, which generally lack the context necessary to differentiate a scenario in which an image is used for reporting or commentary from one where it is used by a group or person to incite violence.
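
To make the overinclusiveness point concrete, here is a minimal Python sketch of the rudimentary keyword approach described above. It is an illustration only: the watchlist, the function name `flag_posts`, and the sample posts are all invented for this example and do not reflect any actual vendor's or agency's tool.

```python
import re

# Hypothetical watchlist; the article does not say which terms real tools use.
WATCHLIST = {"extremism"}


def flag_posts(posts, watchlist=WATCHLIST):
    """Return every post that contains a watchlist term as a whole word."""
    flagged = []
    for post in posts:
        # Lowercase the post and split it into simple word tokens.
        words = set(re.findall(r"[a-z']+", post.lower()))
        if words & watchlist:
            flagged.append(post)
    return flagged


# Invented sample posts mirroring the three innocuous uses named in the text,
# plus one post that does not mention the term at all.
posts = [
    "New report examines the rise of extremism in Europe",
    "My roommate's diet is pure extremism: no sugar, no salt, no fun",
    "Both parties keep accusing each other of extremism",
    "Great turnout at the farmers market today",
]

flagged = flag_posts(posts)
for post in flagged:
    print("FLAGGED:", post)
```

All three posts that mention the word are flagged, although none of them is a threat. A matcher like this has no way to tell news coverage, a joke, or political commentary apart from a genuine call for violence.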



Hate speech in social media: How platforms can do better

- Morgan Sherburne

With all of the resources, power and influence they possess, social media platforms could and should do more to detect hate speech, says a University of Michigan researcher.

Libby Hemphill

In a report from the Anti-Defamation League, Libby Hemphill, an associate research professor at U-M’s Institute for Social Research and an ADL Belfer Fellow, explores social media platforms’ shortcomings when it comes to white supremacist speech and how it differs from general or nonextremist speech, and recommends ways to improve automated hate speech identification methods.

“We also sought to determine whether and how white supremacists adapt their speech to avoid detection,” said Hemphill, who is also a professor at U-M’s School of Information. “We found that platforms often miss discussions of conspiracy theories about white genocide and Jewish power and malicious grievances against Jews and people of color. Platforms also let decorous but defamatory speech persist.”

How platforms can do better

White supremacist speech is readily detectable, Hemphill says, detailing the ways it is distinguishable from commonplace speech in social media, including:

- Frequently referencing racial and ethnic groups using plural noun forms (whites, etc.)

- Appending “white” to otherwise unmarked terms (e.g., power)

- Using less profanity than is common in social media to elude detection based on “offensive” language

- Being congruent on both extremist and mainstream platforms

- Keeping complaints and messaging consistent from year to year

- Describing Jews in racial, rather than religious, terms

“Given the identifiable linguistic markers and consistency across platforms, social media companies should be able to recognize white supremacist speech and distinguish it from general, nontoxic speech,” Hemphill said.

The research team used commonly available computing resources, existing algorithms from machine learning and dynamic topic modeling to conduct the study.

“We needed data from both extremist and mainstream platforms,” said Hemphill, noting that mainstream user data comes from Reddit and extremist website user data comes from Stormfront.

What should happen next?

Even though the research team found that white supremacist speech is identifiable and consistent, social media platforms, which have more sophisticated computing capabilities and additional data, still miss a lot and struggle to distinguish nonprofane, hateful speech from profane, innocuous speech.

“Leveraging more specific training datasets, and reducing their emphasis on profanity can improve platforms’ performance,” Hemphill said.

The report recommends that social media platforms: 1) enforce their own rules; 2) use data from extremist sites to create detection models; 3) look for specific linguistic markers; 4) deemphasize profanity in toxicity detection; and 5) train moderators and algorithms to recognize that white supremacists’ conversations are dangerous and hateful.

“Social media platforms can enable social support, political dialogue and productive collective action. But the companies behind them have civic responsibilities to combat abuse and prevent hateful users and groups from harming others,” Hemphill said. “We hope these findings and recommendations help platforms fulfill these responsibilities now and in the future.”

More information:

- Report: Very Fine People: What Social Media Platforms Miss About White Supremacist Speech

- Related: Video: ISR Insights Speaker Series: Detecting white supremacist speech on social media

- Podcast: Data Brunch Live! Extremism in Social Media


Office of the Vice President for Communications © 2024 The Regents of the University of Michigan


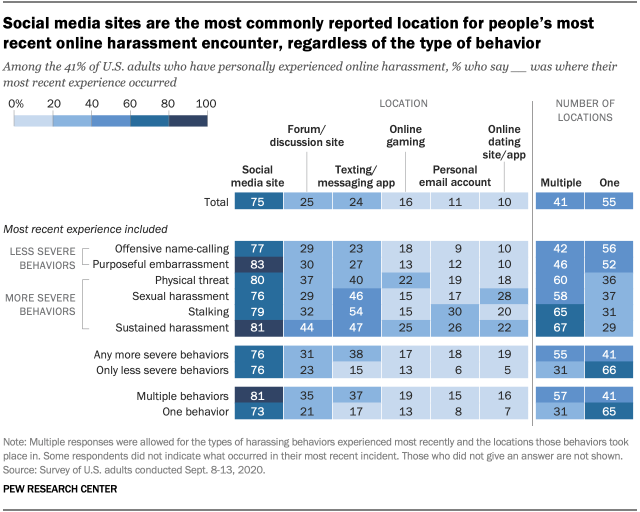

Online harassment occurs most often on social media, but strikes in other places, too

As has been the case since at least 2014, social media sites are the most common place Americans encounter harassment online, according to a September 2020 Pew Research Center survey. But harassment often occurs in other online locations, too.

Overall, three-quarters of U.S. adults who have recently faced some kind of online harassment say it happened on social media. But notable shares say their most recent such experience happened elsewhere, including on forum or discussion sites (25%), texting or messaging apps (24%), online gaming platforms (16%), their personal email account (11%) or online dating sites or apps (10%).

Certain kinds of harassing behavior, meanwhile, are particularly likely to occur in certain locations online, according to a new analysis of the 2020 data. The analysis focuses on respondents’ most recent experience with online harassment. (See “Measuring online harassment” box for more information.)

This analysis focuses on U.S. adults’ experiences and attitudes related to online harassment and is based on a survey of 10,093 U.S. adults conducted from Sept. 8 to 13, 2020. Everyone who took part is a member of the Center’s American Trends Panel (ATP), an online survey panel that is recruited through national, random sampling of residential addresses. This way nearly all U.S. adults have a chance of selection. The survey is weighted to be representative of the U.S. adult population by gender, race, ethnicity, partisan affiliation, education and other categories. Read more about the ATP’s methodology. Here are the questions used for this report, along with responses, and its methodology.

Looking first at where harassing behavior occurs, several findings stand out. For one, people who most recently faced harassment over a sustained period were especially likely to have experienced it while using a texting or messaging app (47%) or on an online forum (44%) compared with the overall shares whose most recent harassment of any kind took place on these platforms.

Measuring online harassment

This study measures six distinct harassment behaviors.

We classify two of the behaviors as “less severe”:

- Offensive name-calling

- Purposeful embarrassment

We classify four of them as “more severe”:

- Physical threats

- Stalking

- Harassment over a sustained period of time

- Sexual harassment

In all, 41% of Americans say they had ever experienced at least one of those behaviors.

The people who faced any of those behaviors were asked follow-up questions about their most recent harassment episode, including the specific behaviors that were involved and where the incidents occurred. We inquired about six potential locations:

- Social media

- Online forums or discussion sites

- Texting or messaging apps

- Online gaming

- Personal email accounts

- Online dating sites or apps

Similarly, those who say they most recently had been stalked or sexually harassed online were more likely to have faced this while using a texting platform (54% and 46%, respectively) compared with the broader rate of harassment on those venues. In addition, people who were most recently stalked are roughly three times as likely to have experienced this harassment via email (30%) compared with the share of all whose latest harassment incident was email-based (11%).

In general, those who faced any of the more severe behaviors in their most recent incident are more likely to say the experience occurred across multiple locations online. Some 55% of those who have faced at least one of these more severe forms of online harassment in their most recent incident – such as stalking or sustained harassment – encountered it in multiple places online, compared with 41% of those who have experienced any form of harassment. Roughly six-in-ten or more adults whose most recent incident involved sustained harassment (67%), stalking (65%), physical threats (60%) or sexual harassment (58%) say their encounter took place across multiple online locations.

Those who endured multiple forms of online harassment (57%), meanwhile, are also particularly likely to say the harassment spanned multiple locations, compared with the overall share whose recent encounters occurred across multiple locations.

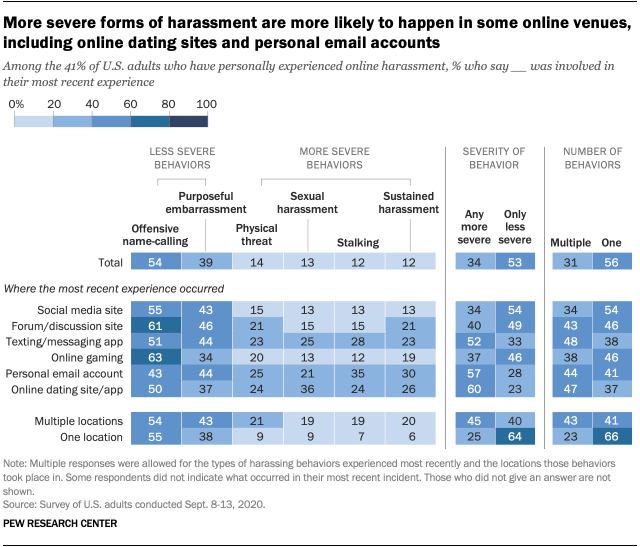

More severe harassment is more common in some online locations

Overall, the most common types of harassment across all six digital spaces we examined are those we classified as “less severe” – that is, offensive name-calling and purposeful embarrassment. For instance, 61% of those who were harassed on an online forum or discussion site in their most recent incident were met with offensive name-calling, while 46% of those harassed in this kind of venue faced purposeful embarrassment.

However, it is also the case that certain online platforms see higher shares of more severe harassing behaviors than are seen across all platforms. For example, recent incidents on dating sites (60%), in personal emails (57%) or on a texting or messaging app (52%) are especially likely to involve at least one more severe behavior.

Specifically, those who were most recently harassed on a dating app or site are about three times as likely to say this harassment was sexual, compared with the general prevalence of sexual harassment in recent encounters (36% vs. 13%). In addition, stalking, physical threats and sustained harassment on dating sites all occur at notably higher rates than those seen among recent incidents across all online platforms.

Among those who report that their most recent encounter occurred in just one of these six locations, 64% say they faced only less severe behaviors, while a quarter reported any more severe behaviors. Specifically, about one-in-ten or fewer people who had most recently experienced harassment in only one location reported facing each of these more severe behaviors. Similarly, those who were most recently harassed in only one location are about three times as likely to have faced just one type of harassing behavior rather than multiple behaviors (66% vs. 23%).
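The "about three times as likely" comparisons in this section are ratios of two reported shares. As a quick check, the sketch below recomputes them from the percentages quoted above; the function name and labels are illustrative only and do not come from the report.

```python
def times_as_likely(share_a: float, share_b: float) -> float:
    """Ratio of two percentage shares (how many times as likely A is vs. B)."""
    return share_a / share_b


# Percentages are taken from the text above; the labels are paraphrases.
comparisons = {
    "stalked via email vs. all email-based incidents": (30, 11),
    "sexual harassment on dating sites vs. all recent incidents": (36, 13),
    "one location: one behavior vs. multiple behaviors": (66, 23),
}

for label, (a, b) in comparisons.items():
    print(f"{label}: {times_as_likely(a, b):.1f}x")
```

Each ratio falls a little below 3, which is why the text says "roughly" or "about" three times.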

Note: Here are the questions used for this report, along with responses, and its methodology.


Emily A. Vogels is a former research associate focusing on internet and technology at Pew Research Center.



Social media and political violence – how to break the cycle

Richard Forno, Principal Lecturer in Computer Science and Electrical Engineering, University of Maryland, Baltimore County

Disclosure statement

Richard Forno has received research funding related to cybersecurity from the National Science Foundation (NSF) and the Department of Defense (DOD) during his academic career. He is a registered independent voter.

University of Maryland, Baltimore County provides funding as a member of The Conversation US.


The attempted assassination of Donald Trump on July 13, 2024, added more fuel to an already fiery election season. In this case, political violence was carried out against the party that is most often found espousing it. The incident shows how uncontrollable political violence can be – and how dangerous the current times are for America.

Part of the complication is the contentious and adversarial nature of American politics, of course. But technology makes it more difficult for Americans to understand sudden news developments.

Gone are the days when only a handful of media outlets reported the news to broad swaths of society after rigorous fact-checking by professional journalists.

By contrast, anyone today can “report” news online, provide what they claim is “analysis” of events, and combine fact, fiction, speculation and opinion to fit a desired narrative or political perspective.

Then that perspective is potentially made to seem legitimate by virtue of the poster’s official office, net worth, number of social media followers, or attention from mainstream news organizations seeking to fill news cycles.

And that’s before any mention of convincing deepfake audio and video clips, whose lies and misrepresentations can further sow confusion and distrust online and in society.

Today’s internet-based narratives also often involve personal attacks either directly or through inference and suggestion – what experts call “stochastic terrorism” that can motivate people to violence. Political violence is the inevitable result – and has been for years, including attacks on U.S. Rep. Gabby Giffords, former House Speaker Nancy Pelosi’s husband, Paul, the 2017 congressional baseball practice shooting, the Jan. 6, 2021 insurrection, and now the attempted assassination of a former president running for the White House again.

When bullets and conspiracies fly

As a security and internet researcher, I believe it was entirely predictable that within minutes of the attack, right-wing social media exploded with instant-reaction narratives that assigned blame to political rivals, the media, or implied that a sinister “inside job” by the federal government was behind the incident.

But it wasn’t just average internet users or prominent business magnates fanning these flames. Several Republicans issued such statements from their official social media accounts. For instance, less than an hour after the attack, Georgia Congressman Mike Collins accused President Joe Biden of “inciting an assassination” and said Biden “sent the orders.” Ohio Senator J.D. Vance, now Trump’s nominee for vice president, also implied that Biden was responsible for the attack.

The bloodied former president stood up and delayed his Secret Service evacuation for a fist-pumping photo before leaving the rally, and his campaign issued a defiant fundraising email later that evening. This led some Trump critics to suggest the incident was a “false flag” attack staged to earn a sympathetic national spotlight. Others claimed the incident fits into Trump’s ongoing messaging to supporters that he’s the victim of persecution.

From a historical perspective, it’s worth noting that Brazil’s former right-wing President Jair Bolsonaro survived an assassination attempt in 2018 and went on to become the country’s president in 2019.

It’s long been known that internet narratives, memes and content can spread around the world like wildfire well before the actual truth becomes known. Unfortunately, those narratives, whether factual or fictional, can get picked up – and thus given a degree of perceived legitimacy and further disseminated – by traditional news organizations.

Many who see such messages, amplified by both social media and traditional news services, often believe them – and some may respond with political violence or terrorism.

Can anything help?

Several threads of research show that there are some ways regular people can help break this dangerous cycle.

In the immediate aftermath of breaking news, it’s important to remember that first reports often are wrong, incomplete or inaccurate. Rather than rushing to repost things during rapidly developing news events, it’s best to avoid retweeting, reposting or otherwise amplifying online content right away. When information has been confirmed by multiple credible sources, ideally across the political spectrum, then it’s likely safe enough to believe and share.

In the longer term, as a nation and a society, it will be useful to further understand how technology and human tendencies interact. Teaching schoolchildren more about media literacy and critical thinking can help prepare future citizens to separate fact from fiction in a complex world filled with competing information.

Another potential approach is to expand civics and history lessons in school classrooms, to give students the ability to learn from the past and – we can all hope – not repeat its mistakes.

Social media companies are part of the potential solution, too. In recent years, they have disbanded teams meant to monitor content and boost users’ trust in the information available on their platforms. Recent Supreme Court rulings make clear that these companies are free to actively police their platforms for disinformation, misinformation and conspiracy theories if they wish. But companies and purported “free speech absolutists” including X owner Elon Musk, who refuse to remove controversial, though technically legal, internet content from their platforms may well endanger public safety.

Traditional media organizations bear responsibility for objectively informing the public without giving voice to unverified conspiracy theories or misinformation. Ideally, qualified guests invited to news programs will add useful facts and informed opinion to the public discourse instead of speculation. And serious news hosts will avoid the rhetorical technique of “just asking questions” or engaging in “bothsiderism” as ways to move fringe theories – often from the internet – into the news cycle, where they gain traction and amplification.

The public has a role, too.

Responsible citizens could focus on electing officials and supporting political parties that refuse to embrace conspiracy theories and personal attacks as normal strategies. Voters could make clear that they will reward politicians who focus on policy accomplishments, not their media imagery and social media follower counts.

That could, over time, deliver the message that the spectacle of modern internet political narratives generally serves no useful purpose beyond sowing social discord and degrading the ability of government to function – and potentially leading to political violence and terrorism.

Understandably, these are not instant remedies. Many of these efforts will take time – potentially even years – and money and courage to accomplish.

Until then, maybe Americans can revisit the golden rule – doing unto others what we would have them do unto us. Emphasizing facts in the news cycle, integrity in the public square, and media literacy in our schools seem like good places to start as well.


Hate speech in social media: How platforms can do better

February 17, 2022

ANN ARBOR—With all of the resources, power and influence they possess, social media platforms could and should do more to detect hate speech, says a University of Michigan researcher.

In a report from the Anti-Defamation League, Libby Hemphill, an associate research professor at U-M’s Institute for Social Research and an ADL Belfer Fellow, explores social media platforms’ shortcomings when it comes to white supremacist speech and how it differs from general or nonextremist speech, and recommends ways to improve automated hate speech identification methods.

“We also sought to determine whether and how white supremacists adapt their speech to avoid detection,” said Hemphill, who is also a professor at U-M’s School of Information. “We found that platforms often miss discussions of conspiracy theories about white genocide and Jewish power and malicious grievances against Jews and people of color. Platforms also let decorous but defamatory speech persist.”

How platforms can do better

White supremacist speech is readily detectable, Hemphill says, detailing the ways it is distinguishable from commonplace speech in social media, including:

- Frequently referencing racial and ethnic groups using plural noun forms (whites, etc.)

- Appending “white” to otherwise unmarked terms (e.g., power)

- Using less profanity than is common in social media to elude detection based on “offensive” language

- Being congruent on both extremist and mainstream platforms

- Keeping complaints and messaging consistent from year to year

- Describing Jews in racial, rather than religious, terms

“Given the identifiable linguistic markers and consistency across platforms, social media companies should be able to recognize white supremacist speech and distinguish it from general, nontoxic speech,” Hemphill said.

The research team used commonly available computing resources, existing algorithms from machine learning and dynamic topic modeling to conduct the study.

“We needed data from both extremist and mainstream platforms,” said Hemphill, noting that mainstream user data comes from Reddit and extremist website user data comes from Stormfront.
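To make the idea of "identifiable linguistic markers" concrete, here is a minimal sketch of how three of the markers listed above (plural group nouns, "white" prepended to another term, and a low profanity rate) could be turned into per-post numeric features. This is not the research team's method, which relied on machine learning and dynamic topic modeling. The word lists, the function name and the tokenizer are hypothetical placeholders chosen for illustration.

```python
import re

# Hypothetical, deliberately tiny word lists for illustration only;
# they are NOT the lexicons used in the study.
GROUP_PLURALS = {"whites", "jews"}
MILD_PROFANITY = {"damn", "hell", "crap"}

TOKEN_RE = re.compile(r"[a-z']+")


def marker_features(post: str) -> dict:
    """Return per-token rates for three of the markers described above."""
    tokens = TOKEN_RE.findall(post.lower())
    n = len(tokens) or 1  # avoid division by zero on empty posts

    # Marker: racial or ethnic groups referenced with plural nouns.
    plural_groups = sum(t in GROUP_PLURALS for t in tokens)
    # Marker: "white" directly followed by another word (e.g. "white power").
    white_prefixed = sum(1 for t in tokens[:-1] if t == "white")
    # Marker: profanity rate, expected to be LOW in this kind of speech.
    profanity = sum(t in MILD_PROFANITY for t in tokens)

    return {
        "plural_group_rate": plural_groups / n,
        "white_prefix_rate": white_prefixed / n,
        "profanity_rate": profanity / n,
    }


features = marker_features("Whites need white power now")
print(features)
```

Features like these would only be inputs to a trained classifier. On their own they would flag a great deal of harmless text, so a realistic system would still need labeled data from both mainstream and extremist sources, as the study describes.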

What should happen next?