A Step-by-Step Guide to Writing a Scientific Review Article

Manisha Bahl, A Step-by-Step Guide to Writing a Scientific Review Article, Journal of Breast Imaging , Volume 5, Issue 4, July/August 2023, Pages 480–485, https://doi.org/10.1093/jbi/wbad028

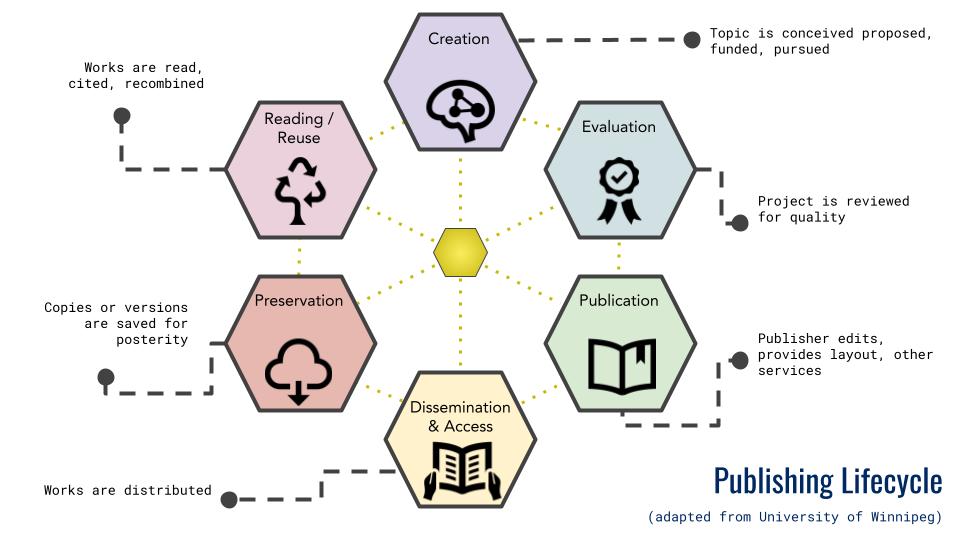

- Permissions Icon Permissions

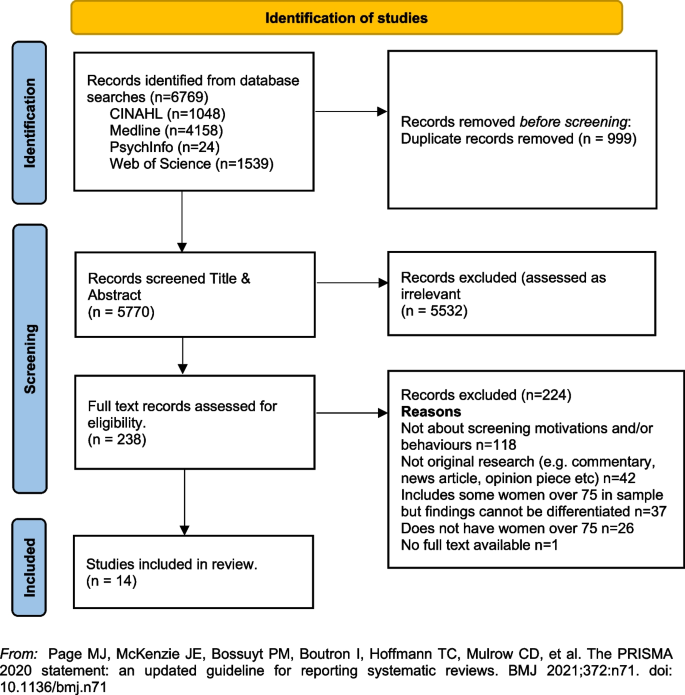

Scientific review articles are comprehensive, focused reviews of the scientific literature written by subject matter experts. The task of writing a scientific review article can seem overwhelming; however, it can be managed by using an organized approach and devoting sufficient time to the process. The process involves selecting a topic about which the authors are knowledgeable and enthusiastic, conducting a literature search and critical analysis of the literature, and writing the article, which is composed of an abstract, introduction, body, and conclusion, with accompanying tables and figures. This article, which focuses on the narrative or traditional literature review, is intended to serve as a guide with practical steps for new writers. Tips for success are also discussed, including selecting a focused topic, maintaining objectivity and balance while writing, avoiding tedious data presentation in a laundry list format, moving from descriptions of the literature to critical analysis, avoiding simplistic conclusions, and budgeting time for the overall process.

- Online ISSN 2631-6129

- Print ISSN 2631-6110

- Copyright © 2024 Society of Breast Imaging

A comprehensive analysis of the journal evaluation system in China

Handling Editor: Liying Yang

Ying Huang , Ruinan Li , Lin Zhang , Gunnar Sivertsen; A comprehensive analysis of the journal evaluation system in China. Quantitative Science Studies 2021; 2 (1): 300–326. doi: https://doi.org/10.1162/qss_a_00103

Journal evaluation systems reflect how new insights are critically reviewed and published, and the prestige and impact of a discipline’s journals are key metrics in many research assessment, performance evaluation, and funding systems. With the expansion of China’s research and innovation systems and its rise as a major contributor to global innovation, journal evaluation has become an especially important issue. In this paper, we first describe the history and background of journal evaluation in China and then systematically introduce and compare the currently most influential journal lists and indexing services. These are the Chinese Science Citation Database (CSCD), the Journal Partition Table (JPT), the AMI Comprehensive Evaluation Report (AMI), the Chinese S&T Journal Citation Report (CJCR), “A Guide to the Core Journals of China” (GCJC), the Chinese Social Sciences Citation Index (CSSCI), and the World Academic Journal Clout Index (WAJCI). Some other influential lists produced by government agencies, professional associations, and universities are also briefly introduced. Through the lens of these systems, we provide comprehensive coverage of the tradition and landscape of the journal evaluation system in China and of the methods and practices of journal evaluation in China, with some comparisons to how other countries assess and rank journals.

1. INTRODUCTION

China is among the many countries where the career prospects of researchers, in part, depend on the journals in which they publish. Knowledge of which journals are considered prestigious and which are of dubious quality is critical to the scientific community for assessing the standing of a research institution, tenure decisions, grant funding, performance evaluations, etc.

The process of journal evaluation dates back to Gross and Gross (1927), who postulated that the number of citations one journal receives over another similar journal suggests something about its importance to the field. Shortly after, the British mathematician, librarian, and documentalist Samuel C. Bradford published his study on publications in geophysics and lubrication. The paper presented the concept of “core-area journals” and an empirical law that would, by 1948, become Bradford’s well-known law of scattering (Bradford, 1934, 1984). In turn, Bradford influenced Eugene Garfield of the United States, who subsequently published a groundbreaking paper on citation indexing called “Citation Indexes for Science: A New Dimension in Documentation through Association of Ideas.” According to Garfield (1955), “the citation index … may help a historian to measure the influence of an article—that is, its ‘impact factor’.” In the 1960s, Garfield conducted a large-scale statistical analysis of citations in the literature, reaching the conclusion that many citations were concentrated in just a few journals and the many remaining journals only accounted for a few citations (Garfield, 1963, 1964). Garfield went on to create the Institute for Scientific Information (ISI), which successively published the Science Citation Index (SCI), the Social Sciences Citation Index (SSCI), and the Arts & Humanities Citation Index (A&HCI) databases.

Assessing the quality of published research output is important in all contexts where research assessment takes place, for example, when evaluating the success of research projects or when distributing research funding (Su, Shang et al., 2017). As part of the assessment, evaluating and ranking the quality of the journals where the output was published has become increasingly important (Mingers & Yang, 2017). Journal evaluation and rankings are used by governments, organizations, universities, schools, and departments to evaluate the quality and quantity of faculty research productivity, for purposes ranging from promotion and tenure to monetary rewards (Black, Stainbank et al., 2017). Even though the merit of using such a system is not universally agreed upon (Dobson, 2014), and is sometimes even contested (Zhang, Rousseau, & Sivertsen, 2017), it is widely believed that the rank or citation impact of a journal reflects its prestige, its influence, and even the difficulty of having a paper accepted for publication (Su et al., 2017).

Over the past few years, the number of papers published in international journals by Chinese researchers has seen a dramatic increase, to the point that, today, China is the largest contributor to international journals covered by Web of Science (WoS) and Scopus. In tandem, government policies and guidance, especially the call to “publish your best work in your motherland to benefit local society,” proposed by President Xi in 2016, are seeing more and more papers published in China’s domestic journals. Therefore, with these increases in the number of papers and journals, it will be an important task to explore the strengths and weaknesses of various methods for evaluating journals as well as the types of ranking systems that may be suitable for China’s national conditions.

The journal evaluation system in China was established gradually, beginning with the introduction of Western journal evaluation theories about 60 years ago. Over the last 30 years, in particular, these foreign theories have been adopted, adapted, researched, and vigorously redeveloped. In the past, journal evaluation and selection results were mainly used to help librarians develop their collections and to help readers better identify a discipline’s core journals. However, in recent years, the results of journal evaluation and ranking have increasingly been applied to scientific research evaluation and management (i.e., in tenure decisions, grant funding, and performance evaluations) (Shu, Quan et al., 2020). Many institutions are increasingly relying on journal impact factors (JIFs) to evaluate papers and researchers. This is commonly referred to in China as “evaluating a paper based on the journal and ranking list” (以刊评文, Yi Kan Ping Wen). The higher the journal’s rank and JIF, the higher the expected outcome of evaluations.

In the ever-changing environment of scientific research evaluation, the research and practice of journal evaluation in China is also evolving to meet different needs. Many influential journal evaluations and indexing systems have been established since the 1990s, with their evaluation methods and standards becoming increasingly mature. These activities have played a positive role in promoting the development of scientific research and have also been helpful for improving the quality of academic journals.

The aim of this study is to review the progress of journal evaluation in China and present a comprehensive analysis of the current state of the art. Hence, the main body of this article is a comparative analysis of the journal lists that are most influential in China’s academic landscape. The results not only offer a deeper understanding of China’s journal evaluation methods and practices but also reveal some insights into the journal evaluation activities of other countries. Overall, our aim is to make a valuable contribution to improving the theory and practice of journal evaluation and to promote the sustainable and healthy development of journal management and evaluation systems, both in China and abroad.

2. JOURNAL EVALUATION IN CHINA

2.1. A Brief History

Journal evaluation in China dates back to the 1960s, with some fairly distinct stages during its development. Qiyu Zhang and Enguang Wang first introduced the Science Citation Index (SCI) to Chinese readers in 1964 ( Zhang, 2015 ). In 1973, Erzhong Wu introduced a core journal list for chemistry. This was the first mention of the concept of a “core journal” ( Wu, 1973 ). In 1982, Liansheng Meng finished his Master’s thesis entitled “Chinese science citation analysis” ( Meng, 1982 ), and then, in 1989, he built the Chinese Science Citation Index (CSCI), now called the Chinese Science Citation Database (CSCD), with the support of the Documentation and Information Center of the Chinese Academy of Sciences. At this stage of development, international journal evaluation practices were simply applied to the Chinese context almost without making any changes to the underlying methodologies. At the same time, exploring bibliometric laws and potential applications became an important topic for researchers in library and information science.

In 1988, Jing and Xian used the “citation method” to identify a list of “Chinese Natural Science Core Journals,” which included 104 core Chinese journals in the natural sciences. This is now typically recognized as the first Chinese journal list (Jing & Xian, 1988). Around that same time, some institutions began to undertake journal evaluation activities. For example, in 1987, the Institute of Scientific and Technical Information of China (ISTIC), commissioned by the Ministry of Science and Technology (formerly the National Scientific and Technological Commission), began to analyze publications in the SCI, the Index to Scientific Reviews (ISR), and the Index to Scientific & Technical Proceedings (ISTP), and in 1989 it began selecting domestic scientific journals for analysis. During this process, 1,189 journals were selected from 3,025 scientific journals nationwide as statistical source journals, a selection that has been adjusted annually ever since (Qian, 2006). Hence, this second stage of development saw the beginnings of adapting international evaluation systems and approaches to local journals, and some institutions building their own citation and bibliographic indexes.

From the 1990s onwards, journal evaluation activities moved on to rapid development with equal emphasis on theoretical research and practical applications. On the theoretical side, bibliometric researchers and information scientists were engaged in developing more advanced evaluation methods and better indicators. The theories and methods of journal evaluation spread from the natural sciences to the social sciences and humanities (SSH). In terms of practical applications, more and more researchers in the library and information science fields began to depart from individual research agendas and move into joint working groups and professional evaluation institutions to promote journal evaluation practices. The number of journal lists burgeoned as well. Some combined “quantitative” methods and “qualitative” approaches, such as "A Guide to the Core Journals of China" (GCJC) by the Peking University Library and the Chinese Social Sciences Citation Index (CSSCI) from the China Social Sciences Research Center of Nanjing University. Others were proposed by joint working groups and research institutions, and these lists began to be used to support scientific research evaluation and management.

On the whole, these advances in methods and standards played a positive role in promoting the quality of academic journals. However, over time, JIFs have tended to become a proxy for the quality of the papers and authors published within their pages (i.e., “evaluating a paper based on the journal and ranking list” [以刊评文, Yi Kan Ping Wen ]). This phenomenon has been causing a wide debate nationwide, with many calling for papers to be judged by their content, not by their wrapping ( Zhang et al., 2017 ). In this regard, the number of solutions proposed to improve the standards of journal selection and to avoid improper or even misleading use continues to multiply.

2.2. Motivations for Performing Journal Evaluation in China

Advances in science and technology and the rapid growth of scientific research have brought a change in the way that journals are evaluated. Initially, the assessments were reader oriented, serving as a guide for journal audiences to understand research trends and developments in the various disciplines. Later, greater focus was placed on the needs of libraries and other organizations. English core journals were translated into Chinese and introduced to China to ensure better use of the most valuable journals with limited funds and to optimize the journal collections of China’s libraries.

However, with the rapid development of information network technology and the popularization of reading on screen, electronic journal databases are having an unprecedented impact on journal subscriptions. Further, early use of journal evaluation systems by pioneering institutions has spread beyond the library and information science community. Today, journal evaluations are inextricably tied to many aspects of assessing research performance.

The Journal Citation Reports (JCR), an annual publication by Clarivate, contains a relatively transparent data set for calculating JIFs and citation-based performance metrics at the article and the journal level. Further, JCR clearly outlines a network of references that represent the journal’s voice in the global scholarly dialog, highlighting the institutional and international players who are part of the journal’s community. However, many journals selected for inclusion in JCR are from English-speaking countries, as shown in Table 1. To fulfill a growing demand to extend the universe of journals in JCR, the WoS platform launched the Emerging Sources Citation Index (ESCI) in November 2015. However, ESCI has also done very little to promote journals from non-English-speaking countries and regions (Huang, Zhu et al., 2017). Although English is the working language of the international scientific community, for many reasons, it is not a wise choice for researchers to publish their scholarly contributions only in English. Building domestic evaluation systems is therefore necessary for fostering domestic collaborations, appropriately evaluating research performance, and keeping up with research trends close to home.

Table 1. The top 10 countries with the highest journal numbers in JCR 2019

Data Source: Web of Science Group (2019) .

Note: Some journals in the portfolio of international publishers have no genuine national affiliation.

Moreover, China’s economy is growing rapidly and, along with it, the country’s scientific activity is also flourishing. As Figure 1 shows, China’s scientific research inputs and outputs have consistently increased over the past few decades, with its output exceeding that of the United States in 2019 to make China the most productive country in the world. With such a large number of papers, the work of researchers cannot be assessed without shortcuts. Thus, for want of a better system, the quality of the journals in which a researcher’s papers are published has become a proxy for the quality of the researchers themselves, and ways to define “core journals” and to select indexed journals have attracted wide attention, especially from the Chinese government.

Figure 1. The 10 countries with the largest number of publications in WoS (1975–2019). Note: Indexes = SCIE, SSCI, A&HCI; Document types = article, review.

Furthermore, national policies, such as those listed in Table 2, are now playing a vital role in these evaluation activities. Early in China’s history of journal evaluation, the policies implemented were designed to support the development of some influential journals across the natural and social sciences. More recently, however, the government’s policies have sought to reverse the excessive emphasis that has come to be placed on the volume of a researcher’s output and the JIF of their venues (Zhang & Sivertsen, 2020). Research institutions and universities are now being encouraged to adopt a more comprehensive evaluation method that combines qualitative and quantitative methods and pays more attention to the quality, contribution, and impact of a researcher’s masterpiece. Hence, more indicators are being taken into account and, upon these, a culture more conducive to exploration is being established that does not prioritize SCI/SSCI/A&HCI journals to the exclusion of all else.

Table 2. Selected policies related to journal evaluation in China

Note: Ministry of Education of the People’s Republic of China (MOE); China Association for Science and Technology (CAST); State Administration of Press, Publication, Radio, Film and Television of the People’s Republic of China (SAPPRFT), renamed the National Radio and Television Administration of the People’s Republic of China (NRTA) in 2018; Chinese Academy of Sciences (CAS); Chinese Academy of Engineering (CAE); General Office of the State Council of the People’s Republic of China (GOSC); Ministry of Science and Technology of the People’s Republic of China (MOST); Communist Party of China (CPC).

3. THE LEADING JOURNAL EVALUATION SYSTEMS OF ACADEMIC JOURNALS IN CHINA

Through these three stages of development, multiple institutions in China have established comprehensive journal evaluation systems that combine quantitative and qualitative methods and a variety of different indicators, many of which have had a significant influence on scientific research activities. Hence, what follows is a comparison of the current journal indexes in China. These are the CSCD and the Journal Partition Table (JPT) from the National Science Library, Chinese Academy of Sciences (NSLC); the AMI journal list from the Chinese Academy of Social Sciences Evaluation Studies (CASSES); the Chinese S&T Journal Citation Report (CJCR) from ISTIC; “A Guide to the Core Journals of China” (GCJC) from Peking University Library; CSSCI from the Institute for Chinese Social Science Research and Assessment (ICSSRA) of Nanjing University; and the World Academic Journal Clout Index (WAJCI) from the China National Knowledge Infrastructure (CNKI). In addition, some other influential lists produced by government agencies, professional associations, and universities are also briefly discussed.

3.1. NSLC: CSCD Journal List

3.1.1. Background

The CSCD was established in 1989 by the National Science Library of CAS, with the aim of disseminating excellent scientific research achievements in China and helping scientists to discover information. This database covers more than 1,000 of the top academic journals in the areas of engineering, medicine, mathematics, physics, chemistry, the life and earth sciences, agricultural science, industrial technology, the environmental sciences, and so on ( National Science Library of CAS, 2019b ). Since its inception, the CSCD has amassed 5.5 million articles and 80.5 million citation records. As the first Chinese citation database, the CSCD published the first printed book of journals in 1995 and the first retrieval CD-ROM in 1998, followed by an online version in 2003. In 1999, it launched the “CSCD ESI Annual Report” and in 2005 the “CSCD JCR Annual Report”, which are similar to the ESI and JCR and very well known across China. However, perhaps the most notable feature of CSCD is its cooperation with Clarivate Analytics (formerly, Thomson-Reuters) in 2007 to offer a cross-database search with the WoS, giving rise to the first-ever database of non-English-language journals.

CSCD provides information discovery services for analyzing China from the perspective of the world and analyzing the world from the perspective of China. Therefore, it is widely used by research institutes and universities for subject searches, funding support, project evaluations, declaring achievements, talent selection, literature measurement, and evaluation research. It is also an authoritative document retrieval tool ( Jin & Wang, 1999 ). Jin, Zhang et al. (2002) and Rousseau, Jin, and Yang (2001) both provide relatively thorough explorations and discussions of this journal list.

3.1.2. Journal selection criteria

The CSCD journal list is updated every 2 years, using both quantitative and qualitative methods. The most recent report (2019–2020) was released in April 2019 and listed 1,229 source journals in total: 228 English journals published in China and 1,001 Chinese journals. The selection criteria are summarized below ( National Science Library of CAS, 2019a ).

3.1.2.1. Journal scope

The journal must be published in either Chinese or English in China, with both an International Standard Serial Number (ISSN) and a China Domestic Uniform Serial Publication Number (CN). The subject coverage includes mathematics, physics, chemistry, earth science, biological science, agricultural science, medicine and health, engineering technology, environmental science, interdisciplinary disciplines, and some other similar subject areas.

3.1.2.2. Research fields

The research fields are mainly derived from the Level 1 and 2 classes of the 5th Chinese Library Classification (CLC). However, the Level 2 classes might be further subdivided based on the coupling strength between the citations and semantic similarity of articles published in the corresponding journal set. In the most recent edition, there are 61 research fields. To avoid the possible bias of subjectively allocating journals to fields, classifications are based on cross-citation relationships, and any journal can be classified into more than one field.

3.1.2.3. Evaluation indicators

To ensure fairness to all candidate journals, journal self-citations are excluded. The quantitative indicators used to measure different aspects of a journal’s quality are shown in Table 3.

Table 3. Quantitative indicators of the CSCD journal list

3.2. NSLC: JPT Journal List

3.2.1. Background

The JPT was built and is maintained by the Centre of Scientometrics, NSLC. The idea behind the partitioned design of this list began in 2000 with the goal of helping Chinese researchers distinguish between the JIFs of journals across different disciplines. The list was first released in 2004 in Excel format and only included 13 broad research areas. In 2007, these research areas were expanded to include the JCR categories, and, since 2012, the entire list has been published online to meet the growing number of retrieval requests.

This list provides reference data for administrators and researchers to evaluate the influence of international academic journals, and is widely recognized by many research institutions as a metric in their cash reward policies ( Quan, Chen, & Shu, 2017 ).

In 2019, the NSLC released a trial variation of the list while continuing to publish the official version. The upgraded JPT (trial) includes 11,930 journals classified into 18 major disciplines. These cover most of the journals indexed in SCI and SSCI, as well as the ESCI journals published in China.

3.2.2. Journal selection criteria

Journals on the list are assessed using a rich array of citation metrics, including 3-year average JIFs. The list is divided into four partitions according to the 3-year average JIFs by research areas/fields. Using averages somewhat reduces any instability caused by significant annual fluctuations in JIFs. The partitions follow a pyramidal distribution. The top partition contains the top 5% of journals with the highest 3-year average JIFs in their discipline. Partition 2 covers 6%–20% and partition 3 covers 21%–50%, with the remaining journals in the fourth partition. Additionally, all the journals in the first partition and the top 10% of the journals in the second partition with the highest total citations (TC) are marked as “Top Journals.”
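The partition rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the NSLC's implementation: the `Journal` class, the function names, the rank-based percentile cut-offs, the handling of ties, and the decision to round the 10% share up are all hypothetical choices made for the example. Only the 5%/20%/50% boundaries and the "Top Journals" rule come from the text, and the sketch assumes that every journal passed in belongs to the same research field.

```python
import math
from dataclasses import dataclass


@dataclass
class Journal:
    name: str
    jif_by_year: list[float]  # the three most recent annual JIFs (assumed input)
    total_citations: int      # TC, used only for the "Top Journals" rule


def three_year_average_jif(journal: Journal) -> float:
    """Average the annual JIFs to dampen year-to-year fluctuations."""
    return sum(journal.jif_by_year) / len(journal.jif_by_year)


def partition_for_rank(rank: int, n: int) -> int:
    """Map a 1-based rank among n journals to partition 1-4.

    Integer comparisons avoid floating-point edge cases at the boundaries.
    """
    if rank * 100 <= 5 * n:
        return 1  # top 5%
    if rank * 100 <= 20 * n:
        return 2  # 6%-20%
    if rank * 100 <= 50 * n:
        return 3  # 21%-50%
    return 4      # remaining journals


def assign_partitions(journals: list[Journal]) -> dict[str, int]:
    """Rank journals of one field by 3-year average JIF and assign partitions."""
    ranked = sorted(journals, key=three_year_average_jif, reverse=True)
    n = len(ranked)
    return {j.name: partition_for_rank(i + 1, n) for i, j in enumerate(ranked)}


def top_journals(journals: list[Journal], partitions: dict[str, int]) -> set[str]:
    """All partition-1 journals plus the top 10% of partition 2 by total citations."""
    first = {j.name for j in journals if partitions[j.name] == 1}
    second = sorted(
        (j for j in journals if partitions[j.name] == 2),
        key=lambda j: j.total_citations,
        reverse=True,
    )
    k = math.ceil(0.10 * len(second))  # rounding up is an assumption
    return first | {j.name for j in second[:k]}
```

Averaging over three years before ranking is what gives the list its stability: a single unusually good or bad year moves a journal's average by only a third of the swing in its annual JIF.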

In the 2019 edition, multidisciplinary journals, such as Nature and Science , were ranked according to the average impact of each paper in an assigned discipline as determined by the majority of references given in the paper ( Research Services Group at Clarivate, 2019 ). That said, the papers in these journals are counted as multidisciplinary, despite the fact that many of them are highly specialized and represent research in specific fields, such as immunology, physics, neuroscience, etc.

Compared to the official version, the trial version has incorporated several essential updates ( Centre of Scientometrics of NSLC, 2020 ). First, the journals are classified based on the average impact of each paper published in the journal, and the papers are assigned to specific topics according to both citation relationship and text similarity ( Waltman & van Eck, 2012 ). Second, this version introduces a citation success index ( Franceschini, Galetto et al., 2012 ; Kosmulski, 2011 ) to replace JIFs as a measure of a journal’s impact. The citation success index of the target journal compared with the reference journal is defined as the probability of the citation count of a randomly selected paper from the target journal being larger than that of a random paper from a reference journal ( Milojević, Radicchi, & Bar-Ilan, 2017 ). Third, it extends the coverage of disciplines from the natural sciences into the social sciences to support the internationalization process of domestic titles. More specifically, coverage is extended to some local journals that are not listed in the JCR but are listed in the ESCI.
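The definition of the citation success index given above translates directly into a pairwise comparison of citation counts. The following Python sketch is illustrative only: the function name and the plain lists of per-paper citation counts are assumptions, and ties are counted as "not larger", following the strict wording of the definition (other conventions for ties exist and would change the result).

```python
from bisect import bisect_left


def citation_success_index(target: list[int], reference: list[int]) -> float:
    """Probability that a random target-journal paper has strictly more
    citations than a random reference-journal paper.

    Each argument is a list of per-paper citation counts (assumed input).
    """
    if not target or not reference:
        raise ValueError("both journals need at least one paper")
    ref_sorted = sorted(reference)
    # bisect_left returns how many reference papers have strictly fewer
    # citations than c, i.e. how many pairings the target paper "wins".
    wins = sum(bisect_left(ref_sorted, c) for c in target)
    return wins / (len(target) * len(reference))


if __name__ == "__main__":
    # Hypothetical citation counts for two small journals.
    print(citation_success_index([10, 3, 0], [1, 3, 5]))
```

Because the index depends only on which paper in each pair is cited more, a single very highly cited paper cannot dominate it the way it can dominate a mean-based measure such as the JIF.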

The initial purpose of the list was to evaluate the academic influence of SCIE journals, to provide academic submission references for scientific researchers, and to support macro analysis for research management departments. Although the Centre of Scientometrics, NSLC, has consistently stated that the list should not be used to make judgments at the micro level (e.g., to evaluate the performance of an individual), many institutions still use the JCR as a tool to evaluate the research of their employees. The list’s prominent position and strong influence in China’s scientific research evaluation has caused extensive debate, especially in the field of nuclear physics in 2018 ( Wang, 2018 ).

3.3. CASSES: AMI Journal List

3.3.1. Background

The AMI journal list is managed by the Chinese Academy of Social Sciences Evaluation Studies (CASSES), which was established in July 2017 out of the Centre of Social Sciences Evaluation, Chinese Academy of Social Sciences (CASS). CASSES has developed a series of journal evaluation systems for Chinese journals, based on the characteristics of disciplines and journals, to form a comprehensive evaluation report of Chinese journals in the SSH. CASSES’ mandate is to optimize the utilization of scientific research journals and literature resources, as well as to provide references for journal evaluation, scientific research performance evaluation, scientific research management, talent selection, etc. (Ma, 2016). The purpose of AMI is to focus on formative evaluation “to help and improve” rather than to perform a summative evaluation “to judge” a journal’s quality. Another goal is to increase recognition of journals in the SSH by having institutions collaborate nationally, rather than compete, to support good journals. The basic principle of AMI is to provide well-informed judgments about journals, not simple indicators, that translate into reliable advice on where to publish.

CASSES also provides evaluations of both new journals and English-language journals published in China to promote their development. New journals are defined as those less than 5 years old. At present, no other domestic evaluation scheme has undertaken a similar expansion, which makes it one of the innovations of this index.

3.3.2. Journal selection criteria

The AMI journal list is updated every 4 years, and its comprehensive evaluation method combines quantitative evaluation with expert qualitative evaluation. The latest report (2018) evaluated 1,291 SSH academic journals published in mainland China and founded in 2012 or before, and gave particular evaluation to 164 new journals and 68 English-language journals. The report divides the journals into five categories: Top Journals (5), Authoritative Journals (56), Core Journals (546), Extended Journals (711), and Indexed Journals (179) (CASSES, 2018). A further 26 English-language journals without a CN were not evaluated.

The selection criteria for inclusion in the list are summarized below ( CASSES, 2018 ; Su, 2019 ):

3.3.2.1. Journal scope

The journals in the AMI list include some 2,000 SSH journals listed by the former State Administration of Press, Publication, Radio, Film and Television of the People’s Republic of China in 2014 and 2017 (SAPPRFT, 2014, 2017). The lists include English-language journals and new journals that were founded in 2013–2017, and the final scope of journal evaluation is 1,291 Chinese academic journals, 164 new journals, and 68 English-language journals.

3.3.2.2. Research fields

The journals are divided into three broad subject categories, 23 subject categories, and 33 subject subcategories based on the university degree and academic training directory published by the Ministry of Education of the People’s Republic of China, the Classification and Code of Disciplines (GB/T 13745-2009), and the Chinese Library Classification (fifth edition).

3.3.2.3. Evaluation indicators

There are three evaluation metrics: attraction, management power, and influence. Attraction gauges the journal’s external environment, its reputation among readers and researchers, and its ability to acquire external resources. Management power refers to the ability of the editorial team to promote the journal’s development. Influence represents the journal’s academic, social, and international influence, which is affected by the other two powers (attraction and management).

In addition to these three indicators, there are a further 10 second-level indicators and 24 third-level indicators, as shown in Table 4 . Looking closely at the list, one can see that most of the quantitative indicators can be obtained from different data sources (e.g., the journal’s website, academic news sources, citation platforms). Data to inform the remaining qualitative indicators is drawn from a broad survey and follow-up interviews. Note that the weights of the first-level indicators for pure humanities journals (H) versus the social sciences (SS) and multidisciplinary journals (MJ) are different, as indicated in the table by H/SS/MJ.

Table 4. Quantitative indicators of the AMI journal list

Note: The indicator type S means measurements add to the total score; O means measurements will reduce the total score; N means measurements do not affect the score in the current edition.

This refers to the proportion of papers that are funded by national funds in a journal.

This indicator is a point deduction indicator. If there is no academic misconduct, the score is “0”; if there is such behavior, points are deducted.
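The indicator types in the table note (S adds to the total, O deducts from it, N is not scored in the current edition) can be illustrated with a short sketch. The indicator names and point values below are hypothetical and are not taken from the AMI report; the sketch also leaves out the H/SS/MJ weighting of the first-level indicators.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    name: str      # hypothetical label, for illustration only
    kind: str      # "S" adds to the score, "O" deducts, "N" is not scored
    points: float  # non-negative magnitude awarded or deducted


def total_score(indicators):
    """Add S-type points, subtract O-type points, and skip N-type indicators."""
    score = 0.0
    for ind in indicators:
        if ind.kind == "S":
            score += ind.points
        elif ind.kind == "O":
            score -= ind.points
        elif ind.kind != "N":
            raise ValueError(f"unknown indicator type: {ind.kind!r}")
    return score


# Made-up values: two scoring indicators, one deduction, one unscored indicator.
example = [
    Indicator("peer reputation", "S", 12.0),
    Indicator("share of nationally funded papers", "S", 3.5),
    Indicator("academic misconduct", "O", 2.0),
    Indicator("indicator under trial", "N", 4.0),
]
print(total_score(example))  # 13.5
```

A journal with no recorded misconduct would simply carry 0 points on the O-type indicator, so nothing is deducted.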

3.4. ISTIC: CJCR Journal List

3.4.1. Background

As late as 1987, few Chinese knew how many papers were published by Chinese scientists in the world, and no one knew how many papers were published domestically. As a result, the Institute of Science and Technical Information of China (ISTIC) was commissioned to conduct a paper “census.” Thus, initiated by ISTIC and sponsored by the Ministry of Science and Technology (then the State Science and Technology Commission), the China Scientific and Technical Papers and Citations Database (CSTPCD) was born as a database dedicated to the partial evaluation of the research performance of China’s scientists and engineers ( Wu, Pan et al., 2004 ).

ISTIC took advantage of the CSTPCD data to conduct statistical analyses on various categories of China’s scientific output each year. The results were then published in the form of an annual report and an accompanying press conference to inform society of China’s academic progress. The report includes the Chinese S&T Papers Statistics and Analysis: Annual Research Report and the Chinese S&T Journal Citation Reports (Core Edition), which provides a wealth of information and decision support for government administration departments, colleges and universities, research institutions, and researchers ( ISTIC, 2020a ).

3.4.2. Journal selection criteria

The list of journals selected by CSTPCD is called the “Statistical Source Journal of Chinese Science and Technology.” These journals are selected through rigorous peer review and quantitative evaluation, and so are regarded as important scientific and technical journals in various disciplines in China. Currently, the list includes 2049 journals (1933 Chinese-language journals and 116 English-language journals) in the fields of natural sciences, engineering and technology, and 395 journals in the social sciences ( ISTIC, 2020b ). More details on the selection criteria are provided below ( ISTIC, 2020a ).

3.4.2.1. Journal scope

The catalog of China’s core S&T journals is adjusted once a year. The candidate journals to be evaluated include the core S&T journals selected in the previous year, along with new applicants for the current year that have held a CN number for more than 2 years. Further, a journal’s impact indicators must rank at the forefront of its discipline; the journal should operate in line with national regulations and academic publishing norms; and it must meet publishing integrity and ethical requirements. If a journal fails to meet these criteria or its peer assessment, its application is rejected or, if the journal is already listed in the catalog, it is withdrawn and can be reevaluated 1 year later.

3.4.2.2. Research fields

The journals are distributed across 112 subject classifications in the natural sciences and 40 in the social sciences.

3.4.2.3. Evaluation indicators

The evaluation system is based on multiple indexes, mostly bibliometric, and a combination of quantitative and qualitative methods. Specific indexes include citation frequency, JIF, important database collection, and overall evaluation score ( Ma, 2019 ).

3.5. Peking University Library: GCJC Journal List

3.5.1. Background

The GCJC is a research project conducted by researchers at the Peking University Library in conjunction with a dozen other university libraries and experts from other institutions. The guide is regularly updated to reflect the changing dynamics of journal development: it was published every 4 years from 1992 and has been published every 3 years since 2011. It is published only as a printed book, and to date eight editions have been published by Peking University Press.

Whether and how the guide is used is up to the institutions that make use of it. It is worth noting that the guide is not an evaluation criterion for academic research and has no legal or administrative effectiveness, but some institutions do use it this way, which can create conflict. The selection principles emphasize that core journals are a relative concept to specific disciplines and periods. For the most part, the guide is used by library intelligence departments as an informational reference to purchase and reserve books, and to help tutors develop reading lists ( Committee for A Guide to the Core Journals of China, 2018 ).

3.5.2. Journal selection criteria

The 2017 edition of GCJC contains 1983 core journals assigned to seven categories and 78 disciplines. The selection criteria are provided below ( Chen, Zhu et al., 2018 ).

3.5.2.1. Journal scope

Any Chinese journal published in mainland China can be a candidate.

3.5.2.2. Research fields

Fields are based on the CLC categories of Philosophy, Sociology, Politics, and Law (Part 1); Economy (Part 2); Culture, Education and History (Part 3); Natural Science (Part 4); Medicine and Health (Part 5); Agricultural Science (Part 6); and Industrial Technology (Part 7).

3.5.2.3. Evaluation indicators

Comprehensive quantitative and qualitative analysis of 16 evaluation indicators, combined with the opinions of experts and scholars, is the basis of selection, as shown in Table 5 .

Table 5. Quantitative indicators of the GCJC journal list

3.6. ICSSRA: CSSCI Journal List

3.6.1. Background

CSSCI was developed by Nanjing University in 1997 and launched in 2000. CSSCI collects all source and citation information from all papers in source journals and source collections (published as one volume). The index records in CSSCI contain most of the bibliographic information in the papers. The content is normative, and the reference data are searchable ( Qiu & Lou, 2014 ).

CSSCI focuses on the social sciences in China, and its data are gathered to provide an efficient repository of information about Chinese knowledge innovation and cutting-edge research in the SSH, coupled with a comprehensive evaluation of China’s academic influence in these areas ( Su, Deng, & Shen, 2012 ; Su, Han, & Han, 2001 ). The journal data in CSSCI provide a wealth of raw information and statistics for researchers and institutions to study or to conduct evaluations based on authentic records of research output and citations.

3.6.2. Journal selection criteria

Through quantitative and qualitative evaluation methods, the 2019–2020 edition of CSSCI contains 568 core source journals and 214 extended source journals assigned among 25 disciplines ( ICSSRA, 2019 ). Extended source journals are evaluated and those that qualify are transferred to the core source journal list. The selection criteria are summarized below ( CSSCI Editorial Department, 2018 ; ICSSRA, 2019 ).

3.6.2.1. Journal scope

The journals must be Chinese and publish mainly original academic articles and reviews in the social sciences.

Journals published in mainland China must have a CN number. Journals published in Hong Kong, Macao, or Taiwan must have an ISSN, and academic collections must have an ISBN.

Journals must be published regularly according to an established publishing cycle and must conform to the standards of journal editing and publication with complete and standardized bibliographic information.

3.6.2.2. Research fields

Each article in the CSSCI database is categorized according to the Classification and Code of Discipline (GB/T 13745-2009) with reference to the Catalogue of Degree Awarding and Personnel Training (2011) (degree [2011] No. 11) and the Subject Classification Catalogue of National Social Science Foundation in China. At present, there are 23 journal categories based on subject classification, and two general journal categories: multidisciplinary university journals and multidisciplinary social science journals.

3.6.2.3. Evaluation indicators

The source journals of CSSCI are determined according to their 2-year JIF (excluding self-citations), total times cited, other quantitative indicators, and the opinions of experts from various disciplines.
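As an illustration of the first indicator, the sketch below computes a 2-year JIF that excludes journal self-citations, using the conventional definition: citations received in a given year to items published in the two preceding years, divided by the number of citable items published in those two years. The record layout and journal names are hypothetical, and the exact counting rules used by CSSCI are not specified in this text.

```python
def two_year_jif(citations, citable_items, journal, year):
    """2-year JIF for `journal` in `year`, excluding journal self-citations.

    citations: iterable of (citing_journal, cited_journal, citing_year, cited_year)
    citable_items: dict mapping (journal, publication_year) -> number of citable items
    """
    window = (year - 1, year - 2)
    cites = sum(
        1
        for citing, cited, citing_year, cited_year in citations
        if cited == journal
        and citing != journal      # drop journal self-citations
        and citing_year == year    # only citations made in the target year
        and cited_year in window   # only to items from the two preceding years
    )
    items = sum(citable_items.get((journal, y), 0) for y in window)
    return cites / items if items else 0.0


# Made-up records for a hypothetical journal "A".
records = [
    ("B", "A", 2019, 2018),  # counted
    ("C", "A", 2019, 2017),  # counted
    ("A", "A", 2019, 2018),  # self-citation, excluded
    ("B", "A", 2019, 2015),  # cited item is outside the 2-year window
    ("B", "A", 2018, 2017),  # citation was made in the wrong year
]
items = {("A", 2018): 2, ("A", 2017): 2}
print(two_year_jif(records, items, "A", 2019))  # 0.5
```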

3.7. CNKI: WAJCI Journal List

3.7.1. Background

The China National Knowledge Infrastructure (CNKI) is the largest comprehensive database in China. It is a key national information project led by Tsinghua University, first launched in 1996 in conjunction with the Tsinghua Tongfang Company. In 1999, CNKI began to develop online databases. In October 2009, it unveiled the construction of an international digital library together with world-famous foreign partners. At present, CNKI contains literature published since 1915 in over 7,000 academic journals published in China, including nearly 2,700 core and other significant journals. The database is divided into 10 series, 168 subjects, and 3,600 subsubjects ( CNKI, 2020 ). When a Chinese scholar wants to read a paper, they typically go to CNKI as the first port of call.

Since 2009, CNKI has invested in and managed the “International and Domestic Influence of Chinese Academic Journals Statistics and Analysis Database.” This database publishes international and domestic evaluation indicators for nearly 6,000 academic journals officially published in China across four reports: the “Annual Report for Chinese Academic Journal Impact Factors,” the “Annual Report for International Citation of Chinese Academic Journals,” the “Journal Influence Statistical and Analysis Database,” and the “Statistical Report for Journal Network Dissemination” ( CNKI, 2018b ).

Since 2018, CNKI has also released the “Annual Report for World Academic Journal Impact Index (WAJCI).” This report aims to explore a scientific and comprehensive method for evaluating the academic influence of journals and provides objective statistics and comprehensive ranking for the academic impact of the world’s journals. This idea is not only conducive to building an open, diversified, and fairer evaluation system for journals; it is also helpful for improving the representation of Chinese journals in Western-dominated international indexes ( CNKI, 2018a ).

3.7.2. Journal selection criteria

The WAJCI journal list is updated annually; the most recent report was released in October 2019. The statistics shown in this report were derived from 22,325 source journals from 113 countries and regions (21,165 from the WoS database, including 9,211 from SCIE, 3,409 from SSCI, 7,814 from ESCI, and 1,827 from A&HCI, plus 1,160 Chinese journals). The WoS database does not provide JCR evaluation reports for some journals. In the case of new journals, this is because the citation frequency is typically very low. Excluding these journals without a JCR report leaves 13,088 journals to be evaluated, comprising 1,429 journals from mainland China and 11,659 from other countries and regions. Of these, 486 journals are in the field of SSH, 957 journals are in the field of science, technology, engineering and medicine (STEM), and 14 journals are interdisciplinary ( CNKI, 2019 ).

The selection criteria follow.

3.7.2.1. Journal scope

Journals should be published continuously and publicly.

Journals must predominantly publish original academic achievements, which should be peer reviewed.

Journals should comply with the requirements of international publishing and professional ethics.

Papers published in journals must conform to international editorial standards, which include editorial and publishing teams of high standing in their disciplines and a high level of originality, scientific rigor, and excellent readability.

3.7.2.2. Research fields

CNKI mainly follows a hybrid of the JCR classification system, the International Classification for Standards (ICS), and the CLC. Chinese journals that cannot be found in JCR are categorized into disciplines in one of the other lists. Moreover, the disciplines of JCR journals with serious duplication are appropriately merged. The final list spans 175 STEM disciplines and 62 SSH disciplines. Ultimately, all 13,088 journals are assigned into relatively accurate disciplines to ensure that the journals are ranked and compared within a unified discipline system.

3.7.2.3. Evaluation indicators

To comprehensively assess the international influence of journals, CNKI developed a complex indicator, called the CI index (clout index), that combines JIF with citation counts ( Wu, Xiao et al., 2015 ). It is generally believed that the most influential journal in a field should be the journal with the highest JIF and TC. The meaning of the CI value represents the degree of closeness between the journal influence and the optimal state of journal influence in the field. The smaller the gap, the closer the distance, which indicates that the influence of journals is closer to the optimal state. Furthermore, to compare journals on an international scale, CNKI publishes the indicators in WAJCI. The higher the value of the WAJCI, the higher the journal’s influence. WAJCI reflects the relative position of academic influence of journals within a discipline, so it can be used for interdisciplinary and even cross-year comparison, which has practical value.
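The idea of closeness to an optimal state can be made concrete with a small sketch. The text does not give the CI formula, so the following is an assumption for illustration: JIF and TC are min-max normalized within a discipline, the optimal state is the point where both equal 1, and the index is the largest possible Euclidean distance minus the journal's distance to that point. The function names and the ranges passed in are hypothetical.

```python
import math


def min_max(value, low, high):
    """Scale value into [0, 1] given the discipline's minimum and maximum."""
    return (value - low) / (high - low) if high > low else 0.0


def clout_index(jif, tc, jif_range, tc_range):
    """Closeness to the ideal journal (highest JIF and highest TC in the field).

    Assumed form: sqrt(2) minus the Euclidean distance from the normalized
    (JIF, TC) point to (1, 1), so a larger value means a smaller gap.
    """
    a = min_max(jif, *jif_range)
    b = min_max(tc, *tc_range)
    gap = math.hypot(1.0 - a, 1.0 - b)
    return math.sqrt(2.0) - gap


# Hypothetical discipline with JIF between 0 and 10 and TC between 0 and 5,000.
best = clout_index(10.0, 5000, (0.0, 10.0), (0, 5000))
middle = clout_index(5.0, 2500, (0.0, 10.0), (0, 5000))
worst = clout_index(0.0, 0, (0.0, 10.0), (0, 5000))
print(best > middle > worst)  # True
```

Under this assumed form, a journal that tops both JIF and TC in its field has a gap of zero and the maximum index, which matches the description that a smaller gap indicates influence closer to the optimal state.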

3.8. Other Lists

In addition to the above seven main journal lists in China, another influential list, called the Research Center for Chinese Science Evaluation (RCCSE) core journal list , was developed by the RCCSE of Wuhan University in the early 2000s to provide multidisciplinary and comprehensive Chinese journal rankings ( Zhang & Lun, 2019 ). The evaluated journals mainly include pure academic journals and semiacademic journals from the natural sciences or humanities and social sciences ( Qiu, Li, & Shu, 2009 ). It adopts a mixture of quantitative and qualitative methods to evaluate the target journals and mainly concerns the quality, level, and academic influence of the journal ( Qiu, 2011 ). In the process of journal evaluation, the general principles are classified evaluation and hierarchical management ( Qiu, 2011 ). In addition, there are some other lists published by government agencies, professional associations, and universities that warrant mention, and they are briefly described below.

3.8.1. CDGDC: A-class journal list

In 2016, the fourth China University Subject Rankings (CUSR) was launched to evaluate the subjects of universities and colleges in mainland China in line with the “Subject Catalogue of Degree Awarding and Personnel Training” approved by the Ministry of Education. Organized by the China Academic Degrees and Graduate Education Development Centre (CDGDC), the aim was to acquaint participating universities and institutions with the merits and demerits of their subject constructions and curriculums, and to provide relevant information on national graduate education ( CDGDC, 2016 ). The instructions of the fourth CUSR specifically point out that the number of papers published in A-class journals (both Chinese and international) is a critical indicator of the quality of a subject offering ( Ministry of Education, 2016a ).

As described by the Ministry of Education ( Ministry of Education, 2016a , 2016b ), the process for selecting which journals were “A-class” was as follows. First, the publishers and bibliometric data providers (e.g., Thomson Reuters, Elsevier, CNKI, CSSCI, CSCD) were invited to provide a preliminary list of journals based on bibliometric indicators, such as JIF and reputation indices. Then, doctoral tutors were invited to participate in online voting for the candidates. Last, the voting results were submitted to the Academic Degrees Committee of the State Council for review, who finalized the journal list. The A-class journal list exercise was an attempt to combine bibliometric indicators and expert opinions. However, the list was abandoned only 2 weeks after release as a fiery debate erupted among many scientific communities.

3.8.2. China Computer Federation: CCF-recommended journal list

The China Computer Federation (CCF) is a national academic association in China, established in 1956. Their publication ranking list, released in 2012, divides well-known international computer science conferences and journals into 10 subfields. A rank of A indicates the top conferences and journals, B is for journals and conferences with significant impact, and important conferences and journals are placed in the C bracket.

In April 2019, the CCF released the 5th edition List of International Academic Conferences and Periodicals Recommended by the CCF. In the course of the review, the CCF Committee on Academic Affairs brought experts together to thoroughly discuss and analyze these suggestions. The candidates were reviewed and shortlisted by an initial assessment panel, then examined by a final evaluation panel before announcing the final results. Factors such as the venue’s influence and an approximate balance between different fields were considered when compiling the list ( China Computer Federation, 2019 ). Today, this list is widely recognized in computer science fields and has accelerated the process of publishing more papers in top conferences, as well as improving the quality of those publications ( Li, Rong et al., 2018 ).

3.8.3. School or departmental journal lists

With the rapidly increasing and burdensome number of scholarly outlets for academic assessment, administrators and research managers are constantly looking to improve the speed and efficiency of their assessment processes. Many construct their own school or departmental list as a guide for evaluating faculty research ( Beets, Kelton, & Lewis, 2015 ). Business schools particularly prefer internal journal lists to inform their promotion and tenure decisions ( Bales, Hubbard et al., 2019 ). In fact, almost all of the 137 Chinese universities that receive government funding have created their own internal journal lists as indicators of faculty performance ( Li, Lu et al., 2019 ).

What is clear from the descriptions of each of the major journal lists is that each was established to fill specific objectives, and each has its own selection criteria, yet there may be as many similarities between the seven systems as there are differences. Therefore, for a broader picture of the evaluation system landscape, we undertook a comprehensive comparative analysis. Our findings are presented in this section.

4.1. Profiles of Journal List and Indexed Journals

CJCR was first established by ISTIC in 1987. GCJC, CSCD, CSSCI, and JPT followed shortly after. AMI and WAJCI joined the club more recently. As indicated in Table 6 , studies on journal selection have included a wide variety of participants, such as research institutes, universities, and private enterprises. Another observation is that the regularity with which journal lists are updated is not the same. Currently, JPT, CJCR, and WAJCI are updated once a year; CSCD and CSSCI are updated every 2 years; GCJC is updated every 3 years, and AMI every 4 years.

Table 6. Profiles of the leading academic journal lists in China

Note: The research areas are classified into five broad categories: Arts & Humanities (AH); Life Sciences & Biomedicine (LB); Physical Sciences (PS); Social Sciences (SS); Technology Engineering (TE).

Clearly, the number of journals, scope, languages, and research areas of each journal list are different. JPT and WAJCI count the most journals, both of which have a domestic and international scope. All of the other lists only cover domestic journals, obviously making them smaller than the previous two. Although most of the journal lists include English-language journals, these are few in China. In terms of disciplines, JPT and CSCD focus more on the natural sciences; AMI and CSSCI focus on the SSH field; and CJCR, GCJC, and WAJCI span all disciplines.

There are also different requirements for ISSNs and CNs. AMI, CJCR, and GCJC only accept journals with CNs, and the JPT only accepts journals with an ISSN. CSSCI and WAJCI have the least stringent requirements, requiring that the journal has one or the other. By contrast, CSCD requires its journals to have both.
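The identifier requirements described above can be summarized as a small lookup. This is only a sketch of the ISSN/CN rule as stated in this paragraph; it ignores every other selection criterion, and the function name is hypothetical.

```python
# Identifier rule per list, as described in the paragraph above.
REQUIREMENTS = {
    "AMI": "cn",
    "CJCR": "cn",
    "GCJC": "cn",
    "JPT": "issn",
    "CSSCI": "either",
    "WAJCI": "either",
    "CSCD": "both",
}


def eligible_lists(has_issn, has_cn):
    """Return the lists whose identifier requirement the journal satisfies."""
    rules = {
        "cn": has_cn,
        "issn": has_issn,
        "either": has_issn or has_cn,
        "both": has_issn and has_cn,
    }
    return sorted(name for name, rule in REQUIREMENTS.items() if rules[rule])


print(eligible_lists(has_issn=True, has_cn=False))  # ['CSSCI', 'JPT', 'WAJCI']
print(eligible_lists(has_issn=False, has_cn=True))  # ['AMI', 'CJCR', 'CSSCI', 'GCJC', 'WAJCI']
```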

4.2. The Evaluation Characteristics of Journal lists

How journals are assessed is the most critical aspect of any evaluation system. Further, as indicated in Table 7 and Table 8 , these systems were designed with many different objectives in mind. Although often used in scientific evaluation, the purpose of most is to provide readers, librarians, and information agencies with reference material to help them purchase and manage journal stocks. This is certainly the case with JPT, CJCR, GCJC, and CSSCI. CSCD, CJCR, and CSSCI extend this mission further by seeking to provide references for research management and academic evaluation. However, the objectives of AMI and WAJCI are different. AMI’s goal is to increase the quality and recognition of journals in the SSH, while CNKI built WAJCI to provide “apples with apples” statistics on the world’s journals.

Table 7. Evaluation purposes, methods, and results of the leading academic journal lists in China

Table 8. Evaluation criteria, indicators, and data sources of the leading academic journal lists in China

Note: Partly based on Ma (2019) .

The methods of calculation and indicators that each system uses are different. Most rely on a combination of quantitative and qualitative tools, while JPT and WAJCI are largely quantitative systems. Both are heavily dependent on JIFs, but JPT relies on a 3-year average, while WAJCI combines JIFs with TC to create its indicator. The other lists mainly evaluate the attraction and management ability of journals through bibliometric indicators, such as JIFs and citations, supplemented by peer review. AMI, however, adds extra indicators over and above the standard set.

Moreover, the weight of each indicator changes depending on the purposes of lists. For example, AMI’s mission is to improve the quality and influence of both China’s SSH journals and the evaluation systems that rank them. Therefore, AMI houses a comprehensive set of indicators that cover processes, talent, management, the editorial team, etc., each of which is measured against the three “powers” (i.e., attraction, management, and influence). By contrast, the fundamental purpose of GCJC is to help librarians optimize their journal collections and provide readers with guidance on the titles in their disciplines. Hence, GCJC rests more on bibliometric indicators and quantitative analyses of the growth trends and scatter law patterns of journals in a field.

Data sources of indicators are another characteristic for comparison. JPT draws mainly on an international database. WAJCI and GCJC combine international databases with local Chinese databases to expand the type and volume of data provided. Although AMI’s indicator data draw on a wide range of sources, such as the self-built and self-collected data of CASSES (e.g., CHSSCD), third-party data, and the self-evaluated data of journal editorial departments, the data included are mostly determined by the producers of the original indexes. The same is true for CSCD, CSSCI, and GCJC.

The last criterion for comparison is the grading system. All divide their listed journals into disciplines, and most calculate their rankings relative to that discipline. JPT and WAJCI each have four tiers, but the JPT system is a pyramid, whereas the WAJCI scheme is equally divided, the same as JCR. AMI’s system is more complicated because the journals are divided into three categories (A-journals, new journals and English-language journals), then further subdivided into five levels according to quality. CSCD and CSSCI are divided into two levels—core and extended journals—and CJCR and GCJC do not have grades. To some extent, these divisions are hierarchical and systematic, which is convenient for users. However, how many journals appear in more than one index and how similar their rankings are across indexes needs further analysis and discussion.
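The difference between an equally divided four-tier scheme and a pyramid can be sketched as follows. The equal split uses quartiles, as in JCR; the pyramid cutoffs shown are illustrative assumptions only, since this text does not state the actual JPT thresholds.

```python
import bisect


def assign_tier(rank, total, cutoffs):
    """Map a 1-based rank (1 = best) within a discipline to a tier from 1 to 4.

    cutoffs: increasing cumulative shares that close tiers 1, 2, and 3;
    journals beyond the last cutoff fall into tier 4.
    """
    share = rank / total
    return bisect.bisect_left(cutoffs, share) + 1


EQUAL = (0.25, 0.50, 0.75)    # four equally sized tiers (quartiles)
PYRAMID = (0.05, 0.20, 0.50)  # hypothetical pyramid: small top tier, wide base

# The same journal, ranked 10th of 100 in its discipline, lands in different tiers.
print(assign_tier(10, 100, EQUAL))    # 1
print(assign_tier(10, 100, PYRAMID))  # 2
```

Because the top tier of a pyramid is much narrower than a quartile, a journal's tier number is not directly comparable across the two schemes even when both rank it identically.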

There is no doubt that China’s journal evaluation and selection systems have achieved remarkable growth and impact, resulting in some influential journal lists. In the 60 years since journal evaluation was first introduced to China, the functions of these lists have grown, diversified, and generated their fair share of controversy. Some lists simply seek to provide decision-making support for information consultants, journal managers, research managers and funders, editors, and others. Others are designed to help optimize library collections, provide reading-list guidance or support referencing and citation services. Further, and more controversially, journal evaluations are increasingly becoming proxies for evaluating the academic achievements of individual researchers. As an extension of the original purposes, the evaluation of core journals has an important influence on a journal’s editorial procedures and strategies. To maintain the continuous development of their academic journals, publishers and publishing houses must conduct journal evaluations as well as supervision ( Ren & Rousseau, 2004 ).

5.1. Greater Cooperation Among the Different Journal List Providers

Seven different journal lists are a lot, even for a country as large and diverse as China. However, what is more notable is the number of different institutions that contribute to informing these lists, and the fact that individual universities still feel the need to create their own internal lists to complement the published systems. Everyone in this landscape is gathering their data, and most are constructing their own data sets, classifying and ranking the journals and papers, calculating their own rankings and metrics, etc. The result is simply an overlap of effort in many cases. We know that if institutions want to build an influential and authoritative journal evaluation or selection system, it needs to be based not only on sound indicators but also on a comprehensive range of triangulated data sources. An obvious solution is for the producers of these evaluation instruments to collaborate on research and development. They could build a national platform for coordination, influence, and collaboration on developing shared information resources and tools and agreed definitions and protocols ( Zhang & Sivertsen, 2020 ). Cooperation would be conducive to establishing a unified and authoritative journal evaluation and selection system and, more importantly, it could significantly increase the objectivity and fairness of the results.

5.2. More Compatibility Between Subject Classifications

Our analysis shows that each scheme adopts a different subject classification system. However, many articles are interdisciplinary and, because papers are assessed relative to their discipline, a publication can be evaluated with very different results in each category. Therefore, when evaluating and selecting journals, institutions should pay attention to the subject classification of journals to ensure the relative accuracy of the grades.

5.3. Exercise Caution when Using Journal Evaluations for Scientific Research Assessment and Management

Although the practice of China’s journal evaluation and selection systems is scientific and somewhat accurate, it is worth noting that journal rankings (such as JIFs) are not suitable for assessing the quality of individual research. The phenomenon of emphasizing JIFs and rankings in research evaluation is prevalent and persistent in China, but at least awareness of its adverse effects is growing ( Ministry of Education, 2020 ). Journal evaluation systems can make a strong contribution to research evaluation at the macro level, but applying those rankings as measures of impact and quality at the micro level (to individuals, institutions, subjects and the like) should be done with extreme caution. We should focus on the macro information about whether a given journal has been indexed in such systems, such as CSCD and CSSCI. A good example of wise macrolevel use of these evaluation systems can be found in the National Science Fund for Distinguished Young Scholars. At one time, the bibliometric data indexed in the CSCD was considered in the Fund’s document preparation and subsequent peer review.

Moreover, and in line with the new research evaluation policy in China as of 2020, the use of information such as journal quartiles and JIFs for microlevel evaluations should be reduced. Individual institutions need to establish their own guidelines on how to use journal ranking lists in their decisions ( Black et al., 2017 ), but when journal rankings are used they should be combined with other indicators. Research managers are also beginning to notice that there is no direct link between the influence of a journal and a single paper published in it. The use of JIFs for measuring the performance of individual researchers and their publications is highly contested and has been demonstrated to be based on wrong assumptions ( Zhang et al., 2017 ).

Accordingly, there have been some recent efforts to improve research evaluation at the article level as opposed to the level of journal, such as the F5000 project from ISTIC ( http://f5000.istic.ac.cn/ ). In this project, 5,000 outstanding articles from the top journals are selected each year to showcase the quality of Chinese STM journals ( Ma, 2019 ). The excellent articles project by the CAST ( http://www.cast.org.cn/art/2020/4/30/art_458_120103.html ) is another similar initiative. Reform will not be achieved overnight; it is long-term and arduous work. However, the many steps that need to be taken to get there begin with collaborative efforts such as these.

5.4. Collaborate with the International Bibliographic Databases

At present, there is no interconnection between the international evaluation systems and China’s, especially in the SSH. Journal list producers should try to cooperate with the international bibliographic databases to promote the internationalization of China’s journal evaluation systems. For example, linkages between CSCD and SCI over citation data have been established, and other joint systems, such as CSSCI and SSCI, might be promoted in the future. However, we should also realize that SSCI and A&HCI only partly represent the world’s scholarly publishing in the SSH ( Aksnes & Sivertsen, 2019 ). Therefore, China’s journal evaluation institutions should try to cooperate with international evaluation systems on the basis of improving their own systems as much as possible.

5.5. Accelerate the Establishment of an Authoritative Evaluation System

At present, there are seven predominant journal lists in China, each established with its own evaluation objectives. This is a dispersed system rather than an integrated one. Although this diversity has allowed for the exploration of evaluation methods and data sources, there is no unified, authoritative standard. With the new research evaluation policy as of 2020, China is moving away from indicators based on the WoS as the standard. This should empower China’s research institutions and funding organizations to define new standards, but this is a process that needs to be coordinated (Zhang & Sivertsen, 2020). We contend that one comprehensive journal list, covering both domestic and international journals, should be created that reflects the full continuum of research fields, including interdisciplinary and marginalized fields. The list needs to be dynamic to reflect the changing journal market, and the evaluations need to be organized, balanced, and representative of a range of interinstitutional expert advice. A national evaluation system would not only conserve resources but also increase the credibility and authority of the core journal list. As an example, South Korea has only one system, managed by the National Research Foundation of Korea. The same is true of several European, African, and Latin-American countries. So, while a national system is not a new idea, it is perhaps a proposal that deserves to be reconsidered for the scientific community in China.

We would like to thank Professor Xiaomin LIU (National Science Library, Chinese Academy of Sciences), Professor Liying YANG (National Science Library, Chinese Academy of Sciences), Professor Jinyan SU (Chinese Academy of Social Sciences Evaluation Studies), and Professor Jianhua LIU (Wanfang Data Co., LTD) for providing valuable data and materials. We also thank Ronald Rousseau (KU Leuven & University of Antwerp) and Tim Engels (University of Antwerp) for providing insightful comments.

Ying Huang: Funding acquisition, Formal analysis, Methodology, Resources, Supervision, Writing—original draft, Writing—review & editing. Ruinan Li: Formal analysis, Investigation, Writing—original draft, Writing—review & editing. Lin Zhang: Funding acquisition, Investigation, Resources, Supervision, Writing—review & editing. Gunnar Sivertsen: Funding acquisition, Methodology, Writing—review & editing.

The authors have no competing interests.

This work is supported by the National Natural Science Foundation of China (Grant Nos. 72004169, 71974150, 71904096, and 71573085), the Research Council of Norway (Grant No. 256223), the MOE (Ministry of Education in China) project of humanities and social sciences (18YJC630066), and the National Laboratory Center for Library and Information Science in Wuhan University.

The raw bibliometric data were collected from Clarivate Analytics. A license is required to access the Web of Science database. Therefore, the data used in this paper cannot be posted in a repository.

http://www.xinhuanet.com//politics/2016-05/31/c_1118965169.htm

Abbreviations and full names used in the article


- Online ISSN 2641-3337

A product of The MIT Press



Scholarly Publishing

- Choosing a journal to publish in


How can you identify journals to publish your work in? To start, look at the journals you read, the journals your colleagues read and publish in, and the sources you cite in your work. Is there a pattern to those journals?

There are also additional tools that you can use to identify & evaluate journals you're considering publishing in. Browse this section of the guide to learn more about evaluating a journal; tools to use for finding appropriate journals such as journal directories & article analyzers; tools to measure the impact of a journal; and finding an undergraduate research journal to publish in.

Evaluating journals

When considering a journal as a potential place to publish, here are some things you might ask yourself:

Is the journal the right place for my work?

- Does the subject matter covered in the journal match your scholarship?

- Do the types of articles published and article length guidelines match with what you want to submit?

- Who is the audience of the journal?

Is this a trusted journal?

Look for journals where you can answer yes to many of the following questions:

- Can you identify the publisher? Are they affiliated with an organization you're familiar with? Is there contact information present?

- Do the affiliations & backgrounds of the editorial board and authors publishing in the journal appear to be appropriate for the subject matter of the journal?

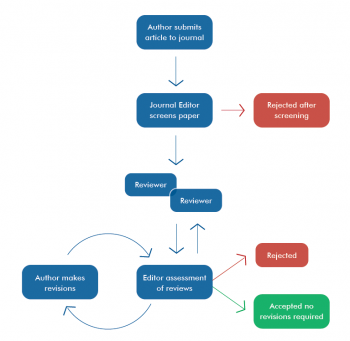

- Are articles peer-reviewed?

- Does the journal have an ISSN, and do articles have DOIs?

- Are the journal's copyright policies & any fees to publish clear? If you'd like to publish open access, are there options?

- Web of Science for journals spanning the humanities, social sciences, and STEM fields (select "Publication Name" from the drop down menu next to the search box)

- Scopus for journals in the social sciences and STEM fields

- SciFinder for journals in Chemistry and related fields (select "Journal" under the References bar)

- PubMed for life sciences, biomedical, clinical, and public/community health journals (choose "Journal" from the drop down menu next to the search box)

- JSTOR for journals spanning the arts, humanities, social sciences, and sciences (scroll down and search using the "Publication Title" search box)

You can also look at the Think Check Submit checklist, use a journal evaluation tool [pdf], or talk to the library!

Predatory publishers

"Predatory journals and publishers are entities that prioritize self-interest at the expense of scholarship and are characterized by false or misleading information, deviation from best editorial and publication practices, a lack of transparency, and/or the use of aggressive and indiscriminate solicitation practices."

Grudniewicz, Agnes, et al. (2019). Predatory journals: No definition, no defence. Nature (London), 576(7786), 210–212. https://doi.org/10.1038/d41586-019-03759-y

Visit the website for the journal and consider the questions in the Evaluating journals section above. Some red flags include:

- The journal is not listed in the Directory of Open Access Journals (DOAJ)

- It's not listed in Ulrichs (Tufts login required), which is an authoritative source on publisher information, including Open Access titles

- It's not widely available within major databases

- You don't recognize previously published authors or members of the editorial board

- The journal isn't affiliated with a university or scholarly organization you are familiar with

- You can't easily identify if they have author processing fees and/or how much they cost.

- The journal doesn't appear professional - look for an impact factor, an ISSN, DOIs for individual articles, and easy to find contact information

- There isn't clear information about a peer-review process, or the journal promises extremely fast turn-around times to publishing that don't allow enough time for review

Journal directories

Use these resources to browse for an appropriate journal for your work, or to research a title that you're considering publishing in.

- Directory of Open Access Journals: Use DOAJ to search or browse high-quality, peer-reviewed open access journal titles in all subjects and languages.

- MLA Directory of Periodicals: Find information on thousands of journals and book series that cover literature, literary theory, dramatic arts, folklore, language, linguistics, pedagogy, rhetoric and composition, and the history of printing and publishing.

- Ulrichsweb: The authoritative source of bibliographic and publisher information on more than 300,000 periodicals of all types: academic and scholarly journals, Open Access publications, peer-reviewed titles, popular magazines, newspapers, newsletters, and more from around the world.

Article analyzers & journal suggesters

If you've written an article but aren't sure where to submit it, these tools can help. They use your article's title, keywords, abstract, or full text to find journals that have published similar articles. The description for each resource below notes if it's limited to a specific publisher or discipline.

- B!SON Open Access Journal Finder: Enter the title, abstract, and/or references of your paper to find a suitable open access journal to publish in.

- JSTOR Text Analyzer: Drag and drop a copy of your article into the Text Analyzer, and the tool will find similar content in JSTOR. Consider the journals that those papers are published in.

- Jane (Journal Author/Name Estimator): Enter the title and/or abstract of your paper in the box, and click on 'Find journals', 'Find authors' or 'Find Articles'. Jane will then compare your document to millions of documents indexed in Medline to find the best matching journals, authors or articles.

- Elsevier Journal Finder: Uses smart search technology and field-of-research specific vocabularies to match your article to Elsevier journals.

- IEEE Publication Recommender: Searches 170+ periodicals and 1,500+ conferences from IEEE and provides factors such as Impact Factor and Submission-To-Publication Time.

- ChronosHub Journal Finder: Browse, search, filter, sort, and compare more than 40,000 journals to find the right journal without worrying about whether publishing there complies with your funders’ Open Access policy.

Undergraduate research journals

Undergraduate research journals aren't indexed in many of the sources we typically use for finding journals, so lists of academic journals focused on publishing undergraduate research compiled by universities and organizations are good starting places for finding a place to publish your work:

- Undergraduate Research Journal Listing from the Council on Undergraduate Research

- Where to Publish Your Research (compiled by Sacred Heart University)

- Undergraduate Research Journals (compiled by University of Nebraska)

- Undergraduate Research Journals (compiled by CUNY)

- Student Journals hosted on the bepress platform

Some things to consider while looking for an undergraduate research journal to publish your scholarship in include:

- Is there a submission deadline?

- Does the journal appear to be currently publishing?

- Are the journal's copyright policies clear?

Tools to measure journal impact

- Journal Citation Reports: Provides Impact Factors, Eigenfactor scores, and Article Influence Scores for science and social science journals.

- Scopus Journal Analyzer: Use the Journal Analyzer to compare up to 10 Scopus sources on a variety of parameters, including CiteScore, SJR (SCImago Journal Rank), and SNIP (Source Normalized Impact per Paper).

Read more about these tools & measures on Hirsh Library's Measuring Research Impact guide.


- Last Updated: Apr 11, 2024 4:20 PM

- URL: https://researchguides.library.tufts.edu/publishing


Search Help

Get the most out of Google Scholar with some helpful tips on searches, email alerts, citation export, and more.

Finding recent papers

Your search results are normally sorted by relevance, not by date. To find newer articles, try the following options in the left sidebar:

- click "Since Year" to show only recently published papers, sorted by relevance;

- click "Sort by date" to show just the new additions, sorted by date;

- click the envelope icon to have new results periodically delivered by email.

Locating the full text of an article

Abstracts are freely available for most of the articles. Alas, reading the entire article may require a subscription. Here are a few things to try:

- click a library link, e.g., "FindIt@Harvard", to the right of the search result;

- click a link labeled [PDF] to the right of the search result;

- click "All versions" under the search result and check out the alternative sources;

- click "Related articles" or "Cited by" under the search result to explore similar articles.

If you're affiliated with a university, but don't see links such as "FindIt@Harvard", please check with your local library about the best way to access their online subscriptions. You may need to search from a computer on campus, or to configure your browser to use a library proxy.

Getting better answers