
V-Model: a new perspective for EHR-based phenotyping

Affiliation

- 1 Interdisciplinary Program of Biomedical Engineering, Seoul National University, Seoul, Republic of Korea.

- PMID: 25341558

- PMCID: PMC4283133

- DOI: 10.1186/1472-6947-14-90

Background: Narrative resources in electronic health records make clinical phenotyping studies difficult to carry out. If a narrative patient history can be represented in a timeline, this would greatly enhance the efficiency of information-based studies. However, current timeline representations have limitations in visualizing narrative events. In this paper, we propose a temporal model named the 'V-Model' which visualizes clinical narratives on a timeline.

Methods: We developed the V-Model, which models temporal clinical events in a v-like graphical structure. It visualizes patient history on a timeline in an intuitive way. For the design, the representation, reasoning, and visualization (readability) aspects were considered. Furthermore, the unique graphical notation helps to find hidden patterns of a specific patient group. For evaluation, we verified our distinctive solutions and surveyed usability. The experiments compared a V-Model group with a conventional timeline model group. Eighty medical students and physicians participated in this evaluation.

Results: The V-Model proved superior in representing narrative medical events, provided sufficient information for temporal reasoning, and outperformed a conventional timeline model in readability. The usability of the V-Model was assessed as positive.

Conclusions: The V-Model successfully resolves visualization issues of clinical documents, and provides better usability compared to a conventional timeline model.


References

- Roden DM, Pulley JM, Basford MA, Bernard GR, Clayton EW, Balser JR, Masys DR. Development of a large-scale de-identified DNA biobank to enable personalized medicine. Clin Pharmacol Ther. 2008;84:362–369. doi: 10.1038/clpt.2008.89.

- Murphy S, Churchill S, Bry L, Chueh H, Weiss S, Lazarus R, Zeng Q, Dubey A, Gainer V, Mendis M, Glaser J, Kohane I. Instrumenting the health care enterprise for discovery research in the genomic era. Genome Res. 2009;19:1675–1681. doi: 10.1101/gr.094615.109.

- Murphy SN, Mendis ME, Berkowitz DA, Kohane I, Chueh HC. Integration of clinical and genetic data in the i2b2 architecture. AMIA Annu Symp Proc. 2006;2006:1040.

- Manolio TA. Collaborative genome-wide association studies of diverse diseases: programs of the NHGRI's office of population genomics. Pharmacogenomics. 2009;10:235–241. doi: 10.2217/14622416.10.2.235.

- Kaiser Permanente, UCSF Scientists Complete NIH-Funded Genomics Project Involving 100,000 People [ http://www.dor.kaiser.org/external/news/press_releases/Kaiser_Permanente... ]

Pre-publication history

- The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6947/14/90/prepub


Heekyong Park

Interdisciplinary Program of Biomedical Engineering, Seoul National University, Seoul, Republic of Korea

Jinwook Choi

Department of Biomedical Engineering, College of Medicine, Seoul National University, Seoul, Republic of Korea


Electronic supplementary material

The online version of this article (doi:10.1186/1472-6947-14-90) contains supplementary material, which is available to authorized users.

As electronic health record (EHR) systems rapidly become popular, studies on EHR-driven phenotyping have begun to emerge across countries [ 1 – 5 ]. Identifying patient cohorts is an essential part of EHR-driven genomic research. Various types of EHR data, ranging from structured data to unstructured narrative documentation, are selected and reviewed for validation. Of the many types of data that an EHR provides, clinical documentation is considered to be the best resource. It contains rich information and relations among events (such as why a medication was used) that are not captured by a predefined structured input system. However, processing narrative content with Natural Language Processing (NLP) is the most difficult part of phenotype algorithm construction [ 6 ]. Although there are many NLP tools for medical domains [ 7 – 11 ] and previous studies have adopted such tools to extract useful information from enormous volumes of clinical documentation [ 12 ], human intervention is still required. In the i2b2 project, clinical experts reviewed the full clinical narrative text of a random subsample to establish a “gold-standard” phenotype [ 2 ]. Kho et al. [ 13 ] reported that the eMERGE project also validated the EMR phenotype through manual review by experts. This process is time-consuming and error-prone.

Hripcsak and Albers [ 14 ] emphasized the need for a new model populated with characteristics learned from the data. We focused on temporal information and causality information, which form the main thread of clinical documentation. It would be greatly beneficial if narrative patient data and causality were incorporated into a timeline. The i2b2 project tried to adopt a timeline for phenotyping [ 15 , 16 ]. To support the validation of newly derived NLP data, the i2b2 Workbench rendered a timeline of the observed data. LifeLines2 displayed clinical data generated by the i2b2 query system to help find hidden patterns in the EHR by aligning patient histories on sentinel events [ 17 ]. However, these timelines are limited to temporally explicit events and are therefore not applicable to the implicit events that frequently appear in clinical documentation. In this paper, we propose a novel model for visualizing narrative patient history, called the V-Model. The V-Model displays narrative patient data and causal relations on a timeline in a patterned format.

Related works

There have been attempts to visualize patient history using timelines, and various types of data have been used. Many of the systems have proposed an interface design for raw time-oriented data. Cousins and Kahn [ 18 ] developed Time Line Browsers, an interactive environment for displaying and manipulating sets of timelines. Plaisant et al. [ 19 , 20 ] developed LifeLines, which reorganizes and visualizes personal history to enhance navigation and analysis. Bui et al. [ 21 , 22 ] introduced the TimeLine system with the goal of providing a generalized methodology that could be applied to tailor UIs for different medical problems. More advanced attempts that used abstracted data were also studied. KNAVE-II offered timeline visualization of both raw data and multiple levels of concepts abstracted from the data [ 23 – 25 ]. However, little work has targeted narrative clinical documents, and in that work events have been visualized only selectively. Bashyam et al. [ 26 ] developed a problem-oriented medical record (POMR) system for clinical documents. The existence of problems or findings was visualized on a timeline grid, which is a collection of explicit date cells. However, the timeline does not display other useful information that does not belong to the problem list of interest (e.g., narrative descriptions of situations that caused problematic symptoms). In addition, the POMR view makes it difficult to review clinical flow for general purposes. Jung et al. [ 27 ] developed a system that constructs timelines of narrative clinical records by applying a deep natural language understanding technique. The approach was generic, covering a variety of clinical domains. However, they focused only on explicit temporal expressions and present tense sentences. LifeLines2 displays selected temporal categorical data from multiple patients. The data are not numerical in nature but time-stamped [ 17 , 28 ]. These restrictive implementations are due to difficulties in the NLP of clinical documents.

There have been studies suggesting solutions for visualization problems. With regard to granularity issues, LifeLines suggested a zooming function [ 19 , 20 ] and KNAVE-II proposed a content-based zoom method [ 25 ] to solve multiple granularity problems. Implicitness problems were addressed by graphical variations of the point/interval notation. TVQL modeled ambiguous temporal relationships with sliders, boxes, and line segments [ 29 ]. Combi et al. [ 30 ] defined graphical symbols that represented a starting/ending instant and a minimum/maximum duration to represent undefined durations. The causal relation is one of the key features for understanding clinical context in its original description. Hallet [ 31 ] used color-coded arrows only when a user requested causality information. However, previous solutions cannot fully support the diverse visualization problems in medical texts.

Problem definition

Representing narrative patient history with conventional timeline representation (i.e., representing point/interval events as a point and time span proportion as time length) comes with specific problems. We reviewed fifty randomly selected discharge summaries from Seoul National University Hospital (SNUH), and categorized the difficulties below.

Representation

Causality

Causality must be extrapolated using medical knowledge, which is sometimes very hard even for physicians. For example, in Figure 1 (a), a CT test (marked with a red circle) was done twice on date ‘T1’. The original text gives the reason for each CT test. However, a physician cannot determine from the timeline which event caused each test. Although rare, there have been attempts to show causality. However, to maintain visual clarity they do not show the relation directly within the timeline. Moreover, the quality of the information depends heavily on the accuracy of the extraction system, which is not applicable to a broad medical domain.

Figure 1. Problems with conventional timeline representation. (a) illustrates an example of a causality problem, and (b) shows an ambiguous sequence problem. The timeline view is generated from the LifeLines [ 32 ] program to show a conventional timeline example. For explanation purposes, we represented all unclear events as a point.

Non-explicitness

Implicitness, fuzziness and uncertainty, incomplete intervals, and omitted temporal expressions cannot be displayed on a conventional timeline. Point/interval variations that express possible ranges have been attempted. However, a timeline full of such notations would be more complex than the original text. Furthermore, some useful information contained in the original expression might be lost. For example, “Since this Korean Thanksgiving Day” implies both time information and a possible cause of the symptom, such as heavy housework.

Granularity

Clinical documents contain temporal expressions written at diverse granularity levels. For instance, “Seizure increased since three days ago. Since two hours before, respiration rate increased …” A fixed-granularity view requires an additional zooming action to reach finer-level information, and coarser information cannot be represented in a finer-granularity view.

Reasoning

Temporal relations are often hard to infer. As shown in Figure 1 (b), the internal sequence of events can be interpreted ambiguously. Inferring a temporal relationship from an event with a non-explicit time is also difficult.

Visualization

Many previous medical timeline systems have tried to organize events in semantic categories. However, this representation severely impairs readability when tracing a long history. Because conventional timelines expand vertically in proportion to the number of unique events, one must scroll the page up and down multiple times to understand them. When there is a long healthy period in a patient’s medical history, the timeline will contain a long blank space, which can cause confusion and unnecessary scrolling.

Main axis of design concepts

We set requirements that took into consideration the representation of narrative clinical events and its utilization (reasoning and visualization aspects).

The model should be able to represent any kind of medical event preserving the integrity of the original context. Especially, the model should be able to solve causality, non-explicit temporal information, and uneven granularity problems.

The model should provide sufficient information for quantitative and qualitative temporal reasoning.

The model should provide an intuitive view that helps to understand patient history.

The V-Model is a time model for narrative clinical events. Figure 2 shows the basic structure of the V-Model. With its special v-like structure and modeling strategies, the V-Model is able to resolve causality, non-explicitness, granularity, and reasoning issues. Furthermore, it conveys the clinical situation: who (patients or health care providers, not specified but implied), what and how (Actions), where (Visit), when (TAP), and why (Problems).

Figure 2. V-Model structure.

The V-Model places why a patient visited the hospital on the Problem wing and what actions were taken for the problems on the Action wing. Modeling causality is complicated work. It may differ depending on personal perspectives, and sometimes it cannot be explained as a simple one-dimensional causal relation. Therefore, we simplified the modeling strategy as follows. All symptoms and purposes are modeled as a Problem. For diagnoses and findings, we limit causality in the V-Model to the causality explicitly described in the original text. For example, “cervix cancer” in “due to cervix cancer, concurrent chemo RT was done” is modeled as a Problem, but the same event without a causality expression is modeled as an Action. Our strategy is to convey the original context and let caregivers properly interpret the information for their use. The rest of the clinical events are modeled as an Action. An Action models any event that happened because of the patient’s problems. It includes diagnoses, clinical tests, findings, drugs, plans, operations, treatments, or any other kind of event. Visit models administrative information such as outpatient/emergency room visits, transfers, consultations, and department information.

Temporal information is written in the TAP. It can hold both formal and informal temporal expressions, so that even a temporal proximity description can be represented. This is possible because we assume that the V-Model uses a dynamic scaled timeline, in which the length between any two marking points (TAPs) is not proportional to the temporal length. Therefore, we display v-structures in sequence, without considering the temporal length between two v-structures.
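The contrast between a proportional timeline and a dynamic scaled timeline can be sketched in a few lines of Python. This is only an illustration and not part of the V-Model itself: the function names, the `width` parameter, and the example year values are assumptions made for the sketch.

```python
def proportional_positions(years, width=100.0):
    """Conventional timeline: x-position is proportional to elapsed time."""
    span = years[-1] - years[0]
    if span == 0:
        return [0.0 for _ in years]
    return [width * (y - years[0]) / span for y in years]


def dynamic_positions(count, width=100.0):
    """Dynamic scale: marking points are evenly spaced in sequence,
    regardless of the temporal length between them."""
    if count < 2:
        return [0.0] * count
    step = width / (count - 1)
    return [i * step for i in range(count)]


# Hypothetical marking points: one distant past event and two recent ones.
years = [1986, 2002, 2003]
print(proportional_positions(years))  # the recent events crowd the right edge
print(dynamic_positions(len(years)))  # one evenly spaced slot per v-structure
```

With a proportional scale, the sixteen-year gap takes up most of the axis, while the dynamic scale gives every v-structure the same amount of room.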

Events accompanying the same temporal expression share a TAP. However, when there is more than one causal relationship within the same time expression, we visualize them as multiple v-structures. It is also possible to represent only the Problem wing or only the Action wing. When several events belong on the same wing, the V-Model displays them all within one wing, regardless of their number.
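One possible way to hold these grouping rules in code is sketched below. It is a minimal sketch under stated assumptions, not an implementation from the paper: the `Event` and `VStructure` classes, the `relation` field used to separate causal relations under one TAP, and the example event texts are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Event:
    tap: str           # temporal expression as written, e.g. "02.8"
    wing: str          # "Problem" or "Action"
    text: str
    relation: int = 0  # hypothetical id separating causal relations under one TAP


@dataclass
class VStructure:
    tap: str
    problems: List[str] = field(default_factory=list)
    actions: List[str] = field(default_factory=list)


def build_v_structures(events):
    """Group events (assumed to be in document order) into v-structures.

    Events with the same TAP and the same causal relation share one
    v-structure; a second relation under the same TAP gets its own.
    """
    structures = {}
    for ev in events:
        vs = structures.setdefault((ev.tap, ev.relation), VStructure(ev.tap))
        wing = vs.problems if ev.wing == "Problem" else vs.actions
        wing.append(ev.text)
    # dicts preserve insertion order, so the list index is the display slot
    return list(structures.values())


events = [
    Event("T1", "Problem", "symptom A", relation=1),
    Event("T1", "Action", "CT", relation=1),
    Event("T1", "Problem", "symptom B", relation=2),
    Event("T1", "Action", "CT", relation=2),
    Event("T2", "Action", "follow-up"),  # Action wing only
]
for vs in build_v_structures(events):
    print(vs.tap, vs.problems, vs.actions)
```

Here the two CT tests on ‘T1’ end up in separate v-structures, each paired with its own reason, and the last structure carries only an Action wing.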

Semantic types are shown in Table 1. The semantic type of clinical events is shown in front of a group of events in the same category. The V-Model uses a colored box to indicate a semantic tag: red for Problem events and blue for Action events.

Table 1. Semantic types of the V-Model

| Notation | Explanation | Position |
|---|---|---|
| Purpose | Purpose | Problem |
| Sx | Symptom | Problem |
| Dx | Diagnosis | Problem/Action |
| Finding | Finding | Problem/Action |
| Drug | Drug | Action |
| Op | Operation | Action |
| Other | Any other events | Action |
| Plan | Plan | Action |
| Test | Test | Action |
| Tx | Treatment | Action |
| Adm | Admission | Visit |
| Death | Death | Visit |
| Disch | Discharge | Visit |
| Visit | Hospital/Department visit information | Visit |
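The semantic types and the placement rule for diagnoses and findings can be written down as a small lookup. The following is a sketch only: the dictionary, the `wing_for` function, and the `explicit_cause` flag are hypothetical names, and the rule encoded is the one stated in the text (a diagnosis or finding goes on the Problem wing only when the original text states causality explicitly).

```python
# Allowed positions per semantic notation, as listed in the semantic type table.
SEMANTIC_TYPES = {
    "Purpose": ("Problem",),
    "Sx": ("Problem",),
    "Dx": ("Problem", "Action"),
    "Finding": ("Problem", "Action"),
    "Drug": ("Action",),
    "Op": ("Action",),
    "Other": ("Action",),
    "Plan": ("Action",),
    "Test": ("Action",),
    "Tx": ("Action",),
    "Adm": ("Visit",),
    "Death": ("Visit",),
    "Disch": ("Visit",),
    "Visit": ("Visit",),
}

# Tag colors named in the text; no color is given there for Visit.
TAG_COLOR = {"Problem": "red", "Action": "blue"}


def wing_for(notation, explicit_cause=False):
    """Return the position of an event with the given semantic notation.

    explicit_cause: True when the source text explicitly marks the event
    as the reason for an action (e.g. "due to ...").
    """
    positions = SEMANTIC_TYPES[notation]
    if len(positions) == 1:
        return positions[0]
    return "Problem" if explicit_cause else "Action"


# "due to cervix cancer, concurrent chemo RT was done"
print(wing_for("Dx", explicit_cause=True))  # Problem
print(wing_for("Dx"))                       # Action
```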

Figure 3 shows part of a clinical text represented in the V-Model. Temporal information, events, and causality (problem-action) relationships are modeled, and the original context is successfully displayed.

Figure 3. V-Model example. Note that the gray context block rectangles are not part of the V-Model visualization. They are added to aid in understanding.

Characteristics

The distinctive features of the V-Model are listed in Table 2 .

Table 2. Distinctive features of the V-Model

| Aspect | Id | Distinctive features | Related issue |
|---|---|---|---|
| Representation | P1 | Connection of Problem-Action relationship (P-A connection) | Causality |
| Representation | P2 | Non-explicit temporal expression (non-explicitness) | Granularity |
| Representation | P3 | Temporal proximity implied in medical terms (proximity hint) | Non-explicitness |
| Representation | P4 | Uneven granularity (uneven granularity) | Non-explicitness |
| Representation | P5 | Implicit internal sequence | Non-explicitness |
| Reasoning | R1 | Problem starts before Action (P precedes A) | Reasoning |
| Reasoning | R2 | Qualitative temporal relation (qualitative relation) | Reasoning |
| Reasoning | R3 | Temporal distance from non-explicit event (implicit distance) | Reasoning |
| Visualization | V1 | Intuitive view in discovering Problem-Action relationship (intuitive P-A relation) | Visual enhancement |
| Visualization | V2 | Blocking effect of Problem-Action relationship among successive events (blocking effect) | Visual enhancement |
| Visualization | V3 | Overview of events' flow | Visual enhancement |
| Visualization | V4 | Dynamic scaled timeline (dynamic scale) | Visual enhancement |
| Visualization | V5 | Highly readable history view in tracing long period events (long history) | Visual enhancement |

Representation aspects

The V-Model can represent problem-action relations, non-explicit temporal information, and uneven granularity expressions.

First, the V-Model provides a frame to connect problem-action relations (P1). For example, in Figure 4, we can clearly see the two causal relations that explain why the patient had to take MRI tests, which was impossible in Figure 1 (a). The oppositely directed pair of wings also enables linking a problem to a temporally separate action event. For example, chief complaints starting in late July and related actions done in August are visually connected by the left wing and the following right wing (Figure 3).

Figure 4. Multiple problem-action links in the V-Model.

Second, contrary to conventional methods, the model enables us to represent and understand implicit temporal information (P2). For example, in Figure 3, the implicit temporal expressions ‘86’ and ‘02.8’ and the fuzzy, semi-interval temporal expression ‘from late 02.7’ are successfully described in TAP. The strategy allows TAP to contain diverse temporal expressions even when they do not exactly match a calendar date. This is possible because the V-Model uses a dynamic scaled timeline, and events in a patient’s clinical documents mostly appear in chronological order.

Implicitness at a finer granularity level is also handled by simply wrapping events in the wing structure (P5). For instance, in Figure 3, ‘Br. MRI’, ‘r/o CRP diagnosis’, and ‘NTR of tm’ share the same temporal expression ‘02.8’. However, it seems clear that these are sequential events that occurred on different days or at different times. The V-Model does not require an internal order or temporal gap to model such cases. Even non-temporal expressions are utilized as temporal proximity hints (P3). For example, we can infer that the FAM treatments in Figure 3 were done temporally close to a previous operation by referring to the “postop (post operation)” expression in TAP.

Third, the V-Model can display uneven granularity expressions in one timeline view (P4). Medical texts tend to describe events from years ago in a very abstract manner, using coarse-granularity temporal expressions, i.e., at the level of years or decades. Representing the coarser past history together with the finer recent history in one timeline is more natural and informative. Furthermore, the V-Model view can convey emergency situations described at the level of hours or minutes, which are generally ignored in conventional timelines.

Reasoning aspects

Although the V-Model timeline handles non-explicit information, a user can determine temporal relationships from the timeline.

First, the V-Model illustrates that problems start before actions (R1). As the V-Model structures causal problems separately from related actions, we can intuitively infer that Problems occurred before Actions even though they have the same time expression. This is especially useful when a physician wants to know whether a described symptom is a chief complaint or a symptom newly developed during the visit.

Second, reasoning about other temporal relationships is also possible (R2). This inference is done by manually calculating from the TAP information, whereas conventional timelines show the relationship intuitively. However, this weakness is deemed acceptable considering the other powerful advantages that the TAP expression offers.

Third, the V-Model suggests TAP as a reference time point in temporal reasoning between non-explicit events (R3). When calculating the temporal distance between two operations, ‘TG c Roux-en-Y anastomosis’ and ‘NTR of tm’ in Figure 3, the V-Model provides ‘86’ and ‘02.8’ as temporal reference information. One can simply infer that the distance is about sixteen years. This is much more natural and informative than previous attempts, which suggest more precise ranges of possible temporal distance, such as 15 years and 8 months ~ 16 years and 7 months.
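The range quoted above can be reproduced with simple month arithmetic. The sketch below assumes that ‘86’ means some month in 1986 and that ‘02.8’ means August 2002; both readings, along with the function names, are assumptions made for illustration.

```python
def month_index(year, month):
    """Number of months since year 0, so that differences are in months."""
    return year * 12 + (month - 1)


def distance_range(earlier, later):
    """Shortest and longest possible distance in months between two events.

    Each argument is ((year, month), (year, month)): the first and last
    month in which the event could have happened.
    """
    e_first, e_last = (month_index(*p) for p in earlier)
    l_first, l_last = (month_index(*p) for p in later)
    return l_first - e_last, l_last - e_first


def fmt(months):
    years, rest = divmod(months, 12)
    return f"{years} years {rest} months"


tap_86 = ((1986, 1), (1986, 12))   # '86': any month of 1986 (assumed)
tap_02_8 = ((2002, 8), (2002, 8))  # '02.8': August 2002 (assumed)

shortest, longest = distance_range(tap_86, tap_02_8)
print(fmt(shortest), "~", fmt(longest))  # 15 years 8 months ~ 16 years 7 months
```

The coarse reading "about sixteen years" sits inside this range and is what a reader gets directly from the two TAP labels.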

Visualization aspects

The V-Model timeline helps in reading and understanding a patient’s history. The two wings help to discover problem-action relations intuitively (V1). Moreover, successive events in a causal relation are visually grouped together (V2) (e.g., context blocks 1 and 2 in Figure 3). Semantic information tags help to quickly grasp the situation (V3) without reading in detail. In addition, the use of the dynamic scaled timeline (V4) is effective when there are long periods of blank history. The V-Model is especially effective in reading long histories (V5). Many previous medical timeline systems have tried to organize events in semantic categories. However, the category-collective representation severely impairs readability when tracing long history sequences. Because conventional timelines expand vertically in proportion to the number of unique events, one must scroll the page up and down multiple times to understand them. Because our model visualizes events in a space that is compact both vertically and horizontally (through the dynamic scale and semantic tag position), one can review patient history by simply reading the v-structures one by one. This would prevent accidentally missing sparse data, reduce scrolling, and allow one to grasp a patient’s history faster.

Pattern recognition

The V-Model timeline can be used to find distinctive patterns of a specific patient group. As described previously, the V-Model shows problem-action relations intuitively (V1), and the relations may extend over multiple wings (V2). If a context block is found repeatedly in a specific patient group (e.g., a red context block followed by a green one in Figure 5 (a)), the block is recognized as a pattern. The pattern may be improved by further analysis, such as refining temporal constraints or redefining boundaries.

Figure 5. Pattern recognition by the V-Model timeline. (a) Patient timelines and common patterns in a target patient cohort. (b) Patient timelines beyond the target group.

The extracted patterns can be useful in developing a high-throughput phenotype algorithm. Figure 5 shows V-Model timelines, each representing a patient’s lifelong clinical records (note that one V-shape in Figure 5 is an abstract representation of a context block, to keep the illustration simple). Figure 5 (a) shows timelines of a target patient cohort, and (b) shows timelines of patients outside the target group. In all timelines in (a), one red P-A relation directly followed by one green P-A relation is found. The red-green events are recognized as a pattern (pattern A). We could guess that ‘pattern A’ might be an important feature for identifying a patient group. In the non-target group, there are some patients who also have ‘pattern A’. However, in this group, another pattern B (a red P-A relation directly followed by a blue one) exists before ‘pattern A’ appears. From the visualization, we can derive a phenotype algorithm that includes ‘pattern A’ but excludes ‘pattern A’ following ‘pattern B’. This algorithm can be refined after further analysis. For example, if all the occurrences of ‘pattern A’ in (a) took place in early childhood, we could add temporal constraints for higher throughput.
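The rule derived in this example (accept ‘pattern A’ unless a ‘pattern B’ occurred before it) can be expressed as a short sequence check. This is a sketch under simplifying assumptions: each patient timeline is reduced to a list of color labels, one per context block, as in the abstract V-shapes of the figure; the function names and the sample timelines are hypothetical.

```python
def find_pattern(blocks, first, second):
    """Start indices where `first` is directly followed by `second`."""
    return [
        i for i in range(len(blocks) - 1)
        if blocks[i] == first and blocks[i + 1] == second
    ]


def matches_phenotype(blocks):
    """True if the timeline has a pattern A (red, green) that is not
    preceded by any pattern B (red, blue)."""
    a_starts = find_pattern(blocks, "red", "green")
    b_starts = find_pattern(blocks, "red", "blue")
    return any(not any(b < a for b in b_starts) for a in a_starts)


# Hypothetical timelines, one color label per context block.
target_patient = ["gray", "red", "green", "gray"]
other_patient = ["red", "blue", "gray", "red", "green"]

print(matches_phenotype(target_patient))  # True
print(matches_phenotype(other_patient))   # False
```

A temporal constraint such as "pattern A in early childhood" could be added by attaching a TAP to each block and filtering the pattern A start positions on it.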

Experimental design

An automatic visualization system for the V-Model has not yet been implemented. Therefore, we evaluated our model focusing on the suitability of the V-Model for dealing with narrative documents. Effectiveness in detecting patterns relative to other phenotyping methods was not measured at this stage. We used LifeLines [ 32 ], one of the best-known visualization environments in medicine, as a representative conventional timeline representation. Forty medical students and forty residents from SNUH participated in this experiment (Table 3). We selected departments that largely used narrative clinical notes and had comparatively fewer emergency situations. Due to difficulties in recruiting participants, we designed all experiments to be completed within thirty minutes.

Table 3. Demographics of the participants

| (a) Students: clinical rotation experience | V-Model | LifeLines |
|---|---|---|
| No | 10 | 10 |
| Yes | 10 | 10 |
| Total | 20 | 20 |

| Department | Participants |
|---|---|
| Internal medicine | 10 |
| Pediatrics | 10 |
| Neurology | 5 |
| Family medicine | 5 |
| Rehabilitation medicine | 5 |
| Psychiatry | 5 |
| Total | 40 |

| Years | V-Model | LifeLines |
|---|---|---|
| 1 year | 7 | 5 |
| 2 years | 3 | 6 |
| 3 years | 7 | 7 |
| 4 years | 3 | 2 |
| Total | 20 | 20 |

Step 1: verification of design requirements

In this step, we tested whether our model could represent unresolved natural language issues (representation), provide sufficient information to reason about temporal relations (reasoning), and enhance readability (visualization) (Table 2). The test consisted of information-seeking problems based on a given timeline. Accuracy (i.e., the percentage of correct answers within one evaluation item) and response time (i.e., the time spent solving one question) were used as the evaluation measures. The questions were designed to maximize the difference between the properties of the V-Model and those of the conventional timeline. For example, a P1 question tested a causality relation in an ambiguous case (e.g., “Why did the patient have to take the CT test twice on date ‘T1’?”, as in the case of Figure 1 (a)). In this case, we used accuracy to compare representation power. A V1 question, by contrast, targeted a simpler case in which users of both models could clearly find the answer; there, we used response time to contrast readability.

Due to the time limitation, we carefully selected passages that definitely contrasted the two models. The reviewed documents were randomly selected from our database, a collection of anonymized discharge summaries generated from SNUH a . We used discharge summaries for this experiment because each of them presents overall clinical events, and contains a long history in narrative description. The selected documents contained up to 40 years of clinical histories in the present history section, and admission duration ranged from 0 to 224 days. Overall, nineteen timeline fragments from fourteen documents were selected for the evaluation.

We manually visualized all fifty discharge summaries in the V-Model with the MS Visio tool, and reviewed them to find the best examples. The questions written for this experiment are listed in Additional file 1. For the LifeLines experimental group, we generated the corresponding timelines with the LifeLines program. To exclude any system-specific influence such as zooming, we used captured images.

Although the V-Model supports implicit sequential events (P5) and overview of events’ flows (V3), the items were excluded from the evaluation because these features were very hard to evaluate objectively with simple questions. Non-explicitness (P2), proximity (P3), and implicit distance (R3) were tested only in the V-Model group because it was impossible to display in LifeLines. For uneven granularity (P4) and dynamic scale (V4), we exceptionally showed both types of timelines to both group participants. For P4 evaluation, we asked them to choose a model that represented uneven granularity. And for V4, we asked which type of representation (dynamic vs. static view) is more useful.

Step 2: usability evaluation

To compare the usability of the two visualization models, we adapted the System Usability Scale (SUS) questionnaire [33]. SUS is a popular and simple ten-item Likert-scale questionnaire for assessing usability. We modified the general questions for the V-Model evaluation [see Additional file 1].
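For reference, the conventional SUS scoring rule can be sketched as follows. This is the standard formula for the unmodified questionnaire, shown only as background: the study adapted the questions and reports answer distributions, so it is an assumption that a composite score of this kind would apply to its data. The function name `sus_score` is hypothetical.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten 1-5 Likert responses, item 1 first."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))
```

Reversing the even-numbered items before summing is what lets agreement with positive items and disagreement with negative items both raise the score.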

The step 1 experimental results are shown in Table 4. Although we explained that response time was an important measurement and urged participants to concentrate on the experiment, uncontrollable interruptions occurred frequently (e.g., a request for an immediate order or a phone call). Most lasted just a few seconds, but these interruptions significantly affected the distribution of the results. Therefore, we analyzed the difference in response time with the Mann–Whitney U test (MWU test), a non-parametric test that does not assume normally distributed data. We used the chi-squared test (or Fisher’s exact test) for the accuracy analysis. The results were analyzed at a 0.95 confidence level.
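The accuracy tests named above can be illustrated with the counts reported in Table 4. The sketch below uses only the Python standard library; it assumes an uncorrected Pearson chi-squared test and a two-sided Fisher's exact test, since the text does not say which variants were used, and the function names are hypothetical.

```python
from math import comb, erfc, sqrt


def pearson_chi2_2x2(a, b, c, d):
    """Uncorrected Pearson chi-squared test for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = erfc(sqrt(chi2 / 2))  # chi-squared survival function, 1 d.o.f.
    return chi2, p_value


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact test: sum the tables no more likely than the observed one."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-9))


# [P1]: correct/incorrect answers, LifeLines 71/69 vs. V-Model 115/25.
chi2, p = pearson_chi2_2x2(71, 69, 115, 25)
print(f"P1: chi2 = {chi2:.2f}, p = {p:.2e}")

# [R1]: LifeLines 36/4 vs. V-Model 40/0; a zero cell makes the exact test the safer choice.
print(f"R1: p = {fisher_exact_2x2(36, 4, 40, 0):.3f}")  # 0.116
```

The exact test on the R1 counts gives the p-value of 0.116 listed for that row. The MWU test needs the raw response times, which are not given here, so it is not sketched.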

Table 4. Step 1 experimental results

| Evaluation item | n | LifeLines (N = 40): n_c (accuracy, %) | LifeLines: RT (sec.), median (IQR 25–75) | V-Model (N = 40): n_c (accuracy, %) | V-Model: RT (sec.), median (IQR 25–75) | Chi-squared test (p-value) | MWU test (p-value) |
|---|---|---|---|---|---|---|---|
| [P1] P-A connection | 140 | 71 (50.71) | 43.32 (33.08-61.91) | 115 (82.14) | 35.31 (24.99-50.24) | <0.000 | <0.000 |
| [P2] non-explicitness | 40 | - | - | 36 (90.00) | - | - | - |
| [P3] proximity | 80 | - | - | 74 (92.50) | - | - | - |
| [R1] P precedes A | 40 | 36 (90.00) | 35.48 (25.23-47.77) | 40 (100.00) | 14.9 (12.15-18.74) | 0.116 | <0.000 |
| [R2] qualitative relation | 80 | 76 (95.00) | 7.6 (5.16-11.97) | 69 (86.25) | 9.95 (6.23-13.84) | 0.058 | 0.036 |
| [R3] implicit distance | 40 | - | - | 40 (100.00) | - | - | - |
| [V1] intuitive P-A relation | 140 | 104 (74.29) | 32.17 (21.46-54.07) | 125 (89.29) | 19.48 (15-29.58) | <0.000 | <0.000 |
| [V2] | 40 | 25 (62.50) | 56.82 (44.92-80.35) | 21 (52.50) | 45.72 (33.93-62.79) | 0.366 | 0.006 |
| [V5] long history | 40 | 22 (55.00) | 92.7 (69.64-134.48) | 38 (95.00) | 35.23 (25.84-40.46) | <0.000 | <0.000 |

| Evaluation item | Respondents | LifeLines (%) | V-Model (%) |
|---|---|---|---|
| [P04] uneven granularity (selection) | V-Model participants | 3.75 | 96.25 |
| | LifeLines participants | 7.5 | 92.5 |
| | mean | 5.63 | 94.38 |
| [V04] dynamic scale (preference) | V-Model participants | 5 | 95 |
| | LifeLines participants | 25 | 75 |
| | mean | 15 | 85 |

n, number of data; N, number of participants; n_c, number of correct answers; RT, response time.

We used accuracy to evaluate the representation power. For the P-A connection (P1), the V-Model showed about 31 percentage points higher accuracy (82.14% vs. 50.71%). The test involved finding a causality relation that could be interpreted ambiguously in a conventional timeline. The problem-action link helped the participants find the right answers significantly more often. Ninety percent of the participants in the V-Model group provided correct responses for the non-explicitness (P2) test. This result shows that the non-explicit temporal expression case was successfully modeled and conveyed in our timeline. Next, 92.5% of the V-Model participants could determine the temporal proximity (P3) from the non-temporal TAP expressions. Overall, 94.38% of the participants from both groups agreed that the V-Model represented uneven granularity in one view (P4).

To evaluate the reasoning aspects, we used both accuracy and response time as measurements. Accuracy showed whether the timeline provided sufficient information for temporal reasoning, and response time measured the ease of the temporal reasoning process. In reasoning that P precedes A (R1), both groups showed high accuracy. However, the V-Model group needed less than half the response time of the LifeLines group (median 14.9 s vs. 35.48 s), helped by the graphical separation of the problem and action. In reasoning about the qualitative relation (R2), the two groups showed no statistically significant difference in accuracy (p = 0.058), but the LifeLines group completed the questions in significantly less time (p = 0.036). All of the V-Model participants provided a correct response to the question that required implicit distance reasoning (R3), which was impossible in the LifeLines view.

To measure how easily information could be grasped from the visualization, we compared response times. The response times were statistically significantly faster in the V-Model group for all the evaluation items (V1, V2, and V5). Regarding the dynamic scale preference problem (V4), a large majority of participants (85%) from both groups selected the dynamic timeline of the V-Model as more appropriate for patient history. Although the LifeLines users were unfamiliar with the V-Model, 75% of them chose this unfamiliar timeline description as the better one.

Figure 6 shows the answer distribution of the usability questionnaire, grouping the results for the positive questions and the negative ones. The V-Model was assessed as superior to LifeLines in terms of usability. For the positive questions (questions 1, 3, 5, 7, and 9), a large share of the V-Model participants expressed agreement or strong agreement (scores 4 and 5). Negative opinions were very few, and there was no strong disagreement (score 1). Conversely, in the results for the LifeLines group, we could not find a consensus. For the negative questions (questions 2, 4, 6, 8, and 10), the majority of the V-Model group answers were in disagreement (scores 1 and 2), except for question 10. However, all participants could understand the V-Model without any information. We suppose that the need for an explanation of the multiple P-A link problem affected the result. In the LifeLines group, a different factor affected the result. It is our guess that the lack of causality and difficulties in tracing patient history were the main reasons.

Figure 6. Usability questionnaire results.

The V-Model enables visualization of textual information in a timeline. It represents only apparent P-A relations and describes the other minor relationships in natural language. The P-A link might be criticized as insufficient to cover the various contexts found in clinical documents. However, we believe the model strikes a reasonable balance between maintaining simplicity and conveying the original context at an adequate level.

The V-Model deals with universal natural language problems, such as causality, granularity, and non-explicitness. Although the experiment was performed on Korean EHR data only, these problems are not specific to one language, so the results should apply to other languages as well. The experiment showed that the V-Model functionally achieved our design goals. Furthermore, it outperformed the conventional timeline in most evaluation aspects. However, recognizing qualitative temporal relations (R2) took more time than with the conventional timeline representation. Providing qualitative temporal information while preserving the V-Model’s simplicity remains a challenge.

For the evaluation, we compared manually visualized V-Model timelines with conventional timelines that were automatically generated by LifeLines. The comparison was fair because we focused on timeline properties rather than system-level issues. System implementation using the V-Model is not covered by this paper.

We demonstrated that the V-Model reflects design considerations for NLP. For example, we reduced the semantic tags to only 14 categories, clarified how to determine whether an event is a Problem or an Action, and specified how to visualize events in order of appearance when temporal information is missing.

The ultimate goal of our model is practical use in patient treatment and medical research. We anticipate that the V-Model will play a crucial role in phenotype definition and algorithm development. The model extends our perspective on the data unit from a concept to a sequence of concepts (context block). The V-Model timeline integrates distributed patient data regardless of its original source, type, or institution. It enables a user to trace patient history in a short time while considering semantic, temporal, and causality information. The view would ease and shorten the unavoidable manual review process, thereby accelerating phenotyping.

To enable chronological visualization of narrative patient history, we developed the V-Model. We devised a unique v-shaped structure and modeling strategies to solve natural language representation problems. The V-Model displays clinical events in a restrained format. In particular, the Problem-Action relationship is intuitive, and the relation is extensible to neighboring events. This feature facilitates pattern finding, which would promote high-throughput phenotyping. The V-Model was shown to excel in representing narrative medical events, to provide sufficient information for temporal reasoning, and to offer better readability. Its only disadvantage was the longer time needed to recognize qualitative relationships. Subjects assessed our model positively in the usability evaluation. We conclude that the V-Model can be a new model for narrative clinical events, and that it would make EHR data more reusable.

a Approved by SNUH Institutional Review Board (IRB) (IRB No. 1104-027-357).

Acknowledgements

This research was supported by a grant of the Korean Health Technology R&D Project, Ministry of Health & Welfare, Republic of Korea (A112005), and by the National Research Foundation of Korea (NRF) funded by the Korea government (MSIP) (No. 2010–0028631).

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

Both authors contributed to the conception and design of the study, analysis, and interpretation of data, drafting of the article and final approval for submission. HP and JC designed the study. HP carried out data collection, analysis, and drafted the manuscript, with significant contributions from JC. HP and JC performed data interpretation. Both authors read and approved the final manuscript.

Contributor Information

Heekyong Park

Jinwook Choi

Pre-publication history

- The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6947/14/90/prepub

SAM 2: Segment Anything Model 2

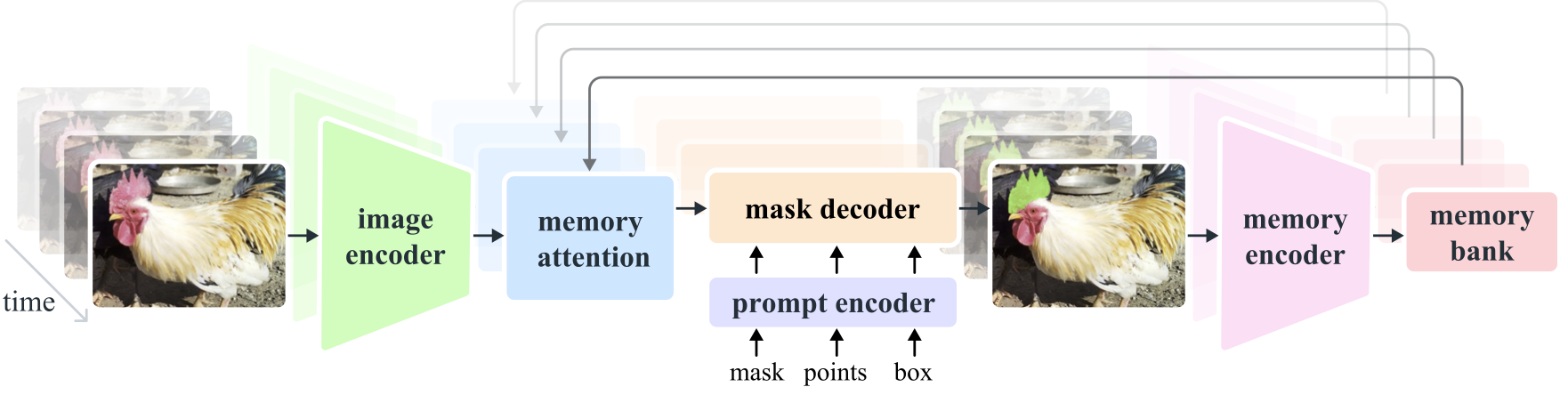

SAM 2, the successor to Meta's Segment Anything Model (SAM) , is a cutting-edge tool designed for comprehensive object segmentation in both images and videos. It excels in handling complex visual data through a unified, promptable model architecture that supports real-time processing and zero-shot generalization.

Key Features

Unified Model Architecture

SAM 2 combines the capabilities of image and video segmentation in a single model. This unification simplifies deployment and allows for consistent performance across different media types. It leverages a flexible prompt-based interface, enabling users to specify objects of interest through various prompt types, such as points, bounding boxes, or masks.

Real-Time Performance

The model achieves real-time inference speeds, processing approximately 44 frames per second. This makes SAM 2 suitable for applications requiring immediate feedback, such as video editing and augmented reality.

Zero-Shot Generalization

SAM 2 can segment objects it has never encountered before, demonstrating strong zero-shot generalization. This is particularly useful in diverse or evolving visual domains where pre-defined categories may not cover all possible objects.

Interactive Refinement

Users can iteratively refine the segmentation results by providing additional prompts, allowing for precise control over the output. This interactivity is essential for fine-tuning results in applications like video annotation or medical imaging.

Advanced Handling of Visual Challenges

SAM 2 includes mechanisms to manage common video segmentation challenges, such as object occlusion and reappearance. It uses a sophisticated memory mechanism to keep track of objects across frames, ensuring continuity even when objects are temporarily obscured or exit and re-enter the scene.

For a deeper understanding of SAM 2's architecture and capabilities, explore the SAM 2 research paper .

Performance and Technical Details

SAM 2 sets a new benchmark in the field, outperforming previous models on various metrics:

| Metric | SAM 2 | Previous SOTA |
|---|---|---|
| | | - |
| | | Baseline |
| | | SAM |
| | | SAM |

Model Architecture

Core Components

- Image and Video Encoder : Utilizes a transformer-based architecture to extract high-level features from both images and video frames. This component is responsible for understanding the visual content at each timestep.

- Prompt Encoder : Processes user-provided prompts (points, boxes, masks) to guide the segmentation task. This allows SAM 2 to adapt to user input and target specific objects within a scene.

- Memory Mechanism : Includes a memory encoder, memory bank, and memory attention module. These components collectively store and utilize information from past frames, enabling the model to maintain consistent object tracking over time.

- Mask Decoder : Generates the final segmentation masks based on the encoded image features and prompts. In video, it also uses memory context to ensure accurate tracking across frames.

Memory Mechanism and Occlusion Handling

The memory mechanism allows SAM 2 to handle temporal dependencies and occlusions in video data. As objects move and interact, SAM 2 records their features in a memory bank. When an object becomes occluded, the model can rely on this memory to predict its position and appearance when it reappears. The occlusion head specifically handles scenarios where objects are not visible, predicting the likelihood of an object being occluded.
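The idea of keeping features from past frames can be illustrated with a toy data structure. This is not SAM 2's implementation: the sketch assumes a fixed-size first-in-first-out store for recent frames plus a separate list for frames that received a user prompt, and the class name, capacity, and string "features" are all hypothetical.

```python
from collections import deque


class ToyMemoryBank:
    """Toy store of per-frame features: prompted frames plus a sliding window of recent ones."""

    def __init__(self, max_recent=6):
        self.recent = deque(maxlen=max_recent)  # oldest entry is dropped when full
        self.prompted = []                      # frames with user prompts are kept

    def add(self, frame_idx, features, prompted=False):
        entry = (frame_idx, features)
        if prompted:
            self.prompted.append(entry)
        else:
            self.recent.append(entry)

    def context(self):
        """Entries a memory-attention step could condition on for the current frame."""
        return self.prompted + list(self.recent)


bank = ToyMemoryBank(max_recent=3)
bank.add(0, "features-0", prompted=True)
for idx in range(1, 10):
    bank.add(idx, f"features-{idx}")
print([idx for idx, _ in bank.context()])  # [0, 7, 8, 9]
```

Because the prompted frame stays available while recent frames roll over, an object that is hidden for a few frames can still be matched against its earlier appearance.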

Multi-Mask Ambiguity Resolution

In situations with ambiguity (e.g., overlapping objects), SAM 2 can generate multiple mask predictions. This feature is crucial for accurately representing complex scenes where a single mask might not sufficiently describe the scene's nuances.

SA-V Dataset

The SA-V dataset, developed for SAM 2's training, is one of the largest and most diverse video segmentation datasets available. It includes:

- 51,000+ Videos : Captured across 47 countries, providing a wide range of real-world scenarios.

- 600,000+ Mask Annotations : Detailed spatio-temporal mask annotations, referred to as "masklets," covering whole objects and parts.

- Dataset Scale : It features 4.5 times more videos and 53 times more annotations than previous largest datasets, offering unprecedented diversity and complexity.

Video Object Segmentation

SAM 2 has demonstrated superior performance across major video segmentation benchmarks:

| Dataset | J&F | J | F |
|---|---|---|---|
| | 82.5 | 79.8 | 85.2 |
| | 81.2 | 78.9 | 83.5 |
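In the table above, J is the region similarity (the Jaccard index, or intersection over union, between predicted and ground-truth masks), F is the boundary accuracy, and J&F is their mean. The sketch below shows the J computation on tiny binary masks and checks that the reported J&F values are the averages of the J and F columns. The function names are hypothetical, and the boundary measure F is not implemented here.

```python
def jaccard(pred, truth):
    """Intersection over union of two binary masks given as nested lists of 0/1."""
    pairs = [(p, t) for p_row, t_row in zip(pred, truth) for p, t in zip(p_row, t_row)]
    intersection = sum(1 for p, t in pairs if p and t)
    union = sum(1 for p, t in pairs if p or t)
    return intersection / union if union else 1.0


def j_and_f(j, f):
    """J&F is the arithmetic mean of region similarity J and boundary accuracy F."""
    return (j + f) / 2


print(jaccard([[1, 1], [0, 0]], [[1, 0], [0, 0]]))  # 0.5
print(round(j_and_f(79.8, 85.2), 1), round(j_and_f(78.9, 83.5), 1))  # 82.5 81.2
```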

Interactive Segmentation

In interactive segmentation tasks, SAM 2 shows significant efficiency and accuracy:

| Dataset | NoC@90 | AUC |
|---|---|---|
| | 1.54 | 0.872 |

Installation

To install SAM 2, use the following command. All SAM 2 models will automatically download on first use.
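Assuming SAM 2 is used through the Ultralytics Python package, as in the rest of this page, installation from PyPI is a one-line command:

```shell
pip install ultralytics
```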

How to Use SAM 2: Versatility in Image and Video Segmentation

The following table details the available SAM 2 models, their pre-trained weights, supported tasks, and compatibility with different operating modes like Inference , Validation , Training , and Export .

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| SAM 2 tiny | | | ✅ | ❌ | ❌ | ❌ |
| SAM 2 small | | | ✅ | ❌ | ❌ | ❌ |
| SAM 2 base | | | ✅ | ❌ | ❌ | ❌ |
| SAM 2 large | | | ✅ | ❌ | ❌ | ❌ |

SAM 2 Prediction Examples

SAM 2 can be utilized across a broad spectrum of tasks, including real-time video editing, medical imaging, and autonomous systems. Its ability to segment both static and dynamic visual data makes it a versatile tool for researchers and developers.

Segment with Prompts

Use prompts to segment specific objects in images or videos.

Segment Everything

Segment the entire image or video content without specific prompts.

- This example demonstrates how SAM 2 can be used to segment the entire content of an image or video if no prompts (bboxes/points/masks) are provided.
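Both modes can be sketched with the Ultralytics `SAM` class. Treat this as a hedged sketch rather than the official example: the helper names `build_prompt_kwargs` and `segment` are hypothetical, the file paths are placeholders, and the `bboxes`, `points`, and `labels` keyword names are given as I recall them from the Ultralytics API, so check them against the installed version.

```python
def build_prompt_kwargs(bboxes=None, points=None, labels=None):
    """Collect only the prompts that were supplied; an empty dict means 'segment everything'."""
    kwargs = {}
    if bboxes is not None:
        kwargs["bboxes"] = bboxes      # [x1, y1, x2, y2] in pixels
    if points is not None:
        kwargs["points"] = points      # [x, y] in pixels
        if labels is not None:
            kwargs["labels"] = labels  # 1 = foreground point, 0 = background point
    return kwargs


def segment(source, weights="sam2_b.pt", **prompts):
    """Run SAM 2 on an image or video path, with or without prompts."""
    # Imported lazily so this sketch can be loaded without the package installed.
    from ultralytics import SAM

    model = SAM(weights)  # weights download automatically on first use
    return model(source, **build_prompt_kwargs(**prompts))
```

With this helper, `segment("path/to/image.jpg", bboxes=[100, 100, 200, 200])` segments the object inside a box, `segment("path/to/image.jpg", points=[150, 150], labels=[1])` segments the object under a foreground point, and `segment("path/to/image.jpg")` passes no prompts and segments everything.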

SAM comparison vs YOLOv8

Here we compare Meta's smallest SAM model, SAM-b, with Ultralytics' smallest segmentation model, YOLOv8n-seg:

| Model | Size | Parameters | Speed (CPU) |
|---|---|---|---|
| Meta's SAM-b | 358 MB | 94.7 M | 51096 ms/im |
| | 40.7 MB | 10.1 M | 46122 ms/im |
| with YOLOv8 backbone | 23.7 MB | 11.8 M | 115 ms/im |
| Ultralytics YOLOv8n-seg | (53.4x smaller) | (27.9x less) | (866x faster) |

This comparison shows the order-of-magnitude differences in the model sizes and speeds between models. Whereas SAM presents unique capabilities for automatic segmenting, it is not a direct competitor to YOLOv8 segment models, which are smaller, faster and more efficient.
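The multiples in the last table row can be turned back into approximate absolute figures with simple division. This is a worked-arithmetic sketch only, and it assumes the multiples are measured relative to the SAM-b row.

```python
# SAM-b figures from the table, and the multiples quoted for the Ultralytics row.
sam_b = {"size_mb": 358.0, "params_m": 94.7, "cpu_ms_per_im": 51096.0}
multiples = {"size_mb": 53.4, "params_m": 27.9, "cpu_ms_per_im": 866.0}

# Dividing each SAM-b figure by its multiple gives the implied value.
implied = {key: sam_b[key] / multiples[key] for key in sam_b}

for key, value in implied.items():
    print(f"{key}: {value:.1f}")
# size_mb: 6.7
# params_m: 3.4
# cpu_ms_per_im: 59.0
```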

Tests were run on a 2023 Apple M2 MacBook with 16 GB of RAM.

Auto-Annotation: Efficient Dataset Creation

Auto-annotation is a powerful feature of SAM 2, enabling users to generate segmentation datasets quickly and accurately by leveraging pre-trained models. This capability is particularly useful for creating large, high-quality datasets without extensive manual effort.

How to Auto-Annotate with SAM 2

To auto-annotate your dataset using SAM 2, follow this example:

Auto-Annotation Example
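A hedged sketch of the call, wrapped in a small helper: it assumes the `auto_annotate` function in `ultralytics.data.annotator` and the keyword names `data`, `det_model`, `sam_model`, `device`, and `output_dir`, as I recall them from the Ultralytics API, so verify them against the installed version. The wrapper name `annotate_folder` is hypothetical, and the defaults mirror the ones described in the argument table.

```python
def annotate_folder(data, det_model="yolov8x.pt", sam_model="sam2_b.pt",
                    device="", output_dir=None):
    """Detect objects with a YOLO model, then segment each detection with SAM 2."""
    # Imported lazily so this sketch can be loaded without the package installed.
    from ultralytics.data.annotator import auto_annotate

    auto_annotate(
        data=data,              # folder containing the images to annotate
        det_model=det_model,    # pre-trained YOLO detection weights
        sam_model=sam_model,    # pre-trained SAM 2 segmentation weights
        device=device,          # empty string lets the library pick CPU or GPU
        output_dir=output_dir,  # None falls back to the library's default folder
    )
```

Calling `annotate_folder("path/to/images")` would then write one label file per image, using the detector's boxes as prompts for the segmentation model.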

| Argument | Type | Description | Default |
|---|---|---|---|
| | | Path to a folder containing images to be annotated. | |
| | optional | Pre-trained YOLO detection model. Defaults to 'yolov8x.pt'. | |
| | optional | Pre-trained SAM 2 segmentation model. Defaults to 'sam2_b.pt'. | |
| | optional | Device to run the models on. Defaults to an empty string (CPU or GPU, if available). | |
| | optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | |

This function facilitates the rapid creation of high-quality segmentation datasets, ideal for researchers and developers aiming to accelerate their projects.

Limitations

Despite its strengths, SAM 2 has certain limitations:

- Tracking Stability : SAM 2 may lose track of objects during extended sequences or significant viewpoint changes.

- Object Confusion : The model can sometimes confuse similar-looking objects, particularly in crowded scenes.

- Efficiency with Multiple Objects : Segmentation efficiency decreases when processing multiple objects simultaneously due to the lack of inter-object communication.

- Detail Accuracy : May miss fine details, especially with fast-moving objects. Additional prompts can partially address this issue, but temporal smoothness is not guaranteed.

Citations and Acknowledgements

If SAM 2 is a crucial part of your research or development work, please cite it using the following reference:

We extend our gratitude to Meta AI for their contributions to the AI community with this groundbreaking model and dataset.

What is SAM 2 and how does it improve upon the original Segment Anything Model (SAM)?

SAM 2, the successor to Meta's Segment Anything Model (SAM) , is a cutting-edge tool designed for comprehensive object segmentation in both images and videos. It excels in handling complex visual data through a unified, promptable model architecture that supports real-time processing and zero-shot generalization. SAM 2 offers several improvements over the original SAM, including:

- Unified Model Architecture : Combines image and video segmentation capabilities in a single model.

- Real-Time Performance : Processes approximately 44 frames per second, making it suitable for applications requiring immediate feedback.

- Zero-Shot Generalization : Segments objects it has never encountered before, useful in diverse visual domains.

- Interactive Refinement : Allows users to iteratively refine segmentation results by providing additional prompts.

- Advanced Handling of Visual Challenges : Manages common video segmentation challenges like object occlusion and reappearance.

For more details on SAM 2's architecture and capabilities, explore the SAM 2 research paper .

How can I use SAM 2 for real-time video segmentation?

SAM 2 can be used for real-time video segmentation through its promptable interface and real-time inference capabilities. For usage details, refer to the How to Use SAM 2 section.

What datasets are used to train SAM 2, and how do they enhance its performance?

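SAM 2 is trained on the SA-V dataset, one of the largest and most diverse video segmentation datasets available. The SA-V dataset includes:

- 51,000+ Videos : Captured across 47 countries, providing a wide range of real-world scenarios.

- 600,000+ Mask Annotations : Detailed spatio-temporal mask annotations, referred to as "masklets," covering whole objects and parts.

- Dataset Scale : Features 4.5 times more videos and 53 times more annotations than previous largest datasets, offering unprecedented diversity and complexity.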

This extensive dataset allows SAM 2 to achieve superior performance across major video segmentation benchmarks and enhances its zero-shot generalization capabilities. For more information, see the SA-V Dataset section.

How does SAM 2 handle occlusions and object reappearances in video segmentation?

SAM 2 includes a sophisticated memory mechanism to manage temporal dependencies and occlusions in video data. The memory mechanism consists of:

- Memory Encoder and Memory Bank : Stores features from past frames.

- Memory Attention Module : Utilizes stored information to maintain consistent object tracking over time.

- Occlusion Head : Specifically handles scenarios where objects are not visible, predicting the likelihood of an object being occluded.

This mechanism ensures continuity even when objects are temporarily obscured or exit and re-enter the scene. For more details, refer to the Memory Mechanism and Occlusion Handling section.

How does SAM 2 compare to other segmentation models like YOLOv8?

SAM 2 and Ultralytics YOLOv8 serve different purposes and excel in different areas. While SAM 2 is designed for comprehensive object segmentation with advanced features like zero-shot generalization and real-time performance, YOLOv8 is optimized for speed and efficiency in object detection and segmentation tasks. For a side-by-side comparison of model size, parameter count, and CPU speed, see the SAM comparison vs YOLOv8 section.

Research on a Fast Resistance Performance Prediction Method for SUBOFF Model Based on Physics-Informed Neural Networks (PINNs)

Wu, Y, Han, F, Peng, X, Gao, L, Zhao, Y, & Zhang, J. "Research on a Fast Resistance Performance Prediction Method for SUBOFF Model Based on Physics-Informed Neural Networks (PINNs)." Proceedings of the ASME 2024 43rd International Conference on Ocean, Offshore and Arctic Engineering . Volume 6: Polar and Arctic Sciences and Technology; CFD, FSI, and AI . Singapore, Singapore. June 9–14, 2024. V006T08A041. ASME. https://doi.org/10.1115/OMAE2024-126300

Physics-Informed Neural Networks (PINNs) represent a novel intelligent algorithm for solving partial differential equations (PDEs), which has been partially validated in solving Navier-Stokes equations. However, numerous challenges remain in the application of PINNs to the calculation of hydrodynamic performance of ships.

This paper utilizes discrete solutions of the Navier-Stokes (N-S) equations obtained via the Finite Volume Method (FVM) and Reynolds Averaged Navier-Stokes (RANS) approach of the CFD method for training PINNs to directly solve flow field information. This approach achieves a solution with approximate accuracy to that based on the CFD method, but with significantly reduced time consumption. The development of this algorithm could offer a novel perspective for calculating the hydrodynamic performance of ships.

The study focuses on the DARPA SUBOFF-B model, using field information obtained at specific speeds through the CFD method, including pressure and velocity fields, as the training set to train the neural parameters within PINNs. This enables the PINNs model to predict the flow field information around the hull and calculate the hull resistance through integration. The results predicted by the PINNs method are compared against those calculated via the CFD method, including the predicted hull surface pressure field and the relative error of the predicted total resistance, thereby demonstrating the feasibility of the method.
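The step of calculating resistance through integration can be illustrated with a discrete surface sum. This is a minimal sketch, not the paper's procedure: it covers only the pressure contribution (the frictional part from wall shear stress is omitted), it assumes the hull surface is split into flat panels with outward unit normals, and the panel data and the function name `pressure_resistance` are hypothetical.

```python
def pressure_resistance(panels, flow_dir=(1.0, 0.0, 0.0)):
    """Pressure force along flow_dir: sum of -p * (n . flow_dir) * A over all panels.

    Each panel is (pressure, outward_unit_normal, area). The minus sign appears
    because pressure pushes on the surface against its outward normal.
    """
    total = 0.0
    for pressure, normal, area in panels:
        n_dot_d = sum(n * d for n, d in zip(normal, flow_dir))
        total += -pressure * n_dot_d * area
    return total


# Hypothetical three-panel body in a flow along +x (pressures in Pa, areas in m^2).
panels = [
    (100.0, (-1.0, 0.0, 0.0), 2.0),  # front face, high pressure
    (40.0, (1.0, 0.0, 0.0), 2.0),    # rear face, lower pressure
    (70.0, (0.0, 1.0, 0.0), 5.0),    # side face, no contribution along x
]
print(pressure_resistance(panels))  # 120.0
```

In a PINN workflow, the panel pressures would come from evaluating the trained network at the surface points instead of from a CFD solution.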

Action recognition method based on multi-stream attention-enhanced recursive graph convolution

- Published: 07 August 2024

Huaijun Wang, Bingqian Bai, Junhuai Li (ORCID: orcid.org/0000-0001-5483-5175), Hui Ke & Wei Xiang

Skeleton-based action recognition methods have become a research hotspot due to their robustness against variations in lighting, complex backgrounds, and viewpoint changes. To address long-distance joint associations and time-varying joint correlations in skeleton data, this paper proposes a Multi-Stream Attention-Enhanced Recursive Graph Convolution method for action recognition. The method extracts four types of features from the skeleton data: joints, bones, joint movements, and bone movements. It models the potential relationships between non-adjacent nodes through adaptive graph convolution and uses a long short-term memory network for recursive learning of the graph structure to capture the temporal correlations of joints. Additionally, a spatio-temporal channel attention module is introduced to enable the model to focus more on important joints, frames, and channel features, further improving performance. Finally, the recognition results of the four branches are fused at the decision level to complete the action recognition. Experimental results on public datasets (UTD-MHAD, CZU-MHAD) and a self-constructed dataset (KTH-Skeleton) show that the proposed method achieves accuracies of 94.65%, 95.01%, and 97.50%, respectively, demonstrating its good performance.
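The four input streams and the decision-level fusion can be sketched with plain Python. The abstract does not define them, so this follows conventions common in multi-stream skeleton models and should be read as an assumption: a bone is a joint's coordinate minus its parent's, a movement is the difference between consecutive frames, and fusion sums the per-class scores of the branches. All function names and the toy skeleton are hypothetical.

```python
def bone_vectors(frame, parents):
    """Bone feature per joint: its coordinate minus the coordinate of its parent joint."""
    return [tuple(c - p for c, p in zip(frame[j], frame[parents[j]]))
            for j in range(len(frame))]


def motion(sequence):
    """Movement feature: element-wise difference between consecutive frames."""
    return [[tuple(b - a for a, b in zip(v0, v1)) for v0, v1 in zip(f0, f1)]
            for f0, f1 in zip(sequence, sequence[1:])]


def fuse_scores(scores_per_stream):
    """Decision-level fusion: sum per-class scores over streams, return the best class index."""
    n_classes = len(scores_per_stream[0])
    summed = [sum(stream[k] for stream in scores_per_stream) for k in range(n_classes)]
    return max(range(n_classes), key=summed.__getitem__)


# Toy 3-joint skeleton over 2 frames; the root joint (index 0) is its own parent.
parents = [0, 0, 1]
joints = [[(0, 0, 0), (1, 0, 0), (1, 2, 0)],
          [(0, 0, 0), (1, 1, 0), (1, 3, 0)]]
bones = [bone_vectors(frame, parents) for frame in joints]
joint_motion, bone_motion = motion(joints), motion(bones)

# Hypothetical class scores from the four branches (joints, bones, and their movements).
print(fuse_scores([[0.1, 0.9], [0.6, 0.4], [0.2, 0.8], [0.3, 0.7]]))  # 1
```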

Availability of data and materials.

The data sets analyzed in the current study are publicly available data sets: https://personal.utdallas.edu/~kehtar/UTD-MHAD.html , https://github.com/yujmo/CZU_MHAD .

Code availability

Code availability not applicable.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61971347), Doctoral Innovation Foundation of Xi’an University of Technology (No. 252072118), Natural Science Foundation of Shaanxi Province of China (No. 2021JM-344), Xi’an Science and Technology Plan Project (2022JH-RYFW-007) (24DCYJSGG0020), Key research and development program of Shaanxi Province (2022SF-353).

Author information

Authors and affiliations

School of Computer Science and Engineering, Xi’an University of Technology, No. 5 South Jinhua Road, Xi’an, 710048, Shaanxi, China

Huaijun Wang, Bingqian Bai, Junhuai Li & Hui Ke

Shaanxi Key Laboratory for Network Computing and Security Technology, No. 5 South Jinhua Road, Xi’an, 710048, Shaanxi, China

Huaijun Wang & Junhuai Li

School of Computing, Engineering and Mathematical Sciences, La Trobe University, Melbourne, 3086, Australia

Contributions

All authors contributed to the study conception and design. Huaijun Wang and Junhuai Li were responsible for the overall research design, provided research direction and guidance, and assisted in writing and proofreading the paper. Hui Ke participated in the experiments, data collection, and data analysis, and also took part in writing the paper. Xiang Wei provided resource support as well as academic support and guidance. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Junhuai Li.

Ethics declarations

Conflict of interest

All authors declare that they have no conflicts of interest.

Ethics approval

Not applicable.

Consent to participate

Consent for publication

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Wang, H., Bai, B., Li, J. et al. Action recognition method based on multi-stream attention-enhanced recursive graph convolution. Appl Intell (2024). https://doi.org/10.1007/s10489-024-05719-0

Accepted: 27 July 2024

Published: 07 August 2024

DOI: https://doi.org/10.1007/s10489-024-05719-0

- Skeleton data

- Multi-stream attention

- Graph convolution

- Action recognition

Title: EXAONE 3.0 7.8B Instruction Tuned Language Model

Abstract: We introduce EXAONE 3.0 instruction-tuned language model, the first open model in the family of Large Language Models (LLMs) developed by LG AI Research. Among different model sizes, we publicly release the 7.8B instruction-tuned model to promote open research and innovations. Through extensive evaluations across a wide range of public and in-house benchmarks, EXAONE 3.0 demonstrates highly competitive real-world performance with instruction-following capability against other state-of-the-art open models of similar size. Our comparative analysis shows that EXAONE 3.0 excels particularly in Korean, while achieving compelling performance across general tasks and complex reasoning. With its strong real-world effectiveness and bilingual proficiency, we hope that EXAONE keeps contributing to advancements in Expert AI. Our EXAONE 3.0 instruction-tuned model is available at this https URL

| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI) |
