
Secondary Research: Definition, Methods and Examples.

In the world of research, there are two main types of data sources: primary and secondary. While primary research involves collecting new data directly from individuals or sources, secondary research involves analyzing existing data already collected by someone else. Today we’ll discuss secondary research.

One common source of secondary data is published research reports and other documents. These materials can often be found in public libraries and on websites, or they can take the form of data extracted from previously conducted surveys. In addition, many government and non-government agencies maintain extensive data repositories that can be accessed for research purposes.


While secondary research may not offer the same level of control as primary research, it can be a highly valuable tool for gaining insights and identifying trends. Researchers can save time and resources by leveraging existing data sources while still uncovering important information.

What Is Secondary Research? Definition

Secondary research is a research method that uses data that already exists. The existing data is summarized and collated so that it can support the research question at hand.

One of the key advantages of secondary research is that it allows us to gain insights and draw conclusions without having to collect new data ourselves. This can save time and resources and also allow us to build upon existing knowledge and expertise.

When conducting secondary research, it’s important to be thorough and thoughtful in our approach. This means carefully selecting the sources and ensuring that the data we’re analyzing is reliable and relevant to the research question. It also means being critical and analytical in the analysis and recognizing any potential biases or limitations in the data.


Secondary research is usually much more cost-effective than primary research because it uses data that already exists. In primary research, by contrast, data is collected firsthand by organizations or businesses, or by a third party they hire to collect it on their behalf.


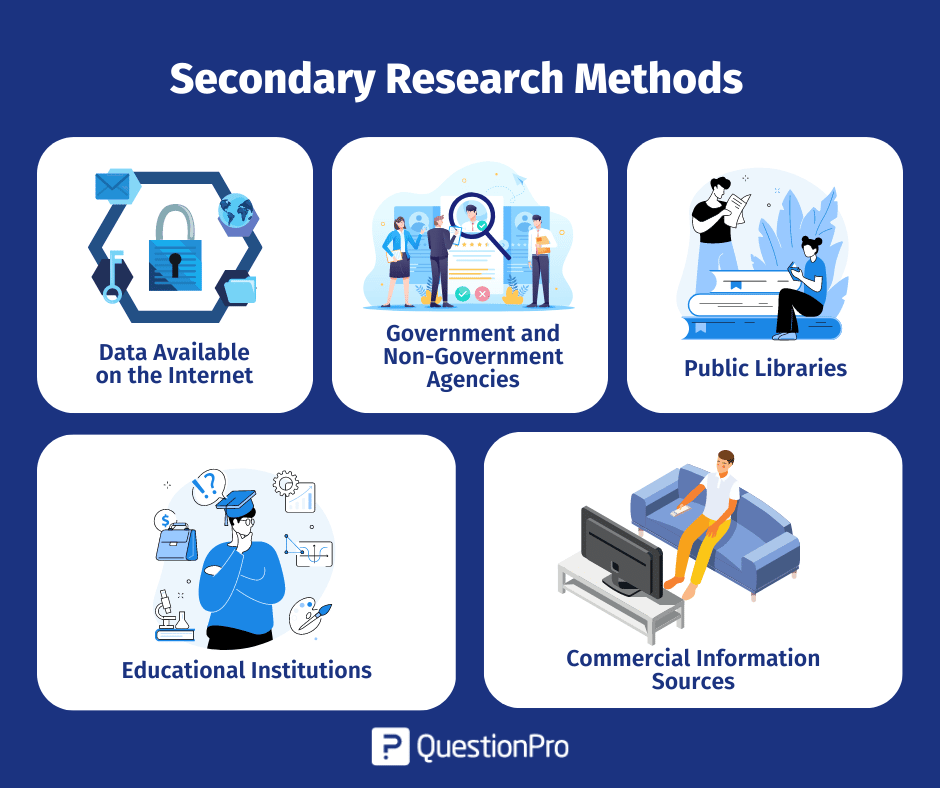

Secondary Research Methods with Examples

Secondary research is cost-effective, and that is one reason it is popular among businesses and organizations. Not every organization can afford to pay a large sum to conduct research and gather data. Secondary research is also called “desk research” because the data can be retrieved while sitting behind a desk.

The following are popularly used secondary research methods and examples:

1. Data Available on the Internet

One of the most popular ways to collect secondary data is the internet. Data is readily available on the internet and can be downloaded at the click of a button.

Much of this data is free, or available for a negligible fee. Websites hold a lot of information that businesses or organizations can use for their research needs. However, organizations should collect information only from authentic and trusted websites.

2. Government and Non-Government Agencies

Data for secondary research can also be collected from government and non-government agencies. For example, the US Government Printing Office, the US Census Bureau, and Small Business Development Centers have valuable and relevant data that businesses or organizations can use.

Some of these agencies may charge a fee to download or use their data, although much government data is free to access. Data obtained from these agencies is generally authentic and trustworthy.

3. Public Libraries

Public libraries are another good source of data for secondary research. They hold copies of important research that was conducted earlier, and they are a storehouse of documents from which information can be extracted.

The services provided by public libraries vary from one library to another. Libraries often hold large collections of government publications with market statistics, as well as business directories and newsletters.

4. Educational Institutions

The importance of collecting data from educational institutions for secondary research is often overlooked, even though colleges and universities conduct a large volume of research.

Universities mainly collect data for their own primary research. However, businesses or organizations can approach educational institutions and request data from them.

5. Commercial Information Sources

Local newspapers, journals, magazines, radio and TV stations are a great source to obtain data for secondary research. These commercial information sources have first-hand information on economic developments, political agenda, market research, demographic segmentation and similar subjects.

Businesses or organizations can request the data that is most relevant to their study. These sources also have a wide reach, so businesses can identify prospective clients and learn about channels for promoting their products or services.

Key Differences between Primary Research and Secondary Research

Understanding the distinction between primary research and secondary research is essential in determining which research method is best for your project. These are the two main types of research methods, and each has advantages and disadvantages. Primary research collects new data firsthand. This gives the researcher more control, but it costs more time and money. Secondary research analyzes data that already exists. It is faster and cheaper, but it depends on the quality and recency of someone else’s work.

How to Conduct Secondary Research?

We have already learned about the differences between primary and secondary research. Now, let’s take a closer look at how to conduct it.

Secondary research is an important tool for gathering information already collected and analyzed by others. It can help us save time and money and allow us to gain insights into the subject we are researching. So, in this section, we will discuss some common methods and tips for conducting it effectively.

Here are the steps involved in conducting secondary research:

1. Identify the topic of research: Before beginning secondary research, identify the topic that needs research. Once that’s done, list the research attributes and the purpose of the research.

2. Identify research sources: Next, narrow down the information sources that will provide the most relevant data and information for your research.

3. Collect existing data: Once the sources are narrowed down, check for previously collected data that is closely related to the topic. Relevant data can be obtained from various sources, such as newspapers, public libraries, and government and non-government agencies.

4. Combine and compare: Once data is collected, combine and compare it to check for duplication, and assemble it into a usable format. Make sure to collect data from authentic sources, because incorrect data can severely hamper research.

5. Analyze data: Analyze the collected data and check whether all research questions are answered. If they are not, repeat the process to dig further for actionable insights.
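Steps 3 to 5 can be illustrated with a short Python sketch. It is not a prescribed workflow. It assumes each source has already been exported as a list of flat records. The function names, source names, fields, and values are all hypothetical. If several sources report the same record, it is kept once and tagged with every source that reported it. Records that disagree on any field are kept separately, so conflicts stay visible for manual review.

```python
from collections import defaultdict


def combine_sources(sources):
    """Merge records from several sources, dropping exact duplicates.

    `sources` maps a source name to a list of flat dicts (hypothetical
    format). A record that several sources report identically is kept
    once and tagged with all of them; records that differ stay separate.
    """
    merged = {}                      # record key -> first copy of the record
    reported_by = defaultdict(set)   # record key -> names of reporting sources
    for source_name, records in sources.items():
        for record in records:
            key = tuple(sorted(record.items()))  # identical fields => same key
            merged.setdefault(key, dict(record))
            reported_by[key].add(source_name)
    return [{**rec, "sources": sorted(reported_by[key])} for key, rec in merged.items()]


def unanswered(questions, records):
    """Step 5: research questions that no collected record addresses."""
    covered = {rec["question"] for rec in records}
    return [q for q in questions if q not in covered]


# Hypothetical exports from two sources.
sources = {
    "library_report": [
        {"question": "market_size", "value": 120},
        {"question": "growth_rate", "value": 0.05},
    ],
    "agency_dataset": [
        {"question": "market_size", "value": 120},  # same figure as the report
    ],
}

records = combine_sources(sources)
gaps = unanswered(["market_size", "growth_rate", "top_competitors"], records)
print(len(records), gaps)  # prints: 2 ['top_competitors']
```

Any question left in `gaps` is a signal to go back to step 2 and look for additional sources.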

Advantages of Secondary Research

Secondary research offers a number of advantages to researchers, including efficiency, the ability to build upon existing knowledge, and the ability to conduct research in situations where primary research may not be possible or ethical. By carefully selecting their sources and being thoughtful in their approach, researchers can leverage secondary research to drive impact and advance the field. Some key advantages are the following:

1. Most information needed for secondary research is readily available. Relevant data can be collected from many sources. In primary research, by contrast, data needs to be collected from scratch.

2. It is a less expensive and less time-consuming process, because the required data is easily available and doesn’t cost much when extracted from authentic sources. Obtaining the data requires minimal expenditure.

3. The data collected through secondary research gives organizations or businesses an idea of whether primary research would be worthwhile. Organizations or businesses can then form a hypothesis and evaluate the cost of conducting primary research.

4. Secondary research is quicker to conduct because the data is already available. It can often be completed within a few weeks, depending on the business objective or the scale of data needed.

As we can see, this research is the process of analyzing data already collected by someone else, and it can offer a number of benefits to researchers.

Disadvantages of Secondary Research

Secondary research also has some disadvantages. The most notable are the following:

1. Although data is readily available, its credibility must be evaluated to establish whether the information is authentic.

2. Not all secondary data sources offer the latest reports and statistics. Even when the data is accurate, it may not be recent enough to reflect current conditions.

3. Secondary research draws its conclusions from data collected through earlier primary research. The success of your research therefore depends, to a large extent, on the quality of that primary research.


In conclusion, secondary research is an important tool for researchers exploring various topics. By leveraging existing data sources, researchers can save time and resources, build upon existing knowledge, and conduct research in situations where primary research may not be feasible.

There are a variety of methods and examples of secondary research, from analyzing public data sets to reviewing previously published research papers. As students and aspiring researchers, it’s important to understand the benefits and limitations of this research and to approach it thoughtfully and critically. By doing so, we can continue to advance our understanding of the world around us and contribute to meaningful research that positively impacts society.

QuestionPro can support secondary research in several ways. You can analyze existing data sets that you have obtained through other means, and you can benchmark your organization against others in your industry or against industry standards. The software provides a range of benchmarking tools for comparing your performance on key metrics, such as customer satisfaction, with that of your peers. You can also create online surveys that target a specific population, adding new data on consumer behavior, attitudes, and preferences to what the existing sources provide.

Using QuestionPro thoughtfully and strategically allows you to gain valuable insights to inform decision-making and drive business success.


Using an existing data set to answer new research questions: a methodological review

Affiliation.

- University of San Francisco, California, USA.

- PMID: 19769213

- DOI: 10.1891/1541-6577.23.3.203

The vast majority of the research methods literature assumes that the researcher designs the study after determining the research questions. This assumption does not hold for the many researchers involved in secondary data analysis. Researchers doing secondary data analysis need to understand not only the research concepts related to designing a new study but also the challenges specific to conducting research with an existing data set. Techniques are discussed for determining whether secondary data analysis is appropriate. Suggestions are offered on how best to identify, obtain, and evaluate a data set; refine research questions; manage data; calculate power; and report results. Examples from nursing research are provided. If an existing data set is suitable for answering a new research question, then a secondary analysis is preferable, since it can be completed in less time, for less money, and with far lower risks to subjects. The researcher must carefully consider whether the existing data set's available power and data quality are adequate to answer the proposed research questions.
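The following Python sketch makes the power question concrete. The abstract does not specify a statistical test. The sketch therefore assumes a two-sided comparison of two group means with equal group sizes and uses the normal approximation. The function name and example numbers are hypothetical. The sample size of an existing data set is fixed, so the useful question is how much power that n provides for the effect the new research question is about.

```python
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided comparison of two group means.

    Normal approximation: power ~= Phi(d * sqrt(n / 2) - z_(1 - alpha/2)),
    where d is the standardized effect size and n is the number of cases
    per group available in the existing data set. The tiny probability of
    rejecting in the opposite tail is ignored.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    return std_normal.cdf(effect_size * (n_per_group / 2) ** 0.5 - z_crit)


# Hypothetical: the existing data set has 64 cases per group, and the new
# research question concerns a medium-sized effect (d = 0.5).
print(round(approx_power(0.5, 64), 2))  # prints: 0.81
```

With only 20 cases per group, the same calculation gives a power of roughly 0.35, which would suggest the data set is too small for that question. An exact calculation for a t-test gives slightly lower values at small sample sizes.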

Publication types

- Research Support, Non-U.S. Gov't

- Case-Control Studies

- Data Collection / methods*

- Data Collection / standards

- Nursing Methodology Research / methods*

- Nursing Research / methods*

- Reproducibility of Results

- Research Design*

- Review Literature as Topic


Secondary research: definition, methods, & examples.

This ultimate guide to secondary research helps you understand changes in market trends, customers’ buying patterns, and your competition using existing data sources.

In situations where you’re not involved in the data gathering process (primary research), you have to rely on existing information and data to arrive at specific research conclusions or outcomes. This approach is known as secondary research.

In this article, we’re going to explain what secondary research is, how it works, and share some examples of it in practice.


What is secondary research?

Secondary research, also known as desk research, is a research method that involves compiling existing data sourced from a variety of channels. This includes internal sources (e.g., in-house research) or, more commonly, external sources (such as government statistics, organizational bodies, and the internet).

Secondary research comes in several formats, such as published datasets, reports, and survey responses, and can also be sourced from websites, libraries, and museums.

The information is usually free (or available at a limited access cost) and was originally gathered using surveys, telephone interviews, observation, face-to-face interviews, and more.

When using secondary research, researchers collect, verify, analyze, and incorporate the data to help confirm their research goals for the research period.

Secondary research can also be used to review previous research into an area of interest. Researchers can look for patterns across data spanning several years and identify trends. They can also use it to verify early hypotheses and establish whether it’s worth continuing research into a prospective area.

How to conduct secondary research

There are five key steps to conducting secondary research effectively and efficiently:

1. Identify and define the research topic

First, understand what you will be researching and define the topic by thinking about the research questions you want answered.

Ask yourself: What is the point of conducting this research? Then, ask: What do we want to achieve?

The answers may point to an exploratory aim (understanding why something happened) or to confirming a hypothesis. They may also show whether the questions call for primary research, secondary research, or a combination of both.

2. Find research and existing data sources

If secondary research is needed, think about where you might find the information. This helps you narrow down your secondary sources to those that help you answer your questions. What keywords do you need to use?

Which organizations are closely working on this topic already? Are there any competitors that you need to be aware of?

Create a list of the data sources, information, and people that could help you with your work.

3. Begin searching and collecting the existing data

Now that you have the list of data sources, start accessing the data and collect the information into an organized system. This may mean you start setting up research journal accounts or making telephone calls to book meetings with third-party research teams to verify the details around data results.

As you search and access information, remember to check the data’s date, the credibility of the source, the relevance of the material to your research topic, and the methodology used by the third-party researchers. Start small and as you gain results, investigate further in the areas that help your research’s aims.
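These checks can be turned into a simple screening step. The sketch below is only an illustration. The `Source` fields, the five-year age cutoff, and the example sources are assumptions, not a standard. In practice, judging credibility and methodology still requires reading the source. The function keeps each source that passes every check and records the reasons for rejecting the rest.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Source:
    name: str
    published: date
    credible: bool                 # e.g., official agency or peer-reviewed journal
    relevant: bool                 # covers the research topic
    methodology_documented: bool   # collection method is described


def screen(sources, today, max_age_years=5):
    """Return (kept source names, {rejected name: reasons}).

    max_age_years is an assumed cutoff; pick one that suits your market.
    """
    kept, rejected = [], {}
    for s in sources:
        reasons = []
        age_years = (today - s.published).days / 365.25
        if age_years > max_age_years:
            reasons.append(f"{age_years:.1f} years old")
        if not s.credible:
            reasons.append("low credibility")
        if not s.relevant:
            reasons.append("off topic")
        if not s.methodology_documented:
            reasons.append("methodology unknown")
        if reasons:
            rejected[s.name] = reasons
        else:
            kept.append(s.name)
    return kept, rejected


# Hypothetical candidate sources.
candidates = [
    Source("census_table", date(2022, 6, 1), True, True, True),
    Source("blog_post", date(2023, 1, 15), False, True, False),
    Source("old_survey", date(2012, 3, 1), True, True, True),
]
kept, rejected = screen(candidates, today=date(2024, 1, 1))
print(kept)  # prints: ['census_table']
print(rejected)
```

Passing `today` explicitly, rather than reading the system clock, keeps the screening reproducible when you revisit it later.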

4. Combine the data and compare the results

When you have your data in one place, you need to understand, filter, order, and combine it intelligently. Data may come in different formats. Some of it could be unusable, and some may need to be deleted.

After this, you can start to look at different data sets to see what they tell you. You may find that you need to compare the same datasets over different periods for changes over time or compare different datasets to notice overlaps or trends. Ask yourself: What does this data mean to my research? Does it help or hinder my research?
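Comparing the same dataset over two periods often comes down to calculating the change for each metric and noting what is missing from one period. The following is a minimal Python sketch. It assumes both snapshots have already been reduced to mappings from metric names to values. The metric names and figures are hypothetical.

```python
def percent_change(old, new):
    """Relative change from one period to the next, in percent."""
    if old == 0:
        raise ValueError("percent change is undefined when the earlier value is 0")
    return (new - old) / old * 100


def compare_periods(earlier, later):
    """Compare two snapshots of the same dataset.

    Returns the percent change for every metric present in both periods,
    plus the metrics that appear in only one period (a coverage gap).
    """
    shared = sorted(earlier.keys() & later.keys())
    changes = {m: round(percent_change(earlier[m], later[m]), 1) for m in shared}
    only_one = sorted(earlier.keys() ^ later.keys())
    return changes, only_one


# Hypothetical sales figures (thousands of units) from two annual reports.
sales_2022 = {"online": 400, "in_store": 600, "wholesale": 150}
sales_2023 = {"online": 500, "in_store": 570}

changes, gaps = compare_periods(sales_2022, sales_2023)
print(changes)  # prints: {'in_store': -5.0, 'online': 25.0}
print(gaps)     # prints: ['wholesale']
```

Listing the metrics that appear in only one period prevents a change in coverage from being mistaken for a change in the market.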

5. Analyze your data and explore further

In this last stage of the process, look at the information you have and ask yourself if this answers your original questions for your research. Are there any gaps? Do you understand the information you’ve found? If you feel there is more to cover, repeat the steps and delve deeper into the topic so that you can get all the information you need.

If secondary research can’t provide these answers, consider supplementing your results with data gained from primary research. As you explore further, add to your knowledge and update your findings. This will help you present clear, credible information.

Primary vs secondary research

Unlike secondary research, primary research involves creating data first-hand by directly working with interviewees, target users, or a target market. Primary research focuses on the method for carrying out research, asking questions, and collecting data using approaches such as:

- Interviews (panel, face-to-face or over the phone)

- Questionnaires or surveys

- Focus groups

Using these methods, researchers can get in-depth, targeted responses to questions, making results more accurate and specific to their research goals. However, it does take time to do and administer.

Unlike primary research, secondary research uses existing data, which also includes published results from primary research. Researchers summarize the existing research and use the results to support their research goals.

Both primary and secondary research have their places. Primary research can support the findings found through secondary research (and fill knowledge gaps), while secondary research can be a starting point for further primary research. Because of this, these research methods are often combined for optimal research results that are accurate at both the micro and macro level.

Sources of Secondary Research

There are two types of secondary research sources: internal and external. Internal data refers to in-house data that can be gathered from the researcher’s organization. External data refers to data published outside of and not owned by the researcher’s organization.

Internal data

Internal data is a good first port of call for insights and knowledge, as you may already have relevant information stored in your systems. You own this information and it won’t be available to other researchers, so it can give you a competitive edge. Examples of internal data include:

- Database information on sales history and business goal conversions

- Information from website applications and mobile site data

- Customer-generated data on product and service efficiency and use

- Previous research results or supplemental research areas

- Previous campaign results

External data

External data is useful when you: 1) need information on a new topic, 2) want to fill in gaps in your knowledge, or 3) want data that breaks down a population or market for trend and pattern analysis. Examples of external data include:

- Government, non-government agencies, and trade body statistics

- Company reports and research

- Competitor research

- Public library collections

- Textbooks and research journals

- Media stories in newspapers

- Online journals and research sites

Three examples of secondary research methods in action

How and why might you conduct secondary research? Let’s look at a few examples:

1. Collecting factual information from the internet on a specific topic or market

There are plenty of sites that hold data for people to view and use in their research. For example, Google Scholar, ResearchGate, or Wiley Online Library all provide previous research on a particular topic. Researchers can create free accounts and use the search facilities to look into a topic by keyword, before following the instructions to download or export results for further analysis.

This can be useful for exploring a new market that your organization is considering entering. For instance, U.S. Census Bureau demographic data for that area shows you the demographics of your target audience, which can help you create compelling marketing campaigns.
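Once demographic data has been exported, a short calculation tells you how much of the population falls into your target audience. The sketch assumes a simple CSV with `age_group` and `population` columns. That layout and the figures are hypothetical. Real Census Bureau tables are structured differently and need their own column mapping.

```python
import csv
import io

# Hypothetical extract; real Census Bureau tables use different
# column names, geographies, and many more rows.
CSV_TEXT = """age_group,population
18-24,12000
25-34,18000
35-44,15000
45-64,30000
65+,25000
"""


def target_share(csv_text, target_groups):
    """Fraction of the population that falls in the target age groups."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    total = sum(int(row["population"]) for row in rows)
    in_target = sum(int(row["population"]) for row in rows if row["age_group"] in target_groups)
    return in_target / total


share = target_share(CSV_TEXT, {"25-34", "35-44"})
print(f"{share:.0%}")  # prints: 33%
```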

2. Finding out the views of your target audience on a particular topic

If you’re interested in seeing the historical views on a particular topic, for example, attitudes to women’s rights in the US, you can turn to secondary sources.

Textbooks, news articles, reviews, and journal entries can all provide qualitative reports and interviews covering how people discussed women’s rights. There may be multimedia elements like video or documented posters of propaganda showing biased language usage.

By gathering this information, synthesizing it, and evaluating the language, who created it and when it was shared, you can create a timeline of how a topic was discussed over time.
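One simple, quantitative way to build such a timeline is to count how often key terms appear in dated documents, grouped by decade. The sketch assumes the documents have already been transcribed into text. The excerpts and terms are hypothetical. Plain substring counting is crude because it ignores context, tone, and who created each document. It complements a qualitative reading rather than replacing it.

```python
from collections import Counter, defaultdict

# Hypothetical excerpts, already transcribed: (year, source type, text).
documents = [
    (1968, "newspaper", "Debate over equal pay continues"),
    (1972, "journal", "Equal pay and the Equal Rights Amendment"),
    (1995, "newspaper", "Workplace equality and equal pay"),
]


def term_timeline(docs, terms, bucket_years=10):
    """Count mentions of each term per period (decades by default)."""
    timeline = defaultdict(Counter)
    for year, _source_type, text in docs:
        period = year - year % bucket_years  # e.g., 1968 -> 1960
        lowered = text.lower()
        for term in terms:
            timeline[period][term] += lowered.count(term)
    return dict(sorted(timeline.items()))


timeline = term_timeline(documents, ["equal pay", "equal rights"])
for period, counts in timeline.items():
    print(period, dict(counts))
```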

3. When you want to know the latest thinking on a topic

Educational institutions, such as schools and colleges, create a lot of research-based reports on younger audiences or their academic specialisms. Students’ dissertations can also be submitted to research journals, which makes these journals a useful place to find the latest insights from a new generation of academics.

You can request information, and sometimes academic institutions may want to collaborate and conduct research on your behalf. This can provide key primary data in areas that you want to research, as well as secondary data sources for your research.

Advantages of secondary research

There are several benefits of using secondary research, which we’ve outlined below:

- Easily and readily available data – There is an abundance of readily accessible data sources that have been pre-collected for use, in person at local libraries and online using the internet. This data is usually sorted by filters or can be exported into spreadsheet format, meaning that little technical expertise is needed to access and use the data.

- Faster research speeds – Since the data is already published and in the public arena, you don’t need to collect this information through primary research. This can make the research easier to do and faster, as you can get started with the data quickly.

- Low financial and time costs – Most secondary data sources can be accessed for free or at a small cost to the researcher, so the overall research costs are kept low. In addition, by saving on preliminary research, the time costs for the researcher are kept down as well.

- Secondary data can drive additional research actions – The insights gained can support future research activities (like conducting a follow-up survey or specifying future detailed research topics) or help add value to these activities.

- Secondary data can provide useful pre-research insights – Secondary source data can provide pre-research insights and information on effects that can help determine whether research should be conducted. It can also highlight knowledge gaps so that subsequent research can address them.

- Ability to scale up results – Secondary sources can include large datasets (like Census data results across several states) so research results can be scaled up quickly using large secondary data sources.
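When scaling up, take care not to combine state-level rates by simply averaging them. A simple average gives each state equal weight regardless of its size. The following minimal Python sketch compares that naive mean with a population-weighted (pooled) rate. The states and figures are hypothetical.

```python
# Hypothetical state-level figures: (population, rate as a fraction).
states = {
    "State A": (1_000_000, 0.10),
    "State B": (4_000_000, 0.20),
}


def pooled_rate(rows):
    """Population-weighted rate across several regions."""
    total_population = sum(pop for pop, _rate in rows.values())
    affected = sum(pop * rate for pop, rate in rows.values())
    return affected / total_population


naive_mean = sum(rate for _pop, rate in states.values()) / len(states)
print(f"naive mean: {naive_mean:.2f}, pooled: {pooled_rate(states):.2f}")
# prints: naive mean: 0.15, pooled: 0.18
```

The pooled figure is the one that describes the combined population, because the larger state contributes proportionally more people.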

Disadvantages of secondary research

The disadvantages of secondary research are worth considering in advance of conducting research:

- Secondary research data can be out of date – Secondary sources may be updated regularly, but if you’re exploring the data between two updates, the data can be out of date. Researchers need to consider whether the available data covers the right dates for insights to be accurate and timely, or whether the data needs to be updated. In fast-moving markets, secondary data can go out of date very quickly.

- Secondary research needs to be verified and interpreted – Where there’s a lot of data from one source, a researcher needs to review and analyze it. The data may need to be verified against other data sets or your hypotheses for accuracy and to ensure you’re using the right data for your research.

- The researcher has had no control over the secondary research – Because the researcher was not involved in collecting the original data, invalid data can affect the results. It’s therefore vital to review the original methodology and controls closely to confirm that the data was collected in a systematic and error-free way.

- Secondary research data is not exclusive – Data sets are commonly available, so there is no exclusivity and many researchers can use the same data. This can be a problem when researchers want exclusive rights over the research results, and it increases the risk of duplicated research in the future.

When do we conduct secondary research?

Now that you know the basics of secondary research, when do researchers normally conduct secondary research?

It’s often used at the beginning of research, when the researcher is trying to understand the current landscape. In addition, if the research area is new to the researcher, it can form crucial background context to help them understand what information exists already. This can plug knowledge gaps, supplement the researcher’s own learning, or add to the research.

Secondary research can also be used in conjunction with primary research. Secondary research can become the formative research that helps pinpoint where further primary research is needed to find out specific information. It can also support or verify the findings from primary research.

You can use secondary research where high levels of control aren’t needed by the researcher, but a lot of knowledge on a topic is required from different angles.

Secondary research should not be used in place of primary research as both are very different and are used for various circumstances.

Questions to ask before conducting secondary research

Before you start your secondary research, ask yourself these questions:

- Is there similar internal data that we have created for a similar area in the past?

If your organization has past research, it’s best to review this work before starting a new project. The older work may provide you with answers, a starting dataset, and context on how your organization approached the research before. However, keep in mind that the work may be out of date. Read through it and look for where it helps your research goals and where more work is needed.

- What am I trying to achieve with this research?

When you have clear goals and understand what you need to achieve, you can look for the right type of secondary or primary research to support those aims. Different secondary research data will provide you with different information. For example, news stories won’t give you as useful a breakdown of your market’s buying patterns as internal or external e-commerce and sales data sources.

- How credible will my research be?

If credibility matters, consider how accurate the research results need to be, and whether you can trade some credibility for speed by using secondary sources to get started. Be careful about which sources you choose. Low-credibility data sites, like political party websites that are highly biased in favor of their own party, would skew your results.

- What is the date of the secondary research?

When you’re conducting research, you want the results to be as useful as possible, so using data that is 10 years old won’t be as accurate as using data that was created a year ago. Since a lot can change in a few years, note the date of your research and look for more recent data sets that give a more current picture. One exception is data collected over a long period, which can be compared with earlier periods to show the rate and direction of change.

- Can the data sources be verified? Does the information you have check out?

If you can’t verify the data by looking at the research methodology, speaking to the original team or cross-checking the facts with other research, it could be hard to be sure that the data is accurate. Think about whether you can use another source, or if it’s worth doing some supplementary primary research to replicate and verify results to help with this issue.
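After you gather the figures, you can partly automate cross-checking them against other research. The Python sketch below flags pairs of sources whose values for the same measure differ by more than a chosen relative tolerance. The source names, values, and the 5% tolerance are hypothetical assumptions. A flagged pair only tells you where to look more closely. It does not tell you which source is right.

```python
import math
from itertools import combinations


def disagreements(values_by_source: dict[str, float], rel_tol: float = 0.05) -> list[tuple[str, str]]:
    """List pairs of sources whose figures differ by more than rel_tol (relative to the larger value)."""
    return [
        (a, b)
        for a, b in combinations(values_by_source, 2)
        if not math.isclose(values_by_source[a], values_by_source[b], rel_tol=rel_tol)
    ]


# Hypothetical: the same market-size figure as reported by three sources.
reported = {"industry_report": 4.1e9, "government_survey": 4.0e9, "vendor_blog": 5.2e9}
print(disagreements(reported))
```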



Secondary Use of Existing Data

Studies involving the secondary use of existing (archival) data have all of the following characteristics:

- The data are collected for non-research purposes (e.g., student records) or for a research study other than the proposed study (e.g., another study’s data set)

- The proposed study plans to use the existing data as opposed to gathering new data (or possibly in conjunction with newly gathered data)

- The data contain information that can be linked to individuals (though not necessarily to the individual’s identity)

- The data are the primary source (versus a secondary source in which the data were analyzed for another publication)

- While data usually exists prior to the protocol’s approval, there are instances in which the data can continue to accumulate; however, the researcher cannot be engaged in gathering the data. For example, students can continue to add content to their student records that can be accessed by the researcher (with appropriate permission) but the researcher is not engaged in contacting the students directly for this information.

For the Board to assess the risks to participants from the use of existing data sources and make recommendations for ethical use of the data, it will need to know the following:

- How did you obtain access to the data? The Board will need to know if the data are publicly available or if there are restrictions on accessing the data. If there are restrictions, the Board will need to know how you obtained permission to access the data.

- What do the data consist of? The Board will need to know if you are using data sets, video tapes, audio tapes, journal entries, transcripts, etc. If you are using data sets, they will need to know what data fields you will use.

- How many records will you access? Will the data be combined with other data sources? How easy is it to deduce the identities of the participants? The Board needs to understand the complete picture of the data and the potential to deduce identity which could compromise confidentiality.

- Can the participants be linked to their data? The Board will need to know in what form you will receive the data. Can the data be de-identified? Are the data linked and stripped of identifiers? Who prepared the data for you? Will you merge multiple data sets?
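A k-anonymity check is one common way to measure “how easy is it to deduce the identities of the participants.” You group the records by the fields an outsider could plausibly know (quasi-identifiers such as ZIP code, birth year, and sex) and then find the smallest group. This general technique is used here only as an illustration. The Board does not prescribe it, and the field names and records below are hypothetical.

```python
from collections import Counter


def _key(record, quasi_identifiers):
    # The combination of quasi-identifier values that an outsider might know.
    return tuple(record[field] for field in quasi_identifiers)


def smallest_group_size(records, quasi_identifiers):
    """Smallest number of records sharing one combination of quasi-identifier values (the 'k')."""
    groups = Counter(_key(r, quasi_identifiers) for r in records)
    return min(groups.values())


def unique_records(records, quasi_identifiers):
    """Records whose quasi-identifier combination appears only once, i.e. the easiest to single out."""
    groups = Counter(_key(r, quasi_identifiers) for r in records)
    return [r for r in records if groups[_key(r, quasi_identifiers)] == 1]


# Hypothetical de-identified extract: no names, but ZIP, birth year, and sex remain.
records = [
    {"zip": "22903", "birth_year": 2001, "sex": "F", "gpa": 3.4},
    {"zip": "22903", "birth_year": 2001, "sex": "F", "gpa": 3.9},
    {"zip": "22903", "birth_year": 2000, "sex": "M", "gpa": 2.8},
    {"zip": "22904", "birth_year": 1999, "sex": "F", "gpa": 3.1},
]
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")

print(smallest_group_size(records, QUASI_IDENTIFIERS))  # 1
print(len(unique_records(records, QUASI_IDENTIFIERS)))  # 2
```

A k of 1 means at least one person is alone in their group. Coarsening a field (for example, reporting birth decade instead of birth year) typically increases k, at the cost of detail.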

For suggestions on how to create a "Secondary Data" iProtocol, see Creating and Submitting a New iProtocol.

Exempt studies are not under the same obligation to obtain consent from participants (though the Board often asks researchers to provide information about the study to participants using a Study Notification). The federal regulations allow the Board to exempt research involving the secondary use of existing data if either of the following is true:

- the identifiable private information is publicly available

- the information is recorded by the investigator in such a manner that the identity of the participant cannot be readily ascertained directly or through identifiers linked to the subject, the investigator does not contact the subjects, and the investigator will not re-identify the subjects.

In addition, data sets that specifically target prisoners cannot be exempted. If a prisoner is only incidentally included in a data set, the data set can still be exempted.

To determine exemption, the Board evaluates whether the existing data source is public or private and whether the data can identify the participants. If the protocol qualifies for exemption, the Board does not require researchers to obtain consent from participants. If the protocol does not qualify for exemption, the Board may consider waiving consent or may require that the researcher obtain consent from participants.
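The two exemption criteria and the prisoner restriction described above combine into a simple rule. The Python sketch below encodes that rule as a rough self-screen. The class and field names are hypothetical, and the sketch paraphrases this page rather than the federal regulations. Only the Board can determine whether a protocol is exempt.

```python
from dataclasses import dataclass


@dataclass
class ExistingDataUse:
    # Hypothetical yes/no answers about a planned secondary-use study.
    publicly_available: bool              # is the identifiable private information publicly available?
    identity_readily_ascertainable: bool  # as the investigator will record the information
    investigator_contacts_subjects: bool
    investigator_will_reidentify: bool
    targets_prisoners: bool               # incidental inclusion of a prisoner is not "targeting"


def may_qualify_for_exemption(use: ExistingDataUse) -> bool:
    """Rough screen mirroring the criteria summarized on this page."""
    if use.targets_prisoners:
        return False
    # Second criterion: all three conditions must hold together.
    recorded_without_identifiers = (
        not use.identity_readily_ascertainable
        and not use.investigator_contacts_subjects
        and not use.investigator_will_reidentify
    )
    # Either criterion on its own is sufficient.
    return use.publicly_available or recorded_without_identifiers


deidentified_extract = ExistingDataUse(False, False, False, False, False)
identifiable_private = ExistingDataUse(False, True, False, False, False)
print(may_qualify_for_exemption(deidentified_extract))  # True
print(may_qualify_for_exemption(identifiable_private))  # False
```

A False result does not necessarily bar the study. As described above, the Board may still consider waiving consent or may require consent.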

The data sets listed below do not require IRB review except in the case where the data sets are merged with other data or if the data archive requires IRB review:

- Inter-University Consortium for Political and Social Research (ICPSR)

- National Center for Health Statistics

- National Center for Education Statistics

- National Election Studies

- U.S. Bureau of the Census

Additional data sets and archives may qualify for inclusion on this list. Investigators who wish to have a specific data set or data archive considered for inclusion on this list should submit the following information to [email protected]:

- In the email subject, add the following: IRB-SBS archival data set review

- The name of the data set or data archive;

- The URL for the data set/archive or other specific information on how to obtain the data set; and

- An abstract that describes the content and potential uses of the data set/archive.

Various government agencies and academic institutions make the data they collect available to the public for research purposes. Any data set that is made available to the public and does not require special permission to access is considered a publicly available data set. Publicly available data sets are exempt.

Private data sets may include (but are not limited to): data collected previously by another researcher for another study, data collected by another agency for evaluative or research purposes, or your own data that you collected for a previous study. Private data sets generally require permission to access the data, and the Board will need to know that you will obtain (or have already obtained) proper permission from the appropriate entity.

Private records are data that were not collected with the intent to conduct research. Instead, they exist to record information about individuals for the individuals’ own sake. For example, student records, medical records, and credit histories are private records maintained by agencies other than the individual but containing personal information about the individual. Some of these records are collected by government agencies and are, by law, accessible to the public, so they fall under the publicly available data sets category. Private records can be governed by privacy laws and regulations, which require special permission to access the records as well as additional safeguards for using the data. Some researchers may have access to private records as part of their professional role. For example, you may be able to access student records as a professor, but you will still need to obtain permission to access those records as a researcher (particularly because student records are also protected by FERPA regulations). These records can still qualify for exemption if the data are received stripped of identifiers.
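Receiving data “stripped of identifiers” can be sketched as dropping direct-identifier fields before the data reach the analysis. This applies whether you prepare an extract yourself or tell a record holder what to remove. The field names below are hypothetical, and which fields count as identifiers is a question for the Board and the record holder. As the discussion of combining data sets on this page notes, removing direct identifiers alone does not guarantee that individuals cannot be re-identified.

```python
# Hypothetical direct-identifier fields for a student-records extract.
DIRECT_IDENTIFIERS = {"name", "student_id", "email", "phone"}


def strip_identifiers(records, identifiers=DIRECT_IDENTIFIERS):
    """Return new records with direct-identifier fields removed; the input is left unchanged."""
    return [{field: value for field, value in r.items() if field not in identifiers} for r in records]


raw = [
    {"name": "A. Student", "student_id": "S123", "email": "a@example.edu", "major": "History", "gpa": 3.4},
    {"name": "B. Student", "student_id": "S456", "email": "b@example.edu", "major": "Biology", "gpa": 3.7},
]
print(strip_identifiers(raw))
# [{'major': 'History', 'gpa': 3.4}, {'major': 'Biology', 'gpa': 3.7}]
```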

Student Records and Classroom Data: Please see Education: Student Records .

Medical Records and HIPAA

The IRB-SBS does not review studies where a medical record is used; these studies are reviewed by the IRB-HSR. If you have any questions regarding which IRB should review your study, check out the HSR/SBS decision algorithm. If this doesn’t answer your question, please contact our office (or the HSR) before completing our protocol form, as each IRB has separate submission procedures.

Combining data sets can provide interesting insights into behavior and provide rich information for statistical models. However, combining data can also increase the ability to identify individuals in de-identified data sets. From the OHRP website:

“A subset of “big data research” uses ongoing and constantly replenished and revised data systems, with analysis updated in real time as new information becomes available. In some instances these may be ongoing “longitudinal” studies; may involve Bayesian designs for data collection and analysis; and can involve “adaptive” study designs that change as new information becomes available and is added to the data being analyzed. Increasingly in the social and behavioral research context, longitudinal data systems link multiple ongoing data streams (e.g., student records, employment, social welfare services, health records, police encounters, arrest records), and these study designs can, over time, create risks of re-identification and misuse that are not present in studies using static data sets.”
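A toy linkage shows the re-identification risk described in that passage. Join a de-identified data set to a public, named list on the fields they share. Any record that matches exactly one named person has effectively been re-identified. This Python sketch uses hypothetical records and field names. It illustrates the risk the IRB is weighing, not a method the IRB uses.

```python
def link_on_shared_fields(deidentified, named, shared_fields):
    """Return (name, record) pairs where a de-identified record matches exactly one named record."""
    # Index the named list by the combination of shared field values.
    index = {}
    for person in named:
        key = tuple(person[f] for f in shared_fields)
        index.setdefault(key, []).append(person)

    reidentified = []
    for record in deidentified:
        candidates = index.get(tuple(record[f] for f in shared_fields), [])
        if len(candidates) == 1:  # a unique match singles the person out
            reidentified.append((candidates[0]["name"], record))
    return reidentified


survey = [  # de-identified: no names
    {"zip": "22903", "birth_year": 1985, "sex": "F", "income_band": "low"},
    {"zip": "22904", "birth_year": 1990, "sex": "F", "income_band": "high"},
]
directory = [  # hypothetical public list with names
    {"name": "Jane Doe", "zip": "22903", "birth_year": 1985, "sex": "F"},
    {"name": "John Roe", "zip": "22903", "birth_year": 1985, "sex": "M"},
    {"name": "Ann Poe", "zip": "22904", "birth_year": 1990, "sex": "F"},
    {"name": "Bea Loe", "zip": "22904", "birth_year": 1990, "sex": "F"},
]
matches = link_on_shared_fields(survey, directory, ("zip", "birth_year", "sex"))
print([name for name, _ in matches])  # ['Jane Doe']
```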

The IRB regulations require that researchers obtain IRB approval or exemption before collecting any data. The Board cannot retroactively approve the collection of data that falls under our definition of research. However, the regulations recognize that data are sometimes collected without the intention to conduct human subjects research, and these data may prove valuable in a later study. For example, information collected in a pilot study to test the feasibility of conducting a full study may be viable data to include in the full study, because a pilot study doesn’t necessarily qualify as “research” according to the IRB regulations. The same could be true for a class project in which data were collected for a brief paper submitted to a professor but later provided necessary information for a full dissertation or thesis project.

This should not be considered a loophole for avoiding IRB review, however. To approve the use of such data, the IRB will review how the data were collected and hold that collection to the same standards required for any collection of data. If the IRB finds that the data were not collected according to our ethical guidelines and regulations, the Board will not allow the data to be used. For example, if you collected sensitive information that can be linked to an individual but the participant did not consent to the collection of this data, the Board may not approve its use because of the manner in which it was collected. To avoid this scenario, we recommend that you contact our office for guidance regarding data collection. Depending on the project, we may advise that you submit a protocol for a pilot study or class project, which will help you avoid any question about the viability of your data. If you don’t need to submit a protocol at this time, we can provide suggestions and recommendations for collecting your data so that it can be approved at a later date if you decide to use it.

- Describe research involving the secondary use of existing data by creating a Data Source in the Data Source section.

- Upload any additional resources that describe the existing data in the Data Source Upload section.

- Upload any files that document permission to access the data in the Permissions section.

- If you have more than one Data Source and the sources are linked, use the Associate Data Sources with Data Sources section to demonstrate and describe this relationship.

- If you have more than one Participant Group and more than one Data Source, use the Associate Data Sources with Participant Groups section to demonstrate the relationship between them.


What Is Secondary Data? A Complete Guide

What is secondary data, and why is it important? Find out in this post.

Within data analytics, there are many ways of categorizing data. A common distinction, for instance, is that between qualitative and quantitative data. In addition, you might also distinguish your data based on factors like sensitivity. For example, is it publicly available or is it highly confidential?

Probably the most fundamental distinction between different types of data is their source. Namely, are they primary, secondary, or third-party data? Each of these vital data sources supports the data analytics process in its own way. In this post, we’ll focus specifically on secondary data. We’ll look at its main characteristics, provide some examples, and highlight the main pros and cons of using secondary data in your analysis.

We’ll cover the following topics:

- What is secondary data?

- What’s the difference between primary, secondary, and third-party data?

- What are some examples of secondary data?

- How to analyse secondary data

- Advantages of secondary data

- Disadvantages of secondary data

- Wrap-up and further reading

Ready to learn all about secondary data? Then let’s go.

1. What is secondary data?

Secondary data (also known as second-party data) refers to any dataset collected by any person other than the one using it.

Secondary data sources are extremely useful. They allow researchers and data analysts to build large, high-quality databases that help solve business problems. By expanding their datasets with secondary data, analysts can enhance the quality and accuracy of their insights. Most secondary data comes from external organizations. However, data collected within an organization and then repurposed can also count as secondary data.

Secondary data has various benefits and drawbacks, which we’ll explore in detail in sections five and six. First, though, it’s essential to contextualize secondary data by understanding its relationship to two other sources of data: primary and third-party data. We’ll look at these next.

2. What’s the difference between primary, secondary, and third-party data?

To best understand secondary data, we need to know how it relates to the other main data sources: primary and third-party data.

What is primary data?

‘Primary data’ (also known as first-party data) are those directly collected or obtained by the organization or individual that intends to use them. Primary data are always collected for a specific purpose. This could be to inform a defined goal or objective or to address a particular business problem.

For example, a real estate organization might want to analyze current housing market trends. This might involve conducting interviews, collecting facts and figures through surveys and focus groups, or capturing data via electronic forms. Focusing only on the data required to complete the task at hand ensures that primary data remain highly relevant. They’re also well-structured and of high quality.

What is secondary data?

As explained, ‘secondary data’ describes those collected for a purpose other than the task at hand. Secondary data can come from within an organization but more commonly originate from an external source. If it helps to make the distinction, secondary data is essentially just another organization’s primary data.

Secondary data sources are so numerous that they’ve started playing an increasingly vital role in research and analytics. They are easier to source than primary data and can be repurposed to solve many different problems. While secondary data may be less relevant for a given task than primary data, they are generally still well-structured and highly reliable.

What is third-party data?

‘Third-party data’ (sometimes referred to as tertiary data) refers to data collected and aggregated from numerous discrete sources by third-party organizations. Because third-party data combine data from numerous sources and aren’t collected with a specific goal in mind, the quality can be lower.

Third-party data also tend to be largely unstructured. This means that they’re often beset by errors, duplicates, and so on, and require more processing to get them into a usable format. Nevertheless, used appropriately, third-party data are still a useful data analytics resource. You can learn more about structured vs unstructured data here.

OK, now that we’ve placed secondary data in context, let’s explore some common sources and types of secondary data.

3. What are some examples of secondary data?

External secondary data

Before we get to examples of secondary data, we first need to understand the types of organizations that generally provide them. Frequent sources of secondary data include:

- Government departments

- Public sector organizations

- Industry associations

- Trade and industry bodies

- Educational institutions

- Private companies

- Market research providers

While all these organizations provide secondary data, government sources are perhaps the most freely accessible. Governments are legally obliged to keep records when registering people, providing services, and so on. This type of secondary data is known as administrative data. It’s especially useful for creating detailed segment profiles, where analysts home in on a particular region, trend, market, or demographic.
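A segment profile of this kind usually comes down to grouping records by a segment field and summarizing a measure within each group. Here is a minimal Python sketch that uses only the standard library. The field names and figures are hypothetical stand-ins for an administrative data extract.

```python
from collections import defaultdict
from statistics import mean


def segment_profile(records, segment_field, value_field):
    """Count and average of value_field for each distinct value of segment_field."""
    groups = defaultdict(list)
    for record in records:
        groups[record[segment_field]].append(record[value_field])
    return {
        segment: {"count": len(values), "mean": mean(values)}
        for segment, values in sorted(groups.items())
    }


# Hypothetical administrative records
households = [
    {"region": "North", "household_income": 52000},
    {"region": "North", "household_income": 61000},
    {"region": "South", "household_income": 47000},
]
profile = segment_profile(households, "region", "household_income")
print(profile)
```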

Types of secondary data vary. Popular examples of secondary data include:

- Tax records and social security data

- Census data (the U.S. Census Bureau is oft-referenced, as well as our favorite, the U.S. Bureau of Labor Statistics)

- Electoral statistics

- Health records

- Books, journals, or other print media

- Social media monitoring, internet searches, and other online data

- Sales figures or other reports from third-party companies

- Libraries and electronic filing systems

- App data, e.g. location data, GPS data, timestamp data, etc.

Internal secondary data

As mentioned, secondary data is not limited to that from a different organization. It can also come from within an organization itself.

Sources of internal secondary data might include:

- Sales reports

- Annual accounts

- Quarterly sales figures

- Customer relationship management systems

- Emails and metadata

- Website cookies

In the right context, we can define practically any type of data as secondary data. The key takeaway is that the term ‘secondary data’ doesn’t refer to any inherent quality of the data themselves, but to how they are used. Any data source (external or internal) used for a task other than that for which it was originally collected can be described as secondary data.

4. How to analyse secondary data

The process of analysing secondary data can be performed either quantitatively or qualitatively, depending on the kind of data the researcher is dealing with. The quantitative method is used on numerical data, which are analyzed mathematically. The qualitative method works with non-numerical data such as words to provide in-depth information about the data.

There are different stages of secondary data analysis, which involve events before, during, and after data collection. These stages include:

- Statement of purpose: Before collecting secondary data, you need a statement of purpose. This means having a clear awareness of the goal of the research work and how this data will help achieve it. This will guide you to collect the right data and then choose the best data source and method of analysis.

- Research design: This is a plan on how the research activities will be carried out. It describes the kind of data to be collected, the sources of data collection, the method of data collection, tools used, and method of analysis. Once the purpose of the research has been identified, the researcher should design a research process that will guide the data analysis process.

- Developing the research questions: Once you’ve identified the research purpose, you should also prepare research questions to help identify secondary data. For example, if a researcher wants to learn why working adults are increasingly interested in the “gig economy” as opposed to full-time work, they may ask, “What are the main factors that influence adults’ decisions to engage in freelance work?” or “Does education level affect how people engage in freelance work?”

- Identifying secondary data: Using the research questions as a guide, researchers then begin to identify relevant data from the available sources. For example, if the research calls for qualitative data, the researcher can filter the sources to keep only qualitative data.

- Evaluating secondary data: Once relevant data have been identified and collated, they are evaluated to ensure they fulfil the criteria of the research topic. The data are then analyzed using either the quantitative or the qualitative method, depending on the type of data.
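The identifying and evaluating stages amount to filtering candidate sources against criteria derived from the research questions. Here is a minimal Python sketch, assuming you have recorded a few attributes for each candidate source. The source names, attributes, and thresholds are hypothetical. In practice they would come from your own statement of purpose and research design.

```python
from dataclasses import dataclass


@dataclass
class CandidateSource:
    name: str
    data_type: str                # "quantitative" or "qualitative"
    region: str
    year_collected: int
    methodology_documented: bool  # can we check how the data were collected?


def shortlist(sources, data_type, region, earliest_year):
    """Keep candidates that fit the research questions and whose collection methods can be checked."""
    return [
        s for s in sources
        if s.data_type == data_type
        and s.region == region
        and s.year_collected >= earliest_year
        and s.methodology_documented
    ]


# Hypothetical candidates for the gig-economy questions above
candidates = [
    CandidateSource("National labor force survey", "quantitative", "US", 2022, True),
    CandidateSource("Freelancer interview archive", "qualitative", "US", 2021, True),
    CandidateSource("Older labor survey", "quantitative", "US", 2009, True),
    CandidateSource("Platform blog statistics", "quantitative", "US", 2023, False),
]
selected = shortlist(candidates, "quantitative", "US", 2018)
print([s.name for s in selected])  # ['National labor force survey']
```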

You can learn more about secondary data analysis in this post.

5. Advantages of secondary data

Secondary data is suitable for any number of analytics activities. The only limitations are a dataset’s format, its structure, and whether or not it relates to the topic or problem at hand.

When analyzing secondary data, the process has some minor differences, mainly in the preparation phase. Otherwise, it follows much the same path as any traditional data analytics project.

More broadly, though, what are the advantages and disadvantages of using secondary data? Let’s take a look.

Advantages of using secondary data

It’s an economic use of time and resources: Because secondary data have already been collected, cleaned, and stored, this saves analysts much of the hard work that comes from collecting these data firsthand. For instance, for qualitative data, the complex tasks of deciding on appropriate research questions or how best to record the answers have already been completed. Secondary data saves data analysts and data scientists from having to start from scratch.

It provides a unique, detailed picture of a population: Certain types of secondary data, especially government administrative data, can provide access to levels of detail that it would otherwise be extremely difficult (or impossible) for organizations to collect on their own. Data from public sources, for instance, can provide organizations and individuals with a far greater level of population detail than they could ever hope to gather in-house. You can also obtain data covering longer periods if you need it, e.g. stock market data, which can provide decades’ worth of information.

Secondary data can build useful relationships: Acquiring secondary data usually involves making connections with organizations and analysts in fields that share some common ground with your own. This opens the door to a cross-pollination of disciplinary knowledge. You never know what nuggets of information or additional data resources you might find by building these relationships.

Secondary data tend to be high-quality: Unlike some data sources, e.g. third-party data, secondary data tends to be in excellent shape. In general, secondary datasets have already been validated and therefore require minimal checking. Often, such as in the case of government data, datasets are also gathered and quality-assured by organizations with much more time and resources available. This further benefits the data quality, while benefiting smaller organizations that don’t have endless resources available.

It’s excellent for both data enrichment and informing primary data collection: Another benefit of secondary data is that they can be used to enhance and expand existing datasets. Secondary data can also inform primary data collection strategies. They can provide analysts or researchers with initial insights into the type of data they might want to collect themselves further down the line.
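Data enrichment often means attaching attributes from a secondary source to your own records through a shared key. The Python sketch below performs a simple left join using dictionaries. The survey and regional fields are hypothetical. In practice, you would also check that the key (here a ZIP code) means the same thing in both data sets and is unique in the secondary data, because a duplicate key here silently keeps the last row.

```python
def enrich(primary, secondary, key):
    """Left-join secondary attributes onto primary records by a shared key.

    Primary records without a match are kept unchanged, and primary values
    are never overwritten by secondary ones.
    """
    lookup = {row[key]: row for row in secondary}
    enriched = []
    for row in primary:
        match = lookup.get(row[key], {})
        extra = {k: v for k, v in match.items() if k not in row}
        enriched.append({**row, **extra})
    return enriched


# Hypothetical primary survey data and a secondary regional data set
survey = [
    {"respondent": 1, "zip": "22903", "satisfaction": 4},
    {"respondent": 2, "zip": "10001", "satisfaction": 2},
]
regional = [{"zip": "22903", "median_income": 55000}]
enriched = enrich(survey, regional, "zip")
print(enriched)
```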

6. Disadvantages of secondary data

They aren’t always free: Sometimes it’s unavoidable, and you may have to pay for access to secondary data. However, while this can be a financial burden, in reality the cost of purchasing a secondary dataset is usually far lower than the cost of planning for and collecting the data firsthand.

The data isn’t always suited to the problem at hand: While secondary data may tick many boxes concerning its relevance to a business problem, this is not always true. For instance, secondary data collection might have been in a geographical location or time period ill-suited to your analysis. Because analysts were not present when the data were initially collected, this may also limit the insights they can extract.

The data may not be in the preferred format: Even when a dataset provides the necessary information, that doesn’t mean it’s appropriately stored. A basic example: numbers might be stored as categorical data rather than numerical data. Another issue is that there may be gaps in the data. Categories that are too vague may limit the information you can glean. For instance, a dataset of people’s hair color that is limited to ‘brown, blonde and other’ will tell you very little about people with auburn, black, white, or gray hair.
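Problems such as numbers stored as text and gaps in the data are usually handled in the preparation phase. Below is a minimal Python sketch that converts text-stored figures to numbers and counts the gaps. The set of missing-value markers is a hypothetical assumption that you would adapt to the dataset’s documentation. The comma handling also assumes commas are thousands separators, not decimal marks.

```python
MISSING_MARKERS = {"", "NA", "N/A", "-"}  # assumed markers; check the dataset's documentation


def to_number(value):
    """Convert a text-stored figure such as '1,204' to a float; return None for gaps."""
    if value is None:
        return None
    text = str(value).strip().replace(",", "")
    if text in MISSING_MARKERS:
        return None
    return float(text)  # raises ValueError on text that is neither a number nor a known gap


raw_sales = ["1,204", "987", "", "N/A", "2,310.5"]
cleaned = [to_number(v) for v in raw_sales]
gaps = sum(v is None for v in cleaned)
print(cleaned, gaps)  # [1204.0, 987.0, None, None, 2310.5] 2
```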

You can’t be sure how the data were collected: A structured, well-ordered secondary dataset may appear to be in good shape. However, it’s not always possible to know what issues might have occurred during data collection that will impact their quality. For instance, poor response rates will provide a limited view. While issues relating to data collection are sometimes made available alongside the datasets (e.g. for government data) this isn’t always the case. You should therefore treat secondary data with a reasonable degree of caution.

Being aware of these disadvantages is the first step towards mitigating them. While you should be aware of the risks associated with using secondary datasets, in general, the benefits far outweigh the drawbacks.

7. Wrap-up and further reading

In this post we’ve explored secondary data in detail. As we’ve seen, it’s not so different from other forms of data. What defines data as secondary data is how it is used rather than an inherent characteristic of the data themselves.

To learn more about data analytics, check out this free, five-day introductory data analytics short course. You can also check out these articles to learn more about the data analytics process:

- What is data cleaning and why is it important?

- What is data visualization? A complete introductory guide

- 10 Great places to find free datasets for your next project


Research Data – Types, Methods and Examples


Research Data

Research data refers to any information or evidence gathered through systematic investigation or experimentation to support or refute a hypothesis or answer a research question.

It includes both primary and secondary data, and can be in various formats such as numerical, textual, audiovisual, or visual. Research data plays a critical role in scientific inquiry and is often subject to rigorous analysis, interpretation, and dissemination to advance knowledge and inform decision-making.

Types of Research Data

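Research data are generally described using four types, which form two pairs: quantitative and qualitative (the nature of the data), and primary and secondary (the source of the data).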

Quantitative Data

This type of data involves the collection and analysis of numerical data. It is often gathered through surveys, experiments, or other types of structured data collection methods. Quantitative data can be analyzed using statistical techniques to identify patterns or relationships in the data.
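The Python sketch below is a minimal illustration of such statistical techniques. For a hypothetical set of scores, it computes descriptive statistics (mean, median, standard deviation) and, as a simple inferential step, a 95% confidence interval for the mean. The interval uses a normal approximation, which assumes a reasonably large random sample. For a sample as small as this toy example, a t-based interval would be somewhat wider and more appropriate.

```python
from statistics import NormalDist, mean, median, stdev


def describe(sample):
    """Descriptive statistics: summarize the main features of a sample."""
    return {"n": len(sample), "mean": mean(sample), "median": median(sample), "stdev": stdev(sample)}


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    n = len(sample)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    half_width = z * stdev(sample) / n ** 0.5       # stdev() is the sample standard deviation
    center = mean(sample)
    return center - half_width, center + half_width


scores = [72, 85, 90, 66, 78, 88, 95, 70, 81, 77]  # hypothetical test scores
stats = describe(scores)
print(stats)
low, high = mean_confidence_interval(scores)
print(f"95% CI for the mean: {low:.1f} to {high:.1f}")
```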

Qualitative Data

This type of data is non-numerical and often involves the collection and analysis of words, images, or sounds. It is often gathered through methods such as interviews, focus groups, or observation. Qualitative data can be analyzed using techniques such as content analysis, thematic analysis, or discourse analysis.
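Counting how often terms appear across responses is one of the simplest starting points for content analysis. The Python sketch below does this with the standard library. The stopword list and responses are hypothetical. A real content or thematic analysis would go further, for example by coding passages against a defined category scheme.

```python
import re
from collections import Counter

# Hypothetical list of common words to ignore.
STOPWORDS = {"the", "a", "and", "to", "of", "i", "it", "is", "my", "for", "was"}


def term_frequencies(texts, stopwords=STOPWORDS):
    """Count non-stopword terms across a collection of free-text responses."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in stopwords)
    return counts


responses = [
    "I like the flexibility of freelance work.",
    "Flexibility and income were my main reasons.",
    "The income is unpredictable.",
]
counts = term_frequencies(responses)
print(counts.most_common(2))
```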

Primary Data

This type of data is collected by the researcher directly from the source. It can include data gathered through surveys, experiments, interviews, or observation. Primary data is often used to answer specific research questions or to test hypotheses.

Secondary Data

This type of data is collected by someone other than the researcher. It can include data from sources such as government reports, academic journals, or industry publications. Secondary data is often used to supplement or support primary data or to provide context for a research project.

Research Data Formats

There are several formats in which research data can be collected and stored. Some common formats include:

- Text: This format includes any type of written data, such as interview transcripts, survey responses, or open-ended questionnaire answers.

- Numeric: This format includes any data that can be expressed as numerical values, such as measurements or counts.

- Audio: This format includes any recorded data in an audio form, such as interviews or focus group discussions.

- Video: This format includes any recorded data in a video form, such as observations of behavior or experimental procedures.

- Images: This format includes any visual data, such as photographs, drawings, or scans of documents.

- Mixed media: This format includes any combination of the above formats, such as a survey response that includes both text and numeric data, or an observation study that includes both video and audio recordings.

- Sensor Data: This format includes data collected from various sensors or devices, such as GPS, accelerometers, or heart rate monitors.

- Social Media Data: This format includes data collected from social media platforms, such as tweets, posts, or comments.

- Geographic Information System (GIS) Data: This format includes data with a spatial component, such as maps or satellite imagery.

- Machine-Readable Data: This format includes data that can be read and processed by machines, such as data in XML or JSON format.

- Metadata: This format includes data that describes other data, such as information about the source, format, or content of a dataset.
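To illustrate what “machine-readable” means in practice, this Python sketch parses the same record from JSON and from XML using the standard library’s json and xml.etree.ElementTree modules. It also keeps a small metadata dictionary that describes the data set. The field names and values are hypothetical.

```python
import json
import xml.etree.ElementTree as ET

# The same hypothetical record in two machine-readable formats.
json_text = '{"region": "North", "year": 2021, "households": 1520}'
xml_text = "<record><region>North</region><year>2021</year><households>1520</households></record>"

from_json = json.loads(json_text)

root = ET.fromstring(xml_text)
# XML element content is text, so numeric fields need explicit conversion.
from_xml = {
    "region": root.findtext("region"),
    "year": int(root.findtext("year")),
    "households": int(root.findtext("households")),
}

# Metadata: data that describes the data set itself (hypothetical values).
metadata = {
    "source": "Example regional survey",
    "formats": ["JSON", "XML"],
    "fields": list(from_json),
}

print(from_json == from_xml)  # True
print(metadata["fields"])  # ['region', 'year', 'households']
```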

Data Collection Methods

Some common research data collection methods include:

- Surveys: Surveys involve asking participants to answer a series of questions about a particular topic. Surveys can be conducted online, over the phone, or in person.

- Interviews: Interviews involve asking participants a series of open-ended questions in order to gather detailed information about their experiences or perspectives. Interviews can be conducted in person, over the phone, or via video conferencing.

- Focus groups: Focus groups involve bringing together a small group of participants to discuss a particular topic or issue in depth. The group is typically led by a moderator who asks questions and encourages discussion among the participants.

- Observations: Observations involve watching and recording behaviors or events as they naturally occur. Observations can be conducted in person or through the use of video or audio recordings.

- Experiments: Experiments involve manipulating one or more variables in order to measure the effect on an outcome of interest. Experiments can be conducted in a laboratory or in the field.

- Case studies: Case studies involve conducting an in-depth analysis of a particular individual, group, or organization. Case studies typically involve gathering data from multiple sources, including interviews, observations, and document analysis.

- Secondary data analysis: Secondary data analysis involves analyzing existing data that was collected for another purpose. Examples of secondary data sources include government records, academic research studies, and market research reports.
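In many experiments, participants are assigned to conditions at random so that the groups differ systematically only in the variable being manipulated. The following is a minimal sketch in Python of simple two-group randomization, one of several possible designs (blocked and stratified randomization are common alternatives). The participant IDs and the seed are purely illustrative:

```python
import random

def randomly_assign(participant_ids, seed=None):
    """Shuffle participants and split them into two groups of (nearly) equal size."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

groups = randomly_assign(range(1, 11), seed=42)
print(groups)
```

Recording the seed alongside the data lets other researchers regenerate the exact assignment, which supports the replicability discussed later in this article.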

Analysis Methods

Some common research data analysis methods include:

- Descriptive statistics: Descriptive statistics involve summarizing and describing the main features of a dataset, such as the mean, median, and standard deviation. Descriptive statistics are often used to provide an initial overview of the data.

- Inferential statistics: Inferential statistics involve using statistical techniques to draw conclusions about a population based on a sample of data. Inferential statistics are often used to test hypotheses and determine the statistical significance of relationships between variables.

- Content analysis: Content analysis involves analyzing the content of text, audio, or video data to identify patterns, themes, or other meaningful features. Content analysis is often used in qualitative research to analyze open-ended survey responses, interviews, or other types of text data.

- Discourse analysis: Discourse analysis involves analyzing the language used in text, audio, or video data to understand how meaning is constructed and communicated. Discourse analysis is often used in qualitative research to analyze interviews, focus group discussions, or other types of text data.

- Grounded theory: Grounded theory involves developing a theory or model inductively from an analysis of qualitative data. Grounded theory is often used in exploratory research to generate new insights and hypotheses.

- Network analysis: Network analysis involves analyzing the relationships between entities, such as individuals or organizations, in a network. A common application is social network analysis, which examines the structure and dynamics of social networks.

- Structural equation modeling: Structural equation modeling involves using statistical techniques to test complex models that include multiple variables and relationships. Structural equation modeling is often used in social science research to test theories about the relationships between variables.
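Descriptive and inferential statistics are often used together: first summarize each group, then test whether an observed difference is likely to be due to chance. The following is a minimal sketch using only Python's standard `statistics` and `math` modules. The two groups of scores are hypothetical, and Welch's t-test is just one of many inferential tests, chosen here because it does not assume equal variances:

```python
import math
import statistics

# Hypothetical scores for two independent groups (illustrative values only).
group_a = [72, 85, 78, 90, 66, 81]
group_b = [68, 74, 70, 79, 65, 71]

def describe(values):
    """Descriptive statistics: summarize the main features of a sample."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation (n - 1)
    }

def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two independent samples."""
    va, vb = statistics.variance(a), statistics.variance(b)
    na, nb = len(a), len(b)
    se2 = va / na + vb / nb  # squared standard error of the difference in means
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df

print(describe(group_a))
print(describe(group_b))
t, df = welch_t(group_a, group_b)
print(f"t = {t:.3f}, df = {df:.1f}")
```

The sketch stops at the t statistic and degrees of freedom. Turning them into a p-value requires the t distribution, which libraries such as SciPy provide; for example, `scipy.stats.ttest_ind(group_a, group_b, equal_var=False)` performs Welch's test directly.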

Purpose of Research Data

Research data serves several important purposes, including:

- Supporting scientific discoveries: Research data provides the basis for scientific discoveries and innovations. Researchers use data to test hypotheses, develop new theories, and advance scientific knowledge in their field.

- Validating research findings: Research data provides the evidence necessary to validate research findings. By analyzing and interpreting data, researchers can determine the statistical significance of relationships between variables and draw conclusions about the research question.

- Informing policy decisions: Research data can be used to inform policy decisions by providing evidence about the effectiveness of different policies or interventions. Policymakers can use data to make informed decisions about how to allocate resources and address social or economic challenges.

- Promoting transparency and accountability: Research data promotes transparency and accountability by allowing other researchers to verify and replicate research findings. Data sharing also promotes transparency by allowing others to examine the methods used to collect and analyze data.

- Supporting education and training: Research data can be used to support education and training by providing examples of research methods, data analysis techniques, and research findings. Students and researchers can use data to learn new research skills and to develop their own research projects.

Applications of Research Data

Research data has numerous applications across various fields, including social sciences, natural sciences, engineering, and health sciences. The applications of research data can be broadly classified into the following categories:

- Academic research: Research data is widely used in academic research to test hypotheses, develop new theories, and advance scientific knowledge. Researchers use data to explore complex relationships between variables, identify patterns, and make predictions.

- Business and industry: Research data is used in business and industry to make informed decisions about product development, marketing, and customer engagement. Approaches such as market research, customer analytics, and financial analysis are widely used to gain insights and inform strategic decision-making.

- Healthcare: Research data is used in healthcare to improve patient outcomes, develop new treatments, and identify health risks. Researchers use data to analyze health trends, track disease outbreaks, and develop evidence-based treatment protocols.

- Education: Research data is used in education to improve teaching and learning outcomes. Tools such as assessments, surveys, and program evaluations are used to measure student progress, evaluate program effectiveness, and inform policy decisions.

- Government and public policy: Research data is used in government and public policy to inform decision-making and policy development. Data analysis techniques such as demographic analysis, cost-benefit analysis, and impact evaluation are widely used to evaluate policy effectiveness, identify social or economic challenges, and develop evidence-based policy solutions.

- Environmental management: Research data is used in environmental management to monitor environmental conditions, track changes, and identify emerging threats. Techniques such as remote sensing, spatial analysis, and modeling are used to map environmental features, monitor ecosystem health, and inform policy decisions.

Advantages of Research Data

Research data has numerous advantages, including:

- Empirical evidence: Research data provides empirical evidence that can be used to support or refute theories, test hypotheses, and inform decision-making. This evidence-based approach helps to ensure that decisions are based on objective, measurable data rather than subjective opinions or assumptions.

- Accuracy and reliability: Research data is typically collected using rigorous scientific methods and protocols, which helps to ensure its accuracy and reliability. Data can be validated and verified using statistical methods, which further enhances its credibility.

- Replicability: When research data is shared, other researchers can reproduce the analysis and validate the findings, which promotes transparency and accountability in research. By making data available for others to analyze and interpret, researchers help confirm that their findings are robust and reliable.

- Insights and discoveries: Research data can provide insights into complex relationships between variables, identify patterns and trends, and reveal new discoveries. These insights can lead to the development of new theories, treatments, and interventions that can improve outcomes in various fields.

- Informed decision-making: Research data can inform decision-making in a range of fields, including healthcare, business, education, and public policy. Data analysis techniques can be used to identify trends, evaluate the effectiveness of interventions, and inform policy decisions.

- Efficiency and cost-effectiveness: Research data can help to improve efficiency and cost-effectiveness by identifying areas where resources can be directed most effectively. By using data to identify the most promising approaches or interventions, researchers can optimize the use of resources and improve outcomes.

Limitations of Research Data

Research data has several limitations that researchers should be aware of, including:

- Bias and subjectivity: Research data can be influenced by biases and subjectivity, which can affect the accuracy and reliability of the data. Researchers must take steps to minimize bias and subjectivity in data collection and analysis.

- Incomplete data: Research data can be incomplete or missing, which can affect the validity of the findings. Researchers must check for gaps and confirm that the data are representative before relying on their findings.

- Limited scope: Research data may be limited in scope, which can limit the generalizability of the findings. Researchers must carefully consider the scope of their research and avoid generalizing findings beyond the population the data represent.

- Data quality: Research data can be affected by issues such as measurement error, data entry errors, and missing data, which can affect the quality of the data. Researchers must ensure that data is collected and analyzed using rigorous methods to minimize these issues.

- Ethical concerns: Research data can raise ethical concerns, particularly when it involves human subjects. Researchers must ensure that their research complies with ethical standards and protects the rights and privacy of human subjects.

- Data security: Research data must be protected to prevent unauthorized access or use. Researchers must ensure that data is stored and transmitted securely to protect the confidentiality and integrity of the data.
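Several of these limitations, especially incomplete data and data entry errors, can be screened for before analysis begins. The following is a minimal sketch in Python. The survey records are hypothetical (`None` marks a missing answer), and the valid ranges are assumptions that would normally come from the study's codebook:

```python
# Hypothetical survey records; None marks a missing answer.
records = [
    {"id": 1, "age": 34, "satisfaction": 4},
    {"id": 2, "age": None, "satisfaction": 5},
    {"id": 3, "age": 27, "satisfaction": 9},  # 9 is outside the 1-5 scale
    {"id": 4, "age": 45, "satisfaction": None},
]

# Assumed valid ranges (inclusive); in practice these come from the codebook.
valid_ranges = {"age": (18, 99), "satisfaction": (1, 5)}

def audit(records, valid_ranges):
    """Return, for each field, the IDs of records with missing or out-of-range values."""
    report = {}
    for field, (low, high) in valid_ranges.items():
        missing = [r["id"] for r in records if r.get(field) is None]
        out_of_range = [
            r["id"] for r in records
            if r.get(field) is not None and not low <= r[field] <= high
        ]
        report[field] = {"missing": missing, "out_of_range": out_of_range}
    return report

for field, issues in audit(records, valid_ranges).items():
    print(field, issues)
```

A check like this only flags problems; deciding whether to correct, exclude, or impute the flagged values is a methodological choice that should be documented alongside the findings.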

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Data Module #3 - Finding & Collecting Data for Your Research


Using existing data in your research.

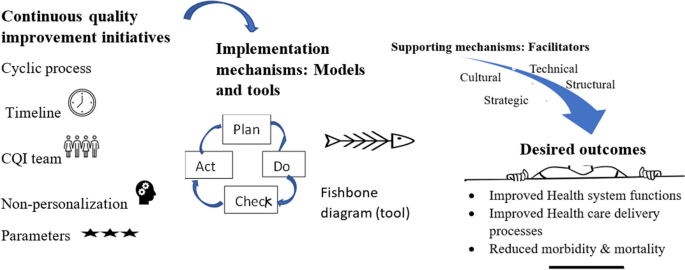

When choosing existing data, it is vital that you understand how the data were collected. For example, if you use polling data, you may want to know the collection method, the sample size, and the demographics of the people surveyed. Definitions of terms sometimes change over time, which can make comparisons difficult. For example, the United States federal government's definition of unemployment has changed more than once during the period it has collected that data. To learn about your data, look for the metadata and documentation that accompany it. You can also look at other studies that have used the data to find possible critiques and limitations.
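One way to act on documentation about changing definitions is to record which definition applies to each observation and refuse comparisons that cross a change. The following is a minimal sketch in Python. The years, values, and the definition labels `def-A` and `def-B` are hypothetical and do not represent actual unemployment figures or official definitions:

```python
from dataclasses import dataclass

@dataclass
class Observation:
    year: int
    value: float
    definition: str  # which version of the term's definition was in force

# Hypothetical series; all values and labels are illustrative only.
series = [
    Observation(2000, 4.0, "def-A"),
    Observation(2001, 4.7, "def-A"),
    Observation(2002, 5.8, "def-B"),
    Observation(2003, 6.0, "def-B"),
]

def change(series, start, end):
    """Return the change between two years, refusing to compare across definitions."""
    by_year = {obs.year: obs for obs in series}
    a, b = by_year[start], by_year[end]
    if a.definition != b.definition:
        raise ValueError(
            f"{start} uses {a.definition} but {end} uses {b.definition}; "
            "values are not directly comparable"
        )
    return b.value - a.value

print(round(change(series, 2002, 2003), 2))  # same definition: comparison allowed
try:
    change(series, 2001, 2002)  # crosses a definition change
except ValueError as err:
    print(err)
```

The definition labels themselves would come from the dataset's metadata or documentation; if no such information exists, that gap is itself worth noting as a limitation.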

Exploring Potential Data Sources

Where can you find existing data to use in your research? Is the best answer "Just use Google"? Sometimes! Google is a great resource, and many websites make data available. These sites often include their own search tools that let you focus your search. Think about who has a stake in providing the data you need or who advocates for the topic, and check whether they collect or publish any data that might be helpful to your research.

Look for research publications (books, articles, websites, etc.) on your topic to discover what data sources other researchers have used. Their data may be just what you need. If the data are not easily obtainable, either in the publication or elsewhere, try contacting the researcher directly.

Governments all over the world collect large amounts of data. The United States government, along with many state and local agencies, provides open (free) data. Identify the government agencies that have a stake in tracking or regulating the topic of your research and check what data they make available.

A wide range of international organizations, nonprofit research centers, foundations, trade associations, and advocacy groups collect data and make it available. Check whether an organization focuses on your research topic.

Data repositories are curated spaces for storing research data. Contributors may include individual researchers, organizations, and government agencies. The benefits of using a repository are that the data are findable, reusable, citable, and preserved. There are many general and subject-specific data repositories; look for the ones available in your broad subject area.

Finding Data on the Web