Definitions of Computational Thinking, Algorithmic Thinking & Design Thinking

by Lcom Team | Jun 7, 2022 | Blogs


While each of these methods is different, they all blend critical thinking and creativity, follow iterative processes to formulate effective solutions, and help students embrace ambiguous and open-ended questions. So, without further ado…

Definition of Computational Thinking

Computational thinking is a set of skills and processes that enable students to navigate complex problems. It relies on a four-step process that can be applied to nearly any problem: decomposition, pattern recognition, abstraction and algorithmic thinking.

The computational thinking process starts with data as the input and seeks to derive meaning and answers from it. The output is not only an answer but also a process for arriving at it: computational thinking plots the journey to the solution so that the process can be replicated and others can learn from it and use it.

The computational thinking process includes four key concepts:

- Decomposition: Break the problem down into smaller, more manageable parts.

- Pattern Recognition: Analyze data and identify similarities and connections among its different parts.

- Abstraction: Identify the most relevant information needed to solve the problem and eliminate the extraneous details.

- Algorithmic Thinking: Develop a step-by-step process to solve the problem so that the work is replicable by humans or computers.
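To make the four steps concrete, here is a minimal Python sketch that applies them to a small, made-up question: which quiz topic should a class review? The records, the names and the "lowest average wins" rule are hypothetical assumptions for illustration only; they do not come from any lesson plan mentioned here.

```python
# Hypothetical quiz records: (student, topic, score, favorite_color).
RECORDS = [
    ("Ana", "fractions", 45, "blue"),
    ("Ana", "decimals", 90, "blue"),
    ("Ben", "fractions", 50, "green"),
    ("Ben", "decimals", 85, "green"),
    ("Cy", "fractions", 95, "red"),
    ("Cy", "decimals", 88, "red"),
]


def topic_to_review(records):
    """Return the topic with the lowest average score, plus every topic's average."""
    scores_by_topic = {}
    for _student, topic, score, _color in records:
        # Abstraction: only topic and score matter here; names and colors are dropped.
        # Decomposition: each topic becomes its own, smaller sub-problem.
        scores_by_topic.setdefault(topic, []).append(score)
    averages = {topic: sum(s) / len(s) for topic, s in scores_by_topic.items()}
    # Pattern recognition: compare the topics to see which one stands out as weaker.
    weakest = min(averages, key=averages.get)
    return weakest, averages


# Algorithmic thinking: the same function can be rerun on next week's data.
topic, averages = topic_to_review(RECORDS)
print(topic, averages)
```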

Examples of Computational Thinking

Computational thinking is a multi-disciplinary tool that can be broadly applied in both plugged and unplugged ways. These are some examples of computational thinking in a variety of contexts.

1. Computational Thinking for Collaborative Classroom Projects

Guided practice is essential for helping students navigate the different concepts of computational thinking: decomposition, pattern recognition, abstraction and algorithmic thinking.

In these classroom-ready lesson plans, students cultivate understanding of computational thinking with hands-on, collaborative activities that guide them through the problem and deliver a clearly articulated and replicable process – an algorithm 😉 – that groups present to the class.

- Computational Thinking Lesson Plan, Grades K-2

- Computational Thinking Lesson Plan, Grades 3-5

- Computational Thinking Lesson Plan, Grades 6-8

2. Computational Thinking for Data-Driven Instruction

In this example, the New Mexico School for the Arts sought a more defined process for using data to better inform decision-making across the school. To do so, the school developed interim assessments that generate actionable data, but mining that data for relevant information was incredibly cumbersome.

To expedite and improve data analysis, the school designed a coherent process for quickly finding the most important information in the data. This process can now be applied time and time again and has enabled the school to tailor instructional planning to the needs of students.

3. Computational Thinking for Journalism

To measure gender stereotypes in films, Julia Silge, data scientist and author of Text Mining with R, compiled data from 2,000 movie scripts. To decompose the problem, she narrowed her focus to the verbs associated with male and female pronouns in screen directions.

By identifying patterns in sentence structure, Silge was able to extract and abstract the relevant data on a mass scale, which made the research possible. Her analysis resulted in the article She Giggles, He Gallops.
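The pattern-recognition step can be illustrated with a tiny Python sketch that counts the word immediately following "he" or "she" in screen directions and treats that word as the verb. The sample lines are invented, and the "next word is the verb" rule is a simplifying assumption; this is not Silge's actual code or corpus.

```python
import re
from collections import Counter

# Invented screen directions for illustration; the real study used ~2,000 scripts.
DIRECTIONS = [
    "She giggles and looks away.",
    "He gallops across the field.",
    "She smiles at him.",
    "He runs to the door.",
    "She giggles again.",
]

# A whole-word pronoun followed by the next word (assumed to be its verb).
PRONOUN_VERB = re.compile(r"\b(he|she)\s+([a-z]+)", re.IGNORECASE)


def verb_counts(lines):
    """Count which words follow 'he' and 'she' across all lines."""
    counts = {"he": Counter(), "she": Counter()}
    for line in lines:
        for pronoun, verb in PRONOUN_VERB.findall(line):
            counts[pronoun.lower()][verb.lower()] += 1
    return counts


counts = verb_counts(DIRECTIONS)
print(counts["she"].most_common(), counts["he"].most_common())
```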

Definition of Algorithmic Thinking

Algorithmic thinking is not about solving for a specific answer; instead, it works out how to build a replicable process: an algorithm, which is a formula for calculating answers, processing data, or automating tasks.

Algorithmic thinking is a derivative of computer science and coding. It seeks to automate the problem-solving process by creating a series of systematic, logical steps that process a defined set of inputs and produce a defined set of outputs.

Examples of Algorithmic Thinking

Here are three examples that cover algorithms in basic arithmetic, standardized testing and our good ol’ friend, Google.

1. Algorithmic Thinking in Long Division

Without having to dive into technology, there are algorithms we teach students, whether or not we realize it. For example, long division follows the standard division algorithm for dividing multi-digit integers to calculate the quotient.

The division algorithm enables both people and computers to solve division problems with a systematic set of logical steps, as this video shows. Rather than working out each problem from scratch, we can automate solving for quotients because of the algorithm.
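As a rough illustration, the long division algorithm can be written as a short Python sketch for non-negative integers. It brings down one digit at a time and finds each quotient digit by repeated subtraction, mirroring the paper method; the function name `long_division` is our own.

```python
def long_division(dividend: int, divisor: int) -> tuple[int, int]:
    """Schoolbook long division. Returns (quotient, remainder)."""
    if dividend < 0 or divisor <= 0:
        raise ValueError("this sketch assumes dividend >= 0 and divisor > 0")
    quotient_digits = []
    remainder = 0
    for digit in str(dividend):
        # "Bring down" the next digit of the dividend.
        remainder = remainder * 10 + int(digit)
        # How many times does the divisor fit? Count by repeated subtraction.
        q = 0
        while remainder >= divisor:
            remainder -= divisor
            q += 1
        quotient_digits.append(str(q))
    return int("".join(quotient_digits)), remainder


print(long_division(7425, 6))
```

Because every step is explicit, the same procedure works for any pair of inputs, which is exactly what makes it an algorithm rather than a one-off answer.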

2. Algorithmic Thinking in Standardized Testing

A somewhat recent development in standardized testing is the advent of computer adaptive assessments that pick questions based on student ability as determined by correct and incorrect answers given.

If students select the correct answer to a question, the next question is moderately more difficult. But if they answer incorrectly, the assessment offers a moderately easier question. This occurs through an iterative algorithm that starts with a pool of questions. After each answer, the pool is adjusted accordingly, and the cycle repeats until the test ends.

The purpose of this algorithmic approach to assessment is to measure student performance in a more targeted way. This iterative approach isn’t limited to standardized tests; personalized and adaptive learning programs use similar algorithms, too.
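Real computer adaptive tests typically choose items with statistical models such as item response theory. The Python sketch below uses a much simpler, hypothetical "staircase" rule just to show the iterative loop described above: pick a question near the current level, record the answer, adjust the level, repeat. The `Question` type, the 1 to 5 difficulty scale and the simulated responses are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Question:
    qid: str
    difficulty: int  # 1 (easiest) to 5 (hardest)


def run_adaptive_test(pool, responses, start_level=3, lowest=1, highest=5):
    """Staircase rule: one level harder after a correct answer, one easier after a miss.

    `responses` simulates the student with one True/False per question asked.
    Returns the (qid, difficulty) pairs asked and the final difficulty level.
    """
    level = start_level
    remaining = list(pool)
    asked = []
    for correct in responses:
        if not remaining:
            break  # the question pool is exhausted
        # Pick the unused question closest to the current level (ties broken by qid).
        question = min(remaining, key=lambda q: (abs(q.difficulty - level), q.qid))
        remaining.remove(question)
        asked.append((question.qid, question.difficulty))
        # Adjust the target difficulty based on the answer.
        level = min(highest, level + 1) if correct else max(lowest, level - 1)
    return asked, level


# Hypothetical pool: two questions at each difficulty level.
POOL = [Question(f"L{d}{tag}", d) for d in range(1, 6) for tag in "ab"]
asked, final_level = run_adaptive_test(POOL, [True, True, False, True])
print(asked, final_level)
```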

3. Algorithmic Thinking in Google

Have you ever wondered why certain results appear on the first page of a Google search rather than on the second, third, fourth, or tenth?

You guessed it! Google’s search results are determined (in part) by the PageRank algorithm, which estimates a webpage’s importance based on the number of sites linking to it. In other words, the algorithm treats each hyperlink to a webpage as an upvote.

So, if we google ‘what is an algorithm,’ we can bet that the top pages are among the most linked-to pages on the topic ‘what is an algorithm.’ It’s more complicated than this, of course. PageRank also weighs each link by the score of the page it comes from, so a link from a highly ranked page counts for more. And there is still much more; if you are interested, this article goes into the intricacies of the PageRank algorithm.
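Here is a minimal Python sketch of the textbook version of PageRank, computed by power iteration with the commonly cited damping factor of 0.85. It is a simplified illustration of the idea that links act as weighted upvotes, not Google's production ranking, which combines many other signals. The four-page link graph is made up.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to (all pages must be keys)."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal scores
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump").
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page splits its current score among the pages it links to,
                # so links from high-scoring pages are worth more.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical web of four pages: "c" receives the most links.
LINKS = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(LINKS)
print(sorted(ranks, key=ranks.get, reverse=True))
```

Note that page "a" ends up ranked above "b" even though each receives a single link, because "a" is linked from the high-scoring page "c".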

What can we take away from this? There are over 1.5 billion websites with billions more pages to count, but thanks to algorithmic thinking we can type just about anything into Google and expect to be delivered a curated list of resources in under a second. This right here is the power of algorithmic thinking.

Definition of Design Thinking

Design thinking is a problem-solving method that helps solve problems that are vague, open-ended and don’t have a defined output. Design thinking starts with asking, “Why is this a problem?” It uses empathy, definition, ideation, prototypes, testing and improvements to design a unique output.

Design thinking is a user-centered approach to problem solving. The process ends with a deliverable of sorts, whether technological or constructed with tape and paper. Rather than being a replicable approach like computational thinking or algorithmic thinking, design thinking is conceptual and its outputs are unique.

The design thinking process contains the following steps: empathize, define, ideate, prototype and test (plus improve).

- Empathize: Research the needs of the user to understand why they have the problem and identify their pain points.

- (re)Define: Specify and articulate the problem based on feedback from the empathize phase.

- Ideate: Strategize different ways to solve the problem that fit the user’s needs.

- Prototype: Build models of sample solutions.

- Test: Try the prototypes, experiment with them and seek feedback.

- Improve: Consider what worked and what did not when testing the prototypes, then return to the ideate phase to develop enhanced prototypes and test again.

Design thinking is a non-linear process, meaning that we may return to earlier steps and repeat them where needed. Design thinking is deliverable-focused, making sure that what we create best serves and represents the end user’s needs.

Examples of Design Thinking

Design thinking is widely applied. Here are a few examples of innovative and disruptive ways teachers, schools and organizations are using design thinking.

1. Design Thinking Student Projects

In this article, Kristen Magyar, fifth-grade teacher and STREAM enthusiast, shares how she was inspired to create a toy invention unit based on the popular show Toy Box. What makes this project so excellent is that Magyar tailored it to the students’ interests, knowing that learning is far more likely to resonate when instruction is relevant to their personal experiences and interests.

The Toy Box unit was project-based and centered on the design thinking process. Students invented entirely new toys and pitched them to a panel of judges. Learn more about this collaborative project here!

2. Design Thinking for School Improvement

This interview features Sam Seidel, Director of K12 Strategy + Research at the Stanford D.School. He is passionate about using design thinking to reimagine education, focusing in part on school initiatives like project-based learning and state programs like standardized testing.

Seidel’s message is that as schools seek to innovate their processes and programs, they need to bring teachers into the conversation. Initiatives will not be as effective without buy-in from teachers. He encourages school and district leaders to empathize with the problems teachers may have, develop solutions that match their needs and their students’ needs, and embrace an iterative process for honing the efficacy of those solutions.

3. Design Thinking for Business Growth

Now we get to talk about my second-favorite topic (education being the first): food. As one of many food delivery applications, UberEats uses design thinking to improve on a city-by-city basis. UberEats affirms that their work must be relevant to their users, and as a multinational company, that means they must tailor their program to each city in which they operate.

To do so, UberEats immerses their employees in different cities by exploring and eating their way through the various cuisines (Um… can I sign up for this?), talking with restaurants, and meeting with platform users.

UberEats then translates the findings into prototyped solutions. They iterate quickly and are not afraid of making improvements on the fly to uphold their belief that a user-centered product will grow its market and outperform its competition.


Learning.com Team

Staff Writers

Founded in 1999, Learning.com provides educators with solutions to prepare their students with critical digital skills. Our web-based curriculum for grades K-12 engages students as they learn keyboarding, online safety, applied productivity tools, computational thinking, coding and more.


Computational Thinking is Critical Thinking—and Belongs in Every Subject

Identifying patterns and groupings is a useful way of thinking not just for computer scientists but for students in all fields.

Computational thinking, a problem-solving process often used by computer scientists, is not that different from critical thinking and can be used in any discipline, writes Stephen Noonoo in “Computational Thinking Is Critical Thinking. And It Works in Any Subject,” for EdSurge.

Elements of computational thinking, like pattern recognition, are easily transferred to unexpected areas of study like social studies or English, says Tom Hammond, a former teacher who is now an education professor at Lehigh University. Hammond says that students like the computational thinking approach because it’s engaging: “Ask yourself, would you rather get to play with a data set or would you rather listen to the teacher tell you about the data set?”

For example, in history classes students make use of data-rich, often open-source geographic information systems, or GIS, to plot election results from the colonial era to reimagine the way politics unfolded in the 1700s. These kinds of data visualization exercises offer a way for students to actively manipulate real-world information for deeper engagement and understanding.

There are three steps to bring computational thinking into your classroom, regardless of your subject area. First, consider the dataset. Hammond offers an example of incorporating computational thinking into a social studies class: a student is asked to give five state names, which Hammond writes on the board. Then a different student lists five more states.

Once all the information is on the table, students execute the second step: identifying patterns. “Typically, this involves shifting to greater levels of abstraction—or conversely, getting more granular,” Noonoo writes. For students looking for commonalities or trends, this kind of critical thinking “cues them into the subtleties.” In the states example, students try to identify why Hammond grouped the states in the way he did. Is it by geography? Is it by what date they became part of the United States? Slowly, students begin to identify patterns—something the brain is already hardwired to do, according to Hammond.

In the final stage, decomposition, students break down information into digestible parts and then decide, “What’s a trend versus what’s an outlier to the trend? Where do things correlate, and where can you find causal inference?” Finally, students establish a rule from the data, a process that requires them to make fine distinctions about how complex datasets can be reliably interpreted, Hammond says.
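Hammond's states activity can be mimicked in a short Python sketch. One hypothesis students might test is that the states were grouped by when they joined the Union, so the sketch groups states by the century of their admission (or ratification of the Constitution, for the original states). The chosen states and this particular rule are our own assumptions; grouping by geography would be an equally valid hypothesis to test.

```python
# Year each state ratified the Constitution or was admitted to the Union.
ADMISSION_YEAR = {
    "Delaware": 1787, "Pennsylvania": 1787, "New Jersey": 1787,
    "Georgia": 1788, "New York": 1788, "Virginia": 1788,
    "Ohio": 1803, "Florida": 1845, "Texas": 1845, "California": 1850,
    "Arizona": 1912, "Alaska": 1959, "Hawaii": 1959,
}


def century(year):
    """Ordinal century of a year, e.g. 1787 -> 18, 1803 -> 19."""
    return (year - 1) // 100 + 1


def group_by_century(states):
    """Hypothesis: 'the states were grouped by the century they joined'."""
    groups = {}
    for state in states:
        groups.setdefault(century(ADMISSION_YEAR[state]), []).append(state)
    # Sort for a stable, readable result.
    return {c: sorted(names) for c, names in sorted(groups.items())}


print(group_by_century(["Ohio", "Delaware", "Hawaii", "Virginia", "Texas"]))
```

If the sketch's groups match the teacher's board, the hypothesis survives; if a state lands in the "wrong" group, students have found either an outlier or evidence for a different rule.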

“It definitely took some practice to help them understand the difference between just finding a relationship and then a cause-and-effect relationship,” says Shannon Salter, a social studies teacher in Allentown, Pennsylvania, who collaborates with Hammond.

An entire curriculum can be dedicated to incorporating computational thinking, but that kind of “major overhaul” isn’t required, Hammond says. “It can be inserted on a lesson-by-lesson basis and only where it makes sense.”

Computational thinking is not that far afield from critical thinking. The processes mirror each other: “look at the provided information, narrow it down to the most valuable data, find patterns and identify themes,” Noonoo writes. Students become more agile thinkers when they exercise these transferable skills in subjects not often associated with computer science, like history or literature.


Lesson 1: What is computational thinking?

Being able to think is one of the hallmarks of being human, especially metacognition, which involves thinking about your own thoughts. We often overlook the complexity of thinking because it comes so naturally to us. It seems easy because we do it every day with little thought about how the process works. Its complexity only becomes apparent when something or someone interrupts our thought process, e.g. when you try to explain a specific idea to someone in one or two sentences, or try to follow a lecture on a topic you know nothing about. These tasks are difficult because in daily life we strip away whatever we can safely assume, to make communication easier and more effective. Stripping away this “unnecessary” information becomes a problem when we actually need it. This is most obvious in computer programming: a computer cannot assume anything, so every instruction has to be spelled out explicitly. You therefore have to adjust your thinking slightly to work through problems the way a computer program would. This isn’t as daunting as it sounds, because it is quite straightforward, and it can also improve the way you solve problems outside of programming.

The main purpose of computational thinking is to identify problems and solve them. This seems simple, but problems can be quite complex and made up of different parts, each of which forms its own separate problem. This complexity can make problems seem overwhelming, but by following a few simple steps you can break them down into manageable pieces. This approach is effective because it works not only for computational problems but also for most problems you will face in life. There are four key steps in this process:

Decomposition

Pattern recognition

Abstraction

Algorithmic thinking

Using these four components, you will be able to solve problems by “dividing and conquering” them. There are also additional factors to consider that are not directly related to the core way of solving the problem: constraints. Constraints may be unforeseeable, imposed on your project from outside, or simply factors you didn’t consider when you started. In this course, you will learn to use these four components, as well as how to deal with constraints and what you can do to mitigate their effects.

Another important point involves three words: what, why and how. It is always important to understand what you are doing, because if you don’t, you cannot reorient yourself when you run into problems in your project. In the same way, you need to understand why you are doing something. Keeping a goal in mind while you break a problem down into smaller pieces keeps you focused on the big picture even when you are working on the smaller issues, so you don’t lose sight of your final goal. Lastly, a clear understanding of how you are going to do something brings realism to a project and reveals any unrealistic expectations right at the beginning. Keep these three questions in mind at every step of the computational thinking process. They are supplementary: they help keep you on track, but you still need a plan and action to achieve the goal.

This course will teach you how to use these concepts effectively to become a self-sufficient learner and problem-solver, not only in the computational world but also in the real world, where problems are much less logical and solutions are suboptimal at best. The principles behind real-world and computational problems are essentially the same, so these lessons apply to most, if not all, domains of study and life. The main point to keep in mind is that the purpose of each exercise isn’t necessarily to achieve its specific goal, but rather to go through the process so that you learn the formula for achieving any goal.

The four components of computational thinking

1. Decomposition

In simple terms, decomposition is the process of breaking a large problem down into smaller problems. This helps in the bigger picture for a few reasons. It gives you insight into the practicalities of solving the problem: because each smaller task has a clearer goal, you can see more easily what needs to be done, which helps you develop actionable steps and get started. Imagine for a moment that you have just moved into a new house that is still empty, and you want to make a cup of coffee after a day of moving. This seems like a simple enough problem to solve, except that it isn’t. You are actually dealing with a complex problem made up of several problems disguised as one, which is why it seems impossible at first: you cannot make coffee because you don’t have any of the ingredients in the house. So there are two main tasks to solve: buy the ingredients and make the coffee.

These two tasks can be broken down into even simpler tasks. To buy the ingredients, you need to do three things:

Make a list of the ingredients you need.

Decide where you are going to buy them.

Decide how you are going to get there.

Instructor note

This can further be divided into separate tasks for each item that you need to buy.

coffee powder

You can buy all of those items at the supermarket, so you decide to go there.

The fastest way to the supermarket is by bus, so you decide to take the bus.

You also need to make the same trip home after you are done at the supermarket.
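One way to picture this decomposition is as a tree of tasks, where the leaves are the small, directly actionable steps. The Python sketch below encodes the coffee example as nested dictionaries; the representation and the `leaf_tasks` helper are illustrative assumptions, not part of the course material.

```python
# The coffee problem decomposed into sub-tasks (task names follow the example above).
COFFEE_PROBLEM = {
    "make a cup of coffee": {
        "buy ingredients": {
            "make a list of the ingredients": {},
            "decide where to buy them": {},
            "decide how to get there": {},
        },
        "make the coffee": {},
    }
}


def leaf_tasks(tree):
    """Return the smallest, directly actionable tasks (those with no sub-tasks), in order."""
    leaves = []
    for task, subtasks in tree.items():
        if subtasks:
            leaves.extend(leaf_tasks(subtasks))  # keep breaking it down
        else:
            leaves.append(task)
    return leaves


print(leaf_tasks(COFFEE_PROBLEM))
```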

2. Pattern recognition

When you have broken the problem down into smaller tasks, you can look for patterns. The first task (buying the ingredients on the list) is actually made up of several smaller steps, even though it looks like one. Planned one item at a time, it looks like this:

Take the bus to the supermarket

Purchase the milk

Take the bus back home

Repeat the process for all the items

Planned this way, the process isn’t efficient, so we can look for patterns in the tasks. The glaringly obvious pattern is that if we buy all the items at once, we only have to make one trip to the supermarket and one trip back home. This is pattern recognition, which lets you apply previous knowledge to new problems. For example, perhaps you are going to a new supermarket because your regular one is closed for the day. You don’t need to plan everything from scratch, because you can follow your usual pattern and adjust only a few key points. You would have to take a different bus and walk a few extra meters to reach the new supermarket, but buying the ticket, purchasing the items inside the supermarket and returning home are still the same process. You have recognized a pattern that you can reuse for other problems with similar characteristics.
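The difference between the item-by-item plan and the batched plan can be sketched in a few lines of Python. The function names and the `store` parameter are hypothetical; the parameter shows how a recognized pattern can be reused for the new supermarket by changing one detail.

```python
def naive_plan(items, store="the supermarket"):
    """One round trip per item: the unoptimized plan."""
    steps = []
    for item in items:
        steps += [f"take the bus to {store}", f"purchase the {item}", "take the bus back home"]
    return steps


def batched_plan(items, store="the supermarket"):
    """The recognized pattern: make the bus trips once and buy everything in between."""
    return ([f"take the bus to {store}"]
            + [f"purchase the {item}" for item in items]
            + ["take the bus back home"])


items = ["coffee powder", "milk"]
print(len(naive_plan(items)), len(batched_plan(items)))
# Reusing the pattern for a different store only changes one parameter.
print(batched_plan(items, store="the new supermarket"))
```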

3. Abstraction

Abstraction is the process of discarding details that are not relevant to solving the problem. You cannot take everything into account when making a decision, so you filter out unnecessary details and focus on what matters for the problem you are solving. In the example above, you take the bus to get to the supermarket. Do you need to know every stop along the route? No. Do you need to know the model of the bus? No. Do you need to know the bus driver’s name? No. These details could be relevant to someone else whose task involves them. For example, if you are a bus driver and you need to change shifts with a driver named John, then the driver’s name matters. So it’s not that these details are unimportant in general, but rather that they are unimportant to your own task.
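Abstraction can be sketched as filtering one shared set of details down to what a particular task needs. In this Python sketch, all the trip values and both task definitions in `RELEVANT` are made up for illustration.

```python
# Everything one could know about the bus trip (values are invented).
TRIP_DETAILS = {
    "bus_number": "12",
    "departure_time": "9:00",
    "boarding_stop": "Elm Street",
    "exit_stop": "Market Square",
    "intermediate_stops": ["Oak Avenue", "Library", "Park Road"],
    "bus_model": "articulated",
    "driver_name": "John",
}

# What each (hypothetical) task actually needs to know.
RELEVANT = {
    "shopper": {"bus_number", "departure_time", "boarding_stop", "exit_stop"},
    "relief driver": {"bus_number", "departure_time", "driver_name"},
}


def abstract(details, task):
    """Keep only the details relevant to the given task and discard the rest."""
    keep = RELEVANT[task]
    return {key: value for key, value in details.items() if key in keep}


print(abstract(TRIP_DETAILS, "shopper"))
print(abstract(TRIP_DETAILS, "relief driver"))
```

The same data yields different abstractions for different tasks, which matches the point that details are irrelevant to a task rather than unimportant in general.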

4. Algorithmic thinking

When you have decomposed the problem, identified any patterns and filtered out the unnecessary details, you are ready to create a step-by-step guide to solving the actual problems. At this point you need to make detailed plans for each step. You have to specify actions in the right order and with sufficient detail, so you can’t just say “take the bus to the supermarket and come back when you’re done”. You need to specify smaller details such as what time to catch the bus, where to catch it and which bus number to take. Then you need to specify where to get off, which direction to walk towards the supermarket and how long the walk from the bus stop takes. Once you’re in the supermarket, you need to find all the items, collect them in a basket and pay for them. Then you repeat the bus process in reverse order, making sure to catch the bus from the opposite side of the street.
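The level of detail this step calls for can be sketched in Python as a function that turns concrete trip details into an ordered list of instructions. The `BusLeg` type, the stop names, times and bus number are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class BusLeg:
    bus_number: str
    catch_at: str    # time to be at the stop
    board_at: str    # stop where you get on
    get_off_at: str  # stop where you get off


def ride(leg: BusLeg) -> list[str]:
    """Spell out one bus journey in explicit, ordered steps."""
    return [
        f"Be at {leg.board_at} by {leg.catch_at}",
        f"Catch bus number {leg.bus_number}",
        f"Get off at {leg.get_off_at}",
    ]


def shopping_algorithm(out: BusLeg, back: BusLeg, items: list[str], walk_minutes: int) -> list[str]:
    steps = ride(out)
    steps.append(f"Walk {walk_minutes} minutes to the supermarket")
    steps += [f"Find the {item} and put it in the basket" for item in items]
    steps.append("Pay for the items")
    # Return trip: the same process in reverse, from the opposite side of the street.
    steps.append(f"Walk {walk_minutes} minutes back to {back.board_at}")
    steps += ride(back)
    return steps


out = BusLeg("12", "9:00", "Elm Street stop", "Market Square stop")
back = BusLeg("12", "10:15", "Market Square stop (opposite side)", "Elm Street stop")
for number, step in enumerate(shopping_algorithm(out, back, ["coffee powder", "milk"], 5), start=1):
    print(number, step)
```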

The relevance of the four components

The purpose of the four components is to focus your thinking on the details of the problem, remove any assumptions you might be making and show realistically what kind of problem you are dealing with. This may seem a bit strange with the simple coffee example, but what matters isn’t the example itself; it’s the way the problem was broken down and solved. That approach forms a blueprint you can reuse to solve other problems. After a simple example, you can scale up the complexity of the problems until you are able to do this for any problem you face. However, there are other factors to take into account, because the world we live in isn’t static and things often change.

A note on the difference between decomposition and algorithmic thinking

While creating a step-by-step plan can be seen as a form of decomposition, it’s important to note that decomposition is a broader concept that encompasses the identification of major components or subproblems. Algorithmic thinking is a more detailed and specific step that involves designing the precise instructions or actions to solve those subproblems. Both steps are crucial in computational thinking as they contribute to breaking down complex problems and devising effective solutions.

You can think of this using the following analogy: if you were organizing a trip overseas, you would break the problem down into a few smaller parts: travel to the destination, check in to your accommodation, organize a few excursions, finish the trip and check out, and travel back home. This broad overview of the solution is what the decomposition step produces. In the algorithmic thinking step, you take each of those smaller parts and create a plan to solve it. Take the first part, “travel to destination”: decide on the mode of transport (boat, car, train or plane), then decide on the dates and time of departure, and so on. At this stage, you should be breaking each part down into very specific, actionable steps.

Constraints

Things often change along the way, so most of the time you will have to work within some constraints; you hardly ever have ideal conditions for carrying out your plan. For example, if the supermarket doesn’t have any coffee in stock, what is the solution? You could buy tea instead, or buy takeout coffee from the restaurant next door. These aren’t optimal solutions, but they are alternatives given the constraints you may face in the real world. What if you find out that the buses have changed their payment system and you now need to pay with a transit card? The only problem is that you’ve never used a transit card before, so you need to figure out how it works. In this case, the decomposition of your plan is still valid, but you need to adjust the algorithmic thinking portion of the four components. You would need to prioritize getting a transit card and loading it with money before going to the bus stop. This becomes a new task that takes priority over the others, since you cannot complete any of them without first getting the transit card.
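Both kinds of constraint can be sketched in Python: a fallback chain for the out-of-stock problem, and a dependency ordering that puts the new transit-card task first. The sketch uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+); the task names and the order of fallback options are our own assumptions based on the example.

```python
from graphlib import TopologicalSorter


def choose_drink(available):
    """Work around the out-of-stock constraint: take the first acceptable option available."""
    for option in ("coffee", "tea", "takeout coffee from next door"):
        if option in available:
            return option
    return None  # no acceptable option; the plan needs rethinking


# Each task maps to the set of tasks that must be finished before it.
# The new constraint adds "get and load a transit card" in front of the bus trip.
TASKS = {
    "go to the bus stop": {"get and load a transit card"},
    "take the bus to the supermarket": {"go to the bus stop"},
    "buy the ingredients": {"take the bus to the supermarket"},
    "take the bus back home": {"buy the ingredients"},
    "make a drink": {"take the bus back home"},
}

plan = list(TopologicalSorter(TASKS).static_order())
drink = choose_drink({"tea", "milk"})
print(plan)
print(drink)
```

Encoding prerequisites explicitly means a new constraint only adds one entry, and the ordering of every other task adjusts automatically.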

Something to think about

In your own life think back to a time when you had a problem that seemed overwhelming. How did you manage to solve it at the end of the day?

Using the four components of computational thinking described above, could you have created a better plan to solve that problem that seemed so overwhelming before?


The one about algorithmic thinking in computational thinking.


An algorithm is a process or formula for calculating answers, sorting data, and automating tasks; and algorithmic thinking is the process for developing an algorithm.

“Effective algorithms make assumptions, show a bias toward simple solutions, trade off the costs of error against the cost of delay, and take chances.” Brian Christian, Tom Griffiths

With algorithmic thinking, students endeavor to construct a step-by-step process for solving a problem and similar problems so that the work is replicable by humans or computers.

Algorithmic thinking is a derivative of computer science and the process of developing code and programming applications. This approach automates the problem-solving process by creating a series of systematic, logical steps that take in a defined set of inputs and produce a defined set of outputs based on them.

In other words, algorithmic thinking is not about solving for a specific answer; instead, it works out how to build a sequential, complete, and replicable process that has an end point: an algorithm. Designing an algorithm helps students both communicate and interpret clear instructions for a predictable, reliable output. This is the crux of computational thinking.

Examples of Algorithms in Everyday Life

And like computational thinking and its other elements we’ve discussed, algorithms are something we experience regularly in our lives.

If you’re an amateur chef or a frozen-meal aficionado, you follow recipes and directions for preparing food, and that’s an algorithm.

When you’re feeling groovy and bust out in a dance routine – maybe the Cha Cha Slide, the Macarena, or Flossing – you are also following a routine that emulates an algorithm and simultaneously being really cool.

Outlining a process for checking out books in a school library or instructions for cleaning up at the end of the day is developing an algorithm and letting your inner computer scientist shine.

Examples of Algorithms in Curriculum

Beginning to develop students’ algorithmic prowess, however, does not require formal practice with coding or even access to technology. Have students map directions for a peer to navigate a maze, create visual flowcharts for tasks, or develop a coded language.
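The maze activity translates directly into code, because a computer follows directions just as literally as a careful peer would. In this Python sketch, the maze layout, the four direction words and the result messages are all made-up assumptions for illustration.

```python
# "S" is the start, "G" the goal, "#" a wall, "." open floor.
MAZE = [
    "S.#.",
    "#.#.",
    "#...",
    "##.G",
]

MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def follow_directions(maze, directions):
    """Execute the directions literally; stop at the first move into a wall or off the grid."""
    row, col = next((r, c) for r, line in enumerate(maze) for c, ch in enumerate(line) if ch == "S")
    for step, word in enumerate(directions, start=1):
        dr, dc = MOVES[word]
        r, c = row + dr, col + dc
        if not (0 <= r < len(maze) and 0 <= c < len(maze[r])) or maze[r][c] == "#":
            return f"bumped into a wall at step {step}"
        row, col = r, c
    return "reached the goal" if maze[row][col] == "G" else "stopped before the goal"


print(follow_directions(MAZE, ["right", "down", "down", "right", "right", "down"]))
```

Students can test each other's directions the same way: any ambiguity or missing step shows up immediately as a bump into a wall or a stop short of the goal.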

To get started, here are ideas for incorporating algorithmic thinking in different subjects.

English Language Arts: Students map a flow chart that details directions for determining whether to use a colon or dash in a sentence.

Mathematics: In a word problem, students develop a step-by-step process for how they answered a question that can then be applied to similar problems.

Science: Students articulate how to classify elements in the periodic table.

Social Studies: Students describe a sequence of smaller events in history that precipitated a much larger event.

Languages: Students apply new vocabulary and practice speaking skills to direct another student to perform a task, whether it’s ordering coffee at a café or navigating from one point in a classroom to another.

Arts: Students create instructions for drawing a picture that another student then has to use to recreate the image.

Examples of Algorithms in Computer Science

These are obviously more elementary examples; algorithms, especially those used in coding, are often far more intricate and complex. To contextualize algorithms in computer science and programming, below are two examples.

Standardized Testing and Algorithms: Coding enables the adaptive technology often leveraged in classrooms today.

For example, the shift to computer-based standardized tests has led to the advent of adaptive assessments that pick questions based on student ability as determined by correct and incorrect answers given.

If students select the correct answer to a question, the next question is moderately more difficult. If they answer incorrectly, the assessment offers a moderately easier question. This happens through an iterative algorithm that starts with a pool of questions and adjusts the pool after each answer, repeating the cycle until the assessment ends.
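The up-or-down rule can be sketched in a few lines. The passage doesn't say how real assessments choose questions (production systems typically rely on statistical models such as item response theory), so this is only a simplified sketch that assumes five hypothetical difficulty levels, a start at level 3, and one-level steps; `run_adaptive_test` and the question pool are made up for illustration.

```python
def run_adaptive_test(pool, answers, start_level=3, min_level=1, max_level=5):
    """Simulate a simple up/down adaptive test.

    pool:    hypothetical dict mapping a difficulty level to a list of question ids.
    answers: the student's results in order (True = correct, False = incorrect).
    Returns the (question_id, level) pairs that were asked, in order.
    """
    # Copy the pool so asked questions can be removed without changing the caller's data.
    remaining = {level: list(questions) for level, questions in pool.items()}
    level = start_level
    asked = []
    for correct in answers:
        if not remaining.get(level):
            break  # nothing left at this difficulty, so end the test early
        question = remaining[level].pop(0)  # take it out so it is never repeated
        asked.append((question, level))
        # Adjust the difficulty of the next question, staying within the allowed range.
        if correct:
            level = min(level + 1, max_level)
        else:
            level = max(level - 1, min_level)
    return asked


pool = {level: [f"q{level}a", f"q{level}b"] for level in range(1, 6)}
print(run_adaptive_test(pool, [True, True, False, True]))
# [('q3a', 3), ('q4a', 4), ('q5a', 5), ('q4b', 4)]
```

The clamping with `min` and `max` keeps a student who keeps missing questions at the easiest level instead of running off the bottom of the scale.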

The Omnipotent Google and Algorithms: Google’s search results are determined (in part) by the PageRank algorithm, which estimates a webpage’s importance from the pages linking to it: both how many links it receives and how important the linking pages are themselves.

So, if we google ‘what is an algorithm,’ we can bet that the top results are pages that many other well-regarded pages link to for that topic. It’s still more complicated than this, of course; if you are interested, this article goes into the intricacies of the PageRank algorithm.
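A toy version of the idea fits in a short function. This is the classic simplified PageRank formulation computed by power iteration, not Google's production ranking (which combines many other signals). The damping factor 0.85 is the value commonly cited from the original PageRank paper, and the four-page `web` is hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank computed by repeated updates (power iteration).

    links: dict mapping each page to the pages it links to; every linked page
           must also appear as a key.
    Returns a dict of scores that sum to 1.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # every page starts equally important
    for _ in range(iterations):
        # Each page gets a small baseline share, as if a surfer jumped there at random.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page passes its current score, split evenly, to the pages it links to.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over every page.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


web = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
scores = pagerank(web)
print(max(scores, key=scores.get))  # C: linked to by A, B and D
```

Pages A and B each have exactly one incoming link, yet A ends up with nearly twice B's score because its link comes from C, the most linked-to page. That is the "importance of the linking pages" part of the idea.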

There are over 1.5 billion websites with billions more pages to count, but thanks to algorithmic thinking we can type just about anything into Google and expect to be delivered a curated list of resources in under a second. This right here is the power of algorithmic thinking.

“The Google algorithm was a significant development. I've had thank-you emails from people whose lives have been saved by information on a medical website or who have found the love of their life on a dating website.” Tim Berners-Lee

In whatever way it’s approached in the classroom, algorithmic thinking encourages students to communicate clearly and logically. Students learn to persevere throughout its multiple iterations, challenges, and solutions.

To arrive at an algorithm (especially as algorithms advance in complexity), students must apply computational thinking and practice metacognition along the way. In this process, students become more adept critical thinkers, eloquent communicators, and curious problem solvers who ask bold questions and flourish in ambiguity and uncertainty.

What's Next? Check out our articles on decomposition, pattern recognition, and abstraction.


© Copyright 2023 equip by Learning.com


What is Computational Thinking?


Computational thinking is an interrelated set of skills and practices for solving complex problems, a way to learn topics in many disciplines, and a necessity for fully participating in a computational world.

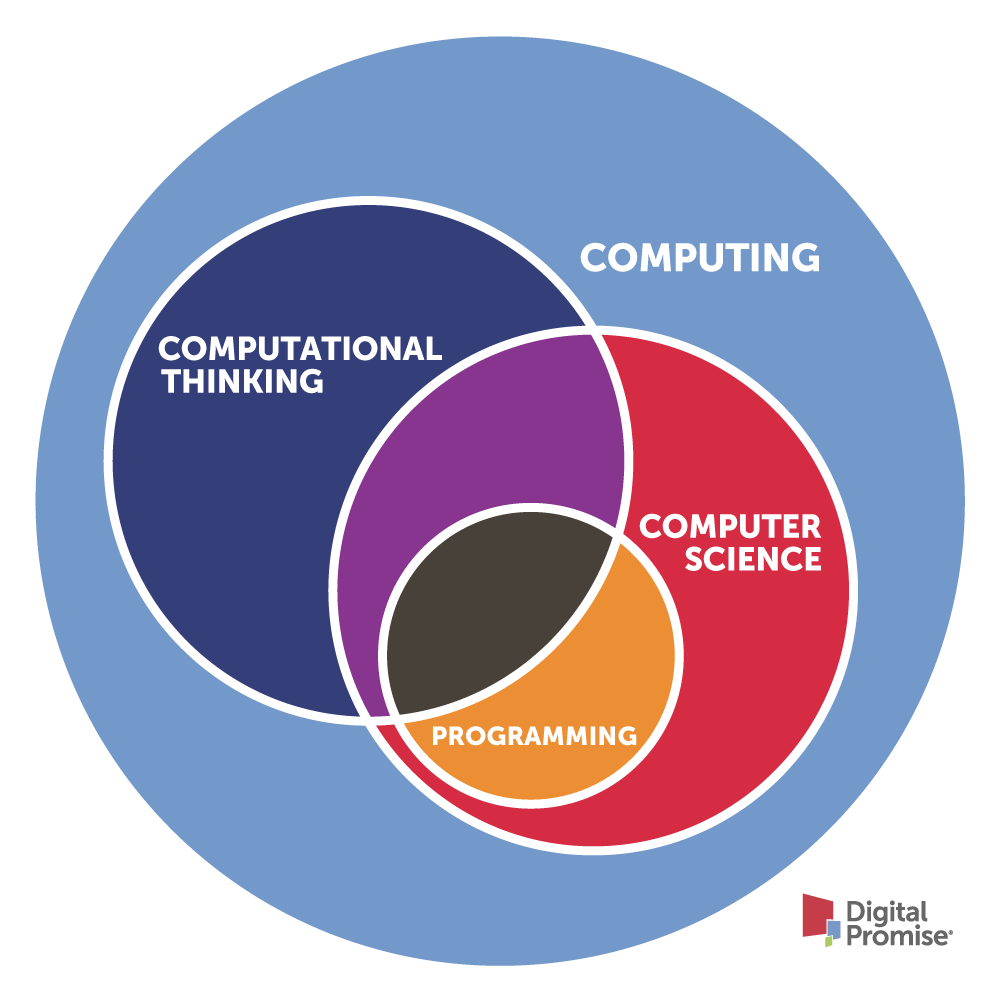

Many different terms are used when talking about computing, computer science, computational thinking, and programming. Computing encompasses the skills and practices in both computer science and computational thinking. While computer science is an individual academic discipline, computational thinking is a problem-solving approach that integrates across activities, and programming is the practice of developing a set of instructions that a computer can understand and execute, as well as debugging, organizing, and applying that code to appropriate problem-solving contexts. The skills and practices requiring computational thinking are broader, leveraging concepts and skills from computer science and applying them to other contexts, such as core academic disciplines (e.g. arts, English language arts, math, science, social studies) and everyday problem solving. For educators integrating computational thinking into their classrooms, we believe computational thinking is best understood as a series of interrelated skills and competencies.

Figure 1. The relationship between computer science (CS), computational thinking (CT), programming and computing.

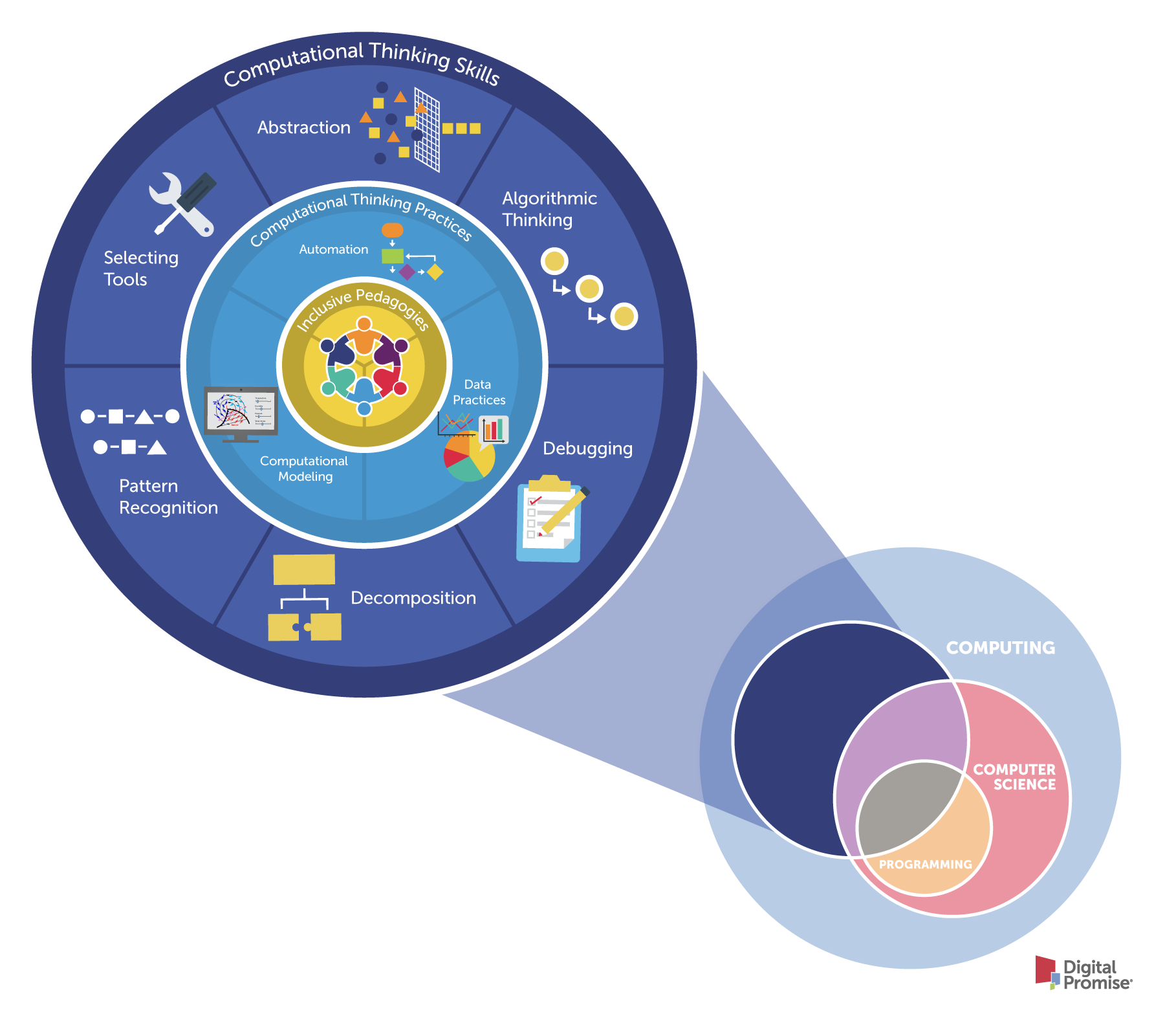

In order to integrate computational thinking into K-12 teaching and learning, educators must define what students need to know and be able to do to be successful computational thinkers. Our recommended framework has three concentric circles.

- Computational thinking skills, in the outermost circle, are the cognitive processes necessary to engage with computational tools to solve problems. These skills are the foundation for engaging in any computational problem solving and should be integrated into early learning opportunities in K-3.

- Computational thinking practices, in the middle circle, combine multiple computational skills to solve an applied problem. Students in the older grades (4-12) may use these practices to develop artifacts such as a computer program, data visualization, or computational model.

- Inclusive pedagogies, in the innermost circle, are strategies for engaging all learners in computing, connecting applications to students’ interests and experiences, and providing opportunities to acknowledge and combat biases and stereotypes within the computing field.

Figure 2. A framework for computational thinking integration.

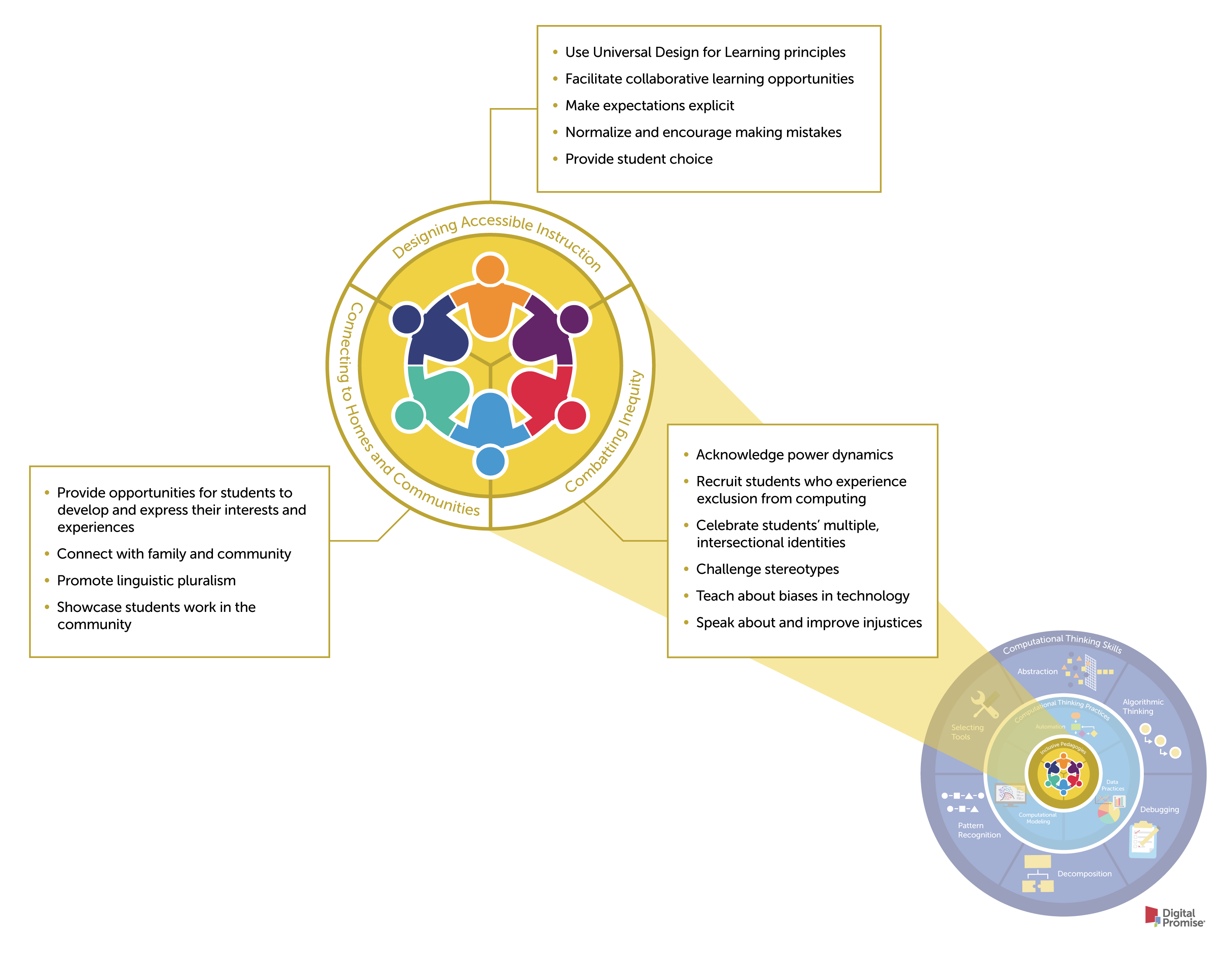

What does inclusive computational thinking look like in a classroom? In the image below, we provide examples of inclusive computing pedagogies in the classroom. The pedagogies are divided into three categories to emphasize different pedagogical approaches to inclusivity. Designing Accessible Instruction refers to strategies teachers should use to engage all learners in computing. Connecting to Students’ Interests, Homes, and Communities refers to drawing on the experiences of students to design learning experiences that are connected with their homes, communities, interests and experiences to highlight the relevance of computing in their lives. Acknowledging and Combating Inequity refers to a teacher supporting students to recognize and take a stand against the oppression of marginalized groups in society broadly and specifically in computing. Together these pedagogical approaches promote a more inclusive computational thinking classroom environment, life-relevant learning, and opportunities to critique and counter inequalities. Educators should attend to each of the three approaches as they plan and teach lessons, especially related to computing.

Figure 3. Examples of inclusive pedagogies for teaching computing in the classroom adapted from Israel et al., 2017; Kapor Center, 2021; Madkins et al., 2020; National Center for Women & Information Technology, 2021b; Paris & Alim, 2017; Ryoo, 2019; CSTeachingTips, 2021

Micro-credentials for computational thinking

A micro-credential is a digital certificate that verifies an individual’s competence in a specific skill or set of skills. To earn a micro-credential, teachers submit evidence of student work from classroom activities, as well as documentation of lesson planning and reflection.

Because the integration of computational thinking is new to most teachers, micro-credentials can be a useful tool for professional learning and/or credentialing pathways. Digital Promise has created micro-credentials for Computational Thinking Practices. These micro-credentials are framed around practices because the degree to which students have built foundational skills cannot be assessed until those skills are manifested through the applied practices.

Visit Digital Promise’s micro-credential platform to find out more and start earning micro-credentials today!


What is Algorithmic Thinking? A Beginner’s Guide


Algorithmic thinking is a way of approaching problems that involves breaking them down into smaller, more manageable parts. It is a process that involves identifying the steps needed to solve a problem and then implementing those steps in a logical and efficient manner. Algorithmic thinking is a key component of computational thinking, the ability to think like a computer scientist and approach problems in a way that is both systematic and creative.

At its core, algorithmic thinking is about problem-solving. It is a way of thinking that involves breaking down complex problems into smaller, more manageable parts and then solving those parts one at a time. This approach can be applied to a wide range of problems, from simple math equations to complex programming challenges. By breaking problems down into smaller parts, algorithmic thinking allows us to approach problems in a way that is both logical and efficient.

Algorithmic thinking is closely related to critical thinking and logic. It involves the ability to analyze problems, identify patterns, and develop solutions that are both effective and efficient. By developing these skills, individuals can become better problem solvers and more effective communicators. Whether you are a student, a professional, or simply someone who wants to improve your problem-solving skills, algorithmic thinking is a valuable tool that can help you achieve your goals.

What is Algorithmic Thinking?

Algorithmic thinking is a problem-solving approach that involves breaking down complex problems into smaller, more manageable steps. It is a process of logically analyzing and organizing procedures to create a set of instructions that can be executed by a computer or human.

Algorithmic thinking involves using logic and critical thinking skills to develop algorithms, which are sets of instructions that can be used to solve problems. These algorithms can be followed by computers or humans to solve problems efficiently and effectively. Like computational thinking, algorithmic thinking involves exploring the problem, decomposing it, recognizing patterns, and testing to develop efficient solutions to complex problems.

Algorithmic thinking is an essential skill in the fields of computer science, programming, and STEM. It is a valuable tool for analyzing and solving complex problems, making it an essential skill for success in today’s technology-driven world. Algorithmic thinking also helps to develop logic and critical thinking skills which are essential for success in any field.

Applications

Algorithmic thinking has numerous applications in various fields, including education, data analysis, machine learning, robotics, and operating systems. In education, it is used to develop data-driven instruction and instructional planning. In data analysis, it is used to develop algorithms for sorting and analyzing data. In machine learning, it is used to develop algorithms for recognizing patterns and making predictions. In robotics, it is used to develop algorithms for controlling robots. In operating systems, it is used to develop algorithms for managing resources and scheduling tasks.
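As one concrete example of an algorithm for sorting data, here is insertion sort. It is chosen purely for illustration because it mirrors how many people sort a hand of playing cards; real data-analysis tools generally rely on faster built-in sorts (Python's own `sorted` is one). The function name is illustrative.

```python
def insertion_sort(values):
    """Sort a list the way many people sort a hand of cards, one item at a time."""
    items = list(values)  # work on a copy so the input is left unchanged
    for i in range(1, len(items)):
        current = items[i]
        j = i - 1
        # Shift larger items one place right to open a gap for `current`.
        while j >= 0 and items[j] > current:
            items[j + 1] = items[j]
            j -= 1
        items[j + 1] = current  # drop `current` into the gap
    return items


print(insertion_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```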

Algorithmic Thinking Unplugged

Here at Teach Your Kids Code, we have designed a variety of algorithmic thinking activities for kids that don’t need a computer.

Check out a few of our activities here:

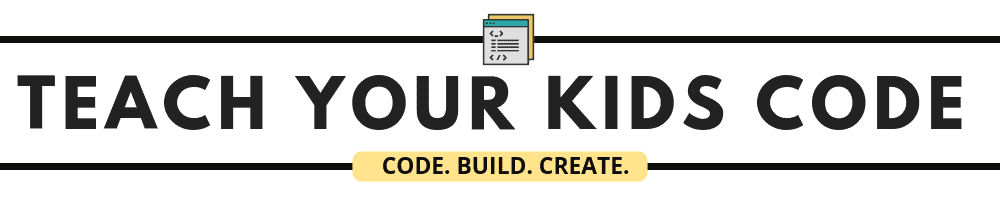

Learn Algorithms With A Deck of Cards

‘Program’ your robot to navigate a maze of cards and obstacles. Students will learn the basics of designing an algorithm with this activity.
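The same idea can be mirrored in code. The activity's actual card rules aren't described here, so this sketch assumes three hypothetical cards ('F' move forward, 'L' turn left, 'R' turn right), a maze drawn as rows of text, and a robot that starts facing east. A program that drives the robot into an obstacle or off the board is treated as a bug to fix.

```python
# Headings as (dx, dy) steps on a grid where y grows downward: north, east, south, west.
HEADINGS = [(0, -1), (1, 0), (0, 1), (-1, 0)]


def run_robot(grid, program):
    """Run a card 'program' on a grid maze.

    grid:    list of strings; 'S' = start, 'G' = goal, '#' = obstacle, '.' = open.
    program: string of hypothetical card commands: 'F' forward, 'L' left, 'R' right.
    Returns the robot's final (x, y), or None if it leaves the grid or hits an obstacle.
    """
    start = None
    for y, row in enumerate(grid):
        for x, cell in enumerate(row):
            if cell == "S":
                start = (x, y)
    if start is None:
        raise ValueError("grid has no start square 'S'")
    x, y = start
    heading = 1  # assume the robot starts facing east
    for card in program:
        if card == "L":
            heading = (heading - 1) % 4
        elif card == "R":
            heading = (heading + 1) % 4
        elif card == "F":
            dx, dy = HEADINGS[heading]
            x, y = x + dx, y + dy
            off_grid = not (0 <= y < len(grid) and 0 <= x < len(grid[y]))
            if off_grid or grid[y][x] == "#":
                return None  # crash: the program has a bug
        else:
            raise ValueError(f"unknown card {card!r}")
    return (x, y)


maze = [
    "S.#",
    ".##",
    "..G",
]
end = run_robot(maze, "RFFLFF")
print(end, maze[end[1]][end[0]] == "G")  # (2, 2) True
```

Trying a wrong program such as `"FF"` returns `None`, which gives students a natural prompt to debug their card sequence.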

Learn Algorithms with Snakes and Ladders

This twist on the classic board game Snakes and Ladders will teach your students all about algorithms.

Algorithmic Thinking Process

Algorithmic thinking is a systematic approach to problem-solving that involves breaking down complex problems into smaller, more manageable parts. The algorithmic thinking process involves several steps that help in solving complex problems.

Exploring the Problem

The first step in the algorithmic thinking process is to explore the problem. This involves understanding the problem, identifying the constraints, and defining the goals. It is important to ask questions and gather information about the problem to gain a deeper understanding of it.

Decomposition

The next step is decomposition, which involves breaking down the problem into smaller, more manageable parts. This involves identifying the sub-problems and organizing them in a logical way. Decomposition helps in simplifying the problem and making it easier to solve.
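As a small illustration of decomposition, suppose the problem is "find the class average from a line of text such as `Ana:90, Ben:75`." The input format and function names are hypothetical; the point is that each sub-problem (read the data, add, count, divide) is solved on its own and the pieces are then combined.

```python
def parse_scores(text):
    """Sub-problem 1: turn text like 'Ana:90, Ben:75' into a list of numbers."""
    scores = []
    for entry in text.split(","):
        if not entry.strip():
            continue  # skip empty entries, e.g. from a trailing comma
        _name, score = entry.split(":")  # the name is not needed for the average
        scores.append(float(score))
    return scores


def class_average(text):
    """Solve the original problem by combining the smaller solutions."""
    scores = parse_scores(text)   # sub-problem 1: read the data
    if not scores:
        raise ValueError("no scores to average")
    total = sum(scores)           # sub-problem 2: add the scores
    count = len(scores)           # sub-problem 3: count the students
    return total / count          # sub-problem 4: divide


print(class_average("Ana:90, Ben:75, Cy:84"))  # 83.0
```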

Pattern Recognition

After decomposition, the next step is pattern recognition. This involves identifying patterns in the data and finding similarities between the sub-problems. Pattern recognition helps in identifying the relationships between the sub-problems and finding common solutions.
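One everyday form of pattern recognition is noticing that a long list of steps is really one short block repeated, which is what lets a programmer replace copy-and-paste with a loop. This hypothetical helper finds the shortest repeating block in a list of drawing instructions; the step names are illustrative.

```python
def find_repeating_unit(steps):
    """Return (unit, times) such that `unit` repeated `times` times rebuilds `steps`."""
    n = len(steps)
    for size in range(1, n + 1):
        # A block can only tile the list evenly if its size divides the list length.
        if n % size == 0 and steps[:size] * (n // size) == steps:
            return steps[:size], n // size
    return steps, 1  # only reached when `steps` is empty


square = ["forward 10", "turn right 90"] * 4  # the steps for drawing a square
unit, times = find_repeating_unit(square)
print(f"repeat {times} times: {unit}")  # repeat 4 times: ['forward 10', 'turn right 90']
```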

Abstraction

The next step is abstraction, which involves identifying the essential elements of the problem and ignoring the non-essential details. Abstraction helps in simplifying the problem and making it easier to understand.
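Abstraction often means choosing a simpler representation. In this hypothetical example, a detailed description of some school rooms is reduced to a map of which rooms connect to which, because wall colors and desk counts don't matter when the problem is finding a route.

```python
# A detailed (hypothetical) description of part of a school.
school = [
    {"room": "Library", "wall_color": "blue", "desks": 30, "doors_to": ["Hallway"]},
    {"room": "Hallway", "wall_color": "white", "desks": 0, "doors_to": ["Library", "Gym", "Office"]},
    {"room": "Gym", "wall_color": "yellow", "desks": 0, "doors_to": ["Hallway"]},
    {"room": "Office", "wall_color": "green", "desks": 4, "doors_to": ["Hallway"]},
]


def abstract_map(rooms):
    """Keep only what a route-finding problem needs: which rooms connect to which.

    Wall colors and desk counts are dropped because they do not affect the route.
    """
    return {room["room"]: list(room["doors_to"]) for room in rooms}


print(abstract_map(school))
```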

Algorithm Design

The next step is algorithm design, which involves designing a solution to the problem. This involves creating a step-by-step plan for solving the problem. The plan should be clear, concise, and easy to follow.
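A step-by-step plan for a routing problem might read: start at the first room, check every neighboring room, then the neighbors of those rooms, and so on, remembering where you've already been, until you reach the goal. That plan is breadth-first search, written here in Python as one possible design (the room map is hypothetical). Because it explores rooms in order of distance, the first route it finds passes through the fewest doorways.

```python
from collections import deque


def shortest_route(connections, start, goal):
    """Breadth-first search: explore rooms in order of distance from `start`."""
    queue = deque([[start]])  # each entry is a route found so far
    visited = {start}
    while queue:
        route = queue.popleft()
        room = route[-1]
        if room == goal:
            return route
        for neighbor in connections.get(room, []):
            if neighbor not in visited:
                visited.add(neighbor)  # never queue the same room twice
                queue.append(route + [neighbor])
    return None  # no route exists


connections = {
    "Library": ["Hallway"],
    "Hallway": ["Library", "Gym", "Office"],
    "Gym": ["Hallway", "Field"],
    "Office": ["Hallway"],
    "Field": ["Gym"],
}
print(" -> ".join(shortest_route(connections, "Library", "Field")))
# Library -> Hallway -> Gym -> Field
```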

Testing and Iteration

The final step is testing and iteration. This involves testing the solution and making any necessary changes. Iteration helps in refining the solution and making it more efficient.
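Testing and iteration might look like this for a hypothetical task, finding the largest number in a list. A first version that starts counting from 0 passes the obvious tests but fails on a list of negative numbers, so the revised version starts from the first item instead. Both function names are illustrative.

```python
def largest_v1(numbers):
    """First attempt: start from 0 and keep the biggest value seen."""
    best = 0
    for n in numbers:
        if n > best:
            best = n
    return best


def largest_v2(numbers):
    """Revised after testing: start from the first value instead of 0."""
    if not numbers:
        raise ValueError("cannot take the largest of an empty list")
    best = numbers[0]
    for n in numbers[1:]:
        if n > best:
            best = n
    return best


test_cases = [([3, 7, 2], 7), ([5], 5), ([-4, -1, -9], -1)]

for func in (largest_v1, largest_v2):
    failures = [case for case, expected in test_cases if func(case) != expected]
    print(func.__name__, "failures:", failures)
# largest_v1 failures: [[-4, -1, -9]]
# largest_v2 failures: []
```

The failing case is what drives the next iteration: it reveals an assumption (that numbers are never negative) hidden in the first design.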

Free Computational Thinking Worksheets

We’ve designed a set of worksheets to teach algorithmic and computational thinking concepts in the classroom. Worksheets will introduce the basic concepts of computational thinking. Answer guides are included.

Final Thoughts

Algorithmic thinking is a fundamental skill that is becoming increasingly important in today’s digital age. It is the process of breaking down complex problems into smaller, more manageable parts and developing a step-by-step approach to solving them.

Algorithmic thinking is not just limited to computer science and programming but can be applied to a wide range of fields, including mathematics, engineering, and business. By developing this skill, individuals can become more efficient problem-solvers, making them more valuable in the workforce.

In addition, algorithmic thinking can be a valuable tool for students of all ages. By introducing this concept early on in education, students can develop effective habits in processing tasks and problem-solving.

Overall, algorithmic thinking is a valuable skill that can benefit individuals in both their personal and professional lives. By breaking down complex problems into smaller, more manageable parts, individuals can become more efficient problem-solvers and better equipped to tackle the challenges of the digital age.

Kate is a mom of two rambunctious boys and a self-proclaimed super nerd. With a background in neuroscience, she is passionate about sharing her love of all things STEM with her kids. She loves to find creative ways to teach kids computer science and geek out about coding and math. She has authored several books on coding for kids, which can be found at Hachette UK.

Similar Posts

12 Ways Kids Can Learn with Minecraft

If your kids are anything like mine, they absolutely love Minecraft. And guess what? This popular game is more than just fun—it can be an educational tool! Here are 12 ways kids can learn with…

Best Rubik’s Cube

The Rubik’s Cube is an incredibly popular mechanical puzzle that has captured attention for decades and has managed to retain enough cultural capital to still be popular today. The 3D puzzle was invented all the…

14 Ways to Boost Critical Thinking Skills for Kids

Hey fellow STEM friends! I’ve been on a mission to find fun and engaging ways to boost critical thinking skills in our little ones. We all know how important it is for our kids to…

50 Interesting STEM Facts

Do you know what STEM is? STEM stands for Science, Technology, Engineering and Mathematics. STEM represents a unique set of interlinked subjects used in a variety of fields. STEM education is a common focus on…

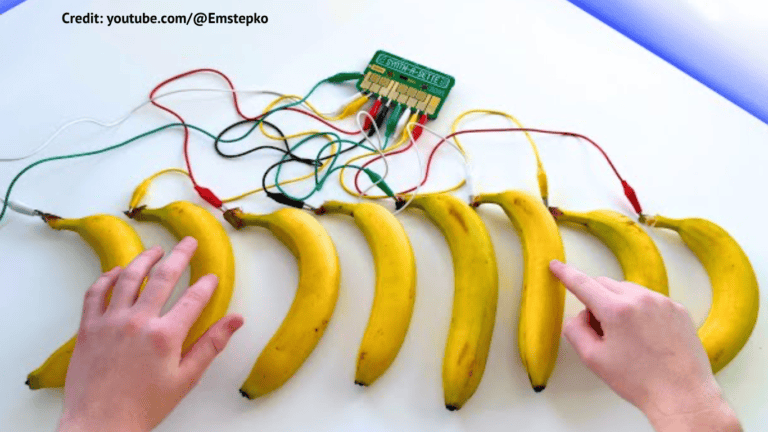

This Clip is Bananas! Viral Banana Piano in Action

Ever imagined making music with bananas? You’re not alone—this viral video is turning heads by showcasing an incredibly cool and innovative way to create your own musical keyboard using the Synth-a-Sette! The Synth-a-Sette is a…

Spooky DIY Fun: 3D Pen Pumpkin Carving

With Halloween just around the corner, it’s time to get your young learners excited about the season with a fun and unique DIY activity: 3D pen pumpkin carving. This creative endeavor combines art, technology, and…

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

Last Updated on June 6, 2023 by Kaitlyn Siu

Computational/Algorithmic Thinking

- Reference work entry

- First Online: 01 January 2020


- Max Stephens & Djordje M. Kadijevich


Introduction

In many countries, the curricular relationship with digital technologies is moving very rapidly (Stephens 2018). These technologies are not only seen as learning and teaching tools for existing disciplines such as mathematics but are also associated with new forms of literacy to be developed for scientific, societal, and economic reasons (Bocconi et al. 2016). Computational thinking, a term coined by Papert (1980) and a key element of the new digital literacy, has been described by Wing (2011) as a fundamental personal ability, like reading, writing, and arithmetic, which enables a person to recognize aspects of computation in various problem situations and to deal appropriately with those aspects by applying tools and techniques from computer science (The Royal Society 2011).

To support an appropriate integration of digital technology in mathematics education, research must focus on the way in which the use of this technology can mediate the learning of mathematics (Drijvers 2018...


Abramovich S (2015) Mathematical problem posing as a link between algorithmic thinking and conceptual knowledge. Teach Math 18(2):45–60. http://elib.mi.sanu.ac.rs/files/journals/tm/35/tmn35p45-60.pdf


Artigue M (2010) The future of teaching and learning mathematics with digital technologies. In: Hoyles C, Lagrange JB (eds) Mathematics education and technology – rethinking the terrain. The 17th ICMI study. Springer, New York, pp 463–476. https://doi.org/10.1007/978-1-4419-0146-0_23


Australian Curriculum, Assessment and Reporting Authority (ACARA) (2016) Digital technologies. Retrieved from http://docs.acara.edu.au/resources/Digital_Technologies_-_Sequence_of_content.pdf

Bocconi S, Chioccariello A, Dettori G, Ferrari A, Engelhardt K (2016) Developing computational thinking in compulsory education. European Union, European Commission, Joint Research Centre, Luxembourg

Brennan K, Resnick M (2012) New frameworks for studying and assessing the development of computational thinking. In: Proceedings of the 2012 annual meeting of the American Educational Research Association, Vancouver. https://web.media.mit.edu/~kbrennan/files/Brennan_Resnick_AERA2012_CT.pdf

Department of Education (UK) (2013) National Curriculum in England: computing programmes of study. https://www.gov.uk/government/publications/national-curriculum-in-england-computing-programmes-of-study/national-curriculum-in-england-computing-programmes-of-study

Drijvers P (2018) Tools and taxonomies: a response to Hoyles. Res Math Edu 20(3):229–235. https://doi.org/10.1080/14794802.2018.1522269


Drijvers P, Kodde-Buitenhuis H, Doorman M (2019) Assessing mathematical thinking as part of curriculum reform in the Netherlands. Educ Stud Math. https://doi.org/10.1007/s10649-019-09905-7

Hickmott D, Prieto-Rodriguez E, Holmes K (2018) A scoping review of studies on computational thinking in K–12 mathematics classrooms. Digit Exp Math Edu 4(1):48–69. https://doi.org/10.1007/s40751-017-0038-8

Hoyles C, Noss R (2015) Revisiting programming to enhance mathematics learning. Paper presented at the Math + Coding Symposium, Western University, London

Kadijevich DM (2018) A cycle of computational thinking. In: Trebinjac B, Jovanović S (eds) Proceedings of the 9th international conference on e-learning. Metropolitan University, Belgrade, pp 75–77. https://econference.metropolitan.ac.rs/wp-content/uploads/2019/05/e-learning-2018-final.pdf

Kanemune S, Shirai S, Tani S (2017) Informatics and programming education at primary and secondary schools in Japan. Olympiads Inf 11:143–150. https://ioinformatics.org/journal/v11_2017_143_150.pdf

Kenderov PS (2018) Powering knowledge versus pouring facts. In: Kaiser G, Forgasz H, Graven M, Kuzniak A, Simmt E, Xu B (eds) Invited lectures from the 13th international congress on mathematical education. ICME-13 monographs. Springer, Cham. https://doi.org/10.1007/978-3-319-72170-5_17

Kotsopoulos D, Floyd L, Khan S, Namukasa IK, Somanath S, Weber J, Yiu C (2017) A pedagogical framework for computational thinking. Digit Exp Math Edu 3(2):154–171

Lee I, Martin F, Denner J, Coulter B, Allan W, Erickson J, Malyn-Smith J, Werner L (2011) Computational thinking for youth in practice. ACM Inroads 2(1):33–37. https://users.soe.ucsc.edu/~linda/pubs/ACMInroads.pdf

Lockwood EE, DeJarnette A, Asay A, Thomas M (2016) Algorithmic thinking: an initial characterization of computational thinking in mathematics. In: Wood MB, Turner EE, Civil M, Eli JA (eds) Proceedings of the 38th annual meeting of the north American chapter of the International Group for the Psychology of mathematics education. The University of Arizona, Tucson, pp 1588–1595. https://files.eric.ed.gov/fulltext/ED583797.pdf

Ministère de l’Éducation Nationale (2016) Algorithmique et programmation. Author: Paris. http://cache.media.eduscol.education.fr/file/Algorithmique_et_programmation/67/9/RA16_C4_MATH_algorithmique_et_programmation_N.D_551679.pdf

Modeste S (2016) Impact of informatics on mathematics and its teaching. In: Gadducci F, Tavosanis M (eds) History and philosophy of computing. HaPoC 2015. IFIP advances in information and communication technology, vol 487. Springer, Cham, pp 243–255

Mouza C, Yang H, Pan Y-C, Ozden SY, Pollock L (2017) Resetting educational technology coursework for pre-service teachers: a computational thinking approach to the development of technological pedagogical content knowledge (TPACK). Australas J Educ Technol 33(3):61–76. https://doi.org/10.14742/ajet.3521

Papert S (1980) Mindstorms: children, computers, and powerful ideas. Basic Books, New York

Prime Minister’s Office (2016) Comprehensive schools in the digital age. Author: Helsinki Finland. https://valtioneuvosto.fi/en/article/-/asset_publisher/10616/selvitys-perusopetuksen-digitalisaatiosta-valmistunut

Scantamburlo T (2013) Philosophical aspects in pattern recognition research. A PhD dissertation, Department of informatics, Ca’ Foscari University of Venice, Venice. https://pdfs.semanticscholar.org/c36d/b973c9ed1fd666b3d14cdf464e4a74bdceb7.pdf

Shute VJ, Sun C, Asbell-Clarke J (2017) Demystifying computational thinking. Educ Res Rev 22:142–158. https://doi.org/10.1016/j.edurev.2017.09.003

Stephens M (2018) Embedding algorithmic thinking more clearly in the mathematics curriculum. In: Shimizu Y, Vithal R (eds) Proceedings of ICMI study 24 School mathematics curriculum reforms: challenges, changes and opportunities. University of Tsukuba, pp 483–490. https://protect-au.mimecast.com/s/oa4TCJypvAf26XL9fVkPOr?domain=human.tsukuba.ac.jp

The Royal Society (2011) Shut down or restart? The way forward for computing in UK schools. The Author, London. https://royalsociety.org/~/media/education/computing-in-schools/2012-01-12-computing-in-schools.pdf

Victorian Curriculum and Assessment Authority (2017) Victorian certificate of education – algorithmics (a higher education scored subject) – study design (2017–2021). https://www.vcaa.vic.edu.au/Documents/vce/algorithmics/AlgorithmicsSD-2017.pdf

Weintrop D, Beheshti E, Horn M, Orno K, Jona K, Trouille L, Wilensky U (2016) Defining computational thinking for mathematics and science classroom. J Sci Educ Technol 25(1):127–141. https://doi.org/10.1007/s10956-015-9581-5

Webb M, Davis N, Bell T, Katz YJ, Reynolds N, Chambers DP, Sysło MM (2017) Computer science in K-12 school curricula of the 21st century: why, what and when? Educ Inf Technol 22(2):445–468. https://doi.org/10.1007/s10639-016-9493-x

Wing JM (2011) Research notebook: computational thinking—what and why? Link Newslett 6:1–32. https://www.cs.cmu.edu/~CompThink/resources/TheLinkWing.pdf


Acknowledgments

The authors are grateful to Michèle Artigue for her generous suggestions about the structure of this entry and the content of its sections, as well as to John G Moala for specific comments regarding curricular issues.

Author information

Authors and affiliations.

MGSE, The University of Melbourne, Melbourne, VIC, Australia

Max Stephens

Institute for Educational Research, Belgrade, Serbia

Djordje M. Kadijevich


Corresponding author

Correspondence to Max Stephens.

Editor information

Editors and affiliations.

Department of Education, Centre for Mathematics Education, London South Bank University, London, UK

Stephen Lerman

Section Editor information

Laboratoire de Didactique André Revuz (EA4434), Université Paris-Diderot, Paris, France

Michèle Artigue


Copyright information

© 2020 Springer Nature Switzerland AG

About this entry

Cite this entry.

Stephens, M., Kadijevich, D.M. (2020). Computational/Algorithmic Thinking. In: Lerman, S. (eds) Encyclopedia of Mathematics Education. Springer, Cham. https://doi.org/10.1007/978-3-030-15789-0_100044


DOI : https://doi.org/10.1007/978-3-030-15789-0_100044

Published : 23 February 2020

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-15788-3

Online ISBN : 978-3-030-15789-0

eBook Packages : Education Reference Module Humanities and Social Sciences Reference Module Education


- DOI: 10.1007/s40692-017-0090-9

- Corpus ID: 13788344

Algorithmic thinking, cooperativity, creativity, critical thinking, and problem solving: exploring the relationship between computational thinking skills and academic performance

- Tenzin Doleck, Paul Bazelais, +2 authors Ram B. Basnet

- Published in Journal of Computers in… 11 August 2017


- Open access

- Published: 09 September 2024

The transfer effect of computational thinking (CT)-STEM: a systematic literature review and meta-analysis

- Zuokun Li & Pey Tee Oon (ORCID: orcid.org/0000-0002-1732-7953)

International Journal of STEM Education, volume 11, Article number: 44 (2024)


Integrating computational thinking (CT) into STEM education has recently drawn significant attention, strengthened by the premise that CT and STEM are mutually reinforcing. Previous CT-STEM studies have examined theoretical interpretations, instructional strategies, and assessment targets. However, few have endeavored to delineate the transfer effects of CT-STEM on the development of cognitive and noncognitive benefits. Given this research gap, we conducted a systematic literature review and meta-analysis to provide deeper insights.

We analyzed results from 37 studies involving 7,832 students with 96 effect sizes. Our key findings include: (i) identification of 36 benefits; (ii) a moderate overall transfer effect, with moderate effects also observed for both near and far transfers; (iii) a stronger effect on cognitive benefits compared to noncognitive benefits, regardless of the transfer type; (iv) significant moderation by educational level, sample size, instructional strategies, and intervention duration on overall and near-transfer effects, with only educational level and sample size being significant moderators for far-transfer effects.

Conclusions

This study analyzes the cognitive and noncognitive benefits arising from CT-STEM’s transfer effects, providing new insights to foster more effective STEM classroom teaching.

Introduction

In recent years, computational thinking (CT) has emerged as one of the driving forces behind the resurgence of computer science in school curriculums, spanning from pre-school to higher education (Bers et al., 2014; Polat et al., 2021; Tikva & Tambouris, 2021a). CT is complex, with many different definitions (Shute et al., 2017). Wing (2006, p. 33) defines CT as a process that involves solving problems, designing systems, and understanding human behavior by drawing on the concepts fundamental to computer science (CS). Contrary to a common perception that CT belongs solely to CS, gradually, it has come to represent a universally applicable attitude and skill set (Tekdal, 2021) involving cross-disciplinary literacy (Ye et al., 2022), which can be applied to solving a wide range of problems within CS and other disciplines (Lai & Wong, 2022). Simply put, CT involves thinking like a computer scientist when solving problems, and it is a universal competence that everyone, not just computer scientists, should acquire (Hsu et al., 2018). Developing CT competency not only helps one acquire domain-specific knowledge but enhances one’s general ability to solve problems across various academic fields (Lu et al., 2022; Wing, 2008; Woo & Falloon, 2022; Xu et al., 2022), including STEM (science, technology, engineering, and mathematics) (Chen et al., 2023a; Lee & Malyn-Smith, 2020; Wang et al., 2022a; Waterman et al., 2020; Weintrop et al., 2016), the social sciences, and liberal arts (Knochel & Patton, 2015).

Given the importance of CT competency, integrating it into STEM education (CT-STEM) has become a trend in recent years (Lee et al., 2020; Li & Anderson, 2020; Merino-Armero et al., 2022). CT-STEM integrates CT practices with STEM learning content or context, on the premise that a reciprocal relationship between STEM content learning and CT can enrich student learning (Cheng et al., 2023). Existing research indicates that CT-STEM enhances student learning in two ways (Li et al., 2020b). First, when CT is viewed as a set of practices that bridge disciplinary teaching, it shifts traditional subject formats toward computation-based STEM content learning (Wiebe et al., 2020). Engaging students in discipline-specific CT practices such as modeling and simulation has been shown to improve their content understanding (Grover & Pea, 2013; Hurt et al., 2023) and enhance learning (Aksit & Wiebe, 2020; Rodríguez-Martínez et al., 2019; Yin et al., 2020). Second, CT can be treated as a transdisciplinary thinking process and practice that provides a structured problem-solving framework and can reduce subject fixation (Ng et al., 2023). Aligned with integrated STEM (iSTEM) teaching, this approach equips students with critical skills such as analytical thinking, data manipulation, algorithmic thinking, collaboration, and creative solution development in authentic contexts (Tikva & Tambouris, 2021b). Such skills are increasingly vital for addressing complex problems in a rapidly evolving world driven by digital technology and artificial intelligence.

Despite the growing interest in CT-STEM (Li et al., 2020b; Tekdal, 2021), recent reviews have focused on theoretical interpretations (Lee & Malyn-Smith, 2020; Weintrop et al., 2016), instructional strategies (Hutchins et al., 2020a; Ma et al., 2021; Rachmatullah & Wiebe, 2022), and assessment targets (Bortz et al., 2020; Román-González et al., 2017). Although previous meta-analyses have shown that CT-STEM positively affects student learning outcomes (Cheng et al., 2023), there is a gap in systematically analyzing its benefits, particularly in differentiating student learning by transfer effect (Popat & Starkey, 2019; Ye et al., 2022). Transfer, a key educational concept categorized into near and far transfer based on the theory of “common elements” (Perkins & Salomon, 1992), is crucial for understanding and evaluating CT-STEM’s utility and for developing effective pedagogies. Previous studies have concentrated on cognitive learning outcomes (Cheng et al., 2023; Zhang & Wong, 2023) but offer limited insight into CT-STEM’s transfer effects on noncognitive outcomes such as affective and social skills (Lai et al., 2023; Tang et al., 2020; Zhang et al., 2023). Given that CT-STEM effects extend beyond the cognitive domain (Ezeamuzie & Leung, 2021; Lu et al., 2022), it is equally important to recognize and nurture noncognitive benefits such as self-efficacy, cooperativity, and communication in CT-STEM practices (Yun & Cho, 2022).

To better understand and evaluate CT-STEM’s transfer effects on students’ acquisition of cognitive and noncognitive benefits, we systematically review published studies of CT-STEM effects following the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines (Moher et al., 2010). We employ meta-analysis to quantify these effects and identify moderating variables. The following research questions guide our study:
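The meta-analytic step described above combines per-study effect sizes into a pooled estimate. This section does not state which pooling model the study used, so the sketch below assumes a DerSimonian-Laird random-effects model, one common choice for combining standardized mean differences. The function name `dersimonian_laird` and the input values in the usage line are hypothetical and are not data from the reviewed studies.

```python
import math


def dersimonian_laird(effects, variances):
    """Pool per-study effect sizes with a DerSimonian-Laird random-effects model.

    Assumption: this is an illustrative model choice, not necessarily the study's.
    effects   -- per-study standardized mean differences (e.g., Hedges' g)
    variances -- the sampling variance of each effect size
    Returns (pooled effect, its standard error, between-study variance tau^2).
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    k = len(effects)
    # Fixed-effect (inverse-variance) weights and weighted mean
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (yi - fixed_mean) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Method-of-moments estimate of between-study variance, truncated at zero
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Random-effects weights add tau^2 to each study's sampling variance
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return pooled, se, tau2


# Hypothetical inputs for illustration only (not results from the reviewed studies)
pooled, se, tau2 = dersimonian_laird([0.1, 0.9], [0.01, 0.01])
print(round(pooled, 2), round(se, 2), round(tau2, 2))  # 0.5 0.4 0.31
```

When two studies disagree far more than their sampling variances allow, tau^2 becomes positive and the pooled standard error widens well beyond the fixed-effect value (about 0.07 here). Moderator questions such as RQ2(b) and RQ3(b) would then compare pooled estimates across subgroups, for example by educational level.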

RQ1: What cognitive and noncognitive benefits are acquired from CT-STEM’s near and far transfer effects?

RQ2: (a) What are the overall transfer effects of CT-STEM on the cognitive and noncognitive benefits identified in RQ1? (b) What are the moderators of these effects?

RQ3: (a) What are the near and far transfer effects of CT-STEM on the cognitive and noncognitive benefits identified in RQ1? (b) What are the moderators of these effects?

Literature review

Computational thinking (CT)

Papert (1980) first introduced the concept of procedural thinking, connecting programming to procedural thinking and laying a foundation for CT (Merino-Armero et al., 2022). Although Papert was the first to describe CT, Wing (2006, 2008, 2011) brought renewed attention to the term, a focus that continues today (Brennan & Resnick, 2012; Chen et al., 2023a). Many other definitions have since emerged in the literature, and there is no consensus definition of CT (Barr & Stephenson, 2011; Grover & Pea, 2013; Shute et al., 2017). Definitions of CT either center on programming and computing concepts (e.g., Israel-Fishelson & Hershkovitz, 2022) or treat CT as a set of elements associated with both computing concepts and problem-solving skills (e.g., Kalelioglu et al., 2016; Piatti et al., 2022). From the former perspective, many researchers have defined CT in terms of programming and computing concepts. For example, Denner et al. (2012) defined CT as a unified competence composed of three key dimensions: programming, documenting and understanding software, and designing for usability. An alternative framework (Brennan & Resnick, 2012), which originated in a programming context (i.e., Scratch), focuses on CT concepts and practices, including sequences, loops, conditionals, debugging, and reusing.

From the latter perspective, CT extends beyond the competencies typically associated with simple computing or programming activities. Instead, it is characterized as a set of competencies encompassing both domain-specific programming knowledge and skills and problem-solving skills for non-programming scenarios (Lai & Ellefson, 2023; Li et al., 2020a; Tsai et al., 2021, 2022). From this broad viewpoint, CT can be defined as a universally applicable skill set involved in problem-solving processes. For instance, ISTE and CSTA (2011) developed an operational definition of CT as a problem-solving process covering core skills such as abstraction, problem reformulation, data practices, algorithmic thinking, automation, modeling and simulation, and generalization. Selby and Woollard (2013) proposed a process-oriented definition of CT based on five essential practices: abstraction, decomposition, algorithmic thinking, evaluation, and generalization. Shute et al. (2017) provided a cross-disciplinary definition centered on solving problems effectively and efficiently, categorizing CT into six practices: decomposition, abstraction, algorithm design, debugging, iteration, and generalization. In all these cases, the essence of CT lies in a computer scientist’s approach to problems, a skill applicable to everyone’s daily life and across all learning domains.

The definitions above focus mainly on the cognitive aspect of CT. Other researchers have suggested that CT comprises not only a cognitive component (Román-González et al., 2017) but also a noncognitive component that highlights important dispositions and attitudes, including confidence in dealing with complexity, persistence in working with difficult problems, tolerance for ambiguity, the ability to deal with open-ended problems, and the ability to communicate and work with others to achieve a common goal or solution (Barr & Stephenson, 2011; CSTA & ISTE, 2011).

In short, although CT is frequently associated with programming, its scope has expanded significantly over the years (Hurt et al., 2023; Kafai & Proctor, 2022). Building on these prior efforts, we define CT as a problem-solving and thought process that involves selecting and applying appropriate tools and practices to solve problems effectively and efficiently. As a multifaceted set of skills and attitudes, CT includes both cognitive aspects, highlighting students’ interdisciplinary practices and skills, and noncognitive aspects such as communication and collaboration.

Integrating CT in STEM education (CT-STEM)

There is an urgent need to bring CT into disciplinary classrooms to prepare students for new integrated fields (e.g., computational biology and computational physics) as practiced in the professional world. To address this need, a growing body of research and practice has focused on integrating CT into discipline-specific and iSTEM lessons (Jocius et al., 2021). This integration, i.e., CT-STEM, refers to infusing CT practices into STEM content or context, with the aim of enhancing students’ CT skills and STEM knowledge (Cheng et al., 2023). Accordingly, CT-STEM serves a dual purpose: first, it can foster the development of students’ CT practices and skills; second, it simultaneously deepens students’ disciplinary understanding and improves learning performance within and across disciplines (Waterman et al., 2020). Current research reveals two potential ways in which this integration facilitates students’ STEM learning. First, integrating CT into STEM provides students with an essential, structured framework by characterizing CT as a thought process and general competency, with disciplinary classrooms offering “a meaningful context (and set of problems) within which CT can be applied” (Weintrop et al., 2016, p. 128). Key processes of this problem-solving approach include formulating problems computationally; processing data to solve problems; automating, simulating, or modeling solutions; evaluating solutions; and generalizing solutions (Lyon & Magana, 2021; Wang et al., 2022a). Engaging in these practices helps students apply STEM content to complex problem solving and develops their potential as future scientists and innovators, in line with iSTEM teaching.

Second, introducing CT into disciplinary classroom instruction transforms traditional STEM subject formats into an integrated, computation-based approach. This approach naturally integrates a specific set of CT practices into different STEM disciplines to facilitate students’ content learning (Li et al., 2020b; Weller et al., 2022). Weintrop et al. (2016) identified four categories of CT practices in math and science education: data practices, modeling and simulation practices, computational problem-solving practices, and systems thinking practices. Engaging students in systems thinking practices can simplify their understanding of systems and phenomena within the STEM disciplines (Grover & Pea, 2013). Integrating CT engages students in data practices, modeling, simulation, and/or the use of computational tools such as programming to generate representations, rules, and reasoning structures (Phillips et al., 2023). This helps students formulate predictions and explanations, visualize systems, test hypotheses, and deepen their understanding of scientific phenomena and mechanisms (Eidin et al., 2024). Comparing the two approaches, the first focuses on developing discipline-general CT, whereas the second emphasizes improving students’ learning of disciplinary content and developing discipline-specific CT (Li et al., 2020b).