6.1 Overview of Non-Experimental Research

Learning objectives.

- Define non-experimental research, distinguish it clearly from experimental research, and give several examples.

- Explain when a researcher might choose to conduct non-experimental research as opposed to experimental research.

What Is Non-Experimental Research?

Non-experimental research is research that lacks the manipulation of an independent variable. Rather than manipulating an independent variable, researchers conducting non-experimental research simply measure variables as they naturally occur (in the lab or real world).

Most researchers in psychology consider the distinction between experimental and non-experimental research to be an extremely important one. This is because although experimental research can provide strong evidence that changes in an independent variable cause differences in a dependent variable, non-experimental research generally cannot. As we will see, however, this inability to make causal conclusions does not mean that non-experimental research is less important than experimental research.

When to Use Non-Experimental Research

As we saw in the last chapter, experimental research is appropriate when the researcher has a specific research question or hypothesis about a causal relationship between two variables and it is possible, feasible, and ethical to manipulate the independent variable. It stands to reason, therefore, that non-experimental research is appropriate, even necessary, when these conditions are not met. There are many times in which non-experimental research is preferred, including when:

- the research question or hypothesis relates to a single variable rather than a statistical relationship between two variables (e.g., how accurate are people’s first impressions?).

- the research question pertains to a non-causal statistical relationship between variables (e.g., is there a correlation between verbal intelligence and mathematical intelligence?).

- the research question is about a causal relationship, but the independent variable cannot be manipulated or participants cannot be randomly assigned to conditions or orders of conditions for practical or ethical reasons (e.g., does damage to a person’s hippocampus impair the formation of long-term memory traces?).

- the research question is broad and exploratory, or is about what it is like to have a particular experience (e.g., what is it like to be a working mother diagnosed with depression?).

Again, the choice between the experimental and non-experimental approaches is generally dictated by the nature of the research question. Recall that the three goals of science are to describe, to predict, and to explain. If the goal is to explain and the research question pertains to causal relationships, then the experimental approach is typically preferred. If the goal is to describe or to predict, a non-experimental approach will suffice. But the two approaches can also be used to address the same research question in complementary ways. For example, after his original study, Milgram conducted experiments to explore the factors that affect obedience. He manipulated several independent variables, such as the distance between the experimenter and the participant, the distance between the participant and the confederate, and the location of the study (Milgram, 1974) [1].

Types of Non-Experimental Research

Non-experimental research falls into three broad categories: cross-sectional research, correlational research, and observational research.

First, cross-sectional research involves comparing two or more pre-existing groups of people. What makes this approach non-experimental is that there is no manipulation of an independent variable and no random assignment of participants to groups. Imagine, for example, that a researcher administers the Rosenberg Self-Esteem Scale to 50 American college students and 50 Japanese college students. Although this “feels” like a between-subjects experiment, it is a cross-sectional study because the researcher did not manipulate the students’ nationalities. As another example, if we wanted to compare the memory test performance of a group of cannabis users with that of a group of non-users, this would be considered a cross-sectional study because, for ethical and practical reasons, we would not be able to randomly assign participants to the cannabis user and non-user groups. Rather, we would need to compare these pre-existing groups, which could introduce a selection bias (the groups may differ in other ways that affect their responses on the dependent variable). For instance, cannabis users are more likely to use alcohol and other drugs, and these differences, rather than cannabis use per se, may account for differences in the dependent variable across groups.

Cross-sectional designs are commonly used by developmental psychologists who study aging and by researchers interested in sex differences. Using this design, developmental psychologists compare groups of people of different ages (e.g., young adults spanning from 18-25 years of age versus older adults spanning 60-75 years of age) on various dependent variables (e.g., memory, depression, life satisfaction). Of course, the primary limitation of using this design to study the effects of aging is that differences between the groups other than age may account for differences in the dependent variable. For instance, differences between the groups may reflect the generation that people come from (a cohort effect) rather than a direct effect of age. For this reason, longitudinal studies, in which one group of people is followed as they age, offer a superior means of studying the effects of aging. Cross-sectional designs are also commonly used to study sex differences. Since researchers cannot practically or ethically manipulate the sex of their participants, they must rely on cross-sectional designs to compare groups of men and women on different outcomes (e.g., verbal ability, substance use, depression). Using these designs, researchers have discovered that men are more likely than women to suffer from substance abuse problems, while women are more likely than men to suffer from depression. With this design, however, it is unclear what causes these differences: they could be due to environmental factors such as socialization or to biological factors such as hormones.

When researchers use a participant characteristic to create groups (nationality, cannabis use, age, sex), the independent variable is usually referred to as an experimenter-selected independent variable (as opposed to the experimenter-manipulated independent variables used in experimental research). Figure 6.1 shows data from a hypothetical study on the relationship between whether people make a daily list of things to do (a “to-do list”) and stress. Notice that it is unclear whether this is an experiment or a cross-sectional study because it is unclear whether the independent variable was manipulated by the researcher or simply selected by the researcher. If the researcher randomly assigned some participants to make daily to-do lists and others not to, then the independent variable was experimenter-manipulated and it is a true experiment. If the researcher simply asked participants whether they made daily to-do lists or not, then the independent variable is experimenter-selected and the study is cross-sectional. The distinction is important because if the study was an experiment, then it could be concluded that making the daily to-do lists reduced participants’ stress. But if it was a cross-sectional study, it could only be concluded that these variables are statistically related. Perhaps being stressed has a negative effect on people’s ability to plan ahead. Or perhaps people who are more conscientious are more likely to make to-do lists and less likely to be stressed. The crucial point is that what defines a study as experimental or cross-sectional is not the variables being studied, nor whether the variables are quantitative or categorical, nor the type of graph or statistics used to analyze the data. It is how the study is conducted.

Figure 6.1 Results of a Hypothetical Study on Whether People Who Make Daily To-Do Lists Experience Less Stress Than People Who Do Not Make Such Lists
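To make the difference between an experimenter-manipulated and an experimenter-selected variable concrete, here is a minimal sketch of what random assignment looks like in practice. The function name `randomly_assign`, the condition labels, and the participant IDs are all hypothetical and chosen only for illustration. In a cross-sectional version of the study there would be no such step: the researcher would simply record which participants already make to-do lists.

```python
import random

def randomly_assign(participants, conditions=("to-do list", "no list"), seed=None):
    """Randomly assign participants to conditions in (near-)equal numbers."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # chance alone decides the order
    # Deal the shuffled participants round-robin into the conditions.
    return {p: conditions[i % len(conditions)] for i, p in enumerate(shuffled)}

# Hypothetical participant IDs P01..P20.
participants = [f"P{i:02d}" for i in range(1, 21)]
assignment = randomly_assign(participants, seed=42)

for person in participants[:5]:
    print(person, "->", assignment[person])
```

Because chance alone determines who ends up in each condition, pre-existing differences such as conscientiousness are expected to balance out across groups, which is what licenses the causal conclusion described above.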

Second, the most common type of non-experimental research conducted in psychology is correlational research. Correlational research is considered non-experimental because it focuses on the statistical relationship between two variables but does not include the manipulation of an independent variable. More specifically, in correlational research, the researcher measures two continuous variables with little or no attempt to control extraneous variables and then assesses the relationship between them. As an example, a researcher interested in the relationship between self-esteem and school achievement could collect data on students’ self-esteem and their GPAs to see if the two variables are statistically related. Correlational research is very similar to cross-sectional research, and sometimes these terms are used interchangeably. The distinction that will be made in this book is that, rather than comparing two or more pre-existing groups of people as is done with cross-sectional research, correlational research involves correlating two continuous variables (groups are not formed and compared).
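As a rough illustration of what "correlating two continuous variables" involves, the sketch below computes a Pearson correlation coefficient for the self-esteem and GPA example. The eight pairs of scores are invented for illustration, and `pearson_r` is a hypothetical helper name rather than anything taken from the text.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Invented data: self-esteem scores and GPAs for eight students.
self_esteem = [22, 25, 18, 30, 27, 15, 24, 28]
gpa = [2.9, 3.1, 2.5, 3.8, 3.4, 2.2, 3.0, 3.6]

r = pearson_r(self_esteem, gpa)
print(f"r = {r:.2f}")
```

Note that neither variable is manipulated and no groups are formed: each student simply contributes one pair of measured scores, so even a strong coefficient says nothing about which variable, if either, is the cause.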

Third, observational research is non-experimental because it focuses on making observations of behavior in a natural or laboratory setting without manipulating anything. Milgram’s original obedience study was non-experimental in this way. He was primarily interested in the extent to which participants obeyed the researcher when he told them to shock the confederate and he observed all participants performing the same task under the same conditions. The study by Loftus and Pickrell described at the beginning of this chapter is also a good example of observational research. The variable was whether participants “remembered” having experienced mildly traumatic childhood events (e.g., getting lost in a shopping mall) that they had not actually experienced but that the researchers asked them about repeatedly. In this particular study, nearly a third of the participants “remembered” at least one event. (As with Milgram’s original study, this study inspired several later experiments on the factors that affect false memories.)

The types of research we have discussed so far are all quantitative, referring to the fact that the data consist of numbers that are analyzed using statistical techniques. But as you will learn in this chapter, many observational research studies are more qualitative in nature. In qualitative research, the data are usually nonnumerical and therefore cannot be analyzed using statistical techniques. Rosenhan’s observational study of the experience of people in a psychiatric ward was primarily qualitative. The data were the notes taken by the “pseudopatients” (the people pretending to have heard voices) along with their hospital records. Rosenhan’s analysis consists mainly of a written description of the experiences of the pseudopatients, supported by several concrete examples. To illustrate the hospital staff’s tendency to “depersonalize” their patients, he noted, “Upon being admitted, I and other pseudopatients took the initial physical examinations in a semi-public room, where staff members went about their own business as if we were not there” (Rosenhan, 1973, p. 256) [2]. Qualitative data have a separate set of analysis tools, and the choice among them depends on the research question. For example, thematic analysis focuses on themes that emerge in the data, whereas conversation analysis focuses on the way the words were said in an interview or focus group.

Internal Validity Revisited

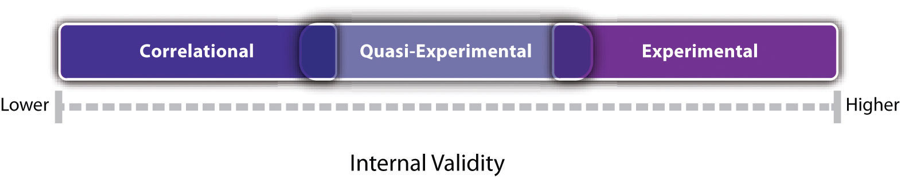

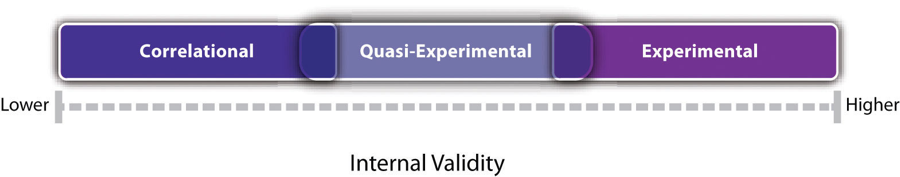

Recall that internal validity is the extent to which the design of a study supports the conclusion that changes in the independent variable caused any observed differences in the dependent variable. Figure 6.2 shows how experimental, quasi-experimental, and non-experimental (correlational) research vary in terms of internal validity. Experimental research tends to be highest in internal validity because the use of manipulation (of the independent variable) and control (of extraneous variables) helps to rule out alternative explanations for the observed relationships. If the average score on the dependent variable in an experiment differs across conditions, it is quite likely that the independent variable is responsible for that difference. Non-experimental (correlational) research is lowest in internal validity because these designs fail to use manipulation or control. Quasi-experimental research (which will be described in more detail in a subsequent chapter) is in the middle because it contains some, but not all, of the features of a true experiment. For instance, it may fail to use random assignment to assign participants to groups or fail to use counterbalancing to control for potential order effects. Imagine, for example, that a researcher finds two similar schools, starts an anti-bullying program in one, and then finds fewer bullying incidents in that “treatment school” than in the “control school.” While a comparison is being made with a control condition, the lack of random assignment of children to schools could still mean that students in the treatment school differed from students in the control school in some other way that could explain the difference in bullying (e.g., there may be a selection effect).

Figure 6.2 Internal Validity of Correlational, Quasi-Experimental, and Experimental Studies. Experiments are generally high in internal validity, quasi-experiments lower, and correlational studies lower still.

Notice also in Figure 6.2 that there is some overlap in the internal validity of experiments, quasi-experiments, and correlational studies. For example, a poorly designed experiment that includes many confounding variables can be lower in internal validity than a well-designed quasi-experiment with no obvious confounding variables. Internal validity is also only one of several validities that one might consider, as noted in Chapter 5.

Key Takeaways

- Non-experimental research is research that lacks the manipulation of an independent variable.

- There are three broad types of non-experimental research: cross-sectional research, which compares two or more pre-existing groups of people; correlational research, which focuses on statistical relationships between variables that are measured but not manipulated; and observational research, in which participants are observed and their behavior is recorded without the researcher interfering or manipulating any variables.

- In general, experimental research is high in internal validity, correlational research is low in internal validity, and quasi-experimental research is in between.

Exercises

- A researcher conducts detailed interviews with unmarried teenage fathers to learn about how they feel and what they think about their role as fathers and summarizes their feelings in a written narrative.

- A researcher measures the impulsivity of a large sample of drivers and looks at the statistical relationship between this variable and the number of traffic tickets the drivers have received.

- A researcher randomly assigns patients with low back pain either to a treatment involving hypnosis or to a treatment involving exercise. She then measures their level of low back pain after 3 months.

- A college instructor gives weekly quizzes to students in one section of his course but no weekly quizzes to students in another section to see whether this has an effect on their test performance.

- Milgram, S. (1974). Obedience to authority: An experimental view. New York, NY: Harper & Row.

- Rosenhan, D. L. (1973). On being sane in insane places. Science, 179, 250–258.

- v.11(2); 2019 Feb

Planning and Conducting Clinical Research: The Whole Process

Boon-How Chew

1 Family Medicine, Universiti Putra Malaysia, Serdang, MYS

The goal of this review was to present the essential steps in the entire process of clinical research. Research should begin with an educated idea arising from a clinical practice issue. A research topic rooted in a clinical problem provides the motivation for the completion of the research and relevancy for affecting medical practice changes and improvements. The research idea is further informed through a systematic literature review, clarified into a conceptual framework, and defined into an answerable research question. Engagement with clinical experts, experienced researchers, relevant stakeholders of the research topic, and even patients can enhance the research question’s relevance, feasibility, and efficiency. Clinical research can be completed in two major steps: study designing and study reporting. Three study designs should be planned in sequence and iterated until properly refined: theoretical design, data collection design, and statistical analysis design. The design of data collection could be further categorized into three facets: experimental or non-experimental, sampling or census, and time features of the variables to be studied. The ultimate aims of research reporting are to present findings succinctly and in a timely manner. Concise, explicit, and complete reporting are the guiding principles in clinical studies reporting.

Introduction and background

Medical and clinical research can be classified in many different ways. Probably, most people are familiar with basic (laboratory) research, clinical research, healthcare (services) research, health systems (policy) research, and educational research. Clinical research in this review refers to scientific research related to clinical practices. There are many ways in which the findings of clinical research can become invalid or less impactful, including ignorance of previous similar studies, a paucity of similar studies, poor study design and implementation, low test agent efficacy, no predetermined statistical analysis, insufficient reporting, bias, and conflicts of interest [1-4]. Scientific, ethical, and moral decadence among researchers can be due to incognizant criteria in academic promotion and remuneration and too many forced studies by amateurs and students for the sake of research without adequate training or guidance [2, 5-6]. This article will review the proper methods to conduct medical research from the planning stage to submission for publication (Table 1).

Table 1 footnote (a): Feasibility and efficiency are considered during the refinement of the research question and adhered to during data collection.

Epidemiologic studies in clinical and medical fields focus on the effect of a determinant on an outcome [7]. Measurement errors that happen systematically give rise to biases leading to invalid study results, whereas random measurement errors will cause imprecise reporting of effects. Precision can usually be increased with an increased sample size provided biases are avoided or trivialized. Otherwise, the increased precision will aggravate the biases. Because epidemiologic, clinical research focuses on measurement, measurement errors are addressed throughout the research process. Obtaining the most accurate estimate of a treatment effect constitutes the whole business of epidemiologic research in clinical practice. This is greatly facilitated by clinical expertise and current scientific knowledge of the research topic. Current scientific knowledge is acquired through literature reviews or in collaboration with an expert clinician. Collaboration and consultation with an expert clinician should also include input from the target population to confirm the relevance of the research question. The novelty of a research topic is less important than the clinical applicability of the topic. Researchers need to acquire appropriate writing and reporting skills from the beginning of their careers, and these skills should improve with persistent use and regular reviewing of published journal articles. A published clinical research study stands on solid scientific ground to inform clinical practice, provided the article has passed through proper peer review, revision, and content improvement.
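The contrast between systematic and random measurement error can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: each measurement is modelled as the true value plus a constant bias plus normally distributed noise, and the numbers (true value 100, bias 2, noise SD 10) and the function name `simulate_estimates` are invented.

```python
import random
from statistics import mean, stdev

def simulate_estimates(true_value, bias, noise_sd, n, n_studies=300, seed=0):
    """Repeat a study n_studies times; return the mean and SD of its estimates.

    Each measurement = true value + systematic error (bias) + random error.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_studies):
        sample = [true_value + bias + rng.gauss(0, noise_sd) for _ in range(n)]
        estimates.append(sum(sample) / n)  # one study's estimate
    return mean(estimates), stdev(estimates)

# Assumed scenario: the instrument reads 2 units too high on average.
centre_small, spread_small = simulate_estimates(100, bias=2, noise_sd=10, n=20)
centre_large, spread_large = simulate_estimates(100, bias=2, noise_sd=10, n=500)

print(f"n=20:  centre {centre_small:.2f}, spread {spread_small:.2f}")
print(f"n=500: centre {centre_large:.2f}, spread {spread_large:.2f}")
```

In this setup the larger sample shrinks the spread of the estimates but leaves them centred near 102 rather than 100. The study becomes more precise without becoming more valid, so a narrow interval around a biased value can look more convincing than it deserves.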

Systematic literature reviews

Systematic literature reviews of published papers will inform authors of the existing clinical evidence on a research topic. This is an important step to reduce wasted efforts and evaluate the planned study [8]. Conducting a systematic literature review is a well-known important step before embarking on a new study [9]. A rigorously performed and cautiously interpreted systematic review that includes in-process trials can inform researchers of several factors [10]. Reviewing the literature will inform the choice of recruitment methods, outcome measures, questionnaires, intervention details, and statistical strategies, all of which is useful information to increase the study’s relevance, value, and power. A good review of previous studies will also provide evidence of the effects of an intervention that may or may not be worthwhile; this would suggest either that no further studies are warranted or that further study of the intervention is needed. A review can also inform whether a larger and better study is preferable to an additional small study. Reviews of previously published work may yield few studies or low-quality evidence from small or poorly designed studies on a certain intervention or observation; this may encourage or discourage further research or prompt consideration of a first clinical trial.

Conceptual framework

The result of a literature review should include identifying a working conceptual framework to clarify the nature of the research problem, questions, and designs, and even guide the later discussion of the findings and development of possible solutions. Conceptual frameworks represent ways of thinking about a problem or how complex things work the way they do [11]. Different frameworks will emphasize different variables and outcomes, and their inter-relatedness. Each framework highlights or emphasizes different aspects of a problem or research question. Often, any single conceptual framework presents only a partial view of reality [11]. Furthermore, each framework magnifies certain elements of the problem. Therefore, a thorough literature search is warranted for authors to avoid repeating the same research endeavors or mistakes. It may also help them find relevant conceptual frameworks, including those that are outside their specialty or system.

Conceptual frameworks can come from theories with well-organized principles and propositions that have been confirmed by observations or experiments. Conceptual frameworks can also come from models derived from theories, observations, or sets of concepts, or even from evidence-based best practices derived from past studies [11].

Researchers convey their assumptions about the associations between the variables explicitly in the conceptual framework to connect the research to the literature. After selecting a single conceptual framework or a combination of a few frameworks, a clinical study can be completed in two fundamental steps: study design and study report. Three study designs should be planned in sequence and iterated until satisfactory: the theoretical design, data collection design, and statistical analysis design [7].

Study designs

Theoretical Design

Theoretical design is the next important step in the research process after a literature review and conceptual framework identification. While the theoretical design is a crucial step in research planning, it is often dealt with lightly because of the more alluring second step (data collection design). In the theoretical design phase, a research question is designed to address a clinical problem, which involves an informed understanding based on the literature review and effective collaboration with the right experts and clinicians. A well-developed research question will have an initial hypothesis of the possible relationship between the explanatory variable/exposure and the outcome. This will inform the nature of the study design, be it qualitative or quantitative, primary or secondary, and non-causal or causal (Figure 1).

A study is qualitative if the research question aims to explore, understand, describe, discover or generate reasons underlying certain phenomena. Qualitative studies usually focus on a process to determine how and why things happen [12]. Quantitative studies use deductive reasoning and numerical, statistical quantification of the association between groups, based on data often gathered during experiments [13]. A primary clinical study is an original study gathering a new set of patient-level data. Secondary research draws on existing data and pools them into a larger database to generate a wider perspective or a more powerful conclusion. Non-causal or descriptive research aims to identify the determinants or associated factors for the outcome or health condition, without regard for causal relationships. Causal research is an exploration of the determinants of an outcome while mitigating confounding variables. Table 2 shows examples of non-causal (e.g., diagnostic and prognostic) and causal (e.g., intervention and etiologic) clinical studies. Concordance between the research question, its aim, and the choice of theoretical design will provide a strong foundation and the right direction for the research process and path.

A problem in clinical epidemiology can be phrased as the mathematical relationship below, where the outcome is a function of the determinant (D), conditional on the extraneous determinants (ED), more commonly known as the confounding factors [7]:

For non-causal research: $\text{Outcome} = f(D_1, D_2, \ldots, D_n)$

For causal research: $\text{Outcome} = f(D \mid ED)$

A sound research question is composed of at least three components: 1) an outcome or a health condition, 2) determinant/s or associated factors to the outcome, and 3) the domain. The outcome and the determinants have to be clearly conceptualized and operationalized as measurable variables (Table 3; PICOT [14] and FINER [15]). The study domain is the theoretical source population from which the study population will be sampled, similar to the wording on a drug package insert that reads, “use this medication (study results) in people with this disease” [7].

The interpretation of study results as they apply to wider populations is known as generalization, and generalization can either be statistical or made using scientific inferences [16]. Generalization supported by statistical inferences is seen in studies on disease prevalence where the sample population is representative of the source population. By contrast, generalizations made using scientific inferences are not bound by the representativeness of the sample in the study; rather, the generalization should be plausible from the underlying scientific mechanisms as long as the study design is valid and unbiased. Scientific inferences and generalizations are usually the aims of causal studies.

Confounding: Confounding is a situation where true effects are obscured or confused [7, 16]. Confounding variables, or confounders, affect the validity of a study’s outcomes and should be prevented or mitigated in the planning stages and further managed in the analytical stages. Confounders are also known as extraneous determinants in epidemiology because of their inherent and simultaneous relationships to both the determinant and the outcome (Figure 2), which are usually one-determinant-to-one-outcome in causal clinical studies. Known confounders are also called observed confounders. These can be minimized using randomization, restriction, or a matching strategy. Residual confounding occurs in a causal relationship when identified confounders are not measured accurately. Unobserved confounding occurs when the confounding effect comes from a variable or factor that has not been observed or defined and, thus, is not measured in the study. Age and gender are almost universal confounders, followed by ethnicity and socio-economic status.

Confounders have three main characteristics. They are a potential risk factor for the disease, they are associated with the determinant of interest, and they are neither an intermediate variable between the determinant and the outcome nor a precursor to the determinant. For example, if a sedentary lifestyle is a cause of acute coronary syndrome (ACS), smoking could be a confounder, but cardiorespiratory unfitness could not (it is an intermediate factor between a sedentary lifestyle and ACS). For patients with ACS, not having a pair of sports shoes is not a confounder; it is a correlate of the sedentary lifestyle. Similarly, depression would be a precursor, not a confounder.
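A small worked example may help show how a confounder distorts a crude estimate and how stratified analysis can recover the within-stratum effect. The sketch below uses the sedentary lifestyle, smoking, and ACS example with entirely invented counts. The Mantel-Haenszel pooled risk ratio is used here as one common adjustment method; it is not named in the text, and the function names are hypothetical.

```python
def risk_ratio(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Risk in the exposed group divided by risk in the unexposed group."""
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)

def mantel_haenszel_rr(strata):
    """Mantel-Haenszel risk ratio pooled across strata of the confounder.

    Each stratum is (exposed cases, exposed total, unexposed cases, unexposed total).
    """
    numerator = 0.0
    denominator = 0.0
    for a, n1, c, n0 in strata:
        total = n1 + n0
        numerator += a * n0 / total
        denominator += c * n1 / total
    return numerator / denominator

# Invented counts. Exposure: sedentary lifestyle. Outcome: ACS. Confounder: smoking.
smokers = (40, 80, 5, 20)       # sedentary people are over-represented among smokers
non_smokers = (4, 20, 8, 80)

# Crude analysis ignores smoking by collapsing the two strata.
crude = risk_ratio(*[sum(column) for column in zip(smokers, non_smokers)])
adjusted = mantel_haenszel_rr([smokers, non_smokers])

print(f"risk ratio among smokers:     {risk_ratio(*smokers):.2f}")
print(f"risk ratio among non-smokers: {risk_ratio(*non_smokers):.2f}")
print(f"crude risk ratio:             {crude:.2f}")
print(f"Mantel-Haenszel risk ratio:   {adjusted:.2f}")
```

With these counts the risk ratio is 2.0 within each smoking stratum, yet the crude risk ratio is about 3.4 because smoking is associated with both the sedentary lifestyle and ACS. The stratified estimate returns 2.0, which is why confounders need to be measured during data collection.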

Sample size consideration: Sample size calculation provides the required number of participants to be recruited in a new study to detect true differences in the target population if they exist. Sample size calculation is based on three facets: an estimated difference between groups (the effect size), the probabilities of α (Type I) and β (Type II) errors chosen based on the nature of the treatment or intervention, and the estimated variability (interval data) or proportion of the outcome (nominal data) [17-18]. The clinically important effect sizes are determined based on expert consensus or patients’ perception of benefit. Value and economic considerations have increasingly been included in sample size estimations. Sample size and the degree to which the sample represents the target population affect the accuracy and generalization of a study’s reported effects.
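The three ingredients of a sample size calculation can be seen in a standard textbook formula for comparing two group means, n per group = 2(z_{1-α/2} + z_{1-β})² σ² / δ². The sketch below is a minimal illustration that assumes a two-sided test, equal group sizes, and a normal approximation; the function name `n_per_group` and the example numbers are hypothetical and not taken from the article.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Participants needed per group to detect a mean difference `delta`.

    Normal-approximation formula for a two-sided, two-sample comparison
    with equal group sizes and a common standard deviation `sd`.
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # Type I error (two-sided)
    z_beta = standard_normal.inv_cdf(power)           # power = 1 - Type II error
    return ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)

# Assumed example: clinically important difference of 5 units, SD of 10.
print(n_per_group(delta=5, sd=10))              # alpha 0.05, power 0.80
print(n_per_group(delta=5, sd=10, power=0.90))  # higher power needs more people
print(n_per_group(delta=2.5, sd=10))            # smaller effect needs many more
```

Under these assumptions the first call gives 63 participants per group. Halving the difference to be detected roughly quadruples the requirement, which is why the choice of a clinically important effect size matters so much. Dedicated software typically uses the t distribution and may return slightly larger numbers.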

Pilot study: Pilot studies assess the feasibility of the proposed research procedures on a small sample. Pilot studies test the efficiency of participant recruitment with minimal practice or service interruptions. Pilot studies should not be conducted to obtain a projected effect size for a larger study population because, in a typical pilot study, the sample size is small, leading to a large standard error of that effect size. This leads to bias when the estimate is projected onto a large population. An underestimated effect size could lead to inappropriately terminating the full-scale study. Because a small pilot study is equally prone to overestimating the effect size, it could also lead to an underpowered and failed full-scale study [19].
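To see how imprecise a pilot-study effect size can be, the sketch below applies a commonly used large-sample approximation to the standard error of a standardized mean difference (Cohen's d). The observed effect of 0.5 and the group sizes are hypothetical, and the helper names are chosen only for illustration.

```python
from math import sqrt

def se_cohens_d(d, n1, n2):
    """Large-sample approximation to the standard error of Cohen's d."""
    return sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))

def ci95(d, n1, n2):
    """Approximate 95% confidence interval for Cohen's d."""
    se = se_cohens_d(d, n1, n2)
    return d - 1.96 * se, d + 1.96 * se

# Same observed effect (d = 0.5) in a pilot and in a full-scale study.
for label, n in (("pilot, 10 per group", 10), ("full study, 200 per group", 200)):
    low, high = ci95(0.5, n, n)
    print(f"{label}: SE = {se_cohens_d(0.5, n, n):.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```

With 10 participants per group the approximate interval spans values from below zero to well above one, so the pilot estimate is compatible with anything from no effect to a very large one. Planning the main study's sample size around such a number is therefore risky in either direction.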

The Design of Data Collection

The “perfect” study design in the theoretical phase now faces the practical and realistic challenges of feasibility. This is the step where different methods for data collection are considered, with one selected as the most appropriate based on the theoretical design along with feasibility and efficiency. The goal of this stage is to achieve the highest possible validity with the lowest risk of biases given available resources and existing constraints.

In causal research, data on the outcome and determinants are collected with utmost accuracy via a strict protocol to maximize validity and precision. The validity of an instrument is defined as the degree of fidelity of the instrument in measuring what it is intended to measure; that is, the results of the measurement correlate with the true state of an occurrence. Another widely used word for validity is accuracy. Internal validity refers to the degree of accuracy of a study’s results for its own study sample and is influenced by the study design, whereas external validity refers to the applicability of a study’s results to other populations. External validity is also known as generalizability and expresses the validity of assuming the similarity and comparability between the study population and the other populations. The reliability of an instrument denotes the extent of agreement among the results of repeated measurements of an occurrence by that instrument at a different time, by different investigators, or in a different setting. Other terms that are used for reliability include reproducibility and precision. Identifying potential confounders and including them in data collection will allow statistical adjustment in the later analyses. In descriptive research, outcomes must be confirmed with a referent standard, and the determinants should be as valid as those found in real clinical practice.

Common designs for data collection include cross-sectional, case-control, cohort, and randomized controlled trials (RCTs). Many other modern epidemiologic study designs, such as nested case-control, case-crossover, case-control without control, and stepped-wedge cluster RCTs, are based on these classical designs. A cross-sectional study is typically a snapshot of the study population, and an RCT is almost always a prospective study. Case-control and cohort studies can be retrospective or prospective in data collection. The nested case-control design differs from the traditional case-control design in that it is “nested” in a well-defined cohort from which information on the cohort can be obtained. This design also satisfies the assumption that cases and controls represent random samples of the same study base. Table 4 provides examples of these data collection designs.

Additional aspects in data collection: No single design of data collection for a research question as stated in the theoretical design will be perfect in actual conduct. This is because of the myriad issues facing investigators, such as dynamic clinical practices, constraints of time and budget, the urgency for an answer to the research question, and the ethical integrity of the proposed experiment. Feasibility and efficiency, without sacrificing validity and precision, are therefore important considerations in data collection design, which requires additional consideration of the following three aspects: experimental/non-experimental, sampling, and timing [ 7 ]:

Experimental or non-experimental: Non-experimental (i.e., “observational”) research, in contrast to experimental research, involves collecting data on the study participants in their natural or real-world environments. Non-experimental studies are usually diagnostic and prognostic studies with cross-sectional data collection. The pinnacle of non-experimental research is the comparative effectiveness study, which is grouped with other non-experimental study designs such as cross-sectional, case-control, and cohort studies [ 20 ]. It is also known as a benchmarking-controlled trial because of the element of peer comparison (using comparable groups) in interpreting the outcome effects [ 20 ]. Experimental study designs are characterized by an intervention on a selected group of the study population in a controlled environment, often in the presence of a similar group that receives no intervention and acts as a comparison (i.e., the control group). Thus, the widely known RCT is classified as an experimental design in data collection. An experimental study design without randomization is referred to as a quasi-experimental study. Experimental studies try to determine the efficacy of a new intervention in a specified population. Table 5 presents the advantages and disadvantages of experimental and non-experimental studies [ 21 ].

Table footnote (a): May be an issue in cross-sectional studies that require long recall of the past, such as dietary patterns, antenatal events, and life experiences during childhood.

Once an intervention yields a proven effect in an experimental study, non-experimental and quasi-experimental studies can be used to determine the intervention’s effect in a wider population and within real-world settings and clinical practices. Pragmatic trials and comparative effectiveness studies are the usual designs for data collection in these situations [ 22 ].

Sampling/census: A census collects data on the whole source population (i.e., the study population is the source population). This is possible when the defined population is restricted to a given geographical area. A cohort study uses the census method in data collection. An ecologic study is a cohort study that collects summary measures of the study population instead of individual patient data. However, for feasibility and efficiency, many studies sample from the source population and infer the results to the source population, because adequate sampling provides results similar to those of a census of the whole population. Important aspects of sampling in research planning are sample size and representation of the population. Sample size calculation determines the number of participants needed in the study to detect the actual association between the determinant and the outcome. It relies on the primary objective or outcome of interest and is informed by the estimated differences or effect sizes from previous similar studies. The sample size is therefore a scientific estimate for the design of the planned study.
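As an illustration of how an anticipated effect size drives the sample size, the sketch below uses the common normal-approximation formula for comparing two means, n per group = 2((z₁₋α/₂ + z₁₋β)σ/δ)². The function name, the standard deviation, and the differences used are hypothetical; a real calculation should follow the planned analysis and allow for attrition.

```python
import math
from statistics import NormalDist

def n_per_group(sd, delta, alpha=0.05, power=0.80):
    """Participants needed per group to detect a mean difference `delta`
    with a two-sided test (normal approximation, equal group sizes)."""
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for two-sided alpha
    z_beta = z.inv_cdf(power)             # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)

# Hypothetical planning values: outcome SD of 10 units
print(n_per_group(sd=10, delta=5))     # expected difference of 5 units
print(n_per_group(sd=10, delta=2.5))   # halving the difference quadruples n
```

Because the required n scales with 1/δ², an overestimated effect size from earlier work leads directly to an underpowered study.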

A sample of participants or cases in a study can represent the study population and the larger population of patients in that disease space, but only in prevalence, diagnostic, and prognostic studies. Etiologic and interventional studies do not share this level of representation. A cross-sectional study design is common for determining disease prevalence in the population. Cross-sectional studies can also determine the referent ranges of variables in the population and, when repeated, measure change over time. Besides being cost- and time-efficient, cross-sectional studies are free from loss to follow-up, recall bias, learning effects on the participant, and variability over time in equipment, measurement, and technician. A cross-sectional design for an etiologic study is possible when the determinants do not change with time (e.g., gender, ethnicity, genetic traits, and blood groups).

In etiologic research, comparability between the exposed and the non-exposed groups is more important than sample representation. Comparability between these two groups provides an accurate estimate of the effect of the exposure (risk factor) on the outcome (disease) and enables valid inference of the causal relation to the domain (the theoretical population). In a case-control study, the controls should be sampled from the same study population (study base) as the cases and have similar profiles to the cases (matching), but without the outcome seen in the cases. Matching on important factors minimizes confounding by those factors and increases statistical efficiency by ensuring similar numbers of cases and controls within the confounders’ strata [ 23 - 24 ]. Nonetheless, perfect matching is neither necessary nor achievable in a case-control study, because a partial match can achieve most of the benefits of a perfect match, namely a more precise estimate of the odds ratio than statistical control of confounding in unmatched designs [ 25 - 26 ]. Moreover, perfect or full matching can lead to underestimation of the point estimates [ 27 - 28 ].
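For readers less familiar with the odds ratio mentioned above, here is a minimal sketch of how it is computed from an unmatched case-control 2×2 table, with an approximate 95% confidence interval based on the standard error of the log odds ratio (Woolf's method). The counts are invented for illustration, and a matched design would call for a matched analysis instead.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio and approximate 95% CI from a 2x2 table.

    a: exposed cases      b: unexposed cases
    c: exposed controls   d: unexposed controls
    """
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper

# Hypothetical counts: 100 cases (30 exposed) and 100 controls (15 exposed)
or_estimate, ci_low, ci_high = odds_ratio_ci(30, 70, 15, 85)
print(f"OR = {or_estimate:.2f}, 95% CI {ci_low:.2f} to {ci_high:.2f}")
```

The width of the interval reflects the precision of the estimate, which is the quantity that matching is meant to improve.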

Time feature: The timing of data collection for the determinant and the outcome characterizes the type of study. A cross-sectional study measures both the determinant and the outcome at time zero (T = 0), which separates it from all other types of research, in which the outcome is measured at T > 0. The terms retrospective and prospective refer to the direction of data collection. In retrospective studies, information on the determinant and outcome has already been collected or recorded. In prospective studies, this information will be collected in the future. These terms should not be used to describe the relationship between the determinant and the outcome in etiologic studies. The time of exposure to the determinant, the time of induction, and the time at risk for the outcome are important aspects to understand. Time at risk is the period of exposure to the determinant risk factors. Time of induction is the time from sufficient exposure to the risk or causal factors to the occurrence of a disease. The latent period is the time during which a disease has occurred but has not yet manifested, as in “silent” diseases (e.g., cancers, hypertension, and type 2 diabetes mellitus) that are detected through screening. Figure 3 illustrates the time features of a variable. Variable timing is important for accurate data capture.

The Design of Statistical Analysis

Statistical analysis of epidemiologic data provides the estimate of effects after correcting for biases (e.g., confounding factors) and measures the variability in the data arising from random errors or chance [ 7 , 16 , 29 ]. An effect estimate gives the size of an association between the studied variables or the level of effectiveness of an intervention. This quantitative result allows the usefulness and significance of the association or intervention to be compared and assessed between studies. This significance must be interpreted with a statistical model and an appropriate study design. Random errors can arise in a study from, for example, unexplained personal choices by the participants. Random error is present when the values or units of measurement of the variables change in a non-concerted or non-directional manner. Conversely, when these values change in a concerted or directional manner, we note a relationship, as shown by statistical significance.

Variability: Researchers almost always collect the needed data by sampling subjects/participants from a population instead of conducting a census. Sampling, or repeated sampling in different geographical regions or over different periods, yields varied information because of the random inclusion of different participants and chance occurrence. This sampling variation becomes the focus of statistics when communicating the degree of variation in the sampled data and the level of inference to the population. Sampling variation is influenced profoundly by the total number of participants and by the spread of the measured variable (standard deviation). Hence, the characteristics of the participants, the measurements, and the sample size are all important factors in planning a study.
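The dependence of sampling variation on the number of participants and the standard deviation can be shown with the standard error of a sample mean, SE = SD/√n. The short sketch below uses hypothetical values only.

```python
import math

def standard_error(sd, n):
    """Standard error of a sample mean for standard deviation sd and size n."""
    return sd / math.sqrt(n)

# Hypothetical measurement with a standard deviation of 12 units
se_small = standard_error(12, 25)     # 25 participants
se_large = standard_error(12, 100)    # 100 participants
se_noisy = standard_error(24, 100)    # same n, twice the spread

print(se_small, se_large, se_noisy)
```

Quadrupling the sample size halves the standard error, whereas doubling the spread of the measurement doubles it.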

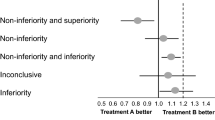

Statistical strategy: The statistical strategy is usually determined by the theoretical and data collection designs. A prespecified statistical strategy (including any decision to dichotomize continuous data at certain cut-points and any planned sub-group or sensitivity analyses) is recommended in the study proposal (i.e., protocol) to prevent data dredging and data-driven reports that predispose to bias. The nature of the study hypothesis also dictates whether directional (one-tailed) or non-directional (two-tailed) significance tests are conducted. In most studies, two-sided tests are used, except in specific instances when unidirectional hypotheses may be appropriate (e.g., in superiority or non-inferiority trials). While data exploration is discouraged, epidemiological research is, by nature of its objectives, statistical research. Hence, it is acceptable to report persistent associations between variables that are found during exploration of the data, provided they have plausible underlying mechanisms. The statistical methods used to produce the results should be explicitly explained. Many different statistical tests are used to handle the various kinds of data (e.g., interval vs discrete) and distributions of data (e.g., normally distributed or skewed) appropriately. For additional details on the underlying concepts of statistical tests, readers are referred to the cited references [ 30 - 31 ].
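The practical difference between one-tailed and two-tailed tests can be seen by converting the same test statistic into both kinds of p value. The sketch below assumes a z statistic from a large-sample test; the value 1.8 is arbitrary.

```python
from statistics import NormalDist

def p_values_from_z(z):
    """Return (one-sided upper-tail p, two-sided p) for a z statistic."""
    standard_normal = NormalDist()
    one_sided = 1 - standard_normal.cdf(z)
    two_sided = 2 * (1 - standard_normal.cdf(abs(z)))
    return one_sided, two_sided

# Hypothetical test statistic
one_sided_p, two_sided_p = p_values_from_z(1.8)
print(f"one-sided p = {one_sided_p:.4f}, two-sided p = {two_sided_p:.4f}")
```

With this statistic the one-sided p value falls below 0.05 while the two-sided one does not, which is why the choice of test should be fixed in the protocol rather than made after seeing the data.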

Steps in statistical analyses: Statistical analysis begins with checking for data entry errors. Duplicates are eliminated, and proper units are confirmed. Extremely low, extremely high, or otherwise suspicious values are checked against the source data. If this is not possible, such a value is better classified as missing. However, if the unverified suspicious value is not obviously wrong, it should be examined further as an outlier in the analysis. Data checking and cleaning enable the analyst to establish a connection with the raw data and to anticipate possible results from further analyses. This initial step involves descriptive statistics that summarize the central tendency (i.e., mode, median, and mean) and dispersion (i.e., minimum, maximum, range, quartiles, absolute deviation, variance, and standard deviation) of the data. Graphical plots such as a scatter plot, box-and-whisker plot, histogram, or normal Q-Q plot are helpful at this stage to check whether the data are normally distributed. See Figure 4 for the statistical tests available for analyses of different types of data.
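A minimal sketch of this first descriptive step, using only Python's standard `statistics` module, is given below. The data are invented, and the 1.5 × IQR rule used to flag suspicious values is one common convention assumed here for illustration, not a rule stated in this article.

```python
import statistics

# Hypothetical measurements with one suspiciously high value
values = [4.1, 4.8, 5.0, 5.2, 5.2, 5.5, 5.9, 6.1, 6.3, 19.0]

summary = {
    "mean": statistics.mean(values),
    "median": statistics.median(values),
    "mode": statistics.mode(values),
    "minimum": min(values),
    "maximum": max(values),
    "range": max(values) - min(values),
    "variance": statistics.variance(values),   # sample variance
    "sd": statistics.stdev(values),            # sample standard deviation
}

# Quartiles (default "exclusive" method) and the interquartile range
q1, q2, q3 = statistics.quantiles(values, n=4)
iqr = q3 - q1

# Assumed screening rule: flag values beyond 1.5 * IQR from the quartiles
flagged = [v for v in values if v < q1 - 1.5 * iqr or v > q3 + 1.5 * iqr]

print(summary)
print("flagged for source-data verification:", flagged)
```

A flagged value is only a candidate for checking against the source data; whether it is corrected, set to missing, or kept as an outlier follows the steps described above.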

Once data characteristics are ascertained, further statistical tests are selected. The analytical strategy sometimes involves transforming the data distribution for the selected tests (e.g., log, natural log, exponential, quadratic) or for checking the robustness of the association between the determinants and their outcomes. This step is also referred to as inferential statistics, in which the results concern hypothesis testing and generalization to the wider population that the study’s sampled participants represent. The last statistical step is checking whether the analyses fulfill the assumptions of the particular statistical test and model, to avoid violations and misleading results. These checks include evaluating normality, homogeneity of variance, and the residuals of the final statistical model. Other statistical values such as the Akaike information criterion, variance inflation factor/tolerance, and R² are also considered when choosing the best-fitting model. If the assumptions are not met, the raw data can be transformed, or a higher level of statistical analysis can be used (e.g., generalized linear models and mixed-effect modeling). Successful statistical analysis allows the conclusions of the study to fit the data.
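As a small illustration of why a transformation may be applied before testing, the sketch below computes the skewness of a right-skewed variable before and after a natural-log transformation. The data and the helper function are hypothetical, and skewness is only one of several possible checks of distributional shape.

```python
import math

def skewness(xs):
    """Population skewness: third central moment over variance ** 1.5."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5

# Hypothetical right-skewed measurements (all positive, so log is defined)
raw = [1, 2, 2, 3, 3, 4, 5, 8, 13, 40]
logged = [math.log(x) for x in raw]

print("skewness before:", round(skewness(raw), 2))
print("skewness after log transform:", round(skewness(logged), 2))
```

The transformed values are much closer to symmetric, although results then have to be interpreted on the log scale.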

Bayesian and Frequentist statistical frameworks: Most current clinical research reporting is based on the frequentist approach, with hypothesis testing, p values, and confidence intervals. The frequentist approach assumes the acquired data are random, attained by random sampling or through randomized experiments or influences, and subject to random errors. The distribution of the data (its point estimate and confidence interval) is used to infer a true parameter in the real population. The major conceptual difference between Bayesian and frequentist statistics is that in Bayesian statistics, the parameter (i.e., the studied variable in the population) is random and the acquired data are real (true or fixed). Therefore, the Bayesian approach provides a probability interval for the parameter. The studied parameter is treated as random because it could vary and be affected by prior beliefs, experience, or evidence of plausibility. In the Bayesian approach, this prior belief or available knowledge is quantified as a probability distribution and combined with the acquired data to produce the results (i.e., the posterior distribution). This uses Bayes’ theorem to “turn around” conditional probabilities.
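The way a prior is combined with data can be sketched with the simplest conjugate case: a Beta prior for a proportion updated with binomial data. The prior parameters and the trial counts below are invented for illustration.

```python
def update_beta(prior_a, prior_b, successes, failures):
    """Posterior Beta parameters after observing binomial data."""
    return prior_a + successes, prior_b + failures

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

# Hypothetical prior belief: response rate around 20% -> Beta(2, 8)
prior = (2, 8)

# Hypothetical new data: 12 responders among 30 patients
posterior = update_beta(*prior, successes=12, failures=18)

print("prior mean:", beta_mean(*prior))                 # 0.2
print("observed proportion:", 12 / 30)                  # 0.4
print("posterior:", posterior, "mean:", beta_mean(*posterior))
```

The posterior mean lies between the prior mean and the observed proportion, and it moves closer to the data as the sample grows relative to the weight of the prior.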

The goal of research reporting is to present findings succinctly and in a timely manner via conference proceedings or journal publication. Concise and explicit language, with all the details necessary to enable replication and judgment of the study’s applicability, is the guiding principle in reporting clinical studies.

Writing for Reporting

Medical writing is very much a technical chore that accommodates little artistic expression. Research reporting in medicine and the health sciences emphasizes clear and standardized reporting, eschewing the adjectives and adverbs used extensively in popular literature. Regularly reviewing published journal articles can familiarize authors with proper reporting styles and help enhance writing skills. Authors should familiarize themselves with standard, concise, and appropriate rhetoric for the intended audience, which includes journal reviewers, editors, and referees. However, proper language can be somewhat subjective. While each publication may have different requirements for submission, the technical requirements for formatting an article are usually available in the author or submission guidelines provided by the target journal.

Research reports for publication often contain title, abstract, introduction, methods, results, discussion, and conclusions sections, and authors may want to write each section in sequence. However, best practice indicates that the abstract and title should be written last. Authors may find that, when writing one section of the report, ideas come to mind that pertain to other sections, so careful note taking is encouraged. One effective approach is to organize and write the results section first, followed by the discussion and conclusions sections. Once these are drafted, write the introduction, abstract, and title of the report. Regardless of the sequence of writing, the author should begin with a clear and relevant research question to guide the statistical analyses, interpretation of results, and discussion. The study findings can motivate the author through the writing process, and the conclusions can help the author draft a focused introduction.

Writing for Publication

Specific recommendations on effective medical writing and table generation are available [ 32 ]. One such resource is Effective Medical Writing: The Write Way to Get Published, which is an updated collection of medical writing articles previously published in the Singapore Medical Journal [ 33 ]. The British Medical Journal’s Statistics Notes series also elucidates common and important statistical concepts and their use in clinical studies. Writing guides are also available from individual professional societies, journals, and publishers, such as the Chest (American College of Chest Physicians) medical writing tips, the PLoS reporting guidelines collection, Springer’s Journal Author Academy, and SAGE’s Research Methods [ 34 - 37 ]. Standardized research reporting guidelines often come in the form of checklists and flow diagrams. Table 6 presents a list of reporting guidelines. A full compilation of these guidelines is available at the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network website [ 38 ], which aims to improve the reliability and value of medical literature by promoting transparent and accurate reporting of research studies. Publication of the trial protocol in a publicly available database is almost compulsory for publication of the full report in many journals.

Graphics and Tables

Graphics and tables should emphasize salient features of the underlying data and should coherently summarize large quantities of information. Although graphics provide a break from dense prose, authors must not forget that these illustrations should be scientifically informative, not decorative. The titles of graphics and tables should be clear and informative and should state the sample size. Font weight and formatting should be used sparingly, only to distinguish headings and data entries or to highlight certain results. Report numerical results with a consistent number of decimal places, and with no more than four for the P value. Most journals prefer cell-delineated tables created using the table function in word processing or spreadsheet programs. Some journals require specific table formatting, such as the absence or presence of intermediate horizontal lines between cells.

Decisions of authorship are both sensitive and important and should be made at an early stage by the study’s stakeholders. Guidelines and journals’ instructions to authors abound with authorship qualifications. The guideline on authorship by the International Committee of Medical Journal Editors is widely known and provides a standard used by many medical and clinical journals [ 39 ]. Generally, authors are those who have made major contributions to the design, conduct, and analysis of the study, and who provided critical readings of the manuscript (if not involved directly in manuscript writing).

Picking a Target Journal for Submission

Once a report has been written and revised, the authors should select a relevant target journal for submission. Authors should avoid predatory journals, that is, publications that do not aim to advance science and disseminate quality research but instead focus on commercial gain in medical and clinical publishing. Two good resources for authors during journal selection are Think-Check-Submit and the defunct Beall's List of Predatory Publishers and Journals (now archived and maintained by an anonymous third party) [ 40 , 41 ]. Alternatively, reputable journal indexes such as Thomson Reuters Journal Citation Reports, SCOPUS, MedLine, PubMed, EMBASE, and EBSCO Publishing's Electronic Databases are good places to start the search for an appropriate target journal. Authors should review the journals’ names, aims/scope, and recently published articles to determine the kind of research each journal accepts for publication. Open-access journals almost always charge article publication fees, while subscription-based journals tend to publish without author fees and instead rely on subscription or access fees for the full text of published articles.

Conclusions

Conducting valid clinical research requires consideration of the theoretical study design, the data collection design, and the statistical analysis design. Proper implementation of the study design and quality control during data collection ensure high-quality data analysis and can mitigate bias and confounding during statistical analysis and data interpretation. Clear, effective study reporting facilitates dissemination, appreciation, and adoption, and allows researchers to effect real-world change in clinical practices and care models. Neutral findings, or an absence of findings, in a clinical study are as important as positive or negative findings. Valid studies, even when they report an absence of expected results, still inform scientific communities about the nature of a treatment or intervention, and this contributes to future research, systematic reviews, and meta-analyses. Reporting a study adequately and comprehensively is important for the accuracy, transparency, and reproducibility of the scientific work as well as for informing readers.

Acknowledgments

The author would like to thank Universiti Putra Malaysia and the Ministry of Higher Education, Malaysia for their support in sponsoring the Ph.D. study and living allowances for Boon-How Chew.


The materials presented in this paper are being organized by the author into a book.


7.1 Overview of Nonexperimental Research

Learning objectives.

- Define nonexperimental research, distinguish it clearly from experimental research, and give several examples.

- Explain when a researcher might choose to conduct nonexperimental research as opposed to experimental research.

What Is Nonexperimental Research?

Nonexperimental research is research that lacks the manipulation of an independent variable, random assignment of participants to conditions or orders of conditions, or both.

In a sense, it is unfair to define this large and diverse set of approaches collectively by what they are not. But doing so reflects the fact that most researchers in psychology consider the distinction between experimental and nonexperimental research to be an extremely important one. This is because while experimental research can provide strong evidence that changes in an independent variable cause differences in a dependent variable, nonexperimental research generally cannot. As we will see, however, this does not mean that nonexperimental research is less important than experimental research or inferior to it in any general sense.

When to Use Nonexperimental Research

As we saw in Chapter 6 “Experimental Research”, experimental research is appropriate when the researcher has a specific research question or hypothesis about a causal relationship between two variables, and it is possible, feasible, and ethical to manipulate the independent variable and randomly assign participants to conditions or to orders of conditions. It stands to reason, therefore, that nonexperimental research is appropriate, even necessary, when these conditions are not met. There are many ways in which this can be the case.

- The research question or hypothesis can be about a single variable rather than a statistical relationship between two variables (e.g., How accurate are people’s first impressions?).

- The research question can be about a noncausal statistical relationship between variables (e.g., Is there a correlation between verbal intelligence and mathematical intelligence?).

- The research question can be about a causal relationship, but the independent variable cannot be manipulated or participants cannot be randomly assigned to conditions or orders of conditions (e.g., Does damage to a person’s hippocampus impair the formation of long-term memory traces?).

- The research question can be broad and exploratory, or it can be about what it is like to have a particular experience (e.g., What is it like to be a working mother diagnosed with depression?).

Again, the choice between the experimental and nonexperimental approaches is generally dictated by the nature of the research question. If it is about a causal relationship and involves an independent variable that can be manipulated, the experimental approach is typically preferred. Otherwise, the nonexperimental approach is preferred. But the two approaches can also be used to address the same research question in complementary ways. For example, nonexperimental studies establishing that there is a relationship between watching violent television and aggressive behavior have been complemented by experimental studies confirming that the relationship is a causal one (Bushman & Huesmann, 2001). Similarly, after his original study, Milgram conducted experiments to explore the factors that affect obedience. He manipulated several independent variables, such as the distance between the experimenter and the participant, the participant and the confederate, and the location of the study (Milgram, 1974).

Types of Nonexperimental Research

Nonexperimental research falls into three broad categories: single-variable research, correlational and quasi-experimental research, and qualitative research. First, research can be nonexperimental because it focuses on a single variable rather than a statistical relationship between two variables. Although there is no widely shared term for this kind of research, we will call it single-variable research. Milgram’s original obedience study was nonexperimental in this way. He was primarily interested in one variable (the extent to which participants obeyed the researcher when he told them to shock the confederate), and he observed all participants performing the same task under the same conditions. The study by Loftus and Pickrell described at the beginning of this chapter is also a good example of single-variable research. The variable was whether participants “remembered” having experienced mildly traumatic childhood events (e.g., getting lost in a shopping mall) that they had not actually experienced but that the researchers asked them about repeatedly. In this particular study, nearly a third of the participants “remembered” at least one event. (As with Milgram’s original study, this study inspired several later experiments on the factors that affect false memories.)

As these examples make clear, single-variable research can answer interesting and important questions. What it cannot do, however, is answer questions about statistical relationships between variables. This is a point that beginning researchers sometimes miss. Imagine, for example, a group of research methods students interested in the relationship between children’s being the victim of bullying and the children’s self-esteem. The first thing that is likely to occur to these researchers is to obtain a sample of middle-school students who have been bullied and then to measure their self-esteem. But this would be a single-variable study with self-esteem as the only variable. Although it would tell the researchers something about the self-esteem of children who have been bullied, it would not tell them what they really want to know, which is how the self-esteem of children who have been bullied compares with the self-esteem of children who have not. Is it lower? Is it the same? Could it even be higher? To answer this question, their sample would also have to include middle-school students who have not been bullied.

Research can also be nonexperimental because it focuses on a statistical relationship between two variables but does not include the manipulation of an independent variable, random assignment of participants to conditions or orders of conditions, or both. This kind of research takes two basic forms: correlational research and quasi-experimental research. In correlational research, the researcher measures the two variables of interest with little or no attempt to control extraneous variables and then assesses the relationship between them. A research methods student who finds out whether each of several middle-school students has been bullied and then measures each student’s self-esteem is conducting correlational research. In quasi-experimental research, the researcher manipulates an independent variable but does not randomly assign participants to conditions or orders of conditions. For example, a researcher might start an antibullying program (a kind of treatment) at one school and compare the incidence of bullying at that school with the incidence at a similar school that has no antibullying program.
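To show what “assessing the relationship” between two measured variables can look like in a correlational study, the sketch below computes a Pearson correlation coefficient by hand. The bullying counts and self-esteem scores are invented, and nothing in the calculation says anything about the direction of causality.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data for six students: both variables are only measured,
# neither is manipulated
bullying_incidents = [0, 1, 2, 3, 4, 5]
self_esteem_scores = [30, 28, 27, 25, 22, 20]

r = pearson_r(bullying_incidents, self_esteem_scores)
print(f"r = {r:.2f}")
```

A strongly negative r here would be consistent with bullying lowering self-esteem, with low self-esteem inviting bullying, or with a third variable influencing both.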

The final way in which research can be nonexperimental is that it can be qualitative. The types of research we have discussed so far are all quantitative, referring to the fact that the data consist of numbers that are analyzed using statistical techniques. In qualitative research, the data are usually nonnumerical and are analyzed using nonstatistical techniques. Rosenhan’s study of the experience of people in a psychiatric ward was primarily qualitative. The data were the notes taken by the “pseudopatients” (the people pretending to have heard voices) along with their hospital records. Rosenhan’s analysis consists mainly of a written description of the experiences of the pseudopatients, supported by several concrete examples. To illustrate the hospital staff’s tendency to “depersonalize” their patients, he noted, “Upon being admitted, I and other pseudopatients took the initial physical examinations in a semipublic room, where staff members went about their own business as if we were not there” (Rosenhan, 1973, p. 256).

Internal Validity Revisited

Recall that internal validity is the extent to which the design of a study supports the conclusion that changes in the independent variable caused any observed differences in the dependent variable. Figure 7.1 shows how experimental, quasi-experimental, and correlational research vary in terms of internal validity. Experimental research tends to be highest because it addresses the directionality and third-variable problems through manipulation and the control of extraneous variables through random assignment. If the average score on the dependent variable in an experiment differs across conditions, it is quite likely that the independent variable is responsible for that difference. Correlational research is lowest because it fails to address either problem. If the average score on the dependent variable differs across levels of the independent variable, it could be that the independent variable is responsible, but there are other interpretations. In some situations, the direction of causality could be reversed. In others, there could be a third variable that is causing differences in both the independent and dependent variables. Quasi-experimental research is in the middle because the manipulation of the independent variable addresses some problems, but the lack of random assignment and experimental control fails to address others. Imagine, for example, that a researcher finds two similar schools, starts an antibullying program in one, and then finds fewer bullying incidents in that “treatment school” than in the “control school.” There is no directionality problem because clearly the number of bullying incidents did not determine which school got the program. However, the lack of random assignment of children to schools could still mean that students in the treatment school differed from students in the control school in some other way that could explain the difference in bullying.
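The role of random assignment in controlling extraneous variables can be illustrated with a small simulation. In the sketch below, a hypothetical pre-existing trait is compared between two groups formed by random assignment and between two intact groups that differ systematically. The trait, the group sizes, and the deliberately extreme sorted split are assumptions made only for illustration.

```python
import random
from statistics import mean

rng = random.Random(42)   # fixed seed so the sketch is reproducible

# Hypothetical pre-existing trait (e.g., baseline aggressiveness) for 1,000 children
trait = [rng.gauss(50, 10) for _ in range(1000)]

# Random assignment: shuffle, then split into two conditions
shuffled = trait[:]
rng.shuffle(shuffled)
treatment, control = shuffled[:500], shuffled[500:]
random_gap = abs(mean(treatment) - mean(control))

# Intact groups: an extreme case in which two "schools" differ on the trait
ordered = sorted(trait)
school_a, school_b = ordered[:500], ordered[500:]
intact_gap = abs(mean(school_a) - mean(school_b))

print(f"gap under random assignment: {random_gap:.2f}")
print(f"gap between intact groups:   {intact_gap:.2f}")
```

Under random assignment the groups start out nearly equal on the trait, so a later difference on the dependent variable is hard to attribute to it. With intact groups, a pre-existing difference remains a rival explanation.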

Figure 7.1. Experiments are generally high in internal validity, quasi-experiments lower, and correlational studies lower still.

Notice also in Figure 7.1 that there is some overlap in the internal validity of experiments, quasi-experiments, and correlational studies. For example, a poorly designed experiment that includes many confounding variables can be lower in internal validity than a well-designed quasi-experiment with no obvious confounding variables.
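The third-variable problem described above can be made concrete with a small simulation. The sketch below is illustrative only: the variable names, sample size, and noise levels are assumptions chosen for the example, not values from any study. A hypothetical third variable `z` drives both the "independent" variable and the dependent variable, so the two are strongly correlated even though neither causes the other. When the independent variable is instead assigned at random, its link to `z` is broken and the correlation largely disappears.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def simulate(n=2000, seed=0):
    """Return (r when X is merely measured, r when X is randomly assigned).

    All numbers here are made up for illustration. X has NO causal effect
    on Y in either case; only the third variable z affects Y.
    """
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]             # hypothetical third variable
    y = [zi + rng.gauss(0, 0.5) for zi in z]            # dependent variable, driven by z
    x_measured = [zi + rng.gauss(0, 0.5) for zi in z]   # X as it naturally occurs (tied to z)
    x_assigned = [rng.gauss(0, 1) for _ in range(n)]    # X set at random (independent of z)
    return pearson_r(x_measured, y), pearson_r(x_assigned, y)


r_measured, r_assigned = simulate()
print(f"X measured as it naturally occurs: r = {r_measured:.2f}")
print(f"X assigned at random:              r = {r_assigned:.2f}")
```

In the measured case a researcher could easily mistake the correlation for a causal effect of X on Y. Random assignment removes that ambiguity, which is one reason experiments tend to be higher in internal validity.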

Key Takeaways

- Nonexperimental research is research that lacks the manipulation of an independent variable, control of extraneous variables through random assignment, or both.

- There are three broad types of nonexperimental research. Single-variable research focuses on a single variable rather than a relationship between variables. Correlational and quasi-experimental research focus on a statistical relationship but lack manipulation or random assignment. Qualitative research focuses on broader research questions, typically involves collecting large amounts of data from a small number of participants, and analyzes the data nonstatistically.

- In general, experimental research is high in internal validity, correlational research is low in internal validity, and quasi-experimental research is in between.

Discussion: For each of the following studies, decide which type of research design it is and explain why.

- A researcher conducts detailed interviews with unmarried teenage fathers to learn about how they feel and what they think about their role as fathers and summarizes their feelings in a written narrative.

- A researcher measures the impulsivity of a large sample of drivers and looks at the statistical relationship between this variable and the number of traffic tickets the drivers have received.

- A researcher randomly assigns patients with low back pain either to a treatment involving hypnosis or to a treatment involving exercise. She then measures their level of low back pain after 3 months.

- A college instructor gives weekly quizzes to students in one section of his course but no weekly quizzes to students in another section to see whether this has an effect on their test performance.

Bushman, B. J., & Huesmann, L. R. (2001). Effects of televised violence on aggression. In D. Singer & J. Singer (Eds.), Handbook of children and the media (pp. 223–254). Thousand Oaks, CA: Sage.

Milgram, S. (1974). Obedience to authority: An experimental view. New York, NY: Harper & Row.

Rosenhan, D. L. (1973). On being sane in insane places. Science, 179, 250–258.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Chapter 7: Nonexperimental Research

Overview of Nonexperimental Research

Learning Objectives

- Define nonexperimental research, distinguish it clearly from experimental research, and give several examples.

- Explain when a researcher might choose to conduct nonexperimental research as opposed to experimental research.

What Is Nonexperimental Research?

Nonexperimental research is research that lacks the manipulation of an independent variable, random assignment of participants to conditions or orders of conditions, or both.

In a sense, it is unfair to define this large and diverse set of approaches collectively by what they are not. But doing so reflects the fact that most researchers in psychology consider the distinction between experimental and nonexperimental research to be an extremely important one. This is because although experimental research can provide strong evidence that changes in an independent variable cause differences in a dependent variable, nonexperimental research generally cannot. As we will see, however, this inability to support causal conclusions does not mean that nonexperimental research is less important than experimental research or inferior to it in any general sense.

When to Use Nonexperimental Research

As we saw in Chapter 6, experimental research is appropriate when the researcher has a specific research question or hypothesis about a causal relationship between two variables, and it is possible, feasible, and ethical to manipulate the independent variable and randomly assign participants to conditions or to orders of conditions. It stands to reason, therefore, that nonexperimental research is appropriate, even necessary, when these conditions are not met. There are many situations in which nonexperimental research is preferred.

- The research question or hypothesis can be about a single variable rather than a statistical relationship between two variables (e.g., How accurate are people’s first impressions?).

- The research question can be about a noncausal statistical relationship between variables (e.g., Is there a correlation between verbal intelligence and mathematical intelligence?).

- The research question can be about a causal relationship, but the independent variable cannot be manipulated or participants cannot be randomly assigned to conditions or orders of conditions (e.g., Does damage to a person’s hippocampus impair the formation of long-term memory traces?).

- The research question can be broad and exploratory, or it can be about what it is like to have a particular experience (e.g., What is it like to be a working mother diagnosed with depression?).

Again, the choice between the experimental and nonexperimental approaches is generally dictated by the nature of the research question. If it is about a causal relationship and involves an independent variable that can be manipulated, the experimental approach is typically preferred. Otherwise, the nonexperimental approach is preferred. But the two approaches can also be used to address the same research question in complementary ways. For example, nonexperimental studies establishing that there is a relationship between watching violent television and aggressive behavior have been complemented by experimental studies confirming that the relationship is a causal one (Bushman & Huesmann, 2001) [1]. Similarly, after his original study, Milgram conducted experiments to explore the factors that affect obedience. He manipulated several independent variables, such as the distance between the experimenter and the participant, the distance between the participant and the confederate, and the location of the study (Milgram, 1974) [2].

Types of Nonexperimental Research

Nonexperimental research falls into three broad categories: single-variable research, correlational and quasi-experimental research, and qualitative research. First, research can be nonexperimental because it focuses on a single variable rather than a statistical relationship between two variables. Although there is no widely shared term for this kind of research, we will call it single-variable research. Milgram’s original obedience study was nonexperimental in this way. He was primarily interested in one variable (the extent to which participants obeyed the researcher when he told them to shock the confederate), and he observed all participants performing the same task under the same conditions. The study by Loftus and Pickrell described at the beginning of this chapter is also a good example of single-variable research. The variable was whether participants “remembered” having experienced mildly traumatic childhood events (e.g., getting lost in a shopping mall) that they had not actually experienced but that the researchers asked them about repeatedly. In this particular study, nearly a third of the participants “remembered” at least one event. (As with Milgram’s original study, this study inspired several later experiments on the factors that affect false memories.)

As these examples make clear, single-variable research can answer interesting and important questions. What it cannot do, however, is answer questions about statistical relationships between variables. This is a point that beginning researchers sometimes miss. Imagine, for example, a group of research methods students interested in the relationship between children’s being the victim of bullying and the children’s self-esteem. The first thing that is likely to occur to these researchers is to obtain a sample of middle-school students who have been bullied and then to measure their self-esteem. But this design would be a single-variable study with self-esteem as the only variable. Although it would tell the researchers something about the self-esteem of children who have been bullied, it would not tell them what they really want to know, which is how the self-esteem of children who have been bullied compares with the self-esteem of children who have not. Is it lower? Is it the same? Could it even be higher? To answer this question, their sample would also have to include middle-school students who have not been bullied, thereby introducing another variable.
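The difference between the two designs in the bullying example can be sketched in a few lines of code. The self-esteem scores below are invented purely for illustration, and the function names are hypothetical. With only the bullied sample, all the students can compute is a description of one variable. Once students who have not been bullied are included, group membership becomes a second variable and a comparison becomes possible.

```python
from statistics import mean

# Hypothetical self-esteem scores (made-up numbers, for illustration only).
bullied = [18, 22, 20, 17, 23]
not_bullied = [24, 26, 21, 25, 24]


def single_variable_summary(scores):
    """Describe one variable: all that a single-variable design can report."""
    return {"n": len(scores), "mean": mean(scores)}


def group_difference(group_a, group_b):
    """Difference in means; requires a second variable (group membership)."""
    return mean(group_a) - mean(group_b)


# Single-variable design: a mean with nothing to compare it against.
print(single_variable_summary(bullied))

# Two-variable design: the comparison the students actually wanted.
print(group_difference(bullied, not_bullied))
```

Note that even the second design is nonexperimental: bullying was measured rather than manipulated, so a difference between the groups would not by itself show that bullying caused it.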

Research can also be nonexperimental because it focuses on a statistical relationship between two variables but does not include the manipulation of an independent variable, random assignment of participants to conditions or orders of conditions, or both. This kind of research takes two basic forms: correlational research and quasi-experimental research. In correlational research, the researcher measures the two variables of interest with little or no attempt to control extraneous variables and then assesses the relationship between them. A research methods student who finds out whether each of several middle-school students has been bullied and then measures each student’s self-esteem is conducting correlational research. In quasi-experimental research, the researcher manipulates an independent variable but does not randomly assign participants to conditions or orders of conditions. For example, a researcher might start an antibullying program (a kind of treatment) at one school and compare the incidence of bullying at that school with the incidence at a similar school that has no antibullying program.

The final way in which research can be nonexperimental is that it can be qualitative. The types of research we have discussed so far are all quantitative, referring to the fact that the data consist of numbers that are analyzed using statistical techniques. In qualitative research, the data are usually nonnumerical and therefore cannot be analyzed using statistical techniques. Rosenhan’s study of the experience of people in a psychiatric ward was primarily qualitative. The data were the notes taken by the “pseudopatients” (the people pretending to have heard voices), along with their hospital records. Rosenhan’s analysis consists mainly of a written description of the experiences of the pseudopatients, supported by several concrete examples. To illustrate the hospital staff’s tendency to “depersonalize” their patients, he noted, “Upon being admitted, I and other pseudopatients took the initial physical examinations in a semipublic room, where staff members went about their own business as if we were not there” (Rosenhan, 1973, p. 256) [3]. Qualitative data are analyzed with a separate set of tools, chosen according to the research question. For example, thematic analysis focuses on themes that emerge in the data, whereas conversation analysis focuses on the way the words were said in an interview or focus group.
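The distinctions drawn in this section can be summarized as a simple decision rule. The function below is a rough sketch under simplifying assumptions, not a standard classification scheme: the function name and its four yes/no style inputs are hypothetical, and real studies often combine features of more than one category.

```python
def classify_design(quantitative, n_variables, manipulates_iv, random_assignment):
    """Rough classification of a study, following this section's definitions.

    quantitative      -- True if the data are numbers analyzed statistically
    n_variables       -- number of variables of interest (1 or more)
    manipulates_iv    -- True if the researcher manipulates an independent variable
    random_assignment -- True if participants are randomly assigned to conditions
    """
    if not quantitative:
        return "qualitative"
    if n_variables < 2:
        return "single-variable"
    if manipulates_iv and random_assignment:
        return "experimental"
    if manipulates_iv:
        return "quasi-experimental"
    return "correlational"


# The antibullying program at one school, compared with a similar school:
# the program is manipulated, but children are not randomly assigned to schools.
print(classify_design(quantitative=True, n_variables=2,
                      manipulates_iv=True, random_assignment=False))
```

The order of the checks mirrors the order of the text: first whether the data are qualitative, then whether there is more than one variable, and only then whether manipulation and random assignment are present.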

Internal Validity Revisited