Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?

- Why analyze data in research?

- Types of data in research

- Finding patterns in the qualitative data

- Methods used for data analysis in qualitative research

- Preparing data for analysis

- Methods used for data analysis in quantitative research

- Considerations in research data analysis

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the analysis itself, which researchers carry out in both top-down and bottom-up fashion.

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Researchers rely heavily on data because they have a story to tell or research problems to solve. Research starts with a question, and data is nothing but an answer to that question. But what if there is no question to ask? It is possible to explore data even without a problem. We call this ‘data mining’, and it often reveals interesting patterns within the data that are worth exploring.

Regardless of the type of data researchers explore, their mission and their audience’s vision guide them to find the patterns that shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember, data analysis sometimes tells the most unforeseen yet exciting stories, ones that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Every kind of data describes things by assigning a specific value to them. For analysis, you need to organize these values, then process and present them in a given context, to make them useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data presented consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze in research, especially for comparison. Example: Anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: Answers to questions about age, rank, cost, length, weight, scores, and so on all come under this type of data. You can present such data in graphs or charts, or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: This is data presented in groups. However, an item included in categorical data cannot belong to more than one group. Example: A person responding to a survey by describing their lifestyle, marital status, smoking habit, or drinking habit provides categorical data. A chi-square test is a standard method used to analyze this data.
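
To make the chi-square idea concrete, here is a minimal sketch in Python that computes the chi-square statistic for a two-way table of categorical counts. The counts and the category labels (smoking habit by marital status) are hypothetical, invented only for illustration, and the function name `chi_square_statistic` is our own.

```python
def chi_square_statistic(table):
    """Return (chi-square statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were unrelated
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof

# Hypothetical counts: rows = smoker / non-smoker, columns = married / single
observed = [[20, 30], [30, 20]]
stat, dof = chi_square_statistic(observed)
print(f"chi-square = {stat:.2f}, degrees of freedom = {dof}")
```

With one degree of freedom, the conventional 5% critical value is about 3.84, so a statistic above that suggests the two categorical variables are related in this made-up sample.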

Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data because qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process. Hence, it is typically used for exploratory research and data analysis.

Although there are several ways to find patterns in textual information, the word-based method is the most relied upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is manual. Here, researchers usually read the available data and find repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
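
Although the process described here is manual, the counting step can be sketched in a few lines of Python. This is only an illustration: the three responses and the stop-word list are hypothetical, and a real study would still need a researcher to read the words in context.

```python
import re
from collections import Counter

# Hypothetical stop words to ignore; a real list would be much longer
STOPWORDS = {"we", "do", "not", "have", "for", "the", "is", "in", "our", "and", "a", "of", "to"}

def word_frequencies(responses, stopwords=STOPWORDS):
    """Count how often each non-stop word appears across all responses."""
    counts = Counter()
    for text in responses:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in stopwords)
    return counts

# Hypothetical open-ended survey responses
responses = [
    "We do not have enough food for the children",
    "Hunger is the biggest problem in our village",
    "Food prices keep rising and hunger is everywhere",
]
top = word_frequencies(responses).most_common(2)
print(top)
```

In this made-up sample, "food" and "hunger" surface as the most frequent words, which is the kind of signal a researcher would then highlight for further analysis.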

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example, researchers studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how a respondent has used or referred to the word ‘diabetes.’
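
A keyword-in-context listing can be sketched as follows. The response text is hypothetical, and the three-word window is an arbitrary choice for illustration, not a recommended setting.

```python
def keyword_in_context(text, keyword, window=3):
    """Return (left context, keyword, right context) for each use of the keyword."""
    tokens = text.split()
    hits = []
    for i, token in enumerate(tokens):
        # Compare without surrounding punctuation and ignoring case
        if token.strip(".,;:!?'\"").lower() == keyword.lower():
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, token, right))
    return hits

# Hypothetical respondent answer
response = "My mother was diagnosed with diabetes last year, and diabetes runs in our family."
hits = keyword_in_context(response, "diabetes")
for left, word, right in hits:
    print(f"{left} [{word}] {right}")
```

Reading the words on either side of each hit shows whether the respondent is talking about a diagnosis, family history, or something else.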

The scrutiny-based technique is also one of the highly recommended text analysis methods used to identify patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it examines how specific pieces of text are similar to or different from each other.

For example: To find out the “importance of resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method for analyzing polls with single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.

There are several techniques to analyze the data in qualitative research, but here are some commonly used methods:

- Content Analysis: It is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. When and where to use this method depends on the research questions.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, it focuses on the stories or opinions shared by people to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. However, this particular method considers the social context within which the communication between the researcher and respondent takes place. In addition, discourse analysis also focuses on the lifestyle and day-to-day environment while deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, using grounded theory to analyze qualitative data is the best resort. Grounded theory is applied to study data about a host of similar cases occurring in different settings. When researchers use this method, they might alter explanations or produce new ones until they arrive at some conclusion.

Data analysis in quantitative research

The first stage in research and data analysis is to prepare the data for analysis so that the nominal data can be converted into something meaningful. Data preparation consists of the following phases.

Phase I: Data Validation

Data validation is done to understand whether the collected data sample meets the pre-set standards or is a biased data sample. It is divided into four different stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey or, in an interview, that the interviewer has asked all the questions devised in the questionnaire.
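
Two of these stages, screening and completeness, lend themselves to simple automated checks. The sketch below assumes a hypothetical survey with three required questions and a minimum-age screening criterion; the field names and the threshold are invented for illustration. Fraud and procedure checks usually need more than a few lines of code and are not covered here.

```python
# Hypothetical question ids that every response must answer
REQUIRED_QUESTIONS = ["q1", "q2", "q3"]

def is_complete(response):
    """Completeness: every required question has a non-empty answer."""
    return all(response.get(q) not in (None, "") for q in REQUIRED_QUESTIONS)

def passes_screening(response, min_age=18):
    """Screening: the respondent meets the (assumed) age criterion."""
    age = response.get("age")
    return age is not None and age >= min_age

# Hypothetical raw responses
responses = [
    {"id": 1, "age": 34, "q1": "yes", "q2": "no", "q3": "4"},
    {"id": 2, "age": 16, "q1": "yes", "q2": "no", "q3": "5"},  # fails screening
    {"id": 3, "age": 41, "q1": "yes", "q2": "", "q3": "2"},    # incomplete
]
valid_ids = [r["id"] for r in responses if is_complete(r) and passes_screening(r)]
print(valid_ids)
```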

Phase II: Data Editing

More often than not, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or skip them accidentally. Data editing is a process wherein the researchers confirm that the provided data is free of such errors. They need to conduct basic checks and outlier checks to edit the raw data and make it ready for analysis.
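
The text does not say which outlier check to use. One common rule of thumb, assumed here purely for illustration, flags values that fall more than 1.5 times the interquartile range beyond the first or third quartile. The ages below are hypothetical.

```python
import statistics

def find_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (an assumed rule of thumb)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical reported ages; 230 is likely a typing error for 23
ages = [23, 25, 27, 29, 31, 33, 35, 230]
outliers = find_outliers(ages)
print(outliers)
```

A flagged value is a prompt to look at the raw response, not an automatic reason to delete it.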

Phase III: Data Coding

Out of all three, this is the most critical phase of data preparation. It involves grouping survey responses and assigning values to them. For example, if a survey is completed with a sample size of 1,000, the researcher might create age brackets to distinguish the respondents based on their age. It then becomes easier to analyze small data buckets rather than deal with the massive data pile.
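
As a minimal sketch of this coding step, the snippet below assigns each respondent's age to a bracket and counts the respondents per bracket. The bracket boundaries and the sample ages are hypothetical.

```python
from collections import Counter

# Hypothetical age brackets (inclusive bounds)
AGE_BRACKETS = [(18, 24), (25, 34), (35, 44), (45, 54), (55, 64)]

def code_age(age):
    """Return the bracket label for a given age."""
    for low, high in AGE_BRACKETS:
        if low <= age <= high:
            return f"{low}-{high}"
    return "65+" if age >= 65 else "under 18"

# Hypothetical respondent ages
ages = [19, 23, 31, 36, 44, 52, 67, 70]
bucket_counts = Counter(code_age(a) for a in ages)
print(bucket_counts)
```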

After the data is prepared for analysis, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored way to analyze numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. Statistical methods are classified into two groups: ‘descriptive statistics’, used to describe data, and ‘inferential statistics’, which help in comparing the data.

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in such a meaningful way that patterns in the data start making sense. However, descriptive analysis does not go as far as drawing conclusions beyond the data; any conclusions are still based on the hypotheses researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.
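
A short sketch of count and percent for a single survey question, using hypothetical answers:

```python
from collections import Counter

# Hypothetical answers to one survey question
answers = ["Yes", "No", "Yes", "Yes", "Sometimes", "No", "Yes", "Yes"]

counts = Counter(answers)
total = len(answers)
# Map each answer to (count, percent of all responses)
frequency_table = {a: (c, round(100 * c / total, 1)) for a, c in counts.items()}

for answer, (count, percent) in frequency_table.items():
    print(f"{answer:<10} {count:>2}  {percent:>5}%")
```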

Measures of Central Tendency

- Mean, Median, Mode

- The method is widely used to describe a distribution by a single central point.

- Researchers use this method when they want to showcase the most common or the average response.
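
Python's standard `statistics` module covers all three measures. The scores below are hypothetical.

```python
import statistics

# Hypothetical test scores
scores = [70, 85, 85, 90, 100]

mean_score = statistics.mean(scores)      # arithmetic average
median_score = statistics.median(scores)  # middle value when sorted
mode_score = statistics.mode(scores)      # most frequent value

print(mean_score, median_score, mode_score)
```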

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range = the difference between the high and low points.

- Variance and standard deviation = measures of the difference between the observed scores and the mean.

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to showcase how spread out the data is. It helps them identify the extent to which the data is spread out and how that spread affects the mean.
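
The three measures can be computed as follows. The scores are hypothetical, and the sketch uses the population formulas (`pvariance`, `pstdev`); if the data is a sample drawn from a larger population, `statistics.variance` and `statistics.stdev` would be the usual choice instead.

```python
import statistics

# Hypothetical scores
scores = [2, 4, 4, 4, 5, 5, 7, 9]

score_range = max(scores) - min(scores)      # high point minus low point
variance = statistics.pvariance(scores)      # mean squared distance from the mean
std_dev = statistics.pstdev(scores)          # square root of the variance

print(score_range, variance, std_dev)
```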

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores, helping researchers identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average.
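
Here is a minimal sketch of two position measures: a percentile rank and a standardized score (z-score). The scores are hypothetical, and the percentile-rank definition used (scores below, plus half of the scores equal) is one of several conventions in use.

```python
import statistics

def percentile_rank(scores, value):
    """Percent of scores below the value, counting ties as half (one common convention)."""
    below = sum(1 for s in scores if s < value)
    equal = sum(1 for s in scores if s == value)
    return 100 * (below + 0.5 * equal) / len(scores)

def z_score(scores, value):
    """Distance of the value from the mean, in population standard deviations."""
    return (value - statistics.mean(scores)) / statistics.pstdev(scores)

# Hypothetical test scores
scores = [55, 60, 65, 70, 75, 80, 85, 90, 95, 100]
rank = percentile_rank(scores, 80)
z = z_score(scores, 80)
quartiles = statistics.quantiles(scores, n=4)  # three cut points: Q1, Q2, Q3

print(rank, round(z, 2), quartiles)
```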

In quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are never sufficient to demonstrate the rationale behind them. It is therefore necessary to think of the best method for research and data analysis suiting your survey questionnaire and the story researchers want to tell. For example, the mean is the best way to demonstrate students’ average scores in schools. It is better to rely on descriptive statistics when researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare average voting done in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of a sample collected from that population. For example, you can ask around 100 audience members at a movie theater if they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: It’s about sampling research data to answer the survey research questions. For example, researchers might be interested to understand if the new shade of lipstick recently launched is good or not, or if the multivitamin capsules help children to perform better at games.
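
To illustrate parameter estimation with the movie theater example, the sketch below computes an approximate 95% confidence interval for a proportion. The figure of 85 "yes" answers out of 100 is hypothetical, and the normal approximation used here is one standard textbook method, assumed for illustration rather than taken from the article.

```python
import math

def proportion_confidence_interval(successes, n, z=1.96):
    """Approximate confidence interval for a proportion (normal approximation).

    z=1.96 corresponds to a 95% confidence level.
    """
    p = successes / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p - margin, p + margin

# Hypothetical sample: 85 of 100 audience members say they like the movie
low, high = proportion_confidence_interval(85, 100)
print(f"Estimated share who like the movie: {low:.0%} to {high:.0%}")
```

The interval is what lets a researcher move from "85 of the 100 people we asked" to a hedged statement about the wider audience.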

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. They are often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but are interested in understanding the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose the provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation makes data analysis and research easier by showing the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between two variables, researchers rarely look beyond the primary and commonly used regression analysis method, which is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable, along with one or more independent variables. You undertake efforts to find out the impact of the independent variables on the dependent variable. The values of both independent and dependent variables are assumed to have been ascertained in an error-free, random manner.

- Frequency tables: A frequency table lists each value or category of a variable together with the number of times it occurs in the data.

- Analysis of variance: This statistical procedure is used for testing the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation means the research findings were significant. In many contexts, the terms ANOVA testing and variance analysis are used interchangeably.
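
Three of the methods above (cross-tabulation, correlation, and regression with a single independent variable) can be sketched with the standard library alone. All data, field names, and function names below are hypothetical and chosen only to illustrate the calculations.

```python
import math
from collections import Counter

def cross_tabulate(rows, row_key, col_key):
    """Count respondents for each (row category, column category) pair."""
    return Counter((r[row_key], r[col_key]) for r in rows)

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two numeric variables."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def simple_linear_regression(xs, ys):
    """Least-squares slope and intercept for one independent variable."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx

# Hypothetical respondents for the cross-tabulation
respondents = [
    {"age_group": "18-34", "gender": "female"},
    {"age_group": "18-34", "gender": "male"},
    {"age_group": "18-34", "gender": "female"},
    {"age_group": "35-54", "gender": "male"},
]
table = cross_tabulate(respondents, "age_group", "gender")

# Hypothetical study hours (independent) and test scores (dependent)
hours = [1, 2, 3, 4, 5]
test_scores = [50, 55, 53, 60, 62]
r = pearson_r(hours, test_scores)
slope, intercept = simple_linear_regression(hours, test_scores)

print(dict(table))
print(f"r = {r:.2f}, score = {intercept:.1f} + {slope:.1f} * hours")
```

In practice, researchers typically rely on a statistics package for these calculations, but writing them out shows that each one answers a different question about how variables relate.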

- Researchers must have the necessary research skills to analyze and manipulate the data, and should be trained to demonstrate a high standard of research practice. Ideally, researchers must possess more than a basic understanding of the rationale of selecting one statistical method over the other to obtain better data insights.

- Usually, research and data analytics projects differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps design a survey questionnaire, select data collection methods , and choose samples.

- The primary aim of data research and analysis is to derive ultimate insights that are unbiased. Any mistake or bias in collecting data, selecting an analysis method, or choosing an audience sample will lead to a biased inference.

- No amount of sophistication in research data analysis is enough to rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, a lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining , or developing graphical representation.

The sheer amount of data generated daily is frightening, especially now that data analysis has taken center stage. In 2018, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to new market needs.

QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.


6 How to Analyze Data in a Primary Research Study

Melody Denny and Lindsay Clark

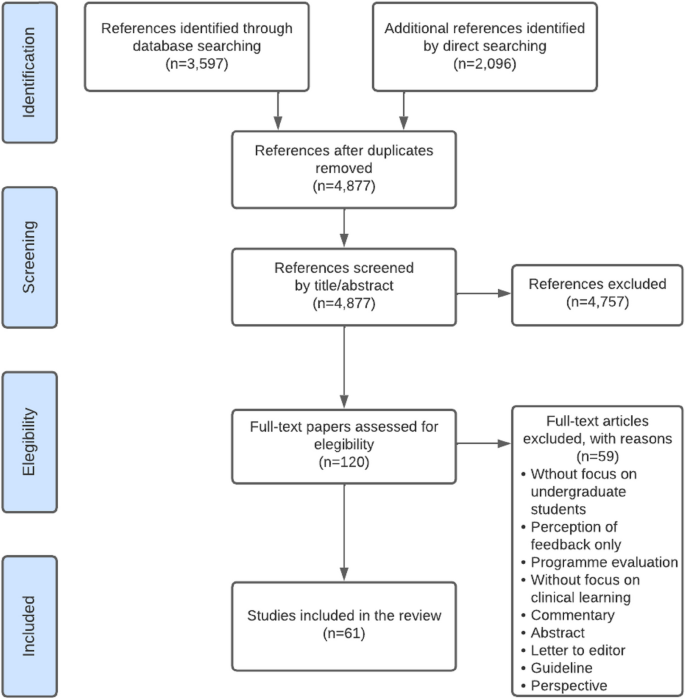

This chapter introduces students to the idea of working with primary research data grounded in qualitative inquiry, closed-and open-ended methods, and research ethics (Driscoll; Mackey and Gass; Morse; Scott and Garner). [1] We know this can seem intimidating to students, so we will walk them through the process of analyzing primary research, using information from public datasets including the Pew Research Center. Using sample data on teen social media use, we share our processes for analyzing sample data to demonstrate different approaches for analyzing primary research data (Charmaz; Creswell; Merriam and Tisdale; Saldaña). We also include links to additional public data sets, chapter discussion prompts, and sample activities for students to apply these strategies.

At this point in your education, you are familiar with what is known as secondary research or what many students think of as library research. Secondary research makes use of sources most often found in the library or, these days, online (books, journal articles, magazines, and many others). There’s another kind of research that you may or may not be familiar with: primary research. The Purdue OWL defines primary research as “any type of research you collect yourself” and lists examples as interviews, observations, and surveys (“What is Primary Research”).

Primary research is typically divided into two main types—quantitative and qualitative research. These two methods (or a mix of these) are used by many fields of study, so providing a singular definition for these is a bit tricky. Sheard explains that “quantitative research…deals with data that are numerical or that can be converted into numbers. The basic methods used to investigate numerical data are called ‘statistics’” (429). Guest, et al. explain that qualitative research is “information that is difficult to obtain through more quantitatively-oriented methods of data collection” and is used more “to answer the whys and hows of human behavior, opinion, and experience” (1).

This chapter focuses on qualitative methods that explore people’s behaviors, interpretations, and opinions. Rather than being only a reader and reporter of research, primary research allows you to be a creator of research. Primary research provides opportunities to collect information based on your specific research questions and generate new knowledge from those questions to share with others. Generally, primary research tends to follow these steps:

- Develop a research question. Secondary research often uses this as a starting point as well. With primary research, however, rather than using library research to answer your research question, you’ll also collect data yourself to answer the question you developed. Data, in this case, is the information you collect yourself through methods such as interviews, surveys, and observations.

- Decide on a research method. According to Scott and Garner, “A research method is a recognized way of collecting or producing [primary data], such as a survey, interview, or content analysis of documents” (8). In other words, the method is how you obtain the data.

- Collect data. Merriam and Tisdale clarify what it means to collect data: “data collection is about asking, watching, and reviewing” (105-106). Primary research might include asking questions via surveys or interviews, watching or observing interactions or events, and examining documents or other texts.

- Analyze data. Once data is collected, it must then be analyzed. “Data analysis is the process of making sense out of the data… Basically, data analysis is the process used to answer your research question(s)” (Merriam and Tisdale 202). It’s worth noting that many researchers collect data and analyze at the same time, so while these may seem like different steps in the process, they actually overlap.

- Report findings. Once the researcher has spent time understanding and interpreting the data, they are then ready to write about their research, often called “findings.” You may also see this referred to as “results.”

While the entire research process is discussed, this chapter focuses on the analysis stage of the process (step 4). Depending on where you are in the research process, you may need to spend more time on step 1, 2, or 3 and review Driscoll’s “Introduction to Primary Research” (Volume 2 of Writing Spaces ).

Primary research can seem daunting, and some students might think that they can’t do primary research, that this type of research is for professionals and scholars, but that’s simply not true. It’s true that primary research data can be difficult to collect and even more difficult to analyze, but the findings are typically very revealing. This chapter and the examples included break down this research process and demonstrate how general curiosity can lead to exciting chances to learn and share information that is relevant and interesting. The goal of this chapter is to provide you with some information about data analysis and walk you through some activities to prepare you for your own data analysis. The next section discusses analyzing data from closed-ended methods and open-ended methods.

Data from Primary Research

As stated above, this chapter doesn’t focus on methods, but before moving on to analysis, it’s important to clarify a few things related to methods as they are directly connected to analyzing data. As a quick reminder, a research method is how researchers collect their data, such as surveys, interviews, or textual analysis. No matter which method is used, researchers need to think about the types of questions to ask for answering their overall research question. Generally, there are two types of questions to consider: closed-ended and open-ended. The next section provides examples of the data you might receive from asking closed-ended and open-ended questions and options for analyzing and presenting that data.

Data from Closed-Ended Methods

The data that is generated by closed-ended questions on methods such as surveys and polls is often easier to organize. Because the way respondents could answer those questions is limited to specific answers (Yes/No, numbered scales, multiple choice), the data can be analyzed by each question or by looking at the responses individually or as a whole. Though there are several approaches to analyzing the data that comes from closed-ended questions, this section will introduce you to a few different ways to make sense of this kind of data.

Closed-ended questions are those that have limited answers, like multiple choice or check-all-that-apply questions. These questions mean that respondents can provide only the answers given or they may select an “other” option. An example of a closed-ended question could be “Do you use YouTube? Yes, No, Sometimes.” Closed-ended questions have their perks because they (mostly) keep participants from misinterpreting the question or providing unhelpful responses. They also make data analysis a bit easier.

If you were to ask the “Yes, No, Sometimes” question about YouTube to 20 of your closest friends, you may get responses like Yes = 18, No = 1, and Sometimes = 1. But, if you were to ask a more detailed question like “Which of the following social media platforms do you use?” and provide respondents with a check-all-that-apply option, like “Facebook, YouTube, Twitter, Instagram, Snapchat, Reddit, and Tumblr,” you would get a very different set of data. This data might look like Facebook = 17, YouTube = 18, Twitter = 12, Instagram = 20, Snapchat = 15, Reddit = 8, and Tumblr = 3. The big takeaway here is that how you ask the question determines the type of data you collect.

Analyzing Closed-Ended Data

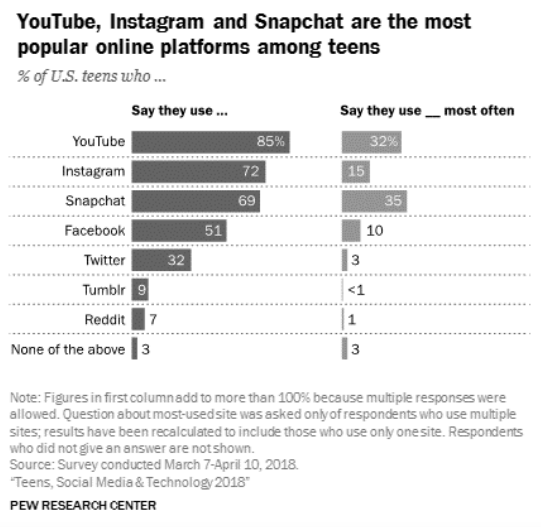

Now that you have data, it’s time to think about analyzing and presenting that data. Luckily, the Pew Research Center conducted a similar study that can be used as an example. The Pew Research Center is a “nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping the world. It conducts public opinion polling, demographic research, media content analysis and other empirical social science research” (“About Pew Research Center”). The information provided below comes from their public dataset “Teens, Social Media, and Technology, 2018” (Anderson and Jiang). This example is used to show how you might analyze this type of data once collected and what that data might look like. “Teens, Social Media, and Technology 2018” reported responses to questions related to which online platforms teens use and which they use most often. In figure 1 below, Pew researchers show the final product of their analysis of the data:

Pew analyzed their data and organized the findings by percentages to show what they discovered. They had 743 teens who responded to these questions, so presenting their findings in percentages helps readers better “see” the data overall (rather than saying YouTube = 631 and Instagram = 535). However, results can be represented in different ways. When the Pew researchers were deciding how to present their data, they could have reported the frequency, or the number of people who said they used YouTube, Instagram, and Snapchat.

In the scenario of polling 20 of your closest friends, you, too, would need to decide how to present your data: Facebook = 17, YouTube = 18, Twitter = 12, Instagram = 20, Snapchat = 15, Reddit = 8, and Tumblr = 3. In your case, you might want to present the frequency (number) of responses rather than the percentages of responses like Pew did. You could choose a bar graph like Pew or maybe a simple table to show your data.
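
If you wanted to try both presentations on the friends poll, a short Python sketch like the one below would print the frequency, the percentage, and a rough text bar for each platform. The counts are the ones from the scenario above; the function name `summarize` and the table layout are our own choices for illustration.

```python
# Responses from the hypothetical poll of 20 friends described above
poll = {
    "Facebook": 17, "YouTube": 18, "Twitter": 12, "Instagram": 20,
    "Snapchat": 15, "Reddit": 8, "Tumblr": 3,
}
n_respondents = 20

def summarize(counts, n):
    """Return (platform, frequency, percent) rows, most used platform first."""
    ordered = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    return [(platform, count, round(100 * count / n)) for platform, count in ordered]

rows = summarize(poll, n_respondents)
for platform, count, percent in rows:
    # One '#' per respondent gives a quick text-only bar graph
    print(f"{platform:<10} {count:>2}  {percent:>3}%  {'#' * count}")
```

With only 20 respondents, the frequencies are easy to read on their own; percentages become more helpful as the number of respondents grows, as in the Pew study.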

Looking again at the Pew data, researchers could use this data to generate further insights or questions about user preferences. For example, one could highlight the fact that 85% of respondents reported using YouTube, while only 7% reported using Reddit. Why is that? What conclusions might you be able to make based on these data? Does the data make you wonder if any additional questions might be explored? If you want to learn more about your respondents’ opinions or preferences, you might need to ask open-ended questions.

Data from Open-Ended Methods

Whereas closed-ended questions limit how respondents might answer, open-ended questions do not limit respondents’ answers and allow them to answer more freely. An example of an open-ended question, to build off the question above, could be “Why do you use social media? Explain.” This type of question gives respondents more space to fully explain their responses. Open-ended questions can make the data varied because each respondent may answer differently. These questions, which can provide fruitful responses, can also mean unexpected responses or responses that don’t help to answer the overall research question, which can sometimes make data analysis challenging.

In that same Pew Research Center data, respondents were likely limited in how they were able to answer by selecting social media platforms from a list. Pew also shares selected data (Appendix A), and based on these data, it can be assumed they also asked open-ended questions, something about the positive or negative effects of social media platforms. Because their research method included both closed-ended questions about which platforms teens use as well as open-ended questions that invited their thoughts about social media, Pew researchers were able to learn more about these participants’ thoughts and perceptions. To give us, the readers, a clearer idea of how they justified their presentation of the data, Pew offers 15 sample excerpts from those open-ended questions. They explain that these excerpts are what the researchers believe are representative of the larger data set. We explain below how we might analyze those excerpts.

Analyzing Open-Ended Data

As Driscoll reminds us, ethical considerations impact all stages of the research process, and researchers should act ethically throughout the entire research process. You already know a little something about research ethics. For example, you know that ethical writers cite sources used in research papers by giving credit to the person who created that information. When creating primary sources, you have a few different ethical considerations for analyzing data, which will be discussed below.

To demonstrate how to analyze data from open-ended methods, we explain how we (Melody and Lindsay) analyzed the 15 excerpts from the Pew data using open coding. Open coding means analyzing the data without any predetermined categories or themes; researchers are just seeing what emerges or seems significant (Charmaz). Creswell suggests four specific steps when coding qualitative data, though he also stresses that these steps are iterative, meaning that researchers may need to revisit a step anywhere throughout the process. We use these four steps to explain our analysis process, including how we ethically coded the data, interpreted what the coding process revealed, and worked together to identify and explain categories we saw in the data.

Step 1: Organizing and Preparing the Data

The first part of the analysis stage is organizing the data before examining it. When organizing data, researchers must be careful to work with primary data ethically because that data often represents actual people’s information and opinions. Therefore, researchers need to carefully organize the data in such a way as to not identify their participants or reveal who they are. This is a key component of The Belmont Report, guidelines published in 1979 meant to guide researchers and help protect participants. Using pseudonyms or assigning numbers or codes (in place of names) to the data is a recommended ethical step to maintain participants’ confidentiality in a study. Anonymizing data, or removing names, has the additional effect of reducing researcher bias, which can occur when researchers are so familiar with their own data and participants that they may begin to think they already know the answers or see connections prior to analysis (Driscoll). By assigning pseudonyms, researchers can also take a more objective look at each participant’s answers without being persuaded by participant identity.
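As a minimal sketch of the "assigning numbers or codes (in place of names)" step, the Python below swaps each name for a stable participant code. The names, answers, and the `pseudonymize` helper are all invented for illustration and are not part of the Pew data or the authors' process.

```python
# Hypothetical raw responses; names and answers are invented for illustration.
raw_responses = [
    {"name": "Jordan", "answer": "It helps me keep in touch with friends."},
    {"name": "Riley", "answer": "It is a distraction from homework."},
    {"name": "Jordan", "answer": "I can talk to family far away."},
]


def pseudonymize(responses, prefix="P"):
    """Replace each name with a stable code such as P01, P02, ..."""
    codes = {}    # maps real name -> participant code
    cleaned = []  # responses with the name removed
    for response in responses:
        name = response["name"]
        if name not in codes:
            codes[name] = f"{prefix}{len(codes) + 1:02d}"
        cleaned.append({"participant": codes[name], "answer": response["answer"]})
    return cleaned, codes


anonymized, key = pseudonymize(raw_responses)
for row in anonymized:
    print(row["participant"], "-", row["answer"])
```

The same person always receives the same code, so their answers can still be read together. The `key` dictionary links codes back to names, so it would need to be stored separately from the data that is analyzed and shared.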

The first part of coding is to make notations while reading through the data (Merriam and Tisdell). At this point, researchers are open to many possibilities regarding their data. This is also where researchers begin to construct categories. Offering a simple example to illustrate this decision-making process, Merriam and Tisdell ask us to imagine sorting and categorizing two hundred grocery store items (204). Some items could be sorted into more than one category; for example, ice cream could be categorized as “frozen” or as “dessert.” How you decide to sort that item depends on your research question and what you want to learn.

For this step, we, Melody and Lindsay, each created a separate document that included the 15 excerpts. Melody created a table for the quotes, leaving a column for her coding notes, and Lindsay added spaces between the excerpts for her notes. For our practice analysis, we analyzed the data independently, and then shared what we did to compare, verify, and refine our analysis. This brings a second, objective view to the analysis, reduces the effect of researcher bias, and ensures that your analysis can be verified and supported by the data. To support your analysis, you need to demonstrate how you developed the opinions and conclusions you have about your data. After all, when researchers share their analyses, readers often won’t see all of the raw data, so they need to be able to trust the analysis process.

Step 2: Reading through All the Data

Creswell suggests getting a general sense of the data to understand its overall meaning. As you start reading through your data, you might begin to recognize trends, patterns, or recurring features that give you ideas about how to both analyze and later present the data. When we read through the interview excerpts of these 15 participants’ opinions of social media, we both realized that there were two major types of comments: positive and negative. This might be similar to categorizing the items in the grocery store (mentioned above) into fresh/frozen foods and non-perishable items.

To better organize the data for further analysis, Melody marked each positive comment with a plus sign and each negative comment with a minus sign. Lindsay color-coded the comments (red for negative, indicated by boldface type below; green for positive, indicated by grey type below) and then organized them on the page by type. This approach is in line with Merriam and Tisdell’s explanation of coding: “assigning some sort of shorthand designation to various aspects of your data so that you can easily retrieve specific pieces of the data. The designations can be single words, letters, numbers, phrases, colors, or combinations of these” (199). While we took different approaches, as shown in the two sections below, both allowed us to visually recognize the major sections of the data:

Lindsay’s Coding Round 1, which shows her color coding indicated by boldface type

“[Social media] allows us to communicate freely and see what everyone else is doing. [It] gives us a voice that can reach many people.” (Boy, age 15)

“It makes it harder for people to socialize in real life, because they become accustomed to not interacting with people in person.” (Girl, age 15)

“[Teens] would rather go scrolling on their phones instead of doing their homework, and it’s so easy to do so. It’s just a huge distraction.” (Boy, age 17)

“It enables people to connect with friends easily and be able to make new friends as well.” (Boy, age 15)

“I think social media have a positive effect because it lets you talk to family members far away.” (Girl, age 14)

“Because teens are killing people all because of the things they see on social media or because of the things that happened on social media.” (Girl, age 14)

“We can connect easier with people from different places and we are more likely to ask for help through social media which can save people.” (Girl, age 15)

Melody’s Coding Round 1, showing her use of plus and minus signs to classify the comments as positive or negative, respectively

+ “[Social media] allows us to communicate freely and see what everyone else is doing. [It] gives us a voice that can reach many people.” (Boy, age 15)

– “It makes it harder for people to socialize in real life, because they become accustomed to not interacting with people in person.” (Girl, age 15)

– “[Teens] would rather go scrolling on their phones instead of doing their homework, and it’s so easy to do so. It’s just a huge distraction.” (Boy, age 17)

+ “It enables people to connect with friends easily and be able to make new friends as well.” (Boy, age 15)

+ “I think social media have a positive effect because it lets you talk to family members far away.” (Girl, age 14)

– “Because teens are killing people all because of the things they see on social media or because of the things that happened on social media.” (Girl, age 14)

+ “We can connect easier with people from different places and we are more likely to ask for help through social media which can save people.” (Girl, age 15)

Step 3: Doing Detailed Coding Analysis of the Data

It’s important to mention that Creswell devotes pages of description to coding data because there are various ways of approaching detailed analysis. To code our data, we added a descriptive word or phrase that “symbolically assigns a summative, salient, essence-capturing, and/or evocative attribute” to a portion of data (Saldaña 3). From the grocery store example above, that could mean looking at the category of frozen foods and dividing them into entrees, side dishes, desserts, appetizers, etc. We both coded for topics or what the teens were generally talking about in their responses. For example, one excerpt reads “Social media allows us to communicate freely and see what everyone else is doing. It gives us a voice that can reach many people.” To code that piece of data, researchers might assign words like communication, voice, or connection to explain what the data is describing.

In this way, we created the codes from what the data said, describing what we read in those excerpts. Notice in the section below that, even though we coded independently, we described these pieces of data in similar ways using bolded keywords:

Melody’s Coding Round 2, with key words added to summarize the meanings of the different quotes

– “Gives people a bigger audience to speak and teach hate and belittle each other.” (Boy, age 13) bullying

– “It provides a fake image of someone’s life. It sometimes makes me feel that their life is perfect when it is not.” (Girl, age 15) fake

+ “Because a lot of things created or made can spread joy.” (Boy, age 17) reaching people

+ “I feel that social media can make people my age feel less lonely or alone. It creates a space where you can interact with people.” (Girl, age 15) connection

+ “[Social media] allows us to communicate freely and see what everyone else is doing. [It] gives us a voice that can reach many people.” (Boy, age 15) reaching people

Lindsay’s Coding Round 2, with key words added in capital letters to summarize the meanings of the quotations

“Gives people a bigger audience to speak and teach hate and belittle each other.” (Boy, age 13) OPPORTUNITIES TO COMMUNICATE NEGATIVELY/MORE EASILY

“It provides a fake image of someone’s life. It sometimes makes me feel that their life is perfect when it is not.” (Girl, age 15) FAKE, NOT REALITY

“Because a lot of things created or made can spread joy.” (Boy, age 17) SPREAD JOY

“I feel that social media can make people my age feel less lonely or alone. It creates a space where you can interact with people.” (Girl, age 15) INTERACTION, LESS LONELY

“[Social media] allows us to communicate freely and see what everyone else is doing. [It] gives us a voice that can reach many people.” (Boy, age 15) COMMUNICATE, VOICE

Though there are methods that allow for researchers to use predetermined codes (like from previous studies), “the traditional approach…is to allow the codes to emerge during the data analysis” (Creswell 187).

Step 4: Using the Codes to Create a Description Using Categories, Themes, Settings, or People

Our individual coding happened in phases, as we developed keywords and descriptions that could then be defined and relabeled into concise coding categories (Saldaña 11). We shared our work from Steps 1-3 to further define categories and determine which themes were most prominent in the data. A few times, we interpreted something differently and had to discuss and come to an agreement about which category was best.
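The relabeling of keywords into concise categories can be pictured with a short Python sketch. The keywords come from Melody's second coding round, but the keyword-to-category mapping and the `group_by_category` helper are assumptions made for illustration, not the mapping the authors actually agreed on. Any keyword without a category is set aside for discussion rather than forced into one.

```python
from collections import defaultdict

# Keywords from Melody's second coding round, paired with the speaker label.
coded_excerpts = [
    ("Boy, age 13", "bullying"),
    ("Girl, age 15", "fake"),
    ("Boy, age 17", "reaching people"),
    ("Girl, age 15", "connection"),
    ("Boy, age 15", "reaching people"),
]

# Assumed mapping from keyword to category, for illustration only.
keyword_to_category = {
    "bullying": "Bullying",
    "reaching people": "Expression",
    "connection": "Interaction",
}


def group_by_category(excerpts, mapping):
    """Group coded excerpts by category; unmapped keywords are flagged."""
    groups = defaultdict(list)
    for speaker, keyword in excerpts:
        category = mapping.get(keyword, "Needs discussion")
        groups[category].append((speaker, keyword))
    return dict(groups)


categories = group_by_category(coded_excerpts, keyword_to_category)
for category, items in categories.items():
    print(category, len(items))
```

Keeping a "Needs discussion" group mirrors the point that coders sometimes have to talk through an excerpt before agreeing on where it belongs.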

In our process, one excerpt comment was interpreted as negative by one of us and positive by the other. Together we discussed and confirmed which comments were positive or negative and identified themes that seemed to appear more than once, such as positive feelings towards the interactional element of social media use and the negative impact of social media use on social skills. Comparing two coders’ results allows for qualitative validity, which means “the researcher checks for the accuracy of the findings” (Creswell 190). This could also be referred to as intercoder reliability (Lavrakas). For intercoder reliability, researchers sometimes calculate how often they agree as a percentage. Like many other aspects of primary research, there is no consensus on how best to establish or calculate intercoder reliability, but generally speaking, it’s a good idea to have someone else check your work and ensure you are ethically analyzing and reporting your data.
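One simple way to express agreement as a percentage is to count the excerpts on which both coders chose the same label. In the sketch below, `coder_a` uses the plus and minus labels shown in Melody's first round, while `coder_b` is a hypothetical second coder who disagrees on one excerpt. The function name is also an assumption.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of excerpts on which two coders assigned the same label."""
    if len(coder_a) != len(coder_b):
        raise ValueError("Both coders must code the same excerpts.")
    if not coder_a:
        raise ValueError("No codes to compare.")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)


coder_a = ["+", "-", "-", "+", "+", "-", "+"]
coder_b = ["+", "-", "-", "+", "+", "+", "+"]  # hypothetical second coder

agreement = percent_agreement(coder_a, coder_b)
print(f"{agreement:.1f}% agreement")
```

Simple percent agreement does not account for agreement that would happen by chance. Statistics such as Cohen's kappa adjust for that, which is one reason there is no single agreed-upon calculation.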

Interpreting Coded Data

Once we agreed on the common categories and themes in this dataset, we worked together on the final analysis phase of interpreting the data, asking “what does it mean?” Data interpretation includes “trying to give sense to the data by creatively producing insights about it” (Gibson and Brown 6). Though we acknowledge that this sample of only 15 excerpts is small, and it might be difficult to make claims about teens and social media from just this data, we can share a few insights we had as part of this practice activity.

Overall, we could report the frequency counts and percentages that came from our analysis. For example, we counted 8 positive comments and 7 negative comments about social media. Presented differently, those 8 positive comments represent 53% of the responses, so slightly over half. If we focus on just the positive comments, we are able to identify two common themes among those 8 responses: Interaction and Expression. People who felt positively about social media use identified the ability to connect with people and voice their feelings and opinions as the main reasons. When analyzing only the 7 negative responses, we identified themes of Bullying and Social Skills as recurring reasons people are critical of social media use among teens. Identifying these topics and themes in the data allows us to begin thinking about what we can learn and share with others about this data.
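The frequency counts and percentages above can be reproduced with a few lines of Python. This is only a sketch: the list of labels is rebuilt from the totals reported in the paragraph (8 positive, 7 negative), and `frequency_table` is an illustrative helper name.

```python
from collections import Counter


def frequency_table(labels):
    """Return (label, count, percent) rows, most frequent label first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return [(label, n, 100 * n / total) for label, n in counts.most_common()]


# Labels rebuilt from the totals reported above: 8 positive, 7 negative.
labels = ["positive"] * 8 + ["negative"] * 7

table = frequency_table(labels)
for label, n, pct in table:
    print(f"{label}: {n} of {len(labels)} ({pct:.0f}%)")
```

Printing the raw count next to each percentage keeps the small sample size visible to readers.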

How we represent what we have learned from our data can demonstrate our ethical approach to data analysis. In short, we only want to make claims we can support, and we want to make those claims ethically, being careful to not exaggerate or be misleading.

To better understand a few common ethical dilemmas regarding the presentation of data, think about this example: A few years ago, Lindsay taught a class that had only four students. On her course evaluations, those four students rated the class experience as “Excellent.” If she reports that 100% of her students answered “Excellent,” is she being truthful? Yes. Do you see any potential ethical considerations here? If she said that 4/4 gave that rating, does that change how her data might be perceived by others? While Lindsay could show the raw data to support her claims, important contextual information could be missing if she just says 100%. Perhaps others would assume this was a regular class of 20-30 students, which would make that claim seem more meaningful and impressive than it might be.

Another word for this is cherry picking. Cherry picking refers to making conclusions based on thin (or not enough) data or focusing on data that’s not necessarily representative of the larger dataset (Morse). For example, if Lindsay reported the comment that one of her students made about this being the “best class ever,” she would be telling the truth but really only focusing on the reported opinion of 25% of the class (1 out of 4). Ideally, researchers want to make claims about the data based on ideas that are prominent, trending, or repeated. Less prominent pieces of data, like the opinion of that one student, are known as outliers, or data that seem to “be atypical of the rest of the dataset” (Mackey and Gass 257). Focusing on those less-representative portions might misrepresent or overshadow the aspects of the data that are prominent or meaningful, which could create ethical problems for your study. With these ethical considerations in mind, the last step of conducting primary research would be to write about the analysis and interpretation to share your process with others.
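The course evaluation example suggests one habit that is easy to automate: always reporting the count and the total alongside the percentage. The sketch below does that and adds a note when the sample is small. The cutoff of 10 is an arbitrary assumption for illustration, not a recommended standard.

```python
def describe_share(count, total, small_sample=10):
    """Format a share as 'count of total (percent)' and flag small samples."""
    if total <= 0:
        raise ValueError("total must be positive")
    text = f"{count} of {total} ({count / total:.0%})"
    if total < small_sample:  # arbitrary cutoff, chosen only for this sketch
        text += f" [small sample, n={total}]"
    return text


print(describe_share(4, 4))  # the four "Excellent" ratings
print(describe_share(1, 4))  # the single "best class ever" comment
```

Reported this way, "100%" and "25%" both arrive with the context a reader needs to judge how much weight the claim can bear.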

This chapter has introduced you to ethically analyzing data within the primary research tradition by focusing on closed-ended and open-ended data. We’ve provided you with examples of how data might be analyzed, interpreted, and presented to help you understand the process of making sense of your data. This is just one way to approach data analysis, but no matter your research method, having a systematic approach is recommended. Data analysis is a key component in the overall primary research process, and we hope that you are now excited and curious to participate in a primary research project.

Works Cited

“About Pew Research Center.” Pew Research Center, 2020. www.pewresearch.org/about/. Accessed 28 Dec. 2020.

Anderson, Monica, and Jingjing Jiang. “Teens, Social Media & Technology 2018.” Pew Research Center, May 2018, www.pewresearch.org/internet/2018/05/31/teens-social-media-technology-2018/.

The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research, Office for Human Research Protections, www.hhs.gov/ohrp/regulations-and-policy/belmont-report/read-the-belmont-report/index.html. 18 Apr. 1979.

Charmaz, Kathy. “Grounded Theory.” Approaches to Qualitative Research: A Reader on Theory and Practice, edited by Sharlene Nagy Hesse-Biber and Patricia Leavy, Oxford UP, 2004, pp. 496-521.

Corpus of Contemporary American English (COCA). (n.d.). Retrieved April 11, 2021, from https://www.english-corpora.org/coca/

Creswell, John W. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches, 3rd edition, Sage, 2009.

Data.gov. (2020). Retrieved April 11, 2021, from https://www.data.gov/

Driscoll, Dana Lynn. “Introduction to Primary Research: Observations, Surveys, and Interviews.” Writing Spaces: Readings on Writing, Volume 2, Parlor Press, 2011, pp. 153-174.

Explore Census Data. (n.d.). United States Census Bureau. Retrieved April 11, 2021, from https://data.census.gov/cedsci/

Gibson, William J., and Andrew Brown. Working with Qualitative Data. London, Sage, 2009.

Google Trends. (n.d.). Retrieved April 11, 2021, from https://trends.google.com/trends/explore

Guest, Greg, et al. Collecting Qualitative Data: A Field Manual for Applied Research. Sage, 2013.

HealthData.gov. (n.d.). Retrieved April 11, 2021, from https://healthdata.gov/

Lavrakas, Paul J. Encyclopedia of Survey Research Methods. Sage, 2008.

Mackey, Allison, and Sue M. Gass. Second Language Research: Methodology and Design. Lawrence Erlbaum Associates, 2005.

Merriam, Sharan B., and Elizabeth J. Tisdell. Qualitative Research: A Guide to Design and Implementation, John Wiley & Sons, Incorporated, 2015. ProQuest Ebook Central, https://ebookcentral.proquest.com/lib/unco/detail.action?docID=2089475.

Michigan Corpus of Academic Spoken English. (n.d.). Retrieved April 11, 2021, from https://quod.lib.umich.edu/cgi/c/corpus/corpus?c=micase;page=simple

Morse, Janice M. “‘Cherry Picking’: Writing from Thin Data.” Qualitative Health Research, vol. 20, no. 1, 2009, p. 3.

Pew Research Center. (2021). Retrieved April 11, 2021, from https://www.pewresearch.org/

Saldaña, Johnny. The Coding Manual for Qualitative Researchers, 2nd edition, Sage, 2013.

Scott, Greg, and Roberta Garner. Doing Qualitative Research: Designs, Methods, and Techniques, 1st edition, Pearson, 2012.

Sheard, Judithe. “Quantitative Data Analysis.” Research Methods: Information, Systems, and Contexts, edited by Kirsty Williamson and Graeme Johanson, Elsevier, 2018, pp. 429-452.

Teens and Social Media, Google Trends, trends.google.com/trends/explore?-date=all&q=teens%20and%20social%20media. Accessed 15 Jul. 2020.

“What is Primary Research and How Do I Get Started?” The Writing Lab and OWL at Purdue and Purdue U, 2020. owl.purdue.edu/owl. Accessed 21 Dec. 2020.

Zhao, Alice. “How Text Messages Change from Dating to Marriage.” Huffington Post, 21 Oct. 2014, www.huffpost.com.

Appendix A

“My mom had to get a ride to the library to get what I have in my hand all the time. She reminds me of that a lot.” (Girl, age 14)

“Gives people a bigger audience to speak and teach hate and belittle each other.” (Boy, age 13)

“It provides a fake image of someone’s life. It sometimes makes me feel that their life is perfect when it is not.” (Girl, age 15)

“Because a lot of things created or made can spread joy.” (Boy, age 17)

“I feel that social media can make people my age feel less lonely or alone. It creates a space where you can interact with people.” (Girl, age 15)

“[Social media] allows us to communicate freely and see what everyone else is doing. [It] gives us a voice that can reach many people.” (Boy, age 15)

“It makes it harder for people to socialize in real life, because they become accustomed to not interacting with people in person.” (Girl, age 15)

“[Teens] would rather go scrolling on their phones instead of doing their homework, and it’s so easy to do so. It’s just a huge distraction.” (Boy, age 17)

“It enables people to connect with friends easily and be able to make new friends as well.” (Boy, age 15)

“I think social media have a positive effect because it lets you talk to family members far away.” (Girl, age 14)

“Because teens are killing people all because of the things they see on social media or because of the things that happened on social media.” (Girl, age 14)

“We can connect easier with people from different places and we are more likely to ask for help through social media which can save people.” (Girl, age 15)

“It has given many kids my age an outlet to express their opinions and emotions, and connect with people who feel the same way.” (Girl, age 15)

“People can say whatever they want with anonymity and I think that has a negative impact.” (Boy, age 15)

“It has a negative impact on social (in-person) interactions.” (Boy, age 17)

Teacher Resources for How to Analyze Data in a Primary Research Study

Overview and teaching strategies.

This chapter is intended as an overview of analyzing qualitative research data and was written as a follow-up piece to Dana Lynn Driscoll’s “Introduction to Primary Research: Observations, Surveys, and Interviews” in Volume 2 of this collection. This chapter could work well for leading students through their own data analysis of a primary research project or for introducing students to the idea of primary research by using outside data sources, those in the chapter and provided in the activities below, or data you have access to.

In our experience, students usually have limited exposure to primary research methods outside of conducting a small survey for other courses, like sociology. We have found that few of our students have been formally introduced to primary research and analysis. Therefore, this chapter strives to briefly introduce students to primary research while focusing on analysis. We’ve presented analysis by categorizing data as open-ended and closed-ended without getting into too many details about qualitative versus quantitative. Our students tend to produce data collection tools with a mix of these types of questions, so we feel it’s important to cover the analysis of both.

In this chapter, we bring students real examples of primary data and lead them through analysis by showing examples. Any of these exercises and the activities below may be easily supplemented with additional outside data. One way that teachers can bring in outside data is through the use of public datasets.

Public Data Sets

There are many public data sets that teachers can use to acquaint their students with analyzing data. Be aware that some of these datasets are for experienced researchers and provide the data in CSV files or include metadata, all of which is probably too advanced for most of our students. But if you are comfortable converting this data, it could be valuable for a data analysis activity.

- In the chapter, we pulled from Pew Research, and their website contains many free and downloadable data sets (Pew Research Center).

- The site Data.gov provides searchable datasets, but you can also explore their data by clicking on “data” and seeing what kinds of reports they offer.

- The U.S. Census Bureau offers some datasets as well (Explore Census Data). Much of this data is presented in reports, but teachers could pull information from reports and have students analyze the data and compare their results to those in the report, much like we did with the Pew Research data in the chapter.

- Similarly, HealthData.gov offers research-based reports packed with data for students to analyze.

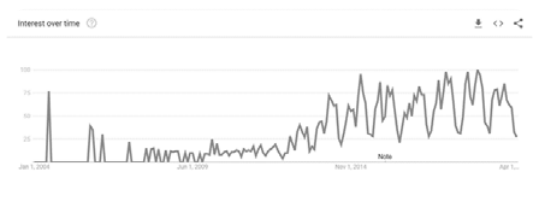

- In one of the activities below, we used Google Trends to look at searches over a period of time. There are some interesting data and visuals provided on the homepage to help students get started.

- If you’re looking for something a bit more academic, the Michigan Corpus of Academic Spoken English is a great database of transcripts from academic interactions and situations.

- Similarly, the Corpus of Contemporary American English allows users to search for words or word strings to see their frequency and in which genre and when these occur.
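For teachers who are comfortable working with the CSV files mentioned above, a small script can turn one column into frequency counts that students can then interpret. The sketch below uses only Python's standard library. The column names and rows are invented stand-ins, not data from any of the sites listed.

```python
import csv
import io
from collections import Counter

# Stand-in for a downloaded CSV file; the columns and rows are invented.
sample_csv = """respondent_id,platform
1,YouTube
2,Instagram
3,YouTube
4,Snapchat
"""


def count_column(csv_text, column):
    """Count how often each value appears in one column of CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)


platform_counts = count_column(sample_csv, "platform")
for platform, n in platform_counts.most_common():
    print(platform, n)
```

With a real download, the text would come from a file opened with `open(path, newline="")` instead of the inline string.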

Before moving on to student activities, we’d like to offer one additional suggestion for teachers to consider.

Class Google Form

One thing that Melody does in almost all of her research-based writing courses is ask students to complete a Google Form at the beginning of the semester. Sometimes, these forms are about their experiences with research. Other times, they revolve around a class topic (recently, she’s been interested in Generation Z or iGeneration and has asked students questions related to that). Then, when it’s time to start thinking about primary research, she uses that Google Form to help students understand more about the primary research process. Here are some ways that teachers can use the data gathered from a Google Form given to students.

- Ask students to look at the questions asked on the survey and deduce the overall research question.

- Ask students to look at the types of questions asked (open- and closed-ended) and consider why they were constructed that way.

- Ask students to evaluate the wording of the questions asked.

- Ask students to examine the results of a few (or more) of the questions on the survey. This can be done in groups with each group looking at 1-3 questions, depending on the size of your Google Form.

- Ask students to think about how they might present that data in visual form. Yes, Google provides some visuals, but you can give them the raw data and see what they come up with.

- Ask students to come up with 1-3 major takeaways based on all the data.

This exercise allows students to work with real data and data that’s directly related to them and their classmates. It’s also completely within ethical boundaries because it’s data collected in the classroom, for educational purposes, and it stays within the classroom.

Below we offer some guiding questions to help move students through the chapter and the activities as well as some additional activities.

Discussion Questions

- In the opening of this chapter, we introduced you to primary research, or “any type of research you collect yourself” (“What is Primary Research”). Have you completed primary research before? How did you decide on your research method, based on your research question? If you have not worked on primary research before, brainstorm a potential research question for a topic you want to know more about. Discuss what research method you might use, including closed- or open-ended methods and why.

- Looking at the chart from the Pew Research dataset, “Teens, Social Media, and Technology 2018,” would you agree that the distributions among online platforms remain similar, or have trends changed?

- What do you make of the “none of the above” category on the Pew table? Do you think teens are using online platforms that aren’t listed, or do you think those respondents don’t use any online platforms?

- When analyzing data from open-ended questions, which step seems most challenging to you? Explain.

Activity #1: TurnItIn and Infographics

Infographics can be a great way to help you see and understand data, while also giving you a way to think about presenting your own data. Multiple infographics are available on TurnItIn, downloadable for free, that provide information about plagiarism.

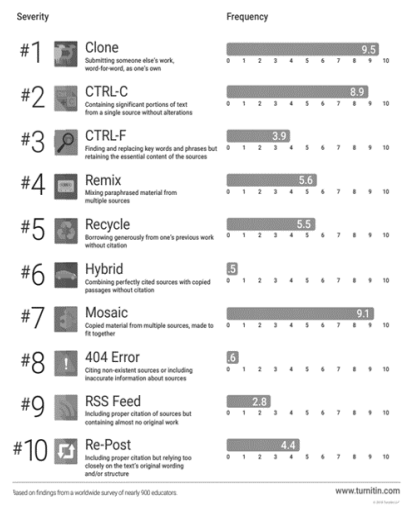

Figure 3, titled “The Plagiarism Spectrum,” provides you with the “severity” and “frequency” based on survey findings of nearly 900 high school and college instructors from around the world. TurnItIn encourages educators to print this infographic and hang it in their classrooms.

This infographic provides some great data analysis examples: specific categories with definitions (and visual representation of their categories), frequency counts with bar graphs, and color gradient bars to show higher vs. lower numbers.

- Write a summary of how this infographic presents data.

- How do you think they analyzed the data based on this visual?

Activity #2: How Text Messages Change from Dating to Marriage

In Alice Zhao’s Huffington Post piece, she analyzes text messages that she collected during her relationship with her boyfriend, turned fiancé, turned husband to answer the question of how text messages (or communication) change over the course of a relationship. While Zhao offers some insight into her data, she also provides readers with some really cool graphics that you can use to practice your analysis skills.

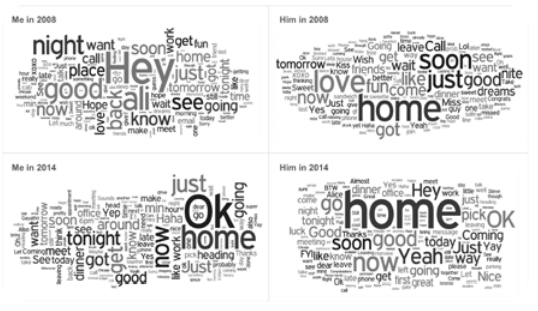

These first graphics are word clouds. In figure 4, Zhao put her textual data into a program that creates these images based on the most frequently occurring words. Word clouds are another option for analyzing your data. If you have a lot of textual data and want to know what participants said the most, placing your data into a word cloud program is an easy way to “see” the data in a new way. This is usually one of the first steps of analysis, and additional analysis is almost always needed.

- What do you notice about the texts from 2008 to 2014?

- What do you notice between her texts (me) and his texts (him)?
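A word cloud is built on word frequencies, so the counting step behind it can be sketched in a few lines of Python. The messages and the tiny stop-word list below are invented for illustration. They are not Zhao's data, and this is not the program she used.

```python
import re
from collections import Counter

# A very small illustrative stop-word list; real lists are much longer.
STOP_WORDS = {"the", "a", "to", "and", "you", "i", "is", "it"}


def top_words(messages, n=5):
    """Return the n most frequent words across messages, ignoring stop words."""
    words = []
    for message in messages:
        # Lowercase the text and keep runs of letters and apostrophes.
        words.extend(re.findall(r"[a-z']+", message.lower()))
    counts = Counter(word for word in words if word not in STOP_WORDS)
    return counts.most_common(n)


# Invented messages, for illustration only.
messages = ["Hey, what's up?", "hey hey, dinner tonight?", "Ok, home soon", "ok"]
print(top_words(messages, 3))
```

A word cloud program then draws the most frequent words in the largest type. As the chapter notes, counts like these are a starting point, and additional analysis is almost always needed.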

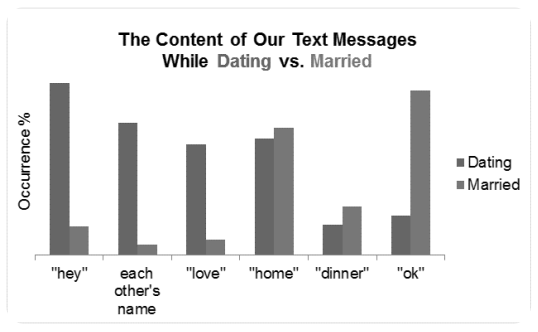

Zhao also provided this graphic (figure 5), a comparative look at what she saw as the most frequently occurring words from the word clouds. This could be another step in your data analysis procedure: zooming in on a few key aspects and digging a bit deeper.

- What do you make of this data? Why might the word “hey” occur more frequently in the dating time frame and the word “ok” occur more frequently in the married time frame?

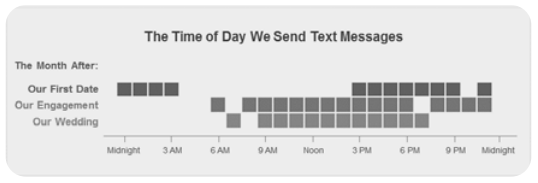

As part of her research, Zhao also looked at the time of day text messages were sent, shown below in figure 6:

Here, Zhao looked at messages sent a month after their first date, a month after their engagement, and a month after their wedding.

- She offers her own interpretation of figure 6 in her piece, but what do you think of this?

- Also make note of this graphic. It’s a great way to look at the data another way. If your data may be time sensitive, this type of graphic may help you better analyze and understand your data.
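For time-sensitive data like this, one possible first step is to count how many messages fall in each hour of the day. The sketch below assumes timestamps stored as text in a `YYYY-MM-DD HH:MM` format. The timestamps are invented and the format is an assumption, since the chapter does not describe how Zhao stored her data.

```python
from collections import Counter
from datetime import datetime

# Invented timestamps in an assumed "YYYY-MM-DD HH:MM" format.
timestamps = [
    "2008-10-03 09:15",
    "2008-10-03 21:40",
    "2008-10-04 21:05",
    "2008-10-05 13:30",
]


def messages_per_hour(stamps):
    """Count messages by the hour of day (0-23) in which they were sent."""
    hours = [datetime.strptime(stamp, "%Y-%m-%d %H:%M").hour for stamp in stamps]
    return Counter(hours)


by_hour = messages_per_hour(timestamps)
for hour in sorted(by_hour):
    # One '#' per message gives a rough text-only bar chart.
    print(f"{hour:02d}:00  {'#' * by_hour[hour]}")
```

Running the same count on messages from different periods would allow a side-by-side comparison similar in spirit to figure 6.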

This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND 4.0) and is subject to the Writing Spaces Terms of Use. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042, USA. To view the Writing Spaces Terms of Use, visit http://writingspaces.org/terms-of-use.

How to Analyze Data in a Primary Research Study Copyright © 2021 by Melody Denny and Lindsay Clark is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License , except where otherwise noted.


Open Access

Eleven quick tips for finding research data

Contributed equally to this work with: Kathleen Gregory, Siri Jodha Khalsa, William K. Michener, Fotis E. Psomopoulos, Anita de Waard, Mingfang Wu

Affiliation Data Archiving and Networked Services, Royal Netherlands Academy of Arts and Sciences, The Hague, Netherlands

Affiliation National Snow and Ice Data Centre, Cooperative Institute for Research in Environmental Sciences, University of Colorado, Boulder, Colorado, United States of America

Affiliation College of University Libraries & Learning Sciences, The University of New Mexico, Albuquerque, New Mexico, United States of America

Affiliation Institute of Applied Biosciences, Centre for Research and Technology Hellas, Thessaloniki, Greece

Affiliation Research Data Management Solutions, Elsevier, Jericho, Vermont, United States of America

Affiliation Australia National Data Service, Melbourne, Australia


Published: April 12, 2018

- https://doi.org/10.1371/journal.pcbi.1006038


Citation: Gregory K, Khalsa SJ, Michener WK, Psomopoulos FE, de Waard A, Wu M (2018) Eleven quick tips for finding research data. PLoS Comput Biol 14(4): e1006038. https://doi.org/10.1371/journal.pcbi.1006038

Editor: Francis Ouellette, Genome Quebec, CANADA

Copyright: © 2018 Gregory et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: William K. Michener was supported by NSF (#IIA-1301346 and #ACI-1430508). The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

This is a PLOS Computational Biology Education paper.

Introduction

Over the past decades, science has experienced rapid growth in the volume of data available for research—from a relative paucity of data in many areas to what has been recently described as a data deluge [ 1 ]. Data volumes have increased exponentially across all fields of science and human endeavour, including data from sky, earth, and ocean observatories; social media such as Facebook and Twitter; wearable health-monitoring devices; gene sequences and protein structures; and climate simulations [ 2 ]. This brings opportunities to enable more research, especially cross-disciplinary research that could not be done before. However, it also introduces challenges in managing, describing, and making data findable, accessible, interoperable, and reusable by researchers [ 3 ].

When this vast amount and variety of data is made available, finding relevant data to meet a research need is increasingly a challenge. In the past, when data were relatively sparse, researchers discovered existing data by searching literature, attending conferences, and asking colleagues. In today’s data-rich environment, with accompanying advances in computational and networking technologies, researchers increasingly conduct web searches to find research data. The success of such searches varies greatly and depends to a large degree on the expertise of the person looking for data, the tools used, and, partially, on luck. This article offers the following 11 quick tips that researchers can follow to more effectively and precisely discover data that meet their specific needs.

- Tip 1: Think about the data you need and why you need them.

- Tip 2: Select the most appropriate resource.

- Tip 3: Construct your query strategically.

- Tip 4: Make the repository work for you.

- Tip 5: Refine your search.

- Tip 6: Assess data relevance and fitness for use.

- Tip 7: Save your search and data-source details.

- Tip 8: Look for data services, not just data.

- Tip 9: Monitor the latest data.

- Tip 10: Treat sensitive data responsibly.

- Tip 11: Give back (cite and share data).

Tip 1: Think about the data you need and why you need them

Before embarking on a search for data, consider how you will use the desired data in the context of your overall research question. Are you seeking data for comparison or validation, as the basis for a new study, or for another reason? List the characteristics that the data must have in order to fulfil your identified purpose(s), including requirements such as data format, spatial or temporal coverage, availability, and author or research group. In many cases, your initial data requirements and the identified constraints will change as you progress with the search. Pausing to first analyse what you need and why you need it can lead to a more focused search, save searching time, and facilitate the actions described in Tips 2–6.

Tip 2: Select the most appropriate resource

Directories of research-data repositories, such as re3data.org ( http://www.re3data.org ) and FAIRsharing ( https://fairsharing.org ), web search engines, and colleagues can be consulted to discover domain-specific portals in your discipline. Subject domain is but one criterion to consider when selecting an appropriate data repository. Various certification processes have also been implemented to help develop trustworthiness in repositories and to make their data-governing policies more transparent. For example, repositories earning the CoreTrustSeal ( https://www.coretrustseal.org/about ) Trustworthy Data Repository certification must meet 16 requirements measuring the accessibility, usability, reliability, and long-term stability of their data. Knowing what standards and criteria a repository applies to data and metadata provides more confidence in understanding and reusing the data from that repository.

Domain-specific portals provide ways to quickly narrow your search, offering interfaces and filters tailored to match the data and needs of specific disciplinary domains. Map interfaces for data collected from specific locations (see the National Water Information System, https://maps.waterdata.usgs.gov/mapper/index.html ) and specific search fields and tools (see the National Centre for Biotechnology Information’s complement of databases, https://www.ncbi.nlm.nih.gov/guide/all/ ) facilitate discovering disciplinary data. Other domain-focused repositories, such as the National Snow and Ice Data Centre (NSIDC, http://nsidc.org/data/search/ ), collect and apply knowledge about user requirements and incorporate domain semantics into their search engines to help data seekers quickly find appropriate data. Data aggregators, including DataONE ( https://www.dataone.org ) for environmental and earth observation data, VertNet ( http://vertnet.org ) and Global Biodiversity Information Facility (GBIF, https://www.gbif.org ) for museum specimen and biodiversity data, or DataMed ( https://datamed.org ) for biomedical datasets, enable searching multiple data repositories or collections through a single search interface. Some portals may not provide data-search functionality but instead provide a catalogue of data resources. A notable example is the AgBioData ( https://www.agbiodata.org/databases ) portal, which lists links to 12 agricultural biological databases dedicated to specific species (e.g., cotton, grain, or hardwood), where you can directly search for data.

The accessibility of data resources is another important consideration. University librarians can provide advice about particular subscription-based resources available at your institution. Research papers in your field can also point to available data repositories. In domains such as astronomy and genomics, for example, citations of datasets within journal articles are commonplace. These references usually include dataset access information that can be used to locate datasets of interest or to point toward data repositories favoured within a discipline.

Tip 3: Construct your query strategically

Describing your desired data effectively is key to communicating with the search system. Your description will determine if relevant data are retrieved and may inform the order of the hits in the results list. Help pages provide tips on how to construct basic and advanced searches within particular repositories (see for example Research Data Australia https://researchdata.ands.org.au —click on Advanced Search → Help). Note that not all repositories operate in the same manner. Some portals, such as DataONE ( https://www.dataone.org ), use semantic technologies to automatically expand the keywords entered in the search box to include synonyms. If a portal does not use automatic expansion, you may need to manually add various synonyms to your search query (e.g., in addition to ‘demography’ as a search term, one might also add ‘population density’, ‘population growth’, ‘census’, or ‘anthropology’).

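Search operators can narrow a query further. On web search engines that support the site: operator, for example, the query sea level site:.edu restricts results to pages hosted on .edu domains.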
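The manual synonym expansion described in this tip can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name build_query is hypothetical, and the OR keyword, quoted phrases, and site: filter follow common web-search syntax that a given repository may or may not support, so check the repository's help pages before relying on it.

```python
def build_query(synonyms, site=None):
    """Join search terms with OR, quoting multi-word phrases.

    Assumes a search box that understands OR, "quoted phrases" and
    (optionally) a site: filter; syntax differs between repositories.
    """
    # Quote any term that contains a space so it is matched as a phrase.
    terms = [f'"{term}"' if " " in term else term for term in synonyms]
    query = " OR ".join(terms)
    # Group alternatives in parentheses when there is more than one.
    if len(terms) > 1:
        query = f"({query})"
    # Optionally restrict results to one domain, e.g. ".edu".
    if site:
        query = f"{query} site:{site}"
    return query


demography_terms = ["demography", "population density", "population growth", "census"]
print(build_query(demography_terms))
print(build_query(["sea level"], site=".edu"))
```

Keeping the synonym list in one place also makes it easy to rerun the same expanded query in several repositories and compare the results.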

Tip 4: Make the repository work for you

Repository developers invest significant time and energy organizing data in ways to make them more discoverable; use their work to your advantage. Familiarize yourself with the controlled vocabularies, subject categories, and search fields used in particular repositories. Searching for and successfully locating data is dependent on the information about the data, termed metadata, that are contained in these fields; this is particularly true for numeric or nontextual data. Browsing subject categories can also help to gauge the appropriateness of a resource, home in on an area of interest, or find related data that have been classified in the same category.

Researchers can also register or create profiles with many data repositories. By registering, you may be able to indicate your general research data interests, which can be used in subsequent searches, or to receive alerts about datasets that you have previously downloaded (see also Tip 7).

Tip 5: Refine your search

In many cases, your initial search may not retrieve relevant data or all of the data that you need. Based on the retrieved results, you may need to broaden or narrow your approach. Apart from rephrasing your search query and using search operators, as discussed in Tip 3, facets or filters specific to individual repositories can be used to narrow the scope of your results. Refinements such as data format, types of analysis, and data availability allow users to quickly find usable data.

Examining results that look interesting (for example, by clicking on links for ‘more information’) can signal to the search system the type of information that you find relevant. These results can then be linked to related ones (e.g., from the data provider, from different time series), and in subsequent searches, other results algorithmically determined to be related will be brought to the top of the results list.

Tip 6: Assess data relevance and fitness for use

Conduct a preliminary assessment of the retrieved data prior to investing time in subsequent data download, integration, and analytic and visualization efforts. A quick perusal of the metadata (text and/or images) can often enable you to verify that the data satisfy the initial requirements and constraints set forth in Tip 1 (e.g., spatial, temporal, and thematic coverage and data-sharing restrictions). Ideally, the metadata will also contain documentation sufficient to comprehensively assess the relevance and fitness for use of the data, including information about how the data were collected and quality assured, how the data have been previously used, etc. Some data repositories such as the National Science Foundation’s Arctic Data Centre ( https://arcticdata.io ) enable the data seeker to generate and download a metadata quality report that assesses how well the metadata adhere to community best practices for discovery and reusability. Clearly, if none of your criteria for data are met, you may not wish to download and use the associated data.

Attention should also be paid to quality parameters or flags within the data files. Make use of a visualization tool or statistical analysis tool, if provided, to examine the quality or fitness of the data for the intended use before downloading, especially if the data volume is large and the dataset includes many files.
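The preliminary assessment can be made systematic by writing down the requirements from Tip 1 and comparing each candidate's metadata against them. The sketch below is a minimal illustration: the field names, the requirement values, and the metadata record are all hypothetical, and real repositories expose metadata in their own schemas.

```python
from datetime import date

# Hypothetical requirements drawn up in Tip 1.
requirements = {
    "formats": {"csv", "netcdf"},
    "start": date(2000, 1, 1),
    "end": date(2010, 12, 31),
    "licenses": {"CC-BY-4.0", "CC0-1.0"},
}

# Hypothetical metadata record copied from a repository landing page.
metadata = {
    "format": "csv",
    "temporal_start": date(1995, 1, 1),
    "temporal_end": date(2015, 12, 31),
    "license": "CC-BY-4.0",
}


def unmet_requirements(meta, req):
    """Return the names of the requirements that the metadata fails."""
    problems = []
    if meta["format"].lower() not in req["formats"]:
        problems.append("format")
    # The dataset must cover the whole period of interest.
    if meta["temporal_start"] > req["start"] or meta["temporal_end"] < req["end"]:
        problems.append("temporal coverage")
    if meta["license"] not in req["licenses"]:
        problems.append("license")
    return problems


print(unmet_requirements(metadata, requirements))
```

An empty list suggests the dataset is worth downloading for a closer look; it does not replace reading the documentation on how the data were collected and quality assured.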

Tip 7: Save your search and data-source details

Record the data source and data version if you access or download a data product. This may be accomplished by noting the persistent identifier, such as a digital object identifier (DOI) or another Globally Unique Identifier (GUID), assigned to the data. Such information may be needed when you publish a paper that builds on the data you accessed. Recording the URL from which you obtained the data can be a quick way of returning to it but should not be trusted in the long term for providing access to the data, as URLs can change. It is also a good practice to save a copy of any original data products that you downloaded [ 5 ]. You may, for example, need to go back to original data sources and check if there have been any changes or corrections to data. Registering with the data portal (as described in Tip 4) or registering as a user of a specific data product allows the repository to contact you when necessary. If errors are found in the original data, for example, the data service can contact you to see if there is an impact on any research conclusions that you have drawn from these data.
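One lightweight way to keep these details is to store a small provenance record next to each downloaded file. The following sketch assumes nothing about any particular repository: the identifier, version, and URL are placeholders, and the function name provenance_record is hypothetical. A checksum is added as an assumption of this example, since it makes later changes to the file detectable.

```python
import hashlib
import json
from datetime import datetime, timezone


def provenance_record(data_bytes, identifier, version, source_url):
    """Describe a downloaded data product so it can be cited and re-checked."""
    return {
        "identifier": identifier,  # persistent identifier, e.g. a DOI
        "version": version,
        "source_url": source_url,  # convenient, but URLs can change
        "retrieved_utc": datetime.now(timezone.utc).isoformat(),
        # Checksum of the file as downloaded, to detect later changes.
        "sha256": hashlib.sha256(data_bytes).hexdigest(),
    }


# Placeholder content and identifiers, for illustration only.
downloaded = b"site,year,value\nA,2001,3.2\n"
record = provenance_record(
    downloaded,
    identifier="doi:10.0000/hypothetical-dataset",
    version="1.0",
    source_url="https://repository.example.org/dataset/123",
)
print(json.dumps(record, indent=2))
```

Saving the JSON output alongside the untouched original file gives you what you need to cite the data and to compare against the source if corrections are issued.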

If you have registered with a portal, it may also be possible to save your searches, allowing you to resume your data search at a later time with all previously defined search criteria. Some portals use RESTful search interfaces, which means you can bookmark a results set or dataset and return to it later simply by going to the bookmark.
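Where a portal exposes its search criteria in the URL, a saved search is simply a URL that can be rebuilt or bookmarked. The sketch below uses only Python's standard library; the base URL and the parameter names (q, format, start, end) are hypothetical, since each portal defines its own.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical search endpoint; real portals define their own paths.
BASE_URL = "https://repository.example.org/search"


def search_url(base_url, **criteria):
    """Encode search criteria as a query string that can be bookmarked."""
    # Sorting keeps the URL stable regardless of argument order.
    return f"{base_url}?{urlencode(sorted(criteria.items()))}"


bookmark = search_url(BASE_URL, q="sea level", format="csv", start="2000", end="2010")
print(bookmark)

# Later, the criteria can be recovered from the saved URL.
restored = parse_qs(urlsplit(bookmark).query)
print(restored["q"])
```

Storing such URLs in your research notes records exactly which criteria produced a given results set.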

Tip 8: Look for data services, not just data