20 Advantages and Disadvantages of Survey Research

Survey research is a critical component of measurement and applied social research. It is a broad area that encompasses many procedures that involve asking questions of specific respondents.

A survey can be anything from a short feedback form to intensive, in-depth interviews that attempt to gather specific data about situations, events, or circumstances. Although researchers can apply this tool in several ways, surveys fall into two broad categories: interviews and questionnaires.

In recent years, online and mobile survey software has given researchers access to more data. That means the people who are in the most challenging places to reach can still provide feedback on critical ideas, services, or solutions.

Several survey research advantages and disadvantages exist, so reviewing each critical point is necessary to determine if there is value in using this approach for your next project.

List of the Advantages of Survey Research

1. It is an inexpensive method of conducting research. Surveys are one of the most inexpensive methods of gathering quantitative data currently available. Some questionnaires can be self-administered, making it possible to avoid in-person interviews. That means you have access to a massive amount of information from a large demographic in a relatively short time. You can place this option on your website, email it to individuals, or post it on a social media profile.

Some of these methods have no financial cost at all, relying on personal efforts to post and collect the information. Robust targeting is necessary to ensure that the highest possible response rate becomes available to create a more accurate result.

2. Surveys are a practical solution for data gathering. Surveys are a practical way to gather information about something specific. You can target them to a demographic of your choice or manage them in several different ways. It is up to you to determine what questions get asked and in what format. You can use polls, questionnaires, quizzes, open-ended questions, and multiple-choice questions to collect information in real time so that the feedback is immediately useful.

3. It is a fast way to get the results that you need. Surveys provide fast, convenient results because of today’s mobile and online tools. It is not unusual for this method of data collection to generate results in as little as one day, and sometimes even less, depending on the scale and reach of your questions. You no longer need to wait for another company to deliver the solutions that you need because these questionnaires give you insights immediately. That means you can start making decisions in the shortest amount of time possible.

4. Surveys provide opportunities for scalability. A well-constructed survey allows you to gather data from an audience of any size. You can distribute your questions to anyone in the world today because of the reach of the Internet. All you need to do is send them a link to the page where you solicit information from them. This process can be done automatically, allowing companies to increase the efficiency of their customer onboarding processes.

Marketers can also use surveys as a way to create lead nurturing campaigns. Scientific research gains a benefit through this process as well because it can generate social insights at a personal level that other methods are unable to achieve.

5. It allows for data to come from multiple sources at once. When you construct a survey to meet the needs of a demographic, then you have the ability to use multiple data points collected from various geographic locations. There are fewer barriers in place today with this method than ever before because of the online access we have around the world.

Some challenges come with this benefit, mainly because of the cultural differences that exist between countries. If you conduct a global survey, then you will want to review all of the questions to ensure that none of them unintentionally gives offense.

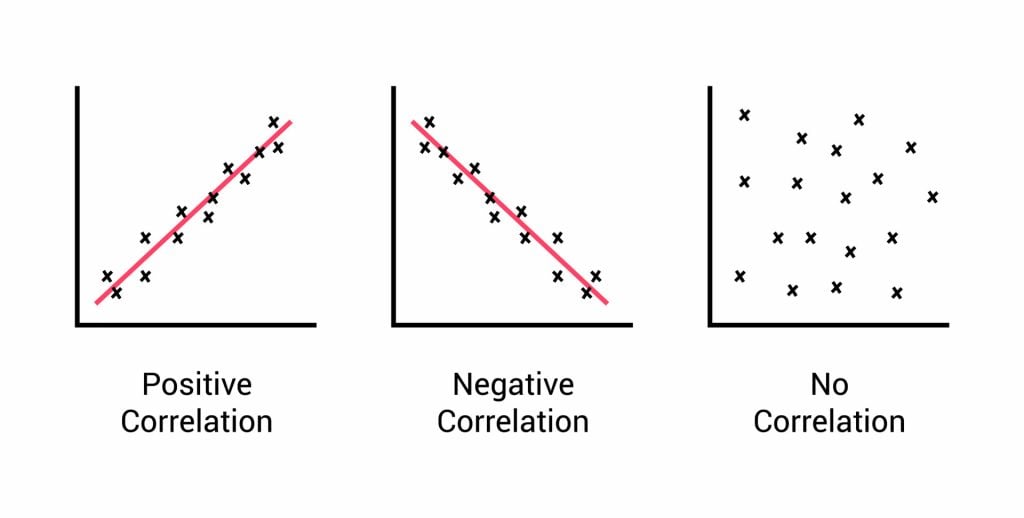

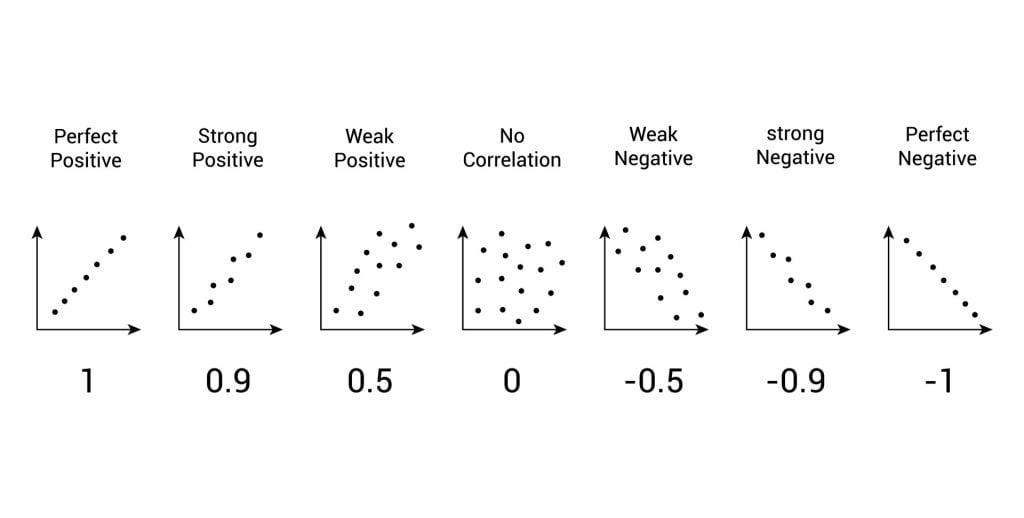

6. Surveys give you the opportunity to compare results. After researchers quantify the information collected from surveys, the data can be used to compare and contrast the results from other research efforts. This benefit makes it possible to use the info to measure change. That means a questionnaire that goes out every month or each year becomes more valuable over time.

When you can gather a significant amount of data, then the picture you are trying to interpret will become much clearer. Surveys provide the capability of generating new strategies or identifying new trends to create more opportunities.

7. It offers a straightforward analysis and visualization of the data. Most surveys are quantitative by design, which makes the analysis straightforward and the results quick to visualize. That means a data scientist doesn’t need to be available to start the work of interpreting the results. You can take advantage of third-party software tools that turn this information into usable reports, charts, and tables to support your presentation.
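As a rough illustration of how simple this kind of analysis can be, the sketch below tabulates the answers to one multiple-choice question into counts and percentages. The `tabulate` helper and the sample answers are hypothetical and exist only for this example; real survey tools produce the same kind of summary automatically.

```python
from collections import Counter


def tabulate(responses):
    """Return {answer: (count, percent)} for a list of multiple-choice answers."""
    total = len(responses)
    if total == 0:
        return {}
    counts = Counter(responses)
    # most_common() orders the answers from most to least frequent.
    return {
        answer: (n, round(100 * n / total, 1))
        for answer, n in counts.most_common()
    }


# Hypothetical answers to a single multiple-choice question.
answers = ["agree", "agree", "neutral", "disagree", "agree", "neutral"]
summary = tabulate(answers)

for answer, (count, percent) in summary.items():
    print(f"{answer:<10} {count:>3}  {percent:>5}%")
```

A table like this is usually the starting point for the charts and reports mentioned above.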

8. Survey respondents can stay anonymous with this research approach. If you choose to use online or email surveys, then there is a fantastic opportunity to allow respondents to remain anonymous. Complete invisibility is also possible with postal questionnaires, allowing researchers to maximize the levels of comfort available to the individuals who offer answers. Even a phone conversation doesn’t require a face-to-face meeting, creating this unique benefit.

When people have confidence in the idea that their responses will not be directly associated with their reputation, then researchers have an opportunity to collect information with greater accuracy.

9. It is a research tool with fewer time constraints. Surveys have fewer time limits associated with them when compared to other research methods. There is no one on the other end of an email or postal questionnaire that wants an immediate answer. That means a respondent can take additional time to complete each answer in the most comfortable way possible. This benefit is another way to encourage more honesty within the results since having a researcher presence can often lead to socially desirable answers.

10. Surveys can cover every component of any topic. Another critical advantage that surveys provide is the ability to ask as many questions as you want. There is a benefit in keeping an individual questionnaire short because a respondent may find a lengthy process to be frustrating. The best results typically come when you can create an experience that involves 10 or fewer questions.

Since this is a low-cost solution for gathering data, there is no harm in creating multiple surveys that have an easy mode of delivery. This benefit gives you the option to cover as many sub-topics as possible so that you can build a complete profile of almost any subject matter.

List of the Disadvantages of Survey Research

1. There is always a risk that people will provide dishonest answers. The risk of receiving a dishonest answer is lower when you use anonymous surveys, but it does not disappear entirely. Some people want to help researchers come to whatever specific conclusion they think the process is pursuing. There is also a level of social desirability bias that creeps into the data based on the interactions that respondents have with questionnaires. You can avoid some of this disadvantage by assuring individuals that their privacy is a top priority and that the process you use prevents personal information leaks, but you can’t stop this problem 100% of the time.

2. You might discover that some questions don’t get answers. If you decide to use a survey to gather information, then there is a risk that some questions will be left unanswered or ignored. If some questions are not required, then respondents might choose not to answer them. An easy way to get around this disadvantage is to use an online solution that makes answering questions a required component of each step. Then make sure that your survey stays short and to the point to avoid having people abandon the process altogether.

3. There can be differences in how people understand the survey questions. There can be a lot of information that gets lost in translation when researchers opt to use a survey instead of other research methods. When there is not someone available to explain a questionnaire entirely, then the results can be somewhat subjective. You must give everyone an opportunity to have some understanding of the process so that you can encourage accurate answers.

It is not unusual to have respondents struggle to grasp the meaning of some questions, even though the text might seem clear to the people who created it. Whenever miscommunication is part of the survey process, the results will skew in unintended directions. The only way to avoid this problem is to make the questions as simple as possible.

4. Surveys struggle to convey emotions with the achievable results. A survey does not do a good job of capturing a person’s emotional response to the questions they encounter. The only way to gather this information is to have an in-person interview with every respondent. Facial expressions and other forms of body language can add subtlety to a conversation that isn’t possible when someone is filling out an online questionnaire.

Some researchers get stuck trying to interpret feelings in the data they receive. A sliding-scale response that includes various levels of agreement or disagreement can try to replicate the concept of emotion, but it isn’t quite the same as being in the same room as someone. Assertion and strength will always be better information-gathering tools than multiple-choice questions.

5. Some answers can be challenging to classify. Surveys produce a lot of data because of their nature. You can tabulate multiple-choice questions, graph agreement or disagreement in specific areas, or create open-ended questions that can be challenging to analyze. Individualized answers can create a lot of useful information, but they can also provide you with data that cannot be quantified. If you incorporate several questions of this nature into a questionnaire, then it will take a long time to analyze what you received.

As a rule of thumb, no more than about 10% of the questions on a survey should have an open-ended structure. If the questions are confusing or bothersome, then you might find that the information you must manually review is mostly meaningless.
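One way to keep an eye on this guideline while drafting is to compute the share of open-ended questions directly. The sketch below is only an illustration: the question format (a dictionary with a `type` field) and the `open_ended_share` helper are assumptions made for this example, not part of any particular survey platform.

```python
def open_ended_share(questions):
    """Return the fraction of questions whose 'type' is 'open'."""
    if not questions:
        return 0.0
    open_count = sum(1 for q in questions if q["type"] == "open")
    return open_count / len(questions)


# Hypothetical 10-question draft: 8 multiple-choice and 2 open-ended.
draft = [{"id": i, "type": "choice"} for i in range(1, 9)]
draft += [{"id": 9, "type": "open"}, {"id": 10, "type": "open"}]

MAX_OPEN_SHARE = 0.10  # the 10% guideline mentioned in the text

share = open_ended_share(draft)
over_limit = share > MAX_OPEN_SHARE
print(f"open-ended share: {share:.0%}, over limit: {over_limit}")
```

In this draft, two of the ten questions are open-ended, so the check flags it as over the guideline.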

6. You must remove someone with a hidden agenda as soon as possible. Respondent bias can be a problem in any research type. Participants in your survey could have an interest in your idea, service, or product. Others might find themselves being influenced to participate because of the subject material found in your questionnaire. These issues can lead to inaccurate data gathering because they generate an imbalance of respondents who see the process as either overly positive or overly negative.

This disadvantage of survey research can be avoided by using effective pre-screening tools that use indirect questions that identify this bias.

7. Surveys don’t provide the same level of personalization. Any marketing effort will feel impersonal unless you take the time to customize the process. Because the information you want to collect on a questionnaire is generic by nature, it can be challenging to generate any interest in this activity because there is no value promised to the respondent. Some people can be put off by the idea of filling out a generic form, leading them to abandon the process.

This issue is especially difficult when your survey is taken voluntarily online, regardless of an email subscription or recent purchase.

8. Some respondents will choose answers before reading the questions. Every researcher hopes that respondents will provide conscientious responses to the questions offered in a survey. The problem here is that there is no way to know if the person filling out the questionnaire really understood the content provided to them. You don’t even have a guarantee that the individual read the question thoroughly before offering a response.

There are times when answers are chosen before someone fully reads the question and all of the answers. Some respondents skip through questions or make instant choices without reading the content at all. Because you have no way to know when this issue occurs, there will always be a measure of error in the collected data.

9. Accessibility issues can impact some surveys. A lack of accessibility is always a threat that researchers face when using surveys. This option might be unsuitable for individuals who have a visual or hearing impairment. Literacy is often necessary to complete this process. These issues should come under consideration during the planning stages of the research project to avoid this potential disadvantage. Then make the effort to choose a platform that has the accessibility options you need already built into it.

10. Survey fatigue can be a real issue that some respondents face. There are two issues that manifest themselves because of this disadvantage. The first problem occurs before someone even encounters your questionnaire. Because they feel overwhelmed by the growing number of requests for information, a respondent is automatically less inclined to participate in a research project. That results in a lower overall response rate.

Then there is the problem of fatigue that happens while taking a survey. This issue occurs when someone feels like the questionnaire is too long or contains questions that seem irrelevant. You can tell when this problem happens because a low completion rate is the result. Try to make the process as easy as possible to avoid the issues with this disadvantage.

Surveys sometimes have a poor reputation. Researchers have seen response rates decline since the 1990s as this method of data gathering has become less popular. Part of the reason for this perception is that everyone tries to use it online, since it is a low-cost way to collect information for decision-making purposes.

That’s why researchers are moving toward a rewards-based system to encourage higher participation and completion rates. The most obvious way to facilitate this behavior is to offer something tangible, such as a gift card or a contest entry. You can generate more responses by creating an anonymous process that encourages direct and honest answers.

These survey research advantages and disadvantages prove that this process isn’t as easy as it might seem from the outside. Until you sit down to start writing the questions, you may not entirely know where you want to take this data collection effort. By incorporating the critical points above, you can begin to craft questions in a way that encourages the completion of the activity.


When to Use Surveys in Psychology Research

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


James Lacy, MLS, is a fact-checker and researcher.



A survey is a data collection tool used to gather information about individuals. Surveys are commonly used in psychology research to collect self-report data from study participants. A survey may focus on factual information about individuals, or it might aim to obtain the opinions of the survey takers.

Psychology surveys involve asking participants a series of questions to learn more about a phenomenon, such as how they think, feel, or behave. Such tools can be helpful for learning about behaviors, conditions, traits, or other topics that interest researchers.

At a Glance

Psychological surveys are a valuable research tool that allows scientists to collect large quantities of data relatively quickly. However, such surveys sometimes have low response rates that can lead to biased results. Learning more about how surveys are used in psychology can give you a better understanding of how this type of research can be used to learn more about the human mind and behavior.

Reasons to Use Surveys in Psychology

So why do psychologists opt to use surveys so often in psychology research?

Surveys are one of the most commonly used research tools because they can be utilized to collect data and describe naturally occurring phenomena that exist in the real world.

They offer researchers a way to collect a great deal of information in a relatively quick and easy way. A large number of responses can be obtained quite quickly, which allows scientists to work with a lot of data.

Surveys in psychology are vital because they allow researchers to:

- Understand the experiences, opinions, and behaviors of the participants

- Evaluate respondent attitudes and beliefs

- Look at the risk factors in a sample

- Assess individual differences

- Evaluate the success of interventions or preventative programs

How to Use Surveys in Psychology

A survey can be used to investigate the characteristics, behaviors, or opinions of a group of people. These research tools can be used to ask about demographic characteristics such as sex, religion, ethnicity, and income.

They can also collect information on experiences, opinions, and even hypothetical scenarios. For example, researchers might present people with a possible scenario and then ask them how they might respond in that situation.

How do researchers go about collecting information using surveys?

How Surveys Are Administered

A survey can be administered in a couple of different ways:

- Structured interview: The researcher asks each participant the questions

- Questionnaire: The participant fills out the survey independently

You have probably taken many different surveys in the past. Of the two methods, the questionnaire tends to be the most common.

Surveys are generally standardized to ensure that they have reliability and validity. Standardization is also important so that the results can be generalized to the larger population.

Advantages of Psychological Surveys

One of the big benefits of using surveys in psychological research is that they allow researchers to gather a large quantity of data relatively quickly and cheaply.

A survey can be administered as a structured interview or as a self-report measure, and data can be collected in person, over the phone, or on a computer.

- Data collection: Surveys allow researchers to collect a large amount of data in a relatively short period.

- Cost-effectiveness: Surveys are less expensive than many other data collection techniques.

- Ease of administration: Surveys can be created quickly and administered easily.

- Usefulness: Surveys can be used to collect information on a broad range of things, including personal facts, attitudes, past behaviors, and opinions.

Disadvantages of Using Surveys in Psychology

One potential problem with written surveys is nonresponse bias, which arises when the people who return a survey differ from those who do not.

Experts suggest that return rates of 85% or higher are considered excellent, but anything below 60% might severely impact the sample's representativeness.
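To make these thresholds concrete, the following sketch computes a return rate and compares it with the 85% and 60% figures above. The function names, the example counts, and the "intermediate" label for rates between the two thresholds are assumptions for illustration; the source does not say how rates in that middle range should be described.

```python
def response_rate(returned, distributed):
    """Return the fraction of distributed surveys that were returned."""
    if distributed <= 0:
        raise ValueError("distributed must be positive")
    return returned / distributed


def classify_rate(rate, excellent=0.85, concern=0.60):
    """Label a return rate using the thresholds quoted in the text."""
    if rate >= excellent:
        return "excellent"
    if rate < concern:
        return "representativeness at risk"
    # The text gives no label for this range; "intermediate" is a placeholder.
    return "intermediate"


# Hypothetical mailing: 600 surveys sent, 312 returned.
rate = response_rate(returned=312, distributed=600)
label = classify_rate(rate)
print(f"{rate:.0%} -> {label}")
```

With these made-up numbers the return rate is 52%, which falls below the 60% mark.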

- Problems with construction and administration: Poor survey construction and administration can undermine otherwise well-designed studies.

- Inaccuracy: The answer choices provided in a survey may not be an accurate reflection of how the participants actually feel.

- Poor response rates: While random sampling is generally used to select participants, low response rates can bias the results of a survey. Strategies to improve response rates sometimes include offering financial incentives, sending personalized invitations, and reminding participants to complete the survey.

- Biased results: Social desirability bias can lead people to respond in a way that makes them look better than they really are. For example, a respondent might report that they engage in more healthy behaviors than they do in real life.

Pros:

- Less expensive

- Easy to create and administer

- Diverse uses

Cons:

- Subject to nonresponse bias

- May be poorly designed

- Limited answer choices can influence results

- Subject to social desirability bias

Types of Psychological Surveys

Surveys can be implemented in a number of different ways. The chances are good that you have participated in a number of different market research surveys in the past.

Some of the most common ways to administer surveys include:

- Mail: An example might include an alumni survey distributed via direct mail by your alma mater.

- Telephone: An example of a telephone survey would be a market research call about your experiences with a certain consumer product.

- Online: Online surveys might focus on your experience with a particular retailer, product, or website.

- At-home interviews: The U.S. Census is a good example of an at-home interview survey administration.

Important Considerations When Using Psychological Surveys

When researchers are using surveys in psychology research, there are important ethical factors they need to consider while collecting data.

- Obtaining informed consent is vital: Before a psychological survey is administered, all participants should be informed about its purpose and potential risks.

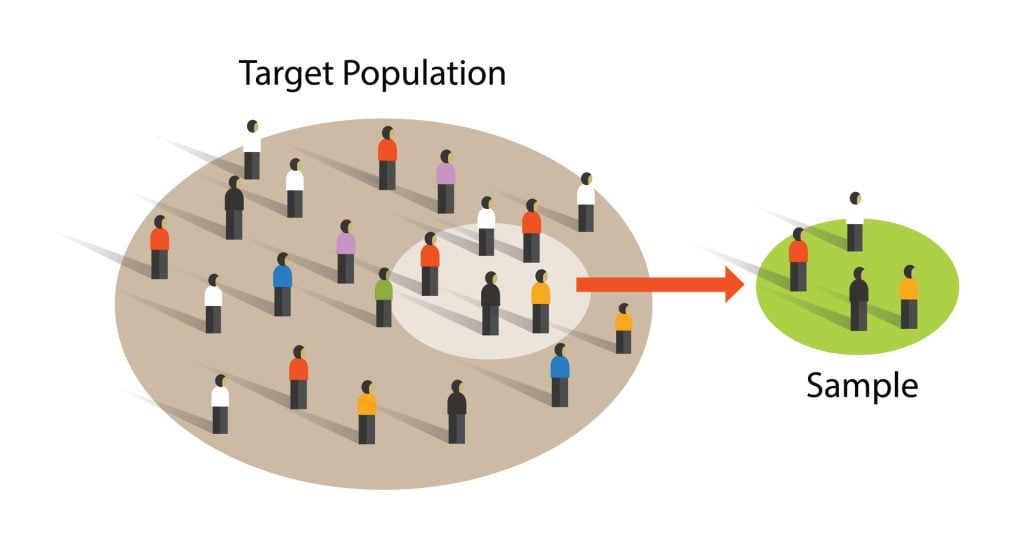

- Creating a representative sample: Researchers should ensure that their participant sample is representative of the larger population. This involves including participants who are part of diverse populations.

- Participation must be voluntary: Anyone who responds to a survey must do so of their own free will. They should not feel coerced or bribed into participating.

- Take steps to reduce bias: Questions should be carefully constructed so they do not affect how the participants respond. Researchers should also be cautious to avoid insensitive or offensive questions.

- Confidentiality: All survey responses should be kept confidential. Researchers often utilize special software that ensures privacy, protects data, and avoids using identifiable information.

Psychological surveys can be powerful, convenient, and informative research tools. Researchers often utilize surveys in psychology to collect data about how participants think, feel, or behave. While surveys are useful, it is important to construct them carefully to avoid asking leading questions and to reduce bias.



Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

9.1 Overview of Survey Research

Learning objectives.

- Define what survey research is, including its two important characteristics.

- Describe several different ways that survey research can be used and give some examples.

What Is Survey Research?

Survey research is a quantitative approach that has two important characteristics. First, the variables of interest are measured using self-reports. In essence, survey researchers ask their participants (who are often called respondents in survey research) to report directly on their own thoughts, feelings, and behaviors. Second, considerable attention is paid to the issue of sampling. In particular, survey researchers have a strong preference for large random samples because they provide the most accurate estimates of what is true in the population. In fact, survey research may be the only approach in psychology in which random sampling is routinely used. Beyond these two characteristics, almost anything goes in survey research. Surveys can be long or short. They can be conducted in person, by telephone, through the mail, or over the Internet. They can be about voting intentions, consumer preferences, social attitudes, health, or anything else that it is possible to ask people about and receive meaningful answers.
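The preference for large random samples can be illustrated with a short simulation. The sketch below draws simple random samples of increasing size from a hypothetical population and reports an estimate with an approximate 95% margin of error. The population, its 40% proportion, and the normal-approximation formula are assumptions chosen for this example; it also ignores the finite population correction.

```python
import math
import random


def estimate_proportion(sample):
    """Return (estimate, approximate 95% margin of error) for 0/1 responses."""
    n = len(sample)
    p_hat = sum(sample) / n
    # Normal-approximation margin of error for a sample proportion.
    margin = 1.96 * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat, margin


random.seed(0)  # fixed seed so the run is repeatable

# Hypothetical population of 100,000 people, 40% of whom hold some opinion.
population = [1] * 40_000 + [0] * 60_000

results = {}
for n in (100, 1_000, 10_000):
    # random.sample draws a simple random sample without replacement.
    sample = random.sample(population, n)
    results[n] = estimate_proportion(sample)
    p_hat, margin = results[n]
    print(f"n={n:>6}: estimate {p_hat:.3f} +/- {margin:.3f}")
```

The margin of error shrinks as the sample grows, which is the sense in which larger random samples give more accurate estimates of what is true in the population.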

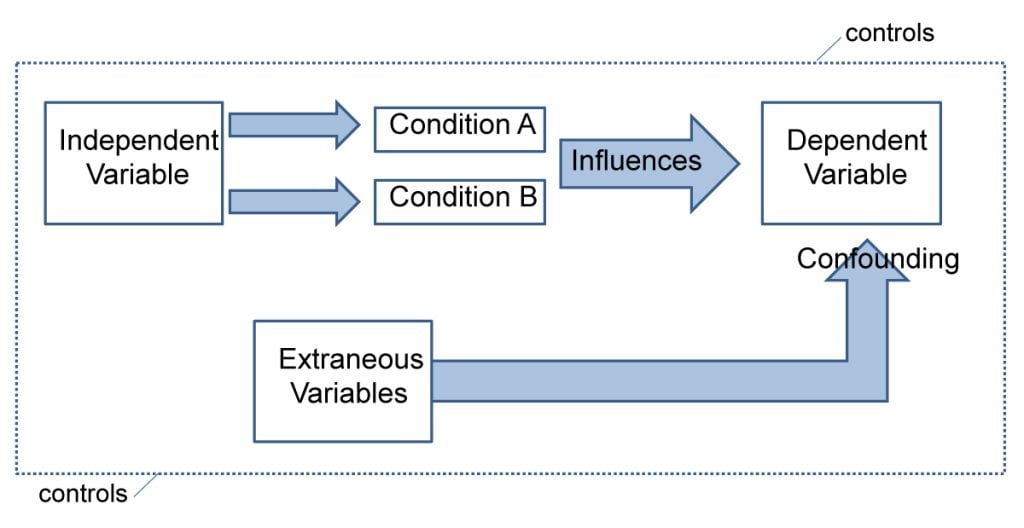

Most survey research is nonexperimental. It is used to describe single variables (e.g., the percentage of voters who prefer one presidential candidate or another, the prevalence of schizophrenia in the general population) and also to assess statistical relationships between variables (e.g., the relationship between income and health). But surveys can also be experimental. The study by Lerner and her colleagues is a good example. Their use of self-report measures and a large national sample identifies their work as survey research. But their manipulation of an independent variable (anger vs. fear) to assess its effect on a dependent variable (risk judgments) also identifies their work as experimental.

History and Uses of Survey Research

Survey research may have its roots in English and American “social surveys” conducted around the turn of the 20th century by researchers and reformers who wanted to document the extent of social problems such as poverty (Converse, 1987). By the 1930s, the US government was conducting surveys to document economic and social conditions in the country. The need to draw conclusions about the entire population helped spur advances in sampling procedures. At about the same time, several researchers who had already made a name for themselves in market research, studying consumer preferences for American businesses, turned their attention to election polling. A watershed event was the presidential election of 1936 between Alf Landon and Franklin Roosevelt. A magazine called Literary Digest conducted a survey by sending ballots (which were also subscription requests) to millions of Americans. Based on this “straw poll,” the editors predicted that Landon would win in a landslide. At the same time, the new pollsters were using scientific methods with much smaller samples to predict just the opposite—that Roosevelt would win in a landslide. In fact, one of them, George Gallup, publicly criticized the methods of Literary Digest before the election and all but guaranteed that his prediction would be correct. And of course it was. (We will consider the reasons that Gallup was right later in this chapter.)

From market research and election polling, survey research made its way into several academic fields, including political science, sociology, and public health, where it continues to be one of the primary approaches to collecting new data. Beginning in the 1930s, psychologists made important advances in questionnaire design, including techniques that are still used today, such as the Likert scale. (See “What Is a Likert Scale?” in Section 9.2 “Constructing Survey Questionnaires”.) Survey research has a strong historical association with the social psychological study of attitudes, stereotypes, and prejudice. Early attitude researchers were also among the first psychologists to seek larger and more diverse samples than the convenience samples of college students that were routinely used in psychology (and still are).

Survey research continues to be important in psychology today. For example, survey data have been instrumental in estimating the prevalence of various mental disorders and identifying statistical relationships among those disorders and with various other factors. The National Comorbidity Survey is a large-scale mental health survey conducted in the United States (see http://www.hcp.med.harvard.edu/ncs). In just one part of this survey, nearly 10,000 adults were given a structured mental health interview in their homes in 2002 and 2003. Table 9.1 “Some Lifetime Prevalence Results From the National Comorbidity Survey” presents results on the lifetime prevalence of some anxiety, mood, and substance use disorders. (Lifetime prevalence is the percentage of the population that develops the problem sometime in their lifetime.) Obviously, this kind of information can be of great use both to basic researchers seeking to understand the causes and correlates of mental disorders and also to clinicians and policymakers who need to understand exactly how common these disorders are.

Table 9.1 Some Lifetime Prevalence Results From the National Comorbidity Survey

And as the opening example makes clear, survey research can even be used to conduct experiments to test specific hypotheses about causal relationships between variables. Such studies, when conducted on large and diverse samples, can be a useful supplement to laboratory studies conducted on college students. Although this is not a typical use of survey research, it certainly illustrates the flexibility of this approach.

Key Takeaways

- Survey research is a quantitative approach that features the use of self-report measures on carefully selected samples. It is a flexible approach that can be used to study a wide variety of basic and applied research questions.

- Survey research has its roots in applied social research, market research, and election polling. It has since become an important approach in many academic disciplines, including political science, sociology, public health, and, of course, psychology.

Discussion: Think of a question that each of the following professionals might try to answer using survey research.

- a social psychologist

- an educational researcher

- a market researcher who works for a supermarket chain

- the mayor of a large city

- the head of a university police force

Converse, J. M. (1987). Survey research in the United States: Roots and emergence, 1890–1960 . Berkeley, CA: University of California Press.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Survey Research

34 Overview of Survey Research

Learning objectives.

- Define what survey research is, including its two important characteristics.

- Describe several different ways that survey research can be used and give some examples.

What Is Survey Research?

Survey research is a quantitative and qualitative method with two important characteristics. First, the variables of interest are measured using self-reports (using questionnaires or interviews). In essence, survey researchers ask their participants (who are often called respondents in survey research) to report directly on their own thoughts, feelings, and behaviors. Second, considerable attention is paid to the issue of sampling. In particular, survey researchers have a strong preference for large random samples because they provide the most accurate estimates of what is true in the population. In fact, survey research may be the only approach in psychology in which random sampling is routinely used. Beyond these two characteristics, almost anything goes in survey research. Surveys can be long or short. They can be conducted in person, by telephone, through the mail, or over the Internet. They can be about voting intentions, consumer preferences, social attitudes, health, or anything else that it is possible to ask people about and receive meaningful answers. Although survey data are often analyzed using statistics, there are many questions that lend themselves to more qualitative analysis.

Most survey research is non-experimental. It is used to describe single variables (e.g., the percentage of voters who prefer one presidential candidate or another, the prevalence of schizophrenia in the general population, etc.) and also to assess statistical relationships between variables (e.g., the relationship between income and health). But surveys can also be used within experimental research. The study by Lerner and her colleagues is a good example. Their use of self-report measures and a large national sample identifies their work as survey research. But their manipulation of an independent variable (anger vs. fear) to assess its effect on a dependent variable (risk judgments) also identifies their work as experimental.

History and Uses of Survey Research

Survey research may have its roots in English and American “social surveys” conducted around the turn of the 20th century by researchers and reformers who wanted to document the extent of social problems such as poverty (Converse, 1987) [1]. By the 1930s, the US government was conducting surveys to document economic and social conditions in the country. The need to draw conclusions about the entire population helped spur advances in sampling procedures. At about the same time, several researchers who had already made a name for themselves in market research, studying consumer preferences for American businesses, turned their attention to election polling. A watershed event was the presidential election of 1936 between Alf Landon and Franklin Roosevelt. A magazine called Literary Digest conducted a survey by sending ballots (which were also subscription requests) to millions of Americans. Based on this “straw poll,” the editors predicted that Landon would win in a landslide. At the same time, the new pollsters were using scientific methods with much smaller samples to predict just the opposite: that Roosevelt would win in a landslide. In fact, one of them, George Gallup, publicly criticized the methods of Literary Digest before the election and all but guaranteed that his prediction would be correct. And of course, it was, demonstrating the effectiveness of careful survey methodology. (We will consider the reasons that Gallup was right later in this chapter.) Gallup’s demonstration of the power of careful survey methods led later researchers to conduct local election surveys and, in 1948, the first national election survey by the Survey Research Center at the University of Michigan. This work eventually became the American National Election Studies (https://electionstudies.org/) as a collaboration of Stanford University and the University of Michigan, and these studies continue today.

From market research and election polling, survey research made its way into several academic fields, including political science, sociology, and public health—where it continues to be one of the primary approaches to collecting new data. Beginning in the 1930s, psychologists made important advances in questionnaire design, including techniques that are still used today, such as the Likert scale. (See “What Is a Likert Scale?” in Section 7.2 “Constructing Survey Questionnaires” .) Survey research has a strong historical association with the social psychological study of attitudes, stereotypes, and prejudice. Early attitude researchers were also among the first psychologists to seek larger and more diverse samples than the convenience samples of university students that were routinely used in psychology (and still are).

Survey research continues to be important in psychology today. For example, survey data have been instrumental in estimating the prevalence of various mental disorders and identifying statistical relationships among those disorders and with various other factors. The National Comorbidity Survey is a large-scale mental health survey conducted in the United States (see http://www.hcp.med.harvard.edu/ncs ). In just one part of this survey, nearly 10,000 adults were given a structured mental health interview in their homes in 2002 and 2003. Table 7.1 presents results on the lifetime prevalence of some anxiety, mood, and substance use disorders. (Lifetime prevalence is the percentage of the population that develops the problem sometime in their lifetime.) Obviously, this kind of information can be of great use both to basic researchers seeking to understand the causes and correlates of mental disorders as well as to clinicians and policymakers who need to understand exactly how common these disorders are.

And as the opening example makes clear, survey research can even be used as a data collection method within experimental research to test specific hypotheses about causal relationships between variables. Such studies, when conducted on large and diverse samples, can be a useful supplement to laboratory studies conducted on university students. Survey research is thus a flexible approach that can be used to study a variety of basic and applied research questions.

- Converse, J. M. (1987). Survey research in the United States: Roots and emergence, 1890–1960. Berkeley, CA: University of California Press.

Survey research: A quantitative and qualitative method with two important characteristics: variables are measured using self-reports, and considerable attention is paid to the issue of sampling.

Respondents: Participants in a survey or study.

Research Methods in Psychology Copyright © 2019 by Rajiv S. Jhangiani, I-Chant A. Chiang, Carrie Cuttler, & Dana C. Leighton is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Survey Research | Definition, Examples & Methods

Published on August 20, 2019 by Shona McCombes. Revised on June 22, 2023.

Survey research means collecting information about a group of people by asking them questions and analyzing the results. To conduct an effective survey, follow these six steps:

- Determine who will participate in the survey

- Decide the type of survey (mail, online, or in-person)

- Design the survey questions and layout

- Distribute the survey

- Analyze the responses

- Write up the results

Surveys are a flexible method of data collection that can be used in many different types of research.


Surveys are used as a method of gathering data in many different fields. They are a good choice when you want to find out about the characteristics, preferences, opinions, or beliefs of a group of people.

Common uses of survey research include:

- Social research: investigating the experiences and characteristics of different social groups

- Market research: finding out what customers think about products, services, and companies

- Health research: collecting data from patients about symptoms and treatments

- Politics: measuring public opinion about parties and policies

- Psychology: researching personality traits, preferences and behaviours

Surveys can be used in both cross-sectional studies, where you collect data just once, and in longitudinal studies, where you survey the same sample several times over an extended period.


Before you start conducting survey research, you should already have a clear research question that defines what you want to find out. Based on this question, you need to determine exactly who you will target to participate in the survey.

Populations

The target population is the specific group of people that you want to find out about. This group can be very broad or relatively narrow. For example:

- The population of Brazil

- US college students

- Second-generation immigrants in the Netherlands

- Customers of a specific company aged 18-24

- British transgender women over the age of 50

Your survey should aim to produce results that can be generalized to the whole population. That means you need to carefully define exactly who you want to draw conclusions about.

Several common research biases can arise if your survey is not generalizable, particularly sampling bias and selection bias. The presence of these biases has serious repercussions for the validity of your results.

It’s rarely possible to survey the entire population of your research – it would be very difficult to get a response from every person in Brazil or every college student in the US. Instead, you will usually survey a sample from the population.

The sample size you need depends on how big the population is, as well as on the margin of error and confidence level you are willing to accept. You can use an online sample calculator to work out how many responses you need.
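The calculation behind such a calculator can be sketched in a few lines. This is only an illustration, assuming the widely used Cochran formula for a proportion with a finite population correction; the function name `sample_size` and its default values (95% confidence, 5% margin of error, a conservative proportion of 0.5) are assumptions for the example, not something specified in this article.

```python
import math

def sample_size(population, margin_of_error=0.05, z=1.96, proportion=0.5):
    """Estimate the number of completed responses needed.

    z=1.96 corresponds to a 95% confidence level; proportion=0.5 is the
    most conservative choice because it maximizes the required sample.
    """
    # Cochran's formula: sample size for an effectively infinite population
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    # Finite population correction: smaller populations need fewer responses
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)

for size in (500, 10_000, 1_000_000):
    print(size, sample_size(size))
```

Under these assumptions the required sample grows quickly for small populations and then levels off, which is why a few hundred responses can be enough even for a very large population. The figure is the number of completed responses, so you would normally invite more people than this to allow for nonresponse.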

There are many sampling methods that allow you to generalize to broad populations. In general, though, the sample should aim to be representative of the population as a whole. The larger and more representative your sample, the more valid your conclusions. Again, beware of various types of sampling bias as you design your sample, particularly self-selection bias, nonresponse bias, undercoverage bias, and survivorship bias.

There are two main types of survey:

- A questionnaire, where a list of questions is distributed by mail, online or in person, and respondents fill it out themselves.

- An interview, where the researcher asks a set of questions by phone or in person and records the responses.

Which type you choose depends on the sample size and location, as well as the focus of the research.

Questionnaires

Sending out a paper survey by mail is a common method of gathering demographic information (for example, in a government census of the population).

- You can easily access a large sample.

- You have some control over who is included in the sample (e.g. residents of a specific region).

- The response rate is often low, and the results are at risk of biases such as self-selection bias.

Online surveys are a popular choice for students doing dissertation research, due to the low cost and flexibility of this method. There are many online tools available for constructing surveys, such as SurveyMonkey and Google Forms.

- You can quickly access a large sample without constraints on time or location.

- The data is easy to process and analyze.

- The anonymity and accessibility of online surveys mean you have less control over who responds, which can lead to biases like self-selection bias.

If your research focuses on a specific location, you can distribute a written questionnaire to be completed by respondents on the spot. For example, you could approach the customers of a shopping mall or ask all students to complete a questionnaire at the end of a class.

- You can screen respondents to make sure only people in the target population are included in the sample.

- You can collect time- and location-specific data (e.g. the opinions of a store’s weekday customers).

- The sample size will be smaller, so this method is less suitable for collecting data on broad populations and is at risk for sampling bias.

Oral interviews are a useful method for smaller sample sizes. They allow you to gather more in-depth information on people’s opinions and preferences. You can conduct interviews by phone or in person.

- You have personal contact with respondents, so you know exactly who will be included in the sample in advance.

- You can clarify questions and ask for follow-up information when necessary.

- The lack of anonymity may cause respondents to answer less honestly, and there is more risk of researcher bias.

Like questionnaires, interviews can be used to collect quantitative data: the researcher records each response as a category or rating and statistically analyzes the results. But they are more commonly used to collect qualitative data: the interviewees’ full responses are transcribed and analyzed individually to gain a richer understanding of their opinions and feelings.

Next, you need to decide which questions you will ask and how you will ask them. It’s important to consider:

- The type of questions

- The content of the questions

- The phrasing of the questions

- The ordering and layout of the survey

Open-ended vs closed-ended questions

There are two main forms of survey questions: open-ended and closed-ended. Many surveys use a combination of both.

Closed-ended questions give the respondent a predetermined set of answers to choose from. A closed-ended question can include:

- A binary answer (e.g. yes/no or agree/disagree)

- A scale (e.g. a Likert scale with five points ranging from strongly agree to strongly disagree)

- A list of options with a single answer possible (e.g. age categories)

- A list of options with multiple answers possible (e.g. leisure interests)

Closed-ended questions are best for quantitative research. They provide you with numerical data that can be statistically analyzed to find patterns, trends, and correlations.

Open-ended questions are best for qualitative research. This type of question has no predetermined answers to choose from. Instead, the respondent answers in their own words.

Open questions are most common in interviews, but you can also use them in questionnaires. They are often useful as follow-up questions to ask for more detailed explanations of responses to the closed questions.

The content of the survey questions

To ensure the validity and reliability of your results, you need to carefully consider each question in the survey. All questions should be narrowly focused with enough context for the respondent to answer accurately. Avoid questions that are not directly relevant to the survey’s purpose.

When constructing closed-ended questions, ensure that the options cover all possibilities. If you include a list of options that isn’t exhaustive, you can add an “other” field.
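As a rough illustration of how these closed-ended formats might be represented when building a survey tool, the sketch below defines a small question type with single-answer, multiple-answer, and “other” variants. The class name `ClosedQuestion`, its fields, and the example questions are all hypothetical and chosen only for this example.

```python
from dataclasses import dataclass

@dataclass
class ClosedQuestion:
    text: str
    options: list
    allow_multiple: bool = False  # single answer vs. multiple answers possible
    allow_other: bool = False     # adds a free-text "other" field

    def is_valid(self, answers):
        """Return True if the selected answers fit the question's rules."""
        if not answers:
            return False
        if len(answers) > 1 and not self.allow_multiple:
            return False
        # Each selection must be a listed option unless "other" is allowed
        return all(a in self.options or self.allow_other for a in answers)

# Single-answer question whose options cover every possible age
age = ClosedQuestion(
    "What is your age group?",
    ["Under 18", "18-34", "35-54", "55 or older"],
)

# Multiple-answer question whose list cannot be exhaustive, so "other" is on
leisure = ClosedQuestion(
    "Which leisure activities do you enjoy?",
    ["Reading", "Sports", "Music"],
    allow_multiple=True,
    allow_other=True,
)

print(age.is_valid(["18-34"]))                  # one listed option
print(leisure.is_valid(["Music", "Gardening"])) # listed option plus "other"
```

Keeping the answer rules next to the question text makes it easier to check, before distribution, that every question either has an exhaustive list of options or offers an “other” field.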

Phrasing the survey questions

In terms of language, the survey questions should be as clear and precise as possible. Tailor the questions to your target population, keeping in mind their level of knowledge of the topic. Avoid jargon or industry-specific terminology.

Survey questions are at risk for biases like social desirability bias, the Hawthorne effect, or demand characteristics. It’s critical to use language that respondents will easily understand, and avoid words with vague or ambiguous meanings. Make sure your questions are phrased neutrally, with no indication that you’d prefer a particular answer or emotion.

Ordering the survey questions

The questions should be arranged in a logical order. Start with easy, non-sensitive, closed-ended questions that will encourage the respondent to continue.

If the survey covers several different topics or themes, group together related questions. You can divide a questionnaire into sections to help respondents understand what is being asked in each part.

If a question refers back to or depends on the answer to a previous question, they should be placed directly next to one another.


Before you start, create a clear plan for where, when, how, and with whom you will conduct the survey. Determine in advance how many responses you require and how you will gain access to the sample.

When you are satisfied that you have created a strong research design suitable for answering your research questions, you can conduct the survey through your method of choice – by mail, online, or in person.

There are many methods of analyzing the results of your survey. First you have to process the data, usually with the help of a computer program to sort all the responses. You should also clean the data by removing incomplete or incorrectly completed responses.
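The cleaning step can be sketched as a simple filter. This is a minimal example, assuming responses are stored as dictionaries; the question IDs (`age_group`, `satisfaction`), the 1-5 rating range, and the sample data are hypothetical and only serve to show what “incomplete” and “incorrectly completed” might look like in practice.

```python
REQUIRED = ("age_group", "satisfaction")  # hypothetical question IDs
VALID_SATISFACTION = range(1, 6)          # ratings on a 1-5 scale

def clean(responses):
    """Keep only responses that are complete and within the valid range."""
    kept = []
    for r in responses:
        # Incomplete: a required answer is missing or blank
        if any(r.get(q) in (None, "") for q in REQUIRED):
            continue
        # Incorrectly completed: rating falls outside the 1-5 scale
        if r["satisfaction"] not in VALID_SATISFACTION:
            continue
        kept.append(r)
    return kept

raw = [
    {"id": 1, "age_group": "18-34", "satisfaction": 4},
    {"id": 2, "age_group": "", "satisfaction": 5},        # incomplete
    {"id": 3, "age_group": "35-54", "satisfaction": 9},   # out of range
    {"id": 4, "age_group": "55+", "satisfaction": None},  # incomplete
]
cleaned = clean(raw)
print([r["id"] for r in cleaned])
```

It is usually worth recording how many responses were dropped and why, since that information belongs in the methodology section alongside the response rate.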

If you asked open-ended questions, you will have to code the responses by assigning labels to each response and organizing them into categories or themes. You can also use more qualitative methods, such as thematic analysis, which is especially suitable for analyzing interviews.
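To make the idea of coding concrete, here is a deliberately crude sketch that assigns theme labels by keyword matching. Real coding is normally done by a researcher reading each response; the `CODEBOOK` themes, keywords, and example answers below are hypothetical, and substring matching like this will miss synonyms and can produce false matches.

```python
from collections import Counter

# Hypothetical codebook: each theme maps to keywords that signal it
CODEBOOK = {
    "price": ("expensive", "cheap", "cost", "price"),
    "service": ("staff", "service", "helpful", "rude"),
    "quality": ("quality", "broke", "durable"),
}

def code_response(text):
    """Return the sorted themes whose keywords appear in the response."""
    lowered = text.lower()
    themes = [theme for theme, words in CODEBOOK.items()
              if any(w in lowered for w in words)]
    # Responses that match no theme are flagged for manual review
    return sorted(themes) or ["uncoded"]

answers = [
    "The staff were helpful but it was too expensive.",
    "Great quality for the price.",
    "I just like the location.",
]
coded = [code_response(a) for a in answers]
theme_counts = Counter(t for themes in coded for t in themes)
print(coded)
print(theme_counts)
```

Once responses carry labels, the counts per theme can be analyzed alongside the closed-ended data, while the “uncoded” responses point to themes the codebook does not yet cover.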

Statistical analysis is usually conducted using programs like SPSS or Stata. The same set of survey data can be subject to many analyses.

Finally, when you have collected and analyzed all the necessary data, you will write it up as part of your thesis, dissertation, or research paper.

In the methodology section, you describe exactly how you conducted the survey. You should explain the types of questions you used, the sampling method, when and where the survey took place, and the response rate. You can include the full questionnaire as an appendix and refer to it in the text if relevant.

Then introduce the analysis by describing how you prepared the data and the statistical methods you used to analyze it. In the results section, you summarize the key results from your analysis.

In the discussion and conclusion, you give your explanations and interpretations of these results, answer your research question, and reflect on the implications and limitations of the research.


A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analyzing data from people using questionnaires.

A Likert scale is a rating scale that quantitatively assesses opinions, attitudes, or behaviors. It is made up of 4 or more questions that measure a single attitude or trait when response scores are combined.

To use a Likert scale in a survey, you present participants with Likert-type questions or statements, and a continuum of items, usually with 5 or 7 possible responses, to capture their degree of agreement.

Individual Likert-type questions are generally considered ordinal data, because the response options have a clear rank order but are not necessarily evenly spaced.

Overall Likert scale scores are sometimes treated as interval data. These scores are considered to have directionality and even spacing between them.

The type of data determines what statistical tests you should use to analyze your data.
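Combining item responses into an overall Likert scale score can be sketched as follows. This example assumes a 5-point agreement scale with a “neutral” midpoint and sums the item scores; the label wording, the function name `scale_score`, and the reverse-scoring of oppositely worded items are assumptions for illustration rather than details given above.

```python
# Map response labels to scores on a 5-point agreement scale
SCORES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}

def scale_score(responses, reverse_items=()):
    """Sum item scores into one overall scale score.

    responses: dict mapping item ID -> response label
    reverse_items: IDs of items worded in the opposite direction, whose
    scores are flipped (1<->5, 2<->4) before summing.
    """
    total = 0
    for item, label in responses.items():
        score = SCORES[label.lower()]
        if item in reverse_items:
            score = 6 - score
        total += score
    return total

respondent = {
    "q1": "Agree",
    "q2": "Strongly agree",
    "q3": "Disagree",
    "q4": "Neutral",
}
print(scale_score(respondent, reverse_items={"q3"}))
```

Each individual answer here is ordinal, but the summed score across four or more items is the kind of overall score that is sometimes treated as interval data.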

The priorities of a research design can vary depending on the field, but you usually have to specify:

- Your research questions and/or hypotheses

- Your overall approach (e.g., qualitative or quantitative)

- The type of design you’re using (e.g., a survey, experiment, or case study)

- Your sampling methods or criteria for selecting subjects

- Your data collection methods (e.g., questionnaires, observations)

- Your data collection procedures (e.g., operationalization, timing and data management)

- Your data analysis methods (e.g., statistical tests or thematic analysis)


McCombes, S. (2023, June 22). Survey Research | Definition, Examples & Methods. Scribbr. Retrieved March 25, 2024, from https://www.scribbr.com/methodology/survey-research/


Chapter 9: Survey Research

Overview of Survey Research

Learning Objectives

- Define what survey research is, including its two important characteristics.

- Describe several different ways that survey research can be used and give some examples.

What Is Survey Research?

Survey research is a quantitative and qualitative method with two important characteristics. First, the variables of interest are measured using self-reports. In essence, survey researchers ask their participants (who are often called respondents in survey research) to report directly on their own thoughts, feelings, and behaviours. Second, considerable attention is paid to the issue of sampling. In particular, survey researchers have a strong preference for large random samples because they provide the most accurate estimates of what is true in the population. In fact, survey research may be the only approach in psychology in which random sampling is routinely used. Beyond these two characteristics, almost anything goes in survey research. Surveys can be long or short. They can be conducted in person, by telephone, through the mail, or over the Internet. They can be about voting intentions, consumer preferences, social attitudes, health, or anything else that it is possible to ask people about and receive meaningful answers. Although survey data are often analyzed using statistics, there are many questions that lend themselves to more qualitative analysis.

Most survey research is nonexperimental. It is used to describe single variables (e.g., the percentage of voters who prefer one presidential candidate or another, the prevalence of schizophrenia in the general population) and also to assess statistical relationships between variables (e.g., the relationship between income and health). But surveys can also be experimental. The study by Lerner and her colleagues is a good example. Their use of self-report measures and a large national sample identifies their work as survey research. But their manipulation of an independent variable (anger vs. fear) to assess its effect on a dependent variable (risk judgments) also identifies their work as experimental.

History and Uses of Survey Research

Survey research may have its roots in English and American “social surveys” conducted around the turn of the 20th century by researchers and reformers who wanted to document the extent of social problems such as poverty (Converse, 1987) [1]. By the 1930s, the US government was conducting surveys to document economic and social conditions in the country. The need to draw conclusions about the entire population helped spur advances in sampling procedures. At about the same time, several researchers who had already made a name for themselves in market research, studying consumer preferences for American businesses, turned their attention to election polling. A watershed event was the presidential election of 1936 between Alf Landon and Franklin Roosevelt. A magazine called Literary Digest conducted a survey by sending ballots (which were also subscription requests) to millions of Americans. Based on this “straw poll,” the editors predicted that Landon would win in a landslide. At the same time, the new pollsters were using scientific methods with much smaller samples to predict just the opposite: that Roosevelt would win in a landslide. In fact, one of them, George Gallup, publicly criticized the methods of Literary Digest before the election and all but guaranteed that his prediction would be correct. And of course it was. (We will consider the reasons that Gallup was right later in this chapter.) Interest in surveying around election times has led to several long-term projects, notably the Canadian Election Studies, which have measured opinions of Canadian voters around federal elections since 1965. Anyone can access the data and read about the results of the experiments in these studies.

From market research and election polling, survey research made its way into several academic fields, including political science, sociology, and public health—where it continues to be one of the primary approaches to collecting new data. Beginning in the 1930s, psychologists made important advances in questionnaire design, including techniques that are still used today, such as the Likert scale. (See “What Is a Likert Scale?” in Section 9.2 “Constructing Survey Questionnaires” .) Survey research has a strong historical association with the social psychological study of attitudes, stereotypes, and prejudice. Early attitude researchers were also among the first psychologists to seek larger and more diverse samples than the convenience samples of university students that were routinely used in psychology (and still are).

Survey research continues to be important in psychology today. For example, survey data have been instrumental in estimating the prevalence of various mental disorders and identifying statistical relationships among those disorders and with various other factors. The National Comorbidity Survey is a large-scale mental health survey conducted in the United States. In just one part of this survey, nearly 10,000 adults were given a structured mental health interview in their homes in 2002 and 2003. Table 9.1 presents results on the lifetime prevalence of some anxiety, mood, and substance use disorders. (Lifetime prevalence is the percentage of the population that develops the problem sometime in their lifetime.) Obviously, this kind of information can be of great use both to basic researchers seeking to understand the causes and correlates of mental disorders and to clinicians and policymakers who need to understand exactly how common these disorders are.

And as the opening example makes clear, survey research can even be used to conduct experiments to test specific hypotheses about causal relationships between variables. Such studies, when conducted on large and diverse samples, can be a useful supplement to laboratory studies conducted on university students. Although this approach is not a typical use of survey research, it certainly illustrates the flexibility of this method.

Key Takeaways

- Survey research is a quantitative approach that features the use of self-report measures on carefully selected samples. It is a flexible approach that can be used to study a wide variety of basic and applied research questions.

- Survey research has its roots in applied social research, market research, and election polling. It has since become an important approach in many academic disciplines, including political science, sociology, public health, and, of course, psychology.

Discussion: Think of a question that each of the following professionals might try to answer using survey research.

- a social psychologist

- an educational researcher

- a market researcher who works for a supermarket chain

- the mayor of a large city

- the head of a university police force

- Converse, J. M. (1987). Survey research in the United States: Roots and emergence, 1890–1960. Berkeley, CA: University of California Press.

- The lifetime prevalence of a disorder is the percentage of people in the population that develop that disorder at any time in their lives.

Survey research: A quantitative approach in which variables are measured using self-reports from a sample of the population.

Respondents: Participants of a survey.

Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


7.2 Assessing survey research

Learning objectives.

- Identify and explain the strengths of survey research

- Identify and explain the weaknesses of survey research

- Define response rate, and discuss some of the current thinking about response rates

Survey research, as with all methods of data collection, comes with both strengths and weaknesses. We’ll examine both in this section.

Strengths of survey methods

Researchers employing survey methods to collect data enjoy a number of benefits. First, surveys are an excellent way to gather lots of information from many people. Some methods of administering surveys can be cost effective. In a study of older people’s experiences in the workplace, researchers were able to mail a written questionnaire to around 500 people who lived throughout the state of Maine at a cost of just over $1,000. This cost included printing copies of a seven-page survey, printing a cover letter, addressing and stuffing envelopes, mailing the survey, and buying return postage for the survey. In some contexts, $1,000 is a lot of money, but just imagine what it might have cost to visit each of those people individually to interview them in person. You would have to dedicate a few weeks of your life at least, drive around the state, and pay for meals and lodging to interview each person individually. We could double, triple, or even quadruple our costs pretty quickly by opting for an in-person method of data collection over a mailed survey.

Related to the benefit of cost-effectiveness is a survey’s potential for generalizability. Because surveys allow researchers to collect data from very large samples for a relatively low cost, survey methods lend themselves to probability sampling techniques, which we discussed in Chapter 6. Of all the data collection methods described in this textbook, survey research is probably the best method to use when one hopes to gain a representative picture of the attitudes and characteristics of a large group.

Survey research also tends to be a reliable method of inquiry. This is because surveys are standardized in that the same questions, phrased in exactly the same way, are posed to participants. Other methods, such as qualitative interviewing, which we’ll learn about in Chapter 9, do not offer the same consistency that a quantitative survey offers. This is not to say that all surveys are always reliable. A poorly phrased question can cause respondents to interpret its meaning differently, which can reduce that question’s reliability. Assuming well-constructed questions and survey design, one strength of this methodology is its potential to produce reliable results.

The versatility of survey research is also an asset. Surveys are used by all kinds of people in all kinds of professions. The versatility offered by survey research means that understanding how to construct and administer surveys is a useful skill to have for all kinds of jobs. Lawyers might use surveys in their efforts to select juries, social service and other organizations (e.g., churches, clubs, fundraising groups, activist groups) use them to evaluate the effectiveness of their efforts, businesses use them to learn how to market their products, governments use them to understand community opinions and needs, and politicians and media outlets use surveys to understand their constituencies.

In sum, the following are benefits of survey research:

- Cost-effectiveness

- Generalizability

- Reliability

- Versatility

Weaknesses of survey methods

As with all methods of data collection, survey research also comes with a few drawbacks. First, while one might argue that surveys are flexible in the sense that we can ask any number of questions on any number of topics in them, the survey researcher is generally stuck with a single instrument for collecting data: the questionnaire. In this respect, surveys are in many ways rather inflexible. Let’s say you mail a survey out to 1,000 people and then discover, as responses start coming in, that your phrasing on a particular question seems to be confusing a number of respondents. At this stage, it’s too late for a do-over or to change the question for the respondents who haven’t yet returned their surveys. When conducting in-depth interviews, on the other hand, a researcher can provide respondents further explanation if they’re confused by a question and can tweak their questions as they learn more about how respondents seem to understand them.

Depth can also be a problem with surveys. Survey questions are usually standardized; thus, it can be difficult to ask anything other than very general questions that a broad range of people will understand. Because of this, survey results may not be as valid as results obtained using methods of data collection that allow a researcher to more comprehensively examine whatever topic is being studied. Let’s say, for example, that you want to learn something about voters’ willingness to elect an African American president, as in our opening example in this chapter. General Social Survey respondents were asked, “If your party nominated an African American for president, would you vote for him if he were qualified for the job?” Respondents were then asked to respond either yes or no to the question. But what if someone’s opinion was more complex than could be answered with a simple yes or no? What if, for example, a person was willing to vote for a qualified African American but not if he chose a vice president the respondent didn’t like?

In sum, potential drawbacks to survey research include the following:

- Inflexibility

- Lack of depth

Response rates

The relative strength or weakness of an individual survey is strongly affected by the response rate, the percentage of people invited to take the survey who actually complete it. Let’s say a researcher sends a survey to 100 people. It would be wonderful if all 100 returned completed questionnaires, but the chances of that happening are about zero. If the researcher is incredibly lucky, perhaps 75 or so will return completed questionnaires. In this case, the response rate would be 75%. The response rate is calculated by dividing the number of surveys returned by the number of surveys distributed.
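The calculation described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the function name `response_rate` and its input checks are assumptions made for this example and do not come from the text.

```python
def response_rate(returned: int, distributed: int) -> float:
    """Return the response rate as a percentage of surveys distributed."""
    if distributed <= 0:
        raise ValueError("distributed must be a positive number of surveys")
    if not 0 <= returned <= distributed:
        raise ValueError("returned must be between 0 and distributed")
    # Surveys returned divided by surveys distributed, expressed as a percent.
    return 100.0 * returned / distributed


# The example from the text: 100 surveys sent, 75 completed and returned.
print(f"{response_rate(75, 100):.0f}%")  # prints "75%"
```

Expressing the result as a percentage matches how response rates are usually reported, as in the 75% figure above.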

Though response rates vary, and researchers don’t always agree about what makes a good response rate, having 75% of your surveys returned would be considered good, even excellent, by most survey researchers. There has been a lot of research done on how to improve a survey’s response rate. Suggestions include personalizing questionnaires by, for example, addressing them to specific respondents rather than to some generic recipient, such as “madam” or “sir”; enhancing the questionnaire’s credibility by providing details about the study and contact information for the researcher, and perhaps by partnering with agencies likely to be respected by respondents, such as universities, hospitals, or other relevant organizations; sending out pre-questionnaire notices and post-questionnaire reminders; and including some token of appreciation with mailed questionnaires, even if small, such as a $1 bill.

The major concern with response rates is that a low rate of response may introduce nonresponse bias into a study’s findings. What if only those who have strong opinions about your study topic return their questionnaires? If that is the case, we may well find that our findings don’t at all represent how things really are or, at the very least, we are limited in the claims we can make about patterns found in our data. While high return rates are certainly ideal, a recent body of research shows that concern over response rates may be overblown (Langer, 2003). Several studies have shown that low response rates did not make much difference in findings or in sample representativeness (Curtin, Presser, & Singer, 2000; Keeter, Kennedy, Dimock, Best, & Craighill, 2006; Merkle & Edelman, 2002). For now, the jury may still be out on what makes an ideal response rate and on whether, or to what extent, researchers should be concerned about response rates. Nevertheless, certainly no harm can come from aiming for as high a response rate as possible.
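To make the idea of nonresponse bias concrete, here is a minimal sketch in Python. The attitude scores are invented for illustration, and the rule that only people with strong opinions respond is an assumption of the example, not a finding from the studies cited above.

```python
from statistics import mean

# Hypothetical attitude scores for a population of 10 people
# (1 = strongly oppose, 3 = neutral, 5 = strongly support). Invented data.
population = [1, 1, 1, 3, 3, 3, 3, 3, 3, 4]

# Assumption: only people with strong opinions (a score of 1 or 5) respond.
respondents = [score for score in population if score in (1, 5)]

population_mean = mean(population)
respondent_mean = mean(respondents)
rate = 100 * len(respondents) / len(population)

print(f"Response rate: {rate:.0f}%")
print(f"Population mean: {population_mean:.1f}")
print(f"Respondent mean: {respondent_mean:.1f}")
print(f"Gap between respondents and population: {respondent_mean - population_mean:+.1f}")
```

In this made-up case, the respondents look far more opposed than the population as a whole. The gap arises because those who respond differ from those who do not; a low response rate alone would not produce it if respondents resembled nonrespondents.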

Key Takeaways

- Strengths of survey research include its cost effectiveness, generalizability, reliability, and versatility.

- Weaknesses of survey research include inflexibility and issues with depth.

- While survey researchers should always aim to obtain the highest response rate possible, some recent research argues that high return rates on surveys may be less important than we once thought.

- Nonresponse bias: bias reflecting differences between people who respond to your survey and those who do not respond

- Response rate: the number of people who respond to your survey divided by the number of people to whom the survey was distributed

Foundations of Social Work Research Copyright © 2020 by Rebecca L. Mauldin is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Social Science Works

Center of Deliberation

The Limits Of Survey Data: What Questionnaires Can’t Tell Us

March 7, 2017

All research methodologies have their limitations, as many authors have pointed out before (see for example Visser, Krosnick and Lavrakas, 2000). From the generalisability of data to the nitty-gritty of bias and question wording, every method has its flaws. In fact, the in-fighting between methodological approaches is one of social science’s worst-kept secrets: the hostility between quantitative and qualitative data scholars knows almost no bounds (admittedly that’s ‘almost no bounds’ within the polite world of academic debate) and doesn’t look set to be resolved any time soon. That said, there are some methods that are better suited than others to certain types of studies. This article will examine the role of survey data in values studies and argue that it is a blunt tool for this kind of research and that qualitative study methods, particularly via deliberation, are more appropriate. It will do so via an examination of a piece of 2016 research published by the German ministry for migrants and refugees (the BAMF), which explored both the demographics and the social values held by refugees who have arrived in Germany in the last three years. This article will argue that surveys are unfit to get at the issues that are most important to people.

The Good, The Bad & The Survey

Germany has been Europe’s leading figure as the refugee crisis has deepened worldwide following the collapse of government in Syria and the rise of ISIS. Today, there are 65.3 million displaced people from across the world and 21.3 million refugees (UNHCR, 2016), a number that surpasses even the number of refugees following the Second World War. The exact number of refugees living in Germany (official statistics typically count all migrants seeking protection as refugees, although there is some difference between the various legal statuses) is not entirely clear and the figure is unstable. And while this figure still lags behind the efforts made by countries like Turkey and Jordan, this represents the highest total number of refugees in a European country and matches the per capita efforts of Sweden. Meanwhile, there are signs that Germany’s residents do not always welcome their new neighbours. For example, in 2016, there were almost 2,000 reported attacks on refugees and refugee homes (Antonio-Amedeo Stiftung, 2017); a similar trend was established by Benček and Strasheim (2016). The rise of the far-right, anti-migrant party, the AfD, in local elections last year also points to unresolved resentment towards the newcomers.

In this context then, it makes sense for the BAMF (Bundesamt für Migration und Flüchtlinge), the ministry responsible for refugees and migrants in Germany, to respond to pressure in the media and from politicians to get a better overall picture of the kinds of people the refugees to Germany are. As such, their 2016 paper, “Survey of refugees – flight, arrival in Germany and first steps of integration” [1], details a host of information about newcomers in Germany. The study, which relied on questionnaires given by BAMF officials in a number of languages, and a face-to-face or online format (BAMF, 2016, 11), asked questions of 4,500 refugee respondents. For the most part, the study offers excellent insight into the demographic history of refugees to Germany and will be helpful for policymakers looking to ensure that efforts to help settle refugees are appropriately targeted. For example, the study detailed the relatively high level of education enjoyed by typical refugees to Germany (an average between 10 and 11 years of schooling) (ibid., 37), some of the specific difficulties this group have in successfully navigating the job market (ibid., 46), and where this group turns for help with this.

In addition to offering the most up-to-date information about refugees’ home countries and their path into Germany, the study is extremely helpful for politicians and scholars looking to enhance their understanding of logistical and practical issues facing migrants; for example, who has access to integration courses? How many unaccompanied children are in Germany? How many men and how many women fled to Germany? Here, the study is undoubtedly helpful. However, the latter stages of the report purport to examine the social values held by refugees, and it is this part of the study that this article takes issue with.

Respondents were asked to answer questions about their values. The topics included: the right form a government should take, the role of democracy, voting rights of women, the role of religion in the state, men and women’s equality in a marriage, and perceived difference between the values of refugees and Germans, among others. While this article doesn’t take issue with the veracity of the findings reported in the study, it does argue that the methods used here are inappropriate for the task at hand. Consider first the questions relating to refugees’ attitudes toward democracy and government. The report found that 96% of refugee respondents agreed with the statement: “One should have a democratic system” [2] compared with 95% of the German control group (ibid., 52). This finding was picked up in the liberal media and heralded as a sign that refugees share central German social values. It is entirely possible that this is true. However, it isn’t difficult to see the ways in which this number might have been accidentally manufactured and should hence be treated with considerable caution.