Handbook of Research Methods in Health Social Sciences pp 805–826

Conducting a Systematic Review: A Practical Guide

- Freya MacMillan 2 ,

- Kate A. McBride 3 ,

- Emma S. George 4 &

- Genevieve Z. Steiner 5

- Reference work entry

- First Online: 13 January 2019


It can be challenging to conduct a systematic review with limited experience and skills in undertaking such a task. This chapter provides a practical guide to undertaking a systematic review, providing step-by-step instructions to guide the individual through the process from start to finish. The chapter begins with defining what a systematic review is, reviewing its various components, turning a research question into a search strategy, developing a systematic review protocol, followed by searching for relevant literature and managing citations. Next, the chapter focuses on documenting the characteristics of included studies and summarizing findings, extracting data, methods for assessing risk of bias and considering heterogeneity, and undertaking meta-analyses. Last, the chapter explores creating a narrative and interpreting findings. Practical tips and examples from existing literature are utilized throughout the chapter to assist readers in their learning. By the end of this chapter, the reader will have the knowledge to conduct their own systematic review.

- Systematic review

- Search strategy

- Risk of bias

- Heterogeneity

- Meta-analysis

- Forest plot

- Funnel plot

- Meta-synthesis



Author information

Authors and affiliations.

School of Science and Health and Translational Health Research Institute (THRI), Western Sydney University, Penrith, NSW, Australia

Freya MacMillan

School of Medicine and Translational Health Research Institute, Western Sydney University, Sydney, NSW, Australia

Kate A. McBride

School of Science and Health, Western Sydney University, Sydney, NSW, Australia

Emma S. George

NICM and Translational Health Research Institute (THRI), Western Sydney University, Penrith, NSW, Australia

Genevieve Z. Steiner


Corresponding author

Correspondence to Freya MacMillan .

Editor information

Editors and affiliations.

School of Science and Health, Western Sydney University, Penrith, NSW, Australia

Pranee Liamputtong


Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this entry

Cite this entry.

MacMillan, F., McBride, K.A., George, E.S., Steiner, G.Z. (2019). Conducting a Systematic Review: A Practical Guide. In: Liamputtong, P. (eds) Handbook of Research Methods in Health Social Sciences. Springer, Singapore. https://doi.org/10.1007/978-981-10-5251-4_113


DOI : https://doi.org/10.1007/978-981-10-5251-4_113

Published : 13 January 2019

Publisher Name : Springer, Singapore

Print ISBN : 978-981-10-5250-7

Online ISBN : 978-981-10-5251-4

eBook Packages : Social Sciences Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences


Easy guide to conducting a systematic review

Affiliations.

- 1 Discipline of Child and Adolescent Health, University of Sydney, Sydney, New South Wales, Australia.

- 2 Department of Nephrology, The Children's Hospital at Westmead, Sydney, New South Wales, Australia.

- 3 Education Department, The Children's Hospital at Westmead, Sydney, New South Wales, Australia.

- PMID: 32364273

- DOI: 10.1111/jpc.14853

A systematic review is a type of study that synthesises research that has been conducted on a particular topic. Systematic reviews are considered to provide the highest level of evidence on the hierarchy of evidence pyramid. Systematic reviews are conducted following rigorous research methodology. To minimise bias, systematic reviews utilise a predefined search strategy to identify and appraise all available published literature on a specific topic. The meticulous nature of the systematic review research methodology differentiates a systematic review from a narrative review (literature review or authoritative review). This paper provides a brief step by step summary of how to conduct a systematic review, which may be of interest for clinicians and researchers.

Keywords: research; research design; systematic review.

© 2020 Paediatrics and Child Health Division (The Royal Australasian College of Physicians).

Publication types

- Systematic Review

- Research Design*


Systematic Review | Definition, Example & Guide

Published on June 15, 2022 by Shaun Turney . Revised on November 20, 2023.

A systematic review is a type of review that uses repeatable methods to find, select, and synthesize all available evidence. It answers a clearly formulated research question and explicitly states the methods used to arrive at the answer.

The running example in this guide is a systematic review by Boyle and colleagues. They answered the question “What is the effectiveness of probiotics in reducing eczema symptoms and improving quality of life in patients with eczema?”

In this context, a probiotic is a health product that contains live microorganisms and is taken by mouth. Eczema is a common skin condition that causes red, itchy skin.

Table of contents

- What is a systematic review?

- Systematic review vs. meta-analysis

- Systematic review vs. literature review

- Systematic review vs. scoping review

- When to conduct a systematic review

- Pros and cons of systematic reviews

- Step-by-step example of a systematic review

- Other interesting articles

- Frequently asked questions about systematic reviews

A review is an overview of the research that’s already been completed on a topic.

What makes a systematic review different from other types of reviews is that the research methods are designed to reduce bias . The methods are repeatable, and the approach is formal and systematic:

- Formulate a research question

- Develop a protocol

- Search for all relevant studies

- Apply the selection criteria

- Extract the data

- Synthesize the data

- Write and publish a report

Although multiple sets of guidelines exist, the Cochrane Handbook for Systematic Reviews is among the most widely used. It provides detailed guidelines on how to complete each step of the systematic review process.

Systematic reviews are most commonly used in medical and public health research, but they can also be found in other disciplines.

Systematic reviews typically answer their research question by synthesizing all available evidence and evaluating the quality of the evidence. Synthesizing means bringing together different information to tell a single, cohesive story. The synthesis can be narrative ( qualitative ), quantitative , or both.


Systematic reviews often quantitatively synthesize the evidence using a meta-analysis . A meta-analysis is a statistical analysis, not a type of review.

A meta-analysis is a technique to synthesize results from multiple studies. It’s a statistical analysis that combines the results of two or more studies, usually to estimate an effect size .

A literature review is a type of review that uses a less systematic and formal approach than a systematic review. Typically, an expert in a topic will qualitatively summarize and evaluate previous work, without using a formal, explicit method.

Although literature reviews are often less time-consuming and can be insightful or helpful, they have a higher risk of bias and are less transparent than systematic reviews.

Similar to a systematic review, a scoping review is a type of review that tries to minimize bias by using transparent and repeatable methods.

However, a scoping review isn’t a type of systematic review. The most important difference is the goal: rather than answering a specific question, a scoping review explores a topic. The researcher tries to identify the main concepts, theories, and evidence, as well as gaps in the current research.

Sometimes scoping reviews are an exploratory preparation step for a systematic review, and sometimes they are a standalone project.


A systematic review is a good choice of review if you want to answer a question about the effectiveness of an intervention , such as a medical treatment.

To conduct a systematic review, you’ll need the following:

- A precise question , usually about the effectiveness of an intervention. The question needs to be about a topic that’s previously been studied by multiple researchers. If there’s no previous research, there’s nothing to review.

- If you’re doing a systematic review on your own (e.g., for a research paper or thesis ), you should take appropriate measures to ensure the validity and reliability of your research.

- Access to databases and journal archives. Often, your educational institution provides you with access.

- Time. A professional systematic review is a time-consuming process: it will take the lead author about six months of full-time work. If you’re a student, you should narrow the scope of your systematic review and stick to a tight schedule.

- Bibliographic, word-processing, spreadsheet, and statistical software . For example, you could use EndNote, Microsoft Word, Excel, and SPSS.

A systematic review has many pros .

- They minimize research bias by considering all available evidence and evaluating each study for bias.

- Their methods are transparent , so they can be scrutinized by others.

- They’re thorough : they summarize all available evidence.

- They can be replicated and updated by others.

Systematic reviews also have a few cons .

- They’re time-consuming .

- They’re narrow in scope : they only answer the precise research question.

The 7 steps for conducting a systematic review are explained with an example.

Step 1: Formulate a research question

Formulating the research question is probably the most important step of a systematic review. A clear research question will:

- Allow you to more effectively communicate your research to other researchers and practitioners

- Guide your decisions as you plan and conduct your systematic review

A good research question for a systematic review has four components, which you can remember with the acronym PICO :

- Population(s) or problem(s)

- Intervention(s)

- Comparison(s)

- Outcome(s)

You can rearrange these four components to write your research question:

- What is the effectiveness of I versus C for O in P ?

Sometimes, you may want to include a fifth component, the type of study design . In this case, the acronym is PICOT .

- Type of study design(s)

In the example review, Boyle and colleagues' question had these components:

- The population of patients with eczema

- The intervention of probiotics

- In comparison to no treatment, placebo , or non-probiotic treatment

- The outcome of changes in participant-, parent-, and doctor-rated symptoms of eczema and quality of life

- Randomized controlled trials, a type of study design

Their research question was:

- What is the effectiveness of probiotics versus no treatment, a placebo, or a non-probiotic treatment for reducing eczema symptoms and improving quality of life in patients with eczema?
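The template "What is the effectiveness of I versus C for O in P?" can be filled in mechanically once the four components are written down. The sketch below is only an illustration: the `PicoQuestion` class and its field names are hypothetical, and the component wording is taken from the eczema example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicoQuestion:
    """Hypothetical container for the four PICO components."""
    population: str
    intervention: str
    comparison: str
    outcome: str

    def as_question(self) -> str:
        # Follows the template "What is the effectiveness of I versus C for O in P?"
        return (
            f"What is the effectiveness of {self.intervention} versus "
            f"{self.comparison} for {self.outcome} in {self.population}?"
        )


eczema = PicoQuestion(
    population="patients with eczema",
    intervention="probiotics",
    comparison="no treatment, a placebo, or a non-probiotic treatment",
    outcome="reducing eczema symptoms and improving quality of life",
)
print(eczema.as_question())
```

Writing the components down separately before composing the question makes it easier to reuse them later, for instance as the concept groups of a search strategy.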

Step 2: Develop a protocol

A protocol is a document that contains your research plan for the systematic review. This is an important step because having a plan allows you to work more efficiently and reduces bias.

Your protocol should include the following components:

- Background information : Provide the context of the research question, including why it’s important.

- Research objective (s) : Rephrase your research question as an objective.

- Selection criteria: State how you’ll decide which studies to include or exclude from your review.

- Search strategy: Discuss your plan for finding studies.

- Analysis: Explain what information you’ll collect from the studies and how you’ll synthesize the data.

If you’re a professional seeking to publish your review, it’s a good idea to bring together an advisory committee . This is a group of about six people who have experience in the topic you’re researching. They can help you make decisions about your protocol.

It’s highly recommended to register your protocol. Registering your protocol means submitting it to a database such as PROSPERO or ClinicalTrials.gov .

Step 3: Search for all relevant studies

Searching for relevant studies is the most time-consuming step of a systematic review.

To reduce bias, it’s important to search for relevant studies very thoroughly. Your strategy will depend on your field and your research question, but sources generally fall into these four categories:

- Databases: Search multiple databases of peer-reviewed literature, such as PubMed or Scopus . Think carefully about how to phrase your search terms and include multiple synonyms of each word. Use Boolean operators if relevant.

- Handsearching: In addition to searching the primary sources using databases, you’ll also need to search manually. One strategy is to scan relevant journals or conference proceedings. Another strategy is to scan the reference lists of relevant studies.

- Gray literature: Gray literature includes documents produced by governments, universities, and other institutions that aren’t published by traditional publishers. Graduate student theses are an important type of gray literature, which you can search using the Networked Digital Library of Theses and Dissertations (NDLTD) . In medicine, clinical trial registries are another important type of gray literature.

- Experts: Contact experts in the field to ask if they have unpublished studies that should be included in your review.
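For the database searches, one common way to combine synonyms and Boolean operators is to join the synonyms of each concept with OR and then join the concepts with AND. The following is a minimal sketch of that idea. The function name and the synonym lists are assumptions made for illustration, and real databases differ in their syntax for field tags, truncation, and phrase searching, so the output would need adapting for each one.

```python
def build_boolean_query(concepts: dict[str, list[str]]) -> str:
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    groups = []
    for synonyms in concepts.values():
        # Quote multi-word terms so they are treated as exact phrases.
        terms = [f'"{s}"' if " " in s else s for s in synonyms]
        groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)


# Hypothetical synonym lists for the eczema example; a real search strategy
# would be developed and piloted separately for each database.
concepts = {
    "population": ["eczema", "atopic dermatitis"],
    "intervention": ["probiotic", "probiotics", "lactobacillus"],
}
query = build_boolean_query(concepts)
print(query)
```

Keeping the synonym lists in one structure also gives you a record of the search terms to report in the protocol and the methods section.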

At this stage of your review, you won’t read the articles yet. Simply save any potentially relevant citations using bibliographic software, such as Scribbr’s APA or MLA Generator .

In the example review, Boyle and colleagues searched the following sources:

- Databases: EMBASE, PsycINFO, AMED, LILACS, and ISI Web of Science

- Handsearch: Conference proceedings and reference lists of articles

- Gray literature: The Cochrane Library, the metaRegister of Controlled Trials, and the Ongoing Skin Trials Register

- Experts: Authors of unpublished registered trials, pharmaceutical companies, and manufacturers of probiotics

Step 4: Apply the selection criteria

Applying the selection criteria is a three-person job. Two of you will independently read the studies and decide which to include in your review based on the selection criteria you established in your protocol . The third person’s job is to break any ties.

To increase inter-rater reliability , ensure that everyone thoroughly understands the selection criteria before you begin.
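If you want to quantify how well the two screeners agree, one widely used statistic is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The guide itself does not prescribe a statistic, so treat the choice of kappa, the function below, and the made-up decisions as assumptions for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters who judged the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must judge the same non-empty set of studies")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical title/abstract decisions for ten studies.
screener_1 = ["include", "include", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "exclude", "exclude"]
screener_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "include", "exclude"]
kappa = cohens_kappa(screener_1, screener_2)
print(round(kappa, 2))
```

A low value on a pilot batch of abstracts is a signal to revisit the wording of the selection criteria before screening the full set.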

If you’re writing a systematic review as a student for an assignment, you might not have a team. In this case, you’ll have to apply the selection criteria on your own; you can mention this as a limitation in your paper’s discussion.

You should apply the selection criteria in two phases:

- Based on the titles and abstracts : Decide whether each article potentially meets the selection criteria based on the information provided in the abstracts.

- Based on the full texts: Download the articles that weren’t excluded during the first phase. If an article isn’t available online or through your library, you may need to contact the authors to ask for a copy. Read the articles and decide which articles meet the selection criteria.

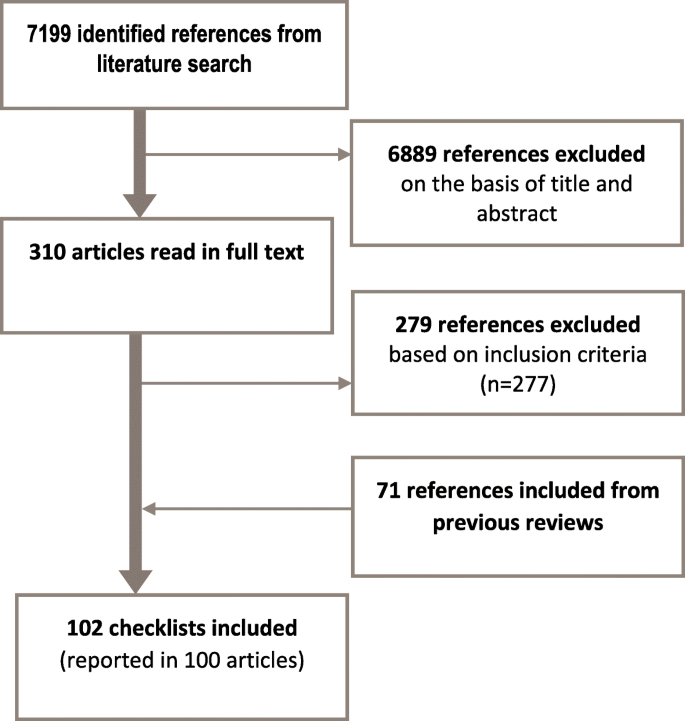

It’s very important to keep a meticulous record of why you included or excluded each article. When the selection process is complete, you can summarize what you did using a PRISMA flow diagram .
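A simple way to keep such a record is a screening log with one row per decision, from which the counts needed for a flow diagram can be tallied. The sketch below assumes a hypothetical log format (study ID, stage, decision, reason); the study IDs and exclusion reasons are invented for illustration and are not from the example review.

```python
from collections import Counter

# Hypothetical screening log: one (study_id, stage, decision, reason) row per judgement.
screening_log = [
    ("S1", "title_abstract", "exclude", "wrong population"),
    ("S2", "title_abstract", "include", ""),
    ("S3", "title_abstract", "include", ""),
    ("S4", "title_abstract", "exclude", "not a randomized trial"),
    ("S2", "full_text", "include", ""),
    ("S3", "full_text", "exclude", "no relevant outcome"),
]


def summarize(log):
    """Count records screened and excluded per stage, and tally exclusion reasons."""
    summary = {}
    for _study, stage, decision, reason in log:
        stage_counts = summary.setdefault(
            stage, {"screened": 0, "excluded": 0, "reasons": Counter()}
        )
        stage_counts["screened"] += 1
        if decision == "exclude":
            stage_counts["excluded"] += 1
            stage_counts["reasons"][reason] += 1
    return summary


flow = summarize(screening_log)
for stage, counts in flow.items():
    print(stage, counts["screened"], counts["excluded"], dict(counts["reasons"]))
```

The per-stage totals and the reasons for exclusion are the numbers that go into the boxes of the flow diagram.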

Next, Boyle and colleagues found the full texts for each of the remaining studies. Boyle and Tang read through the articles to decide if any more studies needed to be excluded based on the selection criteria.

When Boyle and Tang disagreed about whether a study should be excluded, they discussed it with Varigos until the three researchers came to an agreement.

Step 5: Extract the data

Extracting the data means collecting information from the selected studies in a systematic way. There are two types of information you need to collect from each study:

- Information about the study’s methods and results . The exact information will depend on your research question, but it might include the year, study design , sample size, context, research findings , and conclusions. If any data are missing, you’ll need to contact the study’s authors.

- Your judgment of the quality of the evidence, including risk of bias .

You should collect this information using forms. You can find sample forms in The Registry of Methods and Tools for Evidence-Informed Decision Making and the Grading of Recommendations, Assessment, Development and Evaluations Working Group .

Extracting the data is also a three-person job. Two people should do this step independently, and the third person will resolve any disagreements.
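When two people extract independently, the disagreements that the third person needs to resolve can be listed by comparing the two completed forms field by field. This is a minimal sketch under stated assumptions: the `ExtractionRecord` fields are a hypothetical subset of what a real form would hold, and the values are invented.

```python
from dataclasses import dataclass, fields


@dataclass
class ExtractionRecord:
    """Hypothetical extraction form; real forms depend on the review question."""
    study_id: str
    year: int
    study_design: str
    sample_size: int
    risk_of_bias: str  # e.g. "low", "high", "unclear"


def find_disagreements(a: ExtractionRecord, b: ExtractionRecord) -> dict:
    """Return {field_name: (value_a, value_b)} for every field that differs."""
    if a.study_id != b.study_id:
        raise ValueError("Records describe different studies")
    return {
        f.name: (getattr(a, f.name), getattr(b, f.name))
        for f in fields(a)
        if getattr(a, f.name) != getattr(b, f.name)
    }


reviewer_1 = ExtractionRecord("S2", 2005, "RCT", 56, "low")
reviewer_2 = ExtractionRecord("S2", 2005, "RCT", 65, "unclear")
disagreements = find_disagreements(reviewer_1, reviewer_2)
print(disagreements)
```

Using the same structured form for both extractors is what makes this comparison possible; free-text notes would have to be reconciled by hand.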

They also collected data about possible sources of bias, such as how the study participants were randomized into the control and treatment groups.

Step 6: Synthesize the data

Synthesizing the data means bringing together the information you collected into a single, cohesive story. There are two main approaches to synthesizing the data:

- Narrative ( qualitative ): Summarize the information in words. You’ll need to discuss the studies and assess their overall quality.

- Quantitative : Use statistical methods to summarize and compare data from different studies. The most common quantitative approach is a meta-analysis , which allows you to combine results from multiple studies into a summary result.

Generally, you should use both approaches together whenever possible. If you don’t have enough data, or the data from different studies aren’t comparable, then you can take just a narrative approach. However, you should justify why a quantitative approach wasn’t possible.
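To make the quantitative approach concrete, the sketch below pools study effect sizes with inverse-variance weights (a fixed-effect model) and reports Cochran's Q and the I² statistic as rough indicators of heterogeneity. The choice of a fixed-effect model is an assumption made for brevity; a random-effects model is often more appropriate when studies differ. The effect sizes and standard errors are invented, and in practice you would normally use established statistical software rather than hand-written code.

```python
import math


def fixed_effect_meta(effects, std_errors):
    """Inverse-variance fixed-effect pooling with Cochran's Q and I^2."""
    if len(effects) != len(std_errors) or len(effects) < 2:
        raise ValueError("Need matching effects and standard errors for >= 2 studies")
    # Each study is weighted by the inverse of its variance.
    weights = [1.0 / se**2 for se in std_errors]
    total_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_w
    pooled_se = math.sqrt(1.0 / total_w)
    # Cochran's Q: weighted squared deviations from the pooled estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of variability beyond what chance alone would explain, in percent.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return {"pooled": pooled, "se": pooled_se, "ci95": ci, "Q": q, "I2": i_squared}


# Hypothetical standardized mean differences and standard errors for three studies.
result = fixed_effect_meta(effects=[-0.2, -0.5, 0.1], std_errors=[0.1, 0.2, 0.2])
print({key: result[key] for key in ("pooled", "se", "Q", "I2")})
```

Larger, more precise studies get more weight, which is why the pooled estimate here sits close to the first study's effect.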

Boyle and colleagues also divided the studies into subgroups, such as studies about babies, children, and adults, and analyzed the effect sizes within each group.

Step 7: Write and publish a report

The purpose of writing a systematic review article is to share the answer to your research question and explain how you arrived at this answer.

Your article should include the following sections:

- Abstract : A summary of the review

- Introduction : Including the rationale and objectives

- Methods : Including the selection criteria, search method, data extraction method, and synthesis method

- Results : Including results of the search and selection process, study characteristics, risk of bias in the studies, and synthesis results

- Discussion : Including interpretation of the results and limitations of the review

- Conclusion : The answer to your research question and implications for practice, policy, or research

To verify that your report includes everything it needs, you can use the PRISMA checklist .

Once your report is written, you can publish it in a systematic review database, such as the Cochrane Database of Systematic Reviews , and/or in a peer-reviewed journal.

In their report, Boyle and colleagues concluded that probiotics cannot be recommended for reducing eczema symptoms or improving quality of life in patients with eczema.

Note: Generative AI tools like ChatGPT can be useful at various stages of the writing and research process and can help you to write your systematic review. However, we strongly advise against trying to pass AI-generated text off as your own work.


A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question .

It is often written as part of a thesis, dissertation , or research paper , in order to situate your work in relation to existing knowledge.

A literature review is a survey of credible sources on a topic, often used in dissertations , theses, and research papers . Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts , with an introduction , a main body, and a conclusion .

An annotated bibliography is a list of source references that has a short description (called an annotation ) for each of the sources. It is often assigned as part of the research process for a paper .

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.


Turney, S. (2023, November 20). Systematic Review | Definition, Example & Guide. Scribbr. Retrieved April 2, 2024, from https://www.scribbr.com/methodology/systematic-review/


- Methodology

- Open access

- Published: 17 October 2017

A proposed framework for developing quality assessment tools

- Penny Whiting ORCID: orcid.org/0000-0003-1138-5682 1 , 2 ,

- Robert Wolff 3 ,

- Susan Mallett 4 , 5 ,

- Iveta Simera 6 &

- Jelena Savović 1 , 2

Systematic Reviews volume 6 , Article number: 204 ( 2017 ) Cite this article


Background

Assessment of the quality of included studies is an essential component of any systematic review. A formal quality assessment is facilitated by using a structured tool. There are currently no guidelines available for researchers wanting to develop a new quality assessment tool.

Methods

This paper provides a framework for developing quality assessment tools based on our experiences of developing a variety of quality assessment tools for studies of differing designs over the last 14 years. We have also drawn on experience from the work of the EQUATOR Network in producing guidance for developing reporting guidelines.

Results

We do not recommend a single ‘best’ approach. Instead, we provide a general framework with suggestions as to how the different stages can be approached. Our proposed framework is based around three key stages: initial steps, tool development and dissemination.

Conclusions

We recommend that anyone who would like to develop a new quality assessment tool follow the stages outlined in this paper. We hope that our proposed framework will increase the number of tools developed using robust methods.


Systematic reviews are generally considered to provide the most reliable form of evidence for decision makers [ 1 ]. A formal assessment of the quality of the included studies is an essential component of any systematic review [ 2 , 3 ]. Quality can be considered to have three components—internal validity (risk of bias), external validity (applicability/variability) and reporting quality. The quality of included studies depends on them being sufficiently well designed and conducted to be able to provide reliable results [ 4 ]. Poor design, conduct or analysis can introduce bias or systematic error affecting study results and conclusions—this is also known as internal validity. External validity or the applicability of the study to the review question is also an important component of study quality. Reporting quality relates to how well the study is reported—it is difficult to assess other components of study quality if the study is not reported with the appropriate level of detail.

When conducting a systematic review, stronger conclusions can be derived from studies at low risk of bias, rather than when evidence is based on studies with serious methodological flaws. Formal quality assessment as part of a systematic review, therefore, provides an indication of the strength of the evidence on which conclusions are based and allows comparisons between studies based on risk of bias [ 3 ]. The GRADE system for rating the overall quality of the evidence included in a systematic review is recommended by many guidelines and systematic review organisations such as National Institute for Health and Care Excellence (NICE) and Cochrane. Risk of bias is a key component of this along with publication bias, imprecision, inconsistency, indirectness and magnitude of effect [ 5 , 6 ].

A formal quality assessment is facilitated by using a structured tool. Although it is possible for reviewers to simply assess what they consider to be key components of quality, this may result in important sources of bias being omitted, inappropriate items included or too much emphasis being given to particular items guided by reviewers’ subjective opinions. In contrast, a structured tool provides a convenient standardised way to assess quality providing consistency across reviews. Robust tools are usually developed based on empirical evidence refined by expert consensus.

This paper provides a framework for developing quality assessment tools. We use the term ‘quality assessment tool’ to refer to any tool designed to target one or more aspects of the quality of a research study. This term can apply to any tool, whether it focuses specifically on one aspect of study quality (usually risk of bias) or is a broader tool covering additional aspects such as applicability/generalisability and reporting quality. We do not place any restrictions on the type of ‘tool’ to which this framework can be applied; it should be appropriate for a variety of different approaches such as checklists, domain-based approaches, tables or graphics or any other format that developers may want to consider. We do not recommend a single ‘best’ approach. Instead, we provide a general framework with suggestions on how the different stages can be approached. This is based on our experience of developing quality assessment tools for studies of differing designs over the last 14 years. These include QUADAS [ 7 ] and QUADAS-2 [ 8 ] for diagnostic accuracy studies, ROBIS [ 9 ] for systematic reviews, PROBAST [ 10 ] for prediction modelling studies, ROBINS-I [ 11 ] for non-randomised studies of interventions and the new version of the Cochrane risk of bias tool for randomised trials (RoB 2.0) [ 12 ]. We have also drawn on experience from the work of the EQUATOR Network in producing guidance for developing reporting guidelines [ 13 ].

Over the years that we have been involved in the development of quality assessment tools and through involvement in different development processes, we noticed that the methods used to develop each tool could be mapped to a similar underlying process. The proposed framework evolved through discussion among the team, describing the steps involved in developing the different tools, and then grouping these into appropriate headings and stages.

Results: Proposed framework

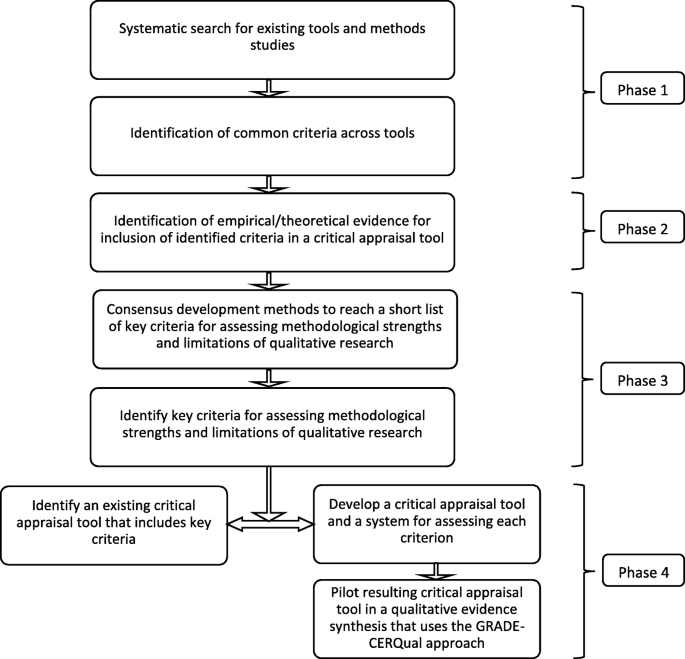

Figure 1 and Table 1 outline the proposed steps in our framework, grouped into three stages. The table also includes examples of how each step was approached for the tools that we have been involved in developing. Each step is discussed in detail below.

Overview of proposed framework

Stage 1: initial steps

Identify the need for a new tool

The first step in developing a new quality assessment (QA) tool is to identify the need for a new tool: What is the rationale for developing the new tool? In their guidance on developing reporting guidelines, Moher et al. [ 13 ] stated that “developing a reporting guideline is complex and time consuming, so a compelling rationale is needed”. The same applies to the development of QA tools. Possible reasons include the following: there is no existing QA tool for the specific study design of interest; a QA tool is available but is not directly targeted to the specific context required (e.g. tools designed for clinical interventions may not be appropriate for public health interventions); existing tools are not up to date; new evidence on particular sources of bias has emerged that is not adequately addressed by existing tools; or new approaches to quality assessment mean that a new tool is needed. For example, QUADAS-2 and RoB 2.0 were developed as experience, anecdotal reports, and feedback suggested areas for improvement of the original QUADAS and Cochrane risk of bias tools [ 7 ]. ROBIS was developed as we felt there was no tool that specifically addressed risk of bias in systematic reviews [ 9 ].

It is important to consider whether a completely new tool is needed or whether it may be possible to modify or adapt an existing tool. If modifying an existing tool, then the original can act as a starting point, although in practice, the new tool may look very different from the original. Both QUADAS-2 [ 8 ] and the new Cochrane risk of bias tool used the original versions of these tools as a starting point [ 12 ].

Obtain funding for the tool development

There are costs involved in developing a new QA tool. These will vary depending on the approach taken but items that may need to be funded include researcher time, literature searching, travel and subsistence for attending meetings, face-to-face meetings, piloting the tool, online survey software, open access publication costs, website fees and conference attendance for dissemination. We have used different approaches to fund the development of quality assessment tools. QUADAS-2 [ 8 ] was funded by the UK Medical Research Council Methodology Programme as part of a larger project grant. ROBIS [ 9 ], ROBINS-I [ 11 ] and Cochrane RoB 2.0 [ 12 ] were funded through smaller project-specific grants, and PROBAST [ 10 ] received no specific funding. Instead, the host institutions for each steering group member allowed them time to work on the project and covered travel and subsistence for regular steering group meetings and conference attendance. Freely available SurveyMonkey software ( www.surveymonkey.co.uk ) was used to run an online Delphi process.

Assemble team

Assembling a team with the appropriate expertise is a key step in developing a quality assessment tool. As tool development usually relies on expert consensus, it is essential that the team includes people with an appropriate range of expertise. This generally includes methodologists with expertise in the study designs targeted by the tool, people with expertise in QA tool development and also end users, i.e. reviewers who will be using the tool. Reviewers are a group that may sometimes be overlooked but are essential to ensure that the final tool is usable by those for whom it is developed. If the tool is likely to be used in different content areas, then it is important to include reviewers who will be using the tool in all contexts. For example, ROBIS is targeted at different types of systematic reviews including reviews of interventions, diagnostic accuracy, aetiology and prognosis. We included team members who were familiar with all different types of review to ensure that the team included the appropriate expertise to develop the tool. It can also be helpful to include reviewers with a range of expertise from those new to quality assessment to more experienced reviewers. Including representatives from a wide range of organisations can also be helpful for the future uptake and dissemination of the tool. Thinking about this at an early stage is helpful. The more organisations that are involved in the development of the tool, the more likely these organisations are to feel some ownership of the tool and to want to implement the tool within their organisation in the future. The total number of people involved in tool development varies. For our tools, the number of people involved directly in the development of each tool ranged from 27 to 51 with a median of 40.

Manage the project

The size and the structure of the project team also need to be carefully considered. In order to cover an appropriate range of expertise, it is generally necessary to include a relatively large group of people. It may not be practical for such a large group to be involved in the day-to-day development of the tool, and so it may be desirable to have a smaller group responsible for driving the project by leading and coordinating all activities, and involving the larger group where their input is required. For example, when developing QUADAS-2 and PROBAST, a steering group of around 6–8 people led the development of the tool, bringing in a larger consensus group to help inform decisions on the scope and content of the tool. For ROBINS-I and Cochrane RoB 2.0, a smaller steering group led the development with domain-based working groups developing specific areas of the tool.

Define the scope

The scope of the quality assessment tool needs to be defined at an early stage. Table 2 outlines key questions to consider when defining the scope. Tools generally target one specific type of study. The specific study design to be considered is one of the first components to define. For example, QUADAS-2 [ 8 ] focused on diagnostic accuracy studies, PROBAST [ 10 ] on prediction modelling studies and the Cochrane Risk of Bias tool on randomised trials. Some tools may be broader, targeted at multiple related designs. For example, ROBINS-I targets all non-randomised studies of interventions rather than one single study design such as cohort studies. When deciding on the focus of the tool, it is important to clearly define the design and topic areas targeted. Trade-offs of different approaches need consideration. A more focused tool can be tailored to a specific topic area. A broader tool may not be as specific but can be used to assess a wider variety of studies. For example, we developed ROBIS to be used to assess any type of systematic review, e.g. intervention, prognostic, diagnostic or aetiology. Previous tools, such as the AMSTAR tool, were developed to assess reviews of RCTs [ 14 ]. Key to any quality assessment tool is a definition of quality as addressed by the tool, i.e. defining what exactly the tool is trying to address. We have found that once the definition of quality has been clearly agreed, then it becomes much easier to decide on which items to include in the tool.

Other features to consider include whether to address both internal (risk of bias) and external validity (applicability) and the structure of the tool. The original QUADAS tool used a simple checklist design and combined items on risk of bias, reporting quality and applicability. Our more recently developed tools have followed a domain-based approach with a clear focus on assessment of risk of bias. Many of these domain-based tools also include sections covering applicability/relevance. How to rate individual items included in the tool also forms part of the scope. The original QUADAS tool [ 7 ] used a simple ‘yes, no or unclear’ rating for each question. The domain-based tools such as QUADAS-2 [ 8 ], ROBIS [ 9 ] and PROBAST [ 10 ] have signalling questions which flag the potential for bias. These are generally factual questions and can be answered as ‘yes, no or no information’. Some tools include a ‘probably yes’ or ‘probably no’ response to help reviewers answer these questions when there is not sufficient information for a more definite response. The overall domain ratings then use judgements such as ‘high, low or unclear’ risk of bias. Some tools, such as ROBINS-I [ 11 ] and RoB 2.0 [ 12 ], include additional domain-level ratings such as ‘critical, severe, moderate or low’ and ‘low, some concerns, high’. We strongly recommend that at this stage, tool developers are explicit that quality scores should not be incorporated into the tools. Numerical summary quality scores have been shown to be poor indicators of study quality, and so, alternatives to their use should be encouraged [ 15 , 16 ]. When developing many of our tools, we were explicit at the scope stage that we wanted to come up with an overall assessment of study quality but avoid the use of quality scores. One of the reasons for introducing the domain-level structure first used with the QUADAS-2 tool was explicitly to prevent users from calculating quality scores by simply summing the number of items fulfilled.
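To make the domain-based structure concrete, the following is a minimal Python sketch of how signalling questions and domain-level judgements might be represented. It is not taken from any of the tools discussed here: the class names, the example domains and, in particular, the rule in `suggested_risk` are illustrative assumptions. In practice, the domain judgement is made by the reviewer, informed by the signalling questions. The sketch reports a judgement for each domain and deliberately provides no numerical summary score.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Answer(Enum):
    """Possible responses to a signalling question."""
    YES = "yes"
    PROBABLY_YES = "probably yes"
    PROBABLY_NO = "probably no"
    NO = "no"
    NO_INFORMATION = "no information"


class Risk(Enum):
    """Domain-level risk of bias judgement."""
    LOW = "low"
    HIGH = "high"
    UNCLEAR = "unclear"


@dataclass
class Domain:
    name: str
    # Maps each signalling question to the reviewer's answer.
    answers: Dict[str, Answer] = field(default_factory=dict)

    def suggested_risk(self) -> Risk:
        """Hypothetical rule: a starting point for the reviewer, not a verdict.

        Assumes questions are phrased so that 'yes' indicates low risk.
        """
        values = list(self.answers.values())
        if any(a in (Answer.NO, Answer.PROBABLY_NO) for a in values):
            return Risk.HIGH
        if not values or Answer.NO_INFORMATION in values:
            return Risk.UNCLEAR
        return Risk.LOW


def summarise(domains: List[Domain]) -> Dict[str, str]:
    """Report one judgement per domain; no items are summed into a score."""
    return {d.name: d.suggested_risk().value for d in domains}


# Made-up example data for a single study.
example = [
    Domain("participant selection", {"q1": Answer.YES, "q2": Answer.PROBABLY_YES}),
    Domain("index test", {"q1": Answer.YES, "q2": Answer.NO}),
    Domain("reference standard", {"q1": Answer.NO_INFORMATION}),
]
print(summarise(example))
```

Keeping the output as a mapping from domain to judgement, rather than a single number, mirrors the recommendation above to avoid quality scores.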

Agreeing the scope of the tool may not be straightforward and can require much discussion between team members. An additional consideration is how decisions on scope will be made. Will they be made by a single person or by the steering group, and should some or all decisions be agreed by the larger group? The approach that we have often taken is for a smaller group (e.g. steering group) to propose the scope of the tool with the agreement reached following consultation with the larger group. Questions on the scope can often form the first discussion points at a face-to-face meeting (e.g. ROBIS [ 9 ] and QUADAS-2 [ 8 ]) or the first questions on a web-based survey (e.g. PROBAST [ 10 ]).

As with any research project, a protocol that clearly defines the scope and proposed plans for the development of the tool should be produced at an early stage of the tool development process.

Stage 2: tool development

Generate initial list of items for inclusion

The starting point for a tool is an initial list of items to consider for inclusion. There are various ways in which this list can be generated. These include looking at existing tools, evidence reviews and expert knowledge. The most comprehensive way is to review the literature for potential sources of bias and to provide a systematic review summarising the evidence for the effects of these. This is the approach we took for the original QUADAS tool [ 7 ] and also the updated QUADAS-2 [ 8 , 17 , 18 ]. Reviewing the items included in existing tools and summarising the number of tools that included each potential item can be a useful initial step as it shows which potential items of bias have been considered as important by previous tool developers. This process was followed for the original QUADAS tool [ 7 ] and for ROBIS [ 9 ]. Examining how previous systematic reviews have incorporated quality into their results can also be helpful to provide an indication of the requirements of a QA tool. If you are updating a previous QA tool then this will often form the starting point for potential items to include in the updated tool. This was the case for QUADAS-2 [ 8 ] and the RoB 2.0 [ 12 ]. For ROBINS-I [ 11 ], domains were agreed at a consensus meeting, and then expert working groups identified potential items to include in each domain. Generating the list of items for inclusion was, therefore, based on expert consensus rather than reviewing existing evidence. This can also be a valid approach. The development of PROBAST used a combined approach of using an existing tool for a related area as the starting point (QUADAS-2), non-systematic literature reviews and expert input from both steering group members and wider PROBAST group [ 10 ].
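The step of summarising how many existing tools include each potential item can be sketched in a few lines of Python. This is an illustration only: the tool names and items below are invented, and the function name `tally_items` is our own.

```python
from collections import Counter
from typing import Dict, List, Tuple


def tally_items(tools: Dict[str, List[str]]) -> List[Tuple[str, int]]:
    """Count how many tools include each item, most frequent first."""
    counts: Counter = Counter()
    for items in tools.values():
        # Use a set so an item listed twice in one tool is counted once.
        counts.update(set(items))
    # Sort by descending count, then alphabetically for a stable order.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical existing tools and the items each one covers.
existing_tools = {
    "Tool A": ["blinding", "participant selection", "verification"],
    "Tool B": ["blinding", "verification", "withdrawals"],
    "Tool C": ["blinding", "participant selection"],
}

for item, n_tools in tally_items(existing_tools):
    print(f"{item}: included in {n_tools} of {len(existing_tools)} tools")
```

Such a tally only shows which items earlier developers considered important. It does not show whether there is evidence that an item is associated with bias, which is why the evidence review described above remains the more comprehensive approach.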

Agree initial items and scope

After the initial stages of tool development which can often be performed by a smaller group, input from the larger group should be sought. Methods for gaining input from the larger group include holding a face-to-face meeting or a web-based survey. At this stage, the scope defined in step 1.5 can be brought to the larger group for further discussion and refinement. The initial list of items needs to be further refined until agreement is reached on which items should be included in an initial draft of the tool. If a face-to-face meeting is held, smaller break-out groups focussing on specific domains can be a helpful structure to the meeting. QUADAS-2, ROBIS and ROBINS-I all involved face-to-face meetings with smaller break-out groups early in the development process [ 8 , 9 , 11 ]. If moving straight to a web-based survey, then respondents can be asked about the scope with initial questions considering possible items to include. This approach was taken for PROBAST [ 10 ] and the original QUADAS tool [ 7 ]. For PROBAST, we also asked group members to provide supporting evidence for why items should be included in the tool [ 10 ]. Items should be turned into potential questions/signalling questions for inclusion in the tool at this relatively early stage in the development of the tool.

Produce first draft of tool and develop guidance

Following the face-to-face meeting or initial survey rounds, a first draft of the tool can be produced. The initial draft may be produced by a smaller group (e.g. steering group), single person, or by taking a domain-based approach with the larger group split into groups with each taking responsibility for single domains. For QUADAS-2 [ 8 ] and PROBAST [ 10 ], a single person developed the first draft which was then agreed by the steering group before moving forwards. The first draft of ROBIS was developed following the face-to-face meeting by two team members. Initial drafts of ROBINS-I [ 11 ] and the RoB 2.0 [ 12 ] were produced by teams working on single domains proposing initial versions for their domains. Drafts for each domain were then put together by the steering group to give a first draft of the tool. Once a first draft of the tool is available, it may be helpful to start producing a clear guidance document describing how to assess each of the items included in the tool. The earlier such a guide can be produced, the more opportunity there will be to pilot and refine it alongside the tool.

Pilot and refine

The first draft of the tool needs to go through a process of refinement until a final version that has agreement of the wider group is achieved. Consensus may be achieved in various ways. Online surveys consisting of multiple rounds until agreement on the final tool is reached are a good way of involving large numbers of experts in this process. This is the approach used for QUADAS [ 7 ], QUADAS-2 [ 8 ], ROBIS [ 9 ] and PROBAST [ 10 ]. If domain-based working groups were adopted for the initial development of the tool, these can also be used to finalise the tool. Members of the full group can then provide feedback on draft versions, including domains that they were not initially assigned to. This approach was used for ROBINS-I and RoB 2.0. It would also be feasible to combine such an approach with a web-based survey.
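As a rough sketch of how responses from one survey round might be processed, the Python function below separates items that have reached agreement from those that need to go forward to a further round. The 75% agreement threshold, the function name and the example votes are assumptions made for illustration; the threshold used in any real consensus process would need to be agreed in advance by the development team.

```python
from typing import Dict, List, Tuple


def split_by_consensus(
    votes: Dict[str, List[bool]], threshold: float = 0.75
) -> Tuple[List[str], List[str]]:
    """Split items into those agreed for inclusion and those to re-survey.

    votes maps each candidate item to the respondents' votes
    (True = include in the tool). The threshold is a hypothetical default.
    """
    agreed: List[str] = []
    next_round: List[str] = []
    for item, responses in votes.items():
        # Share of respondents in favour; no responses means no consensus.
        share = sum(responses) / len(responses) if responses else 0.0
        if share >= threshold:
            agreed.append(item)
        else:
            next_round.append(item)
    return agreed, next_round


# Made-up votes from four respondents in one round.
round_one = {
    "item 1": [True, True, True, True],
    "item 2": [True, True, True, False],
    "item 3": [True, False, False, True],
}
agreed, next_round = split_by_consensus(round_one)
print("Agreed:", agreed)
print("Carry forward:", next_round)
```

Items carried forward would normally be revised in light of respondents' comments before the next round, rather than simply re-surveyed unchanged.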

Whilst the tool is being refined, initial piloting work can be undertaken. If a guidance document has been produced, then it can be included in the piloting process. If the tool is available in different formats, for example paper-based or Access database, then these could also be made available and tested as part of the piloting. The research team may ask reviewers working on appropriate review topics to pilot the tool in their review. Alternatively, reviewers can be asked to pilot the tool on a series of sample papers and to provide feedback on their experience of using the tool. An efficient way of completing such a process is to hold a piloting event where reviewers try out the tool on a sample of papers which they can either bring with them or that are provided to them. This can be a good approach to get feedback in a timely and interactive manner. However, there are costs associated with running such an event. Asking reviewers to pilot the tool in ongoing reviews can result in delays as piloting cannot be started until the review is at the data extraction stage. Identifying reviews at an appropriate stage with reviewers willing to spend the extra time needed to pilot a new tool is not always straightforward. We held a piloting event when developing the RoB 2.0 and found this to be very efficient in providing immediate feedback on the tool. We were also able to hold a group discussion for reviewers to provide suggestions for improvements to the tool and to highlight any items that they found difficult. For previous tools, we used remote piloting which provided helpful feedback but was not as efficient as the piloting event. Ideally, any piloting process should involve reviewers with a broad range of experience ranging from those with extensive experience of conducting quality assessment of studies of a variety of designs to those relatively new to the process.

The time taken for piloting and refining the tool can vary considerably. For some tools, such as ROBIS and QUADAS-2, this process was completed in around 6–9 months. For PROBAST and ROBINS-I, the process took over 4 years.

Stage 3: dissemination

Develop a publication strategy

A strategy to disseminate the tool is required. This should be discussed at the start of the project but may evolve as the tool is developed. The primary means of dissemination is usually through publication in a peer-reviewed journal. A more detailed guidance document can accompany the publication and be made available as a web appendix. Another option is to have dual publications, one reporting the tool and outlining how it was developed, and a second providing additional guidance on how to use the tool. This is sometimes known as an ‘E&E’ (explanation and elaboration) publication and is an approach adopted by many reporting guidelines [ 13 ].

Establish a website

Developing a website for the tool can help with dissemination. Ideally, the website should be developed before publication of the tool so that details can be included in the publication. The final version of the tool can be posted on the website together with the full guidance document. Details on who contributed to the tool development and any funding should also be acknowledged on the website. Additional resources to help reviewers use the tool can also be posted there. For example, the ROBIS ( www.robis-tool.info ) and QUADAS ( www.quadas.org ) websites both contain Microsoft Access databases that reviewers can use to complete their assessment and templates to produce graphical and tabular displays. They also contain links to other relevant resources and details of training opportunities. Other resources that may be useful to include on tool websites include worked examples and translations of the tools, where available. QUADAS-2 has been translated into Italian and Japanese, and these translations can be accessed via its website. If the tool has been endorsed or recommended for use by particular organisations (e.g. Cochrane, UK National Institute for Health and Care Excellence (NICE)), then this could also be included on the website.

The website is also a helpful way to encourage comments about the tool, which can lead to its further improvement, and exchange of experiences with the tool implementation.

Encourage uptake of tool by leading organisations

Encouraging organisations, both national and international, to recommend the tool for use in their systematic reviews is a very effective means of making sure that, once developed, the tool is used. There are different ways this can be achieved. Involving representatives from a wide range of organisations as part of the development team may mean that they are more likely to recommend the use of the tool in their organisations. Presentations at conferences, for example the Cochrane Colloquium or Health Technology Assessment Conference, may increase knowledge of the tool within that organisation making it more likely that the tool may be recommended for use. Running workshops on the tool for organisations can help increase familiarity and usability of the tool. These can also provide helpful feedback for what to include in guidance documents and to inform future updates of the tool. For example, we have been running workshops on QUADAS and ROBIS within Cochrane for a number of years. We have also provided training to institutions such as NICE on how to use the tools. QUADAS is now recommended by both these organisations, among many others, for use in diagnostic accuracy reviews. We have also run workshops on ROBIS, PROBAST, ROBINS-I and RoB 2.0 at the annual Cochrane Colloquium. We were recently approached by the Estonian Health Insurance Fund with a request to provide training to some of their reviewers so that they could implement ROBIS within their guideline development process. We supported this by running a specific training session for them.

Ultimately, the best way to encourage tool uptake is to make sure that the tool was developed robustly and fills a gap where there is currently no existing tool or there are limitations with existing tools. Ensuring that the tool is widely disseminated also means that the tool is more likely to be used and recommended.

Translate tools

After the tool has been published, you may receive requests to translate the tool. Translation can help to disseminate the tool and encourage its use in a much broader range of countries. Tool translations, therefore, should be encouraged but it is important to reassure yourself that the translation has been completed appropriately. One method to do this is via back translation.

In this paper, we suggest a framework for developing quality assessment tools. The framework consists of three stages: (1) initial steps, (2) tool development and (3) dissemination. Each stage includes defined steps that we consider important to follow when developing a tool; there is some flexibility on how these stages may be approached. In developing this framework, we have drawn on our extensive experience of developing quality assessment tools. Despite having used different approaches to the development of each of these tools, we found that all approaches shared common features and processes. This led to the development of the framework. We recommend that anyone who would like to develop a new quality assessment tool follow the stages outlined in this paper.

When developing a new tool, you need to decide how to approach each of the proposed stages. We have given some examples of how to do this, but other approaches may also be valid. Factors that may influence how you choose to approach the development of your tool include available funding, topic area, number and range of people to involve, target audience and tool complexity. For example, holding face-to-face meetings and running piloting events incur greater costs than web-based surveys or asking reviewers to pilot the tool at their own convenience. More complex tools may take longer, require additional expertise, and require more piloting and refinement.

We are not aware of any existing guidance on how to develop QA tools. Moher and colleagues have produced guidance on how to develop reporting guidelines [ 13 ]. This guidance has been cited over 190 times, mainly by new reporting guidelines, suggesting that many reporting guideline developers have found a structured approach helpful. In the absence of guidance specifically for the development of QA tools, we also based our development of QUADAS-2 [ 8 ] and ROBIS [ 9 ] on the guidance for developing reporting guidelines. Although many of the steps proposed by Moher et al. apply to the development of QA tools, there are areas where these are not directly relevant and where specific guidance on developing QA tools would be helpful.

There are a very large number of quality assessment tools available. When developing ROBIS and QUADAS, we conducted reviews of existing quality assessment tools. These identified 40 tools to assess the quality of systematic reviews [ 19 ] and 91 tools to assess the quality of diagnostic accuracy studies [ 20 ]. However, only three systematic review tools (7.5%) [ 19 ] and two diagnostic tools (2%) reported being rigorously developed [ 20 ]. The lack of a rigorous development process for most tools suggests a need for guidance on how to develop quality assessment tools. We hope that our proposed framework will increase the number of tools developed using robust methods.

The large number of quality assessment tools available makes it difficult for people working on systematic reviews to choose the most appropriate tool(s) for use in their reviews. Therefore, we are developing an initiative similar to the EQUATOR Network to improve the process of quality assessment in systematic reviews. This will be known as the LATITUDES Network ( www.latitudes-network.org ). LATITUDES aims to highlight and increase the use of key risk of bias assessment tools, help people to use these tools more effectively, improve incorporation of results of the risk of bias assessment into the review and to disseminate best practice in risk of bias assessment.

Murad MH, Montori VM. Synthesizing evidence: shifting the focus from individual studies to the body of evidence. JAMA. 2013;309(21):2217–8.

Centre for Reviews and Dissemination. Systematic reviews: CRD’s guidance for undertaking reviews in health care [Internet]. York: University of York; 2009. [accessed 23 Mar 2011].

Higgins JPT, Green S, editors. Cochrane handbook for systematic reviews of interventions [Internet]. Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. [accessed 23 Mar 2011].

Torgerson D, Torgerson C. Designing randomised trials in health, education and the social sciences: an introduction. New York: Palgrave MacMillan; 2008.

Guyatt GH, Oxman AD, Vist G, Kunz R, Brozek J, Alonso-Coello P, Montori V, Akl EA, Djulbegovic B, Falck-Ytter Y, et al. GRADE guidelines: 4. Rating the quality of evidence—study limitations (risk of bias). J Clin Epidemiol. 2011;64(4):407–15.

Balshem H, Helfand M, Schunemann HJ, Oxman AD, Kunz R, Brozek J, Vist GE, Falck-Ytter Y, Meerpohl J, Norris S, et al. GRADE guidelines: 3. Rating the quality of evidence. J Clin Epidemiol. 2011;64(4):401–6.

Whiting P, Rutjes AW, Reitsma JB, Bossuyt PM, Kleijnen J. The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med Res Methodol. 2003;3:25.

Whiting PF, Rutjes AW, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, Leeflang MM, Sterne JA, Bossuyt PM. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011;155(8):529–36.

Whiting P, Savović J, Higgins JP, Caldwell DM, Reeves BC, Shea B, Davies P, Kleijnen J, Churchill R, ROBIS group. ROBIS: a new tool to assess risk of bias in systematic reviews was developed. J Clin Epidemiol. 2016;69:225–34.

Mallett S, Wolff R, Whiting P, Riley R, Westwood M, Kleijnen J, Collins G, Reitsma H, Moons K. Methods for evaluating medical tests and biomarkers. 04 prediction model study risk of bias assessment tool (PROBAST). Diagn Prognostic Res. 2017;1(1):7.

Sterne JA, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, Henry D, Altman DG, Ansari MT, Boutron I. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919.

Higgins J, Sterne J, Savović J, Page M, Hróbjartsson A, Boutron I, Reeves B, Eldridge S. A revised tool for assessing risk of bias in randomized trials. In: Chandler J, McKenzie J, Boutron I, Welch V, editors. Cochrane Methods. Cochrane Database of Systematic Reviews. 2016; Issue 10 (Suppl 1).

Moher D, Schulz KF, Simera I, Altman DG. Guidance for developers of health research reporting guidelines. PLoS Med. 2010;7(2):e1000217.

Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, Porter AC, Tugwell P, Moher D, Bouter LM. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10.

Whiting P, Harbord R, Kleijnen J. No role for quality scores in systematic reviews of diagnostic accuracy studies. BMC Med Res Methodol. 2005;5:19.

Juni P, Witschi A, Bloch R, Egger M. The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999;282(11):1054–60.

Whiting P, Rutjes AW, Reitsma JB, Glas AS, Bossuyt PM, Kleijnen J. Sources of variation and bias in studies of diagnostic accuracy: a systematic review. Ann Intern Med. 2004;140(3):189–202.

Whiting PF, Rutjes AW, Westwood ME, Mallett S, QUADAS-2 Steering Group. A systematic review classifies sources of bias and variation in diagnostic test accuracy studies. J Clin Epidemiol. 2013;66(10):1093–104.

Whiting P, Davies P, Savović J, Caldwell D, Churchill R. Evidence to inform the development of ROBIS, a new tool to assess the risk of bias in systematic reviews. 2013.

Whiting P, Rutjes AW, Dinnes J, Reitsma JB, Bossuyt PM, Kleijnen J. A systematic review finds that diagnostic reviews fail to incorporate quality despite available tools. J Clin Epidemiol. 2005;58(1):1–12.

Moons KG, Altman DG, Reitsma JB, Ioannidis JP, Macaskill P, Steyerberg EW, Vickers AJ, Ransohoff DF, Collins GS. Transparent reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med. 2015;162(1):W1–W73.

Moons KG, de Groot JA, Bouwmeester W, Vergouwe Y, Mallett S, Altman DG, Reitsma JB, Collins GS. Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS checklist. PLoS Med. 2014;11(10):e1001744.

Higgins JP, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, Savović J, Schulz KF, Weeks L, Sterne JA. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Acknowledgements

Not applicable.

The development of QUADAS-2, ROBIS and the new version of the Cochrane risk of bias tool for randomised trials (RoB 2.0) were funded by grants from the UK Medical Research Council (G0801405/1, MR/K01465X/1, MR/L004933/1- N61 and MR/K025643/1). ROBINS-I was funded by the Cochrane Methods Innovation Fund.

PW's and JS's time was partially supported by the National Institute for Health Research (NIHR) Collaboration for Leadership in Applied Health Research and Care (CLAHRC) West at University Hospitals Bristol NHS Foundation Trust. SM received support from the NIHR Birmingham Biomedical Research Centre.

The views expressed in this article are those of the authors and not necessarily those of the NHS, NIHR, MRC and Cochrane or the Department of Health. The funders had no role in the design of the study, data collection and analysis, decision to publish or preparation of the manuscript.

Availability of data and materials

Author information, authors and affiliations.

NIHR CLAHRC West, University Hospitals Bristol NHS Foundation Trust, Bristol, UK

Penny Whiting & Jelena Savović

School of Social and Community Medicine, University of Bristol, Bristol, UK

Kleijnen Systematic Reviews Ltd., Escrick, York, UK

Robert Wolff

Institute of Applied Health Research, University of Birmingham, Birmingham, UK

Susan Mallett

National Institute for Health Research (NIHR) Birmingham Biomedical Research Centre, Birmingham, UK

Centre for Tropical Medicine and Global Health, University of Oxford, Oxford, UK

Iveta Simera

Contributions

PW conceived the idea for this paper and drafted the manuscript. JS, IS, RW and SM contributed to the writing of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Penny Whiting.

Ethics declarations

Ethics approval and consent to participate, consent for publication, competing interests.

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article.

Whiting, P., Wolff, R., Mallett, S. et al. A proposed framework for developing quality assessment tools. Syst Rev 6, 204 (2017). https://doi.org/10.1186/s13643-017-0604-6

Received: 11 July 2017

Accepted: 04 October 2017

Published: 17 October 2017

DOI: https://doi.org/10.1186/s13643-017-0604-6

- Risk of bias

- Systematic reviews

Systematic Reviews

ISSN: 2046-4053

- Research article

- Open access

- Published: 04 June 2019

Systematic mapping of existing tools to appraise methodological strengths and limitations of qualitative research: first stage in the development of the CAMELOT tool

- Heather Menzies Munthe-Kaas 1 ,

- Claire Glenton 1 ,

- Andrew Booth 2 ,

- Jane Noyes 3 &

- Simon Lewin 1 , 4

BMC Medical Research Methodology volume 19, Article number: 113 (2019)

Qualitative evidence synthesis is increasingly used alongside reviews of effectiveness to inform guidelines and other decisions. To support this use, the GRADE-CERQual approach was developed to assess and communicate the confidence we have in findings from reviews of qualitative research. One component of this approach requires an appraisal of the methodological limitations of studies contributing data to a review finding. Diverse critical appraisal tools for qualitative research are currently being used. However, it is unclear which tool is most appropriate for informing a GRADE-CERQual assessment of confidence.

Methodology

We searched for tools that were explicitly intended for critically appraising the methodological quality of qualitative research. We searched the reference lists of existing methodological reviews for critical appraisal tools, and also conducted a systematic search in June 2016 for tools published in health science and social science databases. Two reviewers screened identified titles and abstracts, and then screened the full text of potentially relevant articles. One reviewer extracted data from each article and a second reviewer checked the extraction. We used a best-fit framework synthesis approach to code checklist criteria from each identified tool and to organise these into themes.

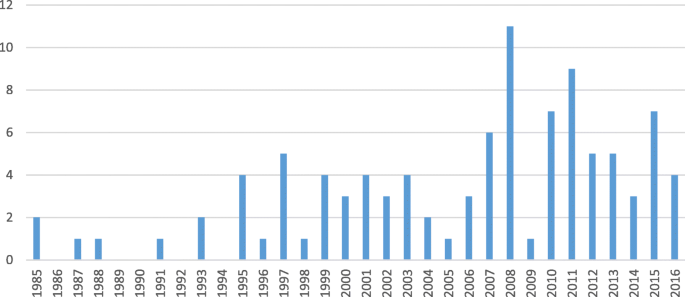

We identified 102 critical appraisal tools: 71 tools had previously been included in methodological reviews, and 31 tools were identified from our systematic search. Almost half of the tools were published after 2010. Few authors described how their tool was developed, or why a new tool was needed. After coding all criteria, we developed a framework that included 22 themes. None of the tools included all 22 themes. Some themes were included in up to 95 of the tools.

It is problematic that researchers continue to develop new tools without adequately examining the many tools that already exist. Furthermore, the plethora of tools, old and new, indicates a lack of consensus regarding the best tool to use, and an absence of empirical evidence about the most important criteria for assessing the methodological limitations of qualitative research, including in the context of use with GRADE-CERQual.

Qualitative evidence syntheses (also called systematic reviews of qualitative evidence) are becoming increasingly common and are used for diverse purposes [ 1 ]. One such purpose is their use, alongside reviews of effectiveness, to inform guidelines and other decisions, with the first Cochrane qualitative evidence synthesis published in 2013 [ 2 ]. However, there are challenges in using qualitative synthesis findings to inform decision making because methods to assess how much confidence to place in these findings are poorly developed [ 3 ]. The ‘Confidence in the Evidence from Reviews of Qualitative research’ (GRADE-CERQual) approach aims to transparently and systematically assess how much confidence to place in individual findings from qualitative evidence syntheses [ 3 ]. Confidence here is defined as “an assessment of the extent to which the review finding is a reasonable representation of the phenomenon of interest” ([ 3 ] p.5). GRADE-CERQual draws on the conceptual approach used by the GRADE tool for assessing certainty in evidence from systematic reviews of effectiveness [ 4 ]. However, GRADE-CERQual is designed specifically for findings from qualitative evidence syntheses and is informed by the principles and methods of qualitative research [ 3 , 5 ].

The GRADE-CERQual approach bases its assessment of confidence on four components: the methodological limitations of the individual studies contributing to a review finding; the adequacy of data supporting a review finding; the coherence of each review finding; and the relevance of a review finding [ 5 ]. In order to assess the methodological limitations of the studies contributing data to a review finding, a critical appraisal tool is necessary. Critical appraisal tools “provide analytical evaluations of the quality of the study, in particular the methods applied to minimise biases in a research project” [ 6 ]. Debate continues over whether or not one should critically appraise qualitative research [ 7 , 8 , 9 , 10 , 11 , 12 , 13 , 14 , 15 ]. Arguments against using criteria to appraise qualitative research have centred on the idea that “research paradigms in the qualitative tradition are philosophically based on relativism, which is fundamentally at odds with the purpose of criteria to help establish ‘truth’” [ 16 ]. The starting point in this paper, however, is that it is both possible and desirable to establish a set of criteria for critically appraising the methodological strengths and limitations of qualitative research. End users of findings from primary qualitative research and from syntheses of qualitative research often make judgments regarding the quality of the research they are reading, and this is often done in an ad hoc manner [ 3 ]. Within a decision-making context, such as formulating clinical guideline recommendations, the implicit nature of such judgements limits the ability of other users to understand or critique these judgements. A set of criteria to appraise methodological limitations allows such judgements to be conducted, and presented, in a more systematic and transparent manner. We understand and accept that these judgements are likely to differ between end users – explicit criteria help to make these differences more transparent.
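
To illustrate how explicit criteria can make judgements easier to inspect, the four components named above could be recorded in a simple structured form. The following is only a minimal sketch and is not part of GRADE-CERQual itself: the class name `FindingAssessment`, its fields and the `undocumented_components` helper are hypothetical, and the finding and notes are invented placeholders.

```python
from dataclasses import dataclass, field

# Component names follow the paragraph above; everything else is illustrative.
COMPONENTS = (
    "methodological limitations",
    "adequacy",
    "coherence",
    "relevance",
)


@dataclass
class FindingAssessment:
    """Hypothetical record of the judgements made for one review finding."""

    finding: str
    # Maps a component name to the reviewer's free-text explanation.
    judgements: dict = field(default_factory=dict)

    def record(self, component: str, explanation: str) -> None:
        """Store an explicit judgement for one of the four components."""
        if component not in COMPONENTS:
            raise ValueError(f"unknown component: {component}")
        self.judgements[component] = explanation

    def undocumented_components(self) -> list:
        """Return the components that still lack an explicit judgement."""
        return [c for c in COMPONENTS if not self.judgements.get(c)]


assessment = FindingAssessment(finding="Placeholder review finding")
assessment.record("coherence", "Placeholder note on fit between data and finding")
print(assessment.undocumented_components())
```

Keeping the explanation as free text, rather than a score, is a deliberate choice in this sketch: it records why a judgement was made, so that other users can see where their own judgement would differ.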

The terms “qualitative research” and “qualitative evidence synthesis” refer to an ever-growing multitude of research and synthesis methods [ 17 , 18 , 19 , 20 ]. Thus far, the GRADE-CERQual approach has mostly been applied to syntheses producing a primarily descriptive rather than theoretical type of finding [ 5 ]. Consequently, it is primarily this descriptive standpoint from which the analysis presented in the current paper is conducted. The authors acknowledge, however, the potential need for different criteria when appraising the methodological strengths and limitations of different types of primary qualitative research. While accepting that there is probably no universal set of critical appraisal criteria for qualitative research, we maintain that some general principles of good practice by which qualitative research should be conducted do exist. We hope that our work in this area, and the work of others, will help us to develop a better understanding of this important area.

In health science environments, there is now widespread acceptance of the use of tools to critically appraise individual studies, and as Hannes and Macaitis have observed, “it becomes more important to shift the academic debate from whether or not to make an appraisal to what criteria to use” [ 21 ]. This shift is paramount because a plethora of critical appraisal tools and checklists [ 22 , 23 , 24 ] exists and yet there is little, if any, agreement on the best approach for assessing the methodological limitations of qualitative studies [ 25 ]. To the best of our knowledge, few tools have been designed for appraising qualitative studies in the context of qualitative synthesis [ 26 , 27 ]. Furthermore, there is a paucity of tools designed to critically appraise qualitative research to inform a practical decision or recommendation, as opposed to critical appraisal as an academic exercise by researchers or students.

In the absence of consensus, the Cochrane Qualitative & Implementation Methods Group (QIMG) provide a set of criteria that can be used to select an appraisal tool, noting that review authors can potentially apply critical appraisal tools specific to the methods used in the studies being assessed, and that the chosen critical appraisal tool should focus on methodological strengths and limitations (and not reporting standards) [ 11 ]. A recent review of qualitative evidence syntheses found that the majority of identified syntheses (92%; 133/145) reported appraising the quality of included studies. However, a wide range of tools were used (30 different tools) and some reviews reported using multiple critical appraisal tools [ 28 ]. So far, authors of Cochrane qualitative evidence syntheses have adopted different approaches, including adapting existing appraisal tools and using tools that are familiar to the review team.
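
The proportion quoted above (92%; 133/145) can be checked directly from the two counts. This is a minimal sketch: the function name `percent` is hypothetical, and only the counts 133 and 145 come from the text.

```python
def percent(numerator: int, denominator: int) -> float:
    """Return numerator/denominator expressed as a percentage."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * numerator / denominator


# Counts as reported in the text: 133 of 145 syntheses appraised study quality.
share = percent(133, 145)
print(f"{share:.1f}% (rounds to {round(share)}%)")
```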