
One of my student researchers is likely falsifying some results/data. What are the consequences?

Relevant info and background:

I'm an engineering Post Doc at an American university. One of my roles is to basically function as a 'project manager' for a couple projects that have a number of Graduate-level RAs working on them. I have a good relationship with all the RAs and all are hard-working.

I'm convinced that one of the graduate RAs is falsifying computational results/data (also called "rigging data" by many) in some cases. Note that the individual appears to be doing this for only some cases, not all. I have many reasons to believe this, but here are a few: (1) inability to replicate various results, (2) finishing the work at a pace I think is not feasible, (3) finishing his work at home where he surely does not have the software environment to actually complete the work. There are also other reasons I believe this to be the case, but you get the point. I'm also convinced this has occurred for over 1 semester, so I probably need to report this since I am responsible for overseeing all the work. However, the student in general is a good person and hard worker. He has passed the preliminary exams and is finished with all classes - I'd hate to see him expelled from the university since he's this far into the program.

I have some questions:

What do you think could be the maximum punishment for this grad student/researcher? I'd feel terrible if it resulted in expulsion. I would think that you would have to receive at least one warning from the university before an expulsion, except in very extreme cases. I'd be fine if this resulted in suspension, and even losing funding, but for anything more I'd feel bad. What is the standard maximum punishment for these cases? Also, what is the most likely punishment?

What is the punishment for me if I don't report this problem? For instance, say I just pretended ignorance. It is extremely unlikely I would do this, but it's worth asking.

How common is this? I would think this happens once in a while - a grad student decides to be lazy and fabricate a small portion of the overall results to avoid working the weekend or something. An experienced professional would know this is seriously wrong, but not necessarily a mid-level PhD student.

Any advice from people with experience in this (professors, grad students, principal investigators, etc.) would be great.

- supervision

- research-misconduct

- 84 This is a very serious issue. If you are confident the student is falsifying data, you have an ethical and professional obligation to report them, even if it ruins their career. Frankly, if they are falsifying data, the worst possible thing would be for them to continue into an academic career: at some point, their deception will be found out, and then all of their work since they received their degree will be discredited and they will probably be permanently ostracized from the academic community. The consequences they would receive if reported now, however severe, would be less damaging. – Kevin Commented Jun 1, 2016 at 23:13

- 21 ...Thinking about your question some more, though, it looks to me like while you have good reason to be concerned about the student's behavior, you don't have strong proof that the student is in fact falsifying data. I would move forward cautiously. I'm not very experienced (I only have my terminal masters degree), so I'm not entirely sure what to recommend doing. Perhaps you could meet with a more experienced researcher in your lab to discuss your concerns and get advice on what steps forward you should take? – Kevin Commented Jun 2, 2016 at 0:38

- 76 The "finishing his work at home" thing doesn't read as very strong evidence to me, unless there's more to the situation that's not apparent. Lots of work can be done by remote access, using tools like SSH, remote desktop, VNC, LogMeIn, etc. I even know physical laboratory experimentalists that have full remote access to their equipment and sensors. Unless there's some unique resource necessary for this work, that's strictly inaccessible over a network, you would need to rule out actual use of such mechanisms. – Phil Miller Commented Jun 2, 2016 at 0:49

- 30 You should probably address the non-reproducible results before anything else. The most common reason people make non-reproducible results is honest mistakes, but even if the mistake is honest, once you're aware something is wrong it's not really honest to publish the data as if you think it's true. Even if he's not intentionally falsifying results this is a problem in itself. – Owen Commented Jun 2, 2016 at 10:01

- 10 Even if (1), (2) and (3) are correct, I would first assume (without knowing more specifics) that the student is working honestly but incorrectly (in line with @Owen's comment). Students, and even faculty, make mistakes all the time. I would express my concerns to the student about the correctness of their work, and discuss in detail what they did to figure out what exactly went on. – Kimball Commented Jun 2, 2016 at 12:14

12 Answers

You have suspicions, but the evidence, as you sketch it here, is circumstantial. You need hard proof. Then you can (and must) act.

Falsifying data is a capital crime in academia. It wastes time, possibly years of other people's work. Don't let it get through. This person, if they indeed falsified data and get away with it, will taint anybody and anything they had to do with - you, your group, your department, your university. Their results will be worthless, and so will be the degree you bestow on them.

You would feel sorry for that person if expelled; but how sorry would you feel for a person who spends 2 years trying to reproduce this grad student's results and fails through no fault of their own? How about their life and career? An honest mistake is one thing, but faking data? You are feeling sorry for the wrong person here; will you spare the guilty and let the innocent be impaled? A grad student is sufficiently mature to know better than to produce "synthetic" data.

How about the person abetting such a fabrication? Frankly, if caught, depending on the power structure that person may get away with a milder penalty "for not knowing what was going on", but in principle they should get the same, if not a harsher penalty, because they certainly cannot claim they didn't know that this is wrong; and they know the repercussions.

How common is it? Hard to say, but there have been a number of large scandals (Jan Hendrik Schoen comes to mind), and there is probably a halo of minor attempts of the same kind. From my own anecdotal stock: I once heard the conspiracy theory that spectroscopists would intentionally introduce "innocent" wrong factors into published formulas that could be interpreted as honest mistakes to prevent competitors from progressing. I didn't believe it; however, once I had to use such a formula from a paper, and to be satisfied I rederived it and some of its "brothers" myself in a tortuous process taking several weeks. Lo and behold: I found that one of them had an integer factor wrong. It goes without saying that I have no real reason to assume it was intentional, but the conspiracy theory still lodges in the back of the mind.

Bottom line: if he really fakes data, letting this happen is not an option; but the evidence must be carefully and (important for fairness to the accused) confidentially vetted to establish whether this is indeed the case.

- 42 Great answer, but I disagree with "you must have incontrovertible proof". It is perfectly fine and indeed desirable to report strong suspicions based on less-than-solid proof to the PI, the dept. chair, or anyone else who has the ability to investigate the case and determine if misconduct occurred. Of course, in that case, when reporting suspicions the OP would make clear that they are suspicions and may turn out to be wrong. My point is that when suspicions are strong enough there is an ethical duty to report them, just like there is a duty to report a strongly suspected crime to the police. – Dan Romik Commented Jun 2, 2016 at 3:42

- 2 @DanRomik In principle, I agree with you: "incontrovertible" may be too strong. Still, the evidence must be carefully and - initially - confidentially vetted and should be sufficiently close to certainty - more than anywhere, reputation is central in science. And even if one is wrongly accused and thus wrongly perceived as falsifying data, that person's career will take a dive, whether deservedly or not. I think this is the case one needs to worry about, not about harshly treating someone who has provably falsified. The knife cuts both ways. – Captain Emacs Commented Jun 2, 2016 at 11:32

- 15 why not talk to the student and communicate your concerns with him? Based on the OP's question, it seems like he does have very legitimate cause for concern. However, going over his head and getting the administration involved would be a step I would take AFTER communicating with the student and letting him know that his methods "don't seem as rigorous as expected." If he ignores OP's admonishment/advice, then OP has to do what he has to do. But OP is, in fact, the superior directly in charge. – sig_seg_v Commented Jun 2, 2016 at 11:53

- 4 @CaptainEmacs thanks for agreeing. I agree that "the evidence must be carefully and initially vetted". That is the point of having an investigation, which is what will happen before the student can be punished and certainly before any misconduct is made public (if indeed it ever is). US universities have well-oiled machinery for carrying out such processes, so I see no reason for the vetting of the evidence to be done by OP. OP will of course aid in the investigation by providing information and expertise, but acting as an investigator is way beyond the scope of a postdoc's job. – Dan Romik Commented Jun 2, 2016 at 14:38

- To summarize, I suggest changing or removing the last sentence of your answer to make your otherwise great answer more precise. – Dan Romik Commented Jun 2, 2016 at 14:39

This misconduct is considered the ultimate misconduct in the research community. The offender is often stripped of his credentials, and because of the tight-knit nature of the scientific community, even if the credentials are not stripped the researcher may never find work as a researcher again. It will impact the ability to secure funding in the future.

If you are aware of it, as you claim to be, you can also be affected, ESPECIALLY if your name is on or associated with the paper. Additionally, if you are the one who secured the grant, this could backfire for you trying to secure grants in the future.

This is neither common nor uncommon. Some people purposefully falsify data to support their hypothesis, but it is not always inaccurate. Sometimes researchers choose to highlight only some information and not the rest so that their hypothesis is supported, and this is more of a grey area.

BOTTOM LINE: If you know your student is falsifying data, then don't allow them to do so, for their career and for yours.

Communicate with the student. Let the student know your concerns.

The question seems rigged to determine what penalty may be appropriate, and how to kindly dish out the pain.

However, if we show good faith, then maybe we don't need to be quite as secretive. Say, "This resembles trouble. Here are the concerns." Then, if the student is innocent, the student may be able to explain things, and learn the importance of proactively making things clear so that suspicions don't grow into bigger problems than warranted.

If the student did do something wrong, maybe the student can correct things before they get further out of hand. The situation may be more correctable before more resources (including time) get spent on a road that may be wrong.

In education, the goal is often to help people do better. A common assumption is that people are typically inexperienced, and mistakes may be made. The goal isn't to try to maximize penalty for people who may be struggling with new skills. The goal is to try to get people in a good situation, including experience doing things desirably (including doing things properly, and successfully).

So, to re-cap this quite simply:

- if you're absolutely convinced that something is completely wrong, then go through the formal steps of handling such problems (reporting the issue, and whatever consequences follow through).

- (If this communication results in more trouble being discovered, be ready to shift over to the first bullet point, as needed.)

- 3 My concern here is that if the student has falsified data, your suggestion is to give them a heads up. This will enable them to adjust their methods to avoid being found out in future. Falsifying data is not a teachable moment or a mistake. It is deliberate and deeply immoral, and should quite rightly permanently taint a researcher who does it. – MJeffryes Commented Jun 2, 2016 at 16:07

- 15 "I cannot reproduce the results you obtained. Please write down your methods and provide to me all tools required to reproduce." is not giving him a heads up to adjust his methods to avoid being found out in the future. It is either bringing him back on the right track (not falsifying data anymore, for fear of being found out), or it won't change a thing, and then you can still decide to go to the chair/dean/whatever. – Alexander Commented Jun 2, 2016 at 17:46

- 6 @MJeffryes : Giving them a heads up is completely intentional. Attempting to keep actions secretive would be a more adversarial move, and I don't recommend that until you start to determine that adversarial actions are required. At this point, I'm recommending to take the friendly approach. The intended goal is helping to correct an apparent problem. If you declare enemies too quickly, you can eliminate some potential opportunities to still resolve things while on more friendly terms. Don't try to begin the punishment process before non-speculatively knowing the penalty is warranted. – TOOGAM Commented Jun 3, 2016 at 2:08

- 1 I wonder why none of the other more voted answers opt for this. Why isn't talking with the student the first option? Somehow it's implicitly assumed that whatever he's done he'll continue doing regardless... – hjhjhj57 Commented Jun 3, 2016 at 21:29

What do you think could be the maximum punishment for this grad student/researcher?

Whatever the maximum punishment is, that punishment has been decided by the people running the university. If you consider your university to be a reasonably well-functioning institution (and I would hope you feel this way about the place where you have decided to spend several years of your career), you need to remember that the people making such decisions have much, much more experience than you in handling all different kinds of academic misconduct. Thus, the punishment is likely to have been well-calibrated over many years and based on a large amount of cumulative experience. What makes you think that your personal judgment on this question is more wise or likely to be correct than such a body of accumulated knowledge and experience?

By not reporting your suspicions, you would essentially be saying "I know better than everyone else what needs to happen to this student, so I will usurp the institution's right to properly bring the student to account for his actions and just act based on my own gut feeling to save myself from the feeling of guilt over the punishment that the student would receive (even though any such punishment would be 100% the student's fault)." This line of thinking is simply wrong. The punishment is not, and shouldn't be, your decision. You have a duty to report the misconduct, and by not doing so you would be making yourself complicit in all its many potentially harmful consequences, which were described quite well in the other answers.

- 6 +1 for "I usurp the institutions' rights to bring the student to account". – Captain Emacs Commented Jun 1, 2016 at 23:33

- 19 Completely disagree: rules on the institution level are indeed built on accumulated experience, but not necessarily with the interest of either science or the PI. Rather based on maximizing the interest of the institution under their legal, financial and political constraints (which can be opposite to the interest and values of the OP). – Dilworth Commented Jun 1, 2016 at 23:43

- 3 @Dilworth in principle you may be right, which is why I added the caveat "If you consider your university to be a reasonably well-functioning institution ...". If OP has serious cause for concern that the university is staffed with incompetent or corrupt people, that might call for extra caution. However, the default assumption should be that large US universities have well-tested and reasonable procedures for handling misconduct. Thinking that one knows better than everyone else is a common human cognitive bias; in this case it would almost certainly be an incorrect assumption to make. – Dan Romik Commented Jun 2, 2016 at 14:29

- 2 Even large US universities have interests that may directly oppose the interests of the OP. It is not a case where the OP and the university have both the same goal, in which it is correct to assume that the university knows better than him how to act. It is about the possibility of completely contradicting goals. – Dilworth Commented Jun 3, 2016 at 14:29

You don't need to kick up a big fuss about it.

While it is definitely the case that any case of data fabrication is worthy of the levels of punishment it incurs in academia, it is not very clear that this is actually happening here. And in any case, the repercussions of scientific falsification should be very clear at any level, even for undergraduate students.

Inability to replicate results is extremely common in all scientific fields, and the overarching likelihood is that the analysis or experiments were carried out incorrectly for some reason. In the vast majority of cases, that is all there is to the story.

Simply deal with this problem as you would with any other inexplicable scientific result. Walk through the entire protocol, troubleshooting all potential issue spots, and exclude variables as required. In the extremely unlikely case that you find that the student was actually falsifying data, you must report it, but it seems unlikely to me that it is going to be the case.

If this person is falsifying data now, this person will continue to do so later as a PI. While you're sure to feel bad about it, science as a whole requires you to address the situation. When the public loses faith in science, we all suffer.

There are many ways to address this in a discreet manner (to ensure your intuition is accurate). Why not have this person walk you through the data/analysis step by step from ground zero?

- 2 How do you know the person will continue to do so later? – Jin Commented Jun 1, 2016 at 22:20

- 4 You don't know in any absolute sense, but it is the least assumption. – dmckee --- ex-moderator kitten Commented Jun 1, 2016 at 22:26

- 14 @Jin It is expensive to verify results (people do not get funds to reproduce known results); therefore, trust is absolutely central in science. If someone falsifies data once, he cannot be trusted anymore. Everything that person claims to find out, especially an expensive-to-produce result, needs to be independently verified anyway; their testimony is unreliable; so why waste attention and grants on them ever again? A person once caught taking a bit of money out of the cash register has shown "fluid" morals; they won't be allowed to handle the cash again. – Captain Emacs Commented Jun 1, 2016 at 22:44

- 3 @Jin The only question is whether the OP has hard proof that the person falsified in the past. It is irrelevant whether they will do so later - for which I explained the reason above. Also, usually universities will have procedures in place to punish such transgressions, but what the precise consequences are will depend on the uni and cannot be answered on SE. But you asked "How one knows that the person will do it later?" - and what I am trying to say is: it's not relevant whether they will really continue this or not - only that the costs for everybody in the future will be as if they do. – Captain Emacs Commented Jun 1, 2016 at 23:14

- 2 @Jin I would agree if it were just about you and Jack; but it's not. I thus fine-tune my example: Jack has stolen from you. You give him a dire warning. People know he has handled your money in the past (you didn't mention anything to them) and thus think he is honest. They leave their wallets lying on the table. Jack is around. Do you warn them off? – Captain Emacs Commented Jun 1, 2016 at 23:50

In terms of immediate authority, I assume you and the student in question both ultimately report to a professor. I expect that professor is one or more of the PI on the supporting grant, the student's thesis advisor, and your supervisor. I really hope you have a strong, trusting relationship with this professor, for a few reasons:

- they will be the first line of investigation and response in dealing with this situation, and likely carry more personal/reputational and institutional responsibility for it than you do

- the student has likely worked with them longer than you have (postdoc there maybe 1-2 years, vs ABD student)

Basically, you don't want to end up in a position where your actions lead the professor to hold this against you. That could lead to withdrawn/non-renewed funding for you, withheld or weakened recommendations for future positions, and so forth. You really need the professor on board with the suspicions before any wheels of process start moving.

If there's some administrator responsible for this sort of issue that you know and trust not to jump the gun, you could potentially speak to them first to get your concerns on record before bringing them to the professor, to avoid the risk of the professor trying to sweep them under the rug and/or throw you under the bus.

Edit to add 1:

Ultimately, though, resolving this situation now, while the student is still pre-PhD, is in their best interest. If they aren't doing anything wrong, then they'll learn how to conduct their work in a more traceable, transparent, supportable, and reproducible manner. If they are, there's at least a chance that they can get straight without a permanent black mark on their career. Once they've gotten that degree, any such allegation could lead to it being revoked, grants they've received being suspended or cancelled, etc. This is the last point in their career where they can learn appropriate boundaries and reasonably hope to rehabilitate themselves.

I think the three statements that you make are just your impressions, and since you know that these are your impressions, you are not completely sure that the student in question is falsifying the data. Otherwise, I think you would not have asked the question here.

The best thing to do in order to be 100% sure is to replicate all results with this student in your office on your computer. Otherwise, I think your statements are just your own impressions, without any solid evidence.

If you see that data is falsified, then you should report it.

- 1 +1 This answer addresses an important aspect of the question. Whereas in the lab sciences the experiments are never 100% reproducible due to inevitable small environmental factors, in the computational realm everything, if properly documented, should be able to be verified and reproduced. Even randomized algorithms like Monte Carlo can be exactly reproduced, especially in the testing stage, by seeding the pseudo-RNG with the same seed every time. So if you want to make sure the student is doing his work: just get the code from him and run it on your own computer. – Willie Wong Commented Jun 2, 2016 at 13:25

- 2 @Willie Wong, Fully agree. The code that the student uses would be useful for understanding whether there is any falsification. Since in programming it is more difficult to understand someone else's code than to write one's own, I think it is better to reproduce all results with the student. By doing this, OP can also understand the methodology used and can verify the data used. Unfortunately, in some fields like economics, most papers are not reproducible; timeshighereducation.com/news/… – optimal control Commented Jun 2, 2016 at 13:46

- @WillieWong, I have the impression that computational experiments are also prone to variation due to hard-to-control environmental factors, including software versions and initial states of random number generators. Avoiding these pitfalls should be possible, as you say, but it doesn't seem straightforward to me (journals.plos.org/ploscompbiol/article?id=10.1371/…). – Vectornaut Commented Jun 2, 2016 at 17:11

- 1 @Vectornaut: initial states of random number generators can be controlled by properly seeding them, as I wrote. See, for example, the documentation for the Julia language. Software versions can be documented; and if open-source software is used, the older versions can usually be tracked down in the appropriate repositories. Avoiding these pitfalls is in fact quite straightforward (and in fact the rules in your linked article make it even more so). Compared to the laboratory sciences, the amount of documentation... – Willie Wong Commented Jun 2, 2016 at 17:27

- 2 ... is not more than what would be expected to go into a lab notebook keeping track of the conditions under which experiments are run etc. The fact that some individuals find it "hard" is more an indication that some computational scientists are not given the appropriate data management training that typical laboratory scientists would be given. In this day and age it should be the responsibility of the head of the lab (either the professor or the lab manager) to hold the students accountable for good data management practices. – Willie Wong Commented Jun 2, 2016 at 17:30
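The seeding point made in the comments above can be illustrated with a minimal sketch. Python is an assumption here (the thread does not say what language the student uses), and the function name `estimate_pi`, the sample count, and the seed value are all hypothetical. The sketch only shows that a Monte Carlo computation driven by an explicitly seeded generator returns the same number on every run, and that a different seed generally does not.

```python
import random


def estimate_pi(n_samples: int, seed: int) -> float:
    """Monte Carlo estimate of pi from points drawn in the unit square."""
    # A private generator seeded explicitly, so no hidden global state
    # can change the sequence of samples between runs.
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / n_samples


# Hypothetical seed, chosen only for illustration.
first = estimate_pi(100_000, seed=2016)
second = estimate_pi(100_000, seed=2016)
other = estimate_pi(100_000, seed=2017)

print(first == second)  # same seed, same samples, same estimate
print(first, other)     # a different seed usually gives a slightly different estimate
```

If the supervisor and the student run the same code with the same recorded seed and still get different numbers, the difference has to come from somewhere else (code version, input data, library versions), which narrows down what to examine next.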

I agree with Captain Emacs's answer, but there is something missing that I feel is important, namely:

Ask the RA directly whether he is fabricating any data, and while asking tell him why it is wrong to do so, and also that if he really does it and anyone finds out he can be expelled. At the same time tell him that at this juncture the best thing to do now is to redo all tests properly, meaning that he records all the random seeds used so that his data is completely reproducible.

After that it is likely that the problem will be resolved more or less satisfactorily, because it is generally difficult to write a program that looks normal and yet to find a special random seed that causes it to have special behaviour. (It is possible but increasingly improbable for larger-scale tests.)
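One way to make "records all the random seeds used" concrete is to store a small manifest next to each result and check it on re-run. The following is a rough sketch under stated assumptions, not a description of any particular lab's tooling: `run_experiment` is a hypothetical stand-in for the student's real computation, and the manifest fields and the rounding to 12 decimals are illustrative choices.

```python
import hashlib
import json
import platform
import random
import sys


def run_experiment(seed: int, n: int = 1000) -> list:
    """Hypothetical stand-in for the real computation."""
    rng = random.Random(seed)
    # Rounding is an assumption made so the stored values have a stable text form.
    return [round(rng.gauss(0.0, 1.0), 12) for _ in range(n)]


def fingerprint(values: list) -> str:
    """SHA-256 digest of a canonical JSON encoding of the results."""
    payload = json.dumps(values, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_manifest(seed: int) -> dict:
    """Record what is needed to repeat the run and to check its output."""
    return {
        "seed": seed,
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "result_sha256": fingerprint(run_experiment(seed)),
    }


def verify(manifest: dict) -> bool:
    """Re-run with the recorded seed and compare against the recorded digest."""
    rerun = run_experiment(manifest["seed"])
    return fingerprint(rerun) == manifest["result_sha256"]


manifest = make_manifest(seed=42)
print(json.dumps(manifest, indent=2))
print(verify(manifest))
```

A digest comparison is deliberately strict: floating-point results can differ across library versions or hardware, so a mismatch is a prompt to compare the recorded environment fields before drawing any conclusion about the person who produced the data.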

Falsifying data is a big no-no. It's on par with (and possibly worse than) plagiarism. It can ruin careers, and can lead to a whole host of huge problems (we don't need any more Andrew Wakefields). So if the student is doing this, you absolutely must report it and cannot feel bad.

That said, from the information you provided, there really isn't strong evidence. If I were on that jury, I would acquit without a second thought.

1) Working from home: Can he connect to a network to access the needed software? Can he run the program in the lab, get the raw data (say in a text file, or spreadsheet) take it home and do post processing/analysis there?

2) Not replicating data: I've written programs and run simulations that performed beautifully and satisfied all the tests. But when I get to the group meeting, it fails. Why? Because I changed something that "wouldn't affect the results or the existing tests" (Ha!) between the time I originally got it working and the meeting. Or maybe an initial guess was changed. It might only take a few minutes to fix on my own, but in a meeting/high pressure environment I can't fix it right there. To me, that seems like a plausible explanation. (And I'm assuming there's no randomization in the code; I've had Monte Carlo approaches give significantly different results depending on the seed used.)

3) Working faster than you expect: I see two possible explanations for this: a) The student is better than you think. b) The student is worse than you think. For (a), perhaps the student is able to crank out code fast, when he hits his stride and has a good mental map of where to go and how things should fit together (this "gunslinger" approach can be effective, but also can let bugs show up that make data replication difficult). Or he has written scripts to run several computations simultaneously or overnight. For (b), perhaps the student "hacks" everything in the code. Hardcodes things that should not be hardcoded, for example. Messes with things that shouldn't be messed with. This can give the illusion of working fast, but results in unmaintainable or inconsistent code, essentially borrowing time from the future.

Obviously, you have access to more information than we do, so perhaps these explanations don't apply. I would suggest talking to the student about the results, though not in an accusing way. Ask him to explain the results, explain what he did, and how he did it. Look through the code that he uses with him, and make sure you both understand it. Perhaps there are honest mistakes to be corrected. Maybe there isn't a problem. If he seems to have no idea what he did or can't explain the procedure, then you probably want to bring up your concerns with the PI. But under no circumstances can you let data falsification continue.

Tell the student that this doubt exists but in a one-on-one situation

To clear this doubt, ask him to make his results fully reproducible. It is in his own interest to show that he did not falsify anything. Show him that there is "immediate danger" that this gets investigated.

If he did not falsify anything (and your doubts were wrong), then this is a viable route. It requires some effort, but of course it can be done (and should be done, anyway). Then no damage is done, you only force him to work more transparently.

Allow him to redact work, if no harm has been done yet

This is the only "easy way out" that is in my opinion acceptable. In particular if nothing has been published outside of the university, you can allow him to redact falsified material, in order to replace it with real work. This may be punishment enough at this stage: It may set him back half a year towards graduation! But it may also require additional measures, depending on the severity.

He may then learn a key lesson here: while you may get away with it in high school and maybe even undergrad, once the work gets more closely reviewed, misbehavior, copy-pasting and data fabrication are likely to be discovered, and this is not a good way of working. A backlash could come any time, and may ruin his reputation.

In the case that he admits cheating on this project, I would also consider reviewing his earlier work.

If harm has been done, you want to redact anyway

If any of this has been published yet, your name or your professor's name is likely to appear on it, or at least be associated with it. In this case, you really will want to have this resolved...

At this point, it may be necessary for your own reputation to trigger a formal investigation; partially to clear yourself from any responsibility.

In a nutshell,

1) you must get a decent proof of misconduct, and

2) if/when you get it, you should report it immediately, even if this means the definite end of his career

Falsifying data is a cardinal sin for a scientist, and as many others remarked, it may waste years of work for other scientists who try to replicate (and possibly improve) the faulty results. I was once caught in a situation where my student was trying to replicate somebody else's results, and it seriously impacted her PhD work because of the original authors' selective reporting (they later found that their solution works only in very limited cases, but did not share that finding until much later).

Now, before you rush to your superiors, I would advise you to talk to the student about his methodology. Explain to him that as (de-facto) project leader you have a responsibility to guarantee that all results conform to scientific standards and that, after going through his work, you suspect there might be a problem with his results, as a result of unintentional mistakes or inexperience on his part. Start with this - if he made an unintentional mistake (or even multiple mistakes), he will probably be more than glad to learn from them and work hard to correct them. For a PhD student, this is sufficiently vague and yet serious that, if he is honest, he will work really hard to correct the problems (and redo the experiments). In that case it is your decision whether you trust him enough to have him on the team, and if you don't, you should simply explain to your superiors that he does not fulfill your criteria, since he makes too many mistakes, and you do not need such people on the project, period.

I understand that this is a very difficult task for you, since you will have to waste your own time to go through his work and make sense of it, and probably you have your hands full with other work.

On the other hand, it may be pretty easy to spot if he is really dishonest or trying to hide something, because if he took a shortcut the first time by falsifying the results, I very much doubt he will "waste" his time correcting them - more likely he will try to weasel out or start making excuses, which is a real red flag and gives you really good grounds to either confront him directly (usually it won't be necessary, as he will probably start digging his hole deeper and deeper) or just go and report him to the superiors. Because, if his mistakes were unintentional or the result of carelessness but he does not feel he needs to correct them, he still deserves to be reported and sanctioned - refusing to learn from mistakes that others point out and refusing to correct them is almost as bad as falsifying data.

I do advise against going to superiors based only on a hunch, because an accusation of falsifying data may ruin his career even if he is not guilty, and further graduate students may become reluctant to work with you, fearing the same treatment (and some may even interpret your actions in a way that you got rid of competition down the road, which is the last thing you need).

And, since you are his superior, you are guilty if you do nothing, and the problems get discovered in his further career. You must act.


Research Fraud: Falsification and Fabrication in Research Data


Although uncommon, it’s not unheard of for researchers to be accused of falsifying and/or fabricating research, in addition to other misconduct like plagiarism, duplicate publication or multiple submissions. The tricky part is finding and identifying true fraudulent activity, even through peer review processes. Even highly regarded journals have accepted articles that are suspected of research fraud. On the other hand, some researchers have had their careers and reputations compromised by false accusations. Even if the false accusation was cleared, intense media coverage of the accusation, compared with coverage of the acquittal, can mean permanent damage to the researcher.

What is the difference between falsification and fabrication?

Any type of research fraud usually involves publishing conclusions, or even data, that were either made up or changed. There are two different types of research fraud; fabrication and falsification. Obviously, they are related. However, they are distinctly different.

Falsification essentially involves manipulating or changing data, research materials, processes, equipment and, of course, results. This can include altering data or results in a way where the research is not accurate. For example, a researcher might be looking for a particular outcome, and the actual research did not support their theory. They might manipulate the data or analysis to match the research to the desired results.

Fabrication, on the other hand, is more about making up research results and data, and reporting them as true. This can happen when a researcher, for example, states that a particular lab process was done when, in fact, it wasn’t. Or the research may not have taken place at all, as when results from a previous study are copied and published as original research.

Obviously, both falsification and fabrication of data and research are extremely serious forms of misconduct, primarily because they can result in an inaccurate scientific record that does not reflect scientific truth. Additionally, research fraud deceives important stakeholders of the research, like sponsoring institutions, funders, employers, the readers of the research and the general public.

How to Detect Fabricated Data

Sometimes it’s easy to identify fraud and fabricated research. Maybe, for example, an evaluator of the research is aware that a particular lab doesn’t have the capability to conduct a particular form of research, contrary to the claims of the researcher. Or, data from the control experiment may be presented as too “perfect,” leading to suspicions of fabricated data.

Suspected data manipulation in research, fabrication or falsification is subject to reporting and investigation to determine if the intent was to commit fraud, or if it was a mistake or oversight. Most publishers have extremely strict policies about manipulation of images, as well as demanding access to the researcher’s data.

Image Manipulation in Research

Another fairly common fabrication relates to images that appear to have been manipulated. It should be noted that image enhancement is often acceptable, however any enhancement must relate to the actual data, and whatever image results from the enhancement must accurately represent the data. If an image is significantly manipulated, it must be disclosed in the figure caption and/or your “materials and methods” section of the manuscript.

So, the bottom line is that image manipulation in research is okay, as long as the manipulation is to improve clarity, no specific features are introduced, removed, moved, obscured or enhanced. Minor adjustments to brightness, color balance and contrast are acceptable if they don’t eliminate or obscure information that is present in the original image.

Protecting Your Reputation

A researcher’s worst nightmare might be the accusation of committing fraud. Of course, unintentional errors do happen, and unfortunately they can appear to be misconduct. However, it should be made clear that an honest error is not considered research misconduct.

To avoid any false accusations, make sure your research is 100% accurate and any methods and processes are expressed accurately. Ensure that any images that might be enhanced are noted as such, and include the original image with your submission. Keep records of all raw data; if falsification or fabrication are suspected, the journal or other investigative body will demand to review your information. Therefore, flawless records must be kept, analyzed and reported. Additionally, certain research topics, such as studies of human subjects, require a specific duration of data retention.

If you discover an accidental published error, follow the steps outlined in our article about retracting or withdrawing your research.

The Bottom Line

A researcher must take extra care to ensure that their data and research cannot even be suspected of fraud via falsification and fabrication. You can do this by being transparent and honest about any and all research, data, analysis and conclusions. Remembering that the purpose of research is to further collective knowledge, rather than to support desired outcomes, is key to ensuring integrity in research.


Ethics of Science Writing

Data fabrication and falsification.

Group Members: Will Burnham, Kelly Heffernan, Alyssa Morgan, and Evan Russell

The truth is always something that scientists and members of the scientific community should strive to achieve. Truth emerges at the intersection of transparency, trust, and honesty. These are the qualities that mark sound science which accurately disseminates findings and information. However, it is possible for scientists to fall short of this mark. Instead of displaying truthful findings, some scientists choose the evidence they desire by manipulating it in their favor (Leng 2020, 159-172). This is known as data fabrication and falsification, and in this practice, scientists manipulate existing data or create new data with no basis in experimentation. Scientists are motivated to do this in order to form data that reflects their desired outcome or matches their hypothesis. This practice occurs more often in scientific writing than it would seem. When data is fabricated or falsified, other scientists or researchers cite the papers that contain it, and an ongoing spiral of falsification commences. In the end, once the fraud is discovered, the papers that cited the original paper containing the falsified data may themselves be retracted. As data fabrication and falsification continue, the motivation for fabrication, the resulting implications and consequences, methods of detection, and preventative measures and ethical considerations will all be examined throughout this webpage in an effort to stop false data’s destructive path through science.

Motivation for Fabrication & Falsification

A frequent quote many people use today is “I believe in science”. There are t-shirts (see figure 1), book bags, and many Instagram posts espousing such a sentiment. This blind faith in science stems from the fact that society places scientists on a pedestal. They are expected to be trustworthy, unbiased, dedicated, and uncorrupted by ulterior motives. The reality, as shown in real life examples throughout this webpage, is much more nuanced. While many scientists do follow ethical practices, there are also many who fabricate and falsify the data they draw their conclusions from. This is a dangerous practice, as in fields like medicine where falsified results of drug trials can result in serious injury for future patients who are prescribed the drug. With all of these horrible consequences, why would scientists fabricate data?

Figure 1. This image shows a popular t-shirt design on the online retailer redbubble.com. The text on the t-shirt reads “Trust Science.” (Redbubble 2021).

One reason for fabricating data could be that some scientists do not realize that they are using poor data practices. For instance, in one heavily cited study about data fabrication (Fanelli 2009, 1), only 33.7% of scientists admitted to questionable research practice that they themselves had conducted, while 72% reported that they had seen questionable research practices in their peers’ work. This shows that people are much less likely to admit faults in their own data than in others (see figure 2). For this reason, scientists could be subconsciously fabricating data and falsifying other items, but genuinely not realizing it due to their own inherent biases.

Figure 2. This image is taken from (Fanelli 2009, 6). It shows the disparity between scientists admitting to their own mistakes, versus admitting to noticing peer’s mistakes in a survey. Note that QRP stands for questionable research practices.

Another reason is that many scientists in modern times feel pressured to publish as many articles as they can, as quickly as they can. They believe this will build their reputations as scientists through sheer volume of increased citations. One researcher who was held in high esteem at Bentley University (Nurunnabi and Hossain 2019, 4) was found to have fabricated data in two of his most prominent studies. This kind of behavior can be explained by the need for many scientists to either publish work, or perish. It would be tempting for a researcher to fudge the numbers in order to reach a statistical significance threshold that is needed for publication. Without set ethical guidelines to follow, problems like this can quickly become prevalent. Another young researcher named Joachim Boldt (Mayor 2013, 1) fabricated data in research relating to safe blood plasma substitutes for diabetics. This resulted in people believing that certain plasma substitutes were safe for diabetics when they actually increased death and injury rates, as discovered by a meta-analysis (Mayor 2013, 1). Boldt’s desire for influence overcame his desire to genuinely help people, which may have resulted in patient deaths.

Figure 3. This image shows the article authored prominently by Adeel Safdar. There is a retraction warning on it in order to show people that any conclusions or results drawn in it should not be relied upon for further research (Safdar et al. 2015, 1).

Another scientist who fabricated data is Adeel Safdar from McMaster University in Ontario (Safdar et al. 2015, 1). As shown in figure 3, his article was recently retracted from the Skeletal Muscle Journal, due to fabrication of two images that led to false conclusions. Recently, concerns were raised about the scientific ethics of Safdar after he was accused of torture and domestic abuse of his wife and family, which can be seen in figure 4. As Safdar’s character was called into question, the scientific community also reexamined his work and discovered instances of data fabrication. Once a scientist’s character is seen as flawed, their data collection and overall experimental designs will be checked thoroughly to make sure they were not also dishonest in their work. Many other papers of Safdar’s are being examined for data fabrication, and as of now three of his papers have been retracted.

Figure 4. This image shows article headlines from The Hamilton Spectator, a Canadian newspaper. They outline the domestic violence charges Adeel Safdar has recently been accused of. (Editors of Hamilton Spectator 2021).

Overall, there are many different reasons that motivate scientists either consciously or unconsciously to fabricate their data. No matter the reason they are engaging in this type of false science, the consequences continue to be severe and lasting on the entire scientific community. These consequences will be examined in the next section of this webpage.

Implications & Consequences of Fabricating & Falsifying Data

Figure 5. This cartoon is from the Los Angeles Times created by Scott Adams. In this cartoon there are two researchers and/ or scientists who have realized that the results from their experiment were not what they had expected. Therefore, one of them brings up that they can just “adjust” (change) the data so that it “supports” what they had anticipated. This cartoon does a great job showing how fabricating and falsifying data takes place in the scientific community.

Figure 6. This is Table 1.: Potential Consequences* of Publication Fraud for Authors and Their Institutes from ‘Preventing Publication of Falsified and Fabricated Data: Roles of Scientists, Editors, Reviewers, and Readers’ in Journal of Cardiovascular Pharmacology. The table lists nine different punishments for fabricating and falsifying data in scientific writing.

Figure 7. This newspaper is from Science Magazine and has the article of Luk van Parijs, a former MIT Researcher, and how his contract with MIT was terminated because he was found guilty of committing data fabrication in some of his published work on RNA interference.

Figure 8. The top two maps are from ‘Ireland after NAMA’ and show the median prices for properties in different counties of Ireland in 2010 and 2012. The bottom two maps are also from ‘Ireland after NAMA’ and show the change in median price for properties from 2010 to 2012, both in actual value and percent change.

Figure 9. These two graphs are from the article ‘Researcher at the center of an epic fraud remains an enigma to those who exposed him’ from Science Magazine, Tide of Lies. The graph titled, Total scientific output, shows the number of scientific papers that Yoshihiro Sato published throughout his career until he died in 2016. The graph titled, Clinical trials, shows the number of patients from 33 of his clinical trials.

Figure 10. This diagram is from the article ‘Researcher at the center of an epic fraud remains an enigma to those who exposed him’ from Science Magazine, Tide of Lies. It shows the top 12 trials performed by Yoshihiro Sato that had been widely cited by other researchers, scientists, institutions, etc. More specifically, this diagram shows when the trial took place, the number of patients involved in that trial, the number of references that were made to that trial by year and when all corresponding documents that had cited that trial were retracted.

It is evident from these examples alone that the falsification and fabrication of data does not come without consequences. Its effects can powerfully alter the course of individual lives and science as a whole, often for the worst.

Methods of Detecting Fabrication & Falsification

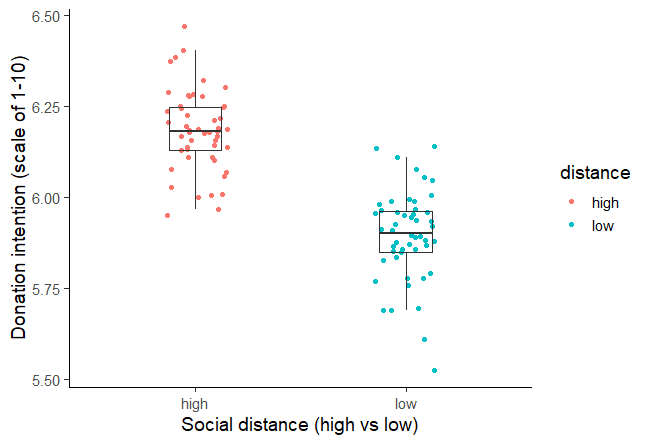

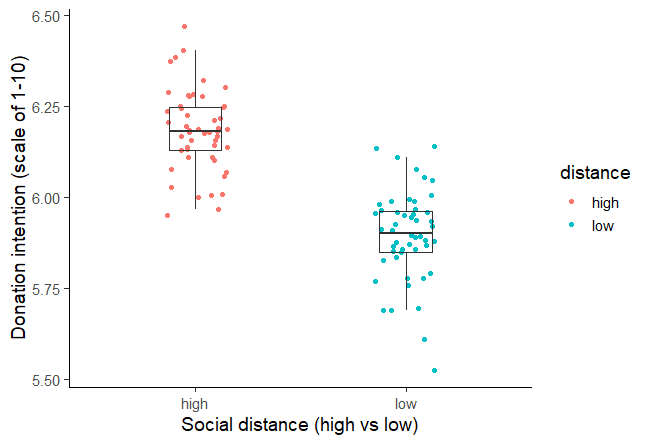

Detecting flaws in data is the first step to recognizing and retracting falsified or fabricated data to prevent its far-reaching consequences. However, detecting data fabrication is not a simple process, requiring standards of measuring the accuracy of various types of data (Kitchin 2021, 41). As stated by data researcher, Rob Kitchin, “The fact that myself and my colleagues continually struggle to discover such information and have to extensively practice data wrangling has made it clear to us that there is still a long way to go to improve data quality and its reporting” (Kitchin 2021, 44). Kitchin highlights that even those who are well-versed in analyzing the quality of data struggle to determine just how reliable data truly is and if data should be retracted, or withdrawn from publishing. In short, there is no clear cut way to determine if data is true or false, or simply if it is influenced by bias. However, data falsification can be recognized and can result in the successful retraction of unreliable works, as shown in Figure 11 (Nurunnabi 2019, 116). Data falsification often has warning signs, which can be applied in a case-by-case manner to the various fields in which data can be relevant.

One major concern with data is that of representativeness: how much is the data actually revealing what it was attempting to measure in the first place (Kitchin 2021, 41)? Although misrepresentation is not always intentional in its resulting deceit, often one’s manner of interpreting and conveying information can blur the true meaning and intentions of data, which turns into data falsification when intentional. Between collecting data and spreading it, there is always a party in charge of its interpretation to bridge the gap between these two steps (Leng and Leng 2020, 159-172). Data cannot interpret itself, and this is often where the issue of representativeness becomes a problem (Leng and Leng 2020, 159-172). Processes, including extraction, abstraction, generalization, and sampling, involve instances where bias and lacking precision can step in (Kitchin 2021, 41). It is important to be aware that the method of data collection can affect how it is interpreted and conveyed, including factors such as sample size, demographics, and the population from which data is derived (Kitchin 2021, 41). For example, a study with a very small sample size will be much less reproducible and will have lower statistical power than if the same results were obtained from a larger sample size. In this case, any generalizations made from the data must be placed in the context of a small sample size to avoid drawing conclusions based on the data that would not be applicable to a larger sample size. This same thought process must be applied to all data. It is fair to conclude that certain deceiving aspects of data collection are not always easy to recognize, especially for the general public, but an awareness of where data can become flawed is crucial, as intentional misrepresentation is directly linked to data fabrication.

Despite the importance of having an individual awareness of where data may be falsified, whether intentionally or through unnoticed bias in action, there are international-level efforts to detect poor data (Kitchin 2021, 42). In fact, there have been formal measurement systems for classifying data as either proper or poor quality. These measurements, as shown in Figure 12, involve veracity, the completeness of the data, timeliness, coverage, accessibility, lineage, and provenance (Kitchin 2021, 42). However, not everybody follows these standards to ensure that the data is of proper high quality, and it can be challenging to apply such general standards to specific fields. Oftentimes, efforts are only made when flaws in the data would affect a larger crowd, meaning it would be more likely for people to notice any flaws (Kitchin 2021, 42). For these reasons, when analyzing data and research, it is crucial to think about who the researcher’s intended audience is and how this could affect the researcher’s motives and possible shortcomings.

One field where data fabrication is prevalent and especially dangerous is clinical trials, which evaluate new treatments and medicines in the medical field. Accidents in data collection and analysis can happen. For this reason, it is vital that quality standards are established for researching, experimenting, and citing and that everyone involved with research is made aware of these standards. However, to help identify data falsification, prominent distinctions can be identified between accidental errors in data and purposely falsified data (Marzouki, et al. 2005, 267). In one study, two clinical trials were examined and their data analyzed to determine if these data were reliable or flawed in some way (Marzouki, et al. 2005, 267). The first trial in question was the diet trial, a controlled trial of how fruits and vegetables in a diet affect patients with coronary heart disease, who were stated to be randomly assigned to the experimental and control groups (Marzouki, et al. 2005, 267). The other was a randomized drug trial, studying how drug treatment affected patients with mild hypertension (high blood pressure) (Marzouki, et al. 2005, 267). The data for both trials could be analyzed quantitatively, both separately and in comparison to one another, in order to recognize trends that could be possible indications of fabrication or falsification.

In an effort to recognize data falsification, the data was first checked for digit preference. Digit preference is defined as “the habit of reporting certain end digits more often than others” (Camarda, Eilers and Gampe 2015, 895). Human-recorded data, unlike machine-recorded data, often shows a preference for certain numbers, with humans favoring multiples of 5 or 10, or even numbers over odd ones, which can threaten the accuracy of data when numerical values are nudged towards these favored numbers (Camarda, Eilers and Gampe 2015, 895). For example, if a study’s data predominantly ends in even digits, rather than odd, it may be a sign that the values were rounded or adjusted rather than recorded as measured. As a result, digit preference can be a strong indication of possibly falsified or unreliable data. Although chance can result in digit preference, the experimental and control groups within each of the examined trials should have had a similar pattern of preference because of the randomization stated in creating those groups (Marzouki, et al. 2005, 268). However, the results showed that the diet trial had notable differences in standard deviations for height and cholesterol measurements, while the digit preference for all variables was very strong (Marzouki, et al. 2005, 268). This led the researchers to believe that this data was not entirely raw and unaltered by its experimenters.
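As a rough illustration of a digit-preference check, the sketch below counts the last digit of each recorded value and compares the counts with an even spread across the digits 0 to 9, using a chi-square statistic. This is not the procedure from the cited study. The function names, the 5% threshold, and the assumption that measurements are whole numbers are all choices made for this example.

```python
from collections import Counter

# Critical value of the chi-square distribution with 9 degrees of freedom
# at the 5% level (assumed threshold for this sketch).
CHI2_CRITICAL_DF9_5PCT = 16.919


def terminal_digit_chi2(values):
    """Chi-square statistic of last digits against a uniform expectation.

    Assumes whole-number measurements; fractional parts are truncated.
    """
    digits = [abs(int(v)) % 10 for v in values]
    counts = Counter(digits)
    expected = len(digits) / 10  # each digit expected equally often
    return sum((counts.get(d, 0) - expected) ** 2 / expected for d in range(10))


def shows_digit_preference(values):
    """True when the last digits deviate from uniform beyond the threshold."""
    return terminal_digit_chi2(values) > CHI2_CRITICAL_DF9_5PCT


# Hypothetical data: every reading ends in 0 or 5, as a rounding habit would produce.
rounded_readings = [120, 125] * 50
# Hypothetical data: last digits spread evenly.
even_readings = list(range(100, 200))

print(shows_digit_preference(rounded_readings))
print(shows_digit_preference(even_readings))
```

A large statistic only says the last digits are unevenly spread. It cannot by itself distinguish habitual rounding from deliberate alteration, so it is best read as a prompt for closer inspection.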

Another major difference in these data was the magnitude of the P values in each data set (Marzouki, et al. 2005, 268). The P value is a statistical tool used in science to indicate the strength of evidence: it is the probability of obtaining results at least as extreme as those observed if chance alone were at work, so smaller P values are taken to indicate greater statistical significance (Nuzzo 2014, 152). The P value is a strong motivator for scientists when experimenting, as a P value can determine how a researcher’s work is received, and, therefore, if it is cited or not. Analyzing P values is thus a necessary step in determining whether data was falsified to make it appear more significant than it truly is. There were noticeable differences in mean and variance between baseline variables in the diet trial, as shown in Figure 12, showing that the groups were not randomly allocated as the author had claimed (Marzouki, et al. 2005, 268). Likewise, the P value, together with the significant difference in digit preference, served as evidence that further flaws in data did not occur by chance (Marzouki, et al. 2005, 268). The differences between the means of the baseline variables therefore point to a lack of true randomization in this process (Marzouki, et al. 2005, 268). Although one irregular pattern, such as digit preference, is not a definite indication of data fabrication, as it could simply be attributed to different people recording data for each group, the combination of digit preference with differences in means and variances points towards data fabrication. As exemplified by the analysis of this data, the tools used in evaluating data are specific to the field and the data presented, as this clinical trial is mainly concerned with quantitative data. However, these specific statistical tools may not be useful in determining the validity of data from other fields that involve more qualitative analysis.
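To make the idea of comparing baseline variables concrete, here is a minimal sketch that contrasts two supposedly randomized groups on one baseline measurement. It reports the difference in means, a Welch-style t statistic, and the ratio of the sample variances. It is an illustration under assumptions, not the analysis performed in the cited study: the function name and the sample numbers are invented, and turning these statistics into P values would need the t and F reference distributions, which the standard library does not provide.

```python
from math import sqrt
from statistics import mean, variance


def baseline_comparison(group_a, group_b):
    """Compare one baseline variable between two groups.

    Each group needs at least two values, and at least one group must
    have non-zero variance, otherwise the standard error is zero.
    """
    mean_a, mean_b = mean(group_a), mean(group_b)
    var_a, var_b = variance(group_a), variance(group_b)  # sample variances
    standard_error = sqrt(var_a / len(group_a) + var_b / len(group_b))
    return {
        "mean_difference": mean_a - mean_b,
        "welch_t": (mean_a - mean_b) / standard_error,
        "variance_ratio": var_a / var_b,
    }


# Hypothetical baseline values for a treatment and a control group.
treatment = [10, 12, 14, 16, 18]
control = [1, 2, 3, 4, 5]

result = baseline_comparison(treatment, control)
print(result)
```

With genuine random allocation, the mean difference should usually be small relative to its standard error and the variance ratio should sit near 1. Large departures on several baseline variables at once are the kind of pattern that invites a closer look.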

It is evident through this single example just how shaky the evaluation of data fabrication can be. How can we truly be sure that data is made up or true? Can one ever be 100% certain that chance played a part in certain results? This field of recognizing and abolishing data fabrication is constantly evolving as more cases are studied in various fields, but for now, we have to work with existing evidence and intuition. The difficulty in spotting data fabrication and falsification only supports the idea that this practice is dangerous to the integrity of science.

Preventative Measures & Ethical Practices

Preventative measures and ethical practices are vital to creating an environment and culture in the scientific community that strives to prevent falsified and fabricated data. Preventative measures are found in two places in the research timeline. The research timeline is the sequence of steps we take to conduct research. This begins with a question, followed by a hypothesis, testing, a conclusion, and finally publishing the results.

Figure 14: Progression of the scientific process, and where to stop data fraud and falsification in the timeline.

The first section in the timeline is preventing falsified and fabricated data before it is created in the testing stage. Many examples of fraudulent work are caught after publication. For this reason, the second section focuses on snuffing out falsified and fabricated data after it has been created, but before it is published, so the incorrect information is not shared.

For a long time, science education has been about broadening the scope of our understanding. Now things may be shifting towards teaching ethics in science to students (Reiss, 1999). In a perfect world, science would be performed on a completely transparent and honest basis. While it is not possible for all scientists to be perfect, it is still important to create a culture of honest work. This can begin early in a student’s education. Educating students on data fraud will “… increase awareness, they will also encourage a mindset in which issues can be discussed earlier and easier” (Korte, 2017). By educating students on the dangers, they will be less likely to fabricate or falsify data. This increased awareness from education should also help break down the detrimental “publish or perish” culture found in labs around the world.

But learning shouldn’t stop with formal education up to, and through college. It is important that scientists continue to learn about ethics and honest work during their scientific careers. Many have explored the possibility of web based learning to continue our understanding of ethics in research. The ethicist Michael Pritchard found that post bachelors degree web based learning has the potential to create a collective responsibility for the research being done (Pritchard, 2005). This increased awareness for everyone involved in the research could help prevent the use of fraudulent and falsified data or have others notice before it is published. However, learning about ethical research conduct is often more subtle than a structured program, but it can be found in codes of ethics. A code of ethics is a guide of sorts that is adopted by a particular organization or scientific community that helps us distinguish right from wrong. Scientists are sometimes not even aware that they have created fraudulent data, but frequently referencing and being educated of the code of ethics, help us ensure we are operating within the guidelines that lead to “good science”. Many organizations draft or create their own code of ethics. For example, a group of students at Worcester Polytechnic Institute drafted a code of ethics for robotics, a relatively new field in the grand scheme of science. In this code they included a piece that stated “[To this end and to the best of my ability I will] not knowingly misinform, and if misinformation is spread do my best to correct it” (Ingram et al., 2010). Most codes of ethics or editorial policies will include a piece with this same idea, such as The Association of Clinical Research Professionals who set forth a code use in scholarly work. The code states researchers should “Report research findings accurately and avoid misrepresenting, fabricating or falsifying results” (ACRP, 2020). 
Ensuring that scientists adhere to their respective codes will often mean that they actively avoid data fraud and fabrication by not spreading misinformation. Proactive solutions that catch and prevent problems before they happen are better, but it is often necessary to react when those methods fail.

Although the scientific community generally tries to prevent data fraud in the first place, fraud still happens. It is therefore important to have safeguards that keep fraudulent data from being published, which means performing checks like peer review and replica trials. Peer review is a method by which other members of the scientific community examine a publication to verify the work, and it can expose inconsistencies and false findings. Other members of your academic field read through your work to identify pieces that don’t make sense or may be inconsistent with reality. There are many varieties and techniques of peer review, but “Many believe [open review] is the best way to prevent malicious comments, stop plagiarism, prevent reviewers from following their own agenda, and encourage open, honest reviewing” (Elsevier, 2021). In the figure to the right, the steps of the peer review process are shown. The process is very thorough and has multiple fail-safes to catch fraudulent or falsified work. In addition to peer review, some schools may have the resources for an in-house reviewer. An in-house reviewer is someone with formal training in reviewing scholarly writing for things like reproducibility, accuracy, and the presence of falsified or fraudulent data (Winchester, 2018).

Sometimes peer review is not thorough enough to test the validity of a particular result from a study. This is the function of replica trials. If someone tells you it’s sunny outside, you will probably still look out the window before you head out; you never know, it could be raining. When researchers publish detailed methods, other researchers can perform the exact same work and compare the results for validity. One challenge with replica trials is ensuring that a scientist’s work is in fact detailed enough to be reproducible. Detailed work means that another scientist can accurately reproduce the exact experiment and check that the independent trials agree. Several techniques are seen as good ways to ensure that scientists produce replicable data. A 2016 survey of scientists in a variety of fields found that 90% of respondents believed experimental reproducibility could benefit from more robust experimental design, better statistics, and better mentorship (Baker, 2016). Another way to prevent data fraud through better reproducibility is simply to remove the temptation, by keeping strict records of who is performing which experiments and by locking files after data collection to prevent manipulation. If researchers choose to exclude data, there must be a strong justification for doing so. In the end, scrutiny and verification of others’ work by either method is an effective and respectful way to prevent the publication of fraudulent data.
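One way to picture "locking files after data collection" is to record a checksum for every data file once collection ends, then compare against that record later. The Python sketch below is only an illustration under stated assumptions: the function names (`sha256_of_file`, `build_manifest`, `changed_files`) and the idea of a checksum manifest are hypothetical choices made for this example, not a procedure described by any of the sources cited here. It detects changes after the fact; it does not stop anyone from editing a file.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir):
    """Map each file's path (relative to data_dir) to its checksum.

    Hypothetical helper: meant to be run once, when data collection ends.
    """
    root = Path(data_dir)
    return {
        p.relative_to(root).as_posix(): sha256_of_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(data_dir, manifest):
    """List files that were modified, removed, or added since the manifest was built."""
    current = build_manifest(data_dir)
    names = set(manifest) | set(current)
    return sorted(n for n in names if manifest.get(n) != current.get(n))
```

In practice the manifest would be stored somewhere the person collecting the data cannot edit, for example with a supervisor, so that a later call to `changed_files` gives an independent check that the recorded data are the data being analyzed.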

Preventing data fraud is an ongoing challenge for the scientific community, and methods of prevention are constantly improving as we educate future generations of scientists and review each other’s work through a more critical lens. Data falsification and fabrication are widespread problems that can endanger the integrity of science and the trustworthiness of researchers. Although there is no definitive solution to data fraud, the problem must be addressed by spreading awareness and recognizing trends. By understanding why scientists falsify and fabricate data and how this affects both individual scientists and the world of science as a whole, the community can implement tools to recognize and prevent data falsification. Science is the foundation for our understanding of how the world works, so it is our responsibility as scientists to continuously search for truth and uphold the prestige of science.

Bibliography

Al-Marzouki, Sanaa, Stephen Evans, Tom Marshall, and Ian Roberts. “Are These Data Real? Statistical Methods For The Detection Of Data Fabrication In Clinical Trials.” BMJ: British Medical Journal 331, no. 7511 (2005): 267-70. Accessed April 8, 2021. http://www.jstor.org/stable/25460301 .

Ingram, Brandon, Daniel Jones, Andrew Lewis, and Matthew Richards. “A Code of Ethics for Robotics Engineers,” March 6, 2010.

Couzin, Jennifer. “MIT Terminates Researcher over Data Fabrication.” Science 310, no. 5749 (2005): 758. Accessed April 8, 2021. http://www.jstor.org/stable/3842728 .

Dyer, Clare. “Diabetologist and Former Journal Editor Faces Charges of Data Fabrication.” BMJ: British Medical Journal 356 (2017). Accessed April 12, 2021. https://www.jstor.org/stable/26949701 .

Eisenach, James C. 2009. “Data Fabrication and Article Retraction: How Not to Get Lost in the Woods.” Anesthesiology 110 (5): 955–56. https://doi.org/10.1097/ALN.0b013e3181a06bf9

Fanelli, Daniele. “How Many Scientists Fabricate and Falsify Research? A Systematic Review and Meta-Analysis of Survey Data.” PsycEXTRA Dataset, 2009. https://doi.org/10.1037/e521122012-010.

Fong, Eric A., and Allen W. Wilhite. 2017. “Authorship and Citation Manipulation in Academic Research.” PLOS ONE 12 (12): e0187394. https://doi.org/10.1371/journal.pone.0187394 .

Fuentes, Gabriel A. “Federal Detention and ‘Wild Facts’ during the COVID-19 Pandemic.” The Journal of Criminal Law and Criminology (1973-) 110, no. 3 (2020): 441-76. Accessed April 8, 2021. https://www.jstor.org/stable/48573788 .

García-Pérez, Miguel Ángel. “Bayesian Estimation with Informative Priors Is Indistinguishable from Data Falsification.” The Spanish Journal of Psychology 22 (2019). https://doi.org/10.1017/sjp.2019.41.

George, Stephen L, and Marc Buyse. “Data Fraud in Clinical Trials.” Clinical Investigation 5, no. 2 (2015): 161–73. https://doi.org/10.4155/cli.14.116 .

Gropp, Robert E., Scott Glisson, Stephen Gallo, and Lisa Thompson. “Peer Review: A System under Stress.” BioScience 67, no. 5 (May 1, 2017): 407–10. https://doi.org/10.1093/biosci/bix034 .

Kupferschmidt, Kai. “Researcher at the Center of an Epic Fraud Remains an Enigma to Those Who Exposed Him.” Science, August 22, 2018. https://www.sciencemag.org/news/2018/08/researcher-center-epic-fraud-remains-enigma-those-who-exposed-hi .

Khaled, K. F. 2014. “Scientific Integrity in the Digital Age: Data Fabrication.” Research on Chemical Intermediates 40 (5): 1815–49. https://doi.org/10.1007/s11164-013-1084-5 .

Kitchin, Rob. “In Data We Trust.” In Data Lives: How Data Are Made and Shape Our World, 37-44. Bristol, UK: Bristol University Press, 2021. Accessed April 8, 2021. doi:10.2307/j.ctv1c9hmnq.9.

Korte, Sanne M., and Marcel A. G. van der Heyden. “Preventing Publication of Falsified and Fabricated Data: Roles of Scientists, Editors, Reviewers, and Readers.” Journal of Cardiovascular Pharmacology 69, no. 2 (February 2017): 65–70. https://doi.org/10.1097/FJC.0000000000000443 .

Leng, Gareth, and Rhodri Ivor Leng. “Where Are the Facts?” Essay. In The Matter of Facts: Skepticism, Persuasion, and Evidence in Science , 159–72. Cambridge, MA: The MIT Press, 2020.

Lu, Zaiming, Qiyong Guo, Aizhong Shi, Feng Xie, and Qingjie Lu. “Downregulation of NIN/RPN12 Binding Protein Inhibit the Growth of Human Hepatocellular Carcinoma Cells.” Molecular Biology Reports 39, no. 1 (2011): 501–7. https://doi.org/10.1007/s11033-011-0764-8.

Mayor, Susan. “Questions Raised over Safety of Common Plasma Substitute.” BMJ: British Medical Journal 346, no. 7896 (2013): 4. Accessed April 14, 2021. http://www.jstor.org/stable/23494149 .

Baker, Monya. “Is There a Reproducibility Crisis?” Nature 533 (May 26, 2016): 452–54.

National Academies of Sciences, Engineering, and Medicine. Reproducibility and Replicability in Science . The National Academies Press. Accessed April 26, 2021. https://doi.org/10.17226/25303 .

National Academy of Sciences. 2009. “Research Misconduct.” In On Being a Scientist: A Guide to Responsible Conduct in Research: Third Edition. 3rd ed. National Academies Press (US). https://www.ncbi.nlm.nih.gov/books/NBK214564/ .

Nurunnabi, Mohammad, and Monirul Alam Hossain. “Data Falsification and Question on Academic Integrity.” Accountability in Research 26, no. 2 (2019): 108–22. https://doi.org/10.1080/08989621.2018.1564664 .

Nuzzo, Regina. “Statistical Errors.” Nature 506 (February 13, 2014): 150–52. https://www.nature.com/collections/prbfkwmwvz

Open Science Collaboration. “Estimating the Reproducibility of Psychological Science.” Science 349, no. 6251 (August 28, 2015): aac4716–aac4716. https://doi.org/10.1126/science.aac4716 .

“Peer-Review Fraud — Hacking the Scientific Publication Process – ProQuest.” Accessed April 26, 2021. https://search-proquest-com.ezpxy-web-p-u01.wpi.edu/docview/1750062674?accountid=29120&pq-origsite=primo .

Pritchard, Michael S. “Teaching Research Ethics and Working Together.” Science and Engineering Ethics 11, no. 3 (September 1, 2005): 367–71. https://doi.org/10.1007/s11948-005-0005-4 .

Reiss, Michael J. “Teaching Ethics in Science.” Studies in Science Education 34, no. 1 (January 1, 1999): 115–40. https://doi.org/10.1080/03057269908560151.

Resnik, David B. “Data Fabrication and Falsification and Empiricist Philosophy of Science.” Science and Engineering Ethics 20, no. 2 (2013): 423–31. https://doi.org/10.1007/s11948-013-9466-z .

The Association of Clinical Research Professionals. “Code of Ethics.” ACRP, June 17, 2020. https://acrpnet.org/about/code-of-ethics/.

Safdar, Adeel, Konstantin Khrapko, James M. Flynn, Ayesha Saleem, Michael De Lisio, Adam P. Johnston, Yevgenya Kratysberg, et al. “RETRACTED ARTICLE: Exercise-Induced Mitochondrial p53 Repairs MtDNA Mutations in Mutator Mice.” Skeletal Muscle 6, no. 1 (2015). https://doi.org/10.1186/s13395-016-0075-9 .

“What Is Peer Review?” Accessed April 26, 2021. https://www.elsevier.com/reviewers/what-is-peer-review.

Winchester, Catherine. “Give Every Paper a Read for Reproducibility.” Nature 557, no. 7705 (May 2018): 281–281. https://doi.org/10.1038/d41586-018-05140-x.

Image Citations:

Scott Adams. Dilbert. https://enewspaper.latimes.com/infinity/article_share.aspx?guid=87c2c088-17ed-424e-afd7-a784b0e73086

Editors of Hamilton Spectator. Adeel Safdar domestic violence trial: The Hamilton Spectator. Retrieved April 26, 2021, from https://www.thespec.com/news/hamilton-region/adeel-safdar.html

Ireland after NAMA, October 4, 2012, accessed April 28, 2021 https://irelandafternama.wordpress.com/2012/10/04/the-geography-of-actual-sales-prices/

Korte, Sanne M., and Marcel A. G. Van Der Heyden. “Preventing Publication of Falsified and Fabricated Data: Roles of Scientists, Editors, Reviewers, and Readers.” Journal of Cardiovascular Pharmacology 69, no. 2 (2017): 65-70. doi:10.1097/fjc.0000000000000443.

Redbubble. Trust science (Black BG) Classic t-shirt. Retrieved April 26, 2021, from https://www.redbubble.com/i/t-shirt/Trust-Science-Black-BG-by-Thelittlelord/48496274.IJ6L0.XYZ


Data Fabrication and Falsification and Empiricist Philosophy of Science

David B. Resnik

National Institute for Environmental Health Science, National Institutes of Health, 111 Alexander Drive, Box 12233, Mail Drop CU03, Research Triangle Park, NC, 27709, USA; resnikd@niehs.nih.gov; Phone: 919 541 5658; Fax: 919 541 9854