How To Write The Results/Findings Chapter

For quantitative studies (dissertations & theses).

By: Derek Jansen (MBA) | Expert Reviewed By: Kerryn Warren (PhD) | July 2021

So, you’ve completed your quantitative data analysis and it’s time to report on your findings. But where do you start? In this post, we’ll walk you through the results chapter (also called the findings or analysis chapter), step by step, so that you can craft this section of your dissertation or thesis with confidence. If you’re looking for information regarding the results chapter for qualitative studies, that is covered in a separate post.

Overview: Quantitative Results Chapter

- What exactly the results chapter is

- What you need to include in your chapter

- How to structure the chapter

- Tips and tricks for writing a top-notch chapter

- Free results chapter template

What exactly is the results chapter?

The results chapter (also referred to as the findings or analysis chapter) is one of the most important chapters of your dissertation or thesis because it shows the reader what you’ve found in terms of the quantitative data you’ve collected. It presents the data using a clear text narrative, supported by tables, graphs and charts. In doing so, it also highlights any potential issues (such as outliers or unusual findings) you’ve come across.

But how’s that different from the discussion chapter?

Well, in the results chapter, you only present your statistical findings. Only the numbers, so to speak – no more, no less. By contrast, in the discussion chapter, you interpret your findings and link them to prior research (i.e. your literature review), as well as your research objectives and research questions. In other words, the results chapter presents and describes the data, while the discussion chapter interprets the data.

Let’s look at an example.

In your results chapter, you may have a plot that shows how respondents to a survey responded: the numbers of respondents per category, for instance. You may also state whether this supports a hypothesis by using a p-value from a statistical test. But it is only in the discussion chapter where you will say why this is relevant or how it compares with the literature or the broader picture. So, in your results chapter, make sure that you don’t present anything other than the hard facts – this is not the place for subjectivity.

It’s worth mentioning that some universities prefer you to combine the results and discussion chapters. Even so, it is good practice to separate the results and discussion elements within the chapter, as this ensures your findings are fully described. Typically, though, the results and discussion chapters are split up in quantitative studies. If you’re unsure, chat with your research supervisor or chair to find out what their preference is.

What should you include in the results chapter?

Following your analysis, it’s likely you’ll have far more data than are necessary to include in your chapter. In all likelihood, you’ll have a mountain of SPSS or R output data, and it’s your job to decide what’s most relevant. You’ll need to cut through the noise and focus on the data that matters.

This doesn’t mean that those analyses were a waste of time – on the contrary, those analyses ensure that you have a good understanding of your dataset and how to interpret it. However, that doesn’t mean your reader or examiner needs to see the 165 histograms you created! Relevance is key.

How do I decide what’s relevant?

At this point, it can be difficult to strike a balance between what is and isn’t important. But the most important thing is to ensure your results reflect and align with the purpose of your study. So, you need to revisit your research aims, objectives and research questions and use these as a litmus test for relevance. Make sure that you refer back to these constantly when writing up your chapter so that you stay on track.

As a general guide, your results chapter will typically include the following:

- Some demographic data about your sample

- Reliability tests (if you used measurement scales)

- Descriptive statistics

- Inferential statistics (if your research objectives and questions require these)

- Hypothesis tests (again, if your research objectives and questions require these)

We’ll discuss each of these points in more detail in the next section.

Importantly, your results chapter needs to lay the foundation for your discussion chapter. This means that, in your results chapter, you need to include all the data that you will use as the basis for your interpretation in the discussion chapter.

For example, if you plan to highlight the strong relationship between Variable X and Variable Y in your discussion chapter, you need to present the respective analysis in your results chapter – perhaps a correlation or regression analysis.


How do I write the results chapter?

There are multiple steps involved in writing up the results chapter for your quantitative research. The exact number of steps applicable to you will vary from study to study and will depend on the nature of the research aims, objectives and research questions. However, we’ll outline the generic steps below.

Step 1 – Revisit your research questions

The first step in writing your results chapter is to revisit your research objectives and research questions. These will be (or at least, should be!) the driving force behind your results and discussion chapters, so you need to review them and then ask yourself which statistical analyses and tests (from your mountain of data) would specifically help you address these. For each research objective and research question, list the specific piece (or pieces) of analysis that address it.

At this stage, it’s also useful to think about the key points that you want to raise in your discussion chapter and note these down so that you have a clear reminder of which data points and analyses you want to highlight in the results chapter. Again, list your points and then list the specific piece of analysis that addresses each point.

Next, you should draw up a rough outline of how you plan to structure your chapter. Which analyses and statistical tests will you present and in what order? We’ll discuss the “standard structure” in more detail later, but it’s worth mentioning now that it’s always useful to draw up a rough outline before you start writing (this advice applies to any chapter).

Step 2 – Craft an overview introduction

As with all chapters in your dissertation or thesis, you should start your quantitative results chapter by providing a brief overview of what you’ll do in the chapter and why. For example, you’d explain that you will start by presenting demographic data to understand the representativeness of the sample, before moving onto X, Y and Z.

This section shouldn’t be lengthy – a paragraph or two maximum. Also, it’s a good idea to weave the research questions into this section so that there’s a golden thread that runs through the document.

Step 3 – Present the sample demographic data

The first set of data that you’ll present is an overview of the sample demographics – in other words, the demographics of your respondents.

For example:

- What age range are they?

- How is gender distributed?

- How is ethnicity distributed?

- What areas do the participants live in?

The purpose of this is to assess how representative the sample is of the broader population. This is important for the sake of the generalisability of the results. If your sample is not representative of the population, you will not be able to generalise your findings. This is not necessarily the end of the world, but it is a limitation you’ll need to acknowledge.

Of course, to make this representativeness assessment, you’ll need to have a clear view of the demographics of the population. So, make sure that you design your survey to capture the correct demographic information that you will compare your sample to.
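To make the comparison concrete, here is a minimal sketch of a representativeness check. It assumes you have one demographic answer per respondent and a set of known population shares; the function name `sample_vs_population`, the age bands and all the figures are hypothetical and purely illustrative.

```python
from collections import Counter

def sample_vs_population(sample_values, population_shares):
    """Return {category: (sample_share, population_share, difference)}."""
    counts = Counter(sample_values)
    n = len(sample_values)
    report = {}
    for category, pop_share in population_shares.items():
        sample_share = counts.get(category, 0) / n
        report[category] = (sample_share, pop_share, sample_share - pop_share)
    return report

# Hypothetical survey responses (one age band per respondent)
ages = ["18-34"] * 20 + ["35-64"] * 50 + ["65+"] * 30
# Hypothetical population shares, e.g. taken from census figures
population = {"18-34": 0.30, "35-64": 0.50, "65+": 0.20}

for category, (s, p, diff) in sample_vs_population(ages, population).items():
    print(f"{category}: sample {s:.0%}, population {p:.0%}, difference {diff:+.0%}")
```

Large differences between the sample and population shares are the kind of limitation you would acknowledge in the chapter.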

But what if I’m not interested in generalisability?

Well, even if your purpose is not necessarily to extrapolate your findings to the broader population, understanding your sample will allow you to interpret your findings appropriately, considering who responded. In other words, it will help you contextualise your findings. For example, if 80% of your sample was aged over 65, this may be a significant contextual factor to consider when interpreting the data. Therefore, it’s important to understand and present the demographic data.

Step 4 – Review composite measures and the data “shape”.

Before you undertake any statistical analysis, you’ll need to do some checks to ensure that your data are suitable for the analysis methods and techniques you plan to use. If you try to analyse data that doesn’t meet the assumptions of a specific statistical technique, your results will be largely meaningless. Therefore, you may need to show that the methods and techniques you’ll use are “allowed”.

Most commonly, there are two areas you need to pay attention to:

#1: Composite measures

The first is when you have multiple scale-based measures that combine to capture one construct – this is called a composite measure. For example, you may have four Likert scale-based measures that (should) all measure the same thing, but in different ways. In other words, in a survey, these four scales should all receive similar ratings. This is called “internal consistency”.

Internal consistency is not guaranteed though (especially if you developed the measures yourself), so you need to assess the reliability of each composite measure using a test. Cronbach’s Alpha is the test most commonly used to assess internal consistency – i.e., to show that the items you’re combining are more or less saying the same thing. A high alpha score means that your measure is internally consistent. A low alpha score means you may need to consider scrapping one or more of the measures.
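If you’d like to see what sits behind the number your statistics package reports, the sketch below computes Cronbach’s Alpha with the standard formula: the number of items divided by that number minus one, multiplied by one minus the ratio of the summed item variances to the variance of the respondents’ total scores. The function name `cronbach_alpha` and the ratings are hypothetical.

```python
from statistics import variance

def cronbach_alpha(items):
    """items: list of k lists, one per scale item, each holding one score per respondent."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    item_variances = [variance(scores) for scores in items]
    # Total score per respondent across all items
    totals = [sum(scores) for scores in zip(*items)]
    return (k / (k - 1)) * (1 - sum(item_variances) / variance(totals))

# Hypothetical ratings: 4 Likert items answered by 5 respondents
items = [
    [4, 5, 3, 2, 4],
    [4, 4, 3, 2, 5],
    [5, 5, 2, 2, 4],
    [4, 5, 3, 1, 4],
]
alpha = cronbach_alpha(items)
print(f"Cronbach's alpha = {alpha:.2f}")
```

A value of around 0.7 or higher is a commonly used rule of thumb for acceptable internal consistency, although conventions vary by field.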

#2: Data shape

The second matter that you should address early on in your results chapter is data shape. In other words, you need to assess whether the data in your set are approximately normally distributed (i.e. symmetrical and bell-shaped) or not, as this will directly impact what type of analyses you can use. Many common inferential tests such as t-tests or ANOVAs (we’ll discuss these a bit later) assume that your data are normally distributed. If they’re not, you’ll need to adjust your strategy and use alternative tests.

To assess the shape of the data, you’ll usually assess a variety of descriptive statistics (such as the mean, median and skewness), which is what we’ll look at next.

Step 5 – Present the descriptive statistics

Now that you’ve laid the foundation by discussing the representativeness of your sample, as well as the reliability of your measures and the shape of your data, you can get started with the actual statistical analysis. The first step is to present the descriptive statistics for your variables.

For scaled data, this usually includes statistics such as:

- The mean – this is simply the mathematical average of a range of numbers.

- The median – this is the midpoint in a range of numbers when the numbers are arranged in order.

- The mode – this is the most commonly repeated number in the data set.

- Standard deviation – this metric indicates how dispersed a range of numbers is. In other words, how close all the numbers are to the mean (the average).

- Skewness – this indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph (this is called a normal distribution), or do they lean to the left or right (this is called a skewed, non-normal distribution)?

- Kurtosis – this metric indicates whether the data are heavily or lightly-tailed, relative to the normal distribution. In other words, how peaked or flat the distribution is.

A large table that indicates all the above for multiple variables can be a very effective way to present your data economically. You can also use colour coding to help make the data more easily digestible.
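As a rough illustration, the descriptive statistics listed above can be computed with Python’s standard library. This is only a sketch: the `describe` function and the scores are hypothetical, and the skewness and kurtosis here use simple (uncorrected) moment formulas, so packages like SPSS or R may report slightly different values because they often apply small-sample corrections.

```python
from statistics import mean, median, multimode, stdev

def describe(values):
    """Return common descriptive statistics for a list of numbers."""
    n = len(values)
    m = mean(values)
    # Central moments (divided by n, with no small-sample correction)
    m2 = sum((x - m) ** 2 for x in values) / n
    m3 = sum((x - m) ** 3 for x in values) / n
    m4 = sum((x - m) ** 4 for x in values) / n
    return {
        "n": n,
        "mean": m,
        "median": median(values),
        "mode": multimode(values),      # list, since there can be ties
        "sd": stdev(values),            # sample standard deviation
        "skewness": m3 / m2 ** 1.5,     # 0 for perfectly symmetrical data
        "excess_kurtosis": m4 / m2 ** 2 - 3,  # 0 for a normal distribution
    }

scores = [2, 3, 3, 4, 4, 4, 5, 5, 9]  # hypothetical scale scores
for name, value in describe(scores).items():
    print(name, value)
```

Running `describe` once per variable gives you the rows of the kind of summary table described above.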

For categorical data, where you show the percentage of people who chose or fit into a category, for instance, you can either just plain describe the percentages or numbers of people who responded to something or use graphs and charts (such as bar graphs and pie charts) to present your data in this section of the chapter.

When using figures, make sure that you label them simply and clearly, so that your reader can easily understand them. There’s nothing more frustrating than a graph that’s missing axis labels! Keep in mind that although you’ll be presenting charts and graphs, your text content needs to present a clear narrative that can stand on its own. In other words, don’t rely purely on your figures and tables to convey your key points: highlight the crucial trends and values in the text. Figures and tables should complement the writing, not carry it.

Depending on your research aims, objectives and research questions, you may stop your analysis at this point (i.e. descriptive statistics). However, if your study requires inferential statistics, then it’s time to deep dive into those.

Step 6 – Present the inferential statistics

Inferential statistics are used to make generalisations about a population, whereas descriptive statistics focus purely on the sample. Inferential statistical techniques, broadly speaking, can be broken down into two groups.

First, there are those that compare measurements between groups, such as t-tests (which test for differences between two groups) and ANOVAs (which test for differences between three or more groups). Second, there are techniques that assess the relationships between variables, such as correlation analysis and regression analysis. Within each of these, some tests (parametric tests) assume normally distributed data, while others (non-parametric tests) are designed specifically for data that are not normally distributed.
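To give a feel for the two families, the sketch below computes one statistic from each: Welch’s t statistic (a variant of the t-test that does not assume equal variances) for a difference between two groups, and Pearson’s correlation coefficient for a relationship between two variables. The function names and all the data are hypothetical. Note that it stops at the test statistics; turning a t statistic into a p-value requires the t distribution, which you would normally get from your statistics package.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(group_a, group_b):
    """Welch's t statistic for the difference between two group means."""
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    return (mean(group_a) - mean(group_b)) / se

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

# Hypothetical data: test scores for two groups, and hours studied vs. score
group_1 = [62, 70, 68, 75, 71]
group_2 = [58, 64, 60, 66, 63]
hours = [1, 2, 3, 4, 5]
score = [52, 58, 61, 70, 74]

print(f"Welch's t = {welch_t(group_1, group_2):.2f}")
print(f"Pearson's r = {pearson_r(hours, score):.2f}")
```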

There are a seemingly endless number of tests that you can use to crunch your data, so it’s easy to run down a rabbit hole and end up with piles of test data. Ultimately, the most important thing is to make sure that you adopt the tests and techniques that allow you to achieve your research objectives and answer your research questions.

In this section of the results chapter, you should try to make use of figures and visual components as effectively as possible. For example, if you present a correlation table, use colour coding to highlight the significance of the correlation values, or scatterplots to visually demonstrate what the trend is. The easier you make it for your reader to digest your findings, the more effectively you’ll be able to make your arguments in the next chapter.

Step 7 – Test your hypotheses

If your study requires it, the next stage is hypothesis testing. A hypothesis is a statement, often indicating a difference between groups or relationship between variables, that can be supported or rejected by a statistical test. However, not all studies will involve hypotheses (again, it depends on the research objectives), so don’t feel like you “must” present and test hypotheses just because you’re undertaking quantitative research.

The basic process for hypothesis testing is as follows:

- Specify your null hypothesis (for example, “The chemical psilocybin has no effect on time perception”).

- Specify your alternative hypothesis (e.g., “The chemical psilocybin has an effect on time perception”).

- Set your significance level (this is usually 0.05).

- Calculate your statistics and find your p-value (e.g., p=0.01).

- Draw your conclusions by comparing the p-value to the significance level (e.g., because 0.01 is below 0.05, you reject the null hypothesis: “The chemical psilocybin does have an effect on time perception”).
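The steps above can be sketched in code. To keep the example self-contained, it uses an exact permutation test rather than a t-test: it asks how often a random reshuffling of the group labels produces a difference in means at least as large as the one observed. This is a substitute technique chosen for illustration, not necessarily the test your study calls for, and the function name and measurements are hypothetical.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test for a difference in means (small samples only)."""
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    total = sum(pooled)
    extreme = count = 0
    # Try every way of assigning n_a of the pooled values to group A
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        count += 1
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    return extreme / count

ALPHA = 0.05  # significance level, set before looking at the data

# Hypothetical measurements for a control and a treatment group
control = [10, 12, 11, 13, 12]
treatment = [15, 17, 16, 18, 14]

p = permutation_p_value(control, treatment)
decision = "reject" if p < ALPHA else "fail to reject"
print(f"p = {p:.4f}: {decision} the null hypothesis")
```

Whatever test you use, the decision rule in the last step is the same: reject the null hypothesis only if the p-value is below the significance level you set in advance.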

Finally, if the aim of your study is to develop and test a conceptual framework, this is the time to present it, following the testing of your hypotheses. While you don’t need to develop or discuss these findings further in the results chapter, indicating whether the tests (and their p-values) support or reject the hypotheses is crucial.

Step 8 – Provide a chapter summary

To wrap up your results chapter and transition to the discussion chapter, you should provide a brief summary of the key findings. “Brief” is the keyword here – much like the chapter introduction, this shouldn’t be lengthy – a paragraph or two maximum. Highlight the findings most relevant to your research objectives and research questions, and wrap it up.

Some final thoughts, tips and tricks

Now that you’ve got the essentials down, here are a few tips and tricks to make your quantitative results chapter shine:

- When writing your results chapter, report your findings in the past tense. You’re talking about what you’ve found in your data, not what you are currently looking for or trying to find.

- Structure your results chapter systematically and sequentially. If you had two experiments where findings from the one generated inputs into the other, report on them in order.

- Make your own tables and graphs rather than copying and pasting them from statistical analysis programmes like SPSS. Check out the DataIsBeautiful reddit for some inspiration.

- Once you’re done writing, review your work to make sure that you have provided enough information to answer your research questions, but also that you didn’t include superfluous information.



11 Tips For Writing a Dissertation Data Analysis

With the arrival of the fourth industrial revolution and the digital world, we are surrounded by data. Terabytes of it sit in data centers waiting to be processed and used. To be useful, that data must be analyzed appropriately, and dissertation data analysis is the foundation of that work. If the data analysis is valid and free from errors, the research outcomes will be reliable and lead to a successful dissertation.

Given the complexity of many data analysis projects, it is difficult to get precise results if analysts are not familiar with the relevant data analysis tools and tests. Analysis is a time-consuming process that starts with collecting valid and relevant data and ends with presenting error-free results.

So, in this article, we will cover the need to analyze data, what dissertation data analysis is, and, above all, tips for writing an outstanding data analysis dissertation. If you are a doctoral student and plan to perform dissertation data analysis on your data, make sure that you give this article a thorough read for the best tips!

What is Data Analysis in Dissertation?

Dissertation data analysis is the process of gathering, compiling, understanding, and processing a large amount of data, then identifying common patterns in responses and critically examining facts and figures to find the rationale behind those outcomes.

Even if you have collected and compiled your data in the form of facts and figures, that alone is not enough to prove your research outcomes. You still need to analyze the data before you can use it in the dissertation. The analysis provides scientific support for the thesis and conclusions of the research.

Data Analysis Tools

There are plenty of indicative tests used to analyze data and infer relevant results for the discussion part. Following are some tests used to perform analysis of data leading to a scientific conclusion:

11 Most Useful Tips for Dissertation Data Analysis

Doctoral students need to perform dissertation data analysis and then write up the dissertation to receive their degree. Many Ph.D. students find dissertation data analysis hard because they have not been trained in it.

1. Dissertation Data Analysis Services

The first tip applies to those students who can afford to look for help with their dissertation data analysis work. It’s a viable option, and it can help with time management and with building the other elements of the dissertation in more detail.

Dissertation analysis services are professional services that help doctoral students with all the basics of their dissertation work, from planning, research and clarification, methodology, dissertation data analysis and review, and literature review to the final PowerPoint presentation.

One well-known provider of professional dissertation data analysis services is Statistics Solutions, which has been helping students with their dissertation work for over 22 years.

For a proper dissertation data analysis, the student needs a clear understanding of the data and sound statistical knowledge. With this knowledge and experience, a student can perform dissertation analysis on their own.

Following are some helpful tips for writing a splendid dissertation data analysis:

2. Relevance of Collected Data

If the data is irrelevant or inappropriate, you may drift away from the focus of your research. To show the reader that you can approach the problem critically, write a theoretical proposition that explains your selection and analysis of data.

3. Data Analysis

For analysis, it is crucial to use methods that best fit the types of data collected and the research objectives. Explain these methods thoroughly, along with the reasoning that justifies your data collection methods. Show the reader that you did not choose your method at random but arrived at it after critical analysis and careful research.

Quantitative analysis refers to the analysis and interpretation of facts and figures in order to build the reasoning behind the primary findings. An assessment of the main results and the literature review plays a pivotal role in both qualitative and quantitative analysis.

The overall objective of data analysis is to detect patterns and tendencies in data and then present the outcomes clearly. It provides a solid foundation for critical conclusions and helps the researcher complete the dissertation proposal.

4. Qualitative Data Analysis

Qualitative data refers to data that does not involve numbers. You are required to analyze the data collected through experiments, focus groups, and interviews. This can be a time-consuming process because it requires iterative examination and sometimes demands the application of hermeneutics. Note that the aim of qualitative techniques is not only to generate good outcomes but also to uncover deeper knowledge that can be transferable.

Presenting qualitative data analysis in a dissertation can also be challenging, because it contains longer and more detailed responses. Placing such comprehensive data coherently in one chapter of the dissertation is difficult for two reasons. Firstly, it is not always clear which data to include and which to exclude. Secondly, unlike quantitative data, qualitative data is hard to present in figures and tables; condensing it into a visual representation is often not possible. As a writer, it is essential to address both of these challenges.

Qualitative Data Analysis Methods

Following are the methods used to perform qualitative data analysis.

- Deductive Method

This method involves analyzing qualitative data based on a structure or argument that the researcher has defined in advance. It’s a comparatively easy approach to analyzing data. It is suitable for a researcher who has a fair idea of the responses they are likely to receive from the questionnaires.

- Inductive Method

In this method, the researcher analyzes the data without relying on any predefined rules. It is a time-consuming process, typically used by researchers who have very little prior knowledge of the research phenomenon.
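As a rough illustration of the deductive method, the sketch below applies a predefined codebook to open-ended responses by simple keyword matching. The codes, keywords and responses are all hypothetical, and real qualitative coding relies on the researcher’s judgment rather than keyword matching alone; this only shows the idea of starting from categories defined in advance.

```python
# Hypothetical codebook defined before looking at the data
CODEBOOK = {
    "workload": ["overtime", "workload", "hours"],
    "support": ["manager", "support", "mentor"],
}

def code_response(text, codebook=CODEBOOK):
    """Return the sorted list of codes whose keywords appear in the response."""
    lowered = text.lower()
    return sorted(
        code
        for code, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    )

# Hypothetical interview excerpts
responses = [
    "My manager ignores the overtime I do",
    "I had a great mentor in my first year",
    "Nothing to add",
]
for response in responses:
    print(code_response(response), "<-", response)
```

With the inductive method there would be no `CODEBOOK` to start from; the codes would emerge from reading the responses.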

5. Quantitative Data Analysis

Quantitative data consists of facts and figures obtained from scientific research and requires extensive statistical analysis. After collecting and analyzing the data, you will be able to draw conclusions. Outcomes can be generalized beyond the sample to a larger group only if the sample is assumed to be representative, so checking representativeness is one of the preliminary steps in your analysis. This approach is also referred to as the “scientific method”, with its roots in the natural sciences.

The presentation of quantitative data depends on the domain in which it is being presented. It is beneficial to consider your audience while writing up your findings. Quantitative data for hard sciences might require numeric inputs and statistics. As for natural sciences, such comprehensive analysis is not required.

Quantitative Analysis Methods

Following are some of the methods used to perform quantitative data analysis.

- Trend analysis: This corresponds to a statistical analysis approach to look at the trend of quantitative data collected over a considerable period.

- Cross-tabulation: This method uses a tabular format to draw inferences between data sets in research.

- Conjoint analysis: A quantitative data analysis method that can collect and analyze advanced measures. These measures provide insight into purchasing decisions and the parameters that matter most.

- TURF analysis: This approach assesses the total market reach of a service or product or a mix of both.

- Gap analysis: It utilizes the side-by-side matrix to portray quantitative data, which captures the difference between the actual and expected performance.

- Text analysis: In this method, innovative tools enumerate open-ended data into easily understandable data.
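Of the methods listed above, cross-tabulation is the easiest to show in a few lines. The sketch below counts how often each combination of two categorical variables occurs; the `crosstab` function and the survey answers are hypothetical and purely illustrative.

```python
from collections import Counter

def crosstab(rows, cols):
    """Count how often each (row category, column category) pair occurs."""
    counts = Counter(zip(rows, cols))
    row_labels = sorted(set(rows))
    col_labels = sorted(set(cols))
    # Counter returns 0 for pairs that never occur
    return {r: {c: counts[(r, c)] for c in col_labels} for r in row_labels}

# Hypothetical survey data: one entry per respondent
gender = ["F", "M", "F", "F", "M", "M", "F"]
answer = ["yes", "no", "yes", "no", "yes", "no", "yes"]

table = crosstab(gender, answer)
for row_label, row in table.items():
    print(row_label, row)
```

Each inner dictionary is one row of the table, ready to be laid out in the dissertation or passed to a follow-up test.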

6. Data Presentation Tools

Since large volumes of data need to be represented, it becomes a difficult task to present such an amount of data in coherent ways. To resolve this issue, consider all the available choices you have, such as tables, charts, diagrams, and graphs.

Tables help in presenting both qualitative and quantitative data concisely. While presenting data, always keep your reader in mind. What is clear to you may not be apparent to your reader. So, keep asking whether your data presentation method is understandable to someone less conversant with your research and findings. If the answer is “No”, you may need to rethink your presentation.

7. Include Appendix or Addendum

After you present a large amount of data, the analysis part of your dissertation might look messy and disorganized. At the same time, you will not want to cut or exclude data that you spent days and months collecting. To resolve this, include an appendix.

Place the data that you find hard to arrange within the text in the appendix of the dissertation, along with questionnaires, transcripts of focus groups and interviews, and data sheets. The statistical analysis and quotations from interviewees, on the other hand, belong within the body of the dissertation.

8. Thoroughness of Data

It is a common misconception that the data presented is self-explanatory. Many students provide the data and quotes and assume that this explains everything. It does not. Rather than just quoting everything, you should analyze the data and identify which of it you will use to support or refute your standpoints.

Thoroughly demonstrate the ideas and critically analyze each perspective, taking care of the points where errors can occur. Always discuss the anomalies and strengths of your data to add credibility to your research.

9. Discussing Data

Discussion of data involves elaborating on the dimensions used to classify patterns, themes, and trends in the presented data. In addition, take theoretical interpretations into account to give a balanced view. Discuss the reliability of your data by assessing its effect and significance. Do not hide the anomalies. When you draw on interviews to discuss the data, use relevant quotes to develop a strong rationale.

It also involves answering what you are trying to do with the data and how you have structured your findings. Once you have presented the results, the reader will be looking for interpretation. Hence, it is essential to deliver the understanding as soon as you have presented your data.

10. Findings and Results

Findings are the facts derived from the analysis of the collected data. These outcomes should be stated clearly, and the statements should tightly support your objective and provide logical reasoning and scientific backing for your point. This part makes up the majority of the dissertation.

In the findings part, you should tell the reader what they are looking for. There should be no suspense, as it would divert the reader’s attention. State your findings clearly and concisely so that the reader gets an idea of what is to come in your dissertation.

11. Connection with Literature Review

At the end of your data analysis in the dissertation, make sure to compare your data with other published research. In this way, you can identify the points of difference and agreement. Check whether your findings are consistent with your expectations and look for any bottlenecks; analyze and discuss the reasons behind them. Identify the key themes, gaps, and the relation of your findings to the literature review. In short, you should link your data to your research question, and the questions should form a basis for the literature review.


Wrapping Up

Writing the data analysis in a dissertation takes dedication, and carrying it out demands sound knowledge and proper planning. Choosing your topic, gathering relevant data, analyzing it, presenting your data and findings correctly, discussing the results, and connecting them with the literature and conclusions are its milestones. Among these, the data analysis stage is the most important and requires great care.

In this article, we looked at tips that prove valuable for writing a data analysis in a dissertation. Give it a thorough read before you write your own data analysis, so that your research is set up for success.

Oxbridge Essays. Top 10 Tips for Writing a Dissertation Data Analysis.

Emidio Amadebai

As an IT Engineer, who is passionate about learning and sharing. I have worked and learned quite a bit from Data Engineers, Data Analysts, Business Analysts, and Key Decision Makers almost for the past 5 years. Interested in learning more about Data Science and How to leverage it for better decision-making in my business and hopefully help you do the same in yours.


Review our examples before placing an order, learn how to draft academic papers, a step-by-step guide to dissertation data analysis.

How to Write a Dissertation Conclusion? | Tips & Examples

What is PhD Thesis Writing? | Beginner’s Guide

A data analysis dissertation is a complex and challenging project requiring significant time, effort, and expertise. Fortunately, it is possible to successfully complete a data analysis dissertation with careful planning and execution.

As a student, you must know how important it is to have a strong and well-written dissertation, especially regarding data analysis. Proper data analysis is crucial to the success of your research and can often make or break your dissertation.

To get a better understanding, you may review the data analysis dissertation examples listed below:

- Impact of Leadership Style on the Job Satisfaction of Nurses

- Effect of Brand Love on Consumer Buying Behaviour in Dietary Supplement Sector

- An Insight Into Alternative Dispute Resolution

- An Investigation of Cyberbullying and its Impact on Adolescent Mental Health in UK


Types of Data Analysis for a Dissertation

The various types of data analysis in a dissertation are as follows:

1. Qualitative Data Analysis

Qualitative data analysis is a type of data analysis that involves analyzing data that cannot be measured numerically. This data type includes interviews, focus groups, and open-ended surveys. Qualitative data analysis can be used to identify patterns and themes in the data.

2. Quantitative Data Analysis

Quantitative data analysis is a type of data analysis that involves analyzing data that can be measured numerically. This data type includes test scores, income levels, and crime rates. Quantitative data analysis can be used to test hypotheses and to look for relationships between variables.

3. Descriptive Data Analysis

Descriptive data analysis is a type of data analysis that involves describing the characteristics of a dataset. This type of data analysis summarizes the main features of a dataset.

4. Inferential Data Analysis

Inferential data analysis is a type of data analysis that involves drawing conclusions about a wider population from a sample dataset. This type of data analysis can be used to test hypotheses and make predictions about future events.

5. Exploratory Data Analysis

Exploratory data analysis is a type of data analysis that involves exploring a data set to understand it better. This type of data analysis can identify patterns and relationships in the data.

How Long Does It Take to Plan and Complete a Data Analysis Dissertation?

When planning dissertation data analysis, it is important to consider the structure of your dissertation methodology, as it will give you an understanding of how long each stage will take. For example, if you use a qualitative research method, your data analysis will involve coding and categorizing your data.

This can be time-consuming, so allowing enough time in your schedule is important. Once you have coded and categorized your data, you will need to write up your findings. Again, this can take some time, so factor this into your schedule.

Finally, you will need to proofread and edit your dissertation before submitting it. All told, a data analysis dissertation can take anywhere from several weeks to several months to complete, depending on the project’s complexity. Therefore, it is important to start planning early and allow enough time in your schedule to complete the task.

Essential Strategies for Data Analysis Dissertation

A. Planning

The first step in any dissertation is planning. You must decide what you want to write about and how you want to structure your argument. For a data analysis dissertation, this planning will involve deciding what data you want to analyze and what methods you will use.

B. Prototyping

Once you have a plan for your dissertation, it’s time to start writing. However, creating a prototype is important before diving head-first into writing your dissertation. A prototype is a rough draft of your argument that allows you to get feedback from your advisor and committee members. This feedback will help you fine-tune your argument before you start writing the final version of your dissertation.

C. Executing

After you have created a plan and prototype for your data analysis dissertation, it’s time to start writing the final version. This process will involve collecting and analyzing data and writing up your results. You will also need to create a conclusion section that ties everything together.

D. Presenting

The final step in acing your data analysis dissertation is presenting it to your committee. This presentation should be well-organized and professionally presented. During the presentation, you’ll also need to be ready to respond to questions concerning your dissertation.

Data Analysis Tools

Numerous tools are used to assess the data and derive pertinent findings for the discussion section. The tools commonly used to analyze data and reach a scientific conclusion are as follows:

a. Excel

Excel is a spreadsheet program that is part of the Microsoft Office productivity software suite. Excel is a powerful tool that can be used for various data analysis tasks, such as creating charts and graphs, performing mathematical calculations, and sorting and filtering data.

b. Google Sheets

Google Sheets is a free online spreadsheet application that is part of the Google Drive suite of productivity software. Google Sheets is similar to Excel in terms of functionality, but it also has some unique features, such as the ability to collaborate with other users in real-time.

c. SPSS

SPSS is a statistical analysis software program commonly used in the social sciences. SPSS can be used for various data analysis tasks, such as hypothesis testing, factor analysis, and regression analysis.

d. STATA

STATA is a statistical analysis software program commonly used in the sciences and economics. STATA can be used for data management, statistical modelling, descriptive statistics analysis, and data visualization tasks.

e. SAS

SAS is a commercial statistical analysis software program used by businesses and organizations worldwide. SAS can be used for predictive modelling, market research, and fraud detection.

f. R

R is a free, open-source statistical programming language popular among statisticians and data scientists. R can be used for tasks such as data wrangling, machine learning, and creating complex visualizations.

g. Python

Python is a general-purpose programming language used in a variety of applications, including web development, scientific computing, and artificial intelligence. Python also has a number of modules and libraries that can be used for data analysis tasks, such as numerical computing, statistical modelling, and data visualization.
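As a small illustration of Python in this role, the sketch below loads a tiny survey export and averages the scores per group using only the standard library. The column names and values are invented for demonstration; a real project would more likely read a file from disk and might use a dedicated data analysis library.

```python
import csv
import io
import statistics

# Hypothetical survey export, invented for illustration only.
raw_csv = """respondent,group,score
1,control,62
2,control,71
3,treatment,75
4,treatment,80
"""

# Parse the CSV text into one dictionary per respondent.
rows = list(csv.DictReader(io.StringIO(raw_csv)))

# Collect scores per group, converting the text values to numbers.
scores_by_group = {}
for row in rows:
    scores_by_group.setdefault(row["group"], []).append(float(row["score"]))

# Average score for each group.
group_means = {
    group: statistics.mean(scores) for group, scores in scores_by_group.items()
}
print(group_means)
```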


Tips to Compose a Successful Data Analysis Dissertation

a. Choose a Topic You’re Passionate About

The first step to writing a successful data analysis dissertation is to choose a topic you’re passionate about. Not only will this make the research and writing process more enjoyable, but it will also ensure that you produce a high-quality paper.

Choose a topic that is particular enough to be covered in your paper’s scope but not so specific that it will be challenging to obtain enough evidence to substantiate your arguments.

b. Do Your Research

Data analysis in research is an important part of academic writing. Once you’ve selected a topic, it’s time to begin your research. Be sure to consult with your advisor or supervisor frequently during this stage to ensure that you are on the right track. In addition to secondary sources such as books, journal articles, and reports, you should also consider conducting primary research through surveys or interviews. This will give you first-hand insights into your topic that can be invaluable when writing your paper.

c. Develop a Strong Thesis Statement

After you’ve done your research, it’s time to start developing your thesis statement. It is arguably the most crucial part of your entire paper, so take care to craft a clear and concise statement that encapsulates the main argument of your paper.

Remember that your thesis statement should be arguable—that is, it should be capable of being disputed by someone who disagrees with your point of view. If your thesis statement is not arguable, it will be difficult to write a convincing paper.

d. Write a Detailed Outline

Once you have developed a strong thesis statement, the next step is to write a detailed outline of your paper. This will offer you a direction to write in and guarantee that your paper makes sense from beginning to end.

Your outline should include an introduction, in which you state your thesis statement; several body paragraphs, each devoted to a different aspect of your argument; and a conclusion, in which you restate your thesis and summarize the main points of your paper.

e. Write Your First Draft

With your outline in hand, it’s finally time to start writing your first draft. At this stage, don’t worry about perfecting your grammar or making sure every sentence is exactly right—focus on getting all of your ideas down on paper (or onto the screen). Once you have completed your first draft, you can revise it for style and clarity.

And there you have it! Following these simple tips can increase your chances of success when writing your data analysis dissertation. Just remember to start early, give yourself plenty of time to research and revise, and consult with your supervisor frequently throughout the process.


Studying the above examples gives you valuable insight into the structure and content that should be included in your own data analysis dissertation. You can also learn how to effectively analyze and present your data and make a lasting impact on your readers.

In addition to being a useful resource for completing your dissertation, these examples can also serve as a valuable reference for future academic writing projects. By following these examples and understanding their principles, you can improve your data analysis skills and increase your chances of success in your academic career.


admin farhan


Raw Data to Excellence: Master Dissertation Analysis

Discover the secrets of successful dissertation data analysis. Get practical advice and useful insights from experienced experts now!

Have you ever found yourself knee-deep in a dissertation, desperately seeking answers from the data you’ve collected? Or have you ever felt lost in all that data, not knowing where to start? Fear not: in this article, we discuss the method that helps you out of this situation, namely dissertation data analysis.

Dissertation data analysis is like uncovering hidden treasures within your research findings. It’s where you roll up your sleeves and explore the data you’ve collected, searching for patterns, connections, and those “a-ha!” moments. Whether you’re crunching numbers, dissecting narratives, or diving into qualitative interviews, data analysis is the key that unlocks the potential of your research.

Dissertation Data Analysis

Dissertation data analysis plays a crucial role in conducting rigorous research and drawing meaningful conclusions. It involves the systematic examination, interpretation, and organization of data collected during the research process. The aim is to identify patterns, trends, and relationships that can provide valuable insights into the research topic.

The first step in dissertation data analysis is to carefully prepare and clean the collected data. This may involve removing any irrelevant or incomplete information, addressing missing data, and ensuring data integrity. Once the data is ready, various statistical and analytical techniques can be applied to extract meaningful information.
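A minimal sketch of this cleaning step is shown below, assuming the data is held as a list of records and that incomplete records are simply dropped (listwise deletion). The field names and values are hypothetical, and dropping is only one option; imputing missing values may suit some studies better.

```python
# Hypothetical raw survey records; None marks a missing answer.
raw_records = [
    {"id": 1, "age": 24, "satisfaction": 4},
    {"id": 2, "age": None, "satisfaction": 5},
    {"id": 3, "age": 31, "satisfaction": None},
    {"id": 4, "age": 29, "satisfaction": 3},
    {"id": 4, "age": 29, "satisfaction": 3},  # accidental duplicate entry
]


def clean(records, required_fields):
    """Drop duplicate ids and records missing any required field."""
    seen_ids = set()
    cleaned = []
    for record in records:
        if record["id"] in seen_ids:
            continue  # already kept a record with this id
        if any(record.get(field) is None for field in required_fields):
            continue  # incomplete record
        seen_ids.add(record["id"])
        cleaned.append(record)
    return cleaned


clean_records = clean(raw_records, ["age", "satisfaction"])
print(len(raw_records), "records before cleaning,", len(clean_records), "after")
```

It is worth reporting how many records were removed and why, since this affects the sample size used in later tests.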

Descriptive statistics are commonly used to summarize and describe the main characteristics of the data, such as measures of central tendency (e.g., mean, median) and measures of dispersion (e.g., standard deviation, range). These statistics help researchers gain an initial understanding of the data and identify any outliers or anomalies.
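The measures named above can be computed with Python's built-in `statistics` module, as in the sketch below. The values are invented, and the "more than two standard deviations from the mean" rule used to flag outliers is a common rule of thumb chosen here for illustration, not a universal standard.

```python
import statistics

# Hypothetical response times in seconds; 95 is a deliberately extreme value.
values = [12, 15, 14, 13, 16, 15, 14, 95]

# Measures of central tendency.
mean_value = statistics.mean(values)
median_value = statistics.median(values)

# Measures of dispersion.
std_dev = statistics.stdev(values)  # sample standard deviation
value_range = max(values) - min(values)

# Flag points more than 2 standard deviations away from the mean.
outliers = [v for v in values if abs(v - mean_value) > 2 * std_dev]

print(f"mean={mean_value}, median={median_value}, range={value_range}")
print(f"standard deviation={std_dev:.2f}, outliers={outliers}")
```

Note how the single extreme value pulls the mean well above the median, which is one reason to report both.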

Furthermore, qualitative data analysis techniques can be employed when dealing with non-numerical data, such as textual data or interviews. This involves systematically organizing, coding, and categorizing qualitative data to identify themes and patterns.

Types of Research

When considering research types in the context of dissertation data analysis, several approaches can be employed:

1. Quantitative Research

This type of research involves the collection and analysis of numerical data. It focuses on generating statistical information and making objective interpretations. Quantitative research often utilizes surveys, experiments, or structured observations to gather data that can be quantified and analyzed using statistical techniques.

2. Qualitative Research

In contrast to quantitative research, qualitative research focuses on exploring and understanding complex phenomena in depth. It involves collecting non-numerical data such as interviews, observations, or textual materials. Qualitative data analysis involves identifying themes, patterns, and interpretations, often using techniques like content analysis or thematic analysis.

3. Mixed-Methods Research

This approach combines both quantitative and qualitative research methods. Researchers employing mixed-methods research collect and analyze both numerical and non-numerical data to gain a comprehensive understanding of the research topic. The integration of quantitative and qualitative data can provide a more nuanced and comprehensive analysis, allowing for triangulation and validation of findings.

Primary vs. Secondary Research

Primary Research

Primary research involves the collection of original data specifically for the purpose of the dissertation. This data is directly obtained from the source, often through surveys, interviews, experiments, or observations. Researchers design and implement their data collection methods to gather information that is relevant to their research questions and objectives. Data analysis in primary research typically involves processing and analyzing the raw data collected.

Secondary Research

Secondary research involves the analysis of existing data that has been previously collected by other researchers or organizations. This data can be obtained from various sources such as academic journals, books, reports, government databases, or online repositories. Secondary data can be either quantitative or qualitative, depending on the nature of the source material. Data analysis in secondary research involves reviewing, organizing, and synthesizing the available data.

If you want to go deeper into research methodology, also read: What is Methodology in Research and How Can We Write it?

Types of Analysis

Various types of analysis techniques can be employed to examine and interpret the collected data. Of these, the most important and widely used are:

- Descriptive Analysis: Descriptive analysis focuses on summarizing and describing the main characteristics of the data. It involves calculating measures of central tendency (e.g., mean, median) and measures of dispersion (e.g., standard deviation, range). Descriptive analysis provides an overview of the data, allowing researchers to understand its distribution, variability, and general patterns.

- Inferential Analysis: Inferential analysis aims to draw conclusions or make inferences about a larger population based on the collected sample data. This type of analysis involves applying statistical techniques, such as hypothesis testing, confidence intervals, and regression analysis, to analyze the data and assess the significance of the findings. Inferential analysis helps researchers make generalizations and draw meaningful conclusions beyond the specific sample under investigation.

- Qualitative Analysis: Qualitative analysis is used to interpret non-numerical data, such as interviews, focus groups, or textual materials. It involves coding, categorizing, and analyzing the data to identify themes, patterns, and relationships. Techniques like content analysis, thematic analysis, or discourse analysis are commonly employed to derive meaningful insights from qualitative data.

- Correlation Analysis: Correlation analysis is used to examine the relationship between two or more variables. It determines the strength and direction of the association between variables. Common correlation techniques include Pearson’s correlation coefficient, Spearman’s rank correlation, or point-biserial correlation, depending on the nature of the variables being analyzed.
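To make the correlation techniques in the last item concrete, here is a minimal sketch of Pearson's coefficient and Spearman's rank correlation written in plain Python. The function names and the data are hypothetical; in practice a statistics package would also report a p-value, which this sketch does not compute.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variation_x = sum((x - mean_x) ** 2 for x in xs)
    variation_y = sum((y - mean_y) ** 2 for y in ys)
    return covariation / math.sqrt(variation_x * variation_y)


def ranks(values):
    """Average 1-based ranks, so tied values share the same rank."""
    ordered = sorted(values)
    return [ordered.index(v) + (ordered.count(v) + 1) / 2 for v in values]


def spearman_rho(xs, ys):
    """Spearman's rank correlation: Pearson's r computed on the ranks."""
    return pearson_r(ranks(xs), ranks(ys))


# Hypothetical data: weekly reading hours and quiz scores for five students.
weekly_reading_hours = [2, 4, 6, 8, 10]
quiz_scores = [50, 58, 71, 69, 90]

r = pearson_r(weekly_reading_hours, quiz_scores)
rho = spearman_rho(weekly_reading_hours, quiz_scores)
print(f"Pearson r = {r:.3f}, Spearman rho = {rho:.3f}")
```

Both coefficients range from -1 to 1; the sign gives the direction of the association and the magnitude gives its strength.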

Basic Statistical Analysis

When conducting dissertation data analysis, researchers often utilize basic statistical analysis techniques to gain insights and draw conclusions from their data. These techniques involve the application of statistical measures to summarize and examine the data. Here are some common types of basic statistical analysis used in dissertation research:

- Descriptive Statistics

- Frequency Analysis

- Cross-tabulation

- Chi-Square Test

- Correlation Analysis
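Two of the techniques listed above, cross-tabulation and the chi-square test, can be sketched together in a few lines. The survey answers below are invented, and the code stops at the test statistic and degrees of freedom; obtaining a p-value requires the chi-square distribution, which a statistics package would normally supply.

```python
from collections import Counter

# Hypothetical survey answers as (study level, preferred format) pairs.
responses = (
    [("undergraduate", "online")] * 30
    + [("undergraduate", "in_person")] * 20
    + [("postgraduate", "online")] * 15
    + [("postgraduate", "in_person")] * 35
)

# Cross-tabulation: count each (row, column) combination.
observed = Counter(responses)
row_totals = Counter(level for level, _ in responses)
col_totals = Counter(fmt for _, fmt in responses)
total = len(responses)

# Chi-square statistic: sum of (observed - expected)^2 / expected,
# where expected = row total * column total / grand total.
chi_square = 0.0
for level in row_totals:
    for fmt in col_totals:
        expected = row_totals[level] * col_totals[fmt] / total
        chi_square += (observed[(level, fmt)] - expected) ** 2 / expected

degrees_of_freedom = (len(row_totals) - 1) * (len(col_totals) - 1)
print(f"chi-square = {chi_square:.3f}, df = {degrees_of_freedom}")
```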

Advanced Statistical Analysis

In dissertation data analysis, researchers may employ advanced statistical analysis techniques to gain deeper insights and address complex research questions. These techniques go beyond basic statistical measures and involve more sophisticated methods. Here are some examples of advanced statistical analysis commonly used in dissertation research:

- Regression Analysis

- Analysis of Variance (ANOVA)

- Factor Analysis

- Cluster Analysis

- Structural Equation Modeling (SEM)

- Time Series Analysis

Examples of Methods of Analysis

Regression Analysis

Regression analysis is a powerful tool for examining relationships between variables and making predictions. It allows researchers to assess the impact of one or more independent variables on a dependent variable. Different types of regression analysis, such as linear regression, logistic regression, or multiple regression, can be used based on the nature of the variables and research objectives.
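The simplest case, linear regression with a single independent variable, can be sketched with ordinary least squares as follows. The function name and the data are hypothetical, and the sketch omits the diagnostics (standard errors, residual checks) that a dissertation would normally report.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: weekly study hours (independent) and exam marks (dependent).
study_hours = [1, 2, 3, 4, 5]
exam_marks = [52, 55, 61, 64, 68]

slope, intercept = fit_line(study_hours, exam_marks)

# Use the fitted line to predict the mark for 6 hours of study.
predicted_mark = intercept + slope * 6
print(f"slope={slope:.2f}, intercept={intercept:.2f}, prediction={predicted_mark:.2f}")
```

Here the slope is the estimated change in the dependent variable for each one-unit increase in the independent variable.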

Event Study

An event study is a statistical technique that aims to assess the impact of a specific event or intervention on a particular variable of interest. This method is commonly employed in finance, economics, or management to analyze the effects of events such as policy changes, corporate announcements, or market shocks.
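One common way to quantify the impact in an event study is to compare actual returns around the event with the returns expected in its absence. The sketch below assumes the constant-mean-return model, in which the expected return is simply the average over a pre-event estimation window; other models, such as the market model, are also widely used. All figures are invented.

```python
import statistics

# Hypothetical daily returns (in percent) for one stock.
estimation_window = [0.1, -0.2, 0.3, 0.0, 0.2, -0.1]  # before the event
event_window = [1.5, 0.8, -0.3]  # days around the event

# Constant-mean-return model: expected return is the estimation-window average.
expected_return = statistics.mean(estimation_window)

# Abnormal return = actual return minus expected return, for each event day.
abnormal_returns = [actual - expected_return for actual in event_window]

# Cumulative abnormal return over the whole event window.
cumulative_abnormal_return = sum(abnormal_returns)
print(f"CAR = {cumulative_abnormal_return:.2f}%")
```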

Vector Autoregression

Vector Autoregression is a statistical modeling technique used to analyze the dynamic relationships and interactions among multiple time series variables. It is commonly employed in fields such as economics, finance, and social sciences to understand the interdependencies between variables over time.

Preparing Data for Analysis

1. Become Acquainted With the Data

It is crucial to become acquainted with the data to gain a comprehensive understanding of its characteristics, limitations, and potential insights. This step involves thoroughly exploring and familiarizing yourself with the dataset before conducting any formal analysis. Review the dataset to understand its structure and content. Identify the variables included, their definitions, and the overall organization of the data. Gain an understanding of the data collection methods, sampling techniques, and any potential biases or limitations associated with the dataset.

2. Review Research Objectives

This step involves assessing the alignment between the research objectives and the data at hand to ensure that the analysis can effectively address the research questions. Evaluate how well the research objectives and questions align with the variables and data collected. Determine if the available data provides the necessary information to answer the research questions adequately. Identify any gaps or limitations in the data that may hinder the achievement of the research objectives.

3. Creating a Data Structure

This step involves organizing the data into a well-defined structure that aligns with the research objectives and analysis techniques. Organize the data in a tabular format where each row represents an individual case or observation, and each column represents a variable. Ensure that each case has complete and accurate data for all relevant variables. Use consistent units of measurement across variables to facilitate meaningful comparisons.
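The tabular structure described above can be sketched as a list of dictionaries, where each dictionary is a row (one case) and each key is a column (one variable). The variable names, values, and the `to_centimetres` helper are hypothetical; the example converts mixed height units to one unit and lists the cases that are still incomplete.

```python
# Each dict is one row (a case); each key is one column (a variable).
# Values are invented; note that heights arrive in mixed units.
raw_cases = [
    {"id": 1, "height": 172, "height_unit": "cm", "weight_kg": 68.0},
    {"id": 2, "height": 1.65, "height_unit": "m", "weight_kg": 59.5},
    {"id": 3, "height": 180, "height_unit": "cm", "weight_kg": None},
]


def to_centimetres(value, unit):
    """Convert a height to centimetres so every row uses the same unit."""
    factors = {"cm": 1, "m": 100}
    return value * factors[unit]


# Rebuild the table with a single, consistently measured height column.
cases = [
    {
        "id": case["id"],
        "height_cm": to_centimetres(case["height"], case["height_unit"]),
        "weight_kg": case["weight_kg"],
    }
    for case in raw_cases
]

# Report cases that still lack a value for any variable.
incomplete_ids = [case["id"] for case in cases if None in case.values()]
print("Cases with missing values:", incomplete_ids)
```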

4. Discover Patterns and Connections

In preparing data for dissertation data analysis, one of the key objectives is to discover patterns and connections within the data. This step involves exploring the dataset to identify relationships, trends, and associations that can provide valuable insights. Visual representations can often reveal patterns that are not immediately apparent in tabular data.

Qualitative Data Analysis

Qualitative data analysis methods are employed to analyze and interpret non-numerical or textual data. These methods are particularly useful in fields such as social sciences, humanities, and qualitative research studies where the focus is on understanding meaning, context, and subjective experiences. Here are some common qualitative data analysis methods:

Thematic Analysis

Thematic analysis involves identifying and analyzing recurring themes, patterns, or concepts within the qualitative data. Researchers immerse themselves in the data, categorize information into meaningful themes, and explore the relationships between them. This method helps in capturing the underlying meanings and interpretations within the data.

Content Analysis

Content analysis involves systematically coding and categorizing qualitative data based on predefined categories or emerging themes. Researchers examine the content of the data, identify relevant codes, and analyze their frequency or distribution. This method allows for a quantitative summary of qualitative data and helps in identifying patterns or trends across different sources.
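Once segments have been coded by the researcher, the frequency and distribution of codes can be tallied as in the sketch below. The codes, sources, and segment structure are hypothetical; the interpretive work of assigning codes is assumed to have been done already and is not automated here.

```python
from collections import Counter

# Hypothetical interview segments, each already tagged with codes by the researcher.
coded_segments = [
    {"source": "interview_1", "codes": ["workload", "support"]},
    {"source": "interview_1", "codes": ["workload"]},
    {"source": "interview_2", "codes": ["support", "recognition"]},
    {"source": "interview_3", "codes": ["workload", "recognition"]},
]

# Frequency: how many times each code was applied overall.
code_frequency = Counter(
    code for segment in coded_segments for code in segment["codes"]
)

# Distribution: in how many distinct sources each code appears.
sources_per_code = {
    code: len({s["source"] for s in coded_segments if code in s["codes"]})
    for code in code_frequency
}

for code, count in code_frequency.most_common():
    print(f"{code}: used {count} times across {sources_per_code[code]} sources")
```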

Grounded Theory

Grounded theory is an inductive approach to qualitative data analysis that aims to generate theories or concepts from the data itself. Researchers iteratively analyze the data, identify concepts, and develop theoretical explanations based on emerging patterns or relationships. This method focuses on building theory from the ground up and is particularly useful when exploring new or understudied phenomena.

Discourse Analysis

Discourse analysis examines how language and communication shape social interactions, power dynamics, and meaning construction. Researchers analyze the structure, content, and context of language in qualitative data to uncover underlying ideologies, social representations, or discursive practices. This method helps in understanding how individuals or groups make sense of the world through language.

Narrative Analysis

Narrative analysis focuses on the study of stories, personal narratives, or accounts shared by individuals. Researchers analyze the structure, content, and themes within the narratives to identify recurring patterns, plot arcs, or narrative devices. This method provides insights into individuals’ lived experiences, identity construction, or sense-making processes.

Applying Data Analysis to Your Dissertation

Applying data analysis to your dissertation is a critical step in deriving meaningful insights and drawing valid conclusions from your research. It involves employing appropriate data analysis techniques to explore, interpret, and present your findings. Here are some key considerations when applying data analysis to your dissertation:

Selecting Analysis Techniques

Choose analysis techniques that align with your research questions, objectives, and the nature of your data. Whether quantitative or qualitative, identify the most suitable statistical tests, modeling approaches, or qualitative analysis methods that can effectively address your research goals. Consider factors such as data type, sample size, measurement scales, and the assumptions associated with the chosen techniques.

Data Preparation

Ensure that your data is properly prepared for analysis. Cleanse and validate your dataset, addressing any missing values, outliers, or data inconsistencies. Code variables, transform data if necessary, and format it appropriately to facilitate accurate and efficient analysis. Pay attention to ethical considerations, data privacy, and confidentiality throughout the data preparation process.

Execution of Analysis

Execute the selected analysis techniques systematically and accurately. Utilize statistical software, programming languages, or qualitative analysis tools to carry out the required computations, calculations, or interpretations. Adhere to established guidelines, protocols, or best practices specific to your chosen analysis techniques to ensure reliability and validity.

Interpretation of Results

Thoroughly interpret the results derived from your analysis. Examine statistical outputs, visual representations, or qualitative findings to understand the implications and significance of the results. Relate the outcomes back to your research questions, objectives, and existing literature. Identify key patterns, relationships, or trends that support or challenge your hypotheses.

Drawing Conclusions

Based on your analysis and interpretation, draw well-supported conclusions that directly address your research objectives. Present the key findings in a clear, concise, and logical manner, emphasizing their relevance and contributions to the research field. Discuss any limitations, potential biases, or alternative explanations that may impact the validity of your conclusions.

Validation and Reliability

Evaluate the validity and reliability of your data analysis by considering the rigor of your methods, the consistency of results, and the triangulation of multiple data sources or perspectives if applicable. Engage in critical self-reflection and seek feedback from peers, mentors, or experts to ensure the robustness of your data analysis and conclusions.

In conclusion, dissertation data analysis is an essential component of the research process, allowing researchers to extract meaningful insights and draw valid conclusions from their data. By employing a range of analysis techniques, researchers can explore relationships, identify patterns, and uncover valuable information to address their research objectives.

Turn Your Data Into Easy-To-Understand And Dynamic Stories

Decoding data is daunting and you might end up in confusion. Here’s where infographics come into the picture. With visuals, you can turn your data into easy-to-understand and dynamic stories that your audience can relate to. Mind the Graph is one such platform that helps scientists to explore a library of visuals and use them to amplify their research work. Sign up now to make your presentation simpler.


About Sowjanya Pedada

Sowjanya is a passionate writer and an avid reader. She holds an MBA in Agribusiness Management and now works as a content writer. She loves to play with words and hopes to make a difference in the world through her writing. Apart from writing, she is interested in reading fiction novels and doing craftwork. She also loves to travel, explore different cuisines, and spend time with her family and friends.


CONSIDERATION ONE

The data analysis process

The data analysis process involves three steps: (STEP ONE) select the correct statistical tests to run on your data; (STEP TWO) prepare and analyse the data you have collected using a relevant statistics package; and (STEP THREE) interpret the findings properly so that you can write up your results (i.e., usually in Chapter Four: Results). The basic idea behind each of these steps is relatively straightforward, but the act of analysing your data (i.e., by selecting statistical tests, preparing your data and analysing it, and interpreting the findings from these tests) can be time consuming and challenging. We have tried to make this process as easy as possible by providing comprehensive, step-by-step guides in the Data Analysis part of Lærd Dissertation, but you should leave at least one week to analyse your data.

STEP ONE Select the correct statistical tests to run on your data

It is common that dissertation students collect good data, but then report the wrong findings because of selecting the incorrect statistical tests to run in the first place. Selecting the correct statistical tests to perform on the data that you have collected will depend on (a) the research questions/hypotheses you have set, together with the research design you have adopted, and (b) the type and nature of your data:

The research questions/hypotheses you have set, together with the research design you have adopted

Your research questions/hypotheses and research design explain what variables you are measuring and how you plan to measure these variables. These highlight whether you want to (a) predict a score or a membership of a group, (b) find out differences between groups or treatments, or (c) explore associations/relationships between variables. These different aims determine the statistical tests that may be appropriate to run on your data. We highlight the word may because the most appropriate test that is identified based on your research questions/hypotheses and research design can change depending on the type and nature of the data you collect; something we discuss next.

The type and nature of the data you collected

Data is not all the same. As you will have identified by now, not all variables are measured in the same way; variables can be dichotomous, ordinal, or continuous. In addition, not all data is normal, a term we explain in the Data Analysis section, nor is the data you have collected when comparing groups necessarily equal for each group. As a result, you might think that running a particular statistical test is correct (e.g., an independent t-test), based on the research questions/hypotheses you have set, but the data you have collected fails certain assumptions that are important to this statistical test (i.e., normality and homogeneity of variance). In that case, you have to run another statistical test (e.g., a Mann-Whitney U test instead of an independent t-test).
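The way failed assumptions change the choice of test can be sketched as a small decision rule for the common case of comparing two groups on a continuous outcome. The function below is a hypothetical, simplified illustration and not the Statistical Test Selector itself; it assumes you have already checked normality and homogeneity of variance by other means.

```python
def suggest_two_group_test(paired, normal, equal_variances=True):
    """Suggest a test for comparing two groups on a continuous outcome.

    A simplified, hypothetical rule of thumb: real test selection also
    depends on the research design and how the variables are measured.
    """
    if paired:
        # Same participants measured twice (or matched pairs).
        return "dependent t-test" if normal else "Wilcoxon signed-rank test"
    if not normal:
        # Independent groups, normality violated.
        return "Mann-Whitney U test"
    # Independent groups, normality met: check homogeneity of variance.
    return "independent t-test" if equal_variances else "Welch's t-test"


# Independent groups whose data fail the normality assumption.
print(suggest_two_group_test(paired=False, normal=False))
```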

To select the correct statistical tests to run on the data in your dissertation, we have created a Statistical Test Selector to help guide you through the various options.

STEP TWO Prepare and analyse your data using a relevant statistics package

The preparation and analysis of your data is actually a much more practical step than many students realise. Most of the time required to get the results that you will present in your write-up (i.e., usually in Chapter Four: Results) comes from knowing (a) how to enter data into a statistics package (e.g., SPSS) so that it can be analysed correctly, and (b) what buttons to press in the statistics package to correctly run the statistical tests you need:

Entering data

Entering data is not just about knowing what buttons to press, but: (a) how to code your data correctly to recognise the types of variables that you have, as well as issues such as reverse coding; (b) how to filter your dataset to take into account missing data and outliers; (c) how to split files (i.e., in SPSS) when analysing the data for separate subgroups (e.g., males and females) using the same statistical tests; (d) how to weight and unweight data you have collected; and (e) other things you need to consider when entering data. What you have to do when it comes to entering data (i.e., in terms of coding, filtering, splitting files, and weighting/unweighting data) will depend on the statistical tests you plan to run. Therefore, entering data starts with using the Statistical Test Selector to help guide you through the various options. In the Data Analysis section, we help you to understand what you need to know about entering data in the context of your dissertation.
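To make reverse coding, filtering and splitting more concrete, here is a minimal Python sketch of the same ideas outside SPSS. Everything in it is assumed for illustration: the survey rows, the column names (`q1`, `q2_negative`), the 5-point scale, and the choice to drop any row with a missing answer (listwise deletion), which is only one of several ways to handle missing data.

```python
# Hypothetical survey rows; None marks a missing answer.
responses = [
    {"id": 1, "gender": "female", "q1": 5, "q2_negative": 1},
    {"id": 2, "gender": "male", "q1": 4, "q2_negative": 2},
    {"id": 3, "gender": "female", "q1": None, "q2_negative": 4},
    {"id": 4, "gender": "male", "q1": 2, "q2_negative": 5},
]

SCALE_MIN, SCALE_MAX = 1, 5  # assumed 5-point Likert scale


def reverse_code(score):
    """Flip a negatively worded item so that high scores mean the same as on other items."""
    return SCALE_MIN + SCALE_MAX - score


def prepare(rows):
    """Drop rows with any missing value (listwise deletion) and reverse code q2_negative."""
    cleaned = []
    for row in rows:
        if any(value is None for value in row.values()):
            continue
        new_row = dict(row)  # copy so the raw data stays untouched
        new_row["q2"] = reverse_code(new_row.pop("q2_negative"))
        cleaned.append(new_row)
    return cleaned


def split_by(rows, key):
    """Group rows by a variable, similar in spirit to splitting a file by subgroup."""
    groups = {}
    for row in rows:
        groups.setdefault(row[key], []).append(row)
    return groups


cleaned = prepare(responses)
by_gender = split_by(cleaned, "gender")
print({group: [row["id"] for row in rows] for group, rows in by_gender.items()})
```

Keeping the raw rows unchanged and working on a cleaned copy makes it easier to report exactly which cases were excluded and why.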

Running statistical tests

Statistics packages do the hard work of statistically analysing your data, but they rely on you making a number of choices. This is not simply about selecting the correct statistical test, but knowing, when you have selected a given test to run on your data, what buttons to press to: (a) test for the assumptions underlying the statistical test; (b) test whether corrections can be made when assumptions are violated; (c) take into account outliers and missing data; (d) choose between the different numerical and graphical ways to approach your analysis; and (e) other standard and more advanced tips. In the Data Analysis section, we explain what these considerations are (i.e., assumptions, corrections, outliers and missing data, numerical and graphical analysis) so that you can apply them to your own dissertation. We also provide comprehensive, step-by-step instructions with screenshots that show you how to enter data and run a wide range of statistical tests using the statistics package, SPSS. We do this on the basis that you probably have little or no knowledge of SPSS.
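As one example of the checks mentioned above, the sketch below flags potential outliers numerically using the common 1.5 × IQR rule. It is an assumption-laden illustration, not the procedure from the Data Analysis section: the function name, the sample scores and the choice of the "inclusive" quartile method are all hypothetical, and different packages compute quartiles slightly differently.

```python
import statistics


def find_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    # quantiles() with n=4 returns the three quartile cut points (Q1, median, Q3).
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


scores = [12, 14, 15, 15, 16, 17, 18, 19, 45]  # hypothetical test scores
print(find_outliers(scores))
```

A flagged value is only a candidate for closer inspection; whether you keep, correct or exclude it is a decision you need to justify in your write-up.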

STEP THREE Interpret the findings properly

SPSS produces many tables of output for the typical tests you will run. In addition, SPSS has many new methods of presenting data using its Model viewer. You need to know which of these tables is important for your analysis and what the different figures/numbers mean. Interpreting these findings properly and communicating your results is one of the most important aspects of your dissertation. In the Data Analysis section, we show you how to understand these tables of output, what part of this output you need to look at, and how to write up the results in an appropriate format (i.e., so that you can answer your research hypotheses).

Mastering Dissertation Data Analysis: A Comprehensive Guide

By Laura Brown on 29th December 2023

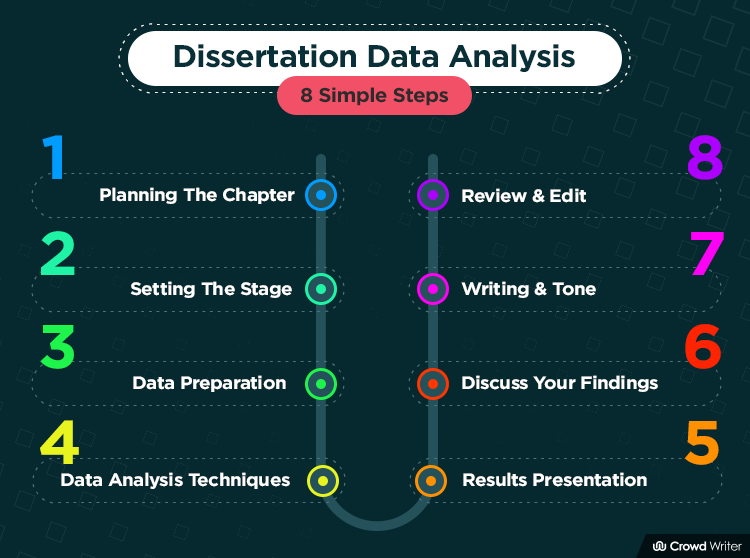

To craft an effective dissertation data analysis chapter, you need to follow some simple steps:

- Start by planning the structure and objectives of the chapter.

- Clearly set the stage by providing a concise overview of your research design and methodology.

- Proceed to thorough data preparation, ensuring accuracy and organisation.

- Justify your methods and present the results using visual aids for clarity.

- Discuss the findings within the context of your research questions.

- Finally, review and edit your chapter to ensure coherence.

This approach will ensure a well-crafted and impactful analysis section.

Before delving into how you can write an engaging data analysis chapter for your dissertation, we first need to understand what it is and why it is required.

What Is Data Analysis In A Dissertation?

The data analysis chapter is a crucial section of a research dissertation that involves the examination, interpretation, and synthesis of collected data. In this chapter, researchers employ statistical techniques, qualitative methods, or a combination of both to make sense of the data gathered during the research process.

Why Is The Data Analysis Chapter So Important?

The primary objectives of the data analysis chapter are to identify patterns, trends, relationships, and insights within the data set. Researchers use various tools and software to conduct a thorough analysis, ensuring that the results are both accurate and relevant to the research questions or hypotheses. Ultimately, the findings derived from this chapter contribute to the overall conclusions of the dissertation, providing a basis for drawing meaningful and well-supported insights.

Steps Required To Craft Data Analysis Chapter To Perfection

Now that we have an idea of what a dissertation analysis chapter is and why it belongs in the dissertation, let’s move on to how you can create one that has a significant impact. Our guide follows the bulleted steps outlined at the beginning. So, it’s time to begin.

Step 1: Planning Your Data Analysis Chapter

Planning your data analysis chapter is a critical precursor to its successful execution.

- Begin by outlining the chapter structure to provide a roadmap for your analysis.

- Start with an introduction that succinctly introduces the purpose and significance of the data analysis in the context of your research.

- Following this, delineate the chapter into sections such as Data Preparation, where you detail the steps taken to organise and clean your data.

- Plan to clearly define the Data Analysis Techniques employed, justifying their relevance to your research objectives.

- As you progress, plan for the Results Presentation, incorporating visual aids for clarity. Lastly, earmark a section for the Discussion of Findings, where you will interpret results within the broader context of your research questions.

This structured approach ensures a comprehensive and cohesive data analysis chapter, setting the stage for a compelling narrative that contributes significantly to your dissertation. You can always seek our dissertation data analysis help to plan your chapter.

Step 2: Setting The Stage – Introduction to Data Analysis

Your primary objective is to establish a solid foundation for the analytical journey. You need to skillfully link your data analysis to your research questions, elucidating the direct relevance and purpose of the upcoming analysis.

Simultaneously, define key concepts to provide clarity and ensure a shared understanding of the terms integral to your study. Following this, offer a concise overview of your data set characteristics, outlining its source, nature, and any noteworthy features.

This meticulous groundwork lays the foundation for a coherent and purposeful chapter, guiding readers seamlessly into the subsequent stages of your dissertation.

Step 3: Data Preparation

Data preparation is another pivotal phase in the data analysis process, ensuring the integrity and reliability of your findings. Start with an overview of the data cleaning and preprocessing procedures, highlighting the steps taken to refine and organise your dataset. Then, discuss any challenges encountered during the process and the strategies employed to address them.

Moving forward, delve into the specifics of data transformation procedures, explaining any alterations made to the raw data for analysis. Clearly describe the methods employed for normalisation, scaling, or any other transformations deemed necessary. Doing so will not only enhance the quality of your analysis but also foster transparency in your research methodology, reinforcing the robustness of your data-driven insights.
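If you report a transformation, it helps to be exact about which one you applied. The Python sketch below shows two common options, min-max scaling and z-score standardisation, on made-up values. The function names are hypothetical, and using the sample standard deviation (n − 1 in the denominator) for the z-scores is an assumption you should state if you make the same choice.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly so the smallest becomes 0 and the largest becomes 1."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        raise ValueError("cannot scale values that are all identical")
    return [(v - lowest) / (highest - lowest) for v in values]


def z_scores(values):
    """Standardise values to mean 0 using the sample standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 in the denominator)
    return [(v - mean) / sd for v in values]


raw = [2, 4, 6]  # hypothetical raw scores
print(min_max_scale(raw))
print(z_scores(raw))
```

Min-max scaling keeps the shape of the distribution but is sensitive to extreme values, whereas z-scores express each value as a distance from the mean in standard deviation units.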

Step 4: Data Analysis Techniques

The data analysis section of a dissertation is akin to choosing the right tools for an artistic masterpiece. Carefully weigh the quantitative and qualitative approaches, ensuring a tailored fit for the nature of your data.

Quantitative Analysis

- Descriptive Statistics: Paint a vivid picture of your data through measures like mean, median, and mode. It’s like capturing the essence of your data’s personality.

- Inferential Statistics: Take a leap into the unknown, making educated guesses and inferences about your larger population based on a sample. It’s statistical magic in action.
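As a small illustration of the descriptive measures mentioned above, the following Python sketch computes the mean, median, mode and sample standard deviation with the standard library. The variable name and the data are invented for the example, and the helper `describe` is hypothetical.

```python
import statistics


def describe(values):
    """Return basic descriptive statistics for a list of numbers."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "sd": statistics.stdev(values),  # sample standard deviation
    }


hours_studied = [3, 4, 4, 5, 9]  # hypothetical data
for name, value in describe(hours_studied).items():
    print(f"{name}: {value}")
```

Notice that the single high value pulls the mean above the median here, which is why it is usually worth reporting more than one measure of central tendency.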

Qualitative Analysis

- Thematic Analysis: Imagine your data as a novel, and thematic analysis as the tool to uncover its hidden chapters. Dissect the narrative, revealing recurring themes and patterns.

- Content Analysis: Scrutinise your data’s content like detectives, identifying key elements and meanings. It’s a deep dive into the substance of your qualitative data.

Providing Rationale for Chosen Methods

You should also articulate the why behind the chosen methods. It’s not just about numbers or themes; it’s about the story you want your data to tell. Through transparent rationale, you should ensure that your chosen techniques align seamlessly with your research goals, adding depth and credibility to the analysis.

Step 5: Presentation Of Your Results

You can simply break this process into two parts.

a. Creating Clear and Concise Visualisations

Effectively communicate your findings through meticulously crafted visualisations. Use tables that offer a structured presentation, summarising key data points for quick comprehension. Graphs, on the other hand, visually depict trends and patterns, enhancing overall clarity. Thoughtfully design these visual aids to align with the nature of your data, ensuring they serve as impactful tools for conveying information.
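A frequency table is often the simplest structured summary to start with. The Python sketch below builds one as plain text from a list of categorical answers. The answers, the function name and the column layout are all assumptions made for illustration; your institution's formatting guidelines take precedence.

```python
from collections import Counter


def frequency_table(categories):
    """Build a plain-text table of counts and percentages, most frequent first."""
    counts = Counter(categories)
    total = sum(counts.values())
    lines = [f"{'Category':<12}{'n':>4}{'%':>8}"]
    for category, n in counts.most_common():
        lines.append(f"{category:<12}{n:>4}{100 * n / total:>8.1f}")
    return "\n".join(lines)


answers = ["Agree", "Agree", "Neutral", "Disagree", "Agree", "Neutral"]  # hypothetical
print(frequency_table(answers))
```

Reporting both the count and the percentage lets readers judge the finding against the sample size, which a percentage alone would hide.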

b. Interpreting and Explaining Results

Go beyond mere presentation by providing insightful interpretation. Show the significance of your findings within the broader research context. Moreover, articulate the implications of observed patterns or relationships. By weaving a narrative around your results, you guide readers through the relevance and impact of your data analysis, enriching the overall understanding of your dissertation’s key contributions.

Step 6: Discussion of Findings

Discussing your findings in the dissertation discussion chapter is like putting together puzzle pieces to understand what your data is saying. Explain what it all means, connecting back to why you started in the first place.

Be honest about any limitations or possible biases in your study; it’s like showing your cards to make your research more trustworthy. Comparing your results to what other smart people have found before you adds to the conversation, showing where your work fits in.

Looking ahead, suggest ideas for what future researchers could explore, keeping the conversation going. So, it’s not just about what you found, but also about what comes next and how it all fits into the big picture of what we know.

Step 7: Writing Style and Tone

To write this chapter well, follow the points below and adjust your tone accordingly:

- Use clear and concise language to ensure your audience easily understands complex concepts.

- Avoid unnecessary jargon in your data analysis, and if specialised terms are necessary, provide brief explanations.

- Keep your writing style formal and objective, maintaining an academic tone throughout.

- Avoid overly casual language or slang, as the data analysis chapter is a serious academic document.

- Clearly define terms and concepts, providing specific details about your data preparation and analysis procedures.

- Use precise language to convey your ideas, minimising ambiguity.

- Follow a consistent formatting style for headings, subheadings, and citations to enhance readability.

- Ensure that tables, graphs, and visual aids are labelled and formatted uniformly for a polished presentation.

- Connect your analysis to the broader context of your research by explaining the relevance of your chosen methods and the importance of your findings.

- Offer a balance between detail and context, helping readers understand the significance of your data analysis within the larger study.

- Present enough detail to support your findings but avoid overwhelming readers with excessive information.

- Use a balance of text and visual aids to convey information efficiently.