
Data Collection – Methods Types and Examples

Data Collection

Definition:

Data collection is the process of gathering information from various sources so that it can be analyzed and used to make informed decisions. This can involve various methods, such as surveys, interviews, experiments, and observation.

In order for data collection to be effective, it is important to have a clear understanding of what data is needed and what the purpose of the data collection is. This can involve identifying the population or sample being studied, determining the variables to be measured, and selecting appropriate methods for collecting and recording data.

Types of Data Collection

Types of Data Collection are as follows:

Primary Data Collection

Primary data collection is the process of gathering original and firsthand information directly from the source or target population. This type of data collection involves collecting data that has not been previously gathered, recorded, or published. Primary data can be collected through various methods such as surveys, interviews, observations, experiments, and focus groups. The data collected is usually specific to the research question or objective and can provide valuable insights that cannot be obtained from secondary data sources. Primary data collection is often used in market research, social research, and scientific research.

Secondary Data Collection

Secondary data collection is the process of gathering information that has already been collected and analyzed by someone else, rather than conducting new research to collect primary data. Secondary data can be collected from various sources, such as published reports, books, journals, newspapers, websites, government publications, and other documents.

Qualitative Data Collection

Qualitative data collection is used to gather non-numerical data such as opinions, experiences, perceptions, and feelings, through techniques such as interviews, focus groups, observations, and document analysis. It seeks to understand the deeper meaning and context of a phenomenon or situation and is often used in social sciences, psychology, and humanities. Qualitative data collection methods allow for a more in-depth and holistic exploration of research questions and can provide rich and nuanced insights into human behavior and experiences.

Quantitative Data Collection

Quantitative data collection is used to gather numerical data that can be analyzed using statistical methods. This data is typically collected through surveys, experiments, and other structured data collection methods. Quantitative data collection seeks to quantify and measure variables, such as behaviors, attitudes, and opinions, in a systematic and objective way. This data is often used to test hypotheses, identify patterns, and establish correlations between variables. Quantitative data collection methods allow for precise measurement and generalization of findings to a larger population. It is commonly used in fields such as economics, psychology, and natural sciences.

Data Collection Methods

Data Collection Methods are as follows:

Surveys

Surveys involve asking questions to a sample of individuals or organizations to collect data. Surveys can be conducted in person, over the phone, or online.
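As a rough illustration of how a survey sample might be drawn, the sketch below picks a simple random sample from a list of potential respondents. The customer IDs, the sample size, and the function name `draw_simple_random_sample` are assumptions made for this example, and simple random sampling is only one of several possible sampling designs.

```python
import random


def draw_simple_random_sample(sampling_frame, sample_size, seed=None):
    """Return sample_size distinct members chosen uniformly at random."""
    if sample_size > len(sampling_frame):
        raise ValueError("sample_size cannot exceed the size of the sampling frame")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(sampling_frame, sample_size)


# Hypothetical sampling frame: 500 customer IDs.
sampling_frame = [f"customer-{i:03d}" for i in range(1, 501)]
survey_sample = draw_simple_random_sample(sampling_frame, sample_size=50, seed=42)
print(len(survey_sample), "respondents selected")
```

Recording the seed alongside the results is one way to let someone else reproduce exactly which respondents were invited.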

Interviews

Interviews involve a one-on-one conversation between the interviewer and the respondent. Interviews can be structured or unstructured and can be conducted in person or over the phone.

Focus Groups

Focus groups are group discussions that are moderated by a facilitator. Focus groups are used to collect qualitative data on a specific topic.

Observation

Observation involves watching and recording the behavior of people, objects, or events in their natural setting. Observation can be done overtly or covertly, depending on the research question.

Experiments

Experiments involve manipulating one or more variables and observing the effect on another variable. Experiments are commonly used in scientific research.
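One common way to set up such an experiment is to assign participants to a treatment group and a control group at random, so that the manipulated variable is the main systematic difference between them. The sketch below assumes this design, which the text above does not prescribe; the participant labels and the function name `assign_to_groups` are hypothetical.

```python
import random


def assign_to_groups(participants, seed=None):
    """Randomly split participants into a treatment group and a control group."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {"treatment": shuffled[:midpoint], "control": shuffled[midpoint:]}


# Hypothetical participant labels P01..P20.
participants = [f"P{i:02d}" for i in range(1, 21)]
groups = assign_to_groups(participants, seed=7)
print(len(groups["treatment"]), "in treatment,", len(groups["control"]), "in control")
```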

Case Studies

Case studies involve in-depth analysis of a single individual, organization, or event. Case studies are used to gain detailed information about a specific phenomenon.

Secondary Data Analysis

Secondary data analysis involves using existing data that was collected for another purpose. Secondary data can come from various sources, such as government agencies, academic institutions, or private companies.

How to Collect Data

The following are some steps to consider when collecting data:

- Define the objective: Before you start collecting data, you need to define the objective of the study. This will help you determine what data you need to collect and how to collect it.

- Identify the data sources: Identify the sources of data that will help you achieve your objective. These sources can be primary sources, such as surveys, interviews, and observations, or secondary sources, such as books, articles, and databases.

- Determine the data collection method: Once you have identified the data sources, you need to determine the data collection method. This could be through online surveys, phone interviews, or face-to-face meetings.

- Develop a data collection plan: Develop a plan that outlines the steps you will take to collect the data. This plan should include the timeline, the tools and equipment needed, and the personnel involved.

- Test the data collection process: Before you start collecting data, test the data collection process to ensure that it is effective and efficient.

- Collect the data: Collect the data according to the data collection plan you developed earlier. Make sure you record the data accurately and consistently.

- Analyze the data: Once you have collected the data, analyze it to draw conclusions and make recommendations.

- Report the findings: Report the findings of your data analysis to the relevant stakeholders. This could be in the form of a report, a presentation, or a publication.

- Monitor and evaluate the data collection process: After the data collection process is complete, monitor and evaluate the process to identify areas for improvement in future data collection efforts.

- Ensure data quality: Ensure that the collected data is of high quality and free from errors. This can be achieved by validating the data for accuracy, completeness, and consistency.

- Maintain data security: Ensure that the collected data is secure and protected from unauthorized access or disclosure. This can be achieved by implementing data security protocols and using secure storage and transmission methods.

- Follow ethical considerations: Follow ethical considerations when collecting data, such as obtaining informed consent from participants, protecting their privacy and confidentiality, and ensuring that the research does not cause harm to participants.

- Use appropriate data analysis methods: Use appropriate data analysis methods based on the type of data collected and the research objectives. This could include statistical analysis, qualitative analysis, or a combination of both.

- Record and store data properly: Record and store the collected data properly, in a structured and organized format. This will make it easier to retrieve and use the data in future research or analysis.

- Collaborate with other stakeholders: Collaborate with other stakeholders, such as colleagues, experts, or community members, to ensure that the data collected is relevant and useful for the intended purpose.
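The data quality step in the list above (validating data for accuracy, completeness, and consistency) can be sketched in a few lines. This is a minimal illustration under stated assumptions: the field names, the plausible age range of 0 to 120, and the rule that a respondent ID appears only once are all hypothetical choices, not requirements from the text.

```python
REQUIRED_FIELDS = ("respondent_id", "age", "country")  # hypothetical schema


def validate_record(record):
    """Return a list of human-readable problems found in one record."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Accuracy (plausibility): age must be a whole number in a sensible range.
    age = record.get("age")
    if age not in (None, "") and not (isinstance(age, int) and 0 <= age <= 120):
        problems.append(f"implausible age: {age!r}")
    return problems


def validate_dataset(records):
    """Map the position of each problematic record to its list of problems."""
    report = {}
    seen_ids = set()
    for index, record in enumerate(records):
        problems = validate_record(record)
        # Consistency: a respondent ID should appear only once in the dataset.
        respondent_id = record.get("respondent_id")
        if respondent_id in seen_ids:
            problems.append(f"duplicate respondent_id: {respondent_id}")
        elif respondent_id not in (None, ""):
            seen_ids.add(respondent_id)
        if problems:
            report[index] = problems
    return report


records = [
    {"respondent_id": "r1", "age": 34, "country": "PK"},
    {"respondent_id": "r2", "age": 250, "country": "US"},
    {"respondent_id": "r1", "age": 41, "country": ""},
]
report = validate_dataset(records)
for index, problems in report.items():
    print(index, problems)
```

Running checks like these while data is still being collected, rather than only at the end, makes it easier to go back to the source and correct a problem.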

Applications of Data Collection

Data collection methods are widely used in different fields, including social sciences, healthcare, business, education, and more. Here are some examples of how data collection methods are used in different fields:

- Social sciences: Social scientists often use surveys, questionnaires, and interviews to collect data from individuals or groups. They may also use observation to collect data on social behaviors and interactions. This data is often used to study topics such as human behavior, attitudes, and beliefs.

- Healthcare: Data collection methods are used in healthcare to monitor patient health and track treatment outcomes. Electronic health records and medical charts are commonly used to collect data on patients’ medical history, diagnoses, and treatments. Researchers may also use clinical trials and surveys to collect data on the effectiveness of different treatments.

- Business: Businesses use data collection methods to gather information on consumer behavior, market trends, and competitor activity. They may collect data through customer surveys, sales reports, and market research studies. This data is used to inform business decisions, develop marketing strategies, and improve products and services.

- Education: In education, data collection methods are used to assess student performance and measure the effectiveness of teaching methods. Standardized tests, quizzes, and exams are commonly used to collect data on student learning outcomes. Teachers may also use classroom observation and student feedback to gather data on teaching effectiveness.

- Agriculture: Farmers use data collection methods to monitor crop growth and health. Sensors and remote sensing technology can be used to collect data on soil moisture, temperature, and nutrient levels. This data is used to optimize crop yields and minimize waste.

- Environmental sciences: Environmental scientists use data collection methods to monitor air and water quality, track climate patterns, and measure the impact of human activity on the environment. They may use sensors, satellite imagery, and laboratory analysis to collect data on environmental factors.

- Transportation: Transportation companies use data collection methods to track vehicle performance, optimize routes, and improve safety. GPS systems, on-board sensors, and other tracking technologies are used to collect data on vehicle speed, fuel consumption, and driver behavior.

Examples of Data Collection

Examples of Data Collection are as follows:

- Traffic Monitoring: Cities collect real-time data on traffic patterns and congestion through sensors on roads and cameras at intersections. This information can be used to optimize traffic flow and improve safety.

- Social Media Monitoring: Companies can collect real-time data on social media platforms such as Twitter and Facebook to monitor their brand reputation, track customer sentiment, and respond to customer inquiries and complaints in real-time.

- Weather Monitoring: Weather agencies collect real-time data on temperature, humidity, air pressure, and precipitation through weather stations and satellites. This information is used to provide accurate weather forecasts and warnings.

- Stock Market Monitoring: Financial institutions collect real-time data on stock prices, trading volumes, and other market indicators to make informed investment decisions and respond to market fluctuations in real-time.

- Health Monitoring: Medical devices such as wearable fitness trackers and smartwatches can collect real-time data on a person’s heart rate, blood pressure, and other vital signs. This information can be used to monitor health conditions and detect early warning signs of health issues.

Purpose of Data Collection

The purpose of data collection can vary depending on the context and goals of the study, but generally, it serves to:

- Provide information: Data collection provides information about a particular phenomenon or behavior that can be used to better understand it.

- Measure progress: Data collection can be used to measure the effectiveness of interventions or programs designed to address a particular issue or problem.

- Support decision-making: Data collection provides decision-makers with evidence-based information that can be used to inform policies, strategies, and actions.

- Identify trends: Data collection can help identify trends and patterns over time that may indicate changes in behaviors or outcomes.

- Monitor and evaluate: Data collection can be used to monitor and evaluate the implementation and impact of policies, programs, and initiatives.

When to use Data Collection

Data collection is used when there is a need to gather information or data on a specific topic or phenomenon. It is typically used in research, evaluation, and monitoring and is important for making informed decisions and improving outcomes.

Data collection is particularly useful in the following scenarios:

- Research: When conducting research, data collection is used to gather information on variables of interest to answer research questions and test hypotheses.

- Evaluation: Data collection is used in program evaluation to assess the effectiveness of programs or interventions, and to identify areas for improvement.

- Monitoring: Data collection is used in monitoring to track progress towards achieving goals or targets, and to identify any areas that require attention.

- Decision-making: Data collection is used to provide decision-makers with information that can be used to inform policies, strategies, and actions.

- Quality improvement: Data collection is used in quality improvement efforts to identify areas where improvements can be made and to measure progress towards achieving goals.

Characteristics of Data Collection

Data collection can be characterized by several important characteristics that help to ensure the quality and accuracy of the data gathered. These characteristics include:

- Validity: Validity refers to the accuracy and relevance of the data collected in relation to the research question or objective.

- Reliability: Reliability refers to the consistency and stability of the data collection process, ensuring that the results obtained are consistent over time and across different contexts.

- Objectivity: Objectivity refers to the impartiality of the data collection process, ensuring that the data collected is not influenced by the biases or personal opinions of the data collector.

- Precision: Precision refers to the level of detail and exactness of the data collected, ensuring that the data is specific enough to answer the research question or objective.

- Timeliness: Timeliness refers to the efficiency and speed with which the data is collected, ensuring that the data is collected in a timely manner to meet the needs of the research or evaluation.

- Ethical considerations: Ethical considerations refer to the ethical principles that must be followed when collecting data, such as ensuring confidentiality and obtaining informed consent from participants.
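Reliability, as described in the list above, is about getting consistent results over time. One way this is sometimes checked is a test-retest comparison: the same instrument is given to the same respondents twice and the two sets of scores are correlated. The sketch below assumes that approach, and the scores are invented for illustration.

```python
from math import sqrt


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient between two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical scores from the same five respondents, measured two weeks apart.
first_round = [12, 15, 9, 20, 17]
second_round = [13, 14, 10, 19, 18]
reliability = pearson_correlation(first_round, second_round)
print(f"test-retest correlation: {reliability:.2f}")
```

A value close to 1 suggests the instrument ranks respondents similarly on both occasions; what counts as acceptable depends on the field and the instrument.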

Advantages of Data Collection

There are several advantages of data collection that make it an important process in research, evaluation, and monitoring. These advantages include:

- Better decision-making: Data collection provides decision-makers with evidence-based information that can be used to inform policies, strategies, and actions, leading to better decision-making.

- Improved understanding: Data collection helps to improve our understanding of a particular phenomenon or behavior by providing empirical evidence that can be analyzed and interpreted.

- Evaluation of interventions: Data collection is essential in evaluating the effectiveness of interventions or programs designed to address a particular issue or problem.

- Identifying trends and patterns: Data collection can help identify trends and patterns over time that may indicate changes in behaviors or outcomes.

- Increased accountability: Data collection increases accountability by providing evidence that can be used to monitor and evaluate the implementation and impact of policies, programs, and initiatives.

- Validation of theories: Data collection can be used to test hypotheses and validate theories, leading to a better understanding of the phenomenon being studied.

- Improved quality: Data collection is used in quality improvement efforts to identify areas where improvements can be made and to measure progress towards achieving goals.

Limitations of Data Collection

While data collection has several advantages, it also has some limitations that must be considered. These limitations include:

- Bias: Data collection can be influenced by the biases and personal opinions of the data collector, which can lead to inaccurate or misleading results.

- Sampling bias: The sample from which data is collected may not be representative of the entire population, leading to inaccurate results.

- Cost: Data collection can be expensive and time-consuming, particularly for large-scale studies.

- Limited scope: Data collection is limited to the variables being measured, which may not capture the entire picture or context of the phenomenon being studied.

- Ethical considerations: Data collection must follow ethical principles to protect the rights and confidentiality of the participants, which can limit the type of data that can be collected.

- Data quality issues: Data collection may result in data quality issues such as missing or incomplete data, measurement errors, and inconsistencies.

- Limited generalizability: Findings based on the collected data may not generalize to other contexts or populations.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Data collection in research: Your complete guide

Last updated

31 January 2023

Reviewed by

Cathy Heath

In the late 16th century, Francis Bacon coined the phrase "knowledge is power," which implies that knowledge is a powerful force, like physical strength. In the 21st century, knowledge in the form of data is unquestionably powerful.

But data isn't something you just have - you need to collect it. This means utilizing a data collection process and turning the collected data into knowledge that you can leverage into a successful strategy for your business or organization.

Believe it or not, there's more to data collection than just conducting a Google search. In this complete guide, we shine a spotlight on data collection, outlining what it is, types of data collection methods, common challenges in data collection, data collection techniques, and the steps involved in data collection.

- What is data collection?

There are two specific data collection techniques: primary and secondary data collection. Primary data collection is the process of gathering data directly from sources. It's often considered the most reliable data collection method, as researchers can collect information directly from respondents.

Secondary data collection uses data that has already been collected by someone else and is readily available. This data is usually less expensive and quicker to obtain than primary data.

- What are the different methods of data collection?

There are several data collection methods, which can be either manual or automated. Manual data collection involves collecting data by hand, typically with pen and paper, while automated data collection involves using software to collect data from online sources, such as social media, website data, and transaction data.

Here are the five most popular methods of data collection:

Surveys

Surveys are a very popular method of data collection that organizations can use to gather information from many people. Researchers can conduct multi-mode surveys that reach respondents in different ways, including in person, by mail, over the phone, or online.

As a method of data collection, surveys have several advantages. For instance, they are relatively quick and easy to administer, you can be flexible in what you ask, and they can be tailored to collect data on various topics or from certain demographics.

However, surveys also have several disadvantages. For instance, large surveys can be expensive to administer, and the results may not represent the population as a whole. Additionally, survey data can be challenging to interpret. It may also be subject to bias if the questions are not well-designed or if the sample of people surveyed is not representative of the population of interest.

Interviews

Interviews are a common method of collecting data in social science research. You can conduct interviews in person, over the phone, or even via email or online chat.

Interviews are a great way to collect qualitative and quantitative data. Qualitative interviews are likely your best option if you need to collect detailed information about your subjects' experiences or opinions. If you need to collect more generalized data about your subjects' demographics or attitudes, then quantitative interviews may be a better option.

Interviews are very flexible, allowing you to ask follow-up questions and explore topics in more depth. The downside is that interviews can be time-consuming and expensive to conduct and analyze because of the amount of information they produce. They are also prone to bias, as both the interviewer and the respondent may have certain expectations or preconceptions that may influence the data.

Direct observation

Observation is a direct way of collecting data. It can be structured (with a specific protocol to follow) or unstructured (simply observing without a particular plan).

Organizations and businesses use observation as a data collection method to gather information about their target market, customers, or competition. Businesses can learn about consumer behavior, preferences, and trends by observing people using their products or services.

There are two types of observation: participatory and non-participatory. In participatory observation, the researcher is actively involved in the observed activities. This type of observation is used in ethnographic research, where the researcher wants to understand a group's culture and social norms. Non-participatory observation is when researchers observe from a distance and do not interact with the people or environment they are studying.

There are several advantages to using observation as a data collection method. It can provide insights that may not be apparent through other methods, such as surveys or interviews. Researchers can also observe behavior in a natural setting, which can provide a more accurate picture of what people do and how and why they behave in a certain context.

There are some disadvantages to using observation as a method of data collection. It can be time-consuming, intrusive, and expensive to observe people for extended periods. Observations can also be tainted if the researcher is not careful to avoid personal biases or preconceptions.

Automated data collection

Business applications and websites are increasingly collecting data electronically to improve the user experience or for marketing purposes.

There are a few different ways that organizations can collect data automatically. One way is through cookies, which are small pieces of data stored on a user's computer. They track a user's browsing history and activity on a site, measuring levels of engagement with a business’s products or services, for example.

Another way organizations can collect data automatically is through web beacons. Web beacons are small images embedded on a web page to track a user's activity.

Finally, organizations can also collect data through mobile apps, which can track user location, device information, and app usage. This data can be used to improve the user experience and for marketing purposes.

Automated data collection is a valuable tool for businesses, helping improve the user experience or target marketing efforts. Businesses should aim to be transparent about how they collect and use this data.
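To make the cookie mechanism described above more concrete, here is a minimal sketch of how a site might read a visitor's cookies and keep a page-view counter using Python's standard `http.cookies` module. The cookie names `visitor_id` and `page_views`, the 30-day lifetime, and the helper functions are assumptions for this example, not a description of how any particular site works.

```python
from http.cookies import SimpleCookie


def read_cookies(cookie_header):
    """Parse a raw Cookie request header into a plain dict of names to values."""
    jar = SimpleCookie()
    jar.load(cookie_header)
    return {name: morsel.value for name, morsel in jar.items()}


def record_page_view(cookies):
    """Increment the page-view counter and build the Set-Cookie header value."""
    views = int(cookies.get("page_views", "0")) + 1
    jar = SimpleCookie()
    jar["page_views"] = str(views)
    jar["page_views"]["path"] = "/"
    jar["page_views"]["max-age"] = 60 * 60 * 24 * 30  # assumed 30-day lifetime
    return views, jar["page_views"].OutputString()


# Hypothetical Cookie header sent by a returning visitor's browser.
cookies = read_cookies("visitor_id=abc123; page_views=4")
views, set_cookie_value = record_page_view(cookies)
print(views)
print(set_cookie_value)
```

In line with the transparency point above, a real deployment would normally tell users that such cookies are set and, where regulations require it, ask for consent first.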

Sourcing data through information service providers

Organizations need to be able to collect data from a variety of sources, including social media, weblogs, and sensors. The process to do this and then use the data for action needs to be efficient, targeted, and meaningful.

In the era of big data, organizations are increasingly turning to information service providers (ISPs) and other external data sources to help them collect data to make crucial decisions.

Information service providers help organizations collect data by offering personalized services that suit the specific needs of the organizations. These services can include data collection, analysis, management, and reporting. By partnering with an ISP, organizations can gain access to the newest technology and tools to help them to gather and manage data more effectively.

There are also several tools and techniques that organizations can use to collect data from external sources, such as web scraping, which collects data from websites, and data mining, which involves using algorithms to extract data from large data sets.
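As a small illustration of web scraping, the sketch below extracts table rows from a page's HTML using only Python's standard `html.parser` module. The HTML snippet, the `PriceTableParser` class, and the product data are made up for this example; a real scraper would also need to download the page and respect the site's terms of use.

```python
from html.parser import HTMLParser


class PriceTableParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._current_row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._current_row = []
        elif tag == "td" and self._current_row is not None:
            self._in_cell = True
            self._current_row.append("")

    def handle_data(self, data):
        # Only text inside a <td> is kept; header cells and whitespace are skipped.
        if self._in_cell:
            self._current_row[-1] += data.strip()

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr":
            if self._current_row:  # ignore rows without <td> cells (e.g. headers)
                self.rows.append(self._current_row)
            self._current_row = None


# Hypothetical page fragment standing in for a downloaded web page.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Notebook</td><td>4.50</td></tr>
  <tr><td>Pen</td><td>1.20</td></tr>
</table>
"""

parser = PriceTableParser()
parser.feed(SAMPLE_HTML)
products = [{"product": name, "price": float(price)} for name, price in parser.rows]
print(products)
```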

Organizations can also use APIs (application programming interfaces) to collect data from external sources. APIs allow organizations to access data stored in another system and share and integrate it into their own systems.

Finally, organizations can also use manual methods to collect data from external sources. This can involve contacting companies or individuals directly to request the data they need.
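Collecting data through an API typically involves two steps: building a request for the data you want, and turning the response into records your own systems can use. The sketch below shows both steps without making a network call. The endpoint `https://api.example.com/v1/orders`, its `since` and `page` parameters, and the response layout are all hypothetical.

```python
import json
from urllib.parse import urlencode

BASE_URL = "https://api.example.com/v1/orders"  # hypothetical endpoint


def build_request_url(since, page):
    """Build the URL for one page of results created on or after a given date."""
    query = urlencode({"since": since, "page": page})
    return f"{BASE_URL}?{query}"


def extract_orders(response_body):
    """Convert a JSON response body into a list of simplified order records."""
    payload = json.loads(response_body)
    return [
        {"order_id": item["id"], "total": float(item["total"])}
        for item in payload.get("orders", [])
    ]


url = build_request_url(since="2024-01-01", page=1)

# Stand-in for the text a real API call might return (assumed layout).
sample_response = (
    '{"orders": [{"id": "A-1", "total": "19.99"}, {"id": "A-2", "total": "5.00"}]}'
)
orders = extract_orders(sample_response)
print(url)
print(orders)
```

Keeping the parsing step separate from the network call makes it straightforward to test against saved responses before pointing the code at a live system.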

- What are common challenges in data collection?

There are many challenges that researchers face when collecting data. Here are five common examples:

Big data environments

Data collection can be a challenge in big data environments for several reasons. First, the data can be located in different places, such as archives, libraries, or online, and the sheer volume of data can make it difficult to identify the most relevant data sets.

Second, the complexity of data sets can make it challenging to extract the desired information. Third, the distributed nature of big data environments can make it difficult to collect data promptly and efficiently.

Therefore, it is important to have a well-designed data collection strategy that considers the specific needs of the organization and which data sets are the most relevant. Consideration should also be given to the tools and resources available to support data collection and to protect the data from unintended use.

Data bias

Data bias is a common challenge in data collection. It occurs when the data collected differs systematically from the population of interest, for example because the sample is not representative.

There are different types of data bias, but some common ones include selection bias, self-selection bias, and response bias. Selection bias can occur when the way participants are chosen means the collected data does not represent the population being studied.

Self-selection bias is a form of selection bias that occurs when people decide for themselves whether to join a study. For example, if a study only includes data from people who volunteer to participate, such as those who think they will benefit from it, that data may not represent the general population. Response bias happens when people respond in a way that is not honest or accurate, such as by giving answers that make them look good.

These types of data bias present a challenge because they can lead to inaccurate results and conclusions about behaviors, perceptions, and trends. Data bias can be reduced by identifying potential sources of bias and setting guidelines for minimizing them.
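One simple way to look for a sample that is not representative is to compare the share of each group in the sample with its known share in the population. The sketch below does this for age groups. The population shares and the sample are invented, and a real check would use whichever characteristics matter for the study.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return observed share minus expected share for each population group."""
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    gaps = {}
    for group, expected_share in population_shares.items():
        observed_share = counts.get(group, 0) / total
        gaps[group] = round(observed_share - expected_share, 3)
    return gaps


# Hypothetical population shares and a sample of 100 respondents' age groups.
population_shares = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}
sample_groups = ["18-34"] * 60 + ["35-54"] * 30 + ["55+"] * 10

gaps = representation_gaps(sample_groups, population_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A large positive gap means the group is over-represented in the sample, and a large negative gap means it is under-represented, which is a prompt to adjust recruitment or weight the results.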

Lack of quality assurance processes

One of the biggest challenges in data collection is the lack of quality assurance processes. This can lead to several problems, including incorrect data, missing data, and inconsistencies between data sets.

Quality assurance is important because there are many data sources, and each source may have different levels of quality or corruption. There are also different ways of collecting data, and data quality may vary depending on the method used.

There are several ways to improve quality assurance in data collection. These include developing clear and consistent goals and guidelines for data collection, implementing quality control measures, using standardized procedures, and employing data validation techniques. By taking these steps, you can ensure that your data is of adequate quality to inform decision-making.
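As one example of a data validation technique, the sketch below compares two data sets that should describe the same customers and lists where they disagree. The field names, the two exports, and the function `find_inconsistencies` are hypothetical and only meant to show the idea.

```python
def find_inconsistencies(primary, secondary, key="customer_id"):
    """List records that are missing from one source or differ between sources."""
    primary_by_key = {row[key]: row for row in primary}
    secondary_by_key = {row[key]: row for row in secondary}
    issues = []
    for record_key in sorted(primary_by_key.keys() | secondary_by_key.keys()):
        left = primary_by_key.get(record_key)
        right = secondary_by_key.get(record_key)
        if left is None or right is None:
            missing_from = "primary" if left is None else "secondary"
            issues.append((record_key, f"missing from {missing_from}"))
            continue
        # Compare only the fields that both sources provide.
        for field in sorted(left.keys() & right.keys()):
            if left[field] != right[field]:
                issues.append(
                    (record_key, f"{field}: {left[field]!r} != {right[field]!r}")
                )
    return issues


# Hypothetical exports from two systems that should agree.
crm_export = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": "b@example.com"},
]
billing_export = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": "b@example.org"},
    {"customer_id": 3, "email": "c@example.com"},
]

issues = find_inconsistencies(crm_export, billing_export)
for record_key, problem in issues:
    print(record_key, problem)
```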

Limited access to data

Another challenge in data collection is limited access to data. This can be due to several reasons, including privacy concerns, the sensitive nature of the data, security concerns, or simply the fact that data is not readily available.

Legal and compliance regulations

Most countries have regulations governing how data can be collected, used, and stored. In some cases, data collected in one country may not be used in another. This means gaining a global perspective can be a challenge.

For example, if a company is required to comply with the EU General Data Protection Regulation (GDPR), it may not be able to collect personal data from individuals in the EU without a lawful basis, such as their consent. This can make it difficult to collect data from a target audience.

Legal and compliance regulations can be complex, and it's important to ensure that all data collected is done so in a way that complies with the relevant regulations.

- What are the key steps in the data collection process?

There are five steps involved in the data collection process. They are:

1. Decide what data you want to gather

Have a clear understanding of the questions you are asking, and then consider where the answers might lie and how you might obtain them. This saves time and resources by avoiding the collection of irrelevant data, and helps maintain the quality of your datasets.

2. Establish a deadline for data collection

Establishing a deadline for data collection helps you avoid collecting too much data, which can be costly and time-consuming to analyze. It also allows you to plan for data analysis and prompt interpretation. Finally, it helps you meet your research goals and objectives and allows you to move forward.

3. Select a data collection approach

The data collection approach you choose will depend on different factors, including the type of data you need, available resources, and the project timeline. For instance, if you need qualitative data, you might choose a focus group or interview methodology. If you need quantitative data, then a survey or observational study may be the most appropriate form of collection.

4. Gather information

When collecting data for your business, identify your business goals first. Once you know what you want to achieve, you can start collecting data to reach those goals. The most important thing is to ensure that the data you collect is reliable and valid. Otherwise, any decisions you make using the data could result in a negative outcome for your business.

5. Examine the information and apply your findings

As a researcher, it's important to examine the data you're collecting and analyzing before you apply your findings. This is because data can be misleading, leading to inaccurate conclusions. Ask yourself: is the data what you were expecting? Is it similar to other datasets you have looked at?

There are many scientific ways to examine data, but some common methods include:

- looking at the distribution of data points

- examining the relationships between variables

- looking for outliers

By taking the time to examine your data and noticing any patterns, strange or otherwise, you can avoid making mistakes that could invalidate your research.

- How qualitative analysis software streamlines the data collection process

Knowledge derived from data does indeed carry power. However, if you don't convert the knowledge into action, it will remain a resource of unexploited energy and wasted potential.

Luckily, data collection tools enable organizations to streamline their data collection and analysis processes and leverage the derived knowledge to grow their businesses. For instance, qualitative analysis software can be highly advantageous in data collection by streamlining the process, making it more efficient and less time-consuming.

Qualitative analysis software also provides a structure for data collection and analysis, which helps keep data quality high. It can help to uncover patterns and relationships that would otherwise be difficult to discern. Moreover, in some cases you can use it to replace more expensive data collection methods, such as focus groups or surveys.

Overall, qualitative analysis software can be valuable for any researcher looking to collect and analyze data. By increasing efficiency, improving data quality, and providing greater insights, it can make the research process more effective.

What Is Data Collection: Methods, Types, Tools

Table of Contents

- What Is Data Collection?
- Why Do We Need Data Collection?
- What Are the Different Data Collection Methods?
- Data Collection Tools
- The Importance of Ensuring Accurate and Appropriate Data Collection
- Issues Related to Maintaining the Integrity of Data Collection
- What Are Common Challenges in Data Collection?
- What Are the Key Steps in the Data Collection Process?
- Data Collection Considerations and Best Practices
- Choose the Right Data Science Program
- Are You Interested in a Career in Data Science?

Data collection is the process of gathering and analyzing accurate data from various sources to answer research problems, identify trends and probabilities, and evaluate possible outcomes. Knowledge is power, information is knowledge, and data is information in digitized form, at least as defined in IT. Hence, data is power. But before you can leverage that data into a successful strategy for your organization or business, you need to gather it. That’s your first step.

So, to help you get the process started, we shine a spotlight on data collection. What exactly is it? Believe it or not, it’s more than just doing a Google search! Furthermore, what are the different types of data collection? And what kinds of data collection tools and data collection techniques exist?

If you want to get up to speed on what the data collection process is, you’ve come to the right place.

Data collection is the process of collecting and evaluating information or data from multiple sources to find answers to research problems, answer questions, evaluate outcomes, and forecast trends and probabilities. It is an essential phase in all types of research, analysis, and decision-making, including that done in the social sciences, business, and healthcare.

Accurate data collection is necessary to make informed business decisions, support quality assurance, and maintain research integrity.

During data collection, researchers must identify the data types, the sources of data, and the methods being used. We will soon see that there are many different data collection methods. Research, commercial, and government fields all rely heavily on data collection.

Before an analyst begins collecting data, they must answer three questions first:

- What’s the goal or purpose of this research?

- What kinds of data are they planning on gathering?

- What methods and procedures will be used to collect, store, and process the information?

Additionally, we can break up data into qualitative and quantitative types. Qualitative data covers descriptions such as color, size, quality, and appearance. Quantitative data, unsurprisingly, deals with numbers, such as statistics, poll numbers, percentages, etc.

Before a judge makes a ruling in a court case or a general creates a plan of attack, they must have as many relevant facts as possible. The best courses of action come from informed decisions, and information and data are synonymous.

The concept of data collection isn’t a new one, as we’ll see later, but the world has changed. There is far more data available today, and it exists in forms that were unheard of a century ago. The data collection process has had to change and grow with the times, keeping pace with technology.

Whether you’re in the world of academia, trying to conduct research, or part of the commercial sector, thinking of how to promote a new product, you need data collection to help you make better choices.

Now that you know what data collection is and why we need it, let's take a look at the different methods of data collection. While the phrase “data collection” may sound all high-tech and digital, it doesn’t necessarily entail things like computers, big data, and the internet. Data collection could mean a telephone survey, a mail-in comment card, or even some guy with a clipboard asking passersby some questions. But let’s see if we can sort the different data collection methods into a semblance of organized categories.

Primary and secondary methods of data collection are two approaches used to gather information for research or analysis purposes. Let's explore each data collection method in detail:

1. Primary Data Collection:

Primary data collection involves the collection of original data directly from the source or through direct interaction with the respondents. This method allows researchers to obtain firsthand information specifically tailored to their research objectives. There are various techniques for primary data collection, including:

a. Surveys and Questionnaires: Researchers design structured questionnaires or surveys to collect data from individuals or groups. These can be conducted through face-to-face interviews, telephone calls, mail, or online platforms.

b. Interviews: Interviews involve direct interaction between the researcher and the respondent. They can be conducted in person, over the phone, or through video conferencing. Interviews can be structured (with predefined questions), semi-structured (allowing flexibility), or unstructured (more conversational).

c. Observations: Researchers observe and record behaviors, actions, or events in their natural setting. This method is useful for gathering data on human behavior, interactions, or phenomena without direct intervention.

d. Experiments: Experimental studies involve the manipulation of variables to observe their impact on the outcome. Researchers control the conditions and collect data to draw conclusions about cause-and-effect relationships.

e. Focus Groups: Focus groups bring together a small group of individuals who discuss specific topics in a moderated setting. This method helps in understanding opinions, perceptions, and experiences shared by the participants.

2. Secondary Data Collection:

Secondary data collection involves using existing data collected by someone else for a purpose different from the original intent. Researchers analyze and interpret this data to extract relevant information. Secondary data can be obtained from various sources, including:

a. Published Sources: Researchers refer to books, academic journals, magazines, newspapers, government reports, and other published materials that contain relevant data.

b. Online Databases: Numerous online databases provide access to a wide range of secondary data, such as research articles, statistical information, economic data, and social surveys.

c. Government and Institutional Records: Government agencies, research institutions, and organizations often maintain databases or records that can be used for research purposes.

d. Publicly Available Data: Data shared by individuals, organizations, or communities on public platforms, websites, or social media can be accessed and utilized for research.

e. Past Research Studies: Previous research studies and their findings can serve as valuable secondary data sources. Researchers can review and analyze the data to gain insights or build upon existing knowledge.

Now that we’ve explained the various techniques, let’s narrow our focus even further by looking at some specific tools. For example, we mentioned interviews as a technique, but we can further break that down into different interview types (or “tools”).

Word Association

The researcher gives the respondent a set of words and asks them what comes to mind when they hear each word.

Sentence Completion

Researchers use sentence completion to understand what kind of ideas the respondent has. This tool involves giving an incomplete sentence and seeing how the interviewee finishes it.

Role-Playing

Respondents are presented with an imaginary situation and asked how they would act or react if it was real.

In-Person Surveys

The researcher asks questions in person.

Online/Web Surveys

These surveys are easy to accomplish, but some users may be unwilling to answer truthfully, if at all.

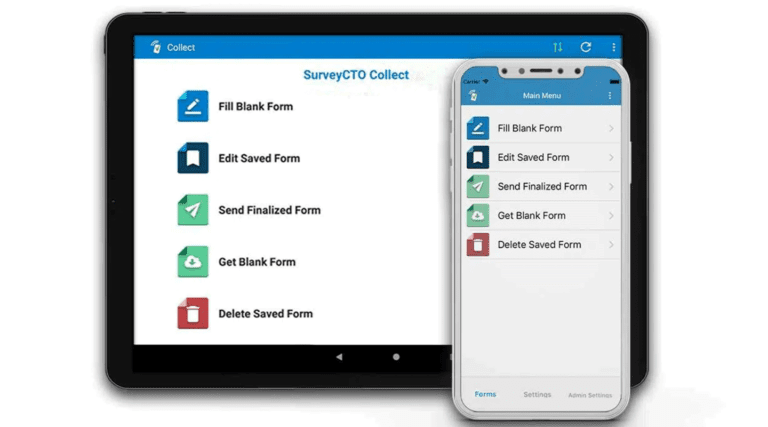

Mobile Surveys

These surveys take advantage of the increasing proliferation of mobile technology. Mobile collection surveys rely on mobile devices like tablets or smartphones to conduct surveys via SMS or mobile apps.

Phone Surveys

No researcher can call thousands of people at once, so they need a third party to handle the chore. However, many people have call screening and won’t answer.

Observation

Sometimes, the simplest method is the best. Researchers who make direct observations collect data quickly and easily, with little intrusion or third-party bias. Naturally, it’s only effective in small-scale situations.

Accurate data collection is crucial to preserving the integrity of research, regardless of the field of study or the type of data involved (quantitative or qualitative). Errors are less likely to occur when the right data collection tools are used, whether those tools are newly developed, modified, or already available.

The consequences of incorrectly collected data include the following:

- Erroneous conclusions that squander resources

- Decisions that compromise public policy

- Inability to answer research questions accurately

- Harm to human or animal participants

- Misleading other researchers into pursuing futile research avenues

- Inability to replicate and validate the study

The degree of impact from flawed data collection may vary by discipline and type of investigation, but there is potential for disproportionate harm when these study findings are used to support public policy recommendations.

Let us now look at the various issues that we might face while maintaining the integrity of data collection.

The main reason for maintaining data integrity is to support the detection of errors in the data gathering process, whether they were made intentionally (deliberate falsification) or not (systematic or random errors).

Quality assurance and quality control are two strategies that help protect data integrity and guarantee the scientific validity of study results.

Each strategy is used at various stages of the research timeline:

- Quality control - tasks that are performed both after and during data collecting

- Quality assurance - events that happen before data gathering starts

Let us explore each of them in more detail now.

Quality Assurance

Because quality assurance comes before data collection, its primary goal is "prevention" (i.e., forestalling problems with data collection). Prevention is the best way to protect the accuracy of data collection. The clearest example of this proactive step is a standardized protocol set out in a thorough and detailed procedures manual for data collection.

When the manual is poorly written, the likelihood of failing to spot issues and mistakes early in the research effort increases. These shortcomings can show up in several ways:

- Failure to specify the precise subjects and methods for training or retraining the staff who collect data

- An incomplete list of the items to be collected

- No system in place to track changes to procedures that may occur as the investigation continues

- A vague description of the data gathering tools to be used, instead of detailed, step-by-step instructions on how to administer tests

- Uncertainty about when, how, and by whom the data will be reviewed

- Unclear guidelines for using, adjusting, and calibrating the data collection equipment

Now, let us look at how to ensure Quality Control.

Quality Control

Although quality control actions (detection/monitoring and intervention) take place during and after data collection, the specifics should be carefully documented in the procedures manual. A clearly defined communication structure is a prerequisite for establishing monitoring systems. Once data collection problems are discovered, there should be no ambiguity about how information flows between the principal investigators and staff. A poorly designed communication system encourages lax oversight and reduces opportunities for error detection.

Detection or monitoring can take the form of direct staff observation during site visits, conference calls, or frequent and routine reviews of data reports to spot discrepancies, extreme values, or invalid codes. Site visits might not be appropriate for all disciplines. Still, without routine auditing of records, whether qualitative or quantitative, it will be challenging for investigators to confirm that data gathering is taking place in accordance with the methods defined in the manual. Additionally, quality control determines the appropriate responses, or "actions," to fix flawed data gathering procedures and reduce recurrences.
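As a rough illustration of a routine review of data reports, the sketch below scans records for invalid codes and out-of-range values. The field names, the codebook, and the permitted age range are hypothetical and would come from the study's own procedures manual.

```python
# Hypothetical codebook: allowed codes and ranges are illustrative assumptions.
VALID_CODES = {"smoker": {"Y", "N", "NA"}}
VALID_RANGES = {"age": (18, 30)}


def check_record(record):
    """Return a list of problems found in a single record."""
    problems = []
    for field, allowed in VALID_CODES.items():
        if record.get(field) not in allowed:
            problems.append(f"invalid code in {field}: {record.get(field)!r}")
    for field, (low, high) in VALID_RANGES.items():
        value = record.get(field)
        if not isinstance(value, (int, float)) or not low <= value <= high:
            problems.append(f"out-of-range value in {field}: {value!r}")
    return problems


def audit(records):
    """Map each problematic record id to the problems detected in it."""
    report = {}
    for record in records:
        problems = check_record(record)
        if problems:
            report[record["id"]] = problems
    return report


records = [
    {"id": 1, "age": 22, "smoker": "N"},
    {"id": 2, "age": 220, "smoker": "N"},  # likely a keying error
    {"id": 3, "age": 25, "smoker": "7"},   # code not in the codebook
]
flagged = audit(records)
for record_id, problems in flagged.items():
    print(record_id, problems)
```

A check like this only detects problems. Deciding what to do about each flagged record (re-contacting a site, retraining staff, correcting an entry) remains the intervention step described in the manual.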

Problems with data collection, for instance, that call for immediate action include:

- Fraud or misbehavior

- Systematic mistakes, procedure violations

- Individual data items with errors

- Issues with certain staff members or a site's performance

In the social and behavioral sciences, where primary data collection involves human subjects, researchers are trained to include one or more secondary measures that can be used to verify the quality of the information obtained from those subjects.

For instance, a researcher conducting a survey might be interested in the prevalence of risky behaviors among young adults, as well as the social factors that influence the likelihood and frequency of those behaviors.

Several challenges commonly arise while collecting data. Let us explore a few of them so that we can understand and avoid them.

Data Quality Issues

The main threat to the broad and successful application of machine learning is poor data quality. Data quality must be your top priority if you want to make technologies like machine learning work for you. Let's talk about some of the most prevalent data quality problems in this blog article and how to fix them.

Inconsistent Data

When working with multiple data sources, the same information may differ from one source to another. The differences could be in formats, units, or occasionally spellings. Inconsistent data can also be introduced during company mergers or system migrations. Inconsistencies tend to accumulate and reduce the value of data if they are not continually resolved. Organizations that focus heavily on data consistency do so because they want only reliable data to support their analytics.
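A minimal sketch of resolving the three kinds of discrepancy mentioned above (formats, units, spellings) might look like the following. The accepted date formats, the spelling table, and the function names are assumptions for illustration; a real project would define them from its own sources.

```python
from datetime import datetime

# Assumed input formats. Note that day/month order is a convention you must
# confirm per source: "05/03/2024" is read here as 5 March 2024.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")
LB_PER_KG = 2.20462
COUNTRY_SPELLINGS = {
    "usa": "United States",
    "u.s.": "United States",
    "united states": "United States",
}


def normalize_date(text):
    """Convert a date in any accepted format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def to_kg(value, unit):
    """Convert a weight to kilograms from kilograms or pounds."""
    unit = unit.strip().lower()
    if unit == "kg":
        return float(value)
    if unit == "lb":
        return float(value) / LB_PER_KG
    raise ValueError(f"unknown unit: {unit!r}")


def normalize_country(text):
    """Map known spelling variants to one canonical name."""
    cleaned = text.strip()
    return COUNTRY_SPELLINGS.get(cleaned.lower(), cleaned)


print(normalize_date("05/03/2024"), to_kg(220.462, "lb"), normalize_country("U.S."))
```

Raising an error on an unrecognized format or unit, rather than guessing, keeps new inconsistencies visible instead of letting them accumulate silently.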

Data Downtime

Data is the driving force behind the decisions and operations of data-driven businesses. However, there may be brief periods when their data is unreliable or not ready. Customer complaints and subpar analytical outcomes are only two ways that this data unavailability can have a significant impact on businesses. A data engineer spends about 80% of their time updating, maintaining, and guaranteeing the integrity of the data pipeline. Because the operational lead time from data capture to insight is long, asking the next business question carries a high marginal cost.

Schema modifications and migration problems are just two examples of the causes of data downtime. Data pipelines can be difficult to manage due to their size and complexity. Data downtime must be continuously monitored, and it must be reduced through automation.

Ambiguous Data

Even with thorough oversight, some errors can still occur in massive databases or data lakes. The issue becomes more overwhelming for data that streams at high speed. Spelling mistakes can go unnoticed, formatting problems can occur, and column headings can be misleading. This ambiguous data can cause a number of problems for reporting and analytics.

Duplicate Data

Streaming data, local databases, and cloud data lakes are just a few of the sources of data that modern enterprises must contend with. They might also have application and system silos. These sources are likely to duplicate and overlap each other quite a bit. For instance, duplicate contact information has a substantial impact on customer experience. If certain prospects are ignored while others are engaged repeatedly, marketing campaigns suffer. The likelihood of biased analytical outcomes increases when duplicate data are present. It can also result in ML models with biased training data.
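To make the duplicate-contact example concrete, here is a small sketch that merges contact records from overlapping sources. Matching on a normalized email address is an assumption made for the example; real systems often need fuzzier matching on names, phone numbers, or addresses.

```python
def normalize_email(email):
    """Treat emails as equal regardless of case or surrounding whitespace."""
    return email.strip().lower()


def deduplicate(contacts):
    """Merge records that share an email, filling gaps from later records."""
    merged = {}
    for contact in contacts:
        key = normalize_email(contact["email"])
        if key not in merged:
            merged[key] = {**contact, "email": key}
            continue
        # Same person seen again: keep existing values, fill in only the gaps.
        for field, value in contact.items():
            if not merged[key].get(field):
                merged[key][field] = value
    return list(merged.values())


# Hypothetical records from two overlapping sources.
contacts = [
    {"email": "Ana@Example.com", "name": "Ana Silva", "phone": ""},
    {"email": "ana@example.com ", "name": "Ana Silva", "phone": "555-0100"},
    {"email": "lee@example.com", "name": "Lee Wong", "phone": "555-0101"},
]
unique_contacts = deduplicate(contacts)
print(len(contacts), "records ->", len(unique_contacts), "unique contacts")
```

Merging rather than simply dropping the later record preserves details that only one source holds, such as the phone number in this example.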

Too Much Data

While we emphasize data-driven analytics and its advantages, having too much data is a data quality problem in its own right. There is a risk of getting lost in an abundance of data when searching for information pertinent to your analytical efforts. Data scientists, data analysts, and business users devote 80% of their work to finding and organizing the appropriate data. As data volume increases, other data quality problems become more serious, particularly when dealing with streaming data and large files or databases.

Inaccurate Data

For highly regulated businesses like healthcare, data accuracy is crucial. Given the current experience, it is more important than ever to increase the data quality for COVID-19 and later pandemics. Inaccurate information does not provide you with a true picture of the situation and cannot be used to plan the best course of action. Personalized customer experiences and marketing strategies underperform if your customer data is inaccurate.

Data inaccuracies can be attributed to a number of things, including data decay, human error, and data drift. Worldwide, data decay occurs at a rate of about 3% per month, which is quite concerning. Data integrity can be compromised while data is transferred between systems, and data quality can deteriorate over time.
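To get a feel for what a monthly decay rate means over a longer period, the arithmetic can be sketched as below. This assumes the 3% figure compounds month over month, which the figure itself does not specify; under that assumption, roughly 30% of records would be out of date after a year.

```python
def remaining_fraction(monthly_decay, months):
    """Fraction of records still accurate after compounding monthly decay."""
    return (1 - monthly_decay) ** months


# Assumption: the 3% monthly rate compounds over twelve months.
still_accurate = remaining_fraction(0.03, 12)
print(f"Still accurate after 12 months: {still_accurate:.1%}")
print(f"Decayed after 12 months: {1 - still_accurate:.1%}")
```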

Hidden Data

The majority of businesses only utilize a portion of their data, with the remainder sometimes being lost in data silos or discarded in data graveyards. For instance, the customer service team might not receive client data from sales, missing an opportunity to build more precise and comprehensive customer profiles. Hidden data causes organizations to miss opportunities to develop novel products, enhance services, and streamline procedures.

Finding Relevant Data

Finding relevant data is not easy. There are several factors that we need to consider while trying to find relevant data, including:

- Relevant domain

- Relevant demographics

- Relevant time period

Data that is not relevant to our study on any of these factors is of little use, and we cannot effectively proceed with its analysis. This could lead to incomplete research or analysis, collecting the data again and again, or shutting down the study.

Deciding the Data to Collect

Determining what data to collect is one of the most important decisions in data collection and should be one of the first we make. We must choose the subjects the data will cover, the sources we will use to gather it, and the quantity of information we will require. Our answers to these questions will depend on our aims, or what we expect to achieve using our data. As an illustration, we may choose to gather information on the categories of articles that website visitors between the ages of 20 and 50 most frequently access. We can also decide to compile data on the typical age of all the clients who made a purchase from our business over the previous month.

Not addressing this could lead to duplicated work, the collection of irrelevant data, or the failure of the study as a whole.

Dealing With Big Data

Big data refers to exceedingly large data sets with more intricate and diversified structures. These traits typically make the data harder to store, analyze, and extract results from. In particular, big data refers to data sets that are so large or complex that conventional data processing tools are insufficient: the overwhelming amount of data, both structured and unstructured, that a business faces on a daily basis.

The amount of data produced by healthcare applications, the internet, social networking sites, sensor networks, and many other businesses is growing rapidly as a result of recent technological advancements. Big data refers to the vast volume of data created from numerous sources in a variety of formats at extremely fast rates. Dealing with this kind of data is one of the many challenges of data collection and is a crucial step toward collecting effective data.

Low Response and Other Research Issues

Poor design and low response rates were shown to be two issues with data collecting, particularly in health surveys that used questionnaires. This might lead to an insufficient or inadequate supply of data for the study. Creating an incentivized data collection program might be beneficial in this case to get more responses.

Now, let us look at the key steps in the data collection process.

In the Data Collection Process, there are 5 key steps. They are explained briefly below -

1. Decide What Data You Want to Gather

The first thing that we need to do is decide what information we want to gather. We must choose the subjects the data will cover, the sources we will use to gather it, and the quantity of information that we would require. For instance, we may choose to gather information on the categories of products that an average e-commerce website visitor between the ages of 30 and 45 most frequently searches for.
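Turning a decision like the one above into something checkable helps confirm that the collected fields can actually answer the question. The sketch below assumes a hypothetical search log with a visitor age and a product category per search; the field names and sample rows are invented for illustration.

```python
from collections import Counter

# Hypothetical search log: one row per search on the site.
search_log = [
    {"visitor_age": 34, "category": "shoes"},
    {"visitor_age": 41, "category": "laptops"},
    {"visitor_age": 29, "category": "shoes"},
    {"visitor_age": 38, "category": "shoes"},
    {"visitor_age": 45, "category": "laptops"},
    {"visitor_age": 52, "category": "garden"},
    {"visitor_age": 31, "category": "shoes"},
]


def top_categories(log, min_age=30, max_age=45, limit=3):
    """Count searched categories for visitors inside the target age range."""
    in_scope = (
        row["category"]
        for row in log
        if min_age <= row["visitor_age"] <= max_age
    )
    return Counter(in_scope).most_common(limit)


print(top_categories(search_log))
```

Writing the question this way makes the required data explicit: we need the visitor's age and the searched category, and nothing else, for this particular goal.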

2. Establish a Deadline for Data Collection

The process of creating a strategy for data collection can now begin. We should set a deadline for our data collection at the outset of our planning phase. Some forms of data we might want to collect continuously. For instance, we might want to set up a method for tracking transactional data and website visitor statistics over the long term. However, if we are tracking data for a particular campaign, we will track it over a defined time frame. In these situations, we will have a schedule for when we will begin and finish gathering data.

3. Select a Data Collection Approach

We will select the data collection technique that will serve as the foundation of our data gathering plan at this stage. We must take into account the type of information that we wish to gather, the time period during which we will receive it, and the other factors we decide on to choose the best gathering strategy.

4. Gather Information

Once our plan is complete, we can put it into action and begin gathering data. We can store and organize our data in our data management platform (DMP). We need to be careful to follow our plan and keep an eye on how it's doing. Especially if we are collecting data regularly, it may be helpful to set up a timetable for checking in on how our data gathering is going. As circumstances change and we learn new details, we might need to amend our plan.

5. Examine the Information and Apply Your Findings

Once we have gathered all of our information, it's time to examine our data and organize our findings. The analysis stage is essential because it transforms raw data into insights that can be applied to improve our marketing strategies, products, and business decisions. The analytics tools included in our DMP can assist with this phase. Once we have discovered the patterns and insights in our data, we can put them to use to improve our business.

Let us now look at some data collection considerations and best practices that one might follow.

We must plan carefully before spending time and money traveling to the field to gather data. Effective data collection strategies can help us collect richer, more accurate data while saving time and resources.

Below, we will be discussing some of the best practices that we can follow for the best results -

1. Take Into Account the Price of Each Extra Data Point

Once we have decided on the data we want to gather, we need to make sure to take the expense of doing so into account. Our surveyors and respondents will incur additional costs for each additional data point or survey question.
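A back-of-the-envelope estimate can make this cost visible before a question is added to the survey. The function below is a simplified sketch: the cost model (surveyor time plus an optional per-question incentive for respondents) and all of the numbers are hypothetical assumptions, not figures from this article.

```python
def marginal_cost(respondents, minutes_per_question, surveyor_rate_per_hour,
                  incentive_per_question=0.0):
    """Estimate the cost of adding one more question to a survey."""
    surveyor_cost = minutes_per_question / 60 * surveyor_rate_per_hour
    return respondents * (surveyor_cost + incentive_per_question)


# Hypothetical inputs: 500 respondents, 30 seconds per question,
# surveyors paid 12.00 per hour, 0.10 incentive per question answered.
extra = marginal_cost(
    respondents=500,
    minutes_per_question=0.5,
    surveyor_rate_per_hour=12.0,
    incentive_per_question=0.10,
)
print(f"Adding one question costs about {extra:.2f}")
```

Even a small per-response cost adds up across a large sample, which is why each extra data point should earn its place in the questionnaire.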

2. Plan How to Gather Each Data Piece

Freely accessible data is scarce. Sometimes the data exists, but we may not have access to it. For instance, unless we have a compelling reason, we cannot openly view another person's medical information. Some types of information can also be difficult to measure.

Consider how time-consuming and difficult it will be to gather each piece of information while deciding what data to acquire.

3. Think About Your Choices for Data Collecting Using Mobile Devices

Mobile-based data collecting can be divided into three categories -

- IVRS (interactive voice response system) - Will call the respondents and ask them questions that have already been recorded.

- SMS data collection - Will send a text message to the respondent, who can then respond to questions by text on their phone.

- Field surveyors - Can directly enter data into an interactive questionnaire while speaking to each respondent, thanks to smartphone apps.

We need to make sure to select the appropriate tool for our survey and respondents because each one has its own advantages and disadvantages.

4. Carefully Consider the Data You Need to Gather

It's all too easy to get information about anything and everything, but it's crucial to only gather the information that we require.

It is helpful to consider these 3 questions:

- What details will be helpful?

- What details are available?

- What specific details do you require?

5. Remember to Consider Identifiers

Identifiers, or details describing the context and source of a survey response, are just as crucial as the information about the subject or program that we are actually researching.

In general, adding more identifiers will enable us to pinpoint our program's successes and failures with greater accuracy, but moderation is the key.
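As an illustration of why identifiers matter, the sketch below groups survey responses by an identifier to see where completion rates differ. The identifiers chosen here (site and surveyor) and the sample responses are hypothetical.

```python
from collections import defaultdict

# Hypothetical responses, each tagged with identifiers describing its context.
responses = [
    {"site": "north", "surveyor": "S1", "completed": True},
    {"site": "north", "surveyor": "S1", "completed": True},
    {"site": "north", "surveyor": "S2", "completed": False},
    {"site": "south", "surveyor": "S2", "completed": False},
    {"site": "south", "surveyor": "S2", "completed": True},
]


def completion_rate_by(rows, identifier):
    """Completion rate for each distinct value of the given identifier."""
    totals = defaultdict(lambda: [0, 0])  # value -> [completed, total]
    for row in rows:
        bucket = totals[row[identifier]]
        bucket[0] += 1 if row["completed"] else 0
        bucket[1] += 1
    return {value: done / total for value, (done, total) in totals.items()}


rates_by_site = completion_rate_by(responses, "site")
rates_by_surveyor = completion_rate_by(responses, "surveyor")
print(rates_by_site)
print(rates_by_surveyor)
```

Without the surveyor identifier, the difference between S1 and S2 would be invisible. Each added identifier allows another breakdown like this one, but it also lengthens the form, which is the trade-off behind the advice on moderation.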

6. Data Collecting Through Mobile Devices is the Way to Go

Although collecting data on paper is still common, modern technology relies heavily on mobile devices. They enable us to gather many various types of data at relatively lower prices and are accurate as well as quick. There aren't many reasons not to pick mobile-based data collecting with the boom of low-cost Android devices that are available nowadays.

1. What is data collection with example?

Data collection is the process of collecting and analyzing information on relevant variables in a predetermined, methodical way so that one can respond to specific research questions, test hypotheses, and assess results. Data collection can be either qualitative or quantitative. Example: A company collects customer feedback through online surveys and social media monitoring to improve their products and services.

2. What are the primary data collection methods?

As is well known, gathering primary data is costly and time intensive. The main techniques for gathering data are observation, interviews, questionnaires, schedules, and surveys.

3. What are data collection tools?

The term "data collection tools" refers to the tools or devices used to gather data, such as a paper questionnaire or a computer-assisted interviewing system. Tools used to gather data include case studies, checklists, interviews, observation, surveys, and questionnaires.

4. What’s the difference between quantitative and qualitative methods?

While qualitative research focuses on words and meanings, quantitative research deals with figures and statistics. You can systematically measure variables and test hypotheses using quantitative methods. You can delve deeper into ideas and experiences using qualitative methodologies.

5. What are quantitative data collection methods?

There are numerous ways to gather quantitative information, but probability sampling, interviews, questionnaires, observation, and document review are the most common and frequently used, whether collecting information offline or online.

6. What is mixed methods research?

Research that combines qualitative and quantitative techniques is known as mixed methods research. In user research, for example, it pairs qualitative insights about users with supporting statistics to build a deeper understanding.

7. What are the benefits of collecting data?

Collecting data offers several benefits, including:

- Knowledge and Insight

- Evidence-Based Decision Making

- Problem Identification and Solution

- Validation and Evaluation

- Identifying Trends and Predictions

- Support for Research and Development

- Policy Development

- Quality Improvement

- Personalization and Targeting

- Knowledge Sharing and Collaboration

8. What’s the difference between reliability and validity?

Reliability is about consistency and stability, while validity is about accuracy and appropriateness. Reliability focuses on the consistency of results, while validity focuses on whether the results are actually measuring what they are intended to measure. Both reliability and validity are crucial considerations in research to ensure the trustworthiness and meaningfulness of the collected data and measurements.
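One common way to put a number on reliability is a test-retest check: give the same questionnaire to the same respondents twice and correlate the two sets of scores. The sketch below is only an illustration of that idea. The function name `pearson_r` and the scores are hypothetical, not taken from the article. A high correlation indicates consistent results, but it says nothing about validity, which still requires checking that the questions measure what they are meant to measure.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sample has no variance")
    return cov / sqrt(var_x * var_y)


# Hypothetical satisfaction scores (1-10) from the same five respondents,
# collected on two occasions with the same questionnaire.
first_round = [7, 5, 9, 4, 8]
second_round = [8, 5, 9, 3, 7]

reliability = pearson_r(first_round, second_round)
print(f"test-retest correlation: {reliability:.2f}")
```

A value close to 1 suggests the instrument gives stable results. What counts as "reliable enough" depends on the field and the stakes of the decision.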




Data Collection: What It Is, Methods & Tools + Examples

Let’s face it, no one wants to make decisions based on guesswork or gut feelings. The most important objective of data collection is to ensure that the data gathered is reliable and packed to the brim with juicy insights that can be analyzed and turned into data-driven decisions. There’s nothing better than good statistical analysis.


Collecting high-quality data is essential for conducting market research, analyzing user behavior, or just trying to get a handle on business operations. With the right approach and a few handy tools, gathering reliable and informative data becomes much easier.

So, let’s get ready to collect some data because when it comes to data collection, it’s all about the details.

Content Index

- What is Data Collection?

- Data collection methods

- Data collection examples

- Reasons to conduct online research and data collection

- Conducting customer surveys for data collection to multiply sales

- Steps to effectively conduct an online survey for data collection

- Survey design for data collection

What is Data Collection?

Data collection is the procedure of collecting, measuring, and analyzing accurate insights for research using standard validated techniques.

Put simply, data collection is the process of gathering information for a specific purpose. It can be used to answer research questions, make informed business decisions, or improve products and services.

To collect data, we must first identify what information we need and how we will collect it. We can also evaluate a hypothesis based on collected data. In most cases, data collection is the primary and most important step for research. The approach to data collection is different for different fields of study, depending on the required information.


There are many ways to collect information when doing research. The data collection methods that the researcher chooses will depend on the research question posed. Some data collection methods include surveys, interviews, tests, physiological evaluations, observations, reviews of existing records, and biological samples. Let’s explore them.

LEARN ABOUT: Best Data Collection Tools

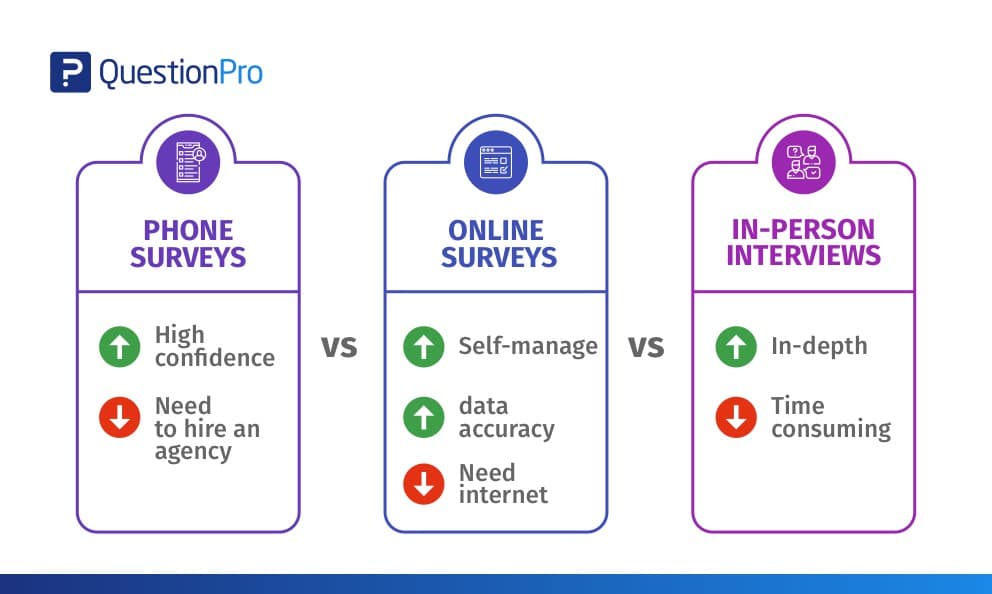

Phone vs. Online vs. In-Person Interviews

Essentially there are four choices for data collection – in-person interviews, mail, phone, and online. There are pros and cons to each of these modes.

In-person interviews

- Pros: In-depth and a high degree of confidence in the data

- Cons: Time-consuming, expensive, and can be dismissed as anecdotal

Mail

- Pros: Can reach anyone and everyone – no barrier

- Cons: Expensive, data collection errors, lag time

Phone

- Pros: High degree of confidence in the data collected, reach almost anyone

- Cons: Expensive, cannot self-administer, need to hire an agency

Online

- Pros: Cheap, can self-administer, very low probability of data errors

- Cons: Not all your customers might have an email address or be on the internet, and customers may be wary of divulging information online

In-person interviews are always better, but the big drawback is the trap you might fall into if you don’t conduct them regularly. Conducting interviews regularly is expensive, and not conducting enough of them might give you false positives. Validating your research is almost as important as designing and conducting it.

We’ve seen many instances where, after the research is conducted, the results are dismissed as anecdotal and a “one-time” phenomenon because they do not match the “gut feel” of upper management. To avoid such traps, we strongly recommend that data collection be done on an ongoing and regular basis.


This will help you compare and analyze how perceptions of your products/services change in response to your marketing. The other issue here is sample size. To be confident in your research, you must interview enough people to weed out the fringe elements.
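How many people is "enough" can be estimated before fieldwork starts. The sketch below uses the standard sample-size formula for estimating a proportion, n = z² · p(1 − p) / e², with an optional finite population correction. This formula is a common textbook choice, not something prescribed by the article, and the function name and default values are illustrative assumptions.

```python
from math import ceil


def sample_size(margin_of_error, z=1.96, proportion=0.5, population=None):
    """Estimate how many completed responses are needed.

    margin_of_error: acceptable error as a fraction (0.05 means +/- 5 points).
    z: z-score for the confidence level (1.96 corresponds to about 95%).
    proportion: expected share giving a particular answer; 0.5 is the most
        conservative assumption because it maximizes the required sample.
    population: total number of customers, if known and not very large.
    """
    if not 0 < margin_of_error < 1:
        raise ValueError("margin_of_error must be between 0 and 1")
    n = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        # Finite population correction: small populations need fewer responses.
        n = n / (1 + (n - 1) / population)
    return ceil(n)


print(sample_size(0.05))                   # large or unknown customer base
print(sample_size(0.05, population=1000))  # customer base of 1,000
```

The result is the number of completed responses, so the number of people you need to invite is larger once the expected response rate is taken into account.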

A couple of years ago there was a lot of discussion about online surveys and their statistical analysis plan. The fact that not every customer had internet connectivity was one of the main concerns.


Although some of the discussions are still valid, the reach of the internet as a means of communication has become vital in the majority of customer interactions. According to the US Census Bureau, the number of households with computers doubled between 1997 and 2001.


In 2001, nearly 50% of households had a computer. Nearly 55% of all households with an income of more than $35,000 had internet access, rising to 70% for households with an annual income of $50,000. This data is from the US Census Bureau for 2001.

There are primarily three modes of data collection that can be employed to gather feedback – Mail, Phone, and Online. The method actually used for data collection is really a cost-benefit analysis. There is no slam-dunk solution but you can use the table below to understand the risks and advantages associated with each of the mediums:

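| Survey medium | Cost per response | Data quality | Reach (all U.S. households) |
|---|---|---|---|
| Paper | $20 – $30 | Medium | 100% |
| Phone | $20 – $35 | High | 95% |
| Online / Email | $1 – $5 | Medium | 50-70% |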

Keep in mind, the reach here is defined as “All U.S. Households.” In most cases, you need to look at how many of your own customers are online and determine your actual reach from that. If all your customers have email addresses, you have a 100% reach of your customers.
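To make the cost-benefit comparison concrete, the figures in the table above can be turned into a rough budget and reach estimate. The sketch below assumes the dollar amounts are costs per completed response, which is an interpretation of the table rather than something the text states outright. The dictionary layout and function names are hypothetical.

```python
# Figures copied from the table above. Costs are assumed to be dollars per
# completed response; reach is the percentage of U.S. households.
MODES = {
    "Paper": {"cost": (20, 30), "reach_pct": (100, 100)},
    "Phone": {"cost": (20, 35), "reach_pct": (95, 95)},
    "Online / Email": {"cost": (1, 5), "reach_pct": (50, 70)},
}


def budget_range(mode, responses):
    """Return the (low, high) cost in dollars of collecting `responses` responses."""
    low, high = MODES[mode]["cost"]
    return responses * low, responses * high


def reachable_range(mode, customers):
    """Return the (low, high) number of customers the mode can reach."""
    low, high = MODES[mode]["reach_pct"]
    return customers * low // 100, customers * high // 100


for mode in MODES:
    cost_low, cost_high = budget_range(mode, responses=400)
    reach_low, reach_high = reachable_range(mode, customers=10_000)
    print(f"{mode}: ${cost_low:,} to ${cost_high:,} for 400 responses, "
          f"reaches {reach_low:,} to {reach_high:,} of 10,000 customers")
```

If you know how many of your own customers have email addresses, replace the household percentages with that figure before comparing modes.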

Another important thing to keep in mind is the ever-increasing dominance of cellular phones over landline phones. United States FCC rules prevent automated dialing of cellular phone numbers, and there is a noticeable trend towards people having a cellular phone as their only voice communication device.

This makes it impossible to reach, by automated dialing, customers who are dropping home phone lines in favor of going entirely wireless. Even if automated dialing is not used, another FCC rule prohibits phoning anyone who would have to pay for the call.


Multi-Mode Surveys

Surveys in which data is collected via different modes (online, paper, phone, etc.) are another option. It is fairly straightforward to set up an online survey and have data-entry operators enter the data from phone and paper surveys into the same system. That system can also be used to collect data directly from the respondents.
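One way to picture a multi-mode setup is a single store that accepts both self-administered online responses and responses keyed in by operators, with each record tagged by its mode. The sketch below is a minimal illustration under that assumption. The `Response` class, its field names, and the rule of rejecting duplicate respondent IDs are hypothetical design choices, not features of any particular survey product.

```python
from collections import Counter
from dataclasses import dataclass

VALID_MODES = {"online", "phone", "paper"}


@dataclass
class Response:
    respondent_id: str
    mode: str         # how the answers were collected
    entered_by: str   # "respondent" for online, otherwise an operator id
    answers: dict     # question id -> answer


def add_response(store, response):
    """Add a response to the shared store, whatever mode it came from."""
    if response.mode not in VALID_MODES:
        raise ValueError(f"unknown mode: {response.mode}")
    if response.respondent_id in store:
        # Guard against the same person being counted once per mode.
        raise ValueError(f"duplicate respondent: {response.respondent_id}")
    store[response.respondent_id] = response


store = {}
add_response(store, Response("r1", "online", "respondent", {"q1": 4}))
add_response(store, Response("r2", "phone", "operator-1", {"q1": 5}))
add_response(store, Response("r3", "paper", "operator-2", {"q1": 3}))

# Because every record carries its mode, results can be compared across modes.
counts = Counter(r.mode for r in store.values())
print(counts)
```

Keeping the mode on every record makes it possible to check later whether answers differ systematically between, say, phone and online respondents.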


Data collection is an important aspect of research. Let’s consider an example of a mobile manufacturer, company X, which is launching a new product variant. To conduct research about features, price range, target market, competitor analysis, etc. data has to be collected from appropriate sources.

The marketing team can conduct various data collection activities such as online surveys or focus groups.

The survey should have all the right questions about features and pricing, such as “What are the top 3 features expected from an upcoming product?” or “How much are you likely to spend on this product?” or “Which competitors provide similar products?” etc.

For conducting a focus group, the marketing team should decide the participants and the mediator. The topic of discussion and objective behind conducting a focus group should be clarified beforehand to conduct a conclusive discussion.

Data collection methods are chosen depending on the available resources. For example, conducting questionnaires and surveys would require the least resources, while focus groups require moderately high resources.

Feedback is a vital part of any organization’s growth. Whether you conduct regular focus groups to elicit information from key players or your account manager calls all your marquee accounts to find out how things are going, these are all ways to see the business through your customers’ eyes: How are we doing? What can we do better?

Online surveys are just another medium to collect feedback from your customers, employees, and anyone your business interacts with. With the advent of do-it-yourself tools for online surveys, data collection on the internet has become really easy, cheap, and effective.


It is a well-established marketing fact that acquiring a new customer is 10 times more difficult and expensive than retaining an existing one. This is one of the fundamental driving forces behind the extensive adoption and interest in CRM and related customer retention tactics.