5.2 Experimental Design

Learning objectives.

- Explain the difference between between-subjects and within-subjects experiments, list some of the pros and cons of each approach, and decide which approach to use to answer a particular research question.

- Define random assignment, distinguish it from random sampling, explain its purpose in experimental research, and use some simple strategies to implement it.

- Define several types of carryover effect, give examples of each, and explain how counterbalancing helps to deal with them.

In this section, we look at some different ways to design an experiment. The primary distinction we will make is between approaches in which each participant experiences one level of the independent variable and approaches in which each participant experiences all levels of the independent variable. The former are called between-subjects experiments and the latter are called within-subjects experiments.

Between-Subjects Experiments

In a between-subjects experiment, each participant is tested in only one condition. For example, a researcher with a sample of 100 university students might assign half of them to write about a traumatic event and the other half to write about a neutral event. Or a researcher with a sample of 60 people with severe agoraphobia (fear of open spaces) might assign 20 of them to receive each of three different treatments for that disorder. It is essential in a between-subjects experiment that the researcher assigns participants to conditions so that the different groups are, on average, highly similar to each other. Those in a trauma condition and a neutral condition, for example, should include a similar proportion of men and women, and they should have similar average intelligence quotients (IQs), similar average levels of motivation, similar average numbers of health problems, and so on. This matching is a matter of controlling these extraneous participant variables across conditions so that they do not become confounding variables.

Random Assignment

The primary way that researchers accomplish this kind of control of extraneous variables across conditions is called random assignment, which means using a random process to decide which participants are tested in which conditions. Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and it is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other fields too.

In its strictest sense, random assignment should meet two criteria. One is that each participant has an equal chance of being assigned to each condition (e.g., a 50% chance of being assigned to each of two conditions). The second is that each participant is assigned to a condition independently of other participants. Thus one way to assign participants to two conditions would be to flip a coin for each one. If the coin lands heads, the participant is assigned to Condition A, and if it lands tails, the participant is assigned to Condition B. For three conditions, one could use a computer to generate a random integer from 1 to 3 for each participant. If the integer is 1, the participant is assigned to Condition A; if it is 2, the participant is assigned to Condition B; and if it is 3, the participant is assigned to Condition C. In practice, a full sequence of conditions—one for each participant expected to be in the experiment—is usually created ahead of time, and each new participant is assigned to the next condition in the sequence as he or she is tested. When the procedure is computerized, the computer program often handles the random assignment.
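The strict procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the function name `simple_random_assignment`, the participant count, and the seed are all assumptions made for the example. Each participant's condition is drawn independently and with equal probability, and the full sequence is generated ahead of time.

```python
import random

def simple_random_assignment(n_participants, conditions, seed=None):
    """Draw one condition per participant, independently and with equal chance.

    Hypothetical helper for illustration; the seed only makes the
    pre-generated sequence reproducible.
    """
    rng = random.Random(seed)
    return [rng.choice(conditions) for _ in range(n_participants)]

# Sequence for 12 expected participants and three conditions (assumed numbers).
sequence = simple_random_assignment(12, ["A", "B", "C"], seed=1)
print(sequence)
```

Because each draw is independent, the number of participants per condition will usually not come out equal.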

One problem with coin flipping and other strict procedures for random assignment is that they are likely to result in unequal sample sizes in the different conditions. Unequal sample sizes are generally not a serious problem, and you should never throw away data you have already collected to achieve equal sample sizes. However, for a fixed number of participants, it is statistically most efficient to divide them into equal-sized groups. It is standard practice, therefore, to use a kind of modified random assignment that keeps the number of participants in each group as similar as possible. One approach is block randomization. In block randomization, all the conditions occur once in the sequence before any of them is repeated. Then they all occur again before any of them is repeated again. Within each of these “blocks,” the conditions occur in a random order. Again, the sequence of conditions is usually generated before any participants are tested, and each new participant is assigned to the next condition in the sequence. Table 5.2 shows such a sequence for assigning nine participants to three conditions. The Research Randomizer website (http://www.randomizer.org) will generate block randomization sequences for any number of participants and conditions. Again, when the procedure is computerized, the computer program often handles the block randomization.
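Block randomization is straightforward to sketch in code. The following is one possible implementation under stated assumptions: the function name `block_randomization` and the seed are invented for the example, and the output is not meant to reproduce any particular published sequence. It builds the sequence one shuffled block at a time.

```python
import random

def block_randomization(n_participants, conditions, seed=None):
    """Build an assignment sequence from randomly ordered blocks.

    Every condition occurs once per block, so group sizes never differ
    by more than one. Hypothetical helper for illustration.
    """
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        block = list(conditions)
        rng.shuffle(block)          # random order within this block
        sequence.extend(block)
    return sequence[:n_participants]  # trim a final partial block if needed

# Nine participants, three conditions: three complete blocks.
sequence = block_randomization(9, ["A", "B", "C"], seed=42)
print(sequence)
```

Each new participant is then assigned to the next condition in `sequence` as he or she is tested.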

Random assignment is not guaranteed to control all extraneous variables across conditions. The process is random, so it is always possible that just by chance, the participants in one condition might turn out to be substantially older, less tired, more motivated, or less depressed on average than the participants in another condition. However, there are some reasons that this possibility is not a major concern. One is that random assignment works better than one might expect, especially for large samples. Another is that the inferential statistics that researchers use to decide whether a difference between groups reflects a difference in the population take the “fallibility” of random assignment into account. Yet another reason is that even if random assignment does result in a confounding variable and therefore produces misleading results, this confound is likely to be detected when the experiment is replicated. The upshot is that random assignment to conditions, although not infallible in terms of controlling extraneous variables, is always considered a strength of a research design.
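The claim that random assignment works better with larger samples can be checked with a small simulation. The sketch below rests on invented assumptions: participant ages are drawn from a normal distribution with mean 40 and standard deviation 12, and the function name `mean_covariate_gap` is hypothetical. It randomly splits each simulated sample into two groups and reports how far apart the group mean ages are, on average.

```python
import random

def mean_covariate_gap(n_participants, n_experiments=500, seed=0):
    """Average absolute difference in mean age between two randomly
    assigned groups, across many simulated experiments.

    Ages are simulated (mean 40, SD 12); these values are assumptions
    chosen only for illustration.
    """
    rng = random.Random(seed)
    half = n_participants // 2
    gaps = []
    for _ in range(n_experiments):
        ages = [rng.gauss(40, 12) for _ in range(n_participants)]
        rng.shuffle(ages)                      # random assignment to two groups
        group_a, group_b = ages[:half], ages[half:]
        gap = abs(sum(group_a) / len(group_a) - sum(group_b) / len(group_b))
        gaps.append(gap)
    return sum(gaps) / len(gaps)

for n in (10, 100, 1000):
    print(n, round(mean_covariate_gap(n), 2))
```

In runs of this kind the average gap shrinks as the sample grows, which is the sense in which chance imbalances become less of a concern with large samples.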

Matched Groups

An alternative to simple random assignment of participants to conditions is the use of a matched-groups design. Using this design, participants in the various conditions are matched on the dependent variable or on some extraneous variable(s) prior to the manipulation of the independent variable. This guarantees that these variables will not be confounded across the experimental conditions. For instance, if we want to determine whether expressive writing affects people’s health, then we could start by measuring various health-related variables in our prospective research participants. We could then use that information to rank-order participants according to how healthy or unhealthy they are. Next, the two healthiest participants would be randomly assigned to complete different conditions (one would be randomly assigned to the traumatic experiences writing condition and the other to the neutral writing condition). The next two healthiest participants would then be randomly assigned to complete different conditions, and so on until the two least healthy participants. This method would ensure that participants in the traumatic experiences writing condition are matched to participants in the neutral writing condition with respect to health at the beginning of the study. If at the end of the experiment, a difference in health was detected across the two conditions, then we would know that it is due to the writing manipulation and not to pre-existing differences in health.
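The rank-then-pair procedure can be sketched as follows. Everything concrete here is an assumption for illustration: the function name `matched_pairs_assignment`, the participant IDs, and the baseline health scores are made up. Participants are ranked on the baseline measure, taken two at a time, and the two members of each pair are randomly split between the conditions.

```python
import random

def matched_pairs_assignment(scores, conditions=("trauma", "neutral"), seed=None):
    """Rank participants on a baseline score, then randomly split each
    adjacent pair across the two conditions.

    scores: dict mapping participant ID -> baseline score (hypothetical data).
    With an odd number of participants, the lowest-ranked one is left unassigned.
    """
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get, reverse=True)  # healthiest first
    assignment = {}
    for i in range(0, len(ranked) - 1, 2):
        order = list(conditions)
        rng.shuffle(order)                 # coin flip within the pair
        assignment[ranked[i]] = order[0]
        assignment[ranked[i + 1]] = order[1]
    return assignment

# Made-up baseline health scores for six prospective participants.
health = {"P1": 72, "P2": 91, "P3": 65, "P4": 88, "P5": 79, "P6": 54}
assignment = matched_pairs_assignment(health, seed=3)
print(assignment)
```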

Within-Subjects Experiments

In a within-subjects experiment, each participant is tested under all conditions. Consider an experiment on the effect of a defendant’s physical attractiveness on judgments of his guilt. Again, in a between-subjects experiment, one group of participants would be shown an attractive defendant and asked to judge his guilt, and another group of participants would be shown an unattractive defendant and asked to judge his guilt. In a within-subjects experiment, however, the same group of participants would judge the guilt of both an attractive and an unattractive defendant.

The primary advantage of this approach is that it provides maximum control of extraneous participant variables. Participants in all conditions have the same mean IQ, same socioeconomic status, same number of siblings, and so on, because they are the very same people. Within-subjects experiments also make it possible to use statistical procedures that remove the effect of these extraneous participant variables on the dependent variable and therefore make the data less “noisy” and the effect of the independent variable easier to detect. We will look more closely at this idea later in the book. However, not every experiment can use a within-subjects design, nor would it always be desirable to do so.

One disadvantage of within-subjects experiments is that they make it easier for participants to guess the hypothesis. For example, a participant who is asked to judge the guilt of an attractive defendant and then is asked to judge the guilt of an unattractive defendant is likely to guess that the hypothesis is that defendant attractiveness affects judgments of guilt. This knowledge could lead the participant to judge the unattractive defendant more harshly because he thinks this is what he is expected to do. Or it could make participants judge the two defendants similarly in an effort to be “fair.”

Carryover Effects and Counterbalancing

The primary disadvantage of within-subjects designs is that they can result in order effects. An order effect occurs when participants’ responses in the various conditions are affected by the order of conditions to which they were exposed. One type of order effect is a carryover effect. A carryover effect is an effect of being tested in one condition on participants’ behavior in later conditions. One type of carryover effect is a practice effect, where participants perform a task better in later conditions because they have had a chance to practice it. Another type is a fatigue effect, where participants perform a task worse in later conditions because they become tired or bored. Being tested in one condition can also change how participants perceive stimuli or interpret their task in later conditions. This type of effect is called a context effect (or contrast effect). For example, an average-looking defendant might be judged more harshly when participants have just judged an attractive defendant than when they have just judged an unattractive defendant.

Carryover effects can be interesting in their own right. (Does the attractiveness of one person depend on the attractiveness of other people that we have seen recently?) But when they are not the focus of the research, carryover effects can be problematic. Imagine, for example, that participants judge the guilt of an attractive defendant and then judge the guilt of an unattractive defendant. If they judge the unattractive defendant more harshly, this might be because of his unattractiveness. But it could be instead that they judge him more harshly because they are becoming bored or tired. In other words, the order of the conditions is a confounding variable. The attractive condition is always the first condition and the unattractive condition the second. Thus any difference between the conditions in terms of the dependent variable could be caused by the order of the conditions and not the independent variable itself.

There is a solution to the problem of order effects, however, that can be used in many situations. It is counterbalancing, which means testing different participants in different orders. The best method of counterbalancing is complete counterbalancing, in which an equal number of participants complete each possible order of conditions. For example, half of the participants would be tested in the attractive defendant condition followed by the unattractive defendant condition, and the other half would be tested in the unattractive condition followed by the attractive condition. With three conditions, there would be six different orders (ABC, ACB, BAC, BCA, CAB, and CBA), so some participants would be tested in each of the six orders. With four conditions, there would be 24 different orders; with five conditions there would be 120 possible orders. With counterbalancing, participants are assigned to orders randomly, using the techniques we have already discussed. Thus, random assignment plays an important role in within-subjects designs just as in between-subjects designs. Here, instead of being randomly assigned to conditions, participants are randomly assigned to different orders of conditions. In fact, it can safely be said that if a study does not involve random assignment in one form or another, it is not an experiment.
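The order counts quoted above (6, 24, and 120) are simply the number of permutations of the conditions, and complete counterbalancing can be sketched with Python's `itertools.permutations`. The helper name `complete_counterbalancing`, the participant IDs, and the seed are assumptions for the example. The sketch requires the number of participants to be a multiple of the number of orders so that every order is used equally often.

```python
import random
from collections import Counter
from itertools import permutations

def complete_counterbalancing(participants, conditions, seed=None):
    """Randomly assign participants to orders so that every possible
    order of conditions is used equally often. Hypothetical helper."""
    orders = list(permutations(conditions))
    if len(participants) % len(orders) != 0:
        raise ValueError("number of participants must be a multiple of the number of orders")
    rng = random.Random(seed)
    pool = orders * (len(participants) // len(orders))
    rng.shuffle(pool)                       # random assignment to orders
    return dict(zip(participants, pool))

# The number of possible orders grows quickly with the number of conditions.
for k in (3, 4, 5):
    print(k, "conditions:", len(list(permutations(range(k)))), "orders")

participants = [f"P{i}" for i in range(1, 13)]   # 12 made-up participant IDs
assigned = complete_counterbalancing(participants, ["A", "B", "C"], seed=7)
print(Counter(assigned.values()))
```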

A more efficient way of counterbalancing is a Latin square design, in which the number of orders (rows) equals the number of conditions (columns). For example, if you have four treatments, you must have four versions. Like a Sudoku puzzle, no treatment can repeat in a row or column. For four versions of four treatments, the Latin square design would look like:

You can see in the diagram above that the square has been constructed to ensure that each condition appears at each ordinal position (A appears first once, second once, third once, and fourth once) and each condition precedes and follows each other condition one time. A Latin square for an experiment with 6 conditions would be 6 x 6 in dimension, one for an experiment with 8 conditions would be 8 x 8 in dimension, and so on. So while complete counterbalancing of 6 conditions would require 720 orders, a Latin square would only require 6 orders.
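One way to generate a square with both properties described here (each condition once per ordinal position, and each condition immediately preceding and following every other condition once) is the standard Williams construction, which works when the number of conditions is even. The sketch below is an illustration under that assumption; the function name `balanced_latin_square` is hypothetical, and the square it produces is not necessarily the one in the text's diagram.

```python
def balanced_latin_square(conditions):
    """Williams-style balanced Latin square for an even number of conditions.

    Rows are orders of conditions. The first row follows the index pattern
    0, 1, n-1, 2, n-2, ...; each later row shifts every index by one.
    """
    n = len(conditions)
    if n % 2 != 0:
        raise ValueError("this construction assumes an even number of conditions")
    first, low, high = [0], 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    return [[conditions[(idx + shift) % n] for idx in first] for shift in range(n)]

square = balanced_latin_square(["A", "B", "C", "D"])
for row in square:
    print(" ".join(row))
```

Each row is one order of conditions, so four conditions need only four orders rather than the 24 required by complete counterbalancing.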

Finally, when the number of conditions is large, experiments can use random counterbalancing, in which the order of the conditions is randomly determined for each participant. Using this technique, every possible order of conditions is determined and then one of these orders is randomly selected for each participant. This is not as powerful a technique as complete counterbalancing or partial counterbalancing using a Latin square design. Use of random counterbalancing will result in more random error, but if order effects are likely to be small and the number of conditions is large, this is an option available to researchers.
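In code, picking one of all possible orders at random is equivalent to shuffling the conditions separately for each participant, which avoids listing every order when the number of conditions is large. A minimal sketch, with the function name `random_counterbalancing`, the participant IDs, and the seed all assumed for the example:

```python
import random

def random_counterbalancing(participants, conditions, seed=None):
    """Give each participant an independently shuffled order of conditions.

    Unlike complete counterbalancing, orders are not guaranteed to be
    used equally often. Hypothetical helper for illustration.
    """
    rng = random.Random(seed)
    return {p: rng.sample(conditions, len(conditions)) for p in participants}

orders = random_counterbalancing(["P1", "P2", "P3", "P4"], list("ABCDE"), seed=11)
for participant, order in orders.items():
    print(participant, "".join(order))
```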

There are two ways to think about what counterbalancing accomplishes. One is that it controls the order of conditions so that it is no longer a confounding variable. Instead of the attractive condition always being first and the unattractive condition always being second, the attractive condition comes first for some participants and second for others. Likewise, the unattractive condition comes first for some participants and second for others. Thus any overall difference in the dependent variable between the two conditions cannot have been caused by the order of conditions. A second way to think about what counterbalancing accomplishes is that if there are carryover effects, it makes it possible to detect them. One can analyze the data separately for each order to see whether it had an effect.

When 9 Is “Larger” Than 221

Researcher Michael Birnbaum has argued that the lack of context provided by between-subjects designs is often a bigger problem than the context effects created by within-subjects designs. To demonstrate this problem, he asked participants to rate two numbers on how large they were on a scale of 1 to 10, where 1 was “very very small” and 10 was “very very large.” One group of participants was asked to rate the number 9 and another group was asked to rate the number 221 (Birnbaum, 1999) [1]. Participants in this between-subjects design gave the number 9 a mean rating of 5.13 and the number 221 a mean rating of 3.10. In other words, they rated 9 as larger than 221! According to Birnbaum, this difference is because participants spontaneously compared 9 with other one-digit numbers (in which case it is relatively large) and compared 221 with other three-digit numbers (in which case it is relatively small).

Simultaneous Within-Subjects Designs

So far, we have discussed an approach to within-subjects designs in which participants are tested in one condition at a time. There is another approach, however, that is often used when participants make multiple responses in each condition. Imagine, for example, that participants judge the guilt of 10 attractive defendants and 10 unattractive defendants. Instead of having people make judgments about all 10 defendants of one type followed by all 10 defendants of the other type, the researcher could present all 20 defendants in a sequence that mixed the two types. The researcher could then compute each participant’s mean rating for each type of defendant. Or imagine an experiment designed to see whether people with social anxiety disorder remember negative adjectives (e.g., “stupid,” “incompetent”) better than positive ones (e.g., “happy,” “productive”). The researcher could have participants study a single list that includes both kinds of words and then have them try to recall as many words as possible. The researcher could then count the number of each type of word that was recalled.

Between-Subjects or Within-Subjects?

Almost every experiment can be conducted using either a between-subjects design or a within-subjects design. This possibility means that researchers must choose between the two approaches based on their relative merits for the particular situation.

Between-subjects experiments have the advantage of being conceptually simpler and requiring less testing time per participant. They also avoid carryover effects without the need for counterbalancing. Within-subjects experiments have the advantage of controlling extraneous participant variables, which generally reduces noise in the data and makes it easier to detect a relationship between the independent and dependent variables.

A good rule of thumb, then, is that if it is possible to conduct a within-subjects experiment (with proper counterbalancing) in the time that is available per participant—and you have no serious concerns about carryover effects—this design is probably the best option. If a within-subjects design would be difficult or impossible to carry out, then you should consider a between-subjects design instead. For example, if you were testing participants in a doctor’s waiting room or shoppers in line at a grocery store, you might not have enough time to test each participant in all conditions and therefore would opt for a between-subjects design. Or imagine you were trying to reduce people’s level of prejudice by having them interact with someone of another race. A within-subjects design with counterbalancing would require testing some participants in the treatment condition first and then in a control condition. But if the treatment works and reduces people’s level of prejudice, then they would no longer be suitable for testing in the control condition. This difficulty is true for many designs that involve a treatment meant to produce long-term change in participants’ behavior (e.g., studies testing the effectiveness of psychotherapy). Clearly, a between-subjects design would be necessary here.

Remember also that using one type of design does not preclude using the other type in a different study. There is no reason that a researcher could not use both a between-subjects design and a within-subjects design to answer the same research question. In fact, professional researchers often take exactly this type of mixed methods approach.

Key Takeaways

- Experiments can be conducted using either between-subjects or within-subjects designs. Deciding which to use in a particular situation requires careful consideration of the pros and cons of each approach.

- Random assignment to conditions in between-subjects experiments or counterbalancing of orders of conditions in within-subjects experiments is a fundamental element of experimental research. The purpose of these techniques is to control extraneous variables so that they do not become confounding variables.

- You want to test the relative effectiveness of two training programs for running a marathon.

- Using photographs of people as stimuli, you want to see if smiling people are perceived as more intelligent than people who are not smiling.

- In a field experiment, you want to see if the way a panhandler is dressed (neatly vs. sloppily) affects whether or not passersby give him any money.

- You want to see if concrete nouns (e.g., dog ) are recalled better than abstract nouns (e.g., truth).

- Birnbaum, M. H. (1999). How to show that 9 > 221: Collect judgments in a between-subjects design. Psychological Methods, 4(3), 243-249.

1.3: Threats to Internal Validity and Different Control Techniques


- Yang Lydia Yang

- Kansas State University

Internal validity is often the focus from a research design perspective. To understand the pros and cons of various designs and to be able to better judge specific designs, we identify specific threats to internal validity. Before we do so, it is important to note that the primary challenge to establishing internal validity in social sciences is the fact that most of the phenomena we care about have multiple causes and are often a result of some complex set of interactions. For example, X may be only a partial cause of Y or X may cause Y, but only when Z is present. Multiple causation and interactive effects make it very difficult to demonstrate causality. Turning now to more specific threats, Figure 1.3.1 below identifies common threats to internal validity.

Different Control Techniques

All of the common threats mentioned above can introduce extraneous variables into your research design, which will potentially confound your research findings. In other words, we won't be able to tell whether it is the independent variable (i.e., the treatment we give participants) or the extraneous variable that causes the changes in the dependent variable. Controlling for extraneous variables reduces the threat they pose to the research design and gives us a better chance to claim that the independent variable causes the changes in the dependent variable, i.e., to establish internal validity. There are different techniques we can use to control for extraneous variables.

Random assignment

Random assignment is the single most powerful control technique we can use to minimize the potential threats of confounding variables in a research design. As we saw earlier in the study by Dunn and her colleagues, participants are not allowed to self-select into either condition (spend $20 on self or spend on others). Instead, they are randomly assigned to one of the groups by the researcher(s). By doing so, the two groups are likely to be similar on all other factors except the independent variable itself. One confounding variable mentioned earlier is whether individuals had a happy childhood to begin with. Using random assignment, those who had a happy childhood will likely end up in each condition group. Similarly, those who didn't have a happy childhood will likely end up in each condition group too. As a consequence, we can expect the two condition groups to be very similar on this confounding variable. Applying the same logic, we can use random assignment to minimize all potential confounding variables (assuming your sample size is large enough!). The only remaining systematic difference between the two groups is then the condition participants are assigned to, which is the independent variable, so we can be confident in inferring that the independent variable actually causes the differences in the dependent variable.

It is critical to emphasize that random assignment is the only control technique that controls for both known and unknown confounding variables. With all other control techniques mentioned below, we must first know what the confounding variable is before controlling it. Random assignment has no such requirement. With the simple act of randomly assigning participants to different conditions, we take care of both the confounding variables we know of and the ones we don't even know about that could threaten the internal validity of our studies. As the saying goes, "what you don't know will hurt you." Random assignment takes care of it.

Matching

Matching is another technique we can use to control for extraneous variables. We must first identify the extraneous variable that can potentially confound the research design. Then we rank-order the participants on this extraneous variable, listing them in ascending or descending order. Participants who are similar on the extraneous variable are placed into different treatment groups. In other words, they are "matched" on the extraneous variable. Then we can carry out the intervention/treatment as usual. If the treatment groups do show differences on the dependent variable, we know the extraneous variable is not responsible, because participants are "matched," or equivalent, on it. Rather, it is more likely that the independent variable (i.e., the treatment) causes the changes in the dependent variable.

Consider the example above (self-spending vs. others-spending and happiness) with the same extraneous variable: whether individuals had a happy childhood to begin with. Once we identify this extraneous variable, we first need to collect some kind of data from the participants to measure how happy their childhood was. Sometimes data on the extraneous variable may already be available (for example, if you want to examine the effect of different types of tutoring on students' performance in a Calculus I course and you plan to match students on college entrance test scores, those scores have already been collected by the Admissions Office). In either case, getting data on the identified extraneous variable is a typical step before matching.

Returning to childhood happiness: once we have the data, we sort it, for example from the highest score (participants reporting the happiest childhood) to the lowest score (participants reporting the least happy childhood). We then match the participants with the highest levels of childhood happiness and place them into different treatment groups. Next we move down the scale, match participants with relatively high levels of childhood happiness, and place them into different treatment groups. We continue in descending order until we have matched the participants with the lowest levels of childhood happiness and placed them into different treatment groups. By now, each treatment group has participants with a full range of childhood happiness levels (which is a strength in terms of variation and the representativeness of the sample). The two treatment groups will be similar, or equivalent, on this extraneous variable. If the treatments, self-spending vs. other-spending, eventually show differences in individual happiness, then we know the differences are not due to how happy participants' childhoods were. We can be more confident that they are due to the independent variable.

You may be thinking: but wait, we have only taken care of one extraneous variable. What about other extraneous variables? Good thinking. That's exactly correct. We mentioned a few extraneous variables but have only matched participants on one. This is the main limitation of matching. You can match participants on more than one extraneous variable, but it's cumbersome, if not impossible, to match them on 10 or 20 extraneous variables. More importantly, the more variables we try to match participants on, the less likely we are to find a close match. In other words, it may be easy to match participants on one particular extraneous variable (a similar level of childhood happiness), but it's much harder to match participants who are similar on 10 different extraneous variables at once.

Holding Extraneous Variable Constant

The technique of holding an extraneous variable constant is largely self-explanatory: we use participants at only one level of the extraneous variable, in other words, we hold the extraneous variable constant. Using the same example as above, suppose we want to study only participants with a low level of childhood happiness. We need to go through the same steps as in matching: identifying the extraneous variable that can potentially confound the research design and getting data on it. Once we have the childhood happiness scores, we include only participants on the lower end of the scale, then place them into different treatment groups and carry out the study as before. If the condition groups, self-spending vs. other-spending, eventually show differences in individual happiness, then we know the differences are not due to how happy participants' childhoods were (since we selected only those on the lower end of childhood happiness). We can be more confident that they are due to the independent variable.

As with matching, we have to do this one extraneous variable at a time. The more extraneous variables we try to hold constant, the more difficult it gets. The other limitation is that by holding an extraneous variable constant, we exclude a big chunk of participants, in this case anyone who is NOT low on childhood happiness. This is a major weakness: as we reduce the variability on the spectrum of childhood happiness levels, we decrease the representativeness of the sample, and generalizability suffers.

Building Extraneous Variables into Design

The last control technique, building extraneous variables into the research design, is widely used. As the name suggests, we identify the extraneous variable that can potentially confound the research design and include it in the design by treating it as an independent variable. This control technique addresses the limitation of the previous technique, holding an extraneous variable constant. We don't need to exclude participants based on where they stand on the extraneous variable(s). Instead, we can include participants with a wide range of levels on the extraneous variable(s). You can include multiple extraneous variables in the design at once. However, the more variables you include in the design, the larger the sample size required for statistical analyses, which may be difficult to obtain due to limitations of time, staff, cost, access, etc.

Random Assignment in Psychology: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

In psychology, random assignment refers to the practice of allocating participants to different experimental groups in a study in a completely unbiased way, ensuring each participant has an equal chance of being assigned to any group.

In experimental research, random assignment, or random placement, organizes participants from your sample into different groups using randomization.

Random assignment uses chance procedures to ensure that each participant has an equal opportunity of being assigned to either a control or experimental group.

The control group does not receive the treatment in question, whereas the experimental group does receive the treatment.

When using random assignment, neither the researcher nor the participant can choose the group to which the participant is assigned. This ensures that any differences between and within the groups are not systematic at the onset of the study.

In a study to test the success of a weight-loss program, investigators randomly assigned a pool of participants to one of two groups.

Group A participants participated in the weight-loss program for 10 weeks and took a class where they learned about the benefits of healthy eating and exercise.

Group B participants read a 200-page book that explains the benefits of weight loss.

The researchers found that those who participated in the program and took the class were more likely to lose weight than those in the other group that received only the book.

Importance

Random assignment makes the groups in an experiment comparable, on average, before the independent variable is applied.

In experiments, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. Random assignment increases the likelihood that the treatment groups are the same at the onset of a study.

Thus, any changes that result from the independent variable can be assumed to be a result of the treatment of interest. This is particularly important for eliminating sources of bias and strengthening the internal validity of an experiment.

Random assignment is the best method for inferring a causal relationship between a treatment and an outcome.

Random Selection vs. Random Assignment

Random selection (also called probability sampling or random sampling) is a way of randomly selecting members of a population to be included in your study.

On the other hand, random assignment is a way of sorting the sample participants into control and treatment groups.

Random selection ensures that everyone in the population has an equal chance of being selected for the study. Once the pool of participants has been chosen, experimenters use random assignment to assign participants into groups.

Random assignment to conditions is used in between-subjects experimental designs, while random selection can be used in a variety of study designs.

Random Assignment vs Random Sampling

Random sampling refers to selecting participants from a population so that each individual has an equal chance of being chosen. This method enhances the representativeness of the sample.

Random assignment, on the other hand, is used in experimental designs once participants are selected. It involves allocating these participants to different experimental groups or conditions randomly.

This helps ensure that any differences in results across groups are due to manipulating the independent variable, not preexisting differences among participants.

When to Use Random Assignment

Random assignment is used in experiments with a between-groups or independent measures design.

In these research designs, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables.

There is usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable at the onset of the study.

How to Use Random Assignment

There are a variety of ways to assign participants into study groups randomly. Here are a handful of popular methods:

- Random Number Generator: Give each member of the sample a unique number; use a computer program to randomly generate a number from the list for each group.

- Lottery: Give each member of the sample a unique number. Place all numbers in a hat or bucket and draw numbers at random for each group.

- Flipping a Coin: Flip a coin for each participant to decide if they will be in the control group or experimental group (this method can only be used when you have just two groups).

- Roll a Die: For each number on the list, roll a die to decide which of the groups they will be in. For example, assume that rolling 1, 2, or 3 places them in a control group and rolling 4, 5, or 6 places them in an experimental group.
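The lottery method can be imitated on a computer by shuffling the list of participants and dealing them out to groups in turn. The following is a minimal sketch rather than a prescribed procedure: the function name `lottery_assign`, the participant IDs, and the group labels are hypothetical, and the seed is there only to make the example reproducible.

```python
import random

def lottery_assign(participants, groups, seed=None):
    """Shuffle the participants (the 'draw from a hat') and deal them out to the groups in turn."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for i, person in enumerate(shuffled):
        assignment[groups[i % len(groups)]].append(person)
    return assignment

# Twenty hypothetical participants, two groups
participants = [f"P{n:02d}" for n in range(1, 21)]
result = lottery_assign(participants, ["control", "experimental"], seed=1)
for group, members in result.items():
    print(group, len(members), members)
```

Unlike flipping a coin for each participant, dealing from a shuffled list always yields groups of equal (or nearly equal) size.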

When is Random Assignment not used?

- When it is not ethically permissible: Randomization is only ethical if the researcher has no evidence that one treatment is superior to the other or that one treatment might have harmful side effects.

- When answering non-causal questions: If the researcher is just interested in predicting the probability of an event, the causal relationship between the variables is not important and observational designs would be more suitable than random assignment.

- When studying the effect of variables that cannot be manipulated: Some risk factors cannot be manipulated and so it would not make any sense to study them in a randomized trial. For example, we cannot randomly assign participants into categories based on age, gender, or genetic factors.

Drawbacks of Random Assignment

While randomization assures an unbiased assignment of participants to groups, it does not guarantee the equality of these groups. Extraneous variables may still differ between groups, and some group differences will arise purely by chance.

Thus, researchers cannot produce perfectly equal groups in any specific study. Differences between the treatment group and control group might still exist, and the results of a single randomized trial may sometimes be wrong. This is an accepted limitation.

Building scientific evidence is a long and continuous process, and the groups will tend to be equal in the long run when data are aggregated in a meta-analysis.

Additionally, external validity (i.e., the extent to which the researcher can use the results of the study to generalize to the larger population) can be limited in studies that use random assignment.

Random assignment is challenging to implement outside of controlled laboratory conditions and might not represent what would happen in the real world at the population level.

Random assignment can also be more costly than simple observational studies, where an investigator is just observing events without intervening with the population.

Randomization also can be time-consuming and challenging, especially when participants refuse to receive the assigned treatment or do not adhere to recommendations.

What is the difference between random sampling and random assignment?

Random sampling refers to randomly selecting a sample of participants from a population. Random assignment refers to randomly assigning participants to treatment groups from the selected sample.

Does random assignment increase internal validity?

Yes, random assignment ensures that there are no systematic differences between the participants in each group, enhancing the study’s internal validity.

Does random assignment reduce sampling error?

Not directly. With random assignment, participants have an equal chance of being assigned to either a control group or an experimental group, which makes the groups comparable to one another. It does not make the sample more representative of the population.

Sampling error arises because a sample only approximates the population from which it is drawn. Random sampling, rather than random assignment, is the way to minimize sampling error.

When is random assignment not possible?

Random assignment is not possible when the experimenters cannot control the treatment or independent variable.

For example, if you want to compare how men and women perform on a test, you cannot randomly assign subjects to these groups.

Participants are not randomly assigned to different groups in this study, but instead assigned based on their characteristics.

Does random assignment eliminate confounding variables?

In principle, yes. Random assignment removes any systematic influence of confounding variables on the treatment because it distributes them at random among the study groups. Randomization breaks any systematic relationship between a confounding variable and the treatment, although chance differences between groups can remain in any single study.

Why is random assignment of participants to treatment conditions in an experiment used?

Random assignment is used to ensure that all groups are comparable at the start of a study. This allows researchers to conclude that the outcomes of the study can be attributed to the intervention at hand and to rule out alternative explanations for study results.

Chapter 6: Experimental Research

6.2 Experimental Design

Learning objectives.

- Explain the difference between between-subjects and within-subjects experiments, list some of the pros and cons of each approach, and decide which approach to use to answer a particular research question.

- Define random assignment, distinguish it from random sampling, explain its purpose in experimental research, and use some simple strategies to implement it.

- Define what a control condition is, explain its purpose in research on treatment effectiveness, and describe some alternative types of control conditions.

- Define several types of carryover effect, give examples of each, and explain how counterbalancing helps to deal with them.

In this section, we look at some different ways to design an experiment. The primary distinction we will make is between approaches in which each participant experiences one level of the independent variable and approaches in which each participant experiences all levels of the independent variable. The former are called between-subjects experiments and the latter are called within-subjects experiments.

Between-Subjects Experiments

In a between-subjects experiment, each participant is tested in only one condition. For example, a researcher with a sample of 100 college students might assign half of them to write about a traumatic event and the other half to write about a neutral event. Or a researcher with a sample of 60 people with severe agoraphobia (fear of open spaces) might assign 20 of them to receive each of three different treatments for that disorder. It is essential in a between-subjects experiment that the researcher assign participants to conditions so that the different groups are, on average, highly similar to each other. Those in a trauma condition and a neutral condition, for example, should include a similar proportion of men and women, and they should have similar average intelligence quotients (IQs), similar average levels of motivation, similar average numbers of health problems, and so on. This is a matter of controlling these extraneous participant variables across conditions so that they do not become confounding variables.

Random Assignment

The primary way that researchers accomplish this kind of control of extraneous variables across conditions is called random assignment, which means using a random process to decide which participants are tested in which conditions. Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and it is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other fields too.

In its strictest sense, random assignment should meet two criteria. One is that each participant has an equal chance of being assigned to each condition (e.g., a 50% chance of being assigned to each of two conditions). The second is that each participant is assigned to a condition independently of other participants. Thus one way to assign participants to two conditions would be to flip a coin for each one. If the coin lands heads, the participant is assigned to Condition A, and if it lands tails, the participant is assigned to Condition B. For three conditions, one could use a computer to generate a random integer from 1 to 3 for each participant. If the integer is 1, the participant is assigned to Condition A; if it is 2, the participant is assigned to Condition B; and if it is 3, the participant is assigned to Condition C. In practice, a full sequence of conditions—one for each participant expected to be in the experiment—is usually created ahead of time, and each new participant is assigned to the next condition in the sequence as he or she is tested. When the procedure is computerized, the computer program often handles the random assignment.
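The strict procedure just described, in which a full sequence of conditions is created ahead of time, can be sketched as follows. The function name `simple_random_sequence`, the sample size of 30, and the seed are illustrative assumptions; each draw is made independently and with equal probability, matching the two criteria above.

```python
import random
from collections import Counter

def simple_random_sequence(n_participants, conditions, seed=None):
    """Draw one condition per expected participant, independently and with equal probability."""
    rng = random.Random(seed)
    return [rng.choice(conditions) for _ in range(n_participants)]

# Sequence prepared in advance; participant k is given sequence[k - 1] on arrival
sequence = simple_random_sequence(30, ["A", "B", "C"], seed=42)
print(sequence)
# Tally of how many participants fall in each condition
print(Counter(sequence))
```

Because every draw is independent, nothing forces the three tallies to be equal.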

One problem with coin flipping and other strict procedures for random assignment is that they are likely to result in unequal sample sizes in the different conditions. Unequal sample sizes are generally not a serious problem, and you should never throw away data you have already collected to achieve equal sample sizes. However, for a fixed number of participants, it is statistically most efficient to divide them into equal-sized groups. It is standard practice, therefore, to use a kind of modified random assignment that keeps the number of participants in each group as similar as possible. One approach is block randomization. In block randomization, all the conditions occur once in the sequence before any of them is repeated. Then they all occur again before any of them is repeated again. Within each of these “blocks,” the conditions occur in a random order. Again, the sequence of conditions is usually generated before any participants are tested, and each new participant is assigned to the next condition in the sequence. Table 6.2 “Block Randomization Sequence for Assigning Nine Participants to Three Conditions” shows such a sequence for assigning nine participants to three conditions. The Research Randomizer website (http://www.randomizer.org) will generate block randomization sequences for any number of participants and conditions. Again, when the procedure is computerized, the computer program often handles the block randomization.

Table 6.2 Block Randomization Sequence for Assigning Nine Participants to Three Conditions
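A block randomization sequence of this kind can also be generated with a short script. The sketch below is one possible implementation, not the method used by any particular tool: the function name `block_randomization` and the seed are assumptions, and the sequence it prints is freshly generated rather than a reproduction of the table.

```python
import random

def block_randomization(n_participants, conditions, seed=None):
    """Build a sequence in which every condition occurs once, in random order, within each block."""
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        block = list(conditions)
        rng.shuffle(block)        # random order within this block
        sequence.extend(block)
    # Trim in case n_participants is not a multiple of the number of conditions
    return sequence[:n_participants]

# Nine participants, three conditions: three blocks of three
sequence = block_randomization(9, ["A", "B", "C"], seed=7)
for start in range(0, len(sequence), 3):
    print(sequence[start:start + 3])
```

Each printed row is one block, so the group sizes can never differ by more than one at any point during data collection.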

Random assignment is not guaranteed to control all extraneous variables across conditions. It is always possible that just by chance, the participants in one condition might turn out to be substantially older, less tired, more motivated, or less depressed on average than the participants in another condition. However, there are some reasons that this is not a major concern. One is that random assignment works better than one might expect, especially for large samples. Another is that the inferential statistics that researchers use to decide whether a difference between groups reflects a difference in the population take the “fallibility” of random assignment into account. Yet another reason is that even if random assignment does result in a confounding variable and therefore produces misleading results, this is likely to be detected when the experiment is replicated. The upshot is that random assignment to conditions, although not infallible in terms of controlling extraneous variables, is always considered a strength of a research design.
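The claim that random assignment works better for large samples can be checked with a small simulation. Everything specific here is an assumption made for illustration: ages are simulated from a normal distribution with a mean of 40 and a standard deviation of 12, participants are split into two equal groups, and the function name `average_age_gap` is hypothetical.

```python
import random

def average_age_gap(n_participants, n_replications=1000, seed=0):
    """Average absolute difference in mean age between two randomly formed groups."""
    rng = random.Random(seed)
    half = n_participants // 2
    total_gap = 0.0
    for _ in range(n_replications):
        # Simulated ages (assumed distribution, not real data)
        ages = [rng.gauss(40, 12) for _ in range(n_participants)]
        rng.shuffle(ages)  # random assignment: first half to one condition, rest to the other
        mean_a = sum(ages[:half]) / half
        mean_b = sum(ages[half:]) / (n_participants - half)
        total_gap += abs(mean_a - mean_b)
    return total_gap / n_replications

for n in (20, 200):
    print(n, round(average_age_gap(n), 2))
```

The typical chance difference between groups shrinks roughly in proportion to the square root of the sample size, so larger samples leave less room for an accidental confound.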

Treatment and Control Conditions

Between-subjects experiments are often used to determine whether a treatment works. In psychological research, a treatment is any intervention meant to change people’s behavior for the better. This includes psychotherapies and medical treatments for psychological disorders but also interventions designed to improve learning, promote conservation, reduce prejudice, and so on. To determine whether a treatment works, participants are randomly assigned to either a treatment condition, in which they receive the treatment, or a control condition, in which they do not receive the treatment. If participants in the treatment condition end up better off than participants in the control condition (for example, they are less depressed, learn faster, conserve more, express less prejudice), then the researcher can conclude that the treatment works. In research on the effectiveness of psychotherapies and medical treatments, this type of experiment is often called a randomized clinical trial.

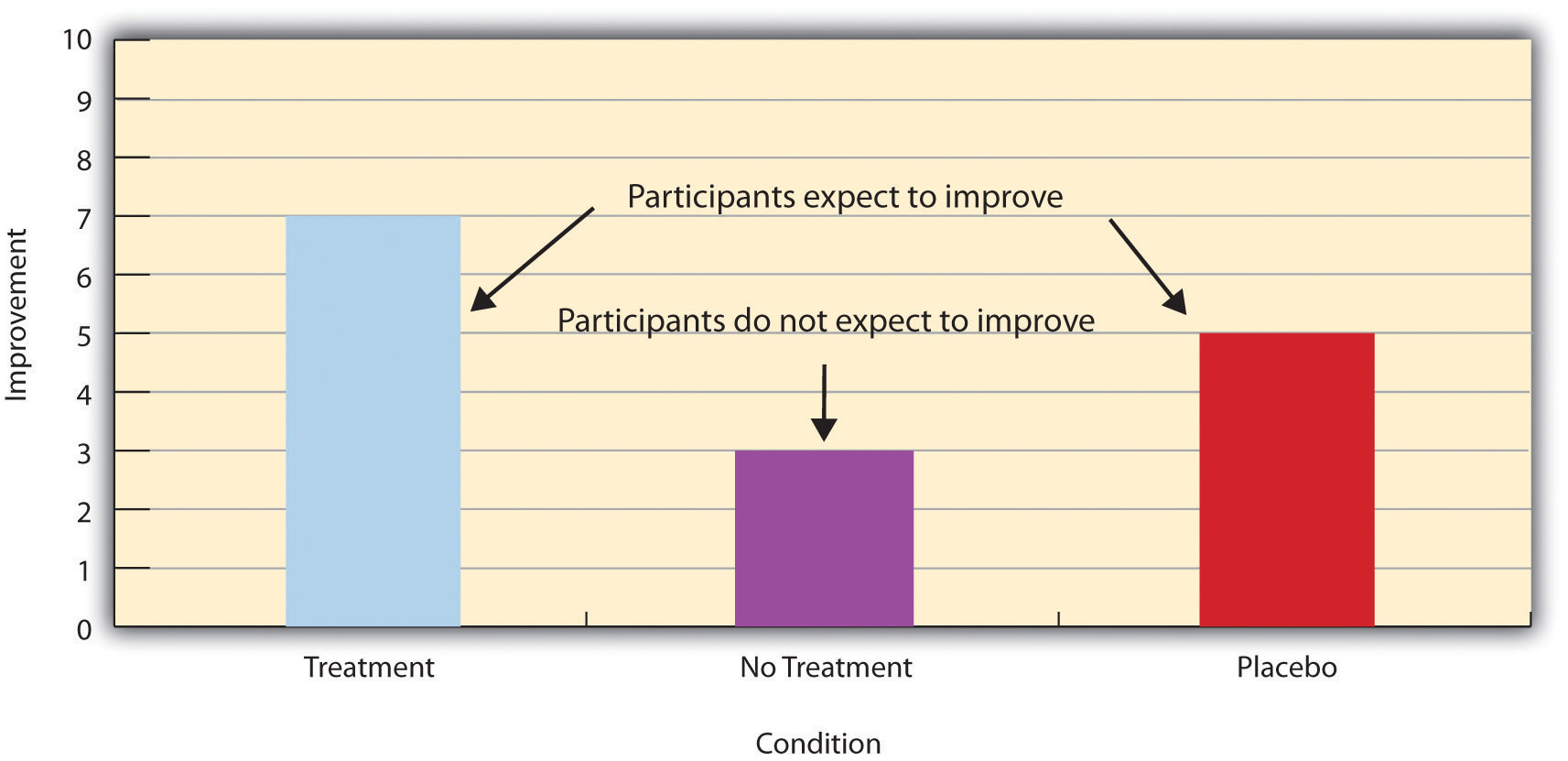

There are different types of control conditions. In a no-treatment control condition, participants receive no treatment whatsoever. One problem with this approach, however, is the existence of placebo effects. A placebo is a simulated treatment that lacks any active ingredient or element that should make it effective, and a placebo effect is a positive effect of such a treatment. Many folk remedies that seem to work, such as eating chicken soup for a cold or placing soap under the bedsheets to stop nighttime leg cramps, are probably nothing more than placebos. Although placebo effects are not well understood, they are probably driven primarily by people’s expectations that they will improve. Having the expectation to improve can result in reduced stress, anxiety, and depression, which can alter perceptions and even improve immune system functioning (Price, Finniss, & Benedetti, 2008).

Placebo effects are interesting in their own right (see Note 6.28 “The Powerful Placebo”), but they also pose a serious problem for researchers who want to determine whether a treatment works. Figure 6.2 “Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions” shows some hypothetical results in which participants in a treatment condition improved more on average than participants in a no-treatment control condition. If these conditions (the two leftmost bars in Figure 6.2 “Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions”) were the only conditions in this experiment, however, one could not conclude that the treatment worked. It could be instead that participants in the treatment group improved more because they expected to improve, while those in the no-treatment control condition did not.

Figure 6.2 Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions

Fortunately, there are several solutions to this problem. One is to include a placebo control condition, in which participants receive a placebo that looks much like the treatment but lacks the active ingredient or element thought to be responsible for the treatment’s effectiveness. When participants in a treatment condition take a pill, for example, then those in a placebo control condition would take an identical-looking pill that lacks the active ingredient in the treatment (a “sugar pill”). In research on psychotherapy effectiveness, the placebo might involve going to a psychotherapist and talking in an unstructured way about one’s problems. The idea is that if participants in both the treatment and the placebo control groups expect to improve, then any improvement in the treatment group over and above that in the placebo control group must have been caused by the treatment and not by participants’ expectations. This is what is shown by a comparison of the two outer bars in Figure 6.2 “Hypothetical Results From a Study Including Treatment, No-Treatment, and Placebo Conditions”.

Of course, the principle of informed consent requires that participants be told that they will be assigned to either a treatment or a placebo control condition, even though they cannot be told which until the experiment ends. In many cases the participants who had been in the control condition are then offered an opportunity to have the real treatment. An alternative approach is to use a waitlist control condition, in which participants are told that they will receive the treatment but must wait until the participants in the treatment condition have already received it. This allows researchers to compare participants who have received the treatment with participants who are not currently receiving it but who still expect to improve (eventually). A final solution to the problem of placebo effects is to leave out the control condition completely and compare any new treatment with the best available alternative treatment. For example, a new treatment for simple phobia could be compared with standard exposure therapy. Because participants in both conditions receive a treatment, their expectations about improvement should be similar. This approach also makes sense because once there is an effective treatment, the interesting question about a new treatment is not simply “Does it work?” but “Does it work better than what is already available?”

The Powerful Placebo

Many people are not surprised that placebos can have a positive effect on disorders that seem fundamentally psychological, including depression, anxiety, and insomnia. However, placebos can also have a positive effect on disorders that most people think of as fundamentally physiological. These include asthma, ulcers, and warts (Shapiro & Shapiro, 1999). There is even evidence that placebo surgery—also called “sham surgery”—can be as effective as actual surgery.

Medical researcher J. Bruce Moseley and his colleagues conducted a study on the effectiveness of two arthroscopic surgery procedures for osteoarthritis of the knee (Moseley et al., 2002). The control participants in this study were prepped for surgery, received a tranquilizer, and even received three small incisions in their knees. But they did not receive the actual arthroscopic surgical procedure. The surprising result was that all participants improved in terms of both knee pain and function, and the sham surgery group improved just as much as the treatment groups. According to the researchers, “This study provides strong evidence that arthroscopic lavage with or without débridement [the surgical procedures used] is not better than and appears to be equivalent to a placebo procedure in improving knee pain and self-reported function” (p. 85).

Research has shown that patients with osteoarthritis of the knee who receive a “sham surgery” experience reductions in pain and improvement in knee function similar to those of patients who receive a real surgery.

Army Medicine – Surgery – CC BY 2.0.

Within-Subjects Experiments

In a within-subjects experiment, each participant is tested under all conditions. Consider an experiment on the effect of a defendant’s physical attractiveness on judgments of his guilt. Again, in a between-subjects experiment, one group of participants would be shown an attractive defendant and asked to judge his guilt, and another group of participants would be shown an unattractive defendant and asked to judge his guilt. In a within-subjects experiment, however, the same group of participants would judge the guilt of both an attractive and an unattractive defendant.

The primary advantage of this approach is that it provides maximum control of extraneous participant variables. Participants in all conditions have the same mean IQ, same socioeconomic status, same number of siblings, and so on—because they are the very same people. Within-subjects experiments also make it possible to use statistical procedures that remove the effect of these extraneous participant variables on the dependent variable and therefore make the data less “noisy” and the effect of the independent variable easier to detect. We will look more closely at this idea later in the book.

Carryover Effects and Counterbalancing

The primary disadvantage of within-subjects designs is that they can result in carryover effects. A carryover effect is an effect of being tested in one condition on participants’ behavior in later conditions. One type of carryover effect is a practice effect, where participants perform a task better in later conditions because they have had a chance to practice it. Another type is a fatigue effect, where participants perform a task worse in later conditions because they become tired or bored. Being tested in one condition can also change how participants perceive stimuli or interpret their task in later conditions. This is called a context effect. For example, an average-looking defendant might be judged more harshly when participants have just judged an attractive defendant than when they have just judged an unattractive defendant. Within-subjects experiments also make it easier for participants to guess the hypothesis. For example, a participant who is asked to judge the guilt of an attractive defendant and then is asked to judge the guilt of an unattractive defendant is likely to guess that the hypothesis is that defendant attractiveness affects judgments of guilt. This could lead the participant to judge the unattractive defendant more harshly because he thinks this is what he is expected to do. Or it could make participants judge the two defendants similarly in an effort to be “fair.”

Carryover effects can be interesting in their own right. (Does the attractiveness of one person depend on the attractiveness of other people that we have seen recently?) But when they are not the focus of the research, carryover effects can be problematic. Imagine, for example, that participants judge the guilt of an attractive defendant and then judge the guilt of an unattractive defendant. If they judge the unattractive defendant more harshly, this might be because of his unattractiveness. But it could be instead that they judge him more harshly because they are becoming bored or tired. In other words, the order of the conditions is a confounding variable. The attractive condition is always the first condition and the unattractive condition the second. Thus any difference between the conditions in terms of the dependent variable could be caused by the order of the conditions and not the independent variable itself.

There is a solution to the problem of order effects, however, that can be used in many situations. It is counterbalancing, which means testing different participants in different orders. For example, some participants would be tested in the attractive defendant condition followed by the unattractive defendant condition, and others would be tested in the unattractive condition followed by the attractive condition. With three conditions, there would be six different orders (ABC, ACB, BAC, BCA, CAB, and CBA), so some participants would be tested in each of the six orders. With counterbalancing, participants are assigned to orders randomly, using the techniques we have already discussed. Thus random assignment plays an important role in within-subjects designs just as in between-subjects designs. Here, instead of being randomly assigned to conditions, participants are randomly assigned to different orders of conditions. In fact, it can safely be said that if a study does not involve random assignment in one form or another, it is not an experiment.
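Counterbalancing with random assignment to orders can be sketched in a few lines. The function name `counterbalanced_orders`, the twelve participant IDs, and the seed are hypothetical; the sketch enumerates every possible order and then deals shuffled participants across those orders so that each order is used equally often.

```python
import itertools
import random
from collections import Counter

def counterbalanced_orders(participants, conditions, seed=None):
    """Randomly assign participants to the possible orders of conditions, using each order equally often."""
    rng = random.Random(seed)
    orders = list(itertools.permutations(conditions))  # 3 conditions give 6 orders
    shuffled = list(participants)
    rng.shuffle(shuffled)
    return {person: orders[i % len(orders)] for i, person in enumerate(shuffled)}

participants = [f"P{n:02d}" for n in range(1, 13)]
assignment = counterbalanced_orders(participants, ["A", "B", "C"], seed=5)
for person in sorted(assignment):
    print(person, "".join(assignment[person]))
# How many participants received each order
print(Counter("".join(order) for order in assignment.values()))
```

With twelve participants and six orders, each order is used by exactly two participants; which two is decided by the shuffle.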

There are two ways to think about what counterbalancing accomplishes. One is that it controls the order of conditions so that it is no longer a confounding variable. Instead of the attractive condition always being first and the unattractive condition always being second, the attractive condition comes first for some participants and second for others. Likewise, the unattractive condition comes first for some participants and second for others. Thus any overall difference in the dependent variable between the two conditions cannot have been caused by the order of conditions. A second way to think about what counterbalancing accomplishes is that if there are carryover effects, it makes it possible to detect them. One can analyze the data separately for each order to see whether it had an effect.

When 9 Is “Larger” Than 221

Researcher Michael Birnbaum has argued that the lack of context provided by between-subjects designs is often a bigger problem than the context effects created by within-subjects designs. To demonstrate this, he asked one group of participants to rate how large the number 9 was on a 1-to-10 rating scale and another group to rate how large the number 221 was on the same 1-to-10 rating scale (Birnbaum, 1999). Participants in this between-subjects design gave the number 9 a mean rating of 5.13 and the number 221 a mean rating of 3.10. In other words, they rated 9 as larger than 221! According to Birnbaum, this is because participants spontaneously compared 9 with other one-digit numbers (in which case it is relatively large) and compared 221 with other three-digit numbers (in which case it is relatively small).

Simultaneous Within-Subjects Designs

So far, we have discussed an approach to within-subjects designs in which participants are tested in one condition at a time. There is another approach, however, that is often used when participants make multiple responses in each condition. Imagine, for example, that participants judge the guilt of 10 attractive defendants and 10 unattractive defendants. Instead of having people make judgments about all 10 defendants of one type followed by all 10 defendants of the other type, the researcher could present all 20 defendants in a sequence that mixed the two types. The researcher could then compute each participant’s mean rating for each type of defendant. Or imagine an experiment designed to see whether people with social anxiety disorder remember negative adjectives (e.g., “stupid,” “incompetent”) better than positive ones (e.g., “happy,” “productive”). The researcher could have participants study a single list that includes both kinds of words and then have them try to recall as many words as possible. The researcher could then count the number of each type of word that was recalled. There are many ways to determine the order in which the stimuli are presented, but one common way is to generate a different random order for each participant.
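The mixed-sequence approach can be illustrated with a short script that generates a different random presentation order and then computes a mean rating for each type of defendant. The function names `mixed_order` and `mean_rating_by_type` are hypothetical, and the ratings are random placeholders on an assumed 1-7 scale, included only so the code has something to average.

```python
import random
from statistics import mean

def mixed_order(stimuli, rng):
    """Return the stimuli in a fresh random order, mixing the two types together."""
    order = list(stimuli)
    rng.shuffle(order)
    return order

def mean_rating_by_type(responses):
    """Average the ratings separately for each stimulus type."""
    by_type = {}
    for stimulus_type, rating in responses:
        by_type.setdefault(stimulus_type, []).append(rating)
    return {stimulus_type: mean(ratings) for stimulus_type, ratings in by_type.items()}

rng = random.Random(3)
# Ten defendants of each type, identified by type and number
stimuli = [("attractive", i) for i in range(1, 11)] + [("unattractive", i) for i in range(1, 11)]
order = mixed_order(stimuli, rng)

# Placeholder ratings, generated at random purely to exercise the code (not real data)
responses = [(stimulus_type, rng.randint(1, 7)) for stimulus_type, _ in order]
print(order)
print(mean_rating_by_type(responses))
```

Calling `mixed_order` once per participant gives each person their own random sequence, which is the common approach mentioned above.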

Between-Subjects or Within-Subjects?

Almost every experiment can be conducted using either a between-subjects design or a within-subjects design. This means that researchers must choose between the two approaches based on their relative merits for the particular situation.

Between-subjects experiments have the advantage of being conceptually simpler and requiring less testing time per participant. They also avoid carryover effects without the need for counterbalancing. Within-subjects experiments have the advantage of controlling extraneous participant variables, which generally reduces noise in the data and makes it easier to detect a relationship between the independent and dependent variables.

A good rule of thumb, then, is that if it is possible to conduct a within-subjects experiment (with proper counterbalancing) in the time that is available per participant—and you have no serious concerns about carryover effects—this is probably the best option. If a within-subjects design would be difficult or impossible to carry out, then you should consider a between-subjects design instead. For example, if you were testing participants in a doctor’s waiting room or shoppers in line at a grocery store, you might not have enough time to test each participant in all conditions and therefore would opt for a between-subjects design. Or imagine you were trying to reduce people’s level of prejudice by having them interact with someone of another race. A within-subjects design with counterbalancing would require testing some participants in the treatment condition first and then in a control condition. But if the treatment works and reduces people’s level of prejudice, then they would no longer be suitable for testing in the control condition. This is true for many designs that involve a treatment meant to produce long-term change in participants’ behavior (e.g., studies testing the effectiveness of psychotherapy). Clearly, a between-subjects design would be necessary here.

Remember also that using one type of design does not preclude using the other type in a different study. There is no reason that a researcher could not use both a between-subjects design and a within-subjects design to answer the same research question. In fact, professional researchers often do exactly this.

Key Takeaways

- Experiments can be conducted using either between-subjects or within-subjects designs. Deciding which to use in a particular situation requires careful consideration of the pros and cons of each approach.

- Random assignment to conditions in between-subjects experiments or to orders of conditions in within-subjects experiments is a fundamental element of experimental research. Its purpose is to control extraneous variables so that they do not become confounding variables.

- Experimental research on the effectiveness of a treatment requires both a treatment condition and a control condition, which can be a no-treatment control condition, a placebo control condition, or a waitlist control condition. Experimental treatments can also be compared with the best available alternative.

Discussion: For each of the following topics, list the pros and cons of a between-subjects and within-subjects design and decide which would be better.

- You want to test the relative effectiveness of two training programs for running a marathon.

- Using photographs of people as stimuli, you want to see if smiling people are perceived as more intelligent than people who are not smiling.

- In a field experiment, you want to see if the way a panhandler is dressed (neatly vs. sloppily) affects whether or not passersby give him any money.

- You want to see if concrete nouns (e.g., dog) are recalled better than abstract nouns (e.g., truth).

Discussion: Imagine that an experiment shows that participants who receive psychodynamic therapy for a dog phobia improve more than participants in a no-treatment control group. Explain a fundamental problem with this research design and at least two ways that it might be corrected.

Birnbaum, M. H. (1999). How to show that 9 > 221: Collect judgments in a between-subjects design. Psychological Methods, 4, 243–249.

Moseley, J. B., O’Malley, K., Petersen, N. J., Menke, T. J., Brody, B. A., Kuykendall, D. H., … Wray, N. P. (2002). A controlled trial of arthroscopic surgery for osteoarthritis of the knee. The New England Journal of Medicine, 347, 81–88.

Price, D. D., Finniss, D. G., & Benedetti, F. (2008). A comprehensive review of the placebo effect: Recent advances and current thought. Annual Review of Psychology, 59, 565–590.

Shapiro, A. K., & Shapiro, E. (1999). The powerful placebo: From ancient priest to modern physician. Baltimore, MD: Johns Hopkins University Press.

- Research Methods in Psychology. Provided by: University of Minnesota Libraries Publishing. Located at: http://open.lib.umn.edu/psychologyresearchmethods. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

Matching and Randomization in Experiments

Thoughts on a classic paper on causality.

Jeremy Salfen

I recently read Donald Rubin’s classic paper Estimating Causal Effects of Treatments in Randomized and Nonrandomized Studies (PDF) as part of the Kickstarter Data team’s reading group.

Two arguments in this paper jumped out at me, the first about the value of matching and the second about the costs and benefits of conducting a randomized versus observational study.

Rubin proposes a hypothetical experiment on 2N units in which the experimental treatment E is assigned to N units while the control treatment C is assigned to a different set of N units. If for every unit receiving E there is a matched unit receiving C such that we expect the pair to react identically to the same treatment, then there are N identically matched pairs. Rubin observes that

if one had N identically matched pairs, a “thoughtless” random assignment could be worse than a nonrandom assignment of E to one member of the pair and C to the other. By “thoughtless” we mean some random assignment that does not assure that the members of each matched pair get different treatments. (692)

In this case, “thoughtless” randomization “could be worse” in the sense that the statistical power of the experiment will suffer, and you will be less likely to detect an effect if there really is one.

Geography is a good example of this. If you’re running an experiment on users across the United States, matching similar geographic regions might be more effective than a completely randomized trial because the variation between regions might be higher than the variation within regions, and that extra between-region noise can drown out the effect you are trying to measure.

Of course, a multilevel model that takes into account region is one solution. For an insightful description of how Google has approached this problem, see Estimating causal effects using geo experiments .
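To make the power argument concrete, here is a minimal simulation sketch. It is not from Rubin's paper: the function name, the baseline spread (SD 3), the within-pair noise (SD 0.5), and the true effect (1.0) are all assumptions chosen for illustration. It compares how much the estimated effect bounces around under pair-respecting assignment versus "thoughtless" complete randomization.

```python
import random
import statistics


def compare_assignment_schemes(n_pairs=20, effect=1.0, n_reps=2000, seed=0):
    """Return (sd_paired, sd_thoughtless): the spread of the estimated
    effect across simulated experiments under each assignment scheme.
    All numeric settings are illustrative assumptions."""
    rng = random.Random(seed)
    paired_estimates, thoughtless_estimates = [], []
    for _ in range(n_reps):
        # Each pair shares a baseline (think: region); members differ slightly.
        baselines = [rng.gauss(0, 3) for _ in range(n_pairs)]
        pairs = [(b + rng.gauss(0, 0.5), b + rng.gauss(0, 0.5)) for b in baselines]

        # Pair-respecting assignment: a coin flip decides which member gets E.
        diffs = []
        for first, second in pairs:
            if rng.random() < 0.5:
                first, second = second, first
            diffs.append((first + effect) - second)
        paired_estimates.append(statistics.fmean(diffs))

        # "Thoughtless" assignment: shuffle all 2N units, first N get E.
        units = [u for pair in pairs for u in pair]
        rng.shuffle(units)
        treated = [u + effect for u in units[:n_pairs]]
        control = units[n_pairs:]
        thoughtless_estimates.append(
            statistics.fmean(treated) - statistics.fmean(control)
        )
    return statistics.stdev(paired_estimates), statistics.stdev(thoughtless_estimates)


sd_paired, sd_thoughtless = compare_assignment_schemes()
print(f"SD of estimate, paired:      {sd_paired:.3f}")
print(f"SD of estimate, thoughtless: {sd_thoughtless:.3f}")
```

Under these assumptions the paired estimate should vary much less from run to run, which is what "more statistical power" means here. The gap shrinks as the between-pair spread approaches the within-pair noise.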

Randomized vs. Observational Studies

Another one of Rubin’s claims that stood out to me is a comparison of the costs and benefits of typical randomized and observational studies.

One major advantage of randomized studies is that, with a large enough sample size, you often don’t have to worry about controlling for confounding factors that might bias your results.

There can be downsides to randomized studies though. They can have nontrivial setup costs, and running a randomized study over a long window (e.g. years) is often not feasible. Moreover, because randomized trials are often conducted in a controlled environment, Rubin claims that they tend to be less natural than an observational study — that is, the units of analysis are often constrained to a particular setting or selected to be a subset of the population of interest.

Granted, this is more the case for experiments in fields like psychology than in online experiments on the web, but it does suggest that generalizability is a factor we should consider when interpreting the results of a randomized trial.

Comparing these costs and benefits, Rubin argues that

the first issue, the effect of variables not explicitly controlled, is usually more serious in nonrandomized than in randomized studies, while the second, the applicability of the results to a population of interest, is often more serious in randomized than in nonrandomized studies. (698)

I take this as a reminder to think carefully about the generalizability of the results of an experiment. When we run experiments on specific parts of a website or on particular subsets of users, often our goal is to generalize these results to the entire website or to all users. Rubin reminds us that observational studies, when analyzed properly, may in fact be better suited to those kinds of claims, particularly when matching can be used.

Effect Size Calculators

Dr. Lee A. Becker

Statistical Analysis of Quasi-Experimental Designs:

I. A priori selection techniques.

Content, part II

I. Overview

Random assignment is used in experimental designs to help assure that different treatment groups are equivalent prior to treatment. With small n's, randomization is messy: the groups may not be equivalent on some important characteristic.

In general, matching is used when you want to make sure that members of the various groups are equivalent on one or more characteristics. If you want to make absolutely sure that the treatment groups are equivalent on some attribute, you can use matched random assignment.

When you can't randomly assign to conditions, you can still use matching techniques to try to equate groups on important characteristics. This set of notes makes the distinction between normative group matching and normative group equivalence. In normative group matching you select an exact match from a normative comparison group for each participant in the treatment group. In normative group equivalence you select a comparison group that has approximately equivalent characteristics to the treatment group.

II. Matching in Experimental Designs: Matched Random Assignment

In an experimental design, matched random assignment can be used to equate the groups on one or more characteristics. Whitley (in chapter 8) uses an example of matching on IQ.

The Matching Process

Note: Tx = Treatment group, Ctl = Control Group.
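As a rough sketch of how matched random assignment could be carried out in code: rank participants on the matching variable, cut the ranked list into blocks with one participant per condition, and shuffle the conditions within each block. The function name, the participant IDs, and the IQ scores below are hypothetical and are not taken from Whitley's example.

```python
import random


def matched_random_assignment(scores, conditions, seed=None):
    """Assign participants to conditions within blocks matched on a score.

    scores: dict mapping participant id -> matching score (e.g., IQ).
    conditions: list of condition labels, e.g. ["Tx", "Ctl"].
    Returns a dict mapping participant id -> (block number, condition).
    """
    rng = random.Random(seed)
    k = len(conditions)
    if len(scores) % k != 0:
        raise ValueError("number of participants must be a multiple of the number of conditions")

    # Rank participants from highest to lowest score on the matching variable.
    ranked = sorted(scores, key=scores.get, reverse=True)

    assignment = {}
    for block_no, start in enumerate(range(0, len(ranked), k), start=1):
        block = ranked[start:start + k]      # k adjacent, similar-scoring people
        shuffled = list(conditions)
        rng.shuffle(shuffled)                # random order of conditions per block
        for pid, cond in zip(block, shuffled):
            assignment[pid] = (block_no, cond)
    return assignment


# Hypothetical IQ scores for six participants.
iq = {"p1": 128, "p2": 95, "p3": 110, "p4": 102, "p5": 121, "p6": 99}
for pid, (block, cond) in sorted(matched_random_assignment(iq, ["Tx", "Ctl"], seed=0).items()):
    print(pid, iq[pid], f"block {block}", cond)
```

Every block ends up with exactly one participant in each condition, so the groups are equated on the matching variable while assignment within a block is still random.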

Analysis of a Matched Random Assignment Design

If the matching variable is related to the dependent variable (e.g., IQ is related to performance in almost all studies of memory and learning), then you can incorporate the matching variable as a blocking variable in your analysis of variance. That is, in the 2 x 3 example, the first 6 participants can be entered as IQ block #1, the second 6 participants as IQ block #2. This removes the variance due to IQ from the error term, increasing the power of the study.

The analysis is treated as a repeated measures design where the measures for each block of participants are considered to be repeated measures. For example, in setting up the data for a two-group design (experimental vs. control) the data would look like this:

The analysis would be run as a repeated measures design with group (control vs. experimental) as a within-subjects factor.

If you were interested in analyzing the equivalence of the groups on the IQ score variable you could enter the IQ scores as separate variables. An analysis of variance of the IQ scores with treatment group (Treatment vs. Control) as a within-subjects factor should show no mean differences between the two groups. Entering the IQ data would allow you to find the correlation between IQ and performance scores within each treatment group.

One of the problems with this type of analysis is that if any score is missing then the entire block is set to missing. None of the performance data from Block 4 in Table 2 would be included in the analysis because the performance score is missing for the person in the control group. If you had 6 cells in your design, you would lose the data on all 6 people in a block that had only one missing data point.
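The block-wise deletion described above can be illustrated with a small paired t test sketch for the two-group case. The scores below are made up for illustration and are not the values from Table 2; the function name and the use of None to mark a missing score are also assumptions.

```python
import math
import statistics


def paired_t(blocks):
    """Paired t test over matched blocks of (treatment, control) scores.

    A block with a missing score (None) is dropped entirely, mirroring
    how a repeated measures analysis discards incomplete blocks.
    Returns (t statistic, degrees of freedom, number of blocks dropped).
    """
    complete = [(tx, ctl) for tx, ctl in blocks if tx is not None and ctl is not None]
    diffs = [tx - ctl for tx, ctl in complete]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two complete blocks")
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return t_stat, n - 1, len(blocks) - n


# Hypothetical performance scores; block 4 is missing its control score.
data = [(12, 9), (15, 11), (10, 8), (14, None), (13, 10)]
t_stat, df, dropped = paired_t(data)
print(f"t({df}) = {t_stat:.2f}, blocks dropped: {dropped}")
# prints "t(3) = 7.35, blocks dropped: 1"
```

Note that the treatment score of 14 in the incomplete block contributes nothing to the result, which is exactly the loss of data the text warns about.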

I understand that Dr. Klebe has been writing a new data analysis program to take care of this kind of missing data problem.

SPSS Note

The SPSS syntax commands for running the data in Table 2 as a repeated measures analysis of variance are shown in Table 3. The SPSS syntax commands for running the data in Table 2 as a paired t test are shown in Table 4.

III. Matching in Quasi-Experimental Designs: Normative Group Matching

Suppose that you have a quasi-experiment where you want to compare an experimental group (e.g., people who have suffered mild head injury) with a sample from a normative population. Suppose that there are several hundred people in the normative population.

One strategy is to randomly select the same number of people from the normative population as you have in your experimental group. If the demographic characteristics of the normative group approximate those of your experimental group, then this process may be appropriate. But, what if the normative group contains equal numbers of males and females ranging in age from 6 to 102, and people in your experimental condition are all males ranging in age from 18 to 35? Then it is unlikely that the demographic characteristics of the people sampled from the normative group will match those of your experimental group. For that reason, simple random selection is rarely appropriate when sampling from a normative population.

The Normative Group Matching Procedure

Determine the relevant characteristics (e.g., age, gender, SES) of each person in your experimental group. For example, if Exp person #1 is a 27-year-old male, randomly select one of the 27-year-old males from the normative population as a match for Exp person #1. If Exp person #2 is a 35-year-old male, randomly select one of the 35-year-old males as a match for Exp person #2. If you have done randomized normative group matching, then the matching variable should be used as a blocking factor in the ANOVA.

If you have a limited number of people in the normative group, then you can do caliper matching. In caliper matching you select the matching person based on a range of scores. For example, you can caliper match within a range of 3 years: the match for Exp person #1 would be randomly selected from males whose age ranged from 26 to 28 years. If you used a five-year caliper for age, then for Exp person #1 you would randomly select a male from those whose age ranged from 25 to 29 years. You would want a narrower age caliper for children and adolescents than for adults.
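A minimal sketch of caliper matching on age, with exact matching on sex, might look like the following. The function name, the record layout, and the sample people are hypothetical. The sketch also assumes matching without replacement (each normative person is used at most once), which the notes do not specify. A five-year caliper corresponds to half_width=2 (age 27 matches 25 to 29); exact matching is the special case half_width=0.

```python
import random


def caliper_match(exp_group, norm_pool, half_width=2, seed=None):
    """Randomly pick one normative match per experimental participant.

    A candidate qualifies if sex is identical and age is within
    +/- half_width years. Returns a dict mapping experimental id to the
    matched normative id, or None when no candidate is available.
    """
    rng = random.Random(seed)
    available = list(norm_pool)   # assumption: matching without replacement
    matches = {}
    for person in exp_group:
        candidates = [
            p for p in available
            if p["sex"] == person["sex"]
            and abs(p["age"] - person["age"]) <= half_width
        ]
        if not candidates:
            matches[person["id"]] = None
            continue
        chosen = rng.choice(candidates)
        available.remove(chosen)
        matches[person["id"]] = chosen["id"]
    return matches


# Hypothetical experimental participants and normative pool.
exp_group = [
    {"id": "E1", "sex": "M", "age": 27},
    {"id": "E2", "sex": "M", "age": 35},
]
norm_pool = [
    {"id": "N1", "sex": "M", "age": 25},
    {"id": "N2", "sex": "M", "age": 29},
    {"id": "N3", "sex": "F", "age": 27},
    {"id": "N4", "sex": "M", "age": 34},
    {"id": "N5", "sex": "M", "age": 41},
]
print(caliper_match(exp_group, norm_pool, half_width=2, seed=0))
```

Here E1 can only be matched to N1 or N2, and E2 only to N4. Widening half_width trades match quality for a better chance of finding a match when the normative pool is small.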