
AI for thesis writing — Unveiling 7 best AI tools


Writing a thesis is akin to piecing together a complex puzzle. Each research paper, every data point, and all the hours spent reading and analyzing contribute to this monumental task.

For many students, this journey is a relentless pursuit of knowledge, often marked by sleepless nights and tight deadlines.

Here, the potential of AI for writing a thesis or research papers becomes clear: artificial intelligence can step in, not to take over but to assist and guide.

Far from being just a trendy term, AI is revolutionizing academic research, offering tools that can make the task of thesis writing more manageable, more precise, and a little less overwhelming.

In this article, we’ll discuss the impact of AI on the academic writing process and present the best AI tools for thesis writing.

The Impact of AI on Thesis Writing

Artificial Intelligence offers a supportive hand in thesis writing, adeptly navigating vast datasets, suggesting enhancements in writing, and refining the narrative.

With an AI writing assistant, you no longer have to sift manually through endless articles: AI tools can spotlight the most pertinent pieces in moments. Need clarity or the right phrasing? AI-driven writing assistants offer real-time feedback, helping keep your work both articulate and academically sound.

AI tools for thesis writing harness Natural Language Processing (NLP) to generate content, check grammar, and assist in literature reviews. Simultaneously, Machine Learning (ML) techniques enable data analysis, provide personalized research recommendations, and aid in proper citation.

And for the detailed tasks of academic formatting and referencing? AI streamlines it all, ensuring your thesis meets the highest academic standards.

However, understanding AI's role is pivotal. It's a supportive tool, not the primary author. Your thesis remains a testament to your unique perspective and voice.

AI for thesis writing is there to amplify that voice, ensuring it's heard clearly and effectively.

How AI tools supplement your thesis writing

AI tools have emerged as invaluable allies for scholars. With just a few clicks, these advanced platforms can streamline various aspects of thesis writing, from data analysis to literature review.

Let's explore how an AI tool can supplement and transform your thesis writing style and process.

Efficient literature review: AI tools can quickly scan and summarize vast amounts of literature, making the process of literature review more efficient. Instead of spending countless hours reading through papers, researchers can get concise summaries and insights, allowing them to focus on relevant content.

Enhanced data analysis: AI algorithms can process and analyze large datasets with ease, identifying patterns, trends, and correlations that might be difficult or time-consuming for humans to detect. This capability is especially valuable in fields with massive datasets, like genomics or social sciences.

Improved writing quality: AI-powered writing assistants can provide real-time feedback on grammar, style, and coherence. They can suggest improvements, ensuring that the final draft of a research paper or thesis is of high quality.

Plagiarism detection: AI tools can scan vast databases of academic content to ensure that a researcher's work is original and free from unintentional plagiarism.

Automated citations: Managing and formatting citations is a tedious aspect of academic writing. AI citation generators can automatically format citations according to specific journal or conference standards, reducing the chances of errors.

Personalized research recommendations: AI tools can analyze a researcher's past work and reading habits to recommend relevant papers and articles, ensuring that they stay updated with the latest in their field.

Interactive data visualization: AI can assist in creating dynamic and interactive visualizations, making it easier for researchers to present their findings in a more engaging manner.
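To make the plagiarism detection point more concrete, here is a minimal sketch that assumes a simple word n-gram ("shingle") overlap measure. It is not how any particular checker works; the function names `shingles` and `jaccard_similarity` and the sample sentences are hypothetical and chosen only for illustration.

```python
import re


def shingles(text: str, n: int = 3) -> set:
    """Return the set of word n-grams (shingles) found in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    # range() is empty when the text has fewer than n words.
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(a: str, b: str, n: int = 3) -> float:
    """Jaccard overlap of the two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical sample texts.
draft = "AI tools can scan vast databases of academic content"
source = "AI tools can scan vast databases of published research"
unrelated = "The quinquereme was a large warship with five rows of oars"

print(round(jaccard_similarity(draft, source), 2))
print(jaccard_similarity(draft, unrelated))
```

A high score only flags passages worth a closer look; whether an overlap is properly quoted and cited still has to be judged by a person.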

Top 7 AI Tools for Thesis Writing

The academic field is brimming with AI tools tailored for academic paper writing. Here's a glimpse into some of the most popular and effective ones.

Here we'll talk about some of the best AI writing tools, expanding on their major uses, benefits, and reasons to consider them.

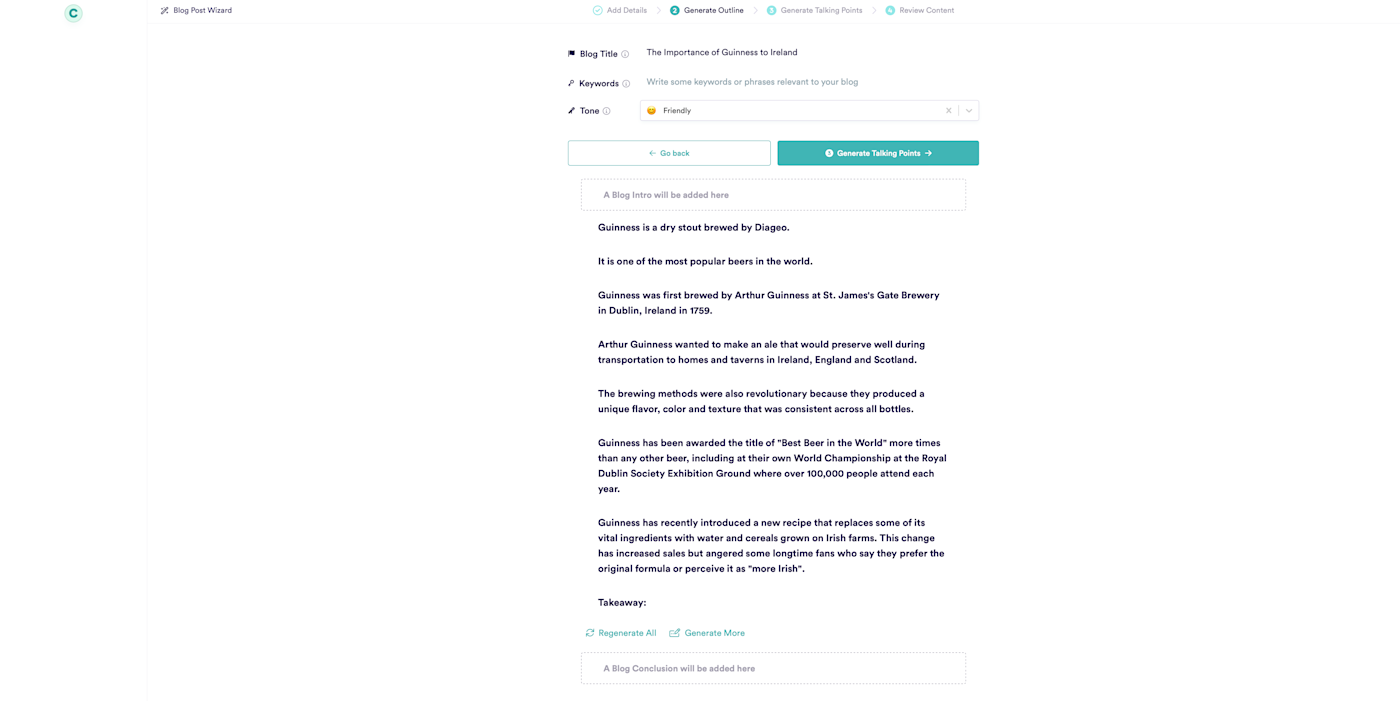

If you've ever been bogged down by the minutiae of formatting or are unsure about specific academic standards, Typeset is a lifesaver.

Typeset specializes in formatting, ensuring academic papers align with various journal and conference standards.

It automates the intricate process of academic formatting, saving you from the manual hassle and potential errors and improving your writing experience.

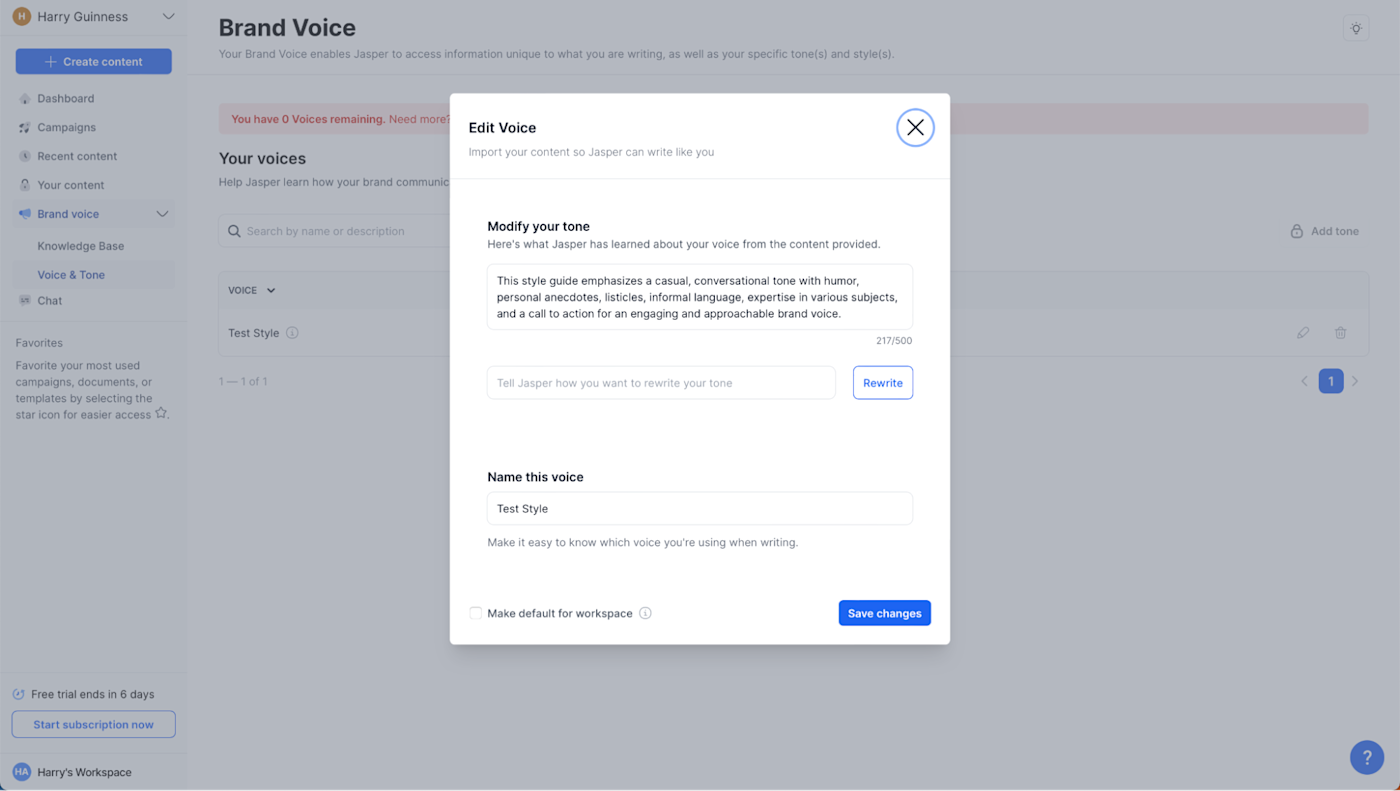

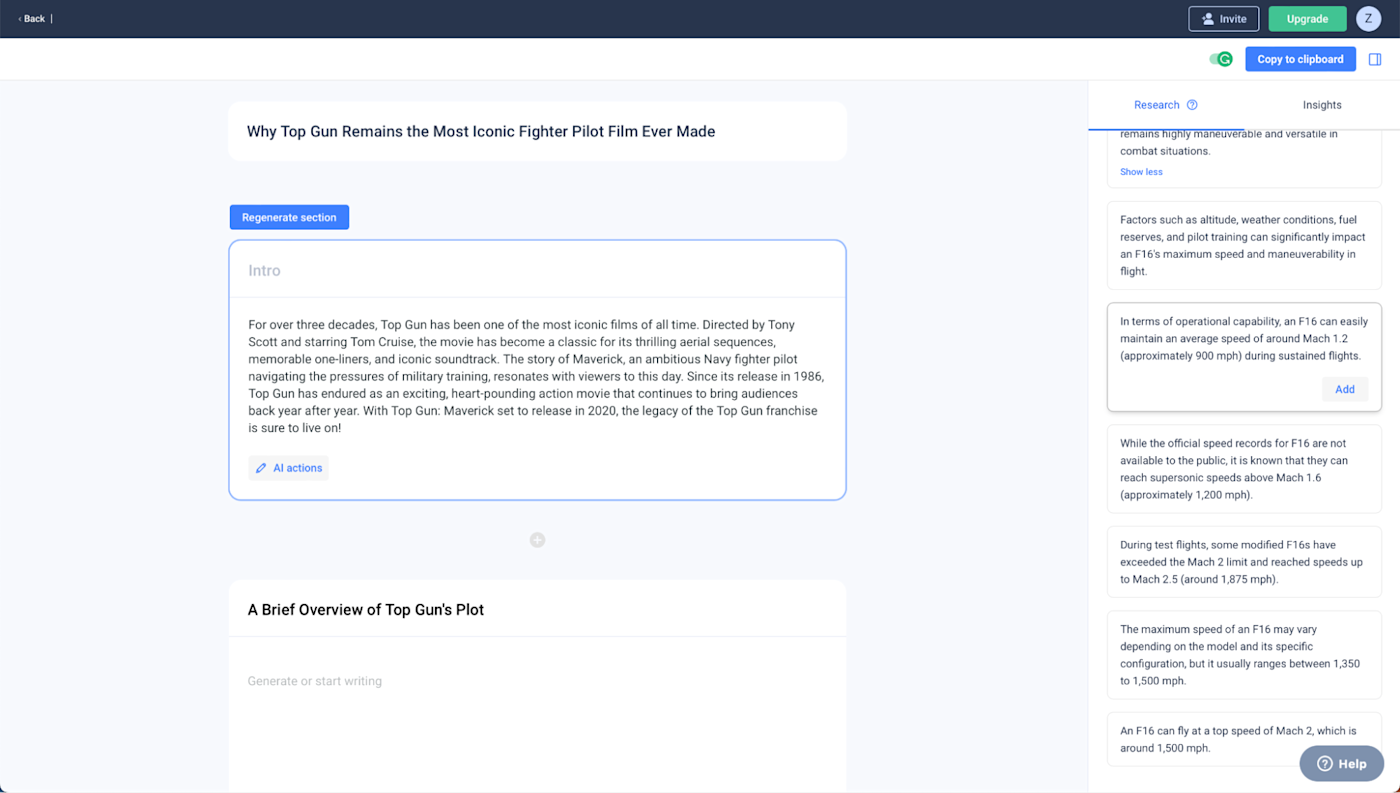

An AI-driven writing assistant, Wisio elevates the quality of your thesis content. It goes beyond grammar checks, offering style suggestions tailored to academic writing.

This ensures your thesis is both grammatically correct and maintains a scholarly tone. For moments of doubt or when maintaining a consistent style becomes challenging, Wisio acts as your personal editor, providing real-time feedback.

Known for its ability to generate and refine thesis content using AI algorithms, Texti ensures logical and coherent content flow according to the academic guidelines.

When faced with writer's block or a blank page, Texti can jumpstart your thesis writing process, aiding in drafting or refining content.

JustDone is an AI for thesis writing and content creation. It offers a straightforward three-step process for generating content, from choosing a template to customizing details and enjoying the final output.

JustDone AI can generate thesis drafts based on the input provided by you. This can be particularly useful for getting started or overcoming writer's block.

This platform can also refine your draft during editing, helping it align with academic standards and stay free from common errors. Moreover, it can process and analyze data, helping researchers identify patterns, trends, and insights that might be crucial for their thesis.

Tailored for academic writing, Writefull offers style suggestions to ensure your content maintains a scholarly tone.

This AI for thesis writing provides feedback on your language use, suggesting improvements in grammar, vocabulary, and structure. Moreover, it compares your written content against a vast database of academic texts, which helps keep your writing in line with academic standards.

Isaac Editor

For those seeking an all-in-one solution for writing, editing, and refining, Isaac Editor offers a comprehensive platform.

Combining traditional text editor features with AI, Isaac Editor streamlines the writing process and helps keep your content at a high standard.

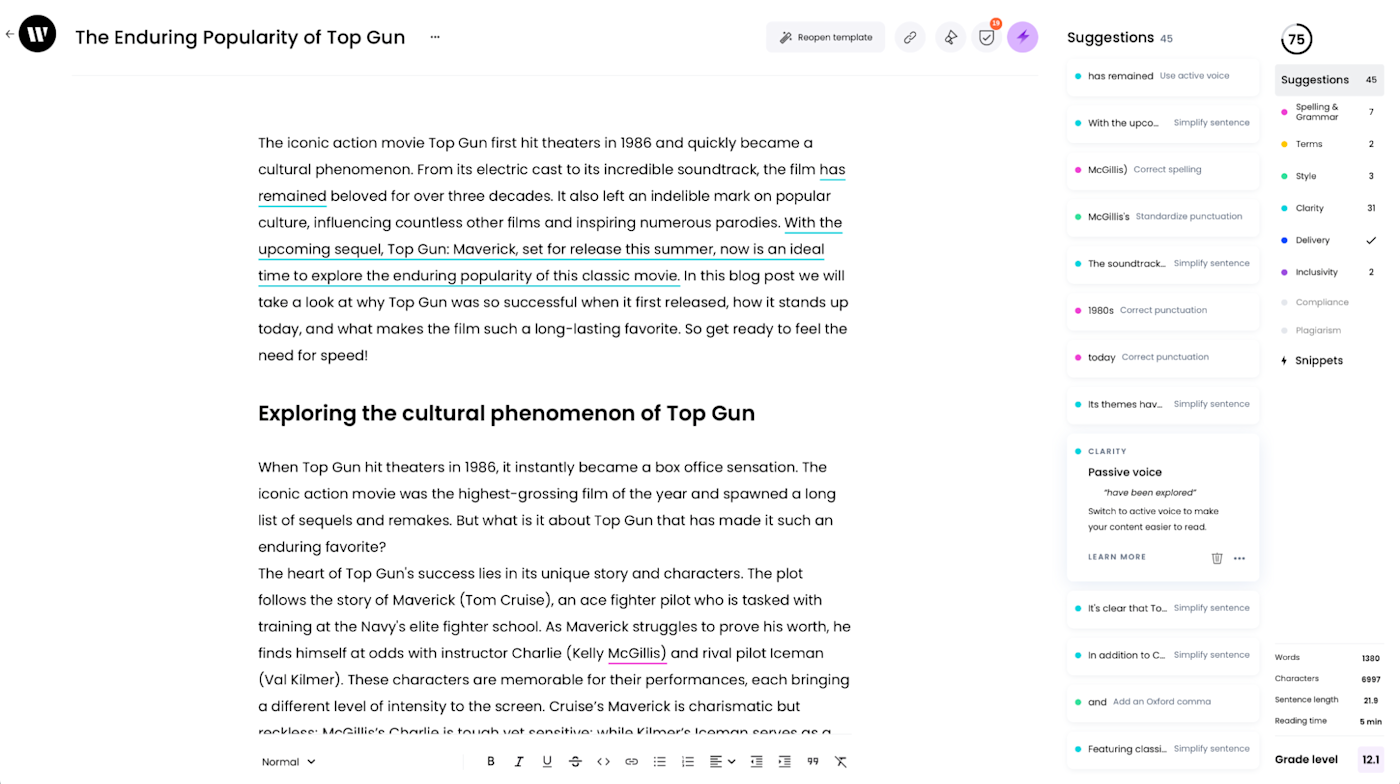

Paperpal, an AI-powered personal writing assistant, enhances academic writing skills, particularly for PhD thesis writing and English editing.

This AI for thesis writing offers comprehensive grammar, spelling, punctuation, and readability suggestions, along with detailed English writing tips.

It offers grammar checks, providing insights on rephrasing sentences, improving article structure, and other edits to refine academic writing.

The platform also offers tools like "Paperpal for Word" and "Paperpal for Web" to provide real-time editing suggestions, and "Paperpal for Manuscript" for a thorough check of completed articles or theses.

Is it ethical to use AI for thesis writing?

The use of AI for thesis writing has ignited discussions on authenticity. While AI tools offer substantial assistance, it's vital to maintain originality and not become overly reliant. Research thrives on unique contributions, and AI should be a supportive tool, not a replacement.

The key question: Can a thesis, significantly aided by AI, still be viewed as an original piece of work?

AI tools can simplify research, offer grammar corrections, and even produce content. However, there's a fine line between using AI as a helpful tool and becoming overly dependent on it.

In essence, while AI offers numerous advantages for thesis writing, it's crucial to use it judiciously. AI should complement human effort, not replace it. The challenge is to strike the right balance, ensuring genuine research contributions while leveraging AI's capabilities.

Wrapping Up

Nowadays, it's evident that AI tools are not just fleeting trends but pivotal game-changers.

They're reshaping how we approach, structure, and refine our theses, making the process more efficient and the output more impactful. But amidst this technological revolution, it's essential to remember the heart of any thesis: the researcher's unique voice and perspective.

AI tools are here to amplify that voice, not overshadow it. They guide you through the vast sea of information, helping your research stand out and resonate.

Try these tools out and let us know what worked best for you.


Frequently Asked Questions

Yes, you can use AI to assist in writing your thesis. AI tools can help streamline various aspects of the writing process, such as data analysis, literature review, grammar checks, and content refinement.

However, it's essential to use AI as a supportive tool and not a replacement for original research and critical thinking. Your thesis should reflect your unique perspective and voice.

Yes, there are AI tools designed to assist in writing research papers. These tools can generate content, suggest improvements, help with formatting, and even provide real-time feedback on grammar and coherence.

Examples include Typeset, JustDone, Writefull, and Texti. However, while they can aid the process, the primary research, analysis, and conclusions should come from the researcher.

The "best" AI for writing papers depends on your specific needs. For content generation and refinement, Texti is a strong contender.

For grammar checks and style suggestions tailored to academic writing, Writefull is highly recommended. JustDone offers a user-friendly interface for content creation. It's advisable to explore different tools and choose one that aligns with your requirements.

To use AI for writing your thesis:

1. Identify the areas where you need assistance, such as literature review, data analysis, content generation, or grammar checks.

2. Choose an AI tool tailored for academic writing, like Typeset, JustDone, Texti, or Writefull.

3. Integrate the tool into your writing process. This could mean using it as a browser extension, a standalone application, or a plugin for your word processor.

4. As you write or review content, use the AI tool for real-time feedback, suggestions, or content generation.

5. Always review and critically assess the suggestions or content provided by the AI to ensure it aligns with your research goals and maintains academic integrity.


Analytics Insight

10 Best AI tools for Thesis Writing

Revolutionizing Academic Writing: The 10 Best AI Tools for Streamlining Thesis Creation

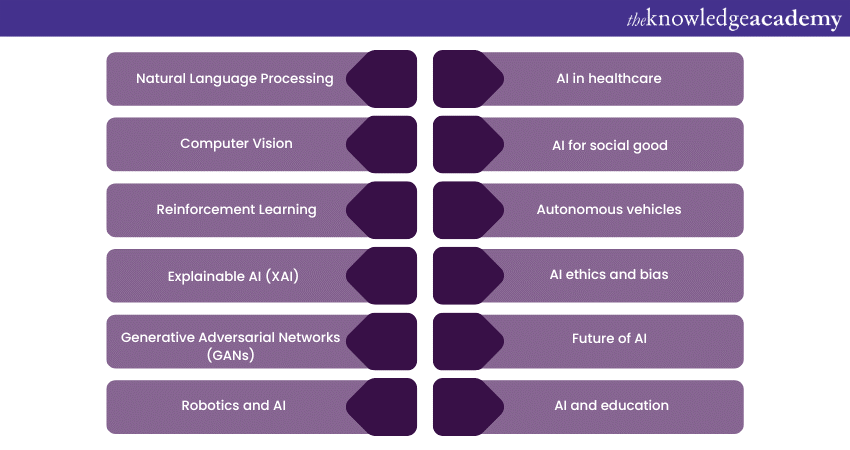

Thesis writing is a challenging yet integral part of academic pursuits, and the integration of Artificial Intelligence (AI) tools has revolutionized the research and writing process. In this article, we explore the top 10 AI tools that are invaluable for thesis writing, offering efficiency, precision, and enhanced productivity to researchers and students alike.

1. Grammarly:

Grammarly is an AI-powered writing assistant that goes beyond basic grammar checks. It provides advanced suggestions for clarity, tone, and style, ensuring your thesis is not only grammatically correct but also well-polished and professional.

2. Zotero:

Zotero is a reference management tool enhanced by AI capabilities. It helps researchers organize sources, generate citations, and create bibliographies effortlessly. The AI-driven features simplify the citation process, saving valuable time during thesis writing.

3. Copyscape:

Maintaining academic integrity is crucial, and Copyscape is an AI tool designed to detect plagiarism. It scans the content against a vast database, ensuring your thesis is original and free from unintentional similarities with existing works.

4. Ref-N-Write:

Ref-N-Write is an AI-powered academic writing tool that assists in generating research papers and thesis content. It offers a vast collection of academic phrases and sentence structures, aiding researchers in articulating their ideas with precision.

5. EndNote:

EndNote is a reference management tool that integrates AI for better organization and citation management. It streamlines the process of collecting, organizing, and citing references, allowing researchers to focus more on the content of their thesis.

6. Quetext:

Quetext is an AI-based plagiarism checker that provides a comprehensive analysis of your thesis content. Its advanced algorithms identify potential instances of plagiarism, ensuring the originality and authenticity of your research.

7. ProWritingAid:

ProWritingAid is an AI-driven writing tool that analyzes writing style, suggests improvements, and checks for issues like overused words and vague phrasing. It enhances the overall quality of your thesis by providing detailed insights into writing strengths and weaknesses.

8. Mendeley:

Mendeley is a reference manager and academic social network that incorporates AI for personalized recommendations. It suggests relevant research papers, articles, and resources based on your research interests, enriching the reference pool for your thesis.

9. IBM Watson Discovery:

IBM Watson Discovery is a powerful AI tool for data discovery and analysis. It can be employed to extract insights from large datasets, aiding researchers in gathering relevant information for their theses and making data-driven conclusions.

10. Evernote:

Evernote, equipped with AI capabilities, is a versatile note-taking tool. It allows researchers to organize and store research notes, ideas, and references in a systematic manner. The AI-enhanced search function makes retrieving information seamless.

Conclusion:

The integration of AI tools into the thesis writing process has ushered in a new era of efficiency and precision for researchers and students. From grammar checks to plagiarism detection and reference management, these 10 AI tools contribute to streamlining the academic writing journey, ensuring that the focus remains on the substance and quality of the thesis content. Embracing these tools empowers researchers to navigate the complexities of thesis writing with enhanced productivity and confidence.



AI Writing Tools | Definition, Uses & Implications

AI writing tools are artificial intelligence (AI) software applications like ChatGPT that help to automate or assist the writing process. These tools use machine learning algorithms to generate human-sounding text in response to users’ text-based prompts.

Other AI tools, such as grammar checkers, paraphrasers, and summarizers, serve more specific functions, like identifying grammar and spelling mistakes or rephrasing text.

Table of contents

- How do AI writing tools work?
- What can AI writing tools be used for?
- Implications of AI writing tools
- Other interesting articles
- Frequently asked questions about AI writing tools

AI writing tools (chatbots, grammar checkers, etc.) use natural language processing (NLP) algorithms, machine learning, and large language models (LLMs) to generate or improve written text. These tools are trained to identify patterns in vast amounts of data. The tools then use these patterns to analyze human inputs (or prompts) and generate natural-sounding outputs (similar to how predictive text works).
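The comparison with predictive text can be illustrated with a toy example. The sketch below assumes a simple bigram model that counts which word most often follows another in a small sample text. Real LLMs use neural networks trained on far larger datasets, so this is only an analogy; the function names `train_bigram_model` and `predict_next` and the sample corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram_model(corpus: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    model: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text.
corpus = (
    "the thesis needs a literature review and "
    "the thesis needs a clear argument and "
    "the review needs sources"
)
model = train_bigram_model(corpus)

print(predict_next(model, "the"))
print(predict_next(model, "needs"))
print(predict_next(model, "zebra"))
```

The model can only reproduce patterns it has already seen, which is one reason such tools can sound fluent while still being wrong.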

Different AI tools may be programmed in different ways. For example, ChatGPT, the popular chatbot developed by OpenAI and released in November 2022, uses generative pre-trained transformers (GPTs) and was trained using reinforcement learning from human feedback (RLHF), meaning that the tool was given feedback from humans to help guide it towards the most helpful responses.

AI writing tools have a number of helpful applications. Some of these applications are discussed below.

Interactive learning and feedback

Generative AI tools can be helpful resources for conversational learning. By inputting questions, users can engage in interactive learning at any time.

You can also prompt these tools to provide feedback on your work to improve the clarity or consistency of your writing. However, it’s important not to upload sensitive information, as user inputs are generally saved by the tool for the purposes of training future models.

Below is an example of how ChatGPT can be used for interactive learning.

Example: Using ChatGPT for interactive learning

- Tell me three facts about Carthaginian culture

- Carthaginians were known for their maritime trade and commerce, and their navy was one of the strongest in the ancient Mediterranean world. They established colonies and trading posts throughout the Mediterranean, including in Sicily, Sardinia, and North Africa.

- Carthaginians had a polytheistic religion, and their chief deity was Baal Hammon, the god of fertility, agriculture, and the sky. They also worshiped a number of other gods and goddesses, including Tanit, the goddess of love, fertility, and war.

- Carthaginians were skilled metalworkers and produced a variety of metal goods, including weapons, armor, jewelry, and household objects. They were particularly famous for their bronze work, which was highly prized in the ancient world. Carthaginian bronze was so valued that it was used by the Romans to make their famous statues and other works of art.

- Tell me three more facts about the Carthaginian navy

- The Carthaginian navy was one of the most advanced and powerful navies of the ancient world. It was instrumental in establishing Carthage as a dominant power in the Mediterranean region.

- The Carthaginians were known for their skill in shipbuilding and developed a variety of ship types for different purposes, including warships, transport ships, and cargo ships. One of their most famous ship types was the quinquereme, a large warship with five rows of oars.

- The Carthaginian navy was led by a commander called the “admiral.” The admiral was responsible for overseeing the fleet and making strategic decisions about naval warfare. One of the most famous Carthaginian admirals was Hannibal Barca, who led the Carthaginian fleet during the First Punic War against Rome.

Generating research questions and outlines

AI writing tools can be used to generate potential research questions or research paper outlines. You can then refine the tool’s responses by further prompting it or directly editing the output yourself to ensure the result makes sense and fits your requirements.

Below is an example of how ChatGPT can be used to generate potential research questions and research method ideas.

Example: Using ChatGPT to generate research questions and methods

- Develop three possible research questions on the following topic: “The influence of technology on second language acquisition.”

- What is the impact of technology-assisted language learning (TALL) on the acquisition of a second language?

- How do different types of technology, such as mobile applications, virtual reality, and online platforms, affect second language acquisition outcomes?

- How do individual learner characteristics, such as age, proficiency level, and motivation, interact with the use of technology in second language acquisition?

- Suggest three quantitative research methods appropriate to the second question.

- Experimental research: This research method involves manipulating an independent variable (in this case, the type of technology used) to observe its effect on a dependent variable (second language acquisition outcomes). Participants would be randomly assigned to different groups using different types of technology to learn a second language, and then their language acquisition outcomes would be measured and compared.

- Survey research: This research method involves using questionnaires to gather data from a large group of participants. In this case, a survey could be designed to gather information on participants’ use of different types of technology to learn a second language, as well as their language acquisition outcomes. This would allow for a large-scale investigation of how different types of technology are being used, and what their impact might be.

- Correlational research: This research method involves examining the relationship between two or more variables. In this case, a correlational study could be conducted to investigate whether there is a relationship between the type of technology used and language acquisition outcomes. Data could be collected from participants using different types of technology to learn a second language, and then the correlation between the type of technology and language acquisition outcomes could be calculated.
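The correlation calculation mentioned in the last suggestion can be sketched as follows. This is a minimal illustration that assumes two numeric variables (weekly hours of technology use and a test score), since Pearson's r does not apply directly to a categorical variable such as the type of technology. The function name `pearson_r` and the data are hypothetical.

```python
import math
from typing import List


def pearson_r(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sums of cross-products and squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: weekly hours with a language app and test scores.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 58.0, 61.0, 70.0, 74.0]

print(round(pearson_r(hours, scores), 2))
```

A coefficient close to 1 or -1 indicates a strong linear relationship, but it does not show that one variable causes the other.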

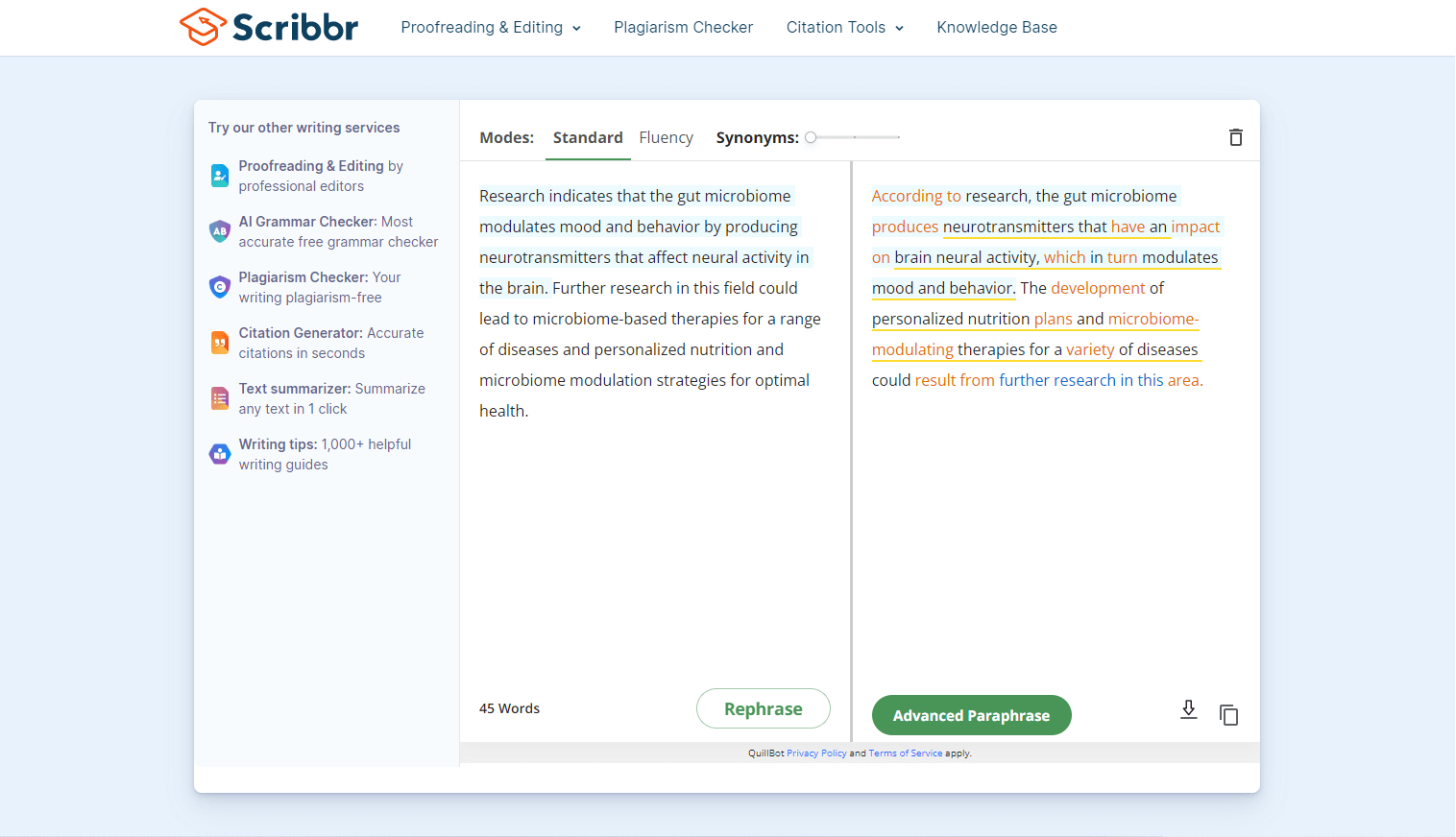

Paraphrasing text

AI tools like ChatGPT and Scribbr’s free paraphrasing tool can help you paraphrase text to express your ideas more clearly, avoid repetition, and maintain a consistent tone throughout your writing.

They can also help you incorporate scholarly sources in your writing in a more concise and fluent way, without the need for direct quotations. However, it’s important to correctly cite all sources to avoid accidental plagiarism.

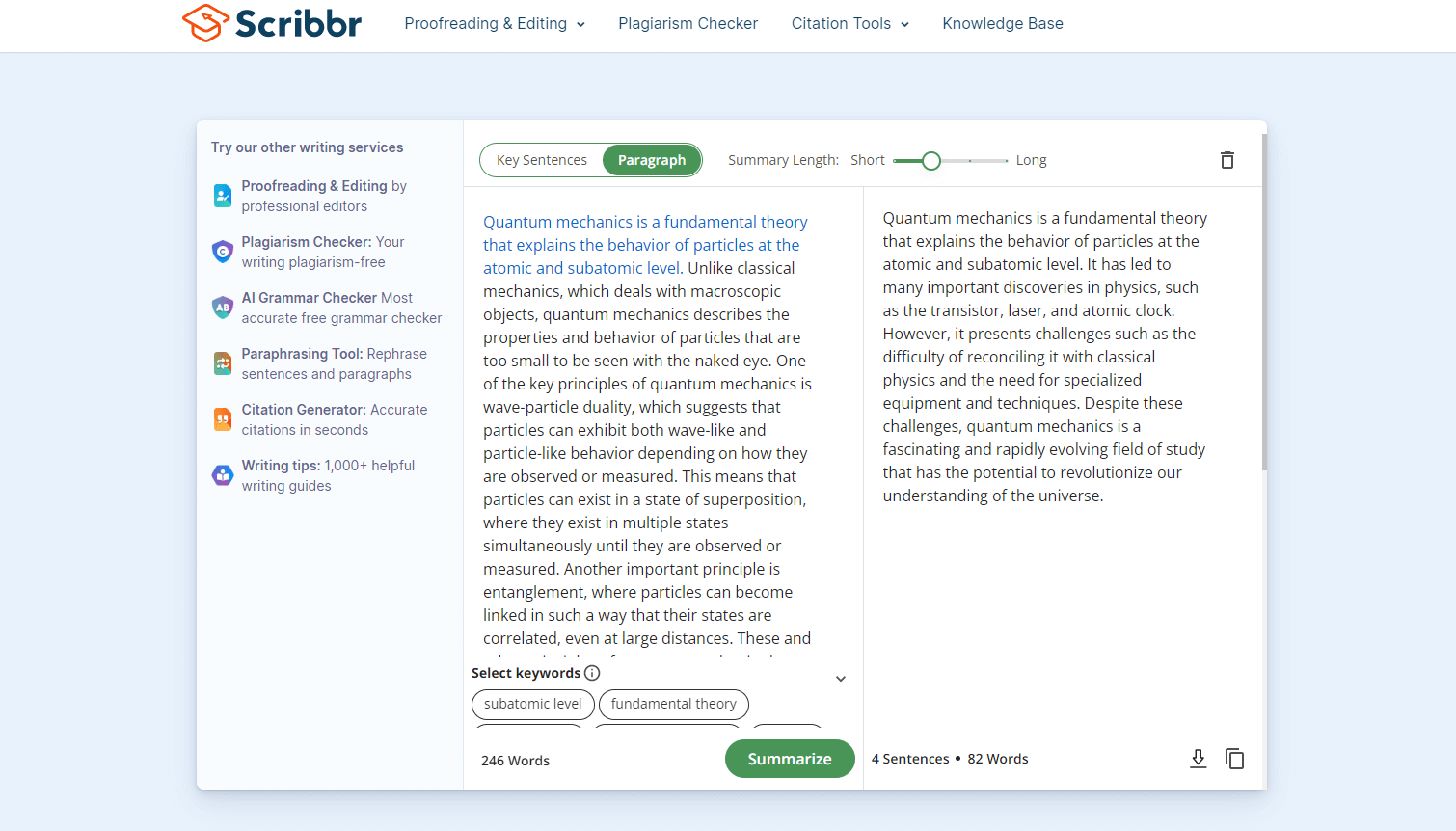

Summarizing text

AI writing tools can help condense a text to its most important and relevant ideas. This can help you understand complex information more easily. You can also use summarizer tools on your own work to summarize your central argument, clarify your research question, and form conclusions.

You can do this using generative AI tools or more specialized tools like Scribbr’s free text summarizer.

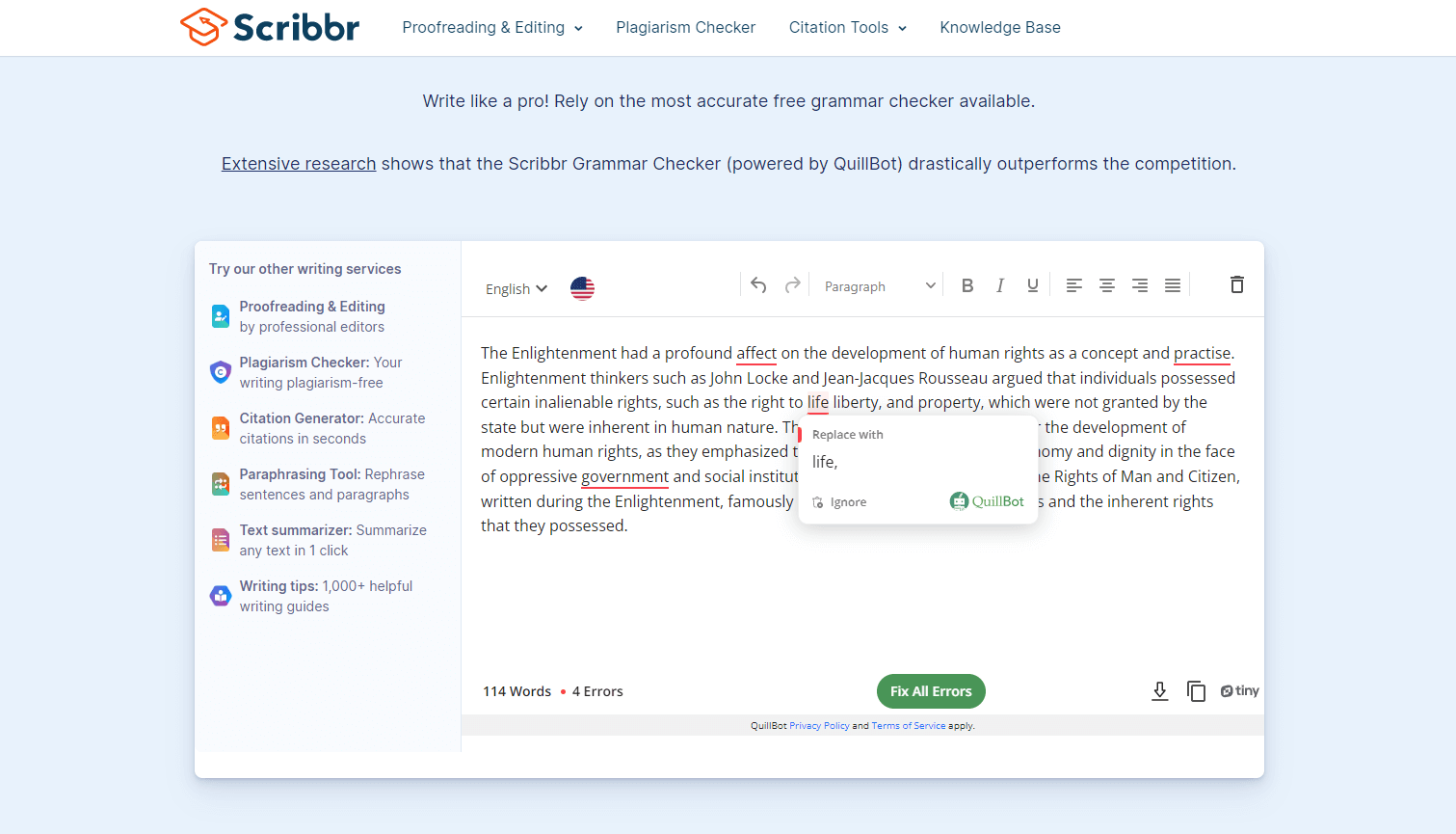

Proofreading text

AI writing tools can be used to identify spelling, grammar, and punctuation mistakes and suggest corrections. These tools can help to improve the clarity of your writing and avoid common mistakes.

While AI tools like ChatGPT offer useful suggestions, they can also potentially miss some mistakes or even introduce new grammatical errors into your writing.

We advise using Scribbr’s proofreading and editing service or a tool like Scribbr’s free grammar checker, which is designed specifically for this purpose.

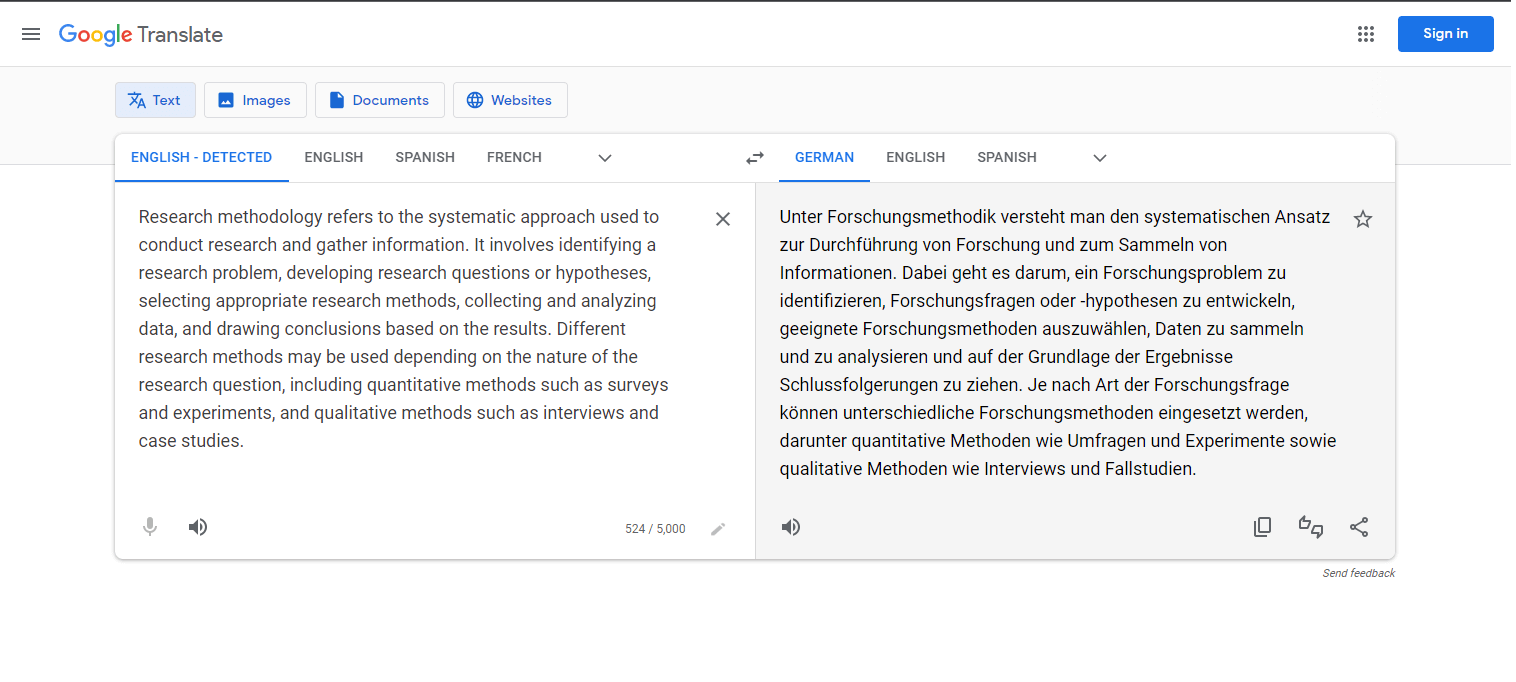

Translating text

AI translation tools like Google Translate can be used to translate text from a source language into various target languages. While the quality of these tools tends to vary depending on the languages used, they’re constantly developing and are increasingly accurate.

While there are many benefits to using AI writing tools, some commentators have emphasized the limitations of AI tools and the potential disadvantages of using them. These drawbacks are discussed below.

Impact on learning

One of the potential pitfalls of using AI writing tools is the effect they might have on a student’s learning and skill set. Using AI tools to generate a paper, thesis, or dissertation, for example, may impact a student’s research, critical thinking, and writing skills.

However, other commentators argue that AI tools can be used to promote critical thinking (e.g., by having a student evaluate a tool’s output and refine it).

Consistency and accuracy

Generative AI tools (such as ChatGPT) are not always trustworthy and sometimes produce results that are inaccurate or factually incorrect. Although these tools are programmed to answer questions, they can’t judge the accuracy of the information they provide and may generate incorrect answers or contradict themselves.

It’s important to verify AI-generated information against a credible source.

Grammatical mistakes

While generative AI tools can produce written text, they don’t actually understand what they’re saying and sometimes produce grammar, spelling, and punctuation mistakes.

You can combine the use of generative AI tools with Scribbr’s grammar checker, which is designed to catch these mistakes.

Ethics and plagiarism

As AI writing tools are trained on large sets of data, they may produce content that is similar to existing content (which they usually cannot cite correctly), which can be considered plagiarism.

Furthermore, passing off AI-generated text as your own work is usually considered a form of plagiarism and is likely to be prohibited by your university. This offense may be recognized by your university’s plagiarism checker or AI detector.


AI writing tools can be used to perform a variety of tasks.

Generative AI writing tools (like ChatGPT) generate text based on human inputs and can be used for interactive learning, to provide feedback, or to generate research questions or outlines.

These tools can also be used to paraphrase or summarize text or to identify grammar and punctuation mistakes. You can also use Scribbr’s free paraphrasing tool, summarizing tool, and grammar checker, which are designed specifically for these purposes.

Using AI writing tools (like ChatGPT) to write your essay is usually considered plagiarism and may result in penalization, unless it is allowed by your university. Text generated by AI tools is based on existing texts and therefore cannot provide unique insights. Furthermore, these outputs sometimes contain factual inaccuracies or grammar mistakes.

However, AI writing tools can be used effectively as a source of feedback and inspiration for your writing (e.g., to generate research questions). Other AI tools, like grammar checkers, can help identify and eliminate grammar and punctuation mistakes to enhance your writing.

You can access ChatGPT by signing up for a free account:

- Follow this link to the ChatGPT website.

- Click on “Sign up” and fill in the necessary details (or use your Google account). It’s free to sign up and use the tool.

- Type a prompt into the chat box to get started!

A ChatGPT app is also available for iOS, and an Android app is planned for the future. The app works similarly to the website, and you log in with the same account for both.

Yes, ChatGPT is currently available for free. You have to sign up for a free account to use the tool, and you should be aware that your data may be collected to train future versions of the model.

To sign up and use the tool for free, go to this page and click “Sign up.” You can do so with your email or with a Google account.

A premium version of the tool called ChatGPT Plus is available as a monthly subscription. It currently costs $20 and gets you access to features like GPT-4 (a more advanced version of the language model). But it’s optional: you can use the tool completely free if you’re not interested in the extra features.

ChatGPT was publicly released on November 30, 2022. At the time of its release, it was described as a “research preview,” but it is still available now, and no plans have been announced so far to take it offline or charge for access.

ChatGPT continues to receive updates adding more features and fixing bugs. The most recent update at the time of writing was on May 24, 2023.


The best AI tools for research papers and academic research (Literature review, grants, PDFs and more)

As our collective understanding and application of artificial intelligence (AI) continues to evolve, so too does the realm of academic research. Some people are scared by it while others are openly embracing the change.

Make no mistake, AI is here to stay!

Instead of tirelessly scrolling through hundreds of PDFs, a powerful AI tool comes to your rescue, summarizing key information in your research papers. Instead of manually combing through citations and conducting literature reviews, an AI research assistant proficiently handles these tasks.

These aren’t futuristic dreams, but today’s reality. Welcome to the transformative world of AI-powered research tools!

The influence of AI in scientific and academic research is an exciting development, opening the doors to more efficient, comprehensive, and rigorous exploration.

This blog post will dive deeper into these tools, providing a detailed review of how AI is revolutionizing academic research. We’ll look at the tools that can make your literature review process less tedious, your search for relevant papers more precise, and your overall research process more efficient and fruitful.

I know that I wish these were around during my time in academia. It can be quite confronting when trying to work out which ones you should and shouldn’t use. A new one seems to be coming out every day!

Here is everything you need to know about AI for academic research and the tools I have personally trialed on my YouTube channel.

Best ChatGPT interface – Chat with PDFs/websites and more

I get more out of ChatGPT with HeyGPT. It can do things that ChatGPT cannot, which makes it really valuable for researchers.

Use your own OpenAI API key. No login required. Access ChatGPT anytime, including peak periods. Faster response time. Unlock advanced functionalities with HeyGPT Ultra for a one-time lifetime subscription.

AI literature search and mapping – best AI tools for a literature review – elicit and more

Harnessing AI tools for literature reviews and mapping brings a new level of efficiency and precision to academic research. No longer do you have to spend hours looking in obscure research databases to find what you need!

AI-powered tools like Semantic Scholar and elicit.org use sophisticated search engines to quickly identify relevant papers.

They can mine key information from countless PDFs, drastically reducing research time. You can even search with semantic questions rather than having to deal with keywords.

With AI as your research assistant, you can navigate the vast sea of scientific research with ease, uncovering citations and focusing on academic writing. It’s a revolutionary way to take on literature reviews.

- Elicit – https://elicit.org

- Supersymmetry.ai – https://www.supersymmetry.ai

- Semantic Scholar – https://www.semanticscholar.org

- Connected Papers – https://www.connectedpapers.com/

- Research Rabbit – https://www.researchrabbit.ai/

- Laser AI – https://laser.ai/

- Litmaps – https://www.litmaps.com

- Inciteful – https://inciteful.xyz/

- Scite – https://scite.ai/

- System – https://www.system.com

If you like AI tools you may want to check out this article:

- How to get ChatGPT to write an essay [The prompts you need]

AI-powered research tools and AI for academic research

AI research tools, like Consensus, offer immense benefits in scientific research. Here are the general AI-powered tools for academic research.

These AI-powered tools can efficiently summarize PDFs, extract key information, perform AI-powered searches, and much more. Some are even working towards letting you add your own database of files to ask questions of.

Tools like scite even analyze citations in depth, while AI models like ChatGPT elicit new perspectives.

The result? The research process, previously a grueling endeavor, becomes significantly streamlined, offering you time for deeper exploration and understanding. Say goodbye to traditional struggles, and hello to your new AI research assistant!

- Bit AI – https://bit.ai/

- Consensus – https://consensus.app/

- Exper AI – https://www.experai.com/

- Hey Science (in development) – https://www.heyscience.ai/

- Iris AI – https://iris.ai/

- PapersGPT (currently in development) – https://jessezhang.org/llmdemo

- Research Buddy – https://researchbuddy.app/

- Mirror Think – https://mirrorthink.ai

AI for reading peer-reviewed papers easily

Using AI tools like Explain paper and Humata can significantly enhance your engagement with peer-reviewed papers. I always used to skip over the details of the papers because I had reached saturation point with the information coming in.

These AI-powered research tools provide succinct summaries, saving you from sifting through extensive PDFs – no more boring nights trying to figure out which papers are the most important ones for you to read!

They not only facilitate efficient literature reviews by presenting key information, but also find overlooked insights.

With AI, deciphering complex citations and accelerating research has never been easier.

- Open Read – https://www.openread.academy

- Chat PDF – https://www.chatpdf.com

- Explain Paper – https://www.explainpaper.com

- Humata – https://www.humata.ai/

- Lateral AI – https://www.lateral.io/

- Paper Brain – https://www.paperbrain.study/

- Scholarcy – https://www.scholarcy.com/

- SciSpace Copilot – https://typeset.io/

- Unriddle – https://www.unriddle.ai/

- Sharly.ai – https://www.sharly.ai/

AI for scientific writing and research papers

In the ever-evolving realm of academic research, AI tools are increasingly taking center stage.

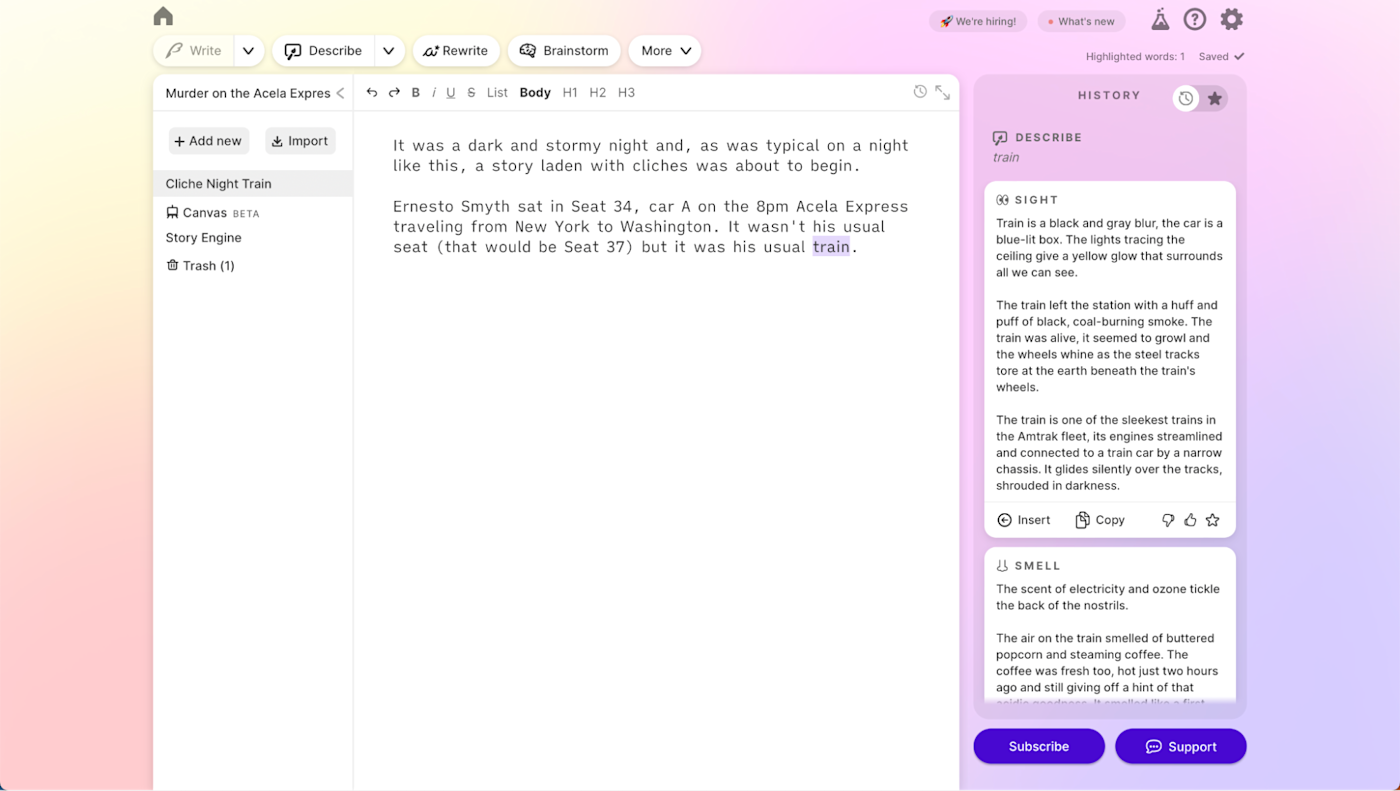

Enter Paper Wizard, Jenni AI, and Wisio – these groundbreaking platforms are set to revolutionize the way we approach scientific writing.

Together, these AI tools are pioneering a new era of efficient, streamlined scientific writing.

- Paper Wizard – https://paperwizard.ai/

- Jenni AI – https://jenni.ai/ (20% off with code ANDY20)

- Wisio – https://www.wisio.app

AI academic editing tools

In the realm of scientific writing and editing, artificial intelligence (AI) tools are making a world of difference, offering precision and efficiency like never before. Consider tools such as Paper Pal, Writefull, and Trinka.

Together, these tools usher in a new era of scientific writing, where AI is your dedicated partner in the quest for impeccable composition.

- Paper Pal – https://paperpal.com/

- Writefull – https://www.writefull.com/

- Trinka – https://www.trinka.ai/

AI tools for grant writing

In the challenging realm of science grant writing, two innovative AI tools are making waves: Granted AI and Grantable.

These platforms are game-changers, leveraging the power of artificial intelligence to streamline and enhance the grant application process.

Granted AI, an intelligent tool, uses AI algorithms to simplify the process of finding, applying, and managing grants. Meanwhile, Grantable offers a platform that automates and organizes grant application processes, making it easier than ever to secure funding.

Together, these tools are transforming the way we approach grant writing, using the power of AI to turn a complex, often arduous task into a more manageable, efficient, and successful endeavor.

- Granted AI – https://grantedai.com/

- Grantable – https://grantable.co/

Free AI research tools

Many tools are emerging online to help researchers streamline their research processes. There’s no need for convenience to come at a massive cost and break the bank.

The best free ones at time of writing are:

- Elicit – https://elicit.org

- Connected Papers – https://www.connectedpapers.com/

- Litmaps – https://www.litmaps.com (10% off Pro subscription using the code “STAPLETON”)

- Consensus – https://consensus.app/

Wrapping up

The integration of artificial intelligence in the world of academic research is nothing short of revolutionary.

With the array of AI tools we’ve explored today – from research and mapping, literature review, peer-reviewed papers reading, scientific writing, to academic editing and grant writing – the landscape of research is significantly transformed.

The advantages that AI-powered research tools bring to the table – efficiency, precision, time saving, and a more streamlined process – cannot be overstated.

These AI research tools aren’t just about convenience; they are transforming the way we conduct and comprehend research.

They liberate researchers from the clutches of tedium and overwhelm, allowing for more space for deep exploration, innovative thinking, and in-depth comprehension.

Whether you’re an experienced academic researcher or a student just starting out, these tools provide indispensable aid in your research journey.

And with a suite of free AI tools also available, there is no reason to not explore and embrace this AI revolution in academic research.

We are on the precipice of a new era of academic research, one where AI and human ingenuity work in tandem for richer, more profound scientific exploration. The future of research is here, and it is smart, efficient, and AI-powered.

Before we get too excited, however, let us remember that AI tools are meant to be our assistants, not our masters. As we engage with these advanced technologies, let’s not lose sight of the human intellect, intuition, and imagination that form the heart of all meaningful research. Happy researching!

Thank you to Ivan Aguilar – Ph.D. Student at SFU (Simon Fraser University), for starting this list for me!

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of Universities. Although having secured funding for his own research, he left academia to help others with his YouTube channel all about the inner workings of academia and how to make it work for you.


2024 © Academia Insider

Artificial Intelligence

Recent advances in artificial intelligence (AI) for writing (including CoPilot and ChatGPT) can quickly create coherent, cohesive prose and paragraphs on a seemingly limitless set of topics. The potential for abuse in academic integrity is clear, and our students are likely using these tools already. There are similar AI tools for creating images, computer code, and many other domains. Most of this guide concerns generative AI (GenAI) such as large language models (LLMs) that function as word-predictors and can generate text and entire essays. As AI represents a permanent addition to society and students’ tools, we need to permanently re-envision how we assign college writing and other projects. As such, FCTL has assembled this set of ideas to consider.

Category 1: Lean into the Software’s Abilities

- Re-envision writing as editing/revising. Assign students to create an AI essay with a given prompt, and then heavily edit the AI output using Track Changes and margin comments. Such an assignment refocuses the work of writing away from composition and toward revision, which may be more common in an AI-rich future workplace. Generative AI (GenAI, such as Bing Chat or ChatGPT) is spectacular at providing summaries, but these summaries lack details and specifics, which students could be tasked with supplying. Other examples include better connecting examples to claims, and revising overall paragraph structure in service of a larger argument. Here are some example assignments using GenAI as part of the writing prompt.

- Re-envision writing as first and third stage human work, with AI performing the middle. Instead of asking students to generate the initial drafts (i.e., “writing as composition”), imagine the student work instead focusing on creating effective prompts for the AI, as well as editing the AI output.

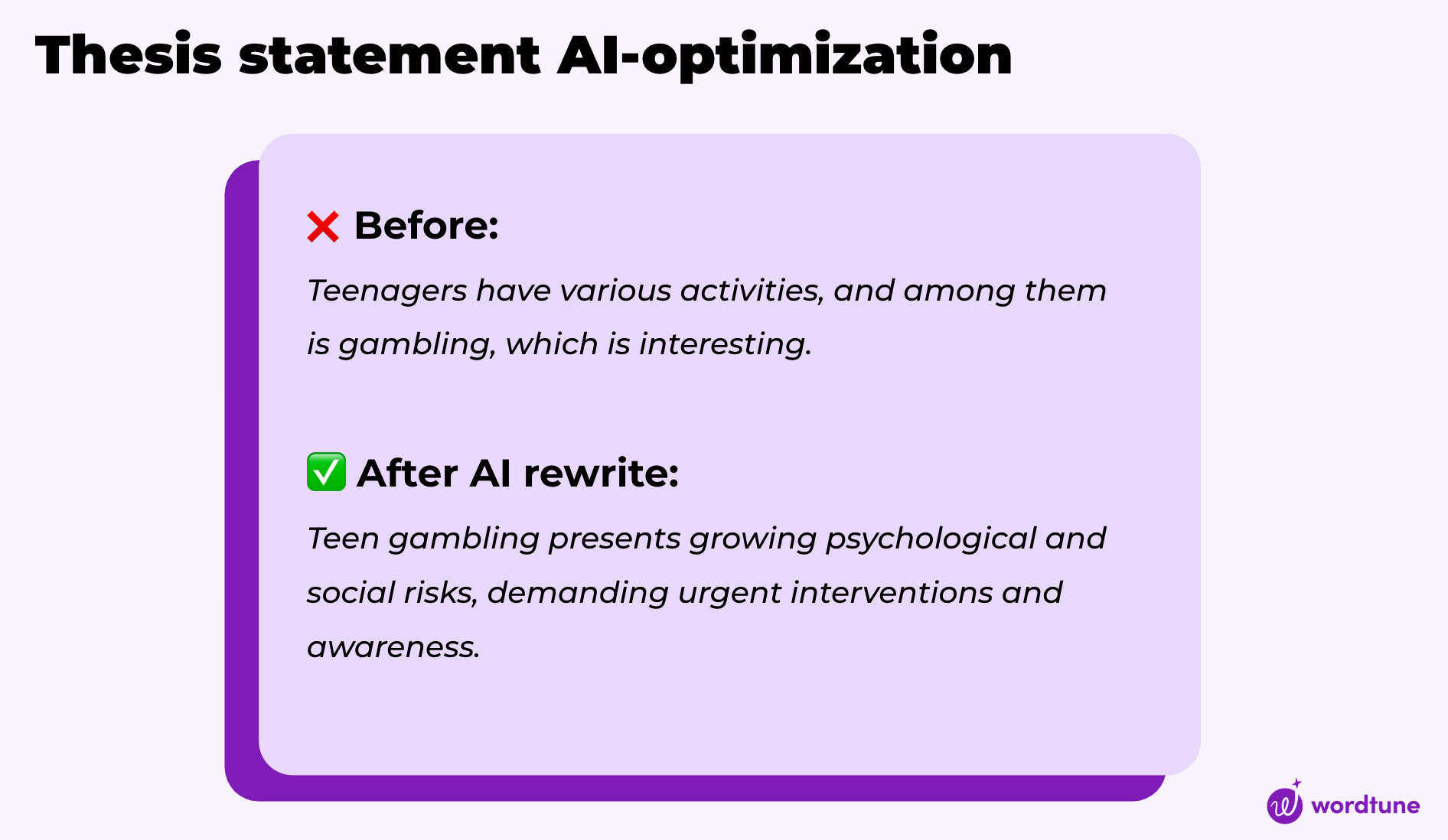

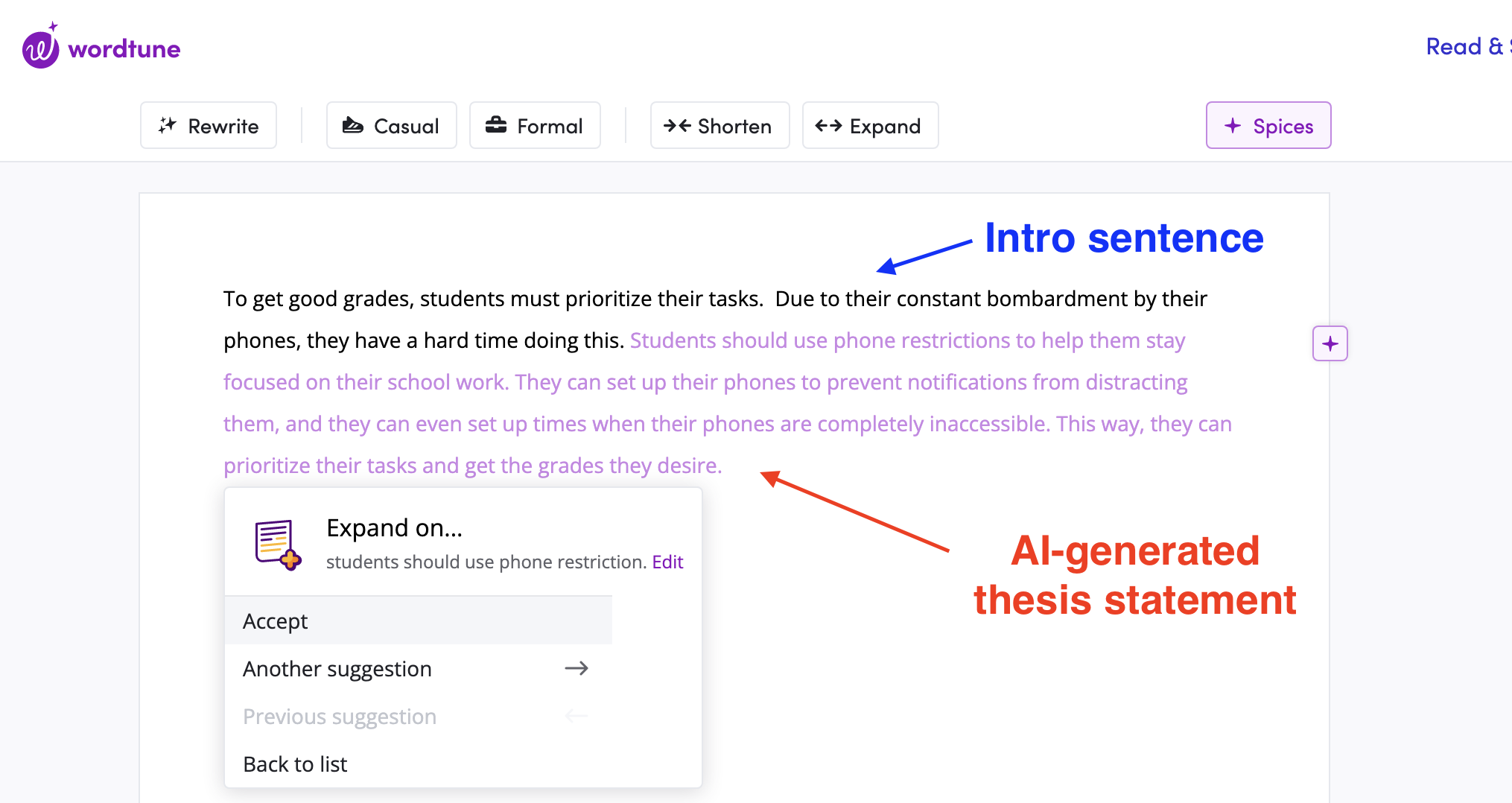

- Focus student learning on creative thesis writing by editing AI-created theses. The controlling statement for most AI essays can be characterized as summary in nature, rather than analytical. Students can be challenged to transform AI output into more creative, analytical theses.

- Refine editing skills via grading. Assign students to create an AI essay and grade it, providing specific feedback justifying each of the scores on the rubric. This assignment might be paired with asking students to create their own essay responding to the same prompt.

- Write rebuttals. Ask the AI to produce a custom output you’ve intentionally designed, then assign students to write a rebuttal of the AI output.

- Create counterarguments. Provide the AI with your main argument and ask it to create counterarguments, which can be incorporated – then overcome – in the main essay.

- Evaluate AI writing for bias. Because the software is only as good as the information it finds and ingests (remember the principle of GIGO: garbage in, garbage out), it may well create prose that mimics the structural bias and racism present in its source material. AI writing might also reveal assumptions about the “cultural war” separating political parties in the United States.

- Teach information literacy through AI. Many students over-trust information they find on websites; use AI software to fuel a conversation about when to trust, when to verify, and when to use information found online.

- Give only open-book exams (especially online). Assume that students can and will use the Internet and any available AI to assist them.

- Assign essays, projects, and tests that aim for “application” and above in Bloom’s taxonomy. Since students can look up knowledge/information answers and facts, it’s better to avoid testing them on such domains, especially online.

- Teach debate and critical thinking skills. Ask the AI to produce a stance, then use the tools of your discipline to evaluate it and find flaws or holes in its position or statements.

- Ask the AI to role play as a character or historical figure. Since GenAI is conversation-based, holding a conversation with an in-character personality yields insights.

- Overcome writer’s block. The AI output could provide a starting point for an essay outline, a thesis statement, or even ideas for paragraphs. Even if none of the paragraphs (or even sentences) are used, asking the AI can be useful for ideation to be put into one’s own words.

- Treat it like a Spellchecker. Ask your students to visit GenAI, type “suggest grammar and syntax fixes:” and then paste their pre-written essay to gain ideas before submission. (Note: for classes where writing ability is a main learning outcome, it might be advisable to require that students disclose any such assistance.)

- Make the AI your teaching assistant. When preparing a course, ask the AI to explain why commonly-wrong answers are incorrect. Then, use the Canvas feedback options on quiz/homework questions to paste the AI output for each question.

- Teach sentence diagramming and parts of speech. Since AI can quickly generate text with variety in sentence structures, use the AI output to teach grammar and help students learn how to construct more sophisticated sentences.

- Engage creativity and multiple modes of representation to foster better recall. Studies show that student recall increases when they use words to describe a picture, or draw a picture to capture information in words. Using AI output as the base, ask students to create artwork (or performances) that capture the same essence.

- Teach AI prompt strategies as a discrete subject related to your field. AI-created content is sure to be a constant in the workplace of the future. Our alumni will need to be versed in crafting specific and sophisticated inputs to obtain the best AI outputs.

- Create sample test questions to study for your test. Given appropriate prompts, AI can generate college-level multiple choice test questions on virtually any subject, and provide the right answer. Students can use such questions as modern-day flash cards and test practice.

- View more ideas in this free e-book written by FCTL: “60+ Ideas for ChatGPT Assignments,” which is housed in the UCF Library’s STARS system. Even though the ebook mentions ChatGPT in its title, the assignment prompts work for most GenAI, including CoPilot, our official university LLM.
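For faculty who want to hand students a repeatable version of the “Treat it like a Spellchecker” idea above, the prompt can be assembled with a few lines of Python. This is only a minimal sketch: the function name `build_proofread_prompt` and the blank line between the instruction and the essay are our own assumptions, and the result is plain text to paste into whichever GenAI tool the class uses.

```python
# The instruction text comes from the "Treat it like a Spellchecker" idea;
# everything else here (names, layout) is an illustrative assumption.
PROOFREAD_PREFIX = "suggest grammar and syntax fixes:"


def build_proofread_prompt(essay: str) -> str:
    """Return the instruction followed by the student's pre-written essay."""
    essay = essay.strip()
    if not essay:
        raise ValueError("essay text is empty")
    # A blank line separates the instruction from the essay (our choice).
    return f"{PROOFREAD_PREFIX}\n\n{essay}"


prompt = build_proofread_prompt("  Their going to the libary tomorow.  ")
print(prompt)
```

Keeping the instruction in one constant makes it easy to swap in a different request (for example, a discipline-specific style check) without touching the rest of the helper.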

Category 2: Use the software to make your teaching/faculty life easier

- Create grading rubrics for major assignments. Give specifics about the assignment when asking the software to create a rubric in table format. Optionally, give it the desired sub-grades of the rubric.

- Write simple or mechanical correspondence for you. GenAI is fairly good at writing letters and formulaic emails. The more specific the inputs are, the better the output is. However, always keep in mind the ethics of using AI-generated writing wholesale, representing the writing as your own words–particularly if you are evaluating or recommending anything. AI output should not be used, for instance, in submitting peer reviews.

- Adjust, simplify, shorten, or enhance your formal writing. The software could be asked to shorten (or lengthen) any professional writing you are composing, or to suggest grammar and syntax fixes (particularly useful for non-native speakers of English!). In short, you could treat it like Spellchecker before you submit it. However, again consider the ethics of using AI content wholesale–journals and granting agencies are still deciding how (or whether) to accept AI-assisted submissions, and some have banned it.

- Summarize one-minute papers. If you ask students for feedback, or to prove they understand a concept via one-minute papers, you can submit these en masse and ask the AI to provide a summary.

- Generate study guides for your students. If you input your lecture notes and ask for a summary, this can be given to students as a study guide.

- Create clinical case studies for students to analyze. You can generate different versions of a case with a similar prompt.

- Evaluate qualitative data. Provide the AI with raw data and ask it to identify patterns, not only in repeated words but in similar concepts.

- What about AI and research? It’s best to be cautious, if not outright paranoid, about privacy, legality, ethics, and many related concerns, when thinking about exposing your primary research to any AI platform–especially anything novel that could lead to patent and commercialization. Consult the IT department and the Office of Research before taking any action.

- Create test questions and banks. The AI can create nearly limitless multiple-choice questions (with correct answers identified) on many topics and sub-topics. Obviously, these need to be proofread and verified before using with a student audience.

Category 3: Teach Ethics, Integrity, and Career-Related Skills

- Discuss the ethical and career implications of AI-writing with your students. Early in the semester (or at least when assigning a writing prompt), have a frank discussion with your students about the existence of AI writing. Point out to them the surface-level ethical problem with misrepresenting their work if they choose to attempt it, as well as the deeper problem of “cheating themselves” by entering the workforce without adequate preparation for writing skills, a quality that employers highly prize.

- Create and prioritize an honor code in your class. Submitting AI-created work as one’s own is, fundamentally, dishonest. As professionals, we consider it among our top priorities to graduate individuals of character who can perform admirably in their chosen discipline, all of which requires a set of core beliefs rooted in honor. Make this chain of logic explicit to students (repeatedly if necessary) in an effort to convince them to adopt a similar alignment toward personal honesty. A class-specific honor code can aid this effort, particularly if invoked or attested to when submitting major assignments and tests.

- Reduce course-related workload to disincentivize cheating. Many instances of student cheating, including the use of AI writing, are born of desperation and a lack of time. Consider how realistic the workload you expect of students is.

Category 4: Attempt to neutralize the software

Faculty looking to continue assigning take-home writing and essays may be interested in this list of ideas to customize their assignments so that students do not benefit from generative AI. However, this approach will likely fail in time, as the technology is improving rapidly, and automated detection methods are already unreliable (at UCF, in fact, the office of Student Conduct and Academic Integrity will not pursue administrative cases against students where the only evidence is from AI detectors). Artificial intelligence is simply a fact of life in modern society, and its use will only become more widespread.

Possible Syllabus Statements

Faculty looking for syllabus language may consider one of these options:

- Use of AI prohibited. Only some Artificial Intelligence (AI) tools, such as spell-check or Grammarly, are acceptable for use in this class. Use of other AI tools via website, app, or any other access, is not permitted in this class. Representing work created by AI as your own is plagiarism, and will be prosecuted as such. Check with your instructor to be sure of acceptable use if you have any questions.

- Use of AI only with explicit permission. This class will make use of Artificial Intelligence (AI) in various ways. You are permitted to use AI only in the manner and means described in the assignments. Any attempt to represent AI output inappropriately as your own work will be treated as plagiarism.

- Use of AI only with acknowledgement. Students are allowed to use Artificial Intelligence (AI) tools on assignments if the usage is properly documented and credited. For example, text generated from Bing Chat Enterprise should include a citation such as: “Bing Chat Enterprise. Accessed 2023-12-03. Prompt: ‘Summarize the Geneva Convention in 50 words.’ Generated using http://bing.com/chat.”

- Use of AI is freely permitted with no acknowledgement. Students are allowed to use Artificial Intelligence (AI) tools in all assignments in this course, with no need to cite, document, or acknowledge any support received from AI tools.
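If you adopt the “only with acknowledgement” option, students may find it easier to comply when the citation pattern is spelled out mechanically. The sketch below reproduces the example citation from that statement; the function name `format_ai_citation` and its parameters are hypothetical, chosen here for illustration, and the pattern is only the one shown above, not an official citation style.

```python
from datetime import date


def format_ai_citation(tool: str, accessed: date, prompt: str, url: str) -> str:
    """Build an acknowledgement line: tool, access date, prompt, and source URL."""
    # isoformat() yields YYYY-MM-DD, matching the date style in the sample citation.
    return (
        f"{tool}. Accessed {accessed.isoformat()}. "
        f"Prompt: ‘{prompt}’ Generated using {url}."
    )


citation = format_ai_citation(
    tool="Bing Chat Enterprise",
    accessed=date(2023, 12, 3),
    prompt="Summarize the Geneva Convention in 50 words.",
    url="http://bing.com/chat",
)
print(citation)
```

The four fields (tool, date, prompt, URL) are the ones present in the sample citation; a course could add others, such as the model version, if the instructor wants them recorded.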

If you write longer announcements or policies for students, try to aim for a level-headed tone that neither overly demonizes AI nor overly idolizes it. Students who are worried about artificial intelligence and/or privacy will be reassured by a steady, business-like tone.

AI Detection and Unauthorized Student Use

AI detectors are not always reliable, so UCF does not have a current contract with any detector. If you use third-party detectors, you should keep in mind that both false positives and false negatives can occur, and student use of Grammarly can return a result of “written by AI.”

Thus, independent verification is required. If you have other examples of this student’s writing that do not match, that might be reason enough to take action. Evidence of a hallucinated citation is even stronger. A confession of using AI by the student is, of course, the gold standard for taking action. One approach might be to call the student to a private (virtual?) conference, explain why you suspect the student used AI, and ask them how they would account for these facts. Or, you can inform them of your intention to fail the paper, but offer them the chance to perform proctored, in-person writing on a similar prompt to prove they can write at this level.

The Student Conduct and Academic Integrity office will not “prosecute” a case where the only evidence comes from an AI detector, due to the possibility of false positives and false negatives. A hallucinated citation does constitute evidence. They do still encourage you to file a report in any event, and can offer suggestions on how to proceed. Existing university-level policies ban students from representing work that they did not create as their own, so it’s not always necessary to have a specific AI policy in your syllabus – but it IS a best practice to have such a policy for transparency to students and to communicate your expectations. After all, the lived experience of students is that different faculty have different expectations regarding AI, and extreme clarity is always best.

At the end of the day, the final say about grading remains with the instructor. We recognize that in marginal cases, it might come down to a “gut feeling.” Every instructor has a spectrum of responses available: an “F” for the term, an “F” or zero for the assignment, a grade penalty (10%? 20%?) applied to the assigned grade, a chance to rewrite the assignment (with or without a grade penalty), no grade action but a warning to the student not to do it again, or simply letting it go without even approaching the student. Be aware that students have the right to appeal academic grades. For that reason, it may be advisable to check with your supervisor about how to proceed in specific cases.

Because of all of these uncertainties, FCTL suggests that faculty consider replacing essay writing with another deliverable that AI cannot today generate (examples include narrated PowerPoint, narrated Prezi, selfie video presentation WITHOUT reading from a script, digital poster, flowcharts, etc.). An alternative is to include AI-generated output as part of the assignment prompt, and then require the students to “do something” with the output, such as analyze or evaluate it.

The Faculty Center recommends that UCF faculty work with CoPilot (formerly Bing Chat Enterprise) over other large-language model AI tools. The term CoPilot is also used by Microsoft to refer to embedded AI in MS Office products, but the web-based chat tool is separate.

CoPilot with Commercial Protection is NOT the same thing as “CoPilot.” The latter is the public model of Microsoft’s LLM, also available on the web. CoPilot with Commercial Protection (if logged in with a UCF NID) is a “walled garden” for UCF that offers several benefits:

- It searches the current Internet and is not limited to a fixed point in time when it was trained

- It uses GPT-4 (faster, better) without having to pay a premium

- It uses DALL-E 3.0 to generate images (right there inside CoPilot rather than on a different site)

- It provides a live Internet link to verify the information and confirm there was no hallucination

- It does not store history by user; each logout or new session wipes the memory. In fact, each query is a new blank slate even within the same session, so it’s not possible to have a “conversation” with CoPilot (like you can with ChatGPT)

- Faculty and students log in with their NID

- Data stays local and is NOT uploaded to Microsoft or the public model version of Bing Chat. Inputs into CoPilot are NOT added to the system’s memory, database, or future answers

CoPilot is accessed via this procedure:

- Start at https://copilot.microsoft.com/ (if it doesn’t recognize your UCF email, switch to http://bing.com/chat)

- Click “sign in” at the top-right

- Select “work or school” for the type of account

- Type your full UCF email (including @ucf.edu) and click NEXT

- Log in with your NID and NID password. (Note: you may need to alter your SafeSearch settings away from “Strict”)

- Above the box where you would type your question, you will see “Your personal and company data are protected in this chat” – this is how you know you are in CoPilot.

- Note: if image-generation isn’t working, switch to Edge browser and start at http://bing.com/chat and then sign in using NID.

We recommend that faculty approach the AI revolution with the recognition that AI is here to stay and will represent a needed skill in the workplace of the future (or even the present!). As such, both faculty and students need to develop AI Fluency skills, which we define as:

- Understanding how AI works – knowing how LLMs operate will help users calibrate how much they should (mis)trust the output.

- Deciding when to use AI (and when not to) – AI is just another tool. In some circumstances users will get better results than a web-based search engine, but in other circumstances the reverse may be true. There are also moments when it may be unethical to use AI without disclosing the help.

- Valuing AI – a dispositional change such as this one is often overshadowed by outcomes favored by faculty on the cognitive side, yet true fluency with AI – especially the AI of the future – will require a favorable disposition to using AI. Thus, we owe it to students to recognize AI’s value.

- Applying effective prompt engineering methods – as the phrase goes, “garbage in, garbage out” applies when it comes to the kind of output AI creates. Good prompts give better results than lazy or ineffective prompts. Writing effective prompts is likely to remain a tool-specific skill, with different AI interfaces needing to be learned separately.

- Evaluating AI output – even today’s advanced AI tools can create hallucinations or contain factual mistakes. Employees in the workplace of the future – and thus our students today – need expertise in order to know how trustworthy the output is, and they need practice in fixing/finalizing the output, as this is surely how workplaces will use AI.

- Adding human value – things that can be automated by AI will, in fact, eventually become fully automated. But there will always be a need for human involvement for elements such as judgment, creativity, or emotional intelligence. Our students need to hone the skill of constantly seeking how humans add value to AI output. This includes sensing where (or when) the output could use human input, extrapolation, or interpretation, and then creating effective examples of them. Since this will be context-dependent, it’s not a single skill needed so much as a set of tools that enable our alumni to flourish alongside AI.

- Displaying digital adaptability – today’s AI tools will evolve, or may be replaced by completely different AI tools. Students and faculty need to be prepared for a lifetime of changing AI landscapes. They will need the mental dexterity and agility to accept these changes as inevitable, and the disposition to not fight against these tidal forces. The learning about AI, in other words, should be expected to last a lifetime.

“60+ ChatGPT Assignments to Use in Your Classroom Today”

The Faculty Center staff assembled this open-source book to give you ideas about how to actually use AI in your assignments. It is free for anyone to use, and may be shared with others both inside and outside of UCF.

“Teach with AI” Conference

UCF’s Faculty Center and Center for Distributed Learning are co-hosts of the “Teach with AI” annual conference. This is a national sharing conference that uses short-format presentations and open forums to focus on the sharing of classroom practices by front-line faculty and administrators, rather than research about AI. Although this conference is not free for UCF faculty and staff, we hold separate internal events about AI that are free for UCF stakeholders.

AI Fundamentals for Educators Course

Interested in diving deeper into using AI, not just for teaching but also in your own research? Join the Faculty Center for this 6-week course! Held face to face on the Orlando campus, this course includes topics such as:

- LLMs (explore the differences among ChatGPT, Bard/Gemini, CoPilot, and Claude), the art of prompt engineering, and how to incorporate these tools into lesson planning, assignments, and assessments.

- Image, audio, and video generation tools and how to create interactive audio and video experiences using various GenAI tools while meeting digital accessibility requirements.

- Assignment and assessment alterations to include—or combat—the use of GenAI tools in student work.

- Interactive teaching tools for face-to-face AND online courses.

- AI tools that assist students—and faculty—with discipline-specific academic papers and research.

- Teaching AI fluency and ethics to students.

Registration details are on our “AI Fundamentals page.”

Asynchronous Training Module on AI

Looking for a deeper dive into using AI in your teaching and research, but need a self-paced online option? We’ve got that too! Head to https://webcourses.ucf.edu/enroll/W7F47B to self-enroll in this Webcourse.

Repository of AI Tools

There are several repositories that attempt to catalog all AI tools (futurepedia.io and theresanaiforthat stand out in particular), but we’ve been curating a smaller, more targeted list here.

AI Glossary

- Canva – a “freemium” online image creating/editing tool that added AI-image generation in 2023

- ChatGPT – the text-generating AI created by OpenAI

- Claude – the text-generating AI created by Anthropic (a company founded by ex-employees of OpenAI)

- CoPilot – a UCF-specific instance of Bing Chat, using UCF logins and keeping data local (note: confusingly, this name is ALSO used by Microsoft for AI embedded in Microsoft Office products, but UCF does not purchase this subscription)

- DALL-E – the image-generating AI created by OpenAI

- Gemini – an LLM from Google (formerly known as Bard)

- Generative AI – a type of AI that “generates” an output, such as text or images. Large language models like ChatGPT are generative AI.

- Grok – the generative AI product launched by Elon Musk

- Khanmigo – Khan Academy’s GPT-powered AI, which will be integrated into Canvas/Webcourses (timeline uncertain)

- LLM (Large Language Model) – a type of generative AI software trained on large collections of text to predict the next likely word in a sequence, given the task/question it’s been given. Advanced models have excellent “perplexity” (plausibility in the word choice) and “burstiness” (variation of the sentences).

- Midjourney – an industry-leading text-to-image AI solution (for profit)

- OpenAI – the company that created ChatGPT and DALL-E

- Sora – a text-to-video generative AI from OpenAI

Using AI ethically in writing assignments

The use of generative artificial intelligence in writing isn’t an either/or proposition. Rather, think of a continuum in which AI can be used at nearly any point to inspire, ideate, structure, and format writing. It can also help with research, feedback, summarization, and creation. You may also choose not to use any AI tools. This handout is intended to help you decide.

A starting point

Many instructors fear that students will use chatbots to complete assignments, bypassing the thinking and intellectual struggle involved in shaping and refining ideas and arguments. That’s a valid concern, and it offers a starting point for discussion:

Turning in unedited AI-generated work as one’s own creation is academic misconduct.

Most instructors agree on that point. After that, the view of AI becomes murkier. AI is already ubiquitous, and its integrations and abilities will only grow in the coming years. Students in grade school and high school are also using generative AI, and those students will arrive at college with expectations to do the same. So how do we respond?

Writing as process and product

We often think of writing as a product that demonstrates students’ understanding and abilities. It can serve that role, especially in upper-level classes. In most classes, though, we don’t expect perfection. Rather, we want students to learn the process of writing. Even as students gain experience and our expectations for writing quality rise, we don’t expect them to work in a vacuum. They receive feedback from instructors, classmates, friends, and others. They get help from the writing center. They work with librarians. They integrate the style and thinking of sources they draw on. That’s important because thinking about writing as a process involving many types of collaboration helps us consider how generative AI might fit in.

Generative AI as a writing assistant

We think students can learn to use generative AI effectively and ethically. Again, rather than thinking of writing as an isolated activity, think of it as a process that engages sources, ideas, tools, data, and other people in various ways. Generative AI is simply another point of engagement in that process. Here’s what that might look like at various points:

Early in the process

- Generating ideas. Most students struggle to identify appropriate topics for their writing. Generative AI can offer ideas and provide feedback on students’ ideas.

- Narrowing the scope of a topic. Most ideas start off too broad, and students often need help in narrowing the scope of writing projects. Instructors and peers already do that. Generative AI becomes just another voice in that process.

- Finding initial sources. Bing and Bard can help students find sources early in the writing process. Specialty tools like Semantic Scholar, Elicit, Prophy, and Dimensions can provide more focused searches, depending on the topic.

- Finding connections among ideas. Research Rabbit, Aria (a plugin for Zotero), and similar tools can create concept maps of literature, showing how ideas and research are connected. Elicit identifies patterns across papers and points to related research. ChatGPT Pro can also find patterns in written work. When used with a plugin, it can also create word clouds and other visualizations.

- Gathering and formatting references. Software like EndNote and Zotero allows students to store and organize sources. It also saves time by formatting sources in whatever style the writer needs.

- Summarizing others’ work. ChatGPT, Bing, and specialty AI tools like Elicit do a good job of summarizing research papers and webpages, helping students decide whether a source is worth additional time.

- Interrogating research papers or websites. This is a new approach AI has made possible. An AI tool analyzes a paper (often a PDF) or a website. Researchers can then ask questions about the content, ideas, approach, or other aspects of a work. Some tools can also provide additional sources related to a paper.

- Analyzing data. Many of the same tools that can summarize digital writing can also create narratives from data, offering new ways of bringing data into written work.

- Finding hidden patterns. Students can have an AI tool analyze their notes or ideas for research, asking it to identify patterns, connections, or structure they might not have seen on their own.

- Outlining. ChatGPT, Bing, and other tools do an excellent job of outlining potential articles or papers. That can help students organize their thoughts throughout the research and writing process. Each area of an outline provides another entry point for diving deeper into ideas and potential writing topics.

- Creating an introduction. Many writers struggle with opening sentences or paragraphs. Generative AI can provide a draft of any part of a paper, giving students a boost as they bring their ideas together.

Deeper into the process

- Thinking critically. Creating good prompts for generative AI involves considerable critical thinking. This isn’t a process of asking a single question and receiving perfectly written work. It involves trial and error, clarification, and repeated follow-ups. Even after that, students will need to edit, add sources, and check the work for AI-generated fabrication or errors.

- Creating titles or section headers for papers. This is an important but often overlooked part of the writing process, and the headings that generative AI produces can help students spot potential problems in focus.

- Helping with transitions and endings. These are areas where students often struggle or get stuck, just as they do with openings.

- Getting feedback on details. Students might ask an AI tool to provide advice on improving the structure, flow, grammar, and other elements of a paper.

- Getting feedback on a draft. Instructors already provide feedback on drafts of assignments and often have students work with peers to do the same. Students may also seek the help of the writing center or friends. Generative AI can also provide feedback, helping students think through large and small elements of a paper. We don’t see that as a substitute for any other part of the writing process. Rather, it is an addition.

Generative AI has many weaknesses. It is programmed to generate answers whether it has appropriate answers or not. Students can’t blame AI for errors, and they are still accountable for everything they turn in. Instructors need to help them understand both the strengths and the weaknesses of using generative AI, including the importance of checking all details.

A range of AI use

A better understanding of the AI continuum provides important context, but it doesn’t address a question most instructors are asking: How much is too much? There’s no easy answer to that. Different disciplines may approach the use of generative AI in very different ways. Similarly, instructors may set different boundaries for different types of assignments or levels of students. Here are some ways to think through an approach:

- Discuss ethics. What are the ethical foundations of your field? What principles should guide students? Do students know and understand those principles? What happens to professionals who violate those principles?

- Be honest. Most professions, including academia, are trying to work through the very issues instructors are facing. We are all experimenting and trying to define boundaries even as the tools and circumstances change. Students need to understand those challenges. We should also bring students into conversations about appropriate use of generative AI. Many of them have more experience with AI than instructors do, and adding their voices to discussions will make it more likely that students will follow whatever guidelines we set.

- Set boundaries. You may ask students to avoid, for instance, AI for creating particular assignments or for generating complete drafts of assignments. (Again, this may vary by discipline.) Just make sure students understand why you want them to avoid AI use and how forgoing AI assistance will help them develop skills they need to succeed in future classes and in the professional world.

- Review your assignments. If AI can easily complete them, students may not see the value or purpose. How can you make assignments more authentic, focusing on real-world problems and issues students are likely to see in the workplace?

- Scaffold assignments. Having students create assignments in smaller increments reduces pressure and leads to better overall work.

- Include reflection. Have students think of AI as a method and have them reflect on their use of AI. This might be a paragraph or two at the end of a written assignment in which they explain what AI tools they have used, how they have used those tools, and what AI ultimately contributed to their written work. Also have them reflect on the quality of the material AI provided and on what they learned from using the AI tools. This type of reflection helps students develop metacognitive skills (thinking about their own thinking). It also provides important information to instructors about how students are approaching assignments and what additional revisions they might need to make.

- Engage with the Writing Center, KU Libraries, and other campus services about AI, information literacy, and the writing process. Talk with colleagues and watch for advice from disciplinary societies. This isn’t something you have to approach alone.

Generative AI is evolving rapidly. Large numbers of tools have incorporated it, and new tools are proliferating. Step back and consider how AI has already become part of academic life:

- AI-augmented tools like spell-check and auto-correct brought grumbles, but there was no panic.

- Grammar checkers followed, offering advice on word choice, sentence construction, and other aspects of writing. Again, few people complained.

- Citation software has evolved along with word-processing programs, easing the collection, organization, and formatting of sources.

- Search engines used AI long before generative AI burst into the public consciousness.

As novel as generative AI may seem, it offers nothing new in the way of cheating. Students could already buy papers on the internet, copy and paste from an online site, have someone else create a paper for them, or tweak a paper from the files of a fraternity or a sorority. So AI isn’t the problem. AI has simply forced instructors to deal with long-known issues in academic structure, grading, distrust, and purpose. That is beyond the scope of this handout, other than some final questions for thought: