1. The main approaches to the part of speech classification

Related Papers

Kresta Niña Manipol

Introduction. In every language we find groups of words that share grammatical characteristics. These groups are called "parts of speech," and we examine them in this chapter and the next. Though many writers on language refer to "the eight parts of speech" (e.g., Weaver 1996: 254), the actual number of parts of speech we need to recognize in a language is determined by how fine-grained our analysis of the language is: the more fine-grained, the greater the number of parts of speech that will be distinguished. In this book we distinguish nouns, verbs, adjectives, and adverbs (the major parts of speech), and pronouns, wh-words, articles, auxiliary verbs, prepositions, intensifiers, conjunctions, and particles (the minor parts of speech). Every literate person needs at least a minimal understanding of parts of speech in order to be able to use such commonplace items as dictionaries and thesauruses, which classify words according to their parts (and sub-parts) of speech. For example, the American Heritage Dictionary (4th edition, p. xxxi) distinguishes adjectives, adverbs, conjunctions, definite articles, indefinite articles, interjections, nouns, prepositions, pronouns, and verbs. It also distinguishes transitive, intransitive, and auxiliary verbs. Writers and writing teachers need to know about parts of speech in order to be able to use and teach about style manuals and school grammars. Regardless of their discipline, teachers need this information to be able to help students expand the contexts in which they can effectively communicate. A part of speech is a set of words with some grammatical characteristic(s) in common, and each part of speech differs in grammatical characteristics from every other part of speech, e.g., nouns have different properties from verbs, which have different properties from adjectives, and so on. Part of speech analysis depends on knowing (or discovering) the distinguishing properties of the various word sets. 
This chapter describes several kinds of properties that separate the major parts of speech from each other and de

Mohamad Nizar

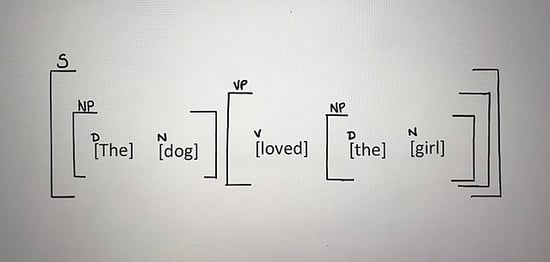

For Bloomfield (1933), syntax is the study of free forms that consist entirely of free forms. Later scholars criticized this view and instead took form-class membership (and hence syntactic equivalence), best expressed in terms of substitution, as the criterion. A form class is a set of forms (simple or complex, free or bound), any one of which can be replaced by another in a particular construction or set of constructions throughout the sentences of a language. A word class, or part of speech, is a group of words in a language, defined by categories of form, function, and meaning in the grammatical system. In the previous article, the author explained that the set of lexemes is not one large homogeneous collection but consists of the categories of nouns, verbs, adjectives, prepositions, inflections, determiners, comparative adverbs, and complements, which Newson et al. (2004: 5-6) call word categories. Speech consists of structured categories of words, and syntax studies words in the context of phrases, clauses, and sentences within the language system. Newson et al. (2004: 6-10) explain that word categories are divided into two types: thematic and functional. Nouns, verbs, adjectives, and prepositions are thematic categories; inflections, determiners, comparative adverbs, and complements are functional categories. The investigation starts with nouns and noun groups, the most common elements in sentence construction, which can be structured in complex ways and carry a great deal of information. Observing words closely is demanding work, and it matters for linguistic researchers, who are responsible for maintaining the rules of English that linguistic theories refer to. Such research is ultimately aimed at the teaching of English. Teachers are responsible for guiding their students in how sentences behave in English, whether written or spoken, as a mother tongue or a foreign language. 
Teachers are also responsible for guiding students in how to translate other languages into English accurately. This is a current concern of linguistic studies of English. This article presents only the views of linguists on nouns, together with the examples they provide. It is intended to encourage researchers to explore further cases of nouns, which offer a wide field for syntactic research in English. For that reason, this article has been written only as an introduction to word analysis, to help linguistics students and novice researchers in English syntax. Any weaknesses in this article are the responsibility of the author and are open to criticism.

Parts of speech, lexical categories, and word classes in morphology.

- Jaklin Kornfilt, Department of Languages, Literatures, and Linguistics, Syracuse University

- https://doi.org/10.1093/acrefore/9780199384655.013.606

- Published online: 30 January 2020

The term “part of speech” is a traditional one that has been in use since grammars of Classical Greek (e.g., Dionysius Thrax) and Latin were compiled; for all practical purposes, it is synonymous with the term “word class.” The term refers to a system of word classes, whereby class membership depends on similar syntactic distribution and morphological similarity (as well as, in a limited fashion, on similarity in meaning, a point to which we shall return). By “morphological similarity,” reference is made to functional morphemes that are part of words belonging to the same word class. Some examples for both criteria follow: The fact that in English, nouns can be preceded by a determiner such as an article (e.g., a book, the apple) illustrates syntactic distribution. Morphological similarity among members of a given word class can be illustrated by the many adverbs in English that are derived by attaching the suffix -ly, that is, a functional morpheme, to an adjective (quick, quick-ly). A morphological test for nouns in English and many other languages is whether they can bear plural morphemes. Verbs can bear morphology for tense, aspect, and mood, as well as voice morphemes such as passive, causative, or reflexive, that is, morphemes that alter the argument structure of the verbal root. Adjectives typically co-occur with either bound or free morphemes that function as comparative and superlative markers. Syntactically, they modify nouns, while adverbs modify word classes that are not nouns, for example, verbs and adjectives.

Most traditional and descriptive approaches to parts of speech draw a distinction between major and minor word classes. The four parts of speech just mentioned—nouns, verbs, adjectives, and adverbs—constitute the major word classes, while a number of others, for example, adpositions, pronouns, conjunctions, determiners, and interjections, make up the minor word classes. Under some approaches, pronouns are included in the class of nouns, as a subclass.

While the minor classes are probably not universal, (most of) the major classes are. It is largely assumed that nouns, verbs, and probably also adjectives are universal parts of speech. Adverbs might not constitute a universal word class.

There are technical terms that are equivalents to the terms of major versus minor word class, such as content versus function words, lexical versus functional categories, and open versus closed classes, respectively. However, these correspondences might not always be one-to-one.

More recent approaches to word classes don’t recognize adverbs as belonging to the major classes; instead, adpositions are candidates for this status under some of these accounts, for example, as in Jackendoff (1977). Under some other theoretical accounts, such as Chomsky (1981) and Baker (2003), only the three word classes noun, verb, and adjective are major or lexical categories. All of the accounts just mentioned are based on binary distinctive features; however, the features used differ from each other. While Chomsky uses the two category features [N] and [V], Jackendoff uses the features [Subj] and [Obj], among others, focusing on the ability of nouns, verbs, adjectives, and adpositions to take (directly, without the help of other elements) subjects (thus characterizing verbs and nouns) or objects (thus characterizing verbs and adpositions). Baker (2003), too, uses the property of taking subjects, but attributes it only to verbs. In his approach, the distinctive feature of bearing a referential index characterizes nouns, and only those. Adjectives are characterized by the absence of both of these distinctive features.
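The three feature systems just described can be laid out as small lookup tables, which makes it easier to see which categories each feature groups together. The following is only an illustrative sketch: the dictionary and function names are invented for this example, the [Subj]/[Obj] and Baker-style values are read off the paragraph above, and the [N]/[V] values follow the usual textbook presentation of Chomsky's system rather than anything stated in this text.

```python
# Illustrative encoding of the feature systems described above.
# All names here (CHOMSKY, JACKENDOFF, BAKER, categories_with) are
# hypothetical and exist only for this sketch.

# [N]/[V] values: assumed from the standard textbook presentation.
CHOMSKY = {
    "noun":       {"N": True,  "V": False},
    "verb":       {"N": False, "V": True},
    "adjective":  {"N": True,  "V": True},
    "adposition": {"N": False, "V": False},
}

# [Subj]/[Obj] values: subjects characterize verbs and nouns,
# objects characterize verbs and adpositions (as stated above).
JACKENDOFF = {
    "noun":       {"Subj": True,  "Obj": False},
    "verb":       {"Subj": True,  "Obj": True},
    "adjective":  {"Subj": False, "Obj": False},
    "adposition": {"Subj": False, "Obj": True},
}

# Baker-style: only verbs take subjects, only nouns bear a referential
# index, and adjectives have neither property.
BAKER = {
    "noun":      {"takes_subject": False, "referential_index": True},
    "verb":      {"takes_subject": True,  "referential_index": False},
    "adjective": {"takes_subject": False, "referential_index": False},
}


def categories_with(system, feature, value=True):
    """Return the categories in `system` whose `feature` equals `value`."""
    return sorted(cat for cat, feats in system.items() if feats[feature] == value)


print(categories_with(JACKENDOFF, "Subj"))
print(categories_with(BAKER, "referential_index"))
```

Querying the tables this way shows how the same inventory of categories is partitioned differently depending on which features an account adopts.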

Another important issue addressed by theoretical studies on lexical categories is whether those categories are formed pre-syntactically, in a morphological component of the lexicon, or whether they are constructed in the syntax or post-syntactically. Jackendoff (1977) is an example of a lexicalist approach to lexical categories, while Marantz (1997), and Borer (2003, 2005a, 2005b, 2013) represent an account where the roots of words are category-neutral, and where their membership to a particular lexical category is determined by their local syntactic context. Baker (2003) offers an account that combines properties of both approaches: words are built in the syntax and not pre-syntactically; however, roots do have category features that are inherent to them.

There are empirical phenomena, such as phrasal affixation, phrasal compounding, and suspended affixation, that strongly suggest that a post-syntactic morphological component should be allowed, whereby “syntax feeds morphology.”

- parts of speech

- word classes

- lexical categories

- functional categories

- closed versus open word classes

- features of lexical categories

- pre-syntactic word formation

- post-syntactic word formation

Printed from Oxford Research Encyclopedias, Linguistics. Under the terms of the licence agreement, an individual user may print out a single article for personal use (for details see Privacy Policy and Legal Notice).

date: 18 April 2024

The 8 Parts of Speech | Chart, Definition & Examples

A part of speech (also called a word class) is a category that describes the role a word plays in a sentence. Understanding the different parts of speech can help you analyze how words function in a sentence and improve your writing.

The parts of speech are classified differently in different grammars, but most traditional grammars list eight parts of speech in English: nouns, pronouns, verbs, adjectives, adverbs, prepositions, conjunctions, and interjections. Some modern grammars add others, such as determiners and articles.

Many words can function as different parts of speech depending on how they are used. For example, “laugh” can be a noun (e.g., “I like your laugh”) or a verb (e.g., “don’t laugh”).
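One way to picture this is a lexicon in which each word maps to a set of possible parts of speech, with the neighbouring word used to choose among them. The sketch below is a toy illustration only: the lexicon entries come from examples in this article, while the cue-word lists and the function names are assumptions made for the example, not a real tagging method.

```python
# Toy lexicon: each word maps to the parts of speech it can realize.
# Entries are taken from examples in this article.
LEXICON = {
    "laugh": {"noun", "verb"},
    "in": {"preposition", "noun", "adjective", "adverb"},
}

# Hypothetical cue words: a preceding determiner suggests a noun,
# a preceding "to" or negated auxiliary suggests a verb.
NOUN_CUES = {"a", "an", "the", "my", "your", "his", "her", "our", "their"}
VERB_CUES = {"to", "don't", "can", "will"}


def possible_tags(word):
    """Return every part of speech the toy lexicon lists for `word`."""
    return LEXICON.get(word.lower(), set())


def guess_tag(previous_word, word):
    """Pick 'noun' or 'verb' from the preceding word, or None if undecided."""
    candidates = possible_tags(word)
    # Normalize a typographic apostrophe so "don’t" matches "don't".
    prev = previous_word.lower().replace("\u2019", "'")
    if "noun" in candidates and prev in NOUN_CUES:
        return "noun"
    if "verb" in candidates and prev in VERB_CUES:
        return "verb"
    return None


print(guess_tag("your", "laugh"))   # from "I like your laugh"
print(guess_tag("don't", "laugh"))  # from "don't laugh"
```

Real taggers use far richer context than one preceding word; the point here is only that category is assigned per use, not per word.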

A noun is a word that refers to a person, concept, place, or thing. Nouns can act as the subject of a sentence (i.e., the person or thing performing the action) or as the object of a verb (i.e., the person or thing affected by the action).

There are numerous types of nouns, including common nouns (used to refer to nonspecific people, concepts, places, or things), proper nouns (used to refer to specific people, concepts, places, or things), and collective nouns (used to refer to a group of people or things).

Ella lives in France.

Other types of nouns include countable and uncountable nouns, concrete nouns, abstract nouns, and gerunds.

A pronoun is a word used in place of a noun. Pronouns typically refer back to an antecedent (a previously mentioned noun) and must demonstrate correct pronoun-antecedent agreement. Like nouns, pronouns can refer to people, places, concepts, and things.

There are numerous types of pronouns, including personal pronouns (used in place of the proper name of a person), demonstrative pronouns (used to refer to specific things and indicate their relative position), and interrogative pronouns (used to introduce questions about things, people, and ownership).

That is a horrible painting!

A verb is a word that describes an action (e.g., “jump”), occurrence (e.g., “become”), or state of being (e.g., “exist”). Verbs indicate what the subject of a sentence is doing. Every complete sentence must contain at least one verb.

Verbs can change form depending on subject (e.g., first person singular), tense (e.g., simple past), mood (e.g., interrogative), and voice (e.g., passive voice).

Regular verbs are verbs whose simple past and past participle are formed by adding “-ed” to the end of the word (or “-d” if the word already ends in “e”). Irregular verbs are verbs whose simple past and past participles are formed in some other way.
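The rule for regular verbs is simple enough to write down directly. The sketch below implements only the rule as stated here (add “-ed”, or “-d” after a final “e”); the function name is made up for the example, and it deliberately ignores further spelling adjustments such as consonant doubling (“stop”, “stopped”) or “y” changing to “i” (“try”, “tried”).

```python
def regular_past(verb):
    """Form the simple past / past participle of a regular verb.

    Implements only the rule stated above: add "-d" if the verb
    already ends in "e", otherwise add "-ed".
    """
    return verb + "d" if verb.endswith("e") else verb + "ed"


print(regular_past("check"))
print(regular_past("use"))
```

Irregular verbs such as “sing” cannot be handled by a rule like this and would need to be looked up individually.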

“I’ve already checked twice.”

“I heard that you used to sing.”

Other types of verbs include auxiliary verbs, linking verbs, modal verbs, and phrasal verbs.

An adjective is a word that describes a noun or pronoun. Adjectives can be attributive, appearing before a noun (e.g., “a red hat”), or predicative, appearing after a noun with the use of a linking verb like “to be” (e.g., “the hat is red”).

Adjectives can also have a comparative function. Comparative adjectives compare two or more things. Superlative adjectives describe something as having the most or least of a specific characteristic.

Other types of adjectives include coordinate adjectives, participial adjectives, and denominal adjectives.

An adverb is a word that can modify a verb, adjective, adverb, or sentence. Adverbs are often formed by adding “-ly” to the end of an adjective (e.g., “slow” becomes “slowly”), although not all adverbs have this ending, and not all words with this ending are adverbs.
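Because the “-ly” ending is a useful but imperfect clue, any check based on it needs exception lists in both directions. The following sketch shows the idea; the function name and the two small exception sets are illustrative assumptions, not a complete inventory.

```python
# Words ending in "-ly" that are not adverbs (illustrative, not exhaustive).
LY_NON_ADVERBS = {"friendly", "lovely", "family"}

# Adverbs that lack the "-ly" ending (illustrative, not exhaustive).
NON_LY_ADVERBS = {"fast", "well", "often", "quite"}


def looks_like_adverb(word):
    """Heuristic: trust the exception lists first, then the "-ly" ending."""
    w = word.lower()
    if w in NON_LY_ADVERBS:
        return True
    if w in LY_NON_ADVERBS:
        return False
    return w.endswith("ly")


for word in ["slowly", "friendly", "quite", "slow"]:
    print(word, looks_like_adverb(word))
```

A heuristic like this only flags candidates; whether a word is functioning as an adverb still depends on what it modifies in the sentence.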

There are numerous types of adverbs, including adverbs of manner (used to describe how something occurs), adverbs of degree (used to indicate extent or degree), and adverbs of place (used to describe the location of an action or event).

Talia writes quite quickly.

Other types of adverbs include adverbs of frequency, adverbs of purpose, focusing adverbs, and adverbial phrases.

A preposition is a word (e.g., “at”) or phrase (e.g., “on top of”) used to show the relationship between the different parts of a sentence. Prepositions can be used to indicate aspects such as time, place, and direction.

I left the cup on the kitchen counter.

A conjunction is a word used to connect different parts of a sentence (e.g., words, phrases, or clauses).

The main types of conjunctions are coordinating conjunctions (used to connect items that are grammatically equal), subordinating conjunctions (used to introduce a dependent clause), and correlative conjunctions (used in pairs to join grammatically equal parts of a sentence).

You can choose what movie we watch because I chose the last time.

An interjection is a word or phrase used to express a feeling, give a command, or greet someone. Interjections are a grammatically independent part of speech, so they can often be excluded from a sentence without affecting the meaning.

Types of interjections include volitive interjections (used to make a demand or request), emotive interjections (used to express a feeling or reaction), cognitive interjections (used to indicate thoughts), and greetings and parting words (used at the beginning and end of a conversation).

Ouch! I hurt my arm.

I’m, um, not sure.

The traditional classification of English words into eight parts of speech is by no means the only one or the objective truth. Grammarians have often divided them into more or fewer classes. Other commonly mentioned parts of speech include determiners and articles.

- Determiners

A determiner is a word that describes a noun by indicating quantity, possession, or relative position.

Common types of determiners include demonstrative determiners (used to indicate the relative position of a noun), possessive determiners (used to describe ownership), and quantifiers (used to indicate the quantity of a noun).

My brother is selling his old car.

Other types of determiners include distributive determiners, determiners of difference, and numbers.

An article is a word that modifies a noun by indicating whether it is specific or general.

- The definite article the is used to refer to a specific version of a noun. The can be used with all countable and uncountable nouns (e.g., “the door,” “the energy,” “the mountains”).

- The indefinite articles a and an refer to general or unspecific nouns. The indefinite articles can only be used with singular countable nouns (e.g., “a poster,” “an engine”).
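The two rules above amount to a small compatibility check between an article and the kind of noun it precedes. The sketch below encodes just those two rules; the function name and its boolean parameters are hypothetical, and it does not attempt to choose between “a” and “an”.

```python
def article_allowed(article, countable, plural):
    """Check an article against the two rules stated above.

    "the" is accepted with any noun; "a"/"an" only with a singular
    countable noun. `countable` and `plural` describe the noun.
    """
    article = article.lower()
    if article == "the":
        return True
    if article in ("a", "an"):
        return countable and not plural
    raise ValueError(f"not an article: {article!r}")


print(article_allowed("the", countable=False, plural=False))  # "the energy"
print(article_allowed("a", countable=True, plural=False))     # "a poster"
print(article_allowed("an", countable=False, plural=False))   # "an energy"
```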

There’s a concert this weekend.

A is an indefinite article (along with an). While articles can be classed as their own part of speech, they’re also considered a type of determiner.

The indefinite articles are used to introduce nonspecific countable nouns (e.g., “a dog,” “an island”).

In is primarily classed as a preposition, but it can be classed as various other parts of speech, depending on how it is used:

- Preposition (e.g., “in the field”)

- Noun (e.g., “I have an in with that company”)

- Adjective (e.g., “Tim is part of the in crowd”)

- Adverb (e.g., “Will you be in this evening?”)

As a part of speech, and is classed as a conjunction. Specifically, it’s a coordinating conjunction.

And can be used to connect grammatically equal parts of a sentence, such as two nouns (e.g., “a cup and plate”), or two adjectives (e.g., “strong and smart”). And can also be used to connect phrases and clauses.

Parts of Speech: The Ultimate Guide for Students and Teachers

What are Parts of Speech?

Just as a skilled bricklayer must get to grips with the trowel, brick hammer, tape measure, and spirit level, the student-writer must develop a thorough understanding of the tools of their trade too.

In English, words can be categorized according to their common syntactic function in a sentence, i.e. the job they perform.

We call these different categories Parts of Speech. Understanding the various parts of speech and how they work has several compelling benefits for our students.

Without first acquiring a firm grasp of the various parts of speech, students will struggle to fully comprehend how language works. This is essential not only for the development of their reading comprehension but their writing skills too.

Parts of speech are the core building blocks of grammar. To understand how a language works at a sentence and a whole-text level, we must first master parts of speech.

In English, we can identify eight of these individual parts of speech, and these will provide the focus for our Complete Guide to Parts of Speech.

Often the first word a child speaks will be a noun, for example, Mum, Dad, cow, dog, etc.

Nouns are naming words, and, as most school kids can recite, they are the names of people, places, and things. But what isn’t as widely understood by many of our students is that nouns can be further classified into more specific categories.

These categories are:

- Common nouns

- Proper nouns

- Concrete nouns

- Abstract nouns

- Collective nouns

- Countable nouns

- Uncountable nouns

All nouns can be classified as either common or proper.

Common nouns are the general names of people, places, and things. They are groups or classes on their own, rather than specific types of people, places, or things such as we find in proper nouns.

Common nouns can be further classified as abstract or concrete – more on this shortly!

Some examples of common nouns include:

People: teacher, author, engineer, artist, singer.

Places: country, city, town, house, garden.

Things: language, trophy, magazine, movie, book.

Proper nouns are the specific names for people, places, and things. Unlike common nouns, which are lowercase unless they begin a sentence, proper nouns are always capitalized. This makes them easy to identify in a text.

Where possible, using proper nouns in place of common nouns helps bring precision to a student’s writing.

Some examples of proper nouns include:

People: Mrs Casey, J.K. Rowling, Nikola Tesla, Pablo Picasso, Billie Eilish.

Places: Australia, San Francisco, Llandovery, The White House, Gardens of Versailles.

Things: Bulgarian, The World Cup, Rolling Stone, The Lion King, The Hunger Games.

Nouns Teaching Activity: Common vs Proper Nouns

- Provide students with books suitable for their current reading level.

- Instruct students to go through a page or two and identify all the nouns.

- Ask students to sort these nouns into two lists according to whether they are common nouns or proper nouns.
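The sorting step in this activity can be sketched in a few lines of code, using capitalization as the test. This is only an illustration under a stated assumption: the nouns have already been identified and are written as they would appear mid-sentence, so a capital letter is not just the start of a sentence. The function name is invented for the example.

```python
def sort_nouns(nouns):
    """Split already-identified nouns into (common, proper) lists.

    Assumes each noun is written as it appears mid-sentence, so a
    leading capital letter signals a proper noun.
    """
    common, proper = [], []
    for noun in nouns:
        if noun[:1].isupper():
            proper.append(noun)
        else:
            common.append(noun)
    return common, proper


common, proper = sort_nouns(["teacher", "Australia", "city", "Nikola Tesla"])
print("Common:", common)
print("Proper:", proper)
```

Students doing the activity by hand still need to check sentence-initial nouns themselves, since capitalization there says nothing about the noun type.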

As mentioned, all common and proper nouns can be further classified as either concrete or abstract.

A concrete noun is any noun that can be experienced through one of the five senses. In other words, if you can see, smell, hear, taste, or touch it, then it’s a concrete noun.

Some examples of concrete nouns include:

Abstract nouns refer to those things that can’t be experienced or identified through the five senses.

They are not physical things we can perceive but intangible concepts and ideas, qualities and states.

Some examples of abstract nouns include:

Nouns Teaching Activity: Concrete Vs. Abstract Nouns

- Provide students with a book suitable for their current reading level.

- Instruct students to go through a page or two and identify all the nouns (the lists from Practice Activity #1 may be suitable).

- This time, ask students to sort these nouns into two lists according to whether they are concrete or abstract nouns.

A collective noun is the name of a group of people or things. That is, a collective noun always refers to more than one of something.

Some examples of collective nouns include:

People: a board of directors, a team of football players, a cast of actors, a band of musicians, a class of students.

Places: a range of mountains, a suite of rooms, a union of states, a chain of islands.

Things: a bale of hay, a constellation of stars, a bag of sweets, a school of fish, a flock of seagulls.

Countable nouns are nouns that refer to things that can be counted. They come in two flavors: singular and plural.

In their singular form, countable nouns are often preceded by an article, e.g., a, an, or the.

In their plural form, countable nouns are often preceded by a number. They can also be used in conjunction with quantifiers such as a few and many.

Some examples of countable nouns include:

COUNTABLE NOUNS EXAMPLES

Also known as mass nouns, uncountable nouns are, as their name suggests, impossible to count. Abstract ideas such as bravery and compassion are uncountable, as are things like liquid and bread.

These types of nouns are always treated in the singular and usually do not have a plural form.

They can stand alone or be used in conjunction with words and phrases such as any, some, a little, a lot of, and much.

Some examples of uncountable nouns include:

UNCOUNTABLE NOUNS EXAMPLES

Nouns Teaching Activity: How Many Can You List?

- Organize students into small groups to work collaboratively.

- Challenge students to list as many countable and uncountable nouns as they can in ten minutes.

- To make things more challenging, stipulate that there must be an uncountable noun and a countable noun to gain a point.

- The winning group is the one that scores the most points.

Without a verb, there is no sentence! Verbs are the words we use to represent both internal and external actions or states of being. Without a verb, nothing happens.

There are many different types of verbs. Here, we will look at five important types, organised according to the jobs they perform:

- Dynamic verbs

- Stative verbs

- Transitive verbs

- Intransitive verbs

- Auxiliary verbs

Each verb can be classified as being either an action or a stative verb.

Dynamic or action verbs describe the physical activity performed by the subject of a sentence. This type of verb is usually the first we learn as children.

For example, run, hit, throw, hide, eat, sleep, watch, write, etc. are all dynamic verbs, as is any action performed by the body.

Let’s see a few examples in sentences:

- I jogged around the track three times.

- She will dance as if her life depends on it.

- She took a candy from the bag, unwrapped it, and popped it into her mouth.

If a verb doesn’t describe a physical activity, then it is a stative verb.

Stative verbs refer to states of being, conditions, or mental processes. Generally, we can classify stative verbs into four types:

- Senses

- Emotions/Thoughts

- Being

- Possession

Some examples of stative verbs include:

Senses: hurt, see, smell, taste, hear, etc.

Emotions: love, doubt, desire, remember, believe, etc.

Being: be, have, require, involve, contain, etc.

Possession: want, include, own, have, belong, etc.

Here are some stative verbs at work in sentences:

- That is one thing we can agree on.

- I remember my first day at school like it was yesterday.

- The university requires students to score at least 80%.

- She has only three remaining.

Some verbs, such as be, have, look, and see, can fit into more than one category, e.g.:

- She looks beautiful. (Stative)

- I look through the telescope. (Dynamic)

Each action or stative verb can also be further classified as transitive or intransitive.

A transitive verb takes a direct object after it. The object is the noun, noun phrase, or pronoun that has something done to it by the subject of the sentence.

We see this in the most straightforward English sentences, i.e., the Subject-Verb-Object or SVO sentence.

Here are three examples to illustrate. In each sentence, the transitive verb (answered, studies, loves) follows the subject and takes a direct object.

- The teacher answered the student’s questions.

- She studies languages at university.

- My friend loves cabbage.

Most sentences in English employ transitive verbs.

An intransitive verb does not take a direct object after it. It is important to note that only nouns, noun phrases, and pronouns can be classed as direct objects.

Here are some examples of intransitive verbs – notice how none of these sentences has direct objects after their verbs.

- Jane’s health improved.

- The car ran smoothly.

- The school opens at 9 o’clock.

Auxiliary verbs, also known as ‘helping’ verbs, work with other verbs to affect the meaning of a sentence. They do this by combining with a main verb to alter the sentence’s tense, mood, or voice.

Auxiliary verbs frequently combine with not to form the negative.

There are relatively few auxiliary verbs in English. Here is a list of the main ones:

- be (am, are, is, was, were, being)

- do (did, does, doing)

- have (had, has, having)

Here are some examples of auxiliary verbs (is, must, may) in action alongside a main verb (working, eat, come).

- She is working as hard as she can.

- You must not eat dinner until after five o’clock.

- The parents may come to the graduation ceremony.

The Subject-Auxiliary Inversion Test

To test whether or not a verb is an auxiliary verb, you can use the Subject-Auxiliary Inversion Test.

- Take the sentence, e.g.:

She is working as hard as she can.

- Now, invert the subject and the suspected auxiliary verb to see if it creates a question.

Is she working as hard as she can?

- Can it take ‘not’ in the negative form?

She is not working as hard as she can.

- If the answer to both of these questions is yes, you have an auxiliary verb. If not, you have a full verb.
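The two probe sentences in this test can be generated mechanically, leaving the reader to judge whether each one is grammatical. The sketch below does exactly that for a simple sentence that has already been split into subject, suspected auxiliary, and remainder; the function names and this three-part input format are assumptions made for illustration.

```python
# Subject pronouns that should be lowercased once they are no longer
# sentence-initial ("I" and proper names keep their capital letter).
_LOWERCASE_PRONOUNS = {"he", "she", "it", "we", "they", "you"}


def _mid_sentence(subject):
    """Return the subject as it should appear when not sentence-initial."""
    return subject.lower() if subject.lower() in _LOWERCASE_PRONOUNS else subject


def inversion_test(subject, verb, rest):
    """Build the inverted question and the negated sentence for the test.

    The function only constructs the two probes; deciding whether they
    are acceptable English is left to the reader.
    """
    question = f"{verb.capitalize()} {_mid_sentence(subject)} {rest}?"
    negative = f"{subject} {verb} not {rest}."
    return question, negative


question, negative = inversion_test("She", "is", "working as hard as she can")
print(question)
print(negative)
```

If both generated sentences sound acceptable, the verb passes the test; a full verb such as “works” would produce probes like “Works she hard?” that a fluent speaker rejects.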

Verbs Teaching Activity: Identify the Verbs

- Instruct students to go through a text of appropriate length (e.g., a paragraph or a page) and compile a list of verbs.

- In groups, students should then discuss and categorize each verb according to whether they think they are dynamic or stative, transitive or intransitive, and/or auxiliary verbs.

The job of an adjective is to modify a noun or a pronoun. It does this by describing, quantifying, or identifying the noun or pronoun. Adjectives help to make writing more interesting and specific. Usually, the adjective is placed before the word it modifies.

As with other parts of speech, not all adjectives are the same. There are many different types of adjectives and, in this article, we will look at:

- Descriptive Adjectives

- Degrees of Adjectives

- Quantitative Adjectives

- Demonstrative Adjectives

- Possessive Adjectives

- Interrogative Adjectives

- Proper Adjectives

Descriptive adjectives are what most students think of first when asked what an adjective is. Descriptive adjectives tell us something about the quality of the noun or pronoun in question. For this reason, they are sometimes referred to as qualitative adjectives.

Some examples of this type of adjective include:

- hard-working

In sentences, they look like this:

- The pumpkin was enormous.

- It was an impressive feat of athleticism.

- Undoubtedly, this was an exquisite vase.

- She faced some tough competition.

Degrees of Adjectives

Descriptive adjectives have three degrees, which express varying levels of intensity and allow us to compare one thing to another. These degrees are referred to as positive, comparative, and superlative.

The positive degree is the regular form of the descriptive adjective when no comparison is being made, e.g., strong.

The comparative degree is used to compare two people, places, or things, e.g., stronger.

There are several ways to form the comparative, including:

- Adding more or less before the adjective

- Adding -er to the end of one-syllable adjectives

- For two-syllable adjectives ending in y, changing the y to an i and adding -er to the end.

The superlative degree is typically used when comparing three or more things to denote the upper or lowermost limit of a quality, e.g., strongest.

There are several ways to form the superlative, including:

- Adding most or least before the adjective

- Adding -est to the end of one-syllable adjectives

- For two-syllable adjectives ending in y, changing the y to an i and adding -est to the end.

There are also some irregular adjectives of degree that follow no discernible pattern and must be learned by heart, e.g., good – better – best.
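For teachers who like to see rules written out mechanically, the formation rules above can be sketched in Python. Everything here is an assumption made for illustration: the function names, the two-entry `IRREGULAR` table, and especially the syllable counter, which is a rough vowel-group heuristic rather than a real phonetic analysis. The sketch also ignores spelling changes such as consonant doubling (big, bigger).

```python
import re

# Irregular forms have to be memorised; this table is a tiny illustrative sample.
IRREGULAR = {"good": ("better", "best"), "bad": ("worse", "worst")}


def count_syllables(word):
    """Rough heuristic (an assumption): count groups of consecutive vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a final 'e' (as in "nice") as silent.
    if word.endswith("e") and count > 1:
        count -= 1
    return max(count, 1)


def degrees(adjective):
    """Return (comparative, superlative) following the rules listed above."""
    adj = adjective.lower()
    if adj in IRREGULAR:
        return IRREGULAR[adj]
    syllables = count_syllables(adj)
    if syllables == 1:
        # One-syllable adjectives take -er / -est (dropping a final silent e).
        stem = adj[:-1] if adj.endswith("e") else adj
        return stem + "er", stem + "est"
    if syllables == 2 and adj.endswith("y"):
        # Two-syllable adjectives ending in y: change y to i, then add -er / -est.
        stem = adj[:-1] + "i"
        return stem + "er", stem + "est"
    # Everything else uses more / most before the adjective.
    return "more " + adj, "most " + adj


for word in ["strong", "pretty", "delicious", "good"]:
    print(word, degrees(word))
```

A lookup table comes first because irregular forms cannot be derived by rule, which mirrors the advice that students simply have to learn them.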

Let’s take a quick look at these degrees of adjectives in some sample sentences:

Positive

- It was a beautiful example of kindness.

Comparative

- The red is nice, but the green is prettier.

Superlative

- This mango is the most delicious fruit I have ever tasted.

Quantitative adjectives provide information about how many or how much of the noun or pronoun.

Some quantitative adjectives include:

- She only ate half of her sandwich.

- This is my first time here.

- I would like three slices, please.

- There isn’t a single good reason to go.

- There aren’t many places like it.

- It’s too much of a good thing.

- I gave her a whole box of them.

A demonstrative adjective identifies or emphasizes a noun’s place in time or space. The most common demonstrative adjectives are this , that , these , and those .

Here are some examples of demonstrative adjectives in use:

- This boat is mine.

- That car belongs to her.

- These shoes clash with my dress.

- Those people are from Canada.

Possessive adjectives show ownership, and they are sometimes confused with possessive pronouns.

The most common possessive adjectives are my , your , his , her , our , and their .

Students need to be careful not to confuse these with possessive pronouns such as mine , yours , his (same in both contexts), hers , ours , and theirs .

Here are some examples of possessive adjectives in sentences:

- My favorite food is sushi.

- I would like to read your book when you have finished it.

- I believe her car is the red one.

- This is their way of doing things.

- Our work here is done.

Interrogative adjectives ask questions, and, in common with many types of adjectives, they are always followed by a noun. Basically, these are the question words we use to start questions. Be careful, however: interrogative adjectives modify nouns. If the word after the question word is a verb, then you have an interrogative pronoun or adverb on hand instead.

Some examples of interrogative adjectives include what , which , and whose .

Let’s take a look at these in action:

- What drink would you like?

- Which car should we take?

- Whose shoes are these?

Please note: Whose can also fit into the possessive adjective category.

We can think of proper adjectives as the adjective form of proper nouns – remember those? They are the specific names of people, places, and things, and they need to be capitalized.

Let’s take the proper noun for the place America. If we wanted to make an adjective out of this proper noun to describe something, say, a car, we would get ‘American car’.

Let’s take a look at another few examples:

- Joe enjoyed his cup of Ethiopian coffee.

- My favorite plays are Shakespearean tragedies.

- No doubt about it, Fender guitars are some of the best in the world.

- The Mona Lisa is a fine example of Renaissance art.

Though it may come as a surprise to some, articles are also adjectives as, like all adjectives, they modify nouns. Articles help us determine a noun’s specification.

For example, ‘a’ and ‘an’ are used in front of an unspecific noun, while ‘the’ is used when referring to a specific noun.

Let’s see some articles as adjectives in action!

- You will find an apple inside the cupboard.

- This is a car.

- The recipe is a family secret.

Adjectives Teaching Activity: Types of Adjective Tally

- Choose a suitable book and assign an appropriate number of pages or length of a chapter for students to work with.

- Students work their way through each page, tallying up the number of each type of adjective they can identify using a table like the one below:

- Note how degrees of adjective has been split into comparative and superlative. The positive forms are covered by the descriptive category.

- You may wish to adapt this table to exclude the easier categories to identify, such as articles and demonstrative, for example.

Traditionally, adverbs are defined as those words that modify verbs, but they do so much more than that. They can be used not only to describe how verbs are performed but also to modify adjectives, other adverbs, clauses, prepositions, or entire sentences.

With such a broad range of tasks at the feet of the humble adverb, it would be impossible to cover every possibility in this article alone. However, there are five main types of adverbs our students should familiarize themselves with. These are:

- Adverbs of Manner

- Adverbs of Time

- Adverbs of Frequency

- Adverbs of Place

- Adverbs of Degree

Adverbs of manner describe how or the way in which something happens or is done. This type of adverb is often the first type taught to students. Many of these end with -ly. Some common examples include happily, quickly, sadly, slowly, and fast.

Here are a few taster sentences employing adverbs of manner:

- She cooks Chinese food well .

- The children played happily together.

- The students worked diligently on their projects.

- Her mother taught her to cross the road carefully .

- The date went badly .

Adverbs of time indicate when something happens. Common adverbs of time include before , now , then , after , already , immediately , and soon .

Here are some sentences employing adverbs of time:

- I go to school early on Wednesdays.

- She would like to finish her studies eventually .

- Recently , Sarah moved to Bulgaria.

- I have already finished my homework.

- They have been missing training lately .

While adverbs of time deal with when something happens, adverbs of frequency are concerned with how often something happens. Common adverbs of frequency include always , frequently , sometimes , seldom , and never .

Here’s what they look like in sentences:

- Harry usually goes to bed around ten.

- Rachel rarely eats breakfast in the morning.

- Often , I’ll go home straight after school.

- I occasionally have ketchup on my pizza.

- She seldom goes out with her friends.

Adverbs of place, as the name suggests, describe where something happens or where it is. They can refer to position, distance, or direction. Some common adverbs of place include above , below , beside , inside , and anywhere .

Check out some examples in the sentences below:

- Underneath the bridge, there lived a troll.

- There were pizzerias everywhere in the city.

- We walked around the park in the pouring rain.

- If the door is open, then go inside .

- When I am older, I would like to live nearby .

Adverbs of degree express the degree to which or how much of something is done. They can also be used to describe levels of intensity. Some common adverbs of degree include barely , little , lots , completely , and entirely .

Here are some adverbs of degree at work in sentences:

- I hardly noticed her when she walked into the room.

- The little girl had almost finished her homework.

- The job was completely finished.

- I was so delighted to hear the good news.

- Jack was totally delighted to see Diane after all these years.

Adverb Teaching Activity: The Adverb Generator

- Organize students into groups and give each group a worksheet containing a table divided into five columns. Each column bears a heading of one of the different types of adverbs (manner, time, frequency, place, degree).

- Challenge each group to generate as many different examples of each adverb type as possible and record these in the table.

- The winning group is the one with the most adverbs. As a bonus, or tiebreaker, task the students to make sentences with some of the adverbs.

Pronouns are used in place of a specific noun used earlier in a sentence. They are helpful when the writer wants to avoid repetitive use of a particular noun such as a name. For example, in the following sentences, the pronoun she is used to stand for the girl’s name Mary after it is used in the first sentence.

Mary loved traveling. She had been to France, Thailand, and Taiwan already, but her favorite place in the world was Australia. She had never seen an animal quite as curious-looking as the duck-billed platypus.

We also see her used in place of Mary’s in the above passage. There are many different pronouns and, in this article, we’ll take a look at:

- Subject Pronouns

- Object Pronouns

- Possessive Pronouns

- Reflexive Pronouns

- Intensive Pronouns

- Demonstrative Pronouns

- Interrogative Pronouns

Subject pronouns are the type of pronoun most of us think of when we hear the term pronoun. They operate as the subject of a verb in a sentence and belong to the wider group of personal pronouns.

The subject pronouns are I, you, he, she, it, we, and they.

Here are a few examples of subject pronouns doing what they do best:

- Sarah and I went to the movies last Thursday night.

- That is my pet dog. It is an Irish Wolfhound.

- My friends are coming over tonight; they will be here at seven.

- We won’t all fit into the same car.

- You have done a fantastic job with your grammar homework!

Object pronouns operate as the object of a verb, or a preposition, in a sentence. They act in the same way as object nouns but are used when it is clear what the object is.

The object pronouns are me, you, him, her, it, us, and them.

Here are a few examples of object pronouns in sentences:

- I told you , this is a great opportunity for you .

- Give her some more time, please.

- I told her I did not want to do it .

- That is for us .

- Catherine is the girl whom I mentioned in my letter.

Possessive pronouns indicate ownership of a noun. For example, in the sentence:

These books are mine .

The word mine stands for my books . It’s important to note that while possessive pronouns look similar to possessive adjectives, their function in a sentence is different.

The possessive pronouns are mine, yours, his, hers, ours, and theirs.

Let’s take a look at how these are used in sentences:

- Yours is the yellow jacket.

- I hope this ticket is mine .

- The train that leaves at midnight is theirs .

- Ours is the first house on the right.

- She is the person whose opinion I value most.

- I believe that is his .

Reflexive pronouns are used in instances where the object and the subject are the same. For example, in the sentence, she did it herself , the words she and herself refer to the same person.

The reflexive pronoun forms are myself, yourself, himself, herself, itself, ourselves, yourselves, and themselves.

Here are a few more examples of reflexive pronouns at work:

- I told myself that numerous times.

- He got himself a new computer with his wages.

- We will go there ourselves .

- You must do it yourself .

- The only thing to fear is fear itself .

Intensive pronouns are used to indicate emphasis. For example, when we write, I spoke to the manager herself, the point is made that we talked to the person in charge and not someone lower down the hierarchy.

Intensive pronouns take the same forms as the reflexive pronouns above, but we can easily differentiate between the two by asking if the pronoun is essential to the sentence’s meaning. If it isn’t, then it is used solely for emphasis, and therefore, it’s an intensive rather than a reflexive pronoun.

Often confused with demonstrative adjectives, demonstrative pronouns can stand alone in a sentence.

When this , that , these , and those are used as demonstrative adjectives they come before the noun they modify. When these same words are used as demonstrative pronouns, they replace a noun rather than modify it.

Here are some examples of demonstrative pronouns in sentences:

- This is delicious.

- That is the most beautiful thing I have ever seen.

- These are not mine.

- Those belong to the driver.

Interrogative pronouns are used to form questions. They are the typical question words that come at the start of questions, with a question mark coming at the end. The interrogative pronouns are what, which, who, whom, and whose.

Putting them into sentences looks like this:

- What is the name of your best friend?

- Which of these is your favourite?

- Who goes to the market with you?

- Whom do you think we should invite?

- Whose is that?

Pronoun Teaching Activity: Pronoun Review Table

- Provide students with a review table like the one below to revise the various pronoun forms.

- They can use this table to help them produce independent sentences.

- Once students have had a chance to familiarize themselves thoroughly with each of the different types of pronouns, provide the students with the headings and ask them to complete a table from memory.

Prepositions

Prepositions provide extra information showing the relationship between a noun or pronoun and another part of a sentence. These are usually short words that come directly before nouns or pronouns, e.g., in , at , on , etc.

There are, of course, many different types of prepositions, each relating to particular types of information. In this article, we will look at:

- Prepositions of Time

- Prepositions of Place

- Prepositions of Movement

- Prepositions of Manner

- Prepositions of Measure

- Prepositions of Agency

- Prepositions of Possession

- Prepositions of Source

- Phrasal Prepositions

It’s worth noting that some prepositions appear in more than one of these categories.

Prepositions of time indicate when something happens. Common prepositions of time include after, at, before, during, in, on, and since.

Let’s see some of these at work:

- I have been here since Thursday.

- My daughter was born on the first of September.

- He went overseas during the war.

- Before you go, can you pay the bill, please?

- We will go out after work.

Sometimes students have difficulty knowing when to use in , on , or at . These little words are often confused. The table below provides helpful guidance to help students use the right preposition in the right context.

The prepositions of place, in , at , on , will be instantly recognisable as they also double as prepositions of time. Again, students can sometimes struggle a little to select the correct one for the situation they are describing. Some guidelines can be helpful.

- If something is contained or confined inside, we use in .

- If something is placed upon a surface, we use on .

- If something is located at a specific point, we use at .

A few example sentences will assist in illustrating these:

- He is in the house.

- I saw it in a magazine.

- In France, we saw many great works of art.

- Put it on the table.

- We sailed on the river.

- Hang that picture on the wall, please.

- We arrived at the airport just after 1 pm.

- I saw her at university.

- The boy stood at the window.

Usually used with verbs of motion, prepositions of movement indicate movement from one place to another. The most commonly used preposition of movement is to .

Some other prepositions of movement include:

Here’s how they look in some sample sentences:

- The ball rolled across the table towards me.

- We looked up into the sky.

- The children ran past the shop on their way home.

- Jackie ran down the road to greet her friend.

- She walked confidently through the curtains and out onto the stage.

Prepositions of manner show us how something is done or how it happens. The most common of these are by, in, like, on, and with.

Let’s take a look at how they work in sentences:

- We went to school by bus.

- During the holidays, they traveled across the Rockies on foot.

- Janet went to the airport in a taxi.

- She played soccer like a professional.

- I greeted her with a smile.

Prepositions of measure are used to indicate quantities and specific units of measurement. The two most common of these are by and of .

Check out these sample sentences:

- I’m afraid we only sell that fabric by the meter.

- I will pay you by the hour.

- She only ate half of the ice cream. I ate the other half.

- A kilogram of apples is the same weight as a kilogram of feathers.

Prepositions of Agency

These prepositions indicate the causal relationship between a noun or pronoun and an action. They show the cause of something happening. The most commonly used prepositions of agency are by and with .

Here are some examples of their use in sentences:

- The Harry Potter series was written by J.K. Rowling.

- This bowl was made by a skilled craftsman.

- His heart was filled with love.

- The glass was filled with water.

Prepositions of Possession

Prepositions of possession indicate who or what something belongs to. The most common of these are of, to, and with.

Let’s take a look:

- He is the husband of my cousin.

- He is a friend of the mayor.

- This once belonged to my grandmother.

- All these lands belong to the Ministry.

- The man with the hat is waiting outside.

- The boy with the big feet tripped and fell.

Prepositions of Source

Prepositions of source indicate where something comes from or its origins. The two most common prepositions of source are from and by . There is some crossover here with prepositions of agency.

Here are some examples:

- He comes from New Zealand.

- These oranges are from our own orchard.

- I was warmed by the heat of the fire.

- She was hugged by her husband.

- The yoghurt is of Bulgarian origin.

Phrasal prepositions are also known as compound prepositions. These are phrases of two or more words that function in the same way as prepositions. That is, they join nouns or pronouns to the rest of the sentence.

Some common phrasal prepositions are:

- According to

- For a change

- In addition to

- In spite of

- Rather than

- With the exception of

Students should be careful not to overuse phrasal prepositions, as some of them can seem clichéd. Frequently, it’s best to say things in as few words as necessary.

Preposition Teaching Activity: Preposition Sort

- Print out a selection of the different types of prepositions on pieces of paper.

- Organize students into smaller working groups and provide each group with a set of prepositions.

- Using the headings above as categories, challenge students to sort the prepositions into the correct groups. Note that some prepositions will comfortably fit into more than one group.

- The winning group is the one to sort all prepositions correctly first.

- As an extension exercise, students can select a preposition from each category and write a sample sentence for it.

Conjunctions

Conjunctions are used to connect words, phrases, and clauses. There are three main types of conjunction that are used to join different parts of sentences. These are:

- Coordinating

- Subordinating

- Correlative

Coordinating Conjunctions

These conjunctions are used to join sentence components that are equal, such as two words, two phrases, or two clauses. In English, there are seven of these, which can be memorized using the mnemonic FANBOYS: For, And, Nor, But, Or, Yet, So.

Here are a few example sentences employing coordinating conjunctions:

- As a writer, he needed only a pen and paper.

- I would describe him as strong but lazy.

- Either we go now or not at all.

Subordinating Conjunctions

Subordinating conjunctions are used to introduce dependent clauses in sentences. Basically, dependent clauses are parts of sentences that cannot stand as complete sentences on their own.

Some of the most common subordinating conjunctions are after, although, because, if, unless, and while.

Let’s take a look at some example sentences:

- I will complete it by Tuesday if I have time.

- Although she likes it, she won’t buy it.

- Jack will give it to you after he finds it.

Correlative Conjunctions

Correlative conjunctions are like shoes; they come in pairs. They work together to make sentences work. Some common correlative conjunctions are:

- either / or

- neither / nor

- not only / but also

Let’s see how some of these work together:

- If I were you, I would get either the green one or the yellow one.

- John wants neither pity nor help.

- I don’t know whether you prefer horror or romantic movies.

Conjunction Teaching Activity: Conjunction Challenge

- Organize students into Talking Pairs .

- Partner A gives Partner B an example of a conjunction.

- Partner B must state which type of conjunction it is, e.g. coordinating, subordinating, or correlative.

- Partner B must then compose a sentence that uses the conjunction correctly and tell it to Partner A.

- Partners then swap roles.
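Partner B's classification step amounts to a lookup, which can be sketched in Python for anyone building a quick answer key. The names `classify`, `COORDINATING`, `SUBORDINATING`, and `CORRELATIVE_PAIRS` are hypothetical, and the subordinating set is deliberately limited to the words used in the example sentences above rather than being a complete list. Some words, such as for, so, and after, can also act as other parts of speech, which this sketch does not attempt to detect.

```python
# The seven coordinating conjunctions behind the FANBOYS mnemonic.
COORDINATING = {"for", "and", "nor", "but", "or", "yet", "so"}

# Assumption: only the subordinating conjunctions from the example sentences.
SUBORDINATING = {"if", "although", "after"}

# Correlative conjunctions come in pairs, written here as "first / second".
CORRELATIVE_PAIRS = {
    ("either", "or"),
    ("neither", "nor"),
    ("not only", "but also"),
    ("whether", "or"),
}


def classify(conjunction):
    """Return the conjunction type, or 'unknown' if it is not in the lists."""
    text = conjunction.lower().strip()
    parts = tuple(part.strip() for part in text.split("/"))
    if len(parts) == 2:
        return "correlative" if parts in CORRELATIVE_PAIRS else "unknown"
    if text in COORDINATING:
        return "coordinating"
    if text in SUBORDINATING:
        return "subordinating"
    return "unknown"


for example in ["but", "although", "either / or", "because"]:
    print(example, "->", classify(example))
```

Returning "unknown" for anything outside the lists keeps the sketch honest about how small its word lists are; a classroom version would extend them.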

Interjections

Interjections focus on feelings and are generally grammatically unrelated to the rest of the sentence or sentences around them. They convey thoughts and feelings and are common in our speech. They are often followed by exclamation marks in writing. Interjections include expressions such as:

- Eww! That is so gross!

- Oh , I don’t know. I’ve never used one before.

- That’s very… err …generous of you, I suppose.

- Wow! That is fantastic news!

- Uh-Oh! I don’t have any more left.

Interjection Teaching Activity: Create a scenario

- Once students clearly understand what interjections are, brainstorm as a class as many as possible.

- Write a master list of interjections on the whiteboard.

- Partner A suggests an interjection word or phrase to Partner B.

- Partner B must create a fictional scenario where this interjection would be used appropriately.

With a good grasp of the fundamentals of parts of speech, your students will now be equipped to do a deeper dive into the wild waters of English grammar.


Understanding the 8 Parts of Speech: Definitions and Examples

If you’re trying to learn the grammatical rules of English, you’ve probably been asked to learn the parts of speech. But what are parts of speech and how many are there? How do you know which words are classified in each part of speech?

The answers to these questions can be a bit complicated—English is a difficult language to learn and understand. Don’t fret, though! We’re going to answer each of these questions for you with a full guide to the parts of speech that explains the following:

- What the parts of speech are, including a comprehensive parts of speech list

- Parts of speech definitions for the individual parts of speech. (If you’re looking for information on a specific part of speech, you can search for it by pressing Command + F, then typing in the part of speech you’re interested in.)

- Parts of speech examples

- A ten-question quiz covering parts of speech definitions and parts of speech examples

We’ve got a lot to cover, so let’s begin!


What Are Parts of Speech?

The parts of speech definitions in English can vary, but here’s a widely accepted one: a part of speech is a category of words that serve a similar grammatical purpose in sentences.

To make that definition even simpler, a part of speech is just a category for similar types of words . All of the types of words included under a single part of speech function in similar ways when they’re used properly in sentences.

In the English language, it’s commonly accepted that there are 8 parts of speech: nouns, verbs, adjectives, adverbs, pronouns, conjunctions, interjections, and prepositions. Each of these categories plays a different role in communicating meaning in the English language. Each of the eight parts of speech—which we might also call the “main classes” of speech—also has subclasses. In other words, we can think of each of the eight parts of speech as a general category containing several more specific types. There are different types of nouns, different types of verbs, different types of adjectives, adverbs, pronouns...you get the idea.

And that’s an overview of what a part of speech is! Next, we’ll explain each of the 8 parts of speech—definitions and examples included for each category.

#1: Nouns

Nouns are a class of words that refer, generally, to people and living creatures, objects, events, ideas, states of being, places, and actions. You’ve probably heard English nouns referred to as “persons, places, or things.” That definition is a little simplistic, though—while nouns do include people, places, and things, “things” is kind of a vague term. It’s important to recognize that “things” can include physical things—like objects or belongings—and nonphysical, abstract things—like ideas, states of existence, and actions.

Since there are many different types of nouns, we’ll include several examples of nouns used in a sentence while we break down the subclasses of nouns next!

Subclasses of Nouns, Including Examples

As an open class of words, the category of “nouns” has a lot of subclasses. The most common and important subclasses of nouns are common nouns, proper nouns, concrete nouns, abstract nouns, collective nouns, and count and mass nouns. Let’s break down each of these subclasses!

Common Nouns and Proper Nouns

Common nouns are generic nouns—they don’t name specific items. They refer to people (the man, the woman), living creatures (cat, bird), objects (pen, computer, car), events (party, work), ideas (culture, freedom), states of being (beauty, integrity), and places (home, neighborhood, country) in a general way.

Proper nouns are sort of the counterpart to common nouns. Proper nouns refer to specific people, places, events, or ideas. Names are the most obvious example of proper nouns, like in these two examples:

Common noun: What state are you from?

Proper noun: I’m from Arizona .

Whereas “state” is a common noun, Arizona is a proper noun since it refers to a specific state. Whereas “the election” is a common noun, “Election Day” is a proper noun. Another way to pick out proper nouns: the first letter is often capitalized. If you’d capitalize the word in a sentence, it’s almost always a proper noun.

Concrete Nouns and Abstract Nouns

Concrete nouns are nouns that can be identified through the five senses. Concrete nouns include people, living creatures, objects, and places, since these things can be sensed in the physical world. In contrast to concrete nouns, abstract nouns are nouns that identify ideas, qualities, concepts, experiences, or states of being. Abstract nouns cannot be detected by the five senses. Here’s an example of concrete and abstract nouns used in a sentence:

Concrete noun: Could you please fix the weedeater and mow the lawn ?

Abstract noun: Aliyah was delighted to have the freedom to enjoy the art show in peace .

See the difference? A weedeater and the lawn are physical objects or things, and freedom and peace are not physical objects, though they’re “things” people experience! Despite those differences, they all count as nouns.

Collective Nouns, Count Nouns, and Mass Nouns

Nouns are often categorized based on number and amount. Collective nouns are nouns that refer to a group of something—often groups of people or a type of animal. Team , crowd , and herd are all examples of collective nouns.

Count nouns are nouns that can appear in the singular or plural form, can be modified by numbers, and can be described by quantifying determiners (e.g. many, most, more, several). For example, “bug” is a count noun. It can occur in singular form if you say, “There is a bug in the kitchen,” but it can also occur in the plural form if you say, “There are many bugs in the kitchen.” (In the case of the latter, you’d call an exterminator...which is an example of a common noun!) Any noun that can accurately occur in one of these singular or plural forms is a count noun.

Mass nouns are another type of noun that involves number and amount. Mass nouns are nouns that usually can’t be pluralized, counted, or quantified and still make sense grammatically. “Charisma” is an example of a mass noun (and an abstract noun!). For example, you could say, “They’ve got charisma,” which doesn’t imply a specific amount. You couldn’t say, “They’ve got six charismas,” or, “They’ve got several charismas.” It just doesn’t make sense!

#2: Verbs

A verb is a part of speech that, when used in a sentence, communicates an action, an occurrence, or a state of being. In sentences, verbs are the most important part of the predicate, which explains or describes what the subject of the sentence is doing or how they are being. And, guess what? Every complete sentence contains a verb!

There are many words in the English language that are classified as verbs. A few common verbs include the words run, sing, cook, talk, and clean. These words are all verbs because they communicate an action performed by a living being. We’ll look at more specific examples of verbs as we discuss the subclasses of verbs next!

Subclasses of Verbs, Including Examples

Like nouns, verbs have several subclasses. The subclasses of verbs include copular or linking verbs, intransitive verbs, transitive verbs, and ditransitive or double transitive verbs. Let’s dive into these subclasses of verbs!

Copular or Linking Verbs

Copular verbs, or linking verbs, are verbs that link a subject with its complement in a sentence. The most familiar linking verb is probably be. Here’s a list of common copular verbs in English: act, be, become, feel, grow, seem, smell, and taste.

So how do copular verbs work? Well, in a sentence, if we said, “Michi is,” and left it at that, it wouldn’t make any sense. “Michi,” the subject, needs to be connected to a complement by the copular verb “is.” Instead, we could say, “Michi is tired.” In that instance, is links the subject of the sentence to its complement, the adjective tired.

Transitive Verbs, Intransitive Verbs, and Ditransitive Verbs

Transitive verbs are verbs that affect or act upon an object. When unattached to an object in a sentence, a transitive verb does not make sense. Here’s an example of a transitive verb attached to (and appearing before) an object in a sentence:

Please take the clothes to the dry cleaners.

In this example, “take” is a transitive verb because it requires an object, “the clothes,” to make sense. “The clothes” are the objects being taken. “Please take” wouldn’t make sense by itself, would it? That’s because the transitive verb “take,” like all transitive verbs, transfers its action onto another being or object.

Conversely, intransitive verbs don’t require an object to act upon in order to make sense in a sentence. These verbs make sense all on their own! For instance, “They ran,” “We arrived,” and “The car stopped” are all examples of sentences that contain intransitive verbs.

Finally, ditransitive verbs, or double transitive verbs, are a bit more complicated. Ditransitive verbs are verbs that are followed by two objects in a sentence. One of the objects has the action of the ditransitive verb done to it, and the other object has the action of the ditransitive verb directed towards it. Here’s an example of what that means in a sentence:

I cooked Nathan a meal.

In this example, “cooked” is a ditransitive verb because it takes two objects: Nathan and meal. The meal has the action of “cooked” done to it, and “Nathan” has the action of the verb directed towards him.

Adjectives are descriptors that help us better understand a sentence. A common adjective type is color.

#3: Adjectives

Here’s the simplest definition of adjectives: adjectives are words that describe other words. Specifically, adjectives modify nouns, pronouns, and noun phrases. In sentences, adjectives usually appear before the words they describe, though they can also come after a linking verb.

Adjectives give more detail to nouns and pronouns by describing how a noun looks, smells, tastes, sounds, or feels, or its state of being or existence. For example, you could say, “The girl rode her bike.” That sentence doesn’t have any adjectives in it, but you could add an adjective before both of the nouns in the sentence, “girl” and “bike,” to give more detail to the sentence. It might read like this: “The young girl rode her red bike.” You can pick out adjectives in a sentence by asking the following questions:

- Which one?

- What kind?

- How many?

- Whose?

We’ll look at more examples of adjectives as we explore the subclasses of adjectives next!

Subclasses of Adjectives, Including Examples

Subclasses of adjectives include adjective phrases, comparative adjectives, superlative adjectives, and determiners (which include articles, possessive adjectives, and demonstratives).

Adjective Phrases

An adjective phrase is a group of words that describe a noun or noun phrase in a sentence. Adjective phrases can appear before the noun or noun phrase in a sentence, like in this example:

The extremely fragile vase somehow did not break during the move.

In this case, extremely fragile describes the vase. On the other hand, adjective phrases can appear after the noun or noun phrase in a sentence as well:

The museum was somewhat boring.

Again, the phrase somewhat boring describes the museum. The takeaway is this: adjective phrases describe the subject of a sentence with greater detail than an individual adjective.

Comparative Adjectives and Superlative Adjectives

Comparative adjectives are used in sentences where two nouns are compared. They highlight a difference between the two nouns. Comparative adjectives typically end in -er and often appear in a set pattern. If we were to describe that pattern as a formula, it might look something like this:

Noun (subject) + verb + comparative adjective + than + noun (object).

Here’s an example of how a comparative adjective would work in that type of sentence:

The horse was faster than the dog.

The adjective faster compares the speed of the horse to the speed of the dog. Other common comparative adjectives include words that compare distance (higher, lower, farther), age (younger, older), size and dimensions (bigger, smaller, wider, taller, shorter), and quality or feeling (better, cleaner, happier, angrier).

Superlative adjectives are adjectives that describe the extremes of a quality that applies to a subject being compared to a group of objects. Put more simply, superlative adjectives help show how extreme something is. In sentences, superlative adjectives usually appear in this structure and end in -est:
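
If it helps to see the formula as something mechanical, here’s a minimal Python sketch that fills in the pattern above. The function name comparative_sentence and its parameter names are made up for illustration. It only slots words into the pattern and doesn’t check that the adjective you pass in really is a comparative.

```python
def comparative_sentence(subject, verb, comparative, obj):
    """Fill the pattern: noun (subject) + verb + comparative adjective + than + noun (object)."""
    # Join the pieces in pattern order and end with a period.
    return f"{subject} {verb} {comparative} than {obj}."


print(comparative_sentence("The horse", "was", "faster", "the dog"))
# The horse was faster than the dog.
```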

Noun (subject) + verb + the + superlative adjective + noun (object).

Here’s an example of a superlative adjective that appears in that type of sentence:

Their story was the funniest story.

In this example, the subject, story, is being compared to a group of objects: other stories. The superlative adjective “funniest” implies that this particular story is the funniest out of all the stories ever, period. Other common superlative adjectives are best, worst, craziest, and happiest... though there are many more than that!

It’s also important to know that you can often omit the object from the end of the sentence when using superlative adjectives, like this: “Their story was the funniest.” We still know that “their story” is being compared to other stories without the object at the end of the sentence.
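
Here’s a rough Python sketch of that structure, including the optional object at the end. The name superlative_sentence and its parameters are hypothetical, and the function trusts you to pass in a real superlative rather than checking for one.

```python
def superlative_sentence(subject, verb, superlative, obj=None):
    """Fill the pattern: noun (subject) + verb + the + superlative adjective + noun (object)."""
    words = [subject, verb, "the", superlative]
    # The object at the end is optional, so only add it when one is given.
    if obj is not None:
        words.append(obj)
    return " ".join(words) + "."


print(superlative_sentence("Their story", "was", "funniest", "story"))
# Their story was the funniest story.
print(superlative_sentence("Their story", "was", "funniest"))
# Their story was the funniest.
```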

Determiners

The last subclass of adjectives we want to look at is determiners. Determiners are words that determine what kind of reference a noun or noun phrase makes. These words are placed in front of nouns to make it clear what the noun is referring to. Determiners are an example of a part of speech subclass that contains a lot of subclasses of its own. Here is a list of the different types of determiners:

- Definite article: the

- Indefinite articles: a, an

- Demonstratives: this, that, these, those

- Pronouns and possessive determiners: my, your, his, her, its, our, their

- Quantifiers: a little, a few, many, much, most, some, any, enough

- Numbers: one, twenty, fifty

- Distributives: all, both, half, either, neither, each, every

- Difference words: other, another

- Pre-determiners: such, what, rather, quite

Here are some examples of how determiners can be used in sentences:

Definite article: Get in the car.

Demonstrative: Could you hand me that magazine?

Possessive determiner: Please put away your clothes.

Distributive: He ate all of the pie.

Though some of the words above might not seem descriptive, they actually do describe the specificity and definiteness, relationship, and quantity or amount of a noun or noun phrase. For example, the definite article “the” (a type of determiner) indicates that a noun refers to a specific thing or entity. The indefinite article “an,” on the other hand, indicates that a noun refers to a nonspecific entity.
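
One way to keep the determiner types straight is to treat the list above as a lookup table. The Python sketch below does just that. The names DETERMINERS and determiner_type are invented for this example, the table holds only the words listed above, and a real sentence would need more context than a simple word lookup (for instance, “that” isn’t always a demonstrative).

```python
# Determiner types and example words, copied from the list above.
DETERMINERS = {
    "definite article": ["the"],
    "indefinite article": ["a", "an"],
    "demonstrative": ["this", "that", "these", "those"],
    "possessive determiner": ["my", "your", "his", "her", "its", "our", "their"],
    "quantifier": ["a little", "a few", "many", "much", "most", "some", "any", "enough"],
    "number": ["one", "twenty", "fifty"],
    "distributive": ["all", "both", "half", "either", "neither", "each", "every"],
    "difference word": ["other", "another"],
    "pre-determiner": ["such", "what", "rather", "quite"],
}


def determiner_type(word):
    """Return the determiner type for a word, or None if it isn't in the table."""
    word = word.lower()
    for kind, words in DETERMINERS.items():
        if word in words:
            return kind
    return None


print(determiner_type("that"))  # demonstrative
print(determiner_type("all"))   # distributive
print(determiner_type("car"))   # None
```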

One quick note, since English is always more complicated than it seems: while articles are most commonly classified as adjectives, they can also function as adverbs in specific situations. Not only that, some people are taught that determiners are their own part of speech...which means that some people are taught there are 9 parts of speech instead of 8!

It can be a little confusing, which is why we have a whole article explaining how articles function as a part of speech to help clear things up.

Adverbs can be used to answer questions like "when?" and "how long?"

#4: Adverbs

Adverbs are words that modify verbs, adjectives (including determiners), clauses, prepositions, and sentences. Adverbs typically answer the questions how?, in what way?, when?, where?, and to what extent? In answering these questions, adverbs function to express frequency, degree, manner, time, place, and level of certainty. Adverbs can answer these questions in the form of single words, or in the form of adverbial phrases or adverbial clauses.

Adverbs are commonly known for being words that end in -ly, but there’s actually a bit more to adverbs than that, which we’ll dive into while we look at the subclasses of adverbs!

Subclasses Of Adverbs, Including Examples

There are many types of adverbs, but the main subclasses we’ll look at are conjunctive adverbs, and adverbs of place, time, manner, degree, and frequency.

Conjunctive Adverbs

Conjunctive adverbs look like coordinating conjunctions (which we’ll talk about later!), but they are actually their own category: conjunctive adverbs are words that connect independent clauses into a single sentence. These adverbs appear after a semicolon and before a comma in sentences, like in these two examples:

She was exhausted; nevertheless, she went for a five-mile run.

They didn’t call; instead, they texted.

Though conjunctive adverbs are frequently used to create shorter sentences using a semicolon and comma, they can also appear at the beginning of sentences, like this:

He chopped the vegetables. Meanwhile, I boiled the pasta.

One thing to keep in mind is that conjunctive adverbs come with a comma. When you use them, be sure to include a comma afterward!

There are a lot of conjunctive adverbs, but some common ones include also, anyway, besides, finally, further, however, indeed, instead, meanwhile, nevertheless, next, nonetheless, now, otherwise, similarly, then, therefore, and thus.
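
To make the semicolon-and-comma rule concrete, here’s a small Python sketch that joins two independent clauses with a conjunctive adverb. The function name join_with_conjunctive_adverb is hypothetical, and the sketch assumes you pass in the second clause exactly as it should appear mid-sentence. It handles the punctuation only and doesn’t check that the clauses are actually independent.

```python
def join_with_conjunctive_adverb(first_clause, adverb, second_clause):
    """Join two independent clauses as: first clause; adverb, second clause."""
    # Drop any end punctuation from the first clause so the semicolon can replace it.
    first = first_clause.rstrip(".!? ")
    # Semicolon before the adverb, comma right after it.
    return f"{first}; {adverb.lower()}, {second_clause}"


print(join_with_conjunctive_adverb("They didn't call.", "instead", "they texted."))
# They didn't call; instead, they texted.
```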

Adverbs of Place, Time, Manner, Degree, and Frequency

There are also adverbs of place, time, manner, degree, and frequency. Each of these types of adverbs expresses a different kind of meaning.

Adverbs of place express where an action is done or where an event occurs. These are used after the verb, after the direct object, or at the end of a sentence. A sentence like “She walked outside to watch the sunset” uses outside as an adverb of place.

Adverbs of time explain when something happens. These adverbs are used at the beginning or at the end of sentences. In a sentence like “The game should be over soon,” soon functions as an adverb of time.

Adverbs of manner describe the way in which something is done or how something happens. These are the adverbs that usually end in the familiar -ly. If we were to write “She quickly finished her homework,” quickly is an adverb of manner.

Adverbs of degree tell us the extent to which something happens or occurs. If we were to say “The play was quite interesting,” quite tells us the extent of how interesting the play was. Thus, quite is an adverb of degree.

Finally, adverbs of frequency express how often something happens. In a sentence like “They never know what to do with themselves,” never is an adverb of frequency.

Five subclasses of adverbs is a lot, so we’ve organized the words that fall under each category in a nifty table for you here:

It’s important to know about these subclasses of adverbs because many of them don’t follow the old adage that adverbs end in -ly.

Here's a helpful list of pronouns. (Attanata / Flickr)

#5: Pronouns

Pronouns are words that can be substituted for a noun or noun phrase in a sentence. Pronouns function to make sentences less clunky by allowing people to avoid repeating nouns over and over. For example, if you were telling someone a story about your friend Destiny, you wouldn’t keep repeating their name over and over again every time you referred to them. Instead, you’d use a pronoun, like they or them, to refer to Destiny throughout the story.

Pronouns are typically short words, often only two or three letters long. The most familiar pronouns in the English language are they, she, and he. But these aren’t the only pronouns. There are many more pronouns in English that fall under different subclasses!

Subclasses of Pronouns, Including Examples