Critical Analysis of Case Based Discussions

J M L Williamson and A J Osborne

Introduction

Assessment and evaluation are the foundations of learning; the former is concerned with how students perform and the latter, how successful the teaching was in reaching its objectives. Case based discussions (CBDs) are structured, non-judgmental reviews of decision-making and clinical reasoning 1 . They are mapped directly to the surgical curriculum and “assess what doctors actually do in practice” 1 . Patient involvement is thought to enhance the effectiveness of the assessment process, as it incorporates key adult learning principles: it is meaningful, relevant to work, allows active involvement and involves three domains of learning 2 :

- Clinical (knowledge, decisions, skills)

- Professionalism (ethics, teamwork)

- Communication (with patients, families and staff)

The ability of work based assessments to test performance is not well established. The purpose of this critical review is to assess if CBDs are effective as an assessment tool.

Validity of Assessment

Validity concerns the accuracy of an assessment, what this means in practical terms, and how to avoid drawing unwarranted conclusions or decisions from the results. Validity can be explored in five ways: face, content, concurrent, construct and criterion-related/predictive.

CBDs have high face validity as they focus on the role doctors perform and are, in essence, an evolution of ‘bedside oral examinations’ 3 . The key elements of this assessment are learnt in medical school; thus the purpose of a CBD is easy for both trainees and assessors to validate 1 . In terms of content validity, CBDs are unique in assessing a student’s decision-making, which is key to how doctors perform in practice. However, as only six CBDs are required a year, they are unlikely to be representative of the whole curriculum. Thus CBDs may have limited content validity overall, especially if students focus on one type of condition for all assessments.

Determining the concurrent validity of CBDs is difficult as they assess the pinnacle of Miller’s triangle – what a trainee ‘does’ in clinical practice (figure 1) 4 . CBDs are unique in this aspect, but there may be some overlap with other work based assessments, particularly in task specific skills and knowledge. Simulation may give some concurrent validity to the assessment of judgment. The professional aspect of assessment can be validated by a 360 degree appraisal, as this requests feedback about a doctor’s professionalism from other healthcare professionals 1 .

CBDs have high construct validity, as the assessment is consistent with practice and appropriate for the working environment. The clinical skills being assessed will improve with expertise and thus there should be ‘expert-novice’ differences on marking 3 . However the standard of assessment (i.e. the ‘pass mark’) increases with expertise – as students are always being assessed against a mark of competency for their level. A novice can therefore score the same ‘mark’ as an expert despite a difference in ability.

In terms of predictive validity, performance-based assessments are simulations and examinees do not behave in the same way as they would in real life 3 . Thus, CBDs are an assessment of competence (‘shows how’) but not of true clinical performance, and one could perhaps deduce that they do not assess the attitude of the trainee, which completes the cycle along with knowledge and skills (‘does’) 4 . CBDs permit inferences to be drawn concerning the skills of examinees that extend beyond the particular cases included in the assessment 3 . The quality of performance in one assessment can be a poor predictor of performance in another context. Both the limited number and lack of generalizability of these assessments have a negative influence on predictive validity 3 .

Reliability of Assessment

Reliability can be defined as “the degree to which test scores are free from errors of measurement”. Feldt and Brennan describe the ‘essence’ of reliability as the “quantification of the consistency and inconsistency in examinee performance” 5 . Moss states that less standardized forms of assessment, such as CBDs, present serious problems for reliability 6 . These types of assessment permit both students and assessors substantial latitude in interpreting and responding to situations, and are heavily reliant on the assessor’s ability. Reliability of CBDs is influenced by the quality of the rater’s training, the uniformity of assessment, and the degree of standardization in examinee.

Rating scales are also known to hugely affect reliability – understanding of how to use these scales must be achieved by all trainee assessors in order to achieve marking consistency. In CBD assessments, trainees should be rated against a level of completion at the end of the current stage of training (i.e. core or higher training) 1 . While accurate ratings are critical to the success of any WBA, there may be latitude in the interpretation of these rating scales between different assessors. Assessors who have not received formal WBA training tend to score trainees more generously than trained assessors 7-8 . Improved assessor training in the use of CBDs and spreading assessments throughout the student’s placement (i.e. a CBD every two months) may improve the reliability and effectiveness of the tool 1 .

Practicality of Assessment

CBDs are a one-to-one assessment and are not efficient; they are labour intensive and only cover a limited amount of the curriculum per assessment. The time taken to complete CBDs has been thought to negatively impact on training opportunities 7 . Formalized assessment time could relieve the pressure of arranging ad hoc assessments and may improve the negative perceptions of students regarding CBDs.

The practical advantages of CBDs are that they allow assessments to occur within the workplace and they assess both judgment and professionalism – two subjects on the curriculum which are otherwise difficult to assess 1 . CBDs can be very successful in promoting autonomy and self-directed learning, which improves the efficiency of this teaching method 9 . Moreover, CBDs can be immensely successful in improving the abilities of trainees and can change clinical practice – a feature that is not repeated by other forms of assessment 8 .

One method for ensuring the equality of assessments across all trainees is by providing clear information about what CBDs are, the format they take and the relevance they have to the curriculum. The information and guidance provided for the assessment should be clear, accurate and accessible to all trainees, assessors, and external assessors. This minimizes the potential for inconsistency of marking practice and perceived lack of fairness 7-10 . However, the lack of standardization of this assessment mechanism combined with the variation in training and interpretation of the rating scales between assessors may result in inequality.

Formative Assessment

Formative assessments modify and enhance both learning and understanding by the provision of feedback 11 . The primary function of the rating scale of a CBD is to inform the trainee and trainer about what needs to be learnt 1 . Marks per se provide no learning improvement; students gain the most learning value from assessment that is provided without marks or grades 12 . In CBDs, feedback is built into the process and can therefore be given immediately and orally. Verbal feedback has a significantly greater effect on future performance than grades or marks as the assessor can check comprehension and encourage the student to act upon the advice given 1,11-12 . It should be specific and related to need; detailed feedback should only occur to help the student work through misconceptions or other weaknesses in performance 12 . Veloski et al. suggest that systematic feedback delivered from a credible source can change clinical performance 8 .

For trainees to be able to improve, they must have the capacity to monitor the quality of their own work during their learning by undertaking self-assessment 12 . Moreover, trainees must accept that their work can be improved and identify important aspects of their work that they wish to improve. Trainees’ learning can be improved by providing high quality feedback; the three main elements crucial to this process are 12 :

- Helping students recognise their desired goal

- Providing students with evidence about how well their work matches that goal

- Explaining how to close the gap between current performance and desired goal

The challenge for an effective CBD is to have an open relationship between student and assessor where the trainee is able to give an honest account of their abilities and identify any areas of weakness. This relationship currently does not exist in most CBDs: studies by Veloski et al. 8 and Norcini and Burch 9 revealed that only limited numbers of trainees anticipated changing their practice in response to feedback data. An unwillingness to engage in formal self-reflection by surgical trainees and reluctance to voice any weaknesses may impair their ability to develop and lead to resistance in the assessment process. Improved training of assessors and removing the scoring of the CBD form may allow more accurate and honest feedback to be given to improve the student’s future performance. An alternative method to improve performance is to ‘feed forward’ (as opposed to feedback), focusing on what students should concentrate on in future tasks 10 .

Summative Assessment

Summative assessments are intended to identify how much the student has learnt. CBDs have a strong summative feel: a minimum number of assessments are required and a satisfactory standard must be reached to allow progression of a trainee to the next level of training 1 . Summative assessment affects students in a number of different ways; it guides their judgment of what is important to learn, affects their motivation and self-perceptions of competence, structures their approaches to and timing of personal study, consolidates learning, and affects the development of enduring learning strategies and skills 12-13 . Resnick and Resnick summarize this as “what is not assessed tends to disappear from the curriculum” 13 . Accurate recording of CBDs is vital, as the assessment process is transient, and allows external validation and moderation.

Evaluation of any teaching is fundamental to ensure that the curriculum is reaching its objectives 14 . Student evaluation allows the curriculum to develop and can result in benefits to both students and patients. Kirkpatrick suggested four levels on which to focus evaluation 14 :

- Level 1 – Learner’s reactions

- Level 2a – Modification of attitudes and perceptions

- Level 2b – Acquisition of knowledge and skills

- Level 3 – Change in behaviour

- Level 4a – Change in organizational practice

- Level 4b – Benefits to patients

At present there is little opportunity within the Intercollegiate Surgical Curriculum Project (ISCP) for students to provide feedback. Thus a typical ‘evaluation cycle’ for course development (figure 2) cannot take place 15 . Given the widespread nature of subjects covered by CBDs, the variations in marking standards by assessors, and concerns with validity and reliability, an overall evaluation of the curriculum may not be possible. However, regular evaluation of the learning process can improve the curriculum and may lead to better student engagement with the assessment process 14 . Ideally the evaluation process should be reliable, valid and inexpensive 15 . A number of evaluation methods exist, but all should allow for ongoing monitoring, review and further enquiries to be undertaken.

CBDs, like all assessments, do have limitations, but we feel that they play a vital role in the development of trainees. Unfortunately, Pereira and Dean suggest that trainees view CBDs with suspicion 7 . As a result, students do not engage fully with the assessment and evaluation process and CBDs are not being used to their full potential. The main problems with CBDs relate to the lack of formal assessor training in the use of the WBA and the lack of evaluation of the assessment process. Adequate training of assessors will improve feedback and standardize the assessment process nationally. Evaluation of CBDs should improve the validity of the learning tool, enhancing the training curriculum and encouraging engagement of trainees.

If used appropriately, CBDs are valid, reliable and provide excellent feedback which is effective and efficient in changing practice. However, a combination of assessment modalities should be utilized to ensure that surgical trainees are facilitated in their development across the whole spectrum of the curriculum.

1. Intercollegiate Surgical Curriculum Project (ISCP). ISCP/GMP Blueprint, version 2. ISCP website (www.iscp.ac.uk) (accessed November 2010)

2. Lake FR, Ryan G. Teaching on the run tips 4: teaching with patients. Medical Journal of Australia 2004;181:158-159

3. Swanson DB, Norman GR, Linn RL. Performance-based assessment: lessons from the health professions. Educational Researcher 1995;24:5-11,35

4. Miller GE. The assessment of clinical skills/competence/performance. Academic Medicine 1990;65:563–567

5. Feldt LS, Brennan RL. Reliability. In Linn RL (ed), Educational measurement (3rd edition). Washington, DC: The American Council on Education and the National Council on Measurement in Education; 1989

6. Moss PA. Can there be Validity without Reliability? Educational Researcher 1994;23:5-12

7. Pereira EA, Dean BJ. British surgeons’ experience of mandatory online workplace-based assessment. Journal of the Royal Society of Medicine 2009;102:287-93

8. Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. Systematic review of the literature on assessment, feedback and physicians’ clinical performance: BEME Guide No. 7. Medical Teacher 2006;28:117-28

9. Norcini J, Burch V. Workplace-based assessment as an educational tool: AMEE Guide No 31. Medical Teacher 2007;29:855-71

10. Hounsell D. Student feedback, learning and development. In Slowey M and Watson D (eds), Higher education and the lifecourse. Buckingham: Open University Press; 2003

11. Bloxham S, Boyd P. Developing effective assessment in higher education: A practical guide. Maidenhead: Open University Press; 2007

12. Crooks TJ. The impact of classroom evaluation practices on students. Review of Educational Research 1988;58:438-481

13. Resnick LB, Resnick D. Assessing the thinking curriculum: New tools for educational reform. In Gifford B and O’Connor MC (eds), Cognitive approaches to assessment. Boston: Kluwer-Nijhoff; 1992

14. Barr H, Freeth D, Hammick M, Koppel, Reeves S. Evaluation of interprofessional education: a United Kingdom review of health and social care. London: CAIPE/BERA; 2000

15. Wahlqvist M, Skott A, Bjorkelund C, Dahlgren G, Lonka K, Mattsson B. Impact of medical students’ descriptive evaluations on long-term course development. BMC Medical Education 2006;6:24

Increasing Collaborative Discussion in Case-Based Learning Improves Student Engagement and Knowledge Acquisition

- Original Research

- Open access

- Published: 05 September 2022

- Volume 32, pages 1055–1064 (2022)

- Nana Sartania ORCID: orcid.org/0000-0002-3196-2312 1 ,

- Sharon Sneddon ORCID: orcid.org/0000-0001-9767-4180 1 ,

- James G. Boyle 1 ,

- Emily McQuarrie ORCID: orcid.org/0000-0002-0010-2491 1 &

- Harry P. de Koning ORCID: orcid.org/0000-0002-9963-1827 2

In the transition from academic to clinical learning, the development of clinical reasoning skills and teamwork is essential, but not easily achieved by didactic teaching only. Case-based learning (CBL) was designed to stimulate discussions of genuine clinical cases and diagnoses but in our initial format (CBL’10) remained predominantly tutor-driven rather than student-directed. However, interactive teaching methods stimulate deep learning and consolidate taught material, and we therefore introduced a more collaborative CBL (cCBL), featuring a structured format with discussions in small breakout groups. This aimed to increase student participation and improve learning outcomes.

A survey with open and closed questions was distributed among 149 students and 36 tutors that had participated in sessions of both CBL formats. A statistical analysis compared exam scores of topics taught via CBL’10 and cCBL.

Students and tutors both evaluated the switch to cCBL positively, reporting that it increased student participation and enhanced consolidation and integration of the wider subject area. They also reported that the cCBL sessions increased constructive discussion and stimulated deep learning. Moreover, tutors found the more structured cCBL sessions easier to facilitate. Analysis of exam results showed that summative assessment scores of subjects switched to cCBL significantly increased compared to previous years, whereas scores of subjects that remained taught as CBL’10 did not change.

Conclusions

Compared to our initial, tutor-led CBL format, cCBL resulted in improved educational outcomes, leading to increased participation, confidence, discussion and higher exam scores.

Introduction

Teaching methods in medical education that involve students in discussion and interaction continue to evolve with a focus on promoting collaborative and active learning. Many medical schools have introduced clinical teaching early in the curriculum in an attempt to integrate basic and clinical sciences [ 1 ]. The use of clinical cases to aid teaching has been documented for over a century, with the first use of case-based learning (CBL) in 1912 to teach pathology at the University of Edinburgh [ 2 ]. There is currently no set definition of CBL. Thistlethwaite et al. [ 3 ] state that the goal of CBL ‘is to prepare students for clinical practice through the use of authentic clinical cases. It links theory to practice through the application of knowledge to the cases, using inquiry-based learning methods’, and that it fits in the continuum between structured and guided learning. As such, ‘CBL’ comes in many different formats, some more didactic, some more participatory, but the pros and cons of the different approaches have rarely been systematically evaluated.

Clinical reasoning is one of the key skills to be taught before the transition into the clinic. Diagnostic error rates continue to be high [ 4 ] and reflect deficits in both knowledge and reasoning skills. Training in clinical reasoning enhances the students’ ability to transfer declarative knowledge to clinical problems in preparation for working in clinical teams, and a format of transition-stage teaching that addresses both deficits in tandem would be highly beneficial. It has been reported that some forms of CBL compare favourably to didactic teaching [ 5 , 6 , 7 ] because CBL is a participatory method [ 8 ] that leads to improved motivation [ 9 ] and the development of reflective thinking [ 10 ]. Preparing students to think like clinicians before they commence clinical attachments affords opportunities for vertical and horizontal integration of the curriculum and fosters learning for competence [ 11 ].

CBL was introduced at the University of Glasgow, in its initial format, in 2010 (CBL’10) and incorporated in ‘Phase 3’, a 15-week-long period of transition into full-time clinical teaching with a focus on pathophysiology. McLean [ 7 ] reviewed several published definitions of CBL and summarized that CBL requires the presentation of a clinical case followed by an ‘enquiry’ on the part of the learner and provision of further information by a tutor who guides the discussion towards meeting the learning objectives; this is the idea we tried to replicate in CBL’10. However, our in-house evaluation found that student participation was uneven, and the sessions often became too didactic, contravening the idea of CBLs being student-centred. Mayo [ 12 ] describes CBL, as a form of adult learning, in terms of the socio-constructivist model, with the students themselves constructing the new knowledge and insights and the tutor functioning merely as a guide.

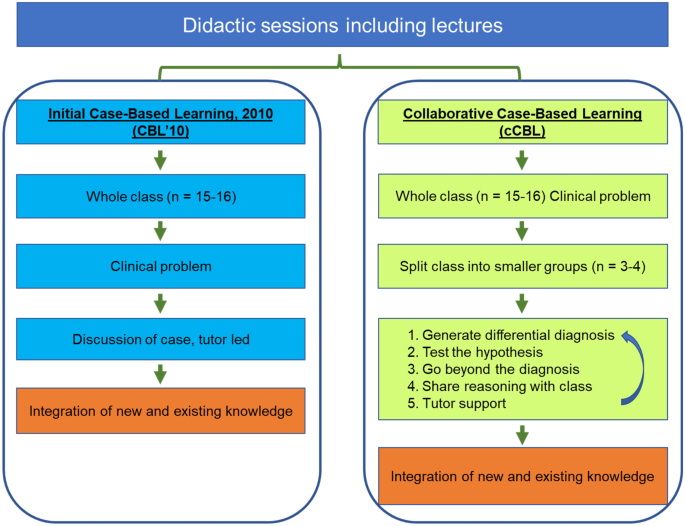

The recent work by Schwartzstein and colleagues [ 13 ] suggested that collaborative case-based learning (cCBL), a modification of CBL, brings additional benefits to students over more didactic forms of CBL in terms of enjoyment and working collectively in teams. Addition of classroom discussions to case-based learning encourages the students to make inferences and conclusions from the presented data [ 14 , 15 ]. cCBL is described as ‘team-based’ and as incorporating elements of both PBL and CBL [ 13 ]. In PBL, however, a problem is presented as the means of obtaining basic scientific knowledge, whereas CBL is typically supported by prior didactic teaching to assure the class has the required knowledge base to discuss the clinical case; they exercise logical diagnostic problem-solving that combines comprehension, critical thinking and problem-solving skills, engendering deep cognitive learning [ 16 ]. cCBL places more emphasis on student-based discussions of focused but open-ended questions in small groups, before reaching a consensus in a larger group, and requires students to iteratively generate their own hypotheses from real-life clinical observations and data (Fig. 1 ), incorporating elements of various small group teaching modalities [ 13 ]. The collaborative format integrates cognitive and social learning modes [ 12 ] and makes the material appear more relevant [ 17 ], particularly for students with below average academic achievement and/or hesitant to participate in discussions in large groups. A key benefit of cCBL is the increased interactivity between the students in small groups to ensure student-centred active learning and reasoning. The sessions require the students to integrate different types of information, including clinical and social data and ethical considerations, while the training in evidence-based deduction stimulates the procedural learning essential for clinical reasoning [ 18 , 19 ]. The CBL’10 and cCBL formats are compared in Fig. 1 .

Fig. 1 Comparison of the CBL’10 and collaborative CBL formats. An essential feature of our cCBL is the iterative nature of the small group discussion, with the small groups reporting back to class, leading to next-level case information being released by the tutor followed by additional round(s) of small group discussions

We here present a pilot study where we re-designed some of our CBL’10 scenarios to fit the cCBL format in which we reinforced the discussion element, using breakout groups for intensive small group discussions, while keeping the other modules in the CBL’10 format. The collaborative approach aimed to encourage students to practice clinical reasoning and decision-making by providing iterative experiences of analysing and problem-solving complex cases. This models genuine care situations where clinicians are required to integrate a variety of topics, including ethical issues, prevention and epidemiology. Students are given relevant case information to discuss, from which they form hypotheses. They report back to the full class, upon which further information is released (e.g. ‘investigative outcomes’) followed by subsequent discussion in the breakout groups; this process is repeated several times (Fig. 1 ).

In 2015, the National Academy of Medicine urgently highlighted the need to improve diagnostic error rates, which continued to be too high [ 20 ]. The change to cCBL aimed to improve inductive clinical reasoning skills and joint decision-making in small teams. Moreover, this format encourages the ‘explicit integration’ of clinical reasoning in the undergraduate years of the medical curriculum, as encouraged in a recent consensus statement by the UK Clinical Reasoning in Medical Education (CReME) group in order to foster effective clinical reasoning in teams [ 21 ]. Importantly, we believe that the collaborative format also highlights the importance of a truly patient-centred care to participating students, which may not be as evident in CBL’10.

While it is expected that cCBL encourages a higher level of participation and should result in better knowledge as well as better reasoning and decision making in clinical practice, it is not easy to summatively assess clinical reasoning in an undergraduate setting. Indeed, a systematic review of the use of CBL, drawing on worldwide practice, shows that the three top methods of evaluation of a CBL learning session were survey (36%), test (17%) and test plus survey (16%) [ 7 ]. OSCE was used less frequently—only 9% of the studies reviewed reported using a practical exam like OSCE [ 7 ] or prescribing [ 22 ] as an outcome for evaluating the effectiveness of CBL. Here, we share a mixed-methods evaluation of our implementation, consisting of both a survey focusing on issues of participation and motivation and on summative assessments to gauge the effects on integrative knowledge acquisition in subjects taught by either CBL format.

This pilot study focuses on the comparison of CBL’10 and cCBL as used in Phase 3 of the Glasgow Undergraduate Medical School curriculum. For this study, we converted three CBL’10 scenarios to cCBL, while other sessions continued unchanged as CBL’10. Evaluations by students and tutors of these sessions are presented, alongside a side-by-side comparison of the exam performance for topics taught by the two CBL formats. All tutors were trained by faculty, had experience of teaching both versions of CBL and were, as such, well acquainted with both methods when asked to compare the two. Similarly, all students surveyed had participated in both formats.

After presenting the initial case information to the class of 16 students, the group was divided into sub-groups of 3–4 students each. The cCBL included pre-class readiness assessment and in class activities in which students were asked to work on specific tasks individually (discuss differentials; propose investigations; suggest treatment and management), debate their answers in groups of 3–4 and record these on ‘post-it’ notes presented to the full class as part of the discussion to reach a consensus in the larger groups of 16 [ 13 ].

Year 3 students ( n = 270) were invited to take part in a survey, which gathered quantitative and qualitative data from the responding students (Table 1 ; n = 149; 55%) and from clinical tutors (Table 2 ; n = 36). The questionnaire addressed the participants’ perception of the effectiveness of the cCBL sessions. The survey was designed by NS and SS. The questions were based on the annual course evaluation forms and included core statutory questions used for teaching appraisal as well as bespoke ones specific to cCBLs. The survey has high face validity as the authors have extensive experience of questionnaire design, are experts in CBL and are highly experienced in curriculum design, development and evaluation.

The paper-based surveys were distributed and collected by the year administrator immediately after the cCBL teaching sessions and contained open and closed questions (5-point Likert scale: 1, strongly disagree; and 5, strongly agree) on the experiences of the group work, peer-to-peer interaction and the intended learning outcomes (ILOs) coverage throughout these sessions. Open questions in the survey were categorized into sub-themes through an inductive process, and the dominant thematic categories were agreed upon and analysed. Quotes were selected in relation to the whole dataset. Initial subthemes were refined through successive returns to the data, from which additional quotes were used.
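As an illustration of how closed-question responses on such a 5-point scale might be tabulated, the following is a minimal Python sketch. The function name `summarise_likert`, the choice to count scores of 4 and 5 as agreement, and the example responses are all assumptions made for illustration; they are not taken from the study or its data.

```python
from collections import Counter


def summarise_likert(responses, agree_threshold=4):
    """Summarise 5-point Likert responses (1 = strongly disagree, 5 = strongly agree).

    agree_threshold is an assumption: scores at or above it count as agreement.
    """
    if not responses:
        raise ValueError("no responses to summarise")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    counts = Counter(responses)
    n = len(responses)
    agree = sum(c for score, c in counts.items() if score >= agree_threshold)
    return {
        "n": n,
        # Report every scale point, including those nobody selected.
        "counts": {score: counts.get(score, 0) for score in range(1, 6)},
        "percent_agree": 100.0 * agree / n,
    }


# Hypothetical responses to one closed question (not data from the study).
example = [5, 4, 4, 3, 5, 2, 4, 5, 4, 1]
summary = summarise_likert(example)
print(summary)
```

Reporting the full distribution alongside the percentage agreeing keeps the neutral and negative responses visible rather than collapsing them into a single figure.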

We applied interpretive analysis to develop an argument based on the categories identified, in order to explain how the use of cCBLs has helped students to overcome team-learning difficulties using collaborative inductive reasoning. This nested mixed method [ 23 , 24 ] provides a stronger basis of causal inference by combining quantitative analysis of the large open question dataset with an in-depth investigation of the student and tutor responses embedded in the survey.

The second outcome measure was a comparative analysis of the end-of-year exam performance between two independent cohorts that were assessed on the topics covered in cCBL versus the same topics taught via CBL’10 in previous years. The three cCBL-taught topics assessed in 2019/2020 and 2020/2021 were myocardial infarction, chronic kidney disease and diabetes; the outcomes were compared to the results of 2015/2016 and 2018/2019, when students were assessed on the same topics, then taught via CBL’10. Student knowledge was assessed in summative exams using both multiple choice questions (MCQ) and modified essay questions (MEQ), with a similar weighting for each component. Exam questions were developed and standard set by subject experts involved in designing and delivering the curriculum and quality assured to ensure validity and reliability. Exam questions were subject to internal and external scrutiny. Questions were blueprinted to intended learning outcomes (ILOs) to ensure content validity and that the type of testing used (MEQs) is appropriate to test relevant knowledge in terms of diagnosis, investigation and management in a written format (Downing et al., 2003) [ 25 ]. MEQs are often used to assess higher order abilities and abstract knowledge according to Bloom’s taxonomy, for which they are preferred over MCQs [ 26 ]. For example, structured questions are mapped to intended learning outcomes such as the ability to ‘differentiate’, which requires analysing and conceptualizing knowledge [ 27 ]. Examinations were standard set using a modified Angoff method whereby the panel judges (subject experts) discuss each question and arbitrate the expected answers from students [ 28 ]. Internal consistency of the exams was determined by calculating Cronbach’s alpha [ 29 ]. Data are presented as mean exam score ± SE. An unpaired, two-tailed t test was used to test whether the difference in knowledge gain between the topics taught by the two methods was statistically significant; p < 0.01 was taken as the threshold for significance.
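The statistics named above can be sketched in a few lines of Python using only the standard library. This is a minimal sketch under stated assumptions, not the analysis pipeline used in the study: the function names are hypothetical, the t statistic assumes equal variances (Student's pooled form, which the text does not specify), and the scores are invented for illustration. The sketch stops at the t statistic and degrees of freedom; a two-tailed p value would then be read from the t distribution, for example with `scipy.stats.ttest_ind`.

```python
import math
from statistics import mean, variance


def mean_and_se(scores):
    """Return (mean, standard error of the mean) using the sample variance."""
    return mean(scores), math.sqrt(variance(scores) / len(scores))


def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores[i][j] is examinee i's score on item j."""
    k = len(item_scores[0])
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def unpaired_t(a, b):
    """Unpaired t statistic and degrees of freedom (assumes pooled variance)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, df


# Hypothetical percentage scores for two independent cohorts (not study data).
cbl10_scores = [60, 62, 58, 65, 61]
ccbl_scores = [68, 70, 66, 72, 69]

m, se = mean_and_se(cbl10_scores)
t_stat, dof = unpaired_t(ccbl_scores, cbl10_scores)

# Hypothetical item-level scores: three examinees, two perfectly consistent items.
alpha = cronbach_alpha([[1, 1], [2, 2], [3, 3]])

print(f"CBL'10 mean ± SE: {m:.1f} ± {se:.2f}")
print(f"t = {t_stat:.2f}, df = {dof}, alpha = {alpha:.2f}")
```

If the two cohorts had clearly unequal variances, Welch's form of the test would be the more cautious choice; the pooled form is used here only because it is the simplest reading of 'unpaired t test'.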

The Practice of CBL at the University of Glasgow

The three re-designed collaborative CBLs introduced in 2019/20 were compared to the remaining unchanged CBL’10s. The students took part in 90-min CBL sessions twice a week and all participated equally in both types of CBL sessions and all other aspects of the course. The key differences between the two types of CBL are shown in Table 3 .

In our CBL’10s, tutors led a more didactic session with occasional discussions designed to allow the students to contextualize the knowledge gained from earlier lectures while still building on the clinical and problem-solving aspects of the medical curriculum.

In cCBLs, students were expected to follow the structured script and were asked to formulate hypotheses. This required them to apply the knowledge gained from the supporting lectures and other teaching formats to various clinical presentations in three steps. First, they needed to generate a differential diagnosis and commit to a most likely diagnosis. The next step was to test the hypothesis and decide what additional tests they required in order to confirm or reject the initial diagnosis. Finally, students were asked to go beyond the diagnosis and explain their therapeutic goal, as well as consider the factors that might influence the patient’s response to therapy. Students fed their thoughts back to the main group using post-it notes.

Groups of 16 were further subdivided into smaller groups of 3–4 students each, which were given specific tasks (e.g. generating differential diagnosis; proposing investigations and/or possible treatment/management). Through interactions in the small break-out groups, students were able to integrate a variety of topics, including prevention, ethical issues and epidemiology or health systems issues and generate discussions necessary for confirming or refuting the initial diagnosis. The advantage of smaller groups was the opportunity to practice analytical reasoning skills in a safe environment. In terms of group size in shared reasoning, Edelbring et al. [ 30 ] found the dyad peer setting works best, although there was a concern that any knowledge asymmetry in the peer group may negatively impact on the learning experience.

Survey of Opinion of Collaborative Versus Traditional CBL Sessions

Qualitative analysis identified two overarching themes: enhanced engagement and gain of knowledge.

Enhanced engagement

Students and tutors both found that cCBLs increased student engagement and motivation for self-directed learning as a result of the more interactive nature of these sessions. Ninety percent of students and 92% of tutors thought that the cCBL sessions facilitated group discussions and thought them more interactive than the CBL’10 sessions; all had had experience with both formats.

‘Students were more engaged; the sessions generally were more interactive using breakout groups and post-it notes’. (Tutor 28). ‘Breakout stimulated discussion; smaller groups worked better, they were more interactive, more engaging and as a result, more enjoyable’. (Tutor 9). ‘Mini-group work is effective for discussion, the sessions are interactive, got you thinking about specific ideas first’. (Student 39).

As the small groups are presented with a set of clinical symptoms and a patient history in real-life cases, the discussions tend to draw on a wide range of topics and knowledge within the group.

‘Encourages discussion about dermatology + other systems’. (Student 86).

The biggest benefit—according to tutors and students—was the inclusivity, as the collaborative CBLs encouraged those who normally participate passively to engage better, due to the very small group size.

‘Definitely encourages more discussion and even from those who may not normally contribute’ (Tutor 16). ‘Allows to encourage even fairly non-responsive ones’ (Student 126).

With an increased engagement came a deeper understanding of the subject matter, and the students approached these sessions more confidently. Tutors commented that the students took control of their own learning and really practiced their clinical reasoning skills as intended, rather than relying on the tutors.

‘Encouraged students to identify own learning needs; onus is on students to breakout and discuss’. (Tutor 36). ‘Allowed students to be actively involved and develop clinical reasoning skills’. (Tutor 35). ‘Makes you think for yourself, got you thinking about specific ideas first and think of alternatives and different causes/potentials’. (Student 60).

Knowledge gain

Students felt that the interactivity helped contextualize the knowledge and they learnt better as a result of it; it was easier for them to concentrate on the topic when discussing it with their peers:

‘I felt like I learned more because I was more engaged as it was interactive; use of post-its was helpful in solidifying points from discussion and whether or not they were valid, in a way that previous CBLs lacked’. (Student 27, Student 20). ‘Very interactive which encouraged more active learning and discussions, were clinically relevant’. (Student 17).

Students felt that breaking down the topic to basics and building a hypothesis that they discussed with peers in smaller groups, allowed them to consolidate lectures.

‘Reinforces knowledge from lectures in a practical case situation; we learn more when we discuss the topic with others and it helps consolidate knowledge’. (Students 99 and 49). ‘[collaborative] CBLs wrapped up the lectures well and helped me to consolidate my knowledge’. (Student 42). ‘Breaking everything down to basics helped with understanding’. (Student 123). ‘Using ‘post-it's’ for differentials helps consolidate knowledge from the week; groups of 3 work best—groups should be really small, otherwise it doesn’t work’. (Student 21).

It should be noted, however, that a quarter of the students who responded to the survey were either neutral about (17%) or disliked (8%) the discussions in breakout groups. These students questioned the usefulness of the approach as they felt they had insufficient knowledge of the subject matter to engage effectively in small group discussions.

‘The format was not very helpful; I prefer when it goes through the case sequentially; don’t like too many breakout group discussions – often we don’t know enough to comment’. (Student 84). ‘Students didn’t enjoy the post-it notes and preferred to discuss things as opposed to writing down’. (Tutor 8). ‘It is difficult to answer/discuss very broad topics in small groups with limited knowledge. It is much easier in the previous weeks to follow a case from start to finish with smaller questions’. (Student 91).

Effect of the Introduction of cCBL on Summative Examinations

In order to objectively assess knowledge gain, we compared relevant exam performance of the cohorts that were taught specific topics via CBL’10 with those in later cohorts that received them as cCBLs.

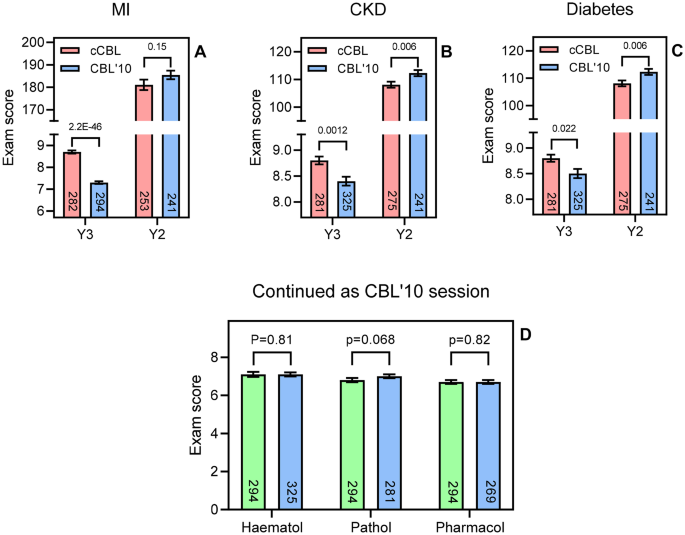

There was a highly significant improvement in the cCBL students’ exam marks for the questions on myocardial infarction in particular (MI; p = 2.2 × 10⁻⁴⁶, Fig. 2A), but also on chronic kidney disease (CKD; p = 0.0012, Fig. 2B) and diabetes (p = 0.022; Fig. 2C). As a control, the overall exam performance of the two selected cohorts at the end of year 2 was used, providing the nearest possible comparison. The two cohorts compared for MI, which sat the Y2 exam in 2018/19 (the Y3 cohort of 2019/2020) and 2014/2015 (the Y3 cohort of 2015/2016), respectively, did not differ significantly in this assessment, indicating that the two cohorts achieved comparable exam grades prior to cCBL (p = 0.15; Fig. 2A). Analysis of the end-of-Y2 exam for the cohorts compared for CKD and diabetes showed that the cohort that would go on to receive CBL’10 in these subjects performed slightly better in the Y2 exam than the cohort that would go on to do cCBL in Year 3 (p = 0.006; Fig. 2B, C).

Fig. 2 A–C Comparison of exam performance in year 3 on a specific topic between cohorts taught the subject by cCBL (red bars; 2019/2020 or 2020/2021) and by CBL’10 (blue bars; 2015/2016 and 2018/2019). The two cohorts were set questions of comparable difficulty and complexity in their Y3 exams. As a control, the overall cohort performance in the end of year 2 exam was also analysed for the same cohorts. Bars show average ± SEM and the t test score is indicated above the compared bars. D Y3 exam performance comparison for the two cohorts in three subjects taught only via CBL’10 and assessed by a similar question on the topic. Green bars, cohort of 2019/2020; blue bars, cohorts of 2020/2021, 2018/2019, and 2015/2016, respectively. The number of students in each cohort is shown in each bar.

However, not all topics in the 15-week Phase 3 were changed to cCBL at the same time. In order to allow a true side-by-side comparison, we analysed exam performance in topics that were still taught via CBL’10 in 2019/2020 (Fig. 2D, green bars) and compared these with exam performance in the same topics in previous years, also taught by CBL’10 (Fig. 2D, blue bars). In contrast to the gains seen in the cCBL topics of MI, CKD and diabetes (Fig. 2A–C), for the topics of haematology, pathology and pharmacology, taught via CBL’10 (and assessed in both cohorts), exam performance did not differ significantly (p = 0.81, 0.068 and 0.82, respectively; Fig. 2D). We can thus conclude that the improvements in exam scores in cCBL-taught topics are likely attributable to the cCBL format, as no such improvement was seen when the same cohorts were compared in topics still taught via CBL’10.
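As a reading aid, the p values quoted in this section can be tabulated against the significance criterion stated in the methods (p < 0.01). The sketch below only restates numbers from the text; the dictionary labels and the helper name `below_threshold` are our own.

```python
ALPHA = 0.01  # significance threshold stated in the methods

# p values as quoted in the text; the keys are our own labels.
reported_p = {
    "MI (cCBL)": 2.2e-46,
    "CKD (cCBL)": 0.0012,
    "diabetes (cCBL)": 0.022,
    "haematology (CBL'10)": 0.81,
    "pathology (CBL'10)": 0.068,
    "pharmacology (CBL'10)": 0.82,
}


def below_threshold(p_values, alpha=ALPHA):
    """Map each topic to True if its p value is below the threshold."""
    return {topic: p < alpha for topic, p in p_values.items()}


flags = below_threshold(reported_p)
for topic, flag in flags.items():
    print(f"{topic:24s} p = {reported_p[topic]:<8.2g} below {ALPHA}: {flag}")
```

On this reading, MI and CKD fall below the stated threshold, diabetes (p = 0.022) falls below 0.05 but not 0.01, and none of the three CBL’10 control topics approaches either level.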

CBL, in its original form [ 3 , 31 , 32 ], was introduced into our year 3 MBChB curriculum in 2010 and has consistently been one of the most highly evaluated components of the course. However, our tutor feedback indicated diminishing student engagement in the discussion elements of the class. Following the study by Krupat et al. [ 13 ], demonstrating an improved learning experience of students with the cCBL format, we introduced a similar change in academic year 2019/2020, in a subset of our CBL sessions, as a pilot study to address and investigate the student participation issues.

We have conducted a side-by-side comparison of the two cohorts that received both types of CBL and found that cCBL improved student engagement, motivation and knowledge gain and had a positive impact on assessment performance. Direct quantitative evidence of improved exam scores in the cCBL group was presented. Our findings are consistent with other studies that evaluated a switch to collaborative CBL [ 13 , 33 , 34 ], but our study is the first to compare two types of CBL in a mixed teaching year featuring both formats. While this study cannot firmly establish that the gain in the quality of learning by students can only be attributed to the switch from the original CBL to cCBL, no performance gain was seen in the subjects that remained taught as CBL’10. The majority of our students engaged well with the cCBL process and evaluated it positively.

We think cCBL sessions are particularly effective in developing clinical reasoning. The hypothesis-generating step and the discussions are opportunities for students to consolidate topics, first in small peer groups and then with the tutor for clarification, as required. This approach develops deeper clinical insights than didactic teaching can provide. The complex combination of skills and knowledge needed to arrive at differential diagnoses and evidence-based practice cannot be taught effectively via lectures alone [ 35 , 36 ]. Interactive teaching sessions with peers and tutors, involving discussions and clinical reasoning, are essential and highly valued by the current generation of students [ 37 ]. The qualitative analysis carried out in this study identified two overarching themes: gain of engagement/motivation and gain of knowledge.

Fredricks et al. [ 38 ] distinguish three types of engagement: behavioural, emotional and cognitive, describing participation, emotional responses to the learning environment and deliberate investment of effort by the student, respectively. Expanding on this theme, studies by Wang and Eccles [ 39 ] and Kahu [ 40 ] as well as DiBenedetto and Bembenutty [ 41 ] found that each engagement type influenced academic performance and aspiration in different ways, but that both aspects were positively correlated with self-regulated learning. A study with 5,805 undergraduate students refined the model further to show that the effects of a student’s emotional state on achievement are mediated through self-regulated learning and motivation [ 42 ]. Cavanagh et al. [ 43 ] identified student buy-in of the active learning format as an important factor for improved course performance, showing the need to engage with the student body in co-creating and developing teaching material and the format in which the students want to be taught.

The data collected from students who participated in the cCBL sessions indicated that the format enhanced learning, increased interactivity and promoted better learning of the topic through the group discussions. A large majority of the students believed that they learned more because of the interactive format and that the stepwise process of the cCBL enabled topics to be broken down, making the construction of hypotheses easier. They also reported that the collaborative format allowed better integration of diverse learning on the course, which built confidence and helped with consolidation. However, some of the students felt uneasy about being expected to discuss complex scenarios in such small groups, apparently not confident that they had the knowledge to do so. Table 1 shows that 12/149 responded ‘disagree’ or ‘strongly disagree’ to the survey question ‘The breakout sessions were useful in discussing topics with my peers’, with a further 25/149 responding ‘neutral’. It could be argued that these students may have actually benefitted most from this clinical reasoning training, as they will next find themselves in various ward placements requiring these skills.
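The counts quoted from Table 1 can be converted to the percentages used elsewhere in the text with a short calculation. This is a simple arithmetic check using only the figures given above; the variable and function names are our own.

```python
def percent(count, total):
    """Percentage of respondents, rounded to the nearest whole number."""
    return round(100 * count / total)


respondents = 149  # students answering the breakout-session question
negative = 12      # 'disagree' or 'strongly disagree'
neutral = 25       # 'neutral'

negative_pct = percent(negative, respondents)
neutral_pct = percent(neutral, respondents)
combined_pct = percent(negative + neutral, respondents)

print(f"negative: {negative_pct}%, neutral: {neutral_pct}%, "
      f"combined: {combined_pct}%")
```

The combined figure corresponds to the quarter of respondents who were neutral about, or disliked, the breakout discussions.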

The tutors found that the collaborative format made it easier to engage with and motivate students, particularly the quieter ones, who had less opportunity to ‘hide’ in the small groups. While this may have been somewhat uncomfortable for the most passive participants, we regard the increased (need for) participation as one of the most positive outcomes of the cCBL format. Students are more anxious about being called upon (by the tutor) to answer a question in a larger group than about taking part in discussions with a few of their peers. McConnell and Eva [ 44 ] discuss how a (pre)-clinical student’s emotional state can determine learning outcomes and how direct questioning can induce ‘fear and stress’. The use of ‘post-it’ notes to capture ideas and thoughts is another effective way to get everyone involved in the process and can help overcome silence in groups, as it serves to almost anonymize the opinions submitted to the larger group. Tutors also noted that cCBL helped students identify their own learning needs as the small group discussions readily identified knowledge gaps.

Almost all of the tutors (94%) surveyed felt more confident in leading the student discussions and felt that the session achieved the learning outcomes of the case. Thistlethwaite et al. [ 3 ] noted a similar satisfaction with CBL in tutors, attributing the enjoyment to either the use of authentic clinical cases, or the group learning effect. The cCBL development further extends these gains as our tutors noted that the smaller groups used in cCBL were probably the reason for the increased engagement. Moreover, tutors were more positive about delivering sessions because cCBL is more structured than CBL’10, which depends on participation by students in the full group; the iterative 3-step process provides tutors with a clear framework to follow.

The use of active learning is increasingly considered to be associated with student engagement and improved outcomes [ 45 ]. Bonwell and Eison [ 46 ] defined active learning ‘as instructional activities involving students in doing things and thinking about what they are doing’. It strengthens students’ use of higher order thinking to complete activities or participate in discussion in class, and it often includes working in groups. Students retain information for longer, as groups tend to learn through discussions, formulating hypotheses and evaluation of others’ ideas. Often, it helps them recognize the value of their contribution, resulting in increased confidence, as shown by the survey results. When views of cCBL were negative, it was almost always reflecting lack of confidence in their own knowledge, emphasizing the need to have CBL follow on from didactic teaching sessions, consolidating comprehension. This study confirms that cCBL encourages participation and that it is popular with most students, who find it a relevant way to prepare for the clinical phase of their medical education. Many students are still too passive in CBL’10, and the switch to cCBL aimed to increase ‘active learning’ for the entire cohort and thereby improve exam performance as well.

Although many medical schools are using active learning strategies, there is still little evidence in the literature that directly demonstrates a positive effect on summative assessment. Krupat et al. [ 13 ] showed an increase in attainment in lower aptitude students. In this study, we have shown exam performance improvements in all three subjects that switched to cCBL with a highly significant improvement in MI and modest improvements in two other topics. We attribute the very large improvement in cardiovascular question performance, relative to the more modest improvements in diabetes and nephrology, to the fact that the students have little cardiology teaching in year 2. In contrast, year 2 provided a solid understanding of the basic principles and clinical application of the other two subjects, which resulted in a less dramatic improvement when using cCBL, while the cardiovascular topic is a more sensitive test of the extent that knowledge gain is possible using cCBL. These increases in performance were compared to three other topics that remained taught by CBL’10 (haematology, pathology and pharmacology). None of these subjects showed any increase in assessment scores in the cohorts examined. Moreover, the cohorts performed virtually identically in their respective second year exams, suggesting that the difference in exam performance between the two cohorts taught via two different CBL formats could indeed be attributed to cCBL efficacy.

However, a limitation of any form of teaching is that one size rarely fits all. The collaborative style of CBL aims to encourage participation and discussion, with ample opportunity to explore issues in a safe environment, but we would wish to study how different individual learners perceive this and whether a more personalized approach could be developed. In addition, we would like to investigate whether non-specialty and specialty tutors find the process equally effective. It would have been logical to assess the true benefit of cCBL in a clinical setting, where the reasoning skills are exercised daily. However, a study evaluating students’ abilities to reason clinically once they have more experience of placements would face a number of potential confounders.

To summarize, modification of CBL to a more collaborative approach with very small breakout groups is effective in improving medical students’ engagement with tutors and peers and their performance in assessment. This side-by-side direct comparative study outlines the clear benefits of the collaborative format: practicing clinical reasoning in small groups, together with directed, focused discussions of the cases presented to the group, led to increased participation as well as improved summative examination scores.

References

1. Eisenstein A, Vaisman L, Johnston-Cox H, Gallan A, Shaffer K, Vaughan D, O’Hara C, Joseph L. Integration of basic science and clinical medicine: the innovative approach of the cadaver biopsy project at the Boston University School of Medicine. Acad Med. 2014;89:50–3.

2. Sturdy S. Scientific method for medical practitioners: the case method of teaching pathology in early twentieth-century Edinburgh. Bull Hist Med. 2007;81(4):760–92.

3. Thistlethwaite JE, Davies D, Ekeocha S, Kidd JM, MacDougall C, Matthews P, Purkis J, Clay D. The effectiveness of case-based learning in health professional education. A BEME systematic review: BEME Guide No. 23. Med Teach. 2012;34:e421–44.

4. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493–9. https://doi.org/10.1001/archinte.165.13.1493

5. Jhala M, Mathur J. The association between deep learning approach and case based learning. BMC Med Educ. 2019;19:106.

6. Srinivasan M, Wilkes M, Stevenson F, Nguyen T, Slavin S. Comparing problem-based learning with case-based learning: effects of a major curricular shift at two institutions. Acad Med. 2007;82:74–82.

7. McLean SF. Case-based learning and its application in medical and health-care fields: a review of worldwide literature. J Med Educ Curric Dev. 2016;3:39–49. https://doi.org/10.4137/JMECD.S20377

8. Tomey AM. Learning with cases. J Contin Educ Nurs. 2003;34:34–8.

9. Irby DM. Three exemplary models of case-based teaching. Acad Med. 1994;69:947–53.

10. Schwartz PL, Egan AG, Heath CJ. Students’ perceptions of course outcomes and learning styles in case-based courses in a traditional medical school. Acad Med. 1994;69:507.

11. Ten Cate O, Custers EJ, Durning SJ. Principles and practice of case-based clinical reasoning education: a method for preclinical students. Cham, Switzerland: Springer. 2018:208. ISBN 978-3-319-64827-9.

12. Mayo JA. Using case-based instruction to bridge the gap between theory and practice in psychology of adjustment. J Construct Psychol. 2004;17:137–46. https://doi.org/10.1080/10720530490273917

13. Krupat E, Richards J, Sullivan A, Fleenor T, Schwartzstein R. Assessing the effectiveness of case-based collaborative learning via randomized controlled trial. Acad Med. 2016;91:723–9.

14. Wassermann S. Introduction to case method teaching: a guide to the galaxy. New York: Teachers College Press; 1994.

15. McDade SA. Case study pedagogy to advance critical thinking. Teach Psychol. 1995;22:9–10.

16. Krockenberger MB, Bosward KL, Canfield PJ. Integrated case-based applied pathology (ICAP): a diagnostic-approach model for the learning and teaching of veterinary pathology. J Vet Med Educ. 2007;34:396–408.

17. Koh D, Chia KS, Jeyaratnam J, Chia SE, Singh J. Case studies in occupational medicine for medical undergraduate training. Occup Med. 1995;45:27–30.

18. Wadowski PP, Steinlechner B, Schiferer A, Löffler-Statka H. From clinical reasoning to effective clinical decision making – new training methods. Front Psychol. 2015;6:473.

19. Turk B, Ertl S, Wong G, Wadowski PP, Löffler-Statka H. Does case-based blended-learning expedite the transfer of declarative knowledge to procedural knowledge in practice? BMC Med Educ. 2019;19:447. https://doi.org/10.1186/s12909-019-1884-4

20. Balogh EP, Miller BT, Ball JR (Eds). Improving diagnosis in health care. Committee on diagnostic error in health care; Board on health care services; Institute of medicine; The national academies of sciences, engineering, and medicine. Washington (DC): The National Academies Press. 2015.

21. Cooper N, Bartlett M, Gay S, Hammond A, Lillicrap M, Matthan J, Singh M. UK Clinical Reasoning in Medical Education (CReME) consensus statement group. Consensus statement on the content of clinical reasoning curricula in undergraduate medical education. Med Teach. 2021;43:152–22.

22. Herdeiro MT, Ferreira M, Ribeiro-Vaz I, Junqueira Polónia J, Costa-Pereira A. Workshop- and telephone-based Interventions to improve adverse drug reaction reporting: a cluster-randomized trial in Portugal. Drug Saf. 2012;35:655–65. https://doi.org/10.1007/BF03261962

23. Creswell JW. Research design: qualitative, quantitative, and mixed methods approaches. 2nd ed. Thousand Oaks, CA: Sage; 2003.

24. Lieberman ES. Nested analysis as a mixed-method strategy for comparative research. Am Pol Sci Rev. 2005;99:435–52.

25. Downing SM. Validity: on the meaningful interpretation of assessment data. Med Educ. 2003;37:830–7.

26. Palmer EJ, Devitt PG. Assessment of higher order cognitive skills in undergraduate education: modified essay or multiple choice questions? BMC Med Educ. 2007;7:49. https://doi.org/10.1186/1472-6920-7-49

27. Krathwohl DR. A revision of Bloom’s taxonomy: an overview. Theory Pract. 2002;41(4):212–8.

28. Norcini JJ. Setting standards on educational tests. Med Educ. 2003;37:464–9.

29. Raykov T, Marcoulides GA. Thanks coefficient alpha, we still need you! Educ Psychol Meas. 2017;2017(79):200–10.

30. Edelbring S, Parodis I, Lundberg IE. Increasing reasoning awareness: video analysis of students’ two-party virtual patient interactions. JMIR Med Educ. 2018;4:e4. https://doi.org/10.2196/mededu.9137

31. Garvey MT, O’Sullivan M, Blake M. Multidisciplinary case-based learning for undergraduate students. Eur J Dent Educ. 2000;4:165–8.

32. Struck BD, Teasdale TA. Development and evaluation of a longitudinal case-based learning (CBL) experience for a Geriatric Medicine rotation. Gerontol Geriat Educ. 2008;28:105–14.

33. Fischer K, Sullivan AM, Krupat E, Schwartzstein RM. Assessing the effectiveness of using mechanistic concept maps in case-based collaborative learning. Acad Med. 2019;94:208–12.

34. Said JT, Thompson LL, Foord L, Chen ST. Impact of a case-based collaborative learning curriculum on knowledge and learning preferences of dermatology residents. Int J Womens Dermatol. 2020;6:404–8.

35. Wouda JC, Van de Wiel HB. Education in patient-physician communication: how to improve effectiveness? Patient Educ Couns. 2013;90:46–53.

36. Draaisma E, Bekhof J, Langenhorst VJ, Brand PL. Implementing evidence-based medicine in a busy general hospital department: results and critical success factors. BMJ Evid Based Med. 2018;23:173–6.

37. Koenemann N, Lenzer B, Zottmann JM, Fischer MR, Weidenbusch M. Clinical Case Discussions - a novel, supervised peer-teaching format to promote clinical reasoning in medical students. GMS J Med Educ. 2020;37:Doc48.

38. Fredricks JA, Blumenfeld PC, Paris AH. School engagement: potential of the concept, state of the evidence. Rev Educ Res. 2004;74:59–109.

39. Wang M, Eccles J. Adolescent behavioral, emotional, and cognitive engagement trajectories in school and their differential relations to educational success. J Res Adolesc. 2012;22:31–9.

40. Kahu ER. Framing student engagement in higher education. Stud High Educ. 2013;38(5):758–73. https://doi.org/10.1080/03075079.2011.598505

41. DiBenedetto MK, Bembenutty H. Within the pipeline: self-regulated learning, self-efficacy, and socialization among college students in science courses. Learn Individ Differ. 2013;23:218–24.

42. Mega C, Ronconi L, De Beni R. What makes a good student? How emotions, self-regulated learning, and motivation contribute to academic achievement. J Educ Psychol. 2014;106:121.

43. Cavanagh AJ, Aragon OR, Chen X, Couch BA, Durham MF, Bobrownicki A, Hanauer DI, Graham MJ. Student buy in to active learning in a college science course. CBE Life Sci Educ. 2016;15:ar76. https://doi.org/10.1187/cbe.16-07-0212

44. McConnell MM, Eva KW. The role of emotion in the learning and transfer of clinical skills and knowledge. Acad Med. 2012;87:1316–22.

45. Freeman S, O’Connor E, Parks JW, Cunningham M, Hurley D, Haak D, Dirks C, Wenderoth MP. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci USA. 2014;111:8410–5.

46. Bonwell CC, Eison JA. Active learning: creating excitement in the classroom. ASHE-ERIC Higher Education Report No. 1. Washington (DC): George Washington University, School of Education and Human Development. 1991. ISBN 1-878380-08-7.

Author information

Authors and affiliations.

Undergraduate Medical School, School of Medicine, University of Glasgow, Glasgow, UK

Nana Sartania, Sharon Sneddon, James G. Boyle & Emily McQuarrie

Institute of Infection, Immunity and Inflammation, University of Glasgow, Glasgow, UK

Harry P. de Koning

Corresponding author

Correspondence to Nana Sartania .

Ethics declarations

Ethics approval.

Ethical approval for the project was granted by the MVLS College Ethics Committee of the University of Glasgow (Ref 200190106).

Consent to Participate

Consent to participate was obtained from all individual participants included in the study.

Conflict of Interest

The authors declare no conflict of interest.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Sartania, N., Sneddon, S., Boyle, J.G. et al. Increasing Collaborative Discussion in Case-Based Learning Improves Student Engagement and Knowledge Acquisition. Med.Sci.Educ. 32 , 1055–1064 (2022). https://doi.org/10.1007/s40670-022-01614-w

Accepted : 25 August 2022

Published : 05 September 2022

Issue Date : October 2022

DOI : https://doi.org/10.1007/s40670-022-01614-w

- Medical education

- Case-based learning

- Collaborative learning

- Small group teaching

- Volume 9, Issue 9

- Can clinical case discussions foster clinical reasoning skills in undergraduate medical education? A randomised controlled trial

- Marc Weidenbusch 1 , 2 ,

- http://orcid.org/0000-0003-2239-797X Benedikt Lenzer 1 ,

- Maximilian Sailer 3 ,

- Christian Strobel 1 ,

- Raphael Kunisch 2 ,

- Jan Kiesewetter 1 ,

- Martin R Fischer 1 ,

- http://orcid.org/0000-0002-3887-1181 Jan M Zottmann 1

- 1 Institute for Medical Education, University Hospital of LMU Munich , Munich , Germany

- 2 Department of Internal Medicine IV , University Hospital of LMU Munich , Munich , Germany

- 3 Department of Education , University of Passau , Passau , Germany

- Correspondence to Dr Jan M Zottmann; jan.zottmann{at}med.uni-muenchen.de

Objective Fostering clinical reasoning is a mainstay of medical education. Based on the clinicopathological conferences, we propose a case-based peer teaching approach called clinical case discussions (CCDs) to promote the respective skills in medical students. This study compares the effectiveness of different CCD formats with varying degrees of social interaction in fostering clinical reasoning.

Design, setting, participants A single-centre randomised controlled trial with a parallel design was conducted at a German university. Study participants (N=106) were stratified and tested regarding their clinical reasoning skills right after CCD participation and 2 weeks later.

Intervention Participants worked within a live discussion group (Live-CCD), a group watching recordings of the live discussions (Video-CCD) or a group working with printed cases (Paper-Cases). The presentation of case information followed an admission-discussion-summary sequence.

Primary and secondary outcome measures Clinical reasoning skills were measured with a knowledge application test addressing the students’ conceptual, strategic and conditional knowledge. Additionally, subjective learning outcomes were assessed.

Results With respect to learning outcomes, the Live-CCD group displayed the best results, followed by Video-CCD and Paper-Cases, F(2,87)=27.07, p<0.001, partial η²=0.384. No difference was found between Live-CCD and Video-CCD groups in the delayed post-test; however, both outperformed the Paper-Cases group, F(2,87)=30.91, p<0.001, partial η²=0.415. Regarding subjective learning outcomes, the Live-CCD received significantly better ratings than the other formats, F(2,85)=13.16, p<0.001, partial η²=0.236.
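As a reading aid, the reported effect sizes can be cross-checked against the F statistics. The short Python sketch below assumes the standard identity for partial eta squared, F × df_effect / (F × df_effect + df_error); the function name and dictionary labels are illustrative and not taken from the study.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) exactly as reported in the Results
reported = {
    "learning outcomes": (27.07, 2, 87),
    "delayed post-test": (30.91, 2, 87),
    "subjective learning outcomes": (13.16, 2, 85),
}

# Round to three decimals to match the precision used in the abstract
effect_sizes = {
    name: round(partial_eta_squared(*stats), 3) for name, stats in reported.items()
}

for name, value in effect_sizes.items():
    print(f"{name}: partial eta squared = {value}")
```

Under that identity, the three recomputed values agree with the reported 0.384, 0.415 and 0.236.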

Conclusions This study demonstrates that the CCD approach is an effective and sustainable clinical reasoning teaching resource for medical students. Subjective learning outcomes underline the importance of learner (inter)activity in the acquisition of clinical reasoning skills in the context of case-based learning. Higher efficacy of more interactive formats can be attributed to positive effects of collaborative learning. Future research should investigate how the Live-CCD format can further be improved and how video-based CCDs can be enhanced through instructional support.

- undergraduate medical education

- case-based learning

- clinical reasoning

- social interaction

- medical decision making

This is an open access article distributed in accordance with the Creative Commons Attribution Non Commercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited, appropriate credit is given, any changes made indicated, and the use is non-commercial. See: http://creativecommons.org/licenses/by-nc/4.0/ .

https://doi.org/10.1136/bmjopen-2018-025973


Strengths and limitations of this study

- First empirical study on the implementation of clinical case discussions in undergraduate medical education.

- Comparison of clinical case discussions with differing grades of social interaction to determine their effectiveness on medical students’ acquisition of clinical reasoning skills by between-group analyses.

- Implementation of multidimensional and multilayered test instruments in a pre-test, post-test and delayed post-test design to measure clinical reasoning skills with a knowledge application test and self-assessment.

- The knowledge application test utilised in this study did not allow for a more in-depth analysis of clinical reasoning skills (ie, a distinction of conceptual, strategic and conditional knowledge).

Introduction

Curriculum developers face the challenge of implementing competence-oriented frameworks such as CanMEDS (Canada; http://www.royalcollege.ca/canmeds ), NKLM (Germany; http://www.nklm.de ) or PROFILES (Switzerland; http://www.profilesmed.ch ), including the need to train clinical reasoning skills as a medical doctor’s key competence. 1–3 As such, clinical reasoning skills are crucial not only for appropriate medical decision making but also to avoid diagnostic errors and the associated harm for both patients and healthcare systems. 4

Case-based learning has been proposed to foster clinical reasoning skills 5 and is well accepted among students. 6 Case-based learning found an early representation in clinicopathological conferences (CPCs, first introduced by Cannon in 1900 7 ), which are still practised today. The CPCs conducted at the Massachusetts General Hospital are published regularly as the Case Records series of the New England Journal of Medicine. In those CPCs, the ‘medical mystery’ 8 presented by the case under discussion invites readers to think about the possible diagnosis themselves before it is finally disclosed in the last part of the CPC. Despite the absence of definitive evidence for their efficacy as a teaching method, CPCs have been widely used in medical education since the early 20th century to foster clinical reasoning. 9–11 While CPC case records reach many medical readers around the world, they have been criticised as anachronistic, with a diagnosing ‘star’ (ie, the discussant) performing while acutely aware of being the centre of attention. 12

Case-based learning formats are embedded in a context, which is known to promote learning better than providing facts in an abstract, non-contextual form. 13 A definition found in the review by Merseth suggests three essential elements of a case: a case is real (ie, based on a real-life situation or event); it relies on careful research and study; it is ‘created explicitly for discussion and seeks to include sufficient detail and information to elicit active analysis and interpretation by users’. 14 Cases may be represented by means of text, pictures, videos and the like. Realism and authenticity are varying features of cases, 15 but particularly elaborated and authentic cases provide increased diagnostic challenge, comprising added value for medical training. 16

However, due to their setup, CPCs are often passive learning situations for participants, as they listen to the discussant laying out his or her clinical reasoning on the case under discussion. According to the ICAP framework by Chi et al , 17 the efficacy of teaching formats increases in the order passive < active < constructive < interactive learning environments. Learning is enhanced when students interactively engage in discussions with each other. Accordingly, case-based learning has been found to be particularly beneficial in collaborative settings. 15 However, another important aspect to consider in collaborative learning environments is that some students may participate passively, while others contribute disproportionately. To foster optimal learning effects, students should thus be encouraged to engage interactively. One prerequisite for self-guided learning in groups is a low threshold for students to come forward with their questions and participate in the ensuing discussions. 18 To this end, peer teaching has been established as an effective tool to stimulate discussions. 19 To make sure peer tutors are not overwhelmed in moderating these discussions, the presence of an experienced clinician appears to be warranted 20 in addition to specific training of the tutors.

Taken together, while traditional CPCs encompass some important dimensions of effective case-based learning environments, they are not systematically aiming at constructive or interactive learner activities that are known features of effective teaching formats. 17 21 Therefore, we introduced clinical case discussions (CCD) in undergraduate medical education to account for these features. We still use the case records of the Massachusetts General Hospital, 9 as these cases exemplify realistic patient encounters and fulfil the criteria for an interactive collaborative learning process as explained above. In the CCD approach, cases are typically presented with information until the admission of the patient to the hospital. This event is usually the starting point of an interactive discussion phase of the group about possible diagnoses and diagnostic strategies. After all test results have been discussed, the actual diagnosis is disclosed and the pitfalls and take-home messages of the case are summarised.

To investigate the effectiveness of the CCD approach in undergraduate medical education, we designed an intervention trial and assessed clinical reasoning skills in medical students before and after participating in live CCDs or being exposed to video recordings of live CCDs. We compared these formats and their effects on clinical reasoning with the more traditional approach of working through written cases. When carrying out this randomised trial, we hypothesised that participation in live CCD sessions would lead to a higher increase of clinical reasoning skills than simply reading the cases. To better understand possible effects of the CCD learning environment with its social components on learning outcomes, participation in live CCDs as outlined above was additionally compared with the effects of watching videos of CCDs online. This comparison also seemed relevant from an economic point of view as videostreaming of lectures and seminars is prevalent at many institutions in higher education, allowing for flexible and scalable access to learning materials. 22 To investigate the potential of different CCD formats for regular curricular use, we also measured subjective learning outcomes after the intervention and correlated student self-assessments with objective changes in their clinical reasoning skills.

Participants

Initially, we recruited 106 volunteer medical students at the Medical Faculty of LMU Munich. Randomisation was performed in a two-step procedure. First, we selected a sample of roughly 100 enrolled students. Next, we stratified participants by creating triplets on the basis of the variables age, gender, year of study, prior CCD participation and performance in a knowledge application pre-test. This was done in an effort to limit the risk of random misdistribution of the selected sample. From each triplet, we randomly assigned participants to the experimental groups. A total of 90 participants eventually completed the study, of whom 31 were male and 59 female. They were aged 20–41 years (M=23; SD=2.97) and in their first to eighth clinical semester (M=3.50; SD=1.78).
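The triplet-based allocation described above can be illustrated with a minimal Python sketch. It is not the authors' procedure: the text does not state how triplets were matched, so the sketch assumes that similar students are grouped by sorting on the stratification variables, and the `Student` fields, the function `assign_by_triplets` and the synthetic cohort are all hypothetical.

```python
import random
from dataclasses import dataclass

ARMS = ("Live-CCD", "Video-CCD", "Paper-Cases")


@dataclass(frozen=True)
class Student:
    student_id: int
    age: int
    gender: str
    clinical_semester: int
    prior_ccd: bool
    pretest_score: float


def assign_by_triplets(students, seed=0):
    """Group similar students into triplets, then randomise arms within each triplet."""
    rng = random.Random(seed)
    # Assumption: similarity is approximated by sorting on the stratification variables.
    ordered = sorted(
        students,
        key=lambda s: (s.gender, s.prior_ccd, s.clinical_semester, s.pretest_score, s.age),
    )
    assignment = {}
    for start in range(0, len(ordered), 3):
        triplet = ordered[start:start + 3]
        arms = list(ARMS)
        rng.shuffle(arms)  # random order of the three arms for this triplet
        # zip stops at the shorter sequence, so an incomplete last triplet is handled
        for student, arm in zip(triplet, arms):
            assignment[student.student_id] = arm
    return assignment


# Synthetic cohort for demonstration only; none of these values come from the study.
demo_rng = random.Random(42)
cohort = [
    Student(
        student_id=i,
        age=demo_rng.randint(20, 41),
        gender=demo_rng.choice(["f", "m"]),
        clinical_semester=demo_rng.randint(1, 8),
        prior_ccd=demo_rng.random() < 0.3,
        pretest_score=demo_rng.uniform(0, 100),
    )
    for i in range(106)
]

allocation = assign_by_triplets(cohort, seed=1)
counts = {arm: sum(1 for a in allocation.values() if a == arm) for arm in ARMS}
print(counts)
```

Because every complete triplet contributes exactly one student to each arm, group sizes at allocation can differ by at most one. The unequal group sizes in the analysed sample would then reflect dropout after allocation rather than the allocation itself.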

The study was approved by the ethics committee of the Medical Faculty of LMU Munich (approval reference no. 222–15). Written informed consent was obtained from all study participants and they received a financial reimbursement of 50 Euros on completion of the trial.

Patient and public involvement

No patients or public were involved in this research.

Study design

We conducted a single-centre randomised controlled trial consisting of a total of five course sessions with a parallel design (see figure 1 ). One week prior to the first CCD session, participants were introduced to the principles of the CCD approach and the sequence of this trial in an introductory session where they also took a knowledge application pre-test (T_0). In the experimental phase, participants attended 3 weekly interventional course sessions of 90 min each in one of three experimental groups with the respective CCD formats. Participants took a knowledge application post-test at the end of the last experimental course session (T_1), 4 weeks after pre-testing. A delayed knowledge application post-test was conducted 2 weeks after completion of the interventional courses (T_2); we deliberately chose that time interval to investigate the sustainability of possible effects while balancing the risk of postintervention confounding. 23


Figure 1 Study design. Full data sets of 90 medical students were analysed. T_0, knowledge application pre-test; T_1, knowledge application post-test; T_2, delayed knowledge application post-test.

In all experimental groups, the intervention was based on the same three independent internal medicine cases. Chief complaints in these cases were paraesthesia (first session), fever and respiratory failure (second session) and rapidly progressive respiratory failure (third session). 24–26 Cases were worked through in an iterative approach in different formats: (1) peer-moderated live case discussions in an interactive setting (Live-CCD, n=30), (2) a single-learner format utilising an interactive multimedia platform displaying video recordings of the live case discussions (Video-CCD, n=27) and (3) a single-learner format in which the students worked with the original paper cases of the NEJM (Paper-Cases, n=33). The cases were prepared in such a way that participants in each format were exposed to the same case information.

In all three groups, cases were presented in a specified structured manner similar to the original CPC (see figure 2 ). In each format, the students (‘discussants’) had to fill out a form after the admission in which the case had to be summarised and a list of clinical problems and working diagnoses had to be provided. Subsequently, between discussion and summary a second case summary had to be completed in which the final diagnostic test and the most likely diagnosis had to be proposed.

Figure 2 Live-CCD structure. CCD sessions are divided into three parts. In the admission part, the presenting student shows the discussants his prepared slides (based on the original NEJM case record), after which the group has to agree on an assessment of the patient under discussion. In the interactive discussion part, the students prioritise the medical problems, link them to possible aetiologies and order tests to further corroborate or discard differential diagnoses. After all these tests have been discussed, students order the putative diagnostic test. The result is disclosed along with the pathological discussion and ‘take home messages’ on important differentials in the third part of the session. CBC, complete blood count; CC, chief complaint; CCD, clinical case discussion; CMP, comprehensive metabolic panel; CXR, chest radiograph; FH, family history; HPI, history of present illness; Meds, medications; PE, physical examination; PMH, past medical history; PT, prothrombin time; PTT, partial thromboplastin time; ROS, review of systems; SH, social history; UA, urine analysis; VS, vital signs.