Critical thinking

We’ve already established that information can be biased. Now it’s time to look at our own bias.

Studies have shown that we are more likely to accept information when it fits into our existing worldview, a phenomenon known as confirmation or myside bias (for examples see Kappes et al., 2020; McCrudden & Barnes, 2016; Pilditch & Custers, 2018). Wittebols (2019) defines it as a “tendency to be psychologically invested in the familiar and what we believe and less receptive to information that contradicts what we believe” (p. 211). Quite simply, we may reject information that doesn’t support our existing thinking.

This can manifest in a number of ways with Hahn and Harris (2014) suggesting four main behaviours:

- Searching only for information that supports our held beliefs

- Failing to critically evaluate information that supports our held beliefs - accepting it at face value - while explaining away or being overly critical of information that might contradict them

- Becoming set in our thinking, once an opinion has been formed, and deliberately ignoring any new information on the topic

- A tendency to be overconfident with the validity of our held beliefs.

Peters (2020) also suggests that we’re more likely to remember information that supports our way of thinking, further cementing our bias. Taken together, the research suggests that bias has a huge impact on the way we think. To learn more about how and why bias can impact our everyday thinking, watch this short video.

Filter bubbles and echo chambers

The theory of filter bubbles emerged in 2011, proposed by Internet activist Eli Pariser. He defined a filter bubble as “your own personal unique world of information that you live in online” (Pariser, 2011, 4:21). At the time, Pariser focused on the impact of the algorithms behind social media platforms and search engines, which prioritised content and personalised results based on the individual’s past online activity, suggesting “the Internet is showing us what it thinks we want to see, but not necessarily what we should see” (Pariser, 2011, 3:47). Watch his TED talk if you’d like to know more.

Our understanding of filter bubbles has now expanded to recognise that individuals also select and create their own filter bubbles. This happens when you seek out likeminded individuals or sources, or follow friends and people you admire on social media: people with whom you’re likely to share common beliefs, points of view, and interests. Barack Obama (2017) addressed the concept of filter bubbles in his presidential farewell address:

For too many of us it’s become safer to retreat into our own bubbles, whether in our neighbourhoods, or on college campuses, or places of worship, or especially our social media feeds, surrounded by people who look like us and share the same political outlook and never challenge our assumptions… Increasingly we become so secure in our bubbles that we start accepting only information, whether it’s true or not, that fits our opinions, instead of basing our opinions on the evidence that is out there. (Obama, 2017, 22:57)

Filter bubbles are not unique to the social media age. Previously, the term echo chamber was used to describe the same phenomenon in the news media, where different channels exist, catering to different points of view. Within an echo chamber, people are able to seek out information that supports their existing beliefs, without encountering information that might challenge, contradict or oppose them.

Other forms of bias

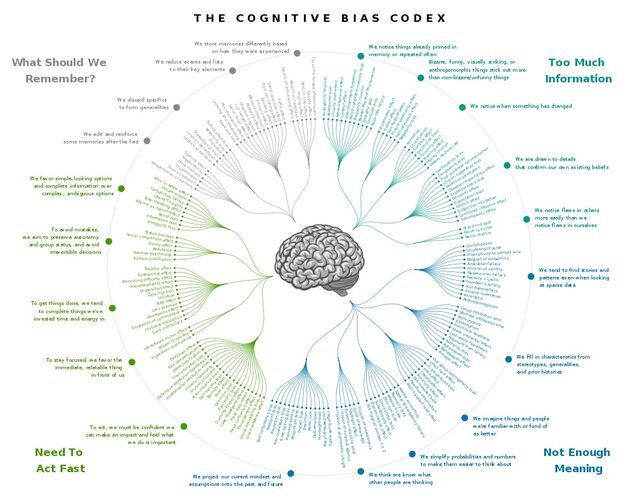

There are many different ways in which bias can affect the way you think and how you process new information. Try the quiz below to discover some additional forms of bias, or check out Buzzfeed’s 2017 article on cognitive bias.

Cognitive Bias Is the Loose Screw in Critical Thinking

Recognizing your biases enhances understanding and communication.

Posted May 17, 2021 | Reviewed by Jessica Schrader

- People cannot think critically unless they are aware of their cognitive biases, which can alter their perception of reality.

- Cognitive biases are mental shortcuts people take in order to process the mass of information they receive daily.

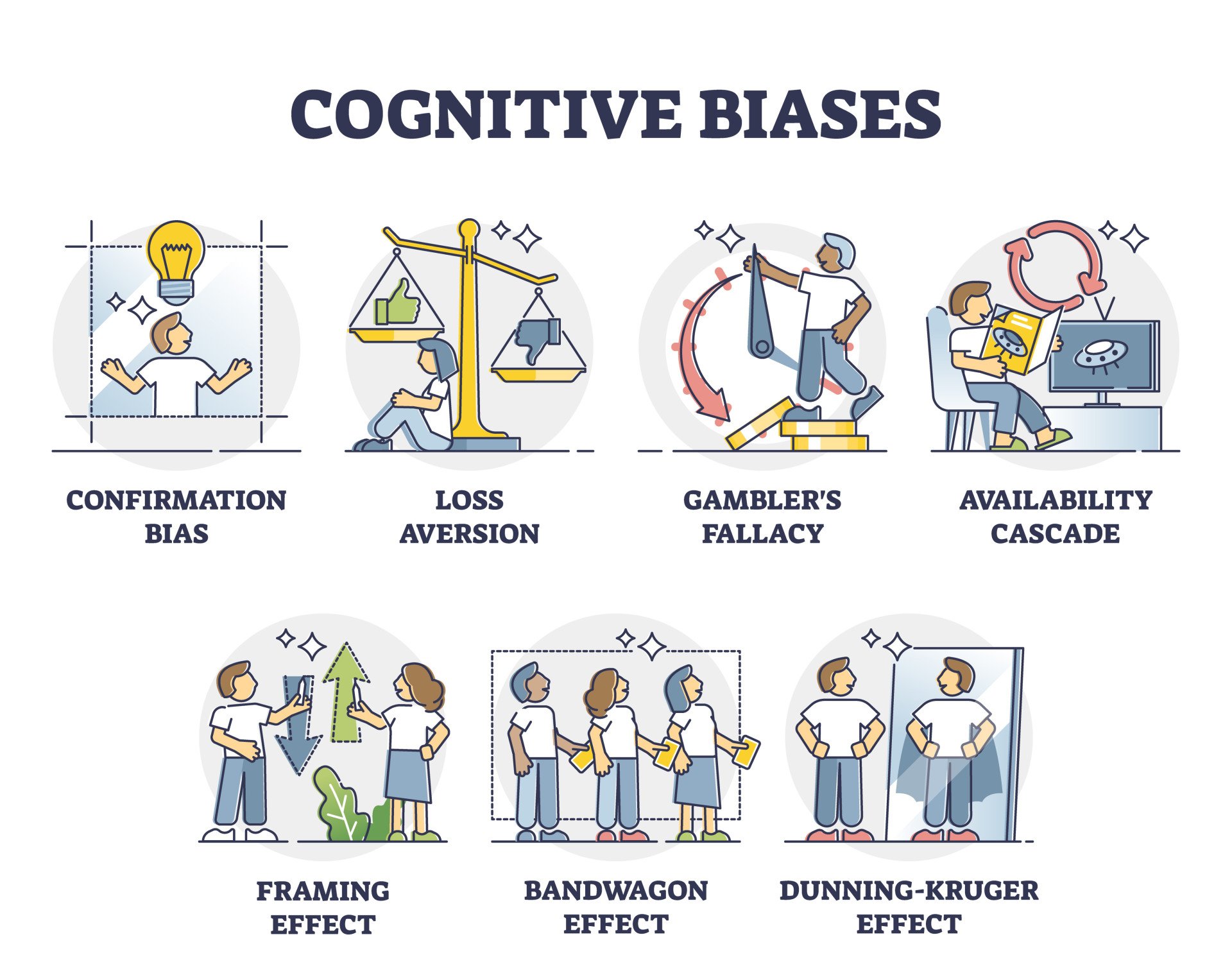

- Cognitive biases include confirmation bias, anchoring bias, bandwagon effect, and negativity bias.

When I was a kid, I was enamored of cigarette-smoking movie stars. When I was a teenager, some of my friends began to smoke; I wanted to smoke too, but my parents forbade it. I was also intimidated by the ubiquitous anti-smoking commercials I saw on television warning me that smoking causes cancer. As much as I wanted to smoke, I was afraid of it.

When I started college as a pre-med major, I also started working in a hospital emergency room. I was shocked to see that more than 90% of the nurses working there were smokers, but that was not quite enough to convince me that smoking was OK. It was the doctors: 11 of the 12 emergency room physicians I worked with were smokers. That was all the convincing I needed. If actual medical doctors thought smoking was safe, then so did I. I started smoking without concern because I had fallen prey to an authority bias, which is a type of cognitive bias. Fortunately for my health, I wised up and quit smoking 10 years later.

It's Likely You're Unaware of These Habits

Have you ever thought someone was intelligent simply because they were attractive? Have you ever dismissed a news story because it ran in a media source you didn’t like? Have you ever thought or said, “I knew that was going to happen!” in reference to a team winning, a stock going up in value, or some other unpredictable event occurring? If you replied “yes” to any of these, then you may be guilty of relying on a cognitive bias.

In my last post, I wrote about the importance of critical thinking, and how in today’s information age, no one has an excuse for living in ignorance. Since then, I recalled a huge impediment to critical thinking: cognitive bias. We are all culpable of leaning on these mental crutches, even though we don’t do it intentionally.

What Are Cognitive Biases?

The Cambridge English Dictionary defines cognitive bias as the way a particular person understands events, facts, and other people, which is based on their own particular set of beliefs and experiences and may not be reasonable or accurate.

PhilosophyTerms.com calls it a bad mental habit that gets in the way of logical thinking.

PositivePsychology.com describes it this way: “We are often presented with situations in life when we need to make a decision with imperfect information, and we unknowingly rely on prejudices or biases.”

And, according to Alleydog.com, a cognitive bias is an involuntary pattern of thinking that produces distorted perceptions of people, surroundings, and situations around us.

In brief, a cognitive bias is a shortcut to thinking. And, it’s completely understandable; the onslaught of information that we are exposed to every day necessitates some kind of time-saving method. It is simply impossible to process everything, so we make quick decisions. Most people don’t have the time to thoroughly think through everything they are told. Nevertheless, as understandable as depending on biases may be, it is still a severe deterrent to critical thinking.

Here's What to Watch Out For

Wikipedia lists 197 different cognitive biases. I am going to share with you a few of the more common ones so that in the future, you will be aware of the ones you may be using.

Confirmation bias is when you prefer to attend to media and information sources that are in alignment with your current beliefs. People do this because it helps maintain their confidence and self-esteem when the information they receive supports their knowledge set. Exposing oneself to opposing views and opinions can cause cognitive dissonance and mental stress. On the other hand, exposing yourself to new information and different viewpoints helps open up new neural pathways in your brain, which will enable you to think more creatively (see my post: Surprise: Creativity Is a Skill, Not a Gift!).

Anchoring bias occurs when you become committed or attached to the first thing you learn about a particular subject. A first impression of something or someone is a good example (see my post: Sometimes You Have to Rip the Cover Off). Similar to anchoring is the halo effect, which is when you assume that a person’s positive or negative traits in one area will be the same in some other aspect of their personality. For example, you might think that an attractive person will also be intelligent without seeing any proof to support it.

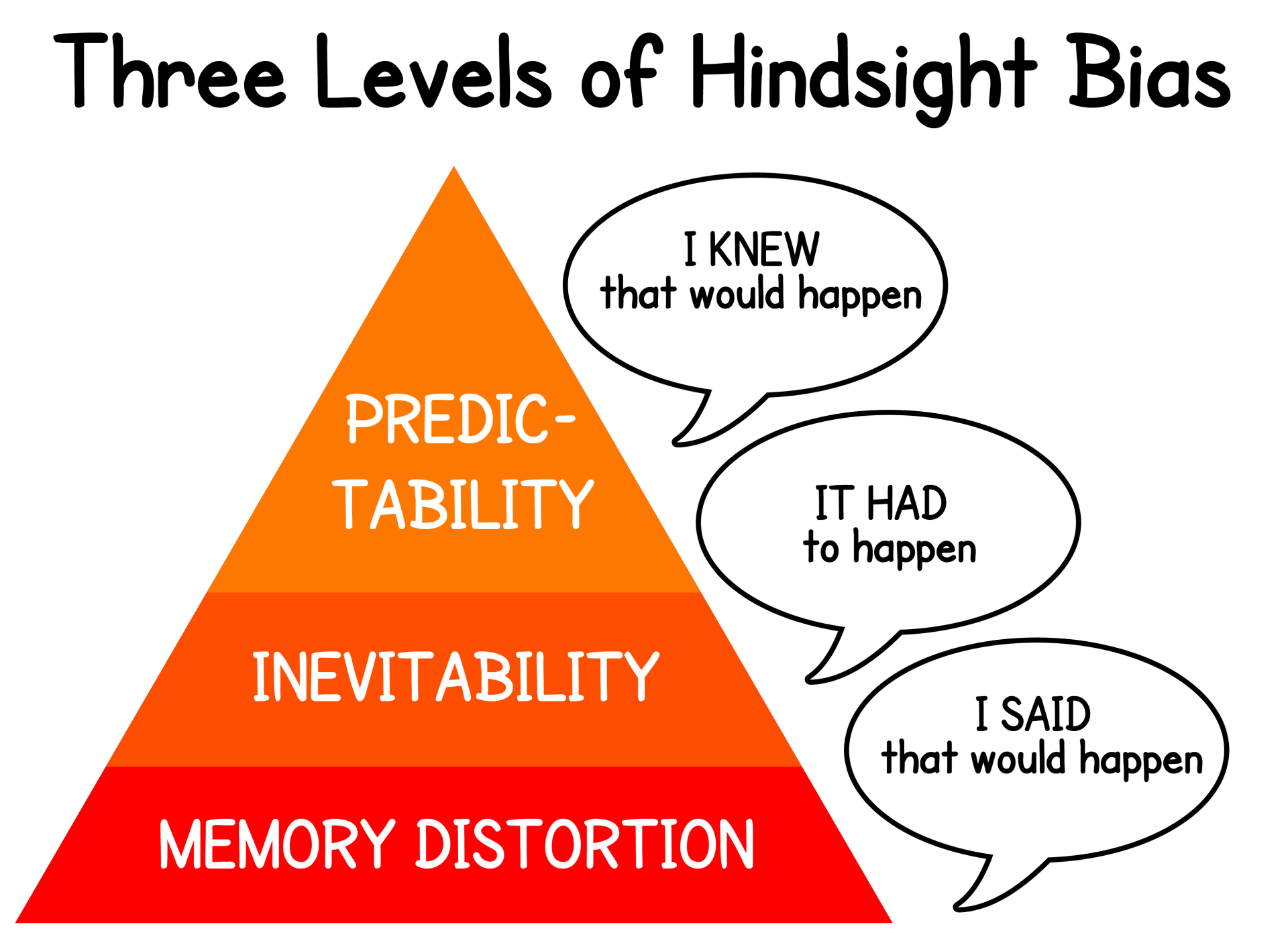

Hindsight bias is the inclination to see some events as more predictable than they are; it is also known as the “I knew it all along” reaction. Examples of this bias would be believing, after the fact, that you knew who was going to win an election, a football or baseball game, or even a coin toss.

Misinformation effect is when your memories of an event become affected or influenced by information you received after the event occurred. Researchers have shown that memory can be inaccurate because it is vulnerable to revision when you receive new information.

Actor-observer bias is when you attribute your actions to external influences and other people's actions to internal ones. You might think you missed a business opportunity because your car broke down, but your colleague failed to get a promotion because of incompetence.

False consensus effect is when you assume more people agree with your opinions and share your values than actually do. This happens because you tend to spend most of your time with others, such as family and friends, who actually do share beliefs similar to yours.

Availability bias occurs when you believe the information you possess is more important than it actually is. This happens when you watch or listen to media news sources that tend to run dramatic stories without sharing any balancing statistics on how rare such events may be. For example, if you see several stories on fiery plane crashes, you might start to fear flying because you assume they occur with greater frequency than they actually do.

Bandwagon effect, also known as herd mentality or groupthink, is the propensity to accept beliefs or values because many other people hold them as well. This is a conformity bias that occurs because most people desire acceptance, connection, and belonging with others, and fear rejection if they hold opposing beliefs. Most people will not think through an opinion and will assume it is correct because so many others agree with it.

Authority bias is when you accept the opinion of an authority figure because you believe they know more than you. You might assume that they have already thought through an issue and made the right conclusion. And, because they are an authority in their field, you grant more credibility to their viewpoint than you would for anyone else. This is especially true in medicine where experts are frequently seen as infallible. An example would be an advertiser showing a doctor, wearing a lab coat, touting their product.

Negativity bias is when you pay more attention to bad news than good. This is a natural bias that dates back to humanity’s prehistoric days when noticing threats, risks, and other lethal dangers could save your life. In today’s civilized world, this bias is not as necessary (see my post: Fear: Lifesaver or Manipulator).

Illusion of control is the belief that you have more control over a situation than you actually do. An example of this is when a gambler believes he or she can influence a game of chance.

Understand More and Communicate Better

Learning these biases, and being on the alert for them when you make a decision to accept a belief or opinion, will help you become more effective at critical thinking.

Robert Wilson is a writer and humorist based in Atlanta, Georgia.

2.2: Overcoming Cognitive Biases and Engaging in Critical Reflection

- Nathan Smith et al.

Learning Objectives

By the end of this section, you will be able to:

- Label the conditions that make critical thinking possible.

- Classify and describe cognitive biases.

- Apply critical reflection strategies to resist cognitive biases.

To resist the potential pitfalls of cognitive biases, we have taken some time to recognize why we fall prey to them. Now we need to understand how to resist easy, automatic, and error-prone thinking in favor of more reflective, critical thinking.

Critical Reflection and Metacognition

To promote good critical thinking, put yourself in a frame of mind that allows critical reflection. Recall from the previous section that rational thinking requires effort and takes longer. However, it will likely result in more accurate thinking and decision-making. As a result, reflective thought can be a valuable tool in correcting cognitive biases. The critical aspect of critical reflection involves a willingness to be skeptical of your own beliefs, your gut reactions, and your intuitions. Additionally, the critical aspect engages in a more analytic approach to the problem or situation you are considering. You should assess the facts, consider the evidence, try to employ logic, and resist the quick, immediate, and likely conclusion you want to draw. By reflecting critically on your own thinking, you can become aware of the natural tendency for your mind to slide into mental shortcuts.

This process of critical reflection is often called metacognition in the literature of pedagogy and psychology. Metacognition means thinking about thinking and involves the kind of self-awareness that engages higher-order thinking skills. Cognition, or the way we typically engage with the world around us, is first-order thinking, while metacognition is higher-order thinking. From a metacognitive frame, we can critically assess our thought process, become skeptical of our gut reactions and intuitions, and reconsider our cognitive tendencies and biases.

To improve metacognition and critical reflection, we need to encourage the kind of self-aware, conscious, and effortful attention that may feel unnatural and may be tiring. Typical activities associated with metacognition include checking, planning, selecting, inferring, self-interrogating, interpreting an ongoing experience, and making judgments about what one does and does not know (Hacker, Dunlosky, and Graesser 1998). By practicing metacognitive behaviors, you are preparing yourself to engage in the kind of rational, abstract thought that will be required for philosophy.

Good study habits, including managing your workspace, giving yourself plenty of time, and working through a checklist, can promote metacognition. When you feel stressed out or pressed for time, you are more likely to make quick decisions that lead to error. Stress and lack of time also discourage critical reflection because they rob your brain of the resources necessary to engage in rational, attention-filled thought. By contrast, when you relax and give yourself time to think through problems, you will be clearer, more thoughtful, and less likely to rush to the first conclusion that leaps to mind. Similarly, background noise, distracting activity, and interruptions will prevent you from paying attention. You can use this checklist to try to encourage metacognition when you study:

- Check your work.

- Plan ahead.

- Select the most useful material.

- Infer from your past grades to focus on what you need to study.

- Ask yourself how well you understand the concepts.

- Check your weaknesses.

- Assess whether you are following the arguments and claims you are working on.

Cognitive Biases

In this section, we will examine some of the most common cognitive biases so that you can be aware of traps in thought that can lead you astray. Cognitive biases are closely related to informal fallacies. Both fallacies and biases provide examples of the ways we make errors in reasoning.

CONNECTIONS

See the chapter on logic and reasoning for an in-depth exploration of informal fallacies.

Watch the video to orient yourself before reading the text that follows.

Cognitive Biases 101, with Peter Bauman

Confirmation Bias

One of the most common cognitive biases is confirmation bias, which is the tendency to search for, interpret, favor, and recall information that confirms or supports your prior beliefs. Like all cognitive biases, confirmation bias serves an important function. For instance, one of the most reliable forms of confirmation bias is the belief in our shared reality. Suppose it is raining. When you first hear the patter of raindrops on your roof or window, you may think it is raining. You then look for additional signs to confirm your conclusion, and when you look out the window, you see rain falling and puddles of water accumulating. Most likely, you will not be looking for irrelevant or contradictory information. You will be looking for information that confirms your belief that it is raining. Thus, you can see how confirmation bias, based on the idea that the world does not change dramatically over time, is an important tool for navigating our environment.

Unfortunately, as with most heuristics, we tend to apply this sort of thinking inappropriately. One example that has recently received a lot of attention is the way in which confirmation bias has increased political polarization. When searching for information on the internet about an event or topic, most people look for information that confirms their prior beliefs rather than what undercuts them. The pervasive presence of social media in our lives is exacerbating the effects of confirmation bias since the computer algorithms used by social media platforms steer people toward content that reinforces their current beliefs and predispositions. These multimedia tools are especially problematic when our beliefs are incorrect (for example, they contradict scientific knowledge) or antisocial (for example, they support violent or illegal behavior). Thus, social media and the internet have created a situation in which confirmation bias can be “turbocharged” in ways that are destructive for society.

Confirmation bias is a result of the brain’s limited ability to process information. Peter Wason (1960) conducted early experiments identifying this kind of bias. He asked subjects to identify the rule that applies to a sequence of numbers—for instance, 2, 4, 8. Subjects were told to generate examples to test their hypothesis. What he found is that once a subject settled on a particular hypothesis, they were much more likely to select examples that confirmed their hypothesis rather than negated it. As a result, they were unable to identify the real rule (any ascending sequence of numbers) and failed to “falsify” their initial assumptions. Falsification is an important tool in the scientist’s toolkit when they are testing hypotheses and is an effective way to avoid confirmation bias.
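The logic of Wason's task can be sketched in a few lines of Python. This is only an illustration, not a reconstruction of the experiment: the "doubling" guess and the specific test sequences are assumptions chosen for the example. The point is that a test tells you something only when your guess and the real rule disagree about it.

```python
# Hypothetical illustration of the rule-discovery task described above.

def true_rule(seq):
    """The hidden rule from the text: any ascending sequence of numbers."""
    return all(a < b for a, b in zip(seq, seq[1:]))

def doubling_hypothesis(seq):
    """An assumed subject guess: each number is double the previous one."""
    return all(b == 2 * a for a, b in zip(seq, seq[1:]))

def informative(seq):
    """A test can expose a wrong guess only if guess and rule disagree on it."""
    return true_rule(seq) != doubling_hypothesis(seq)

# Tests chosen to confirm the guess (they all fit "doubling").
confirming_tests = [(2, 4, 8), (3, 6, 12), (5, 10, 20)]
# Tests chosen to try to falsify the guess (ascending, but not doubling).
falsifying_tests = [(1, 2, 3), (2, 4, 7), (10, 20, 30)]

confirming_informative = [informative(t) for t in confirming_tests]
falsifying_informative = [informative(t) for t in falsifying_tests]

print(confirming_informative)  # every confirming test is uninformative
print(falsifying_informative)  # every falsifying test exposes the wrong guess
```

Every confirming test gets a "yes" from the experimenter, so the subject feels more certain without learning anything. Only the sequences that break the guessed rule reveal that the real rule is broader.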

In philosophy, you will be presented with different arguments on issues, such as the nature of the mind or the best way to act in a given situation. You should take your time to reason through these issues carefully and consider alternative views. What you believe to be the case may be right, but you may also fall into the trap of confirmation bias, seeing confirming evidence as better and more convincing than evidence that calls your beliefs into question.

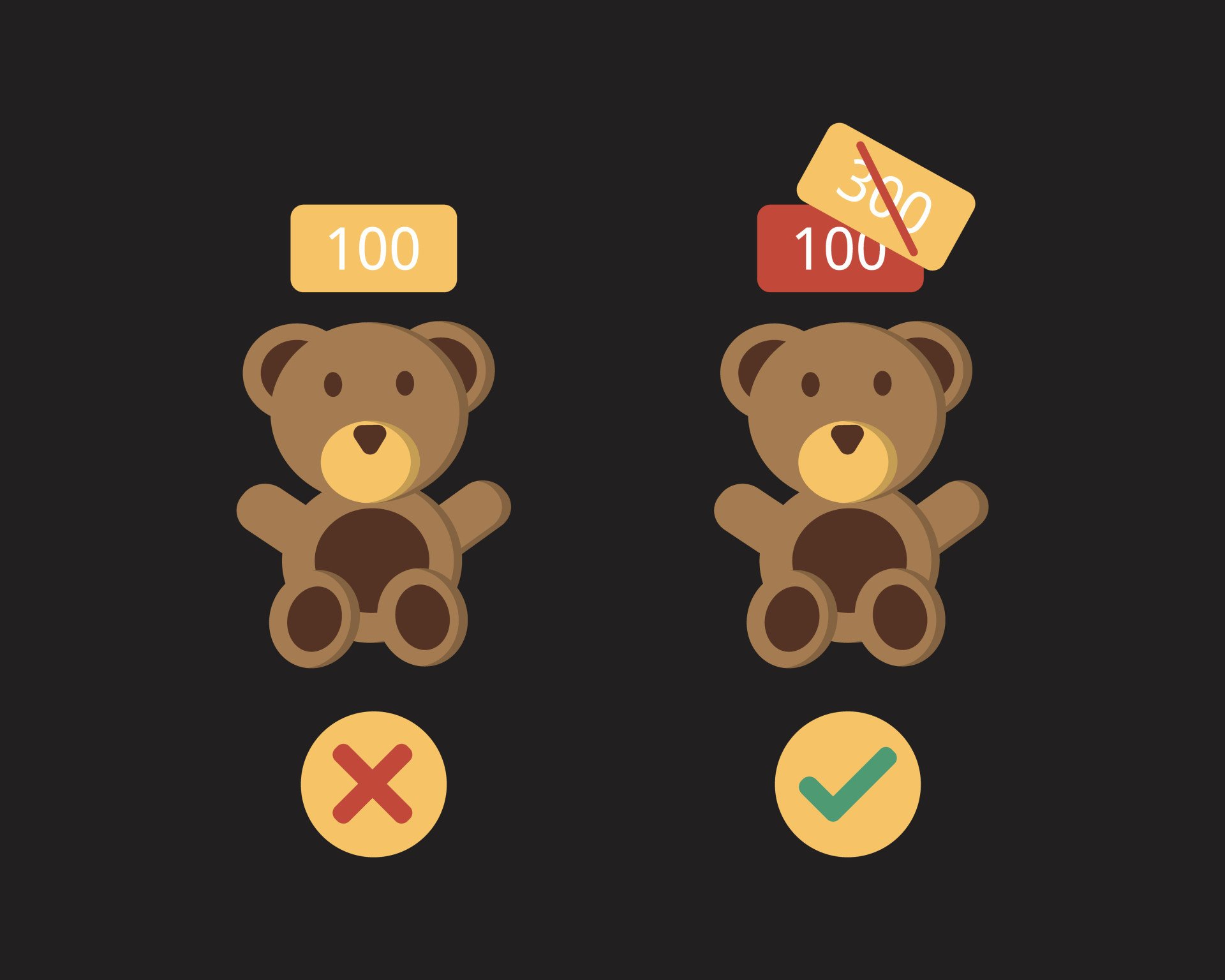

Anchoring Bias

Confirmation bias is closely related to another bias known as anchoring. Anchoring bias refers to our tendency to rely on initial values, prices, or quantities when estimating the actual value, price, or quantity of something. If you are presented with a quantity, even if that number is clearly arbitrary, you will have a hard time discounting it in your subsequent calculations; the initial value “anchors” subsequent estimates. For instance, Tversky and Kahneman (1974) reported an experiment in which subjects were asked to estimate the number of African nations in the United Nations. First, the experimenters spun a wheel of fortune in front of the subjects that produced a random number between 0 and 100. Let’s say the wheel landed on 79. Subjects were asked whether the number of nations was higher or lower than the random number. Subjects were then asked to estimate the real number of nations. Even though the initial anchoring value was random, people in the study found it difficult to deviate far from that number. For subjects receiving an initial value of 10, the median estimate of nations was 25, while for subjects receiving an initial value of 65, the median estimate was 45.

In the same paper, Tversky and Kahneman described the way that anchoring bias interferes with statistical reasoning. In a number of scenarios, subjects made irrational judgments about statistics because of the way the question was phrased (i.e., they were tricked when an anchor was inserted into the question). Instead of expending the cognitive energy needed to solve the statistical problem, subjects were much more likely to “go with their gut,” or think intuitively. That type of reasoning generates anchoring bias. When you do philosophy, you will be confronted with some formal and abstract problems that will challenge you to engage in thinking that feels difficult and unnatural. Resist the urge to latch on to the first thought that jumps into your head, and try to think the problem through with all the cognitive resources at your disposal.

Availability Heuristic

The availability heuristic refers to the tendency to evaluate new information based on the most recent or most easily recalled examples. The availability heuristic occurs when people take easily remembered instances as being more representative than they objectively are (i.e., based on statistical probabilities). In very simple situations, the availability of instances is a good guide to judgments. Suppose you are wondering whether you should plan for rain. It may make sense to anticipate rain if it has been raining a lot in the last few days since weather patterns tend to linger in most climates. More generally, scenarios that are well-known to us, dramatic, recent, or easy to imagine are more available for retrieval from memory. Therefore, if we easily remember an instance or scenario, we may incorrectly think that the chances are high that the scenario will be repeated. For instance, people in the United States estimate the probability of dying by violent crime or terrorism much more highly than they ought to. In fact, these are extremely rare occurrences compared to death by heart disease, cancer, or car accidents. But stories of violent crime and terrorism are prominent in the news media and fiction. Because these vivid stories are dramatic and easily recalled, we have a skewed view of how frequently violent crime occurs.

Tribalism

Another more loosely defined category of cognitive bias is the tendency for human beings to align themselves with groups with whom they share values and practices. The tendency toward tribalism is an evolutionary advantage for social creatures like human beings. By forming groups to share knowledge and distribute work, we are much more likely to survive. Not surprisingly, human beings with pro-social behaviors persist in the population at higher rates than human beings with antisocial tendencies. Pro-social behaviors, however, go beyond wanting to communicate and align ourselves with other human beings; we also tend to see outsiders as a threat. As a result, tribalistic tendencies both reinforce allegiances among in-group members and increase animosity toward out-group members.

Tribal thinking makes it hard for us to objectively evaluate information that either aligns with or contradicts the beliefs held by our group or tribe. This effect can be demonstrated even when in-group membership is not real or is based on some superficial feature of the person, for instance, the way they look or an article of clothing they are wearing. A related bias is called the bandwagon fallacy. The bandwagon fallacy can lead you to conclude that you ought to do something or believe something because many other people do or believe the same thing. While other people can provide guidance, they are not always reliable. Furthermore, just because many people believe something doesn’t make it true. Watch the video below to improve your “tribal literacy” and understand the dangers of this type of thinking.

The Dangers of Tribalism, Kevin deLaplante

Sunk Cost Fallacy

Sunk costs refer to the time, energy, money, or other costs that have been paid in the past. These costs are “sunk” because they cannot be recovered. The sunk cost fallacy is thinking that attaches a value to things in which you have already invested resources that is greater than the value those things have today. Human beings have a natural tendency to hang on to whatever they invest in and are loath to give something up even after it has been proven to be a liability. For example, a person may have sunk a lot of money into a business over time, and the business may clearly be failing. Nonetheless, the businessperson will be reluctant to close shop or sell the business because of the time, money, and emotional energy they have spent on the venture. This is the behavior of “throwing good money after bad” by continuing to irrationally invest in something that has lost its worth because of emotional attachment to the failed enterprise. People will engage in this kind of behavior in all kinds of situations and may continue a friendship, a job, or a marriage for the same reason—they don’t want to lose their investment even when they are clearly headed for failure and ought to cut their losses.
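The failing-business example can be put into a rough sketch. The figures below are invented purely for illustration, and both decision functions are hypothetical models rather than a formal economic method. The rational version compares only what lies ahead; the fallacious version lets money already spent count as a reason to continue.

```python
# Hypothetical figures for the failing business in the example above.
SUNK_COST = 200_000             # already spent, cannot be recovered
EXPECTED_FUTURE_COST = 50_000   # what continuing is expected to cost
EXPECTED_FUTURE_GAIN = 30_000   # what continuing is expected to return

def rational_continue(future_gain, future_cost, sunk_cost):
    """Forward-looking choice: sunk_cost is accepted but deliberately ignored."""
    return future_gain > future_cost

def fallacious_continue(future_gain, future_cost, sunk_cost):
    """Assumed model of the fallacy: past spending is treated as present value."""
    return future_gain + sunk_cost > future_cost

rational_choice = rational_continue(
    EXPECTED_FUTURE_GAIN, EXPECTED_FUTURE_COST, SUNK_COST)
fallacious_choice = fallacious_continue(
    EXPECTED_FUTURE_GAIN, EXPECTED_FUTURE_COST, SUNK_COST)

print("Rational: keep going?", rational_choice)
print("Sunk-cost thinking: keep going?", fallacious_choice)
```

Changing `SUNK_COST` to any other amount leaves the rational answer unchanged, which is exactly what it means for a cost to be sunk.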

A similar type of faulty reasoning leads to the gambler’s fallacy, in which a person reasons that future chance events will be more likely if they have not happened recently. For instance, if I flip a coin many times in a row, I may get a string of heads. But even if I flip several heads in a row, that does not make it more likely I will flip tails on the next coin flip. Each coin flip is statistically independent, and there is an equal chance of turning up heads or tails. The gambler, like the reasoner from sunk costs, is tied to the past when they should be reasoning about the present and future.
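The independence of coin flips can be checked directly rather than taken on faith. The sketch below assumes a fair coin and lists every equally likely sequence of six flips; the function name and the choice of six flips are arbitrary. It then asks how often tails follows an opening streak of heads.

```python
from fractions import Fraction
from itertools import product

def prob_tails_after_heads_streak(streak, total_flips):
    """Exact chance of tails right after `streak` opening heads (fair coin).

    Enumerates all equally likely sequences of `total_flips` flips,
    keeps those that begin with `streak` heads, and counts how many
    show tails on the very next flip. Requires total_flips > streak.
    """
    outcomes = [
        seq for seq in product("HT", repeat=total_flips)
        if all(flip == "H" for flip in seq[:streak])
    ]
    tails_next = sum(1 for seq in outcomes if seq[streak] == "T")
    return Fraction(tails_next, len(outcomes))

after_one_head = prob_tails_after_heads_streak(1, 6)
after_five_heads = prob_tails_after_heads_streak(5, 6)

print(after_one_head, after_five_heads)
```

Both values come out to one half: a run of five heads makes tails no more "due" than a single head does.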

There are important social and evolutionary purposes for past-looking thinking. Sunk-cost thinking keeps parents engaged in the growth and development of their children after they are born. Sunk-cost thinking builds loyalty and affection among friends and family. More generally, a commitment to sunk costs encourages us to engage in long-term projects, and this type of thinking has the evolutionary purpose of fostering culture and community. Nevertheless, it is important to periodically reevaluate our investments in both people and things.

In recent ethical scholarship, there is some debate about how to assess the sunk costs of moral decisions. Consider the case of war. Just-war theory dictates that wars may be justified in cases where the harm imposed on the adversary is proportional to the good gained by the act of defense or deterrence. It may be that, at the start of the war, those costs seemed proportional. But after the war has dragged on for some time, it may seem that the objective cannot be obtained without a greater quantity of harm than had been initially imagined. Should the evaluation of whether a war is justified estimate the total amount of harm done or prospective harm that will be done going forward (Lazar 2018)? Such questions do not have easy answers.

Table 2.1 summarizes these common cognitive biases.

Table 2.1 Common Cognitive Biases

Think Like A Philosopher

As we have seen, cognitive biases are built into the way human beings process information. They are common to us all, and it takes self-awareness and effort to overcome the tendency to fall back on biases. Consider a time when you have fallen prey to one of the five cognitive biases described above. What were the circumstances? Recall your thought process. Were you aware at the time that your thinking was misguided? What were the consequences of succumbing to that cognitive bias?

Write a short paragraph describing how that cognitive bias allowed you to make a decision you now realize was irrational. Then write a second paragraph describing how, with the benefit of time and distance, you would have thought differently about the incident that triggered the bias. Use the tools of critical reflection and metacognition to improve your approach to this situation. What might have been the consequences of behaving differently? Finally, write a short conclusion describing what lesson you take from reflecting back on this experience. Does it help you understand yourself better? Will you be able to act differently in the future? What steps can you take to avoid cognitive biases in your thinking today?

Critical Thinking

Developing the right mindset and skills.

By the Mind Tools Content Team

We make hundreds of decisions every day and, whether we realize it or not, we're all critical thinkers.

We use critical thinking each time we weigh up our options, prioritize our responsibilities, or think about the likely effects of our actions. It's a crucial skill that helps us to cut out misinformation and make wise decisions. The trouble is, we're not always very good at it!

In this article, we'll explore the key skills that you need to think critically, and how to adopt a critical thinking mindset, so that you can make well-informed decisions.

What Is Critical Thinking?

Critical thinking is the discipline of rigorously and skillfully using information, experience, observation, and reasoning to guide your decisions, actions, and beliefs. You'll need to actively question every step of your thinking process to do it well.

Collecting, analyzing and evaluating information is an important skill in life, and a highly valued asset in the workplace. People who score highly in critical thinking assessments are also rated by their managers as having good problem-solving skills, creativity, strong decision-making skills, and good overall performance. [1]

Key Critical Thinking Skills

Critical thinkers possess a set of key characteristics which help them to question information and their own thinking. Focus on the following areas to develop your critical thinking skills:

Being willing and able to explore alternative approaches and experimental ideas is crucial. Can you think through "what if" scenarios, create plausible options, and test out your theories? If not, you'll tend to write off ideas and options too soon, so you may miss the best answer to your situation.

To nurture your curiosity, stay up to date with facts and trends. You'll overlook important information if you allow yourself to become "blinkered," so always be open to new information.

But don't stop there! Look for opposing views or evidence to challenge your information, and seek clarification when things are unclear. This will help you to reassess your beliefs and make a well-informed decision later. Read our article, Opening Closed Minds, for more ways to stay receptive.

Logical Thinking

You must be skilled at reasoning and extending logic to come up with plausible options or outcomes.

It's also important to emphasize logic over emotion. Emotion can be motivating but it can also lead you to take hasty and unwise action, so control your emotions and be cautious in your judgments. Know when a conclusion is "fact" and when it is not. "Could-be-true" conclusions are based on assumptions and must be tested further. Read our article, Logical Fallacies, for help with this.

Use creative problem solving to balance cold logic. By thinking outside of the box you can identify new possible outcomes by using pieces of information that you already have.

Self-Awareness

Many of the decisions we make in life are subtly informed by our values and beliefs. These influences are called cognitive biases and it can be difficult to identify them in ourselves because they're often subconscious.

Practicing self-awareness will allow you to reflect on the beliefs you have and the choices you make. You'll then be better equipped to challenge your own thinking and make improved, unbiased decisions.

One particularly useful tool for critical thinking is the Ladder of Inference. It allows you to test and validate your thinking process, rather than jumping to poorly supported conclusions.

Developing a Critical Thinking Mindset

Combine the above skills with the right mindset so that you can make better decisions and adopt more effective courses of action. You can develop your critical thinking mindset by following this process:

Gather Information

First, collect data, opinions and facts on the issue that you need to solve. Draw on what you already know, and turn to new sources of information to help inform your understanding. Consider what gaps there are in your knowledge and seek to fill them. And look for information that challenges your assumptions and beliefs.

Be sure to verify the authority and authenticity of your sources. Not everything you read is true! Use this checklist to ensure that your information is valid:

- Are your information sources trustworthy? (For example, well-respected authors, trusted colleagues or peers, recognized industry publications, websites, blogs, etc.)

- Is the information you have gathered up to date?

- Has the information received any direct criticism?

- Does the information have any errors or inaccuracies?

- Is there any evidence to support or corroborate the information you have gathered?

- Is the information you have gathered subjective or biased in any way? (For example, is it based on opinion, rather than fact? Is any of the information you have gathered designed to promote a particular service or organization?)

If any information appears to be irrelevant or invalid, don't include it in your decision making. But don't omit information just because you disagree with it, or your final decision will be flawed and biased.

Now observe the information you have gathered, and interpret it. What are the key findings and main takeaways? What does the evidence point to? Start to build one or two possible arguments based on what you have found.

You'll need to look for the details within the mass of information, so use your powers of observation to identify any patterns or similarities. You can then analyze and extend these trends to make sensible predictions about the future.

To help you to sift through the multiple ideas and theories, it can be useful to group and order items according to their characteristics. From here, you can compare and contrast the different items. And once you've determined how similar or different things are from one another, Paired Comparison Analysis can help you to analyze them.

The final step involves challenging the information and rationalizing its arguments.

Apply the laws of reason (induction, deduction, analogy) to judge an argument and determine its merits. To do this, it's essential that you can determine the significance and validity of an argument to put it in the correct perspective. Take a look at our article, Rational Thinking, for more information about how to do this.

Once you have considered all of the arguments and options rationally, you can finally make an informed decision.

Afterward, take time to reflect on what you have learned and what you found challenging. Step back from the detail of your decision or problem, and look at the bigger picture. Record what you've learned from your observations and experience.

Critical thinking involves rigorously and skillfully using information, experience, observation, and reasoning to guide your decisions, actions, and beliefs. It's a useful skill in the workplace and in life.

You'll need to be curious and creative to explore alternative possibilities, but rational to apply logic, and self-aware to identify when your beliefs could affect your decisions or actions.

You can demonstrate a high level of critical thinking by validating your information, analyzing its meaning, and finally evaluating the argument.

Critical Thinking Infographic

See Critical Thinking represented in our infographic: An Elementary Guide to Critical Thinking.


Cognitive Bias: How We Are Wired to Misjudge

Charlotte Ruhl

Research Assistant & Psychology Graduate

BA (Hons) Psychology, Harvard University

Charlotte Ruhl, a psychology graduate from Harvard College, boasts over six years of research experience in clinical and social psychology. During her tenure at Harvard, she contributed to the Decision Science Lab, administering numerous studies in behavioral economics and social psychology.


Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Have you ever been so busy talking on the phone that you don’t notice the light has turned green and it is your turn to cross the street?

Have you ever shouted, “I knew that was going to happen!” after your favorite baseball team gave up a huge lead in the ninth inning and lost?

Or have you ever found yourself only reading news stories that further support your opinion?

These are just a few of the many instances of cognitive bias that we experience every day of our lives. But before we dive into these different biases, let’s backtrack first and define what bias is.

What is Cognitive Bias?

Cognitive bias is a systematic error in thinking, affecting how we process information, perceive others, and make decisions. It can lead to irrational thoughts or judgments and is often based on our perceptions, memories, or individual and societal beliefs.

Biases are unconscious and automatic processes designed to make decision-making quicker and more efficient. Cognitive biases can be caused by many things, such as heuristics (mental shortcuts), social pressures, and emotions.

Broadly speaking, bias is a tendency to lean in favor of or against a person, group, idea, or thing, usually in an unfair way. Biases are natural — they are a product of human nature — and they don’t simply exist in a vacuum or in our minds — they affect the way we make decisions and act.

In psychology, there are two main branches of biases: conscious and unconscious. Conscious or explicit bias is intentional — you are aware of your attitudes and the behaviors resulting from them (Lang, 2019).

Explicit bias can be good because it helps provide you with a sense of identity and can lead you to make good decisions (for example, being biased towards healthy foods).

However, these biases can often be dangerous when they take the form of conscious stereotyping.

On the other hand, unconscious bias, or cognitive bias, represents a set of unintentional biases: you are unaware of your attitudes and the behaviors resulting from them (Lang, 2019).

Cognitive bias is often a result of your brain’s attempt to simplify information processing: we receive roughly 11 million bits of information per second, but we can only process about 40 bits per second (Orzan et al., 2012).

Therefore, we often rely on mental shortcuts (called heuristics) to help make sense of the world with relative speed. As such, these errors tend to arise from problems related to thinking: memory, attention, and other mental mistakes.

Cognitive biases can be beneficial because they do not require much mental effort and can allow you to make decisions relatively quickly, but like conscious biases, unconscious biases can also take the form of harmful prejudice that serves to hurt an individual or a group.

Although it may feel like there has been a recent rise of unconscious bias, especially in the context of police brutality and the Black Lives Matter movement, this is not a new phenomenon.

Thanks to Tversky and Kahneman (and several other psychologists who have paved the way), we now have an existing dictionary of our cognitive biases.

Again, these biases occur as an attempt to simplify the complex world and make information processing faster and easier. This section will dive into some of the most common forms of cognitive bias.

Confirmation Bias

Confirmation bias is the tendency to interpret new information as confirmation of your preexisting beliefs and opinions while giving disproportionately less consideration to alternative possibilities.

Real-World Examples

Since Wason’s 1960 experiment, real-world examples of confirmation bias have gained attention.

This bias often seeps into the research world when psychologists selectively interpret data or ignore unfavorable data to produce results that support their initial hypothesis.

Confirmation bias is also incredibly pervasive on the internet, particularly with social media. We tend to read online news articles that support our beliefs and fail to seek out sources that challenge them.

Various social media platforms, such as Facebook, help reinforce our confirmation bias by feeding us stories that we are likely to agree with – further pushing us down these echo chambers of political polarization.

Some examples of confirmation bias are especially harmful, specifically in the context of the law. For example, a detective may identify a suspect early in an investigation, seek out confirming evidence, and downplay falsifying evidence.

Experiments

Research on confirmation bias dates back to 1960, when Peter Wason challenged participants to identify a rule applying to triples of numbers.

People were first told that the sequence 2, 4, 6 fits the rule. They then had to generate triples of their own and were told whether each one fit the rule. The rule was simple: any ascending sequence.

But not only did participants have an unusually difficult time realizing this and instead devised overly-complicated hypotheses, they also only generated triples that confirmed their preexisting hypothesis (Wason, 1960).
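The logic of the task can be sketched in a few lines of Python. This is only an illustration, not a reconstruction of Wason's procedure: the "increasing by 2" hypothesis and the test triples are assumptions chosen for the example, and the function names are hypothetical. It shows why triples that confirm a narrow hypothesis reveal nothing, while triples designed to break it can expose the real rule.

```python
def true_rule(triple):
    """The rule from the experiment: any ascending sequence."""
    a, b, c = triple
    return a < b < c


def guessed_rule(triple):
    """An assumed, overly specific hypothesis: each number increases by 2."""
    a, b, c = triple
    return b - a == 2 and c - b == 2


def informative(triple):
    """A test teaches us something only when the two rules disagree about it."""
    return true_rule(triple) != guessed_rule(triple)


# Illustrative triples picked because they FIT the guessed rule.
confirming_tests = [(8, 10, 12), (1, 3, 5), (20, 22, 24)]
# Illustrative triples picked because they BREAK the guessed rule.
disconfirming_tests = [(1, 2, 3), (5, 10, 100), (6, 4, 2)]

confirming_informative = [t for t in confirming_tests if informative(t)]
disconfirming_informative = [t for t in disconfirming_tests if informative(t)]

print("Informative confirming tests:", confirming_informative)
print("Informative disconfirming tests:", disconfirming_informative)
```

Every confirming triple gets a "yes" under both rules, so it cannot tell the narrow guess apart from the true rule. Only a triple that the guess forbids but the true rule allows, such as 1, 2, 3, reveals that the guess is too narrow.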

Explanations

But why does confirmation bias occur? It’s partially due to the effect of desire on our beliefs. In other words, certain desired conclusions (ones that support our beliefs) are more likely to be processed by the brain and labeled as true (Nickerson, 1998).

This motivational explanation is often coupled with a more cognitive theory.

The cognitive explanation argues that because our minds can only focus on one thing at a time, it is hard to parallel process (see information processing for more information) alternate hypotheses, so, as a result, we only process the information that aligns with our beliefs (Nickerson, 1998).

Another theory explains confirmation bias as a way of enhancing and protecting our self-esteem.

As with the self-serving bias (see more below), our minds choose to reinforce our preexisting ideas because being right helps preserve our sense of self-esteem, which is important for feeling secure in the world and maintaining positive relationships (Casad, 2019).

Although confirmation bias has obvious consequences, you can still work towards overcoming it by being open-minded and willing to look at situations from a different perspective than you might be used to (Luippold et al., 2015).

Even though this bias is unconscious, training your mind to become more flexible in its thought patterns will help mitigate the effects of this bias.

Hindsight Bias

Hindsight bias refers to the tendency to perceive past events as more predictable than they actually were (Roese & Vohs, 2012). There are cognitive and motivational explanations for why we ascribe so much certainty to knowing the outcome of an event only once the event is completed.

When sports fans know the outcome of a game, they often question certain decisions coaches make that they otherwise would not have questioned or second-guessed.

And fans are also quick to remark that they knew their team was going to win or lose, but, of course, they only make this statement after their team actually did win or lose.

Research studies have demonstrated that the hindsight bias isn’t necessarily mitigated by pure recognition of the bias (Pohl & Hell, 1996). Still, you can make a conscious effort to remind yourself that you can’t predict the future and motivate yourself to consider alternate explanations.

It’s important to do all we can to reduce this bias because when we are overly confident about our ability to predict outcomes, we might make future risky decisions that could have potentially dangerous outcomes.

Building on Tversky and Kahneman’s growing list of heuristics, researchers Baruch Fischhoff and Ruth Beyth-Marom (1975) were the first to directly investigate the hindsight bias in the empirical setting.

The team asked participants to judge the likelihood of several different outcomes of former U.S. president Richard Nixon’s visit to Beijing and Moscow.

After Nixon returned to the States, participants were asked to recall the likelihood of each outcome they had initially assigned.

Fischhoff and Beyth found that for events that actually occurred, participants greatly overestimated the initial likelihood they assigned to those events.

That same year, Fischhoff (1975) introduced a new method for testing the hindsight bias – one that researchers still use today.

Participants are given a short story with four possible outcomes, and they are told that one is true. When they are then asked to assign the likelihood of each specific outcome, they regularly assign a higher likelihood to whichever outcome they have been told is true, regardless of how likely it actually is.

But hindsight bias does not only exist in artificial settings. In 1993, Dorothee Dietrich and Matthew Olsen asked college students to predict how the U.S. Senate would vote on the confirmation of Supreme Court nominee Clarence Thomas.

Before the vote, 58% of participants predicted that he would be confirmed, but after his actual confirmation, 78% of students said that they thought he would be approved – a prime example of the hindsight bias. And this form of bias extends beyond the research world.

From the cognitive perspective, hindsight bias may result from distortions of memories of what we knew or believed to know before an event occurred (Inman, 2016).

It is easier to recall information that is consistent with our current knowledge, so our memories become warped in a way that agrees with what actually did happen.

Motivational explanations of the hindsight bias point to the fact that we are motivated to live in a predictable world (Inman, 2016).

When surprising outcomes arise, our expectations are violated, and we may experience negative reactions as a result. Thus, we rely on the hindsight bias to avoid these adverse responses to certain unanticipated events and reassure ourselves that we actually did know what was going to happen.

Self-Serving Bias

Self-serving bias is the tendency to take personal responsibility for positive outcomes and blame external factors for negative outcomes.

You would be right to ask how this differs from the fundamental attribution error (Ross, 1977), which identifies our tendency to overemphasize internal factors for other people’s behavior while attributing our own behavior to external factors.

The distinction is that the self-serving bias is concerned with valence, that is, how good or bad an event or situation is. It is also only concerned with events for which you are the actor.

In other words, if a driver cuts in front of you as the light turns green, the fundamental attribution error might cause you to think that they are a bad person and not consider the possibility that they were late for work.

On the other hand, the self-serving bias is exercised when you are the actor. In this example, you would be the driver cutting in front of the other car, which you would tell yourself is because you are late (an external attribution to a negative event) as opposed to it being because you are a bad person.

From sports to the workplace, self-serving bias is incredibly common. For example, athletes are quick to take responsibility for personal wins, attributing their successes to their hard work and mental toughness, but point to external factors, such as unfair calls or bad weather, when they lose (Allen et al., 2020).

In the workplace, people attribute internal factors when they are hired for a job but external factors when they are fired (Furnham, 1982). And in the office itself, workplace conflicts are given external attributions, and successes, whether a persuasive presentation or a promotion, are awarded internal explanations (Walther & Bazarova, 2007).

Additionally, self-serving bias is more prevalent in individualistic cultures, which place emphasis on self-esteem levels and individual goals, and it is less prevalent among individuals with depression (Mezulis et al., 2004), who are more likely to take responsibility for negative outcomes.

Overcoming this bias can be difficult because it is at the expense of our self-esteem. Nevertheless, practicing self-compassion – treating yourself with kindness even when you fall short or fail – can help reduce the self-serving bias (Neff, 2003).

The leading explanation for the self-serving bias is that it is a way of protecting our self-esteem (similar to one of the explanations for the confirmation bias).

We are quick to take credit for positive outcomes and divert the blame for negative ones to boost and preserve our individual ego, which is necessary for confidence and healthy relationships with others (Heider, 1982).

Another theory argues that self-serving bias occurs when surprising events arise. When certain outcomes run counter to our expectations, we ascribe external factors, but when outcomes are in line with our expectations, we attribute internal factors (Miller & Ross, 1975).

An extension of this theory asserts that we are naturally optimistic, so negative outcomes come as a surprise and receive external attributions as a result.

Anchoring Bias

Anchoring bias is closely related to the decision-making process. It occurs when we rely too heavily on either pre-existing information or the first piece of information (the anchor) when making a decision.

For example, if you first see a T-shirt that costs $1,000 and then see a second one that costs $100, you’re more likely to see the second shirt as cheap than you would be if the first shirt you saw cost $120. Here, the price of the first shirt influences how you view the second.

Sarah is looking to buy a used car. The first dealership she visits has a used sedan listed for $19,000. Sarah takes this initial listing price as an anchor and uses it to evaluate prices at other dealerships.

When she sees another similar used sedan priced at $18,000, that price seems like a good bargain compared to the $19,000 anchor price she saw first, even though the actual market value is closer to $16,000.

When Sarah finds a comparable used sedan priced at $15,500, she continues perceiving that price as cheap compared to her anchored reference price.

Ultimately, Sarah purchases the $18,000 sedan, overlooking that all of the prices seemed like bargains only in relation to the initial high anchor price.

The key elements that demonstrate anchoring bias here are:

- Sarah establishes an initial reference price based on the first listing she sees ($19k)

- She uses that initial price as her comparison/anchor for evaluating subsequent prices

- This biases her perception of the market value of the cars she looks at after the initial anchor is set

- She makes a purchase decision aligned with her anchored expectations rather than a more objective market value
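The arithmetic behind Sarah's example can be made explicit with a short Python sketch. It assumes the market value is exactly $16,000 (the text only says "closer to $16,000"), and the helper name percent_diff is hypothetical. It compares each listing against the anchor and against the assumed market value.

```python
ANCHOR_PRICE = 19_000   # first listing Sarah sees
MARKET_VALUE = 16_000   # assumption: the text says only "closer to $16,000"
LISTINGS = [18_000, 15_500]


def percent_diff(price, reference):
    """Signed percentage difference of price relative to a reference price."""
    return (price - reference) / reference * 100


for price in LISTINGS:
    vs_anchor = percent_diff(price, ANCHOR_PRICE)
    vs_market = percent_diff(price, MARKET_VALUE)
    print(f"${price:,}: {vs_anchor:+.1f}% vs anchor, {vs_market:+.1f}% vs market")
```

Under these assumptions, the $18,000 sedan looks like a discount when measured against the anchor but sits above the assumed market value, which is the gap the anchoring bias hides.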

Multiple theories seek to explain the existence of this bias.

One theory, known as anchoring and adjustment, argues that once an anchor is established, people insufficiently adjust away from it to arrive at their final answer, and so their final guess or decision is closer to the anchor than it otherwise would have been (Tversky & Kahneman, 1992).

And when people experience a greater cognitive load (the amount of information the working memory can hold at any given time; for example, a difficult decision as opposed to an easy one), they are more susceptible to the effects of anchoring.

Another theory, selective accessibility, holds that although we assume that the anchor is not a suitable answer (or a suitable price going back to the initial example) when we evaluate the second stimulus (or second shirt), we look for ways in which it is similar or different to the anchor (the price being way different), resulting in the anchoring effect (Mussweiler & Strack, 1999).

A final theory posits that providing an anchor changes someone’s attitudes to be more favorable to the anchor, which then biases future answers to have similar characteristics as the initial anchor.

Although there are many different theories for why we experience anchoring bias, they all agree that it affects our decisions in real ways (Wegner et al., 2001).

The first study that brought this bias to light was during one of Tversky and Kahneman’s (1974) initial experiments. They asked participants to compute the product of numbers 1-8 in five seconds, either as 1x2x3… or 8x7x6…

Participants did not have enough time to calculate the answer, so they had to estimate based on their first few calculations.

They found that those who computed the small multiplications first (i.e., 1x2x3…) gave a median estimate of 512, but those who computed the larger multiplications first gave a median estimate of 2,250 (although the actual answer is 40,320).

This demonstrates how the first few calculations influenced participants’ final answers.
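A brief Python sketch makes the numbers concrete. The exact product and the two median estimates come from the text above; the choice to show the first three running products is an assumption made for illustration, since the study does not say how far participants got in five seconds.

```python
from math import prod

numbers = list(range(1, 9))   # 1 through 8
exact = prod(numbers)         # the true product of 1x2x...x8


def partial_products(sequence, steps):
    """Running products after each of the first `steps` multiplications."""
    running = 1
    results = []
    for n in sequence[:steps]:
        running *= n
        results.append(running)
    return results


# What a participant might have computed before time ran out (assumed: 3 steps).
ascending_start = partial_products(numbers, 3)          # 1 x 2 x 3 ...
descending_start = partial_products(numbers[::-1], 3)   # 8 x 7 x 6 ...

# Median estimates reported in the text for each ordering.
median_estimates = {"ascending": 512, "descending": 2250}

print("Exact product:", exact)
print("Early products, ascending:", ascending_start)
print("Early products, descending:", descending_start)
for order, estimate in median_estimates.items():
    print(f"{order}: estimate is {estimate / exact:.1%} of the true value")
```

The early running products are much smaller in the ascending order, which is consistent with the lower median estimate for that group. Both groups' estimates are only a small fraction of the true value.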

Availability Bias

Availability bias (also commonly referred to as the availability heuristic) refers to the tendency to think that examples of things that readily come to mind are more common than what is actually the case.

In other words, information that comes to mind faster influences the decisions we make about the future. And just like with the hindsight bias, this bias is related to an error of memory.

But instead of being a memory fabrication, it is an overemphasis on a certain memory.

In the workplace, if someone is being considered for a promotion but their boss recalls one bad thing that happened years ago but left a lasting impression, that one event might have an outsized influence on the final decision.

Another common example is buying lottery tickets because the lifestyle and benefits of winning are more readily available in mind (and the potential emotions associated with winning or seeing other people win) than the complex probability calculation of actually winning the lottery (Cherry, 2019).

A final common example that is used to demonstrate the availability heuristic describes how seeing several television shows or news reports about shark attacks (or anything that is sensationalized by the news, such as serial killers or plane crashes) might make you think that this incident is relatively common even though it is not at all.

Regardless, this thinking might make you less inclined to go in the water the next time you go to the beach (Cherry, 2019).

As with most cognitive biases, the best way to overcome them is by recognizing the bias and being more cognizant of your thoughts and decisions.

And because we fall victim to this bias when our brain relies on quick mental shortcuts in order to save time, slowing down our thinking and decision-making process is a crucial step to mitigating the effects of the availability heuristic.

Researchers think this bias occurs because the brain is constantly trying to minimize the effort necessary to make decisions, and so we rely on certain memories – ones that we can recall more easily – instead of having to endure the complicated task of calculating statistical probabilities.

Two main types of memories are easier to recall: 1) those that more closely align with the way we see the world and 2) those that evoke more emotion and leave a more lasting impression.

This first type of memory was identified in 1973, when Tversky and Kahneman, our cognitive bias pioneers, conducted a study in which they asked participants if more words begin with the letter K or if more words have K as their third letter.

Although many more words have K as their third letter, 70% of participants said that more words begin with K, because such words are easier to recall and because this more closely aligns with the way they see the world (we think of a word by its first letter far more often than by its third).

In terms of the second type of memory, the same duo ran an experiment in 1983, 10 years later, in which half the participants were asked to guess the likelihood that a massive flood would occur somewhere in North America, and the other half had to guess the likelihood of a flood occurring due to an earthquake in California.

Although the latter is much less likely, participants still said that this would be much more common because they could recall specific, emotionally charged events of earthquakes hitting California, largely due to the news coverage they receive.

Together, these studies highlight how memories that are easier to recall greatly influence our judgments and perceptions about future events.

Inattentional Blindness

A final popular form of cognitive bias is inattentional blindness. This occurs when a person fails to notice a stimulus that is in plain sight because their attention is directed elsewhere.

For example, while driving a car, you might be so focused on the road ahead of you that you completely fail to notice a car swerve into your lane of traffic.

Because your attention is directed elsewhere, you aren’t able to react in time, potentially leading to a car accident. Experiencing inattentional blindness has its obvious consequences (as illustrated by this example), but, like all biases, it is not impossible to overcome.

Many theories seek to explain why we experience this form of cognitive bias. In reality, it is probably some combination of these explanations.

Conspicuity holds that certain sensory stimuli (such as bright colors) and cognitive stimuli (such as something familiar) are more likely to be processed, and so stimuli that don’t fit into one of these two categories might be missed.

The mental workload theory describes how when we focus a lot of our brain’s mental energy on one stimulus, we are using up our cognitive resources and won’t be able to process another stimulus simultaneously.

Similarly, some psychologists explain how we attend to different stimuli with varying levels of attentional capacity, which might affect our ability to process multiple stimuli simultaneously.

In other words, an experienced driver might be able to see that car swerve into the lane because they are using fewer mental resources to drive, whereas a beginner driver might be using more resources to focus on the road ahead and unable to process that car swerving in.

A final explanation argues that because our attentional and processing resources are limited, our brain dedicates them to what fits into our schemas or our cognitive representations of the world (Cherry, 2020).

Thus, when an unexpected stimulus comes into our line of sight, we might not be able to process it on the conscious level. The following example illustrates how this might happen.

The most famous study to demonstrate the inattentional blindness phenomenon is the invisible gorilla study (Most et al., 2001). This experiment asked participants to watch a video of two groups passing a basketball and count how many times the white team passed the ball.

Participants were able to accurately report the number of passes, but many of them failed to notice a person in a gorilla suit walking directly through the middle of the circle.

Because the gorilla is unexpected, and because the brain is using up its resources to count the passes, viewers can completely fail to process something right before their eyes.

A real-world example of inattentional blindness occurred in 1995, when Boston police officer Kenny Conley, while chasing a suspect, ran past a group of officers who were holding down an undercover officer they had mistaken for a suspect.

Conley was convicted of perjury and obstruction of justice on the theory that he had seen the altercation and lied about it to protect the other officers. He maintained that he really had not seen it, which is consistent with inattentional blindness, and he was ultimately exonerated (Pickel, 2015).

The key to overcoming inattentional blindness is to maximize your attention by avoiding distractions such as checking your phone. It is also important to keep in mind what other people might not notice (if you are that driver, don’t assume that others can see you).

By working on expanding your attention and minimizing unnecessary distractions that will use up your mental resources, you can work towards overcoming this bias.

Preventing Cognitive Bias

As we know, recognizing these biases is the first step to overcoming them. But there are other small strategies we can follow in order to train our unconscious mind to think in different ways.

From strengthening our memory and minimizing distractions to slowing down our decision-making and improving our reasoning skills, we can work towards overcoming these cognitive biases.

Individuals can evaluate their own thought processes, a practice known as metacognition (“thinking about thinking”), which provides an opportunity to combat bias (Flavell, 1979).

This multifactorial process involves (a) acknowledging the limitations of memory, (b) seeking perspective while making decisions, (c) being able to self-critique, and (d) choosing strategies to prevent cognitive error (Croskerry, 2003).

Many of the strategies for avoiding bias described here are also known as cognitive forcing strategies: mental tools used to force unbiased decision-making.

The History of Cognitive Bias

The term cognitive bias was coined in the 1970s by Israeli psychologists Amos Tversky and Daniel Kahneman, who used it to describe people’s flawed patterns of thinking when faced with judgment and decision problems (Tversky & Kahneman, 1974).

Tversky and Kahneman’s research program, the heuristics and biases program, investigated how people make decisions given limited resources (for example, limited time to decide which food to eat or limited information to decide which house to buy).

As a result of these limited resources, people are forced to rely on heuristics or quick mental shortcuts to help make their decisions.

Tversky and Kahneman wanted to understand the biases associated with this judgment and decision-making process.

To do so, the two researchers relied on a research paradigm that presented participants with some type of reasoning problem with a computed normative answer (they used probability theory and statistics to compute the expected answer).

Participants’ responses were then compared with the predetermined solution to reveal systematic deviations in their reasoning.
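
The comparison described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not the researchers' actual procedure: the problem is a base-rate question in the style of those used in this literature, the probabilities are assumed for the example, and the participant estimates are invented. The function names `normative_posterior` and `mean_deviation` are hypothetical.

```python
from statistics import mean


def normative_posterior(prior, hit_rate, false_alarm_rate):
    """Normative answer from Bayes' rule: P(hypothesis | evidence)."""
    evidence = prior * hit_rate + (1 - prior) * false_alarm_rate
    return prior * hit_rate / evidence


def mean_deviation(responses, normative):
    """Average signed gap between responses and the normative answer."""
    return mean(r - normative for r in responses)


# Assumed problem: 15% of cabs are blue, and a witness who says
# "blue" is right 80% of the time (so wrong 20% of the time).
normative = normative_posterior(prior=0.15, hit_rate=0.80, false_alarm_rate=0.20)

# Invented participant estimates, for illustration only (not real data).
responses = [0.80, 0.75, 0.80, 0.60, 0.80]

bias = mean_deviation(responses, normative)
print(f"normative answer: {normative:.2f}")
print(f"mean deviation:   {bias:+.2f}")
```

A mean deviation that sits consistently on one side of zero across many participants and problems is the kind of systematic departure from the normative answer that this paradigm is designed to expose, as opposed to random error that would average out.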

After running many experiments with a wide range of reasoning problems, the researchers identified numerous norm violations that result when our minds rely on these heuristics to make decisions and judgments (Wilke & Mata, 2012).

Key Takeaways

- Cognitive biases are unconscious errors in thinking that arise from problems with memory, attention, and other mental processes.

- These biases result from our brain’s efforts to simplify the incredibly complex world in which we live.

- Confirmation bias, hindsight bias, mere exposure effect, self-serving bias, base rate fallacy, anchoring bias, availability bias, the framing effect, inattentional blindness, and the ecological fallacy are some of the most common examples of cognitive bias. Another example is the false consensus effect.

- Cognitive biases directly affect our safety, interactions with others, and how we make judgments and decisions in our daily lives.

- Although these biases are unconscious, there are small steps we can take to train our minds to adopt a new pattern of thinking and mitigate the effects of these biases.

References

Allen, M. S., Robson, D. A., Martin, L. J., & Laborde, S. (2020). Systematic review and meta-analysis of self-serving attribution biases in the competitive context of organized sport. Personality and Social Psychology Bulletin, 46 (7), 1027-1043.

Casad, B. (2019). Confirmation bias. Retrieved from https://www.britannica.com/science/confirmation-bias

Cherry, K. (2019). How the availability heuristic affects your decision-making. Retrieved from https://www.verywellmind.com/availability-heuristic-2794824

Cherry, K. (2020). Inattentional blindness can cause you to miss things in front of you. Retrieved from https://www.verywellmind.com/what-is-inattentional-blindness-2795020

Dietrich, D., & Olson, M. (1993). A demonstration of hindsight bias using the Thomas confirmation vote. Psychological Reports, 72 (2), 377-378.

Fischhoff, B. (1975). Hindsight is not equal to foresight: The effect of outcome knowledge on judgment under uncertainty. Journal of Experimental Psychology: Human Perception and Performance, 1 (3), 288.

Fischhoff, B., & Beyth, R. (1975). I knew it would happen: Remembered probabilities of once—future things. Organizational Behavior and Human Performance, 13 (1), 1-16.

Furnham, A. (1982). Explanations for unemployment in Britain. European Journal of Social Psychology, 12(4), 335-352.

Heider, F. (1982). The psychology of interpersonal relations. Psychology Press.

Inman, M. (2016). Hindsight bias. Retrieved from https://www.britannica.com/topic/hindsight-bias

Lang, R. (2019). What is the difference between conscious and unconscious bias? FAQs. Retrieved from https://engageinlearning.com/faq/compliance/unconscious-bias/what-is-the-difference-between-conscious-and-unconscious-bias/

Luippold, B., Perreault, S., & Wainberg, J. (2015). Auditor’s pitfall: Five ways to overcome confirmation bias. Retrieved from https://www.babson.edu/academics/executive-education/babson-insight/finance-and-accounting/auditors-pitfall-five-ways-to-overcome-confirmation-bias/

Mezulis, A. H., Abramson, L. Y., Hyde, J. S., & Hankin, B. L. (2004). Is there a universal positivity bias in attributions? A meta-analytic review of individual, developmental, and cultural differences in the self-serving attributional bias. Psychological Bulletin, 130 (5), 711.

Miller, D. T., & Ross, M. (1975). Self-serving biases in the attribution of causality: Fact or fiction? Psychological Bulletin, 82 (2), 213.

Most, S. B., Simons, D. J., Scholl, B. J., Jimenez, R., Clifford, E., & Chabris, C. F. (2001). How not to be seen: The contribution of similarity and selective ignoring to sustained inattentional blindness. Psychological Science, 12 (1), 9-17.

Mussweiler, T., & Strack, F. (1999). Hypothesis-consistent testing and semantic priming in the anchoring paradigm: A selective accessibility model. Journal of Experimental Social Psychology, 35 (2), 136-164.

Neff, K. (2003). Self-compassion: An alternative conceptualization of a healthy attitude toward oneself. Self and Identity, 2 (2), 85-101.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2 (2), 175-220.

Orzan, G., Zara, I. A., & Purcarea, V. L. (2012). Neuromarketing techniques in pharmaceutical drugs advertising. A discussion and agenda for future research. Journal of Medicine and Life, 5 (4), 428.

Pickel, K. L. (2015). Eyewitness memory. The handbook of attention, 485-502.

Pohl, R. F., & Hell, W. (1996). No reduction in hindsight bias after complete information and repeated testing. Organizational Behavior and Human Decision Processes, 67 (1), 49-58.

Roese, N. J., & Vohs, K. D. (2012). Hindsight bias. Perspectives on Psychological Science, 7 (5), 411-426.

Ross, L. (1977). The intuitive psychologist and his shortcomings: Distortions in the attribution process. In Advances in experimental social psychology (Vol. 10, pp. 173-220). Academic Press.

Simons, D. J., & Chabris, C. F. (1999). Gorillas in our midst: Sustained inattentional blindness for dynamic events. Perception, 28 (9), 1059-1074.

Tversky, A., & Kahneman, D. (1973). Availability: A heuristic for judging frequency and probability. Cognitive Psychology, 5 (2), 207-232.

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185 (4157), 1124-1131.

Tversky, A., & Kahneman, D. (1983). Extensional versus intuitive reasoning: The conjunction fallacy in probability judgment. Psychological Review, 90(4), 293.

Tversky, A., & Kahneman, D. (1992). Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and Uncertainty, 5 (4), 297-323.

Walther, J. B., & Bazarova, N. N. (2007). Misattribution in virtual groups: The effects of member distribution on self-serving bias and partner blame. Human Communication Research, 33 (1), 1-26.

Wason, P. C. (1960). On the failure to eliminate hypotheses in a conceptual task. Quarterly Journal of Experimental Psychology, 12 (3), 129-140.

Wegener, D. T., Petty, R. E., Detweiler-Bedell, B. T., & Jarvis, W. B. G. (2001). Implications of attitude change theories for numerical anchoring: Anchor plausibility and the limits of anchor effectiveness. Journal of Experimental Social Psychology, 37 (1), 62-69.

Wilke, A., & Mata, R. (2012). Cognitive bias. In Encyclopedia of human behavior (pp. 531-535). Academic Press.

Further Information

Test yourself for bias.

- Project Implicit (IAT Test) From Harvard University

- Implicit Association Test From the Social Psychology Network

- Test Yourself for Hidden Bias From Teaching Tolerance

- How The Concept Of Implicit Bias Came Into Being, with Dr. Mahzarin Banaji, Harvard University, author of Blindspot: Hidden Biases of Good People (5:28 minutes; includes transcript)

- Understanding Your Racial Biases, with John Dovidio, PhD, Yale University, from the American Psychological Association (11:09 minutes; includes transcript)

- Talking Implicit Bias in Policing, with Jack Glaser, Goldman School of Public Policy, University of California Berkeley (21:59 minutes)

- Implicit Bias: A Factor in Health Communication, with Dr. Winston Wong, Kaiser Permanente (19:58 minutes)

- Bias, Black Lives and Academic Medicine, Dr. David Ansell on Your Health Radio, August 1, 2015 (21:42 minutes)

- Uncovering Hidden Biases Google talk with Dr. Mahzarin Banaji, Harvard University

- Impact of Implicit Bias on the Justice System 9:14 minutes

- Students Speak Up: What Bias Means to Them 2:17 minutes

- Weight Bias in Health Care, from Yale University (16:56 minutes)

- Gender and Racial Bias In Facial Recognition Technology 4:43 minutes

Journal Articles

- Mitchell, G. (2018). An implicit bias primer. Virginia Journal of Social Policy & the Law, 25, 27–59.

- Nosek, B. A., Greenwald, A. G., & Banaji, M. R. (2007). The Implicit Association Test at age 7: A methodological and conceptual review. Automatic processes in social thinking and behavior, 4, 265-292.

- Hall, W. J., Chapman, M. V., Lee, K. M., Merino, Y. M., Thomas, T. W., Payne, B. K., … & Coyne-Beasley, T. (2015). Implicit racial/ethnic bias among health care professionals and its influence on health care outcomes: A systematic review. American Journal of Public Health, 105 (12), e60-e76.

- Burgess, D., Van Ryn, M., Dovidio, J., & Saha, S. (2007). Reducing racial bias among health care providers: Lessons from social-cognitive psychology. Journal of General Internal Medicine, 22 (6), 882-887.

- Boysen, G. A. (2010). Integrating implicit bias into counselor education. Counselor Education & Supervision, 49 (4), 210–227.

- Christian, S. (2013). Cognitive Biases and Errors as Cause—and Journalistic Best Practices as Effect. Journal of Mass Media Ethics, 28 (3), 160–174.

- Whitford, D. K., & Emerson, A. M. (2019). Empathy intervention to reduce implicit bias in pre-service teachers. Psychological Reports, 122 (2), 670–688.

Contextual Debiasing and Critical Thinking: Reasons for Optimism

- Published: 26 April 2016

- Volume 37, pages 103–111 (2018)

- Vasco Correia
