Data availability statements


Guidance for authors and editors

An article’s data availability statement lets a reader know where and how to access data that support the results and analysis. It may include links to publicly accessible datasets that were analysed or generated during the study, descriptions of what data are available and/or information on how to access data that is not publicly available.

The data availability statement is a valuable link between a paper’s results and the supporting evidence. Springer Nature’s data policy is based on transparency, requiring these statements in original research articles across our journals.

The guidance below offers advice on how to create a data availability statement, along with examples from different research areas.

Data availability statements support research data

What should a data availability statement include?

Your data availability statement should describe how the data supporting the results reported in your paper can be accessed.

- If your data are in a repository, include hyperlinks and persistent identifiers (e.g. DOI or accession number) for the data where available.

- If your data cannot be shared openly, for example to protect study participant privacy, then this should be explained.

- Include both original data generated in your research and any secondary data reuse that supports your results and analyses.

Read our detailed guidance on how to write an excellent data availability statement.

Citing data sources

You should cite any publicly available data on which the conclusions of the paper rely. This includes novel data shared alongside the publication and any secondary data sources.

Data citations should include a persistent identifier (such as a DOI), appear in the reference list with the minimum information recommended by DataCite (Dataset Creator, Dataset Title, Publisher [repository], Publication Year, Identifier [e.g. DOI, Handle or ARK]), and follow journal style.

See our further guidance on citing datasets.
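As a minimal sketch, the five DataCite fields listed above can be checked for completeness and assembled into a reference-list entry before submission. The class and method names (`DatasetCitation`, `to_reference`) are hypothetical, and the output layout simply mirrors the Dryad dataset citation used in the PLOS example; your journal's style may order or punctuate the fields differently.

```python
from dataclasses import dataclass, fields


@dataclass
class DatasetCitation:
    """Hypothetical container for the five DataCite minimum citation fields."""
    creators: list[str]  # Dataset Creator(s)
    title: str           # Dataset Title
    publisher: str       # Publisher, i.e. the repository
    year: int            # Publication Year
    identifier: str      # Identifier, e.g. a DOI, Handle or ARK

    def to_reference(self) -> str:
        """Render one possible reference-list layout (assumed, not a journal style)."""
        missing = [f.name for f in fields(self) if not getattr(self, f.name)]
        if missing:
            raise ValueError(f"missing DataCite fields: {', '.join(missing)}")
        authors = ", ".join(self.creators)
        # Layout: Creators. Title. Year. Repository. Identifier.
        return f"{authors}. {self.title}. {self.year}. {self.publisher}. {self.identifier}."


citation = DatasetCitation(
    creators=["Andrikou C", "Thiel D", "Ruiz-Santiesteban JA", "Hejnol A"],
    title="Active mode of excretion across digestive tissues predates the origin of excretory organs",
    publisher="Dryad Digital Repository",
    year=2019,
    identifier="https://doi.org/10.5061/dryad.bq068jr",
)
print(citation.to_reference())
```

Raising an error for any empty field makes a missing identifier, the most common gap in data citations, fail loudly instead of producing an incomplete reference.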

Statement examples by research area

Life sciences and clinical medicine

Data publicly available in a repository:

- PRO-Seq data were deposited into the Gene Expression Omnibus database under accession number GSE85337 and are available at the following URL: https://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc=GSE85337. Example from: https://www.nature.com/articles/s41559-017-0447-5

- The experimental data and the simulation results that support the findings of this study are available in Figshare with the identifier https://doi.org/10.6084/m9.figshare.13322975. Example from: https://www.nature.com/articles/s41557-020-00629-3

- The anonymised data collected are available as open data via the University of Bristol online data repository: https://doi.org/10.5523/bris.1dnhjcw6w4m1n29u2yuxjywde1. Example from: https://doi.org/10.1186/s12889-020-08633-5

Data available with the paper or supplementary information:

- All data supporting the findings of this study are available within the paper and its Supplementary Information. Microsatellite primer sequences are provided in Supplementary Table 2, along with original reference describing the microsatellites used in this study. Example from: https://doi.org/10.1038/s41559-017-0148

- All data on the measured ecosystem variables indicating ecosystem functions that support the findings of this study are included within this paper and its Supplementary Information files. Example from: https://doi.org/10.1038/s41559-017-0391-4

Data cannot be shared openly but are available on request from authors:

- The data that support the findings of this study are not openly available due to reasons of sensitivity and are available from the corresponding author upon reasonable request. Data are located in controlled access data storage at Karolinska Institutet. Example from: https://doi.org/10.1186/s12910-022-00758-z

- The data that support the findings of this study are available from the authors but restrictions apply to the availability of these data, which were used under license from the Natural History Museum (London) for the current study, and so are not publicly available. Data are, however, available from the authors upon reasonable request and with permission from the Centre for Human Evolution Studies at the Natural History Museum. Example from: https://www.nature.com/articles/s41559-018-0528-0

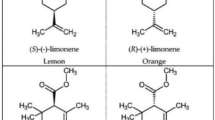

Chemistry and chemical biology

- Crystallographic data for the structures reported in this article have been deposited at the Cambridge Crystallographic Data Centre, under deposition numbers CCDC 2125007 (1), 2125008 (3), 2125009 (4), 2125010 (5), 2166315 (6) and 2125011 (7). Copies of the data can be obtained free of charge via https://www.ccdc.cam.ac.uk/structures/. All other relevant data generated and analysed during this study, which include experimental, spectroscopic, crystallographic and computational data, are included in this article and its supplementary information. Source data are provided with this paper. Example from: https://doi.org/10.1038/s41557-022-01081-1

- The raw transient absorption data (including the anisotropy measurements), Raman and ultraviolet–visible spectra, and computational data that support the findings of this study are available in the Edinburgh DataShare repository with the identifier https://doi.org/10.7488/ds/2751. Example from: https://www.nature.com/articles/s41557-020-0431-6

- The authors declare that the data supporting the findings of this study are available within the paper and its Supplementary Information files. Should any raw data files be needed in another format they are available from the corresponding author upon reasonable request. Source data are provided with this paper. Example from: https://www.nature.com/articles/s41557-022-01049-1

Physical sciences

- The dataset on global land precipitation source and evapotranspiration sink is available at https://doi.org/10.1594/PANGAEA.908705. The MODIS LAI C6 product is available at https://doi.org/10.5067/MODIS/MOD15A2H.006. GPCP v.2.3 precipitation data are available at https://psl.noaa.gov/data/gridded/data.gpcp.html. GLEAM v.3.3a evapotranspiration data are available at https://www.gleam.eu/. Air temperature and wind speed from ERA5 are available at https://cds.climate.copernicus.eu. Surface radiation (CERES_SYN1deg_Ed4.1) data are available at https://ceres.larc.nasa.gov/. SST from NOAA Optimum Interpolation v.2 is available at https://psl.noaa.gov/data/gridded/data.noaa.oisst.v2.html. Snow-cover product is available at https://nsidc.org/data/NSIDC-0046/versions/4. Elevation data are available at https://www.ngdc.noaa.gov/mgg/global/global.html.

- The authors declare that the data supporting the findings of this study are available within the paper, its supplementary information files, and the National Tibetan Plateau Data Center (https://doi.org/10.11888/Cryos.tpdc.272747).

- Data sets generated during the current study are available from the corresponding author on reasonable request. The natural gas production data are available from Drilling Info but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Example from: https://www.nature.com/articles/s41559-017-0329-x

Humanities and social science

- The datasets generated by the survey research during and/or analyzed during the current study are available in the Dataverse repository, https://doi.org/10.7910/DVN/205YXZ. Example from: https://doi.org/10.1057/s41599-020-00552-5

- The Greek Hippocratic texts used in this study are available to the public under a Creative Commons license at A Digital Corpus for Graeco-Arabic Studies: https://www.graeco-arabic-studies.org/texts.html. Example from: https://doi.org/10.1057/s41599-020-0511-7

Data available in a repository with restricted access:

- The Heinz et al. data are available from the Inter-University Consortium of Political and Social Research at https://www.icpsr.umich.edu/icpsrweb/ICPSR/studies/6040. Our custom data are available in the Open Science Framework repository at https://osf.io/5xayz/. Example from: https://www.nature.com/articles/s41562-019-0761-9

- The raw CoVIDA and HBS data are protected and are not available due to data privacy laws. The processed data sets are available at OPENICPSR under accession code 14212129 (https://www.openicpsr.org/openicpsr/project/142121). Example from: https://www.nature.com/articles/s41467-021-25038-z

- The data that support the findings of this study are available from Norwegian Social Research (NOVA), but restrictions apply to the availability of these data, which were used under licence for the current study and so are not publicly available. The data are, however, available from the authors upon reasonable request and with the permission of Norwegian Social Research (NOVA). Example from: https://www.nature.com/articles/s41562-021-01255-w

Data shared with manuscript or Supplementary Information:

- The author confirms that all data generated or analysed during this study are included in this published article. Example from: https://doi.org/10.1057/s41599-020-0527-z

Data sharing is not applicable:

- We do not analyse or generate any datasets, because our work proceeds within a theoretical and mathematical approach. Example from: https://doi.org/10.1057/s41599-020-0517-1


- © 2023 Springer Nature


Research data management


How to write a data availability statement (DAS)


What is a DAS?

Data availability statements, also known as data access statements, are included in publications to describe where the data associated with the paper is available, and under what conditions the data can be accessed. They are required by many funders and scientific journals as well as the UKRI Common Principles on Data Policy.

Compliance with funder policy

UKRI requires all research articles to include a ‘Data Access Statement’, even where there are no data associated with the article or the data are inaccessible. In situations where no new data have been created, such as a review, the statement “No new data were created or analysed in this study. Data sharing is not applicable to this article.” should be included in the article as a DAS.

Read the full UKRI policy to ensure you know what your responsibilities are.

Cranfield University’s Open Access Policy also holds researchers responsible for ‘Ensuring published results always include a statement on how and on what terms supporting data may be accessed.’

What should I include in a DAS?

Your statement should typically include:

- where the data can be accessed (preferably a data repository, like CORD)

- a persistent identifier, such as a Digital Object Identifier (DOI) or accession number, or a link to a permanent record for the dataset

- details of any restrictions on accessing the data and a justifiable explanation (e.g., for ethical, legal, or commercial reasons)

A simple direction to contact the author may not be considered acceptable by some funders and publishers; the EPSRC have made this explicit. Consider setting up a shared email address for your research group or using an existing departmental address.

Under some circumstances (e.g., participants did not agree for their data to be shared) it may be appropriate to explain that the data are not available at all. In this case, you must give clear and justified reasons.

Where no new data have been created, such as in a review, use the statement “No new data were created or analysed in this study. Data sharing is not applicable to this article.” Additional examples of data access/availability statements can be found on these publisher web pages: Springer Nature, Wiley and Taylor & Francis.

Examples of poor and insufficient DAS

- Direction to contact the author(s), which some funders and journals do not accept. Data availability statement: “Data available on request / reasonable request”

- Statement too vague, with no persistent identifier or accession number. Data availability statement: “Data are available in a public, open access repository.”

- Data access statement placed in the methods section. Methods: “All data and codes are available under the following OSF repository: https://osf.io/9999/”

- Unclear whether there are any data. Availability of data and materials: “Not applicable”

Where should I put the DAS in my paper?

Some journals provide a “data availability” or “data access” section. If no such section exists, you can place your statement in the acknowledgements section.

Link Your Datasets to Your Article

Once your article is published, you should update your repository project with the DOI for your article, which will be emailed to you upon article publication. Linking your supporting data to your publication will enable your data and paper to be reciprocally connected, ensuring you receive credit for your work. This can be done in CORD by using the ‘Resource Title’ and ‘Resource DOI’ fields respectively.


- Last Updated: Apr 3, 2024 1:15 PM

- URL: https://library.cranfield.ac.uk/research-data-management


Write a data availability statement for a paper

Your data availability statement should describe how the data supporting the results reported in your paper can be accessed. This includes both primary data (data you generated as part of your study) and secondary data (data from other sources that you reused in your study). If the data are in a repository, include hyperlinks and persistent identifiers for the data where available. If your data cannot be shared openly for any reason, for example to protect study participant privacy, then this should be explained in the data availability statement. If accessing or reusing your data is subject to any conditions of use or restrictions, these should also be described in the data availability statement.

Go to our data availability statement resource page for more information on writing data availability statements, and examples for different research areas. For more information, please email [email protected].

Related Articles

- Find a data repository for my data

- Data my journal expects me to share

- Publishing data in a repository

- Select the right licence for your data

- My research didn’t generate data or I reused existing data

- Correctly citing and referencing your dataset for maximum impact

- Publishing articles that describe datasets

- Release of research data ahead of manuscript publication

- Sensitive data or data about human participants: sharing expectations

- Software and code sharing

- Manuscript Preparation

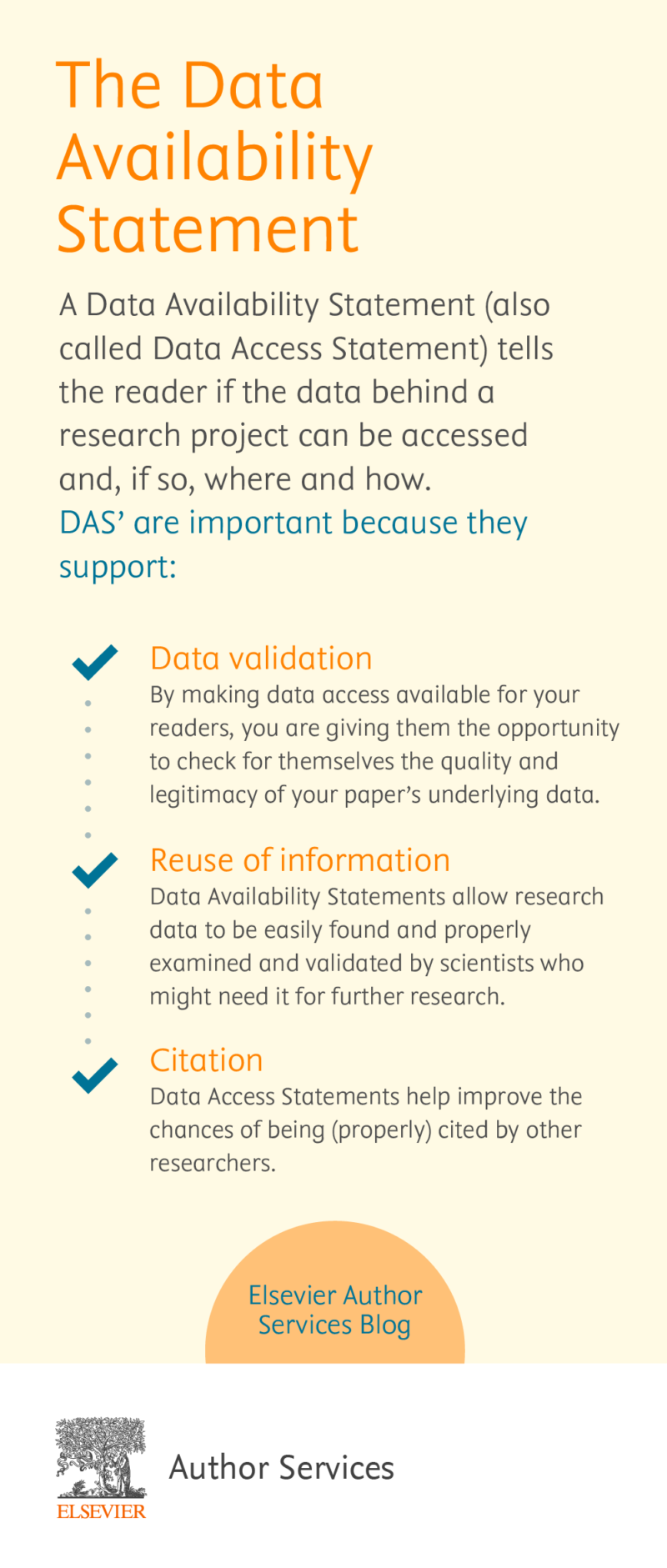

The Data Availability Statement


Surely, you have already come across many metaphors for knowledge: a building permanently under construction, a very big tree with its roots deep in the earth, finding nourishment in the legacy of the ancient masters, or even a never-ending road, with countless crossroads and a horizon that sometimes looks close enough to reach but never quite is. With our research, we challenge ourselves with goals that are always set further away.

One thing is certain: a step forward only means progress when the previous one was taken thoughtfully and confidently, putting us in a solid position to keep moving forward. In other words, research naturally thrives on previous findings. Giving your readers access to research data is considered good practice in science and a way to encourage transparency in scientific progress. This is why most journals today require a Data Availability Statement, in which authors provide the information others need to reproduce the work reported in an article. Research reviews that are not based on original data, however, may not need such a statement, depending on journal and funder policy.

What is a Data Availability Statement (DAS)?

A Data Availability Statement (also called Data Access Statement) tells the reader whether the data behind a research project can be accessed and, if so, where and how. Ideally, authors should include hyperlinks to public databases to make the data easier for readers to find. If you are currently submitting an article to a journal, check its guidelines and policy; normally, though, a DAS appears in a prominent place in the manuscript, right before the reference section. For further guidance, most journals offer DAS templates for different data-access scenarios, including cases where the data cannot be shared.

When a journal gives no specific instructions on how to formulate a DAS, the examples below may be handy. Consider tailoring or combining them to fit your needs (replace the information inside brackets):

Data available to be shared

- The raw data required to reproduce the above findings are available to download from [INSERT PERMANENT WEB LINK(s)]. The processed data required to reproduce the above findings are available to download from [INSERT PERMANENT WEB LINK(s)].

Data not available to be shared

- The raw/processed data required to reproduce the above findings cannot be shared at this time due to legal/ethical reasons.

- The raw/processed data required to reproduce the above findings cannot be shared at this time due to technical/time limitations.

- The raw/processed data required to reproduce the above findings cannot be shared at this time as the data also forms part of an ongoing study.
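If you prepare statements for several papers, the templates above can be filled programmatically so the wording stays consistent. This is a hypothetical helper, not a journal tool: the reason keys (`legal_ethical`, `technical_time`, `ongoing_study`) are made-up labels for the three restriction templates, and the example links are placeholders.

```python
from typing import Optional

# Hypothetical labels for the three "data not available" templates.
RESTRICTION_REASONS = {
    "legal_ethical": "due to legal/ethical reasons",
    "technical_time": "due to technical/time limitations",
    "ongoing_study": "as the data also forms part of an ongoing study",
}

RESTRICTED_PREFIX = "The raw/processed data required to reproduce the above findings"


def build_das(raw_link: Optional[str] = None,
              processed_link: Optional[str] = None,
              reason: Optional[str] = None) -> str:
    """Fill the 'available' template if both links are given, else a restriction template."""
    if raw_link and processed_link:
        return (
            "The raw data required to reproduce the above findings are available "
            f"to download from {raw_link}. The processed data required to reproduce "
            f"the above findings are available to download from {processed_link}."
        )
    if reason in RESTRICTION_REASONS:
        return f"{RESTRICTED_PREFIX} cannot be shared at this time {RESTRICTION_REASONS[reason]}."
    # Neither complete links nor a known reason: refuse rather than emit a vague statement.
    raise ValueError("give both permanent links or one of: " + ", ".join(RESTRICTION_REASONS))


# Placeholder links for illustration only.
print(build_das("https://example.org/raw", "https://example.org/processed"))
print(build_das(reason="ongoing_study"))
```

Refusing to produce a statement when neither links nor a reason are supplied is a deliberate choice: an empty or generic DAS is exactly what many funders and journals reject.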

As previously mentioned, the most common way to share datasets is to provide a permanent web link, which makes access almost universal. There are no restrictions on the choice of database: authors can use whichever they want, although some journals invite authors to upload their dataset to a specific one as a way to encourage them to include a Data Availability Statement at submission. Generally, though, you can also link your dataset directly to your article.

Why should I write a Data Availability Statement?

Data Availability Statements are normally only one or two sentences long, so there is no reason not to include one in your manuscript. Furthermore, most journals and funders require them at submission.

DASs are important because they support:

Data validation

Impactful findings emerge from solid data. Validating data is an often-overlooked step, but it is key to achieving accurate results. By making your data accessible, you give readers the opportunity to check the quality and legitimacy of your paper’s underlying data for themselves. It also makes it easier for researchers to find and access a larger body of scientific material that could be important for developing their own work.

Reuse of information

Scientific progress ultimately rests on information that is used and reused. Such information forms the bricks of the ever-growing building mentioned in the introduction. Data Availability Statements allow research data to be easily found, examined and validated by scientists who might need it for further research. Together with database indexing, they are a very effective tool for accessing scientific information networks, making it easier for researchers to develop their projects and advance knowledge.

Probably the best news for authors is that Data Availability Statements can improve the chances of being (properly) cited by other researchers. As you may already know, citation counts feed into researcher metrics such as the h-index: the largest number h such that h of a researcher’s papers have each been cited at least h times. A higher h-index generally brings more visibility and recognition.

It can’t be overemphasized how important Data Availability Statements are for transparency in science. They help ensure that researchers are properly recognized for their work and help science grow as a discipline in which sustainable progress is a key pillar.

Data Availability Statement with Elsevier

In summary, a Data Availability Statement lets an author provide information about the data presented in an article and give a reason if the data cannot be accessed. View this example article, where the DAS appears under the “Research data” section of the article outline.

Benefits for authors and readers include:

- Increases transparency

- Allows compliance with data policies

- Encourages good scientific practice and builds trust

How does it work?

Check the Guide for Authors of the journal of your choice to see your options for sharing research data. The majority of Elsevier journals will have integrated the option to create a Data Availability Statement directly in the submission flow. You will be guided through the steps to complete the DAS during the manuscript submission process.


Data Availability

Introduction

PLOS journals require authors to make all data necessary to replicate their study’s findings publicly available without restriction at the time of publication. When specific legal or ethical restrictions prohibit public sharing of a data set, authors must indicate how others may obtain access to the data.

When submitting a manuscript, authors must provide a Data Availability Statement describing compliance with PLOS' data policy. If the article is accepted for publication, the Data Availability Statement will be published as part of the article.

Acceptable data sharing methods are listed below, accompanied by guidance for authors as to what must be included in their Data Availability Statement and how to follow best practices in research reporting.

PLOS believes that sharing data fosters scientific progress. Data availability allows and facilitates:

- Validation, replication, reanalysis, new analysis, reinterpretation or inclusion into meta-analyses;

- Reproducibility of research;

- Efforts to ensure data are archived, increasing the value of the investment made in funding scientific research;

- Reduction of the burden on authors in preserving and finding old data, and managing data access requests;

- Citation and linking of research data and their associated articles, enhancing visibility and ensuring recognition for authors, data producers and curators.

Publication is conditional on compliance with this policy. If restrictions on access to data come to light after publication, we reserve the right to post a Correction, an Editorial Expression of Concern, contact the authors' institutions and funders, or, in extreme cases, retract the publication.

Minimal Data Set Definition

Authors must share the “minimal data set” for their submission. PLOS defines the minimal data set to consist of the data required to replicate all study findings reported in the article, as well as related metadata and methods. Additionally, PLOS requires that authors comply with field-specific standards for preparation, recording, and deposition of data when applicable.

For example, authors should submit the following data:

- The values behind the means, standard deviations and other measures reported;

- The values used to build graphs;

- The points extracted from images for analysis.

Authors do not need to submit their entire data set if only a portion of the data was used in the reported study. Also, authors do not need to submit the raw data collected during an investigation if the standard in the field is to share data that have been processed.

PLOS does not permit references to “data not shown.” Authors should deposit relevant data in a public data repository or provide the data in the manuscript.

We require authors to provide sample image data in support of all reported results (e.g. for immunohistochemistry images, fMRI images, etc.), either with the submission files or in a public repository.

For manuscripts submitted to PLOS Biology, PLOS ONE, PLOS Climate, PLOS Water, PLOS Global Public Health or PLOS Mental Health on or after July 1, 2019, authors must provide original, uncropped and minimally adjusted images supporting all blot and gel results reported in the article’s figures and Supporting Information files. Whilst it is not necessary to provide original images at time of initial submission, we will require these files during the peer review process or before a submission can be accepted for publication.

When reviewing concerns arising after publication in relation to images shown, we may request available underlying data for any image files depicted in the article, as needed to resolve the concern(s).

Acceptable Data Sharing Methods

Deposition in a data repository (strongly recommended)

All data and related metadata underlying reported findings should be deposited in appropriate public data repositories, unless already provided as part of a submitted article. Repositories may be either subject-specific repositories that accept specific types of structured data, or cross-disciplinary generalist repositories that accept multiple data types.

If field-specific standards for data deposition exist, PLOS requires authors to comply with these standards. Authors should select repositories appropriate to their field of study (for example, ArrayExpress or GEO for microarray data; GenBank, EMBL, or DDBJ for gene sequences).

The Data Availability Statement must list the name of the repository or repositories as well as digital object identifiers (DOIs), accession numbers or codes, or other persistent identifiers for all relevant data.
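Before submitting, you might sanity-check that a statement actually names a persistent identifier, which a vague statement such as “Data are available in a public, open access repository.” lacks. The sketch below is a heuristic under stated assumptions: the DOI pattern is adapted from the regular expression Crossref has published for matching most modern DOIs, and the GEO series pattern (`GSE` followed by digits) stands in for the many repository-specific accession formats, which you would need to add yourself. The function name `find_identifiers` is hypothetical.

```python
import re

# Adapted from Crossref's published DOI pattern (^10.\d{4,9}/[-._;()/:A-Z0-9]+$, case-insensitive).
# It matches most, but not all, DOIs.
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[-._;()/:A-Z0-9]+", re.IGNORECASE)
# One repository-specific accession format as an example: GEO series, e.g. GSE85337.
GEO_SERIES_PATTERN = re.compile(r"\bGSE\d+\b")


def find_identifiers(statement: str) -> list[str]:
    """Return DOIs and GEO series accessions found in a data availability statement."""
    # The DOI character class also captures trailing sentence punctuation, so strip it.
    dois = [match.rstrip(".,;:)") for match in DOI_PATTERN.findall(statement)]
    accessions = GEO_SERIES_PATTERN.findall(statement)
    # dict.fromkeys removes duplicates while keeping first-seen order.
    return list(dict.fromkeys(dois + accessions))


vague = "Data are available in a public, open access repository."
figshare = ("The experimental data and the simulation results that support the findings of this "
            "study are available in Figshare with the identifier "
            "https://doi.org/10.6084/m9.figshare.13322975.")
print(find_identifiers(vague))     # [] (no persistent identifier)
print(find_identifiers(figshare))  # ['10.6084/m9.figshare.13322975']
```

An empty result is a prompt to revisit the statement, not proof that it is non-compliant; stripping a trailing `)` can also truncate the rare DOI that legitimately ends in one.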

Data citation

PLOS encourages authors to cite any publicly available research data in their reference list. References to data sets (data citations) must include a persistent identifier (such as a DOI). Citations of data sets, when they appear in the reference list, should include the minimum information recommended by DataCite and follow journal style.

Example: Andrikou C, Thiel D, Ruiz-Santiesteban JA, Hejnol A. Active mode of excretion across digestive tissues predates the origin of excretory organs. 2019. Dryad Digital Repository. https://doi.org/10.5061/dryad.bq068jr.

PLOS supports the data citation roadmap for scientific publishers developed by the Publishers Early Adopters Expert Group as part of the Data Citation Implementation Pilot (DCIP) project, an initiative of FORCE11 and the NIH BioCADDIE program.

Data in Supporting Information files

Although authors are encouraged to directly deposit data in appropriate repositories, data can be included in Supporting Information files. When including data in Supporting Information files, authors should submit data in file formats that are standard in their field and allow wide dissemination. If there are currently no standards in the field, authors should maximize the accessibility and reusability of the data by selecting a file format from which data can be efficiently extracted (for example, spreadsheets are preferable to PDFs or images when providing tabulated data).

Upon publication, PLOS uploads all Supporting Information files associated with an article to the figshare repository to increase compliance with the FAIR principles (Findable, Accessible, Interoperable, Reusable).

Supporting Information files are published exactly as provided and are not copyedited. Each file should be less than 20 MB.

Data Management Plans

Some funding agencies have policies on the preparation and sharing of Data Management Plans (DMPs), and authors who receive funding from some agencies may be required to prepare DMPs as a condition of grants.

PLOS encourages authors to prepare DMPs before conducting their research and encourages authors to make those plans available to editors, reviewers and readers who wish to assess them.

The following resources may also be consulted for guidance on DMPs:

- Funders and institutions

- Digital Curation Centre

- Data Stewardship Wizard

Acceptable Data Access Restrictions

PLOS recognizes that, in some instances, authors may not be able to make their underlying data set publicly available for legal or ethical reasons. This data policy does not overrule local regulations, legislation or ethical frameworks. Where these frameworks prevent or limit data release, authors must make these limitations clear in the Data Availability Statement at the time of submission. Acceptable restrictions on public data sharing are detailed below.

Please note it is not acceptable for an author to be the sole named individual responsible for ensuring data access.

Third-party data

For studies involving third-party data, we encourage authors to share any data specific to their analyses that they can legally distribute. PLOS recognizes, however, that authors may be using third-party data they do not have the rights to share. When third-party data cannot be publicly shared, authors must provide all information necessary for interested researchers to apply to gain access to the data, including:

- A description of the data set and the third-party source

- If applicable, verification of permission to use the data set

- All necessary contact information others would need to apply to gain access to the data

Authors should properly cite and acknowledge the data source in the manuscript. Please note, if data have been obtained from a third-party source, we require that other researchers would be able to access the data set in the same manner as the authors.

Human research participant data and other sensitive data

For studies involving human research participant data or other sensitive data, we encourage authors to share de-identified or anonymized data. However, when data cannot be publicly shared, we allow authors to make their data sets available upon request. In such cases, authors must:

- Explain the restrictions in detail (e.g., data contain potentially identifying or sensitive patient information)

- Provide contact information for a data access committee, ethics committee, or other institutional body to which data requests may be sent

General guidelines for human research participant data

Prior to sharing human research participant data, authors should consult with an ethics committee to ensure data are shared in accordance with participant consent and all applicable local laws.

Data sharing should never compromise participant privacy. It is therefore not appropriate to publicly share personally identifiable data on human research participants. The following are examples of data that should not be shared:

- Name, initials, physical address

- Internet protocol (IP) address

- Specific dates (birth dates, death dates, examination dates, etc.)

- Contact information such as phone number or email address

- Location data

Data that are not directly identifying may also be inappropriate to share, as in combination they can become identifying. For example, data collected from a small group of participants, vulnerable populations, or private groups should not be shared if they involve indirect identifiers (such as sex, ethnicity, location, etc.) that may risk the identification of study participants.
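You can check before sharing whether a combination of indirect identifiers is rare enough to single someone out. The Python sketch below counts how many records share each combination of quasi-identifiers. It flags any combination held by fewer than k participants, which is the idea behind k-anonymity. It is illustrative only. The field names, toy records and threshold are hypothetical, and passing this check alone does not make a dataset safe to share.

```python
from collections import Counter


def small_groups(records, quasi_identifiers, k=5):
    """Return quasi-identifier combinations shared by fewer than k records.

    Each returned combination is a potential re-identification risk: a
    participant in such a group may be recognisable from indirect
    identifiers alone, even after names and addresses are removed.
    """
    counts = Counter(
        tuple(record[field] for field in quasi_identifiers) for record in records
    )
    return {combo: n for combo, n in counts.items() if n < k}


# Hypothetical toy data: field names and values are illustrative only.
participants = [
    {"sex": "F", "ethnicity": "A", "region": "North"},
    {"sex": "F", "ethnicity": "A", "region": "North"},
    {"sex": "M", "ethnicity": "B", "region": "South"},
]

risky = small_groups(participants, ["sex", "ethnicity", "region"], k=2)
print(risky)  # {('M', 'B', 'South'): 1}
```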

Steps necessary to protect privacy may include de-identifying data, adding noise, or blocking portions of the database. Where this is not possible, data sharing could be restricted by license agreements directed specifically at privacy concerns. Additional guidance on preparing human research participant data for publication, including information on how to properly de-identify these data, can be found here:

- Preparing raw clinical data for publication: guidance for journal editors, authors, and peer reviewers
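The following Python sketch gives a rough illustration of two of the steps above: de-identifying and adding noise. It drops direct identifiers, coarsens exact dates to the year, and perturbs one numeric field with Gaussian noise. The field names and the noise level are hypothetical choices for this example. Real de-identification should follow the guidance above and be agreed with an ethics committee.

```python
import random
from datetime import date

# Hypothetical field names for direct identifiers; adapt to the real dataset.
DIRECT_IDENTIFIERS = {"name", "initials", "address", "ip_address", "phone", "email"}


def deidentify(record, date_fields=("birth_date",), noisy_fields=("weight_kg",),
               noise_sd=1.0, rng=None):
    """Return a copy of `record` with three simple protections applied.

    - direct identifiers are dropped entirely
    - exact dates are coarsened to the year
    - selected numeric values get Gaussian noise (mean 0, sd `noise_sd`)
    """
    rng = rng or random.Random()
    cleaned = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue  # never carry direct identifiers into the shared copy
        if field in date_fields and isinstance(value, date):
            cleaned[field] = value.year  # keep the year, drop month and day
        elif field in noisy_fields:
            cleaned[field] = round(value + rng.gauss(0.0, noise_sd), 1)
        else:
            cleaned[field] = value
    return cleaned


# Hypothetical participant record, for illustration only.
record = {"name": "Jane Doe", "email": "jane@example.org",
          "birth_date": date(1980, 5, 17), "weight_kg": 70.0, "outcome": "improved"}
print(deidentify(record, rng=random.Random(42)))
```

Larger `noise_sd` values give more protection but distort the shared values more. This trade-off is one reason the guidance above also mentions restricting access instead.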

The following resources may also be consulted for guidance on sharing human research participant data:

- Sharing Clinical Trial Data: Maximizing Benefits, Minimizing Risk

- European Medicines Agency: Publication and access to clinical-trial data

- US National Institutes of Health: Protecting the Rights and Privacy of Human Subjects

- Canadian Institutes of Health Research Best Practices for Protecting Privacy in Health Research

- UK Data Archive: Anonymisation Overview

- Australian National Data Service: Ethics, Consent and Data Sharing

Guidelines for qualitative data

For studies analyzing data collected as part of qualitative research, authors should make excerpts of the transcripts relevant to the study available in an appropriate data repository, within the paper, or upon request if they cannot be shared publicly. If even sharing excerpts would violate the agreement to which the participants consented, authors should explain this restriction and what data they are able to share in their Data Availability Statement.

See the Qualitative Data Repository for more information about managing and depositing qualitative data.

Other sensitive data

Some data that do not describe human research participants may also be sensitive and inappropriate to share. For studies analyzing other types of sensitive data, authors should share data as appropriate after consulting established field guidelines and all applicable local laws. Examples of sensitive data that may be subject to restrictions include, but are not limited to, data from field studies in protected areas, locations of sensitive archaeological sites, and locations of endangered or threatened species.

Additional help

Please contact the journal office ([email protected]) if:

- You have concerns about the ethics or legality of sharing your data

- Your institution does not have an established point of contact to field external requests for access to sensitive data

- You feel unable to share data for reasons not specified above

Unacceptable Data Access Restrictions

PLOS journals will not consider manuscripts for which the following factors influence authors’ ability to share data:

- Authors will not share data because of personal interests, such as patents or potential future publications.

- The conclusions depend solely on the analysis of proprietary data. We consider proprietary data to be data owned by individuals, organizations, funders, institutions, commercial interests, or other parties that the data owners will not share. If proprietary data are used and cannot be accessed by others in the same manner by which the authors obtained them, the manuscript must include an analysis of publicly available data that validates the study’s conclusions so that others can reproduce the analysis and build on the study’s findings.

General questions

Why do we not allow an author to be the only point of contact for fielding requests for access to restricted data?

When possible, we recommend that authors deposit restricted data in a repository that allows for controlled data access. If this is not possible, directing data requests to a non-author institutional point of contact, such as a data access or ethics committee, helps guarantee the long-term stability and availability of the data. Providing interested researchers with a durable point of contact ensures the data remain accessible even if an author changes email address or institution, or becomes unavailable to answer requests.

When was the current data policy implemented?

The data policy was implemented on March 3, 2014. Any paper submitted before that date will not have a Data Availability Statement. For all manuscripts submitted or published before this date, data must be made available upon reasonable request.

What if my article does not contain any data?

All articles must include a Data Availability Statement but some submissions, such as Registered Report Protocols and Lab or Study Protocol articles, may not contain data. For manuscripts that do not report data, authors must state in their Data Availability Statement that their article does not report data and the data availability policy is not applicable to their article.

Depositing data

What if I cannot provide accession numbers or DOIs for my data set at submission?

Authors may submit their manuscript and include placeholder language in their Data Availability Statement indicating that accession numbers and/or DOIs will be made available after acceptance. The journal office will contact authors prior to publication to ask for this information and will hold the paper until it is received.

Providing private data access to reviewers and editors during the peer review process is acceptable. Many repositories permit private access for review purposes, and have policies for public release at publication.

Is PLOS integrated with any repositories?

PLOS partners with repositories to support data sharing and compliance with the PLOS data policy. Our submission system is integrated with partner repositories to ensure that the article and its underlying data are paired, published together and linked. Current partners include Dryad and FlowRepository.

Partner repositories may have a data submission fee. PLOS is not able to cover this fee and authors are under no obligation to use any specific repository. PLOS does not gain financially from our association with any integrated partners.

Additionally, PLOS uploads all Supporting Information files associated with an article to the figshare repository to increase compliance with the FAIR principles (Findable, Accessible, Interoperable, Reusable).

How do I deposit data with a data repository integration partner?

When authors deposit data in the integrated repository, they receive a provisional data set DOI along with a private reviewer URL link. Upon submission to PLOS, authors should include the data set DOI in the Data Availability Statement. They should also provide the reviewer URL, which will permit restricted access to the data during peer review. If a manuscript is editorially accepted by a PLOS journal, the publication of the article and public release of the data set will be automatically coordinated.

I cannot afford the cost of depositing a large amount of data. What should I do?

PLOS encourages authors to investigate all options and to contact their institutions if they have difficulty providing access to the data underlying the research. There are several repositories recommended by PLOS that specialize in handling large data sets.

What are acceptable licenses for my data deposition?

If authors use repositories with stated licensing policies, the policies should not be more restrictive than the Creative Commons Attribution (CC BY) license.
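You can screen a licence against this rule before depositing. The Python sketch below assumes licences are given as SPDX identifiers and treats only CC0 and CC BY as acceptable. It flags the NC (non-commercial), ND (no derivatives) and SA (share-alike) elements as extra conditions. This allow-list is an illustrative reading of "not more restrictive than CC BY", not an official PLOS list.

```python
# Illustrative allow-list (an assumption, not an official PLOS list) of SPDX
# identifiers for licences no more restrictive than CC BY.
ACCEPTABLE = {"CC0-1.0", "CC-BY-3.0", "CC-BY-4.0"}

# Creative Commons licence elements that add conditions beyond attribution.
EXTRA_CONDITIONS = {"NC": "non-commercial", "ND": "no-derivatives", "SA": "share-alike"}


def check_licence(spdx_id):
    """Return (acceptable, reason) for a dataset licence given as an SPDX identifier."""
    if spdx_id in ACCEPTABLE:
        return True, "acceptable"
    parts = spdx_id.upper().split("-")
    for element, meaning in EXTRA_CONDITIONS.items():
        if element in parts:
            return False, f"adds a {meaning} condition"
    return False, "not recognised: check the licence terms manually"


for licence in ["CC0-1.0", "CC-BY-4.0", "CC-BY-NC-4.0", "CC-BY-SA-4.0", "MIT"]:
    print(licence, check_licence(licence))
```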

PLOS Data Advisory Board

PLOS has formed an external board of advisors across many fields of research published in PLOS journals. This board will work with us to develop community standards for data sharing across various fields, provide input and advice on especially complex data-sharing situations submitted to the journals, define data-sharing compliance, and proactively work to refine our policy. If you have any questions or feedback, we welcome you to write to us at [email protected].

Imperial College London


How to write a data access statement

What is a data access statement?

Data access statements, also known as data availability statements, are included in publications to describe where the data associated with the paper is available, and under what conditions the data can be accessed. They are required by many funders and scientific journals as well as the UKRI Common Principles on Data Policy.

What should I include in a data access statement?

Examples of data access statements are provided below, but your statement should typically include:

- where the data can be accessed (preferably a data repository)

- a persistent identifier, such as a Digital Object Identifier (DOI) or accession number, or a link to a permanent record for the dataset

- details of any restrictions on accessing the data and a justifiable explanation (e.g. for ethical, legal or commercial reasons)

*A simple direction to contact the author may not be considered acceptable by some funders and publishers; the EPSRC have made this explicit. Consider setting up a shared email address for your research group or using an existing departmental address.

** Under some circumstances (e.g. participants did not agree for their data to be shared) it may be appropriate to explain that the data are not available at all. In this case, you must give clear and justified reasons.
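You can assemble the elements listed above into a first draft. The Python sketch below is a hypothetical helper, not a tool provided by Imperial or any funder. It builds a statement from a repository name, a persistent identifier, any access restrictions and a non-author contact point. Treat the wording it produces only as a starting point to adapt to the journal's template.

```python
def compose_das(repository=None, identifier=None, restrictions=None, contact=None):
    """Draft a data access statement from its usual components (hypothetical helper).

    repository   -- where the data can be accessed, preferably a data repository
    identifier   -- persistent identifier such as a DOI or accession number
    restrictions -- access conditions or reasons for not sharing, with justification
    contact      -- non-author contact point, e.g. a shared group email address
    """
    sentences = []
    if repository:
        where = f"{repository} at {identifier}" if identifier else repository
        sentences.append("The data that support the findings of this study "
                         f"are available in {where}.")
        if restrictions:
            sentences.append(f"Access is subject to restrictions: {restrictions}.")
    elif restrictions:
        sentences.append("The data that support the findings of this study are "
                         f"not publicly available because {restrictions}.")
    else:
        # A statement with neither a location nor a justification is not enough.
        raise ValueError("give a location for the data or a justified restriction")
    if contact:
        sentences.append(f"Requests for access can be sent to {contact}.")
    return " ".join(sentences)


# Hypothetical inputs, for illustration only.
print(compose_das("Zenodo", "https://doi.org/10.1234/example"))
print(compose_das(restrictions="participants did not consent to public data sharing",
                  contact="data-requests@example.org"))
```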

Additional examples of data access/availability statements can be found on these publisher web pages: Springer Nature, Wiley and Taylor & Francis.

Where should I put the data availability statement in my paper?

Some journals provide a “data access” or “data availability” section. If no such section exists, you can place your statement in the acknowledgements section.


Writing “Data Availability Statement” in Your Publications

More and more journals require you to write a Data Availability Statement (DAS) when you submit a manuscript. What is DAS? How do you write one?

Where do you usually find data that relate to a published paper? Sometimes authors include data as supplementary material, either as images or PDFs or as data files for download. In recent years, more authors have put relevant datasets in data repositories and given links to them. However, when the data are not explicitly made available with the published paper, readers cannot tell whether they are accessible.

A Data Availability Statement, or DAS, adds transparency so that data can be validated, reused, and properly cited. A DAS is simple to write: one or two sentences that tell readers where to find the data associated with your paper. You can place it near the end of your manuscript, for example just before the “References”.

What DAS Describes

A DAS simply describes the availability of the data underlying your paper. Here, “data” means the dataset that supports the reported results and that would be needed to interpret, replicate and build upon the findings in the published paper. A DAS can be very short, and it should tell your readers:

- whether the data is available

- where to find it

- under what conditions it can be accessed

Here are three examples of how DAS appears in an article:

Source: Calzolari et al. Vestibular agnosia in traumatic brain injury and its link to imbalance. Brain, 144(1) (January 2021), pages 128–143, https://doi.org/10.1093/brain/awaa386

Source: Gomez-Gonzalez, C., Nesseler, C. & Dietl, H.M. Mapping discrimination in Europe through a field experiment in amateur sport. Humanit Soc Sci Commun 8, 95 (2021). https://doi.org/10.1057/s41599-021-00773-2

Source: Danylo et al. A map of the extent and year of detection of oil palm plantations in Indonesia, Malaysia and Thailand. Sci Data 8, 96 (2021). https://doi.org/10.1038/s41597-021-00867-1

DAS Does Not Require Data Archiving or Sharing

When a journal requires you to include a DAS in your manuscript, that requirement does not by itself oblige you to share data in an open repository. You may choose to share your data upon request, or you may be unable to share data for various reasons. A DAS only requires you to describe the situation explicitly, in a simple and clear statement.

Scope of “Data” Involved

It is up to the authors to judge how much data, and which data, qualify as the underlying data for a particular publication. It may help to start with the “minimum” dataset that can support the findings. Sharing your whole set of raw data is often neither necessary nor useful to your readers. Think about which dataset is necessary and sufficient for your readers to interpret or validate your findings.

Journal Policies and Templates

Different journals have different policies regarding DAS; always check the policies of the journal you are submitting to. Some journals only encourage authors to include a DAS, while others make it a requirement. Formatting rules, such as where the DAS should appear, also differ. Some publishers provide guidance and templates to help authors write a DAS, for example:

- SpringerNature

- American Institute of Physics (AIP)

- Taylor & Francis

Even when your target journal has no such requirement, it is good research practice to write a DAS for each published paper. Use these templates to get started.

By Gabi Wong, Library


published April 28, 2021 last modified March 11, 2022


Creating a Data Availability Statement

A guide to crafting a statement on how to find and access the data used in your paper.

What Is a Data Availability Statement?

A data availability statement (DAS) details where the data used in a published paper can be found and how it can be accessed. These statements are required by many publishers, including FASEB, our member societies, Cambridge, Springer Nature, Wiley, and Taylor & Francis. Some grant funders also require DASs.

Where Can I Find an Article’s DAS?

In a published article, the DAS is usually printed alongside the author affiliations, disclosures, or funding information, so it appears either at the front or at the very end of the article. For example, PubMed Central displays the data availability statement in a drop-down at the top of its articles:

What Should I Write in my DAS?

The table below outlines some templates. Each journal has different requirements and templates, so you should check journal author guidelines prior to submission.

This table is condensed from a webpage created by the publisher Taylor & Francis . Other useful options are available on their author services. Other publishers have their own guidance on data availability statements – for example, Wiley and Springer Nature . Publisher templates are not suitable for all situations; researchers should modify the templates to suit their paper.

Tip: Simply writing “data available upon reasonable request” is generally considered insufficient for a data availability statement. Studies have demonstrated a lack of author compliance when data is actually requested.
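The tip above can be turned into a quick self-check. This Python sketch flags two weaknesses: a statement that relies on "available on request" without a persistent identifier, and a statement with no DOI at all. The DOI pattern is the regular expression Crossref recommends for modern DOIs. The sketch does not detect accession numbers, so treat a warning as a prompt to review the statement, not as a verdict.

```python
import re

# Crossref's recommended pattern for modern DOIs (it matches most, not all, DOIs).
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[-._;()/:A-Z0-9]+", re.IGNORECASE)
# "on request", "upon request", "upon reasonable request", etc.
ON_REQUEST_PATTERN = re.compile(r"\b(?:upon|on)\s+(?:reasonable\s+)?request\b",
                                re.IGNORECASE)


def review_das(statement):
    """Return a list of warnings about common weaknesses in a DAS."""
    warnings = []
    has_doi = DOI_PATTERN.search(statement) is not None
    if ON_REQUEST_PATTERN.search(statement) and not has_doi:
        warnings.append("relies on 'available on request' with no persistent identifier")
    if not has_doi:
        warnings.append("no DOI found (accession numbers are not checked here)")
    return warnings


print(review_das("Data are available from the corresponding author "
                 "upon reasonable request."))
print(review_das("Data are archived on Zenodo "
                 "(DOI: 10.5281/zenodo.3968301)."))
```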


More Resources

- CHORUS: Publisher Data Availability Policies Index

- Memorial Sloan Kettering Library Data Policy Finder

Taylor & Francis. “Writing a Data Availability Statement.” Author Services. Accessed November 12, 2023. https://authorservices.taylorandfrancis.com/data-sharing/share-your-data/data-availability-statements/ .


A descriptive analysis of the data availability statements accompanying medRxiv preprints and a comparison with their published counterparts

Luke A. McGuinness

1 Population Health Sciences, Bristol Medical School, University of Bristol, Bristol, United Kingdom

2 MRC Integrative Epidemiology Unit at the University of Bristol, Bristol, United Kingdom

Athena L. Sheppard

3 Department of Health Sciences, University of Leicester, Leicester, United Kingdom

Associated Data

All materials (data, code and supporting information) are available from GitHub (https://github.com/mcguinlu/data-availability-impact), archived at time of submission on Zenodo (DOI: 10.5281/zenodo.3968301).

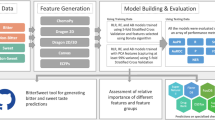

To determine whether medRxiv data availability statements describe open or closed data—that is, whether the data used in the study is openly available without restriction—and to examine if this changes on publication based on journal data-sharing policy. Additionally, to examine whether data availability statements are sufficient to capture code availability declarations.

Observational study, following a pre-registered protocol, of preprints posted on the medRxiv repository between 25th June 2019 and 1st May 2020 and their published counterparts.

Main outcome measures

Distribution of preprinted data availability statements across nine categories, determined by a prespecified classification system. Change in the percentage of data availability statements describing open data between the preprinted and published versions of the same record, stratified by journal sharing policy. Number of code availability declarations reported in the full-text preprint which were not captured in the corresponding data availability statement.

3938 medRxiv preprints with an applicable data availability statement were included in our sample, of which 911 (23.1%) were categorized as describing open data. 379 (9.6%) preprints were subsequently published, and of these published articles, only 155 contained an applicable data availability statement. Similar to the preprint stage, a minority (59 (38.1%)) of these published data availability statements described open data. Of the 151 records eligible for the comparison between preprinted and published stages, 57 (37.7%) were published in journals which mandated open data sharing. Data availability statements more frequently described open data on publication when the journal mandated data sharing (open at preprint: 33.3%, open at publication: 61.4%) compared to when the journal did not mandate data sharing (open at preprint: 20.2%, open at publication: 22.3%).

Requiring that authors submit a data availability statement is a good first step, but is insufficient to ensure data availability. Strict editorial policies that mandate data sharing (where appropriate) as a condition of publication appear to be effective in making research data available. We would strongly encourage all journal editors to examine whether their data availability policies are sufficiently stringent and consistently enforced.

1 Introduction

The sharing of data generated by a study is becoming an increasingly important aspect of scientific research [ 1 , 2 ]. Without access to the data, it is harder for other researchers to examine, verify and build on the results of that study [ 3 ]. As a result, many journals now mandate data availability statements. These are dedicated sections of research articles, which are intended to provide readers with important information about whether the data described by the study are available and if so, where they can be obtained [ 4 ].

While requiring data availability statements is an admirable first step for journals to take, and as such is viewed favorably by journal evaluation rubrics such as the Transparency and Openness Promotion [TOP] Guidelines [ 5 ], a lack of review of the contents of these statements often leads to issues. Many authors claim that their data can be made “available on request”, despite previous work establishing that these statements are demonstrably untrue in the majority of cases—that when data is requested, it is not actually made available [ 6 – 8 ]. Additionally, previous work found that the availability of data “available on request” declines with article age, indicating that this approach is not a valid long term option for data sharing [ 9 ]. This suggests that requiring data availability statements without a corresponding editorial or peer review of their contents, in line with a strictly enforced data-sharing policy, does not achieve the intended aim of making research data more openly available. However, few journals actually mandate data sharing as a condition of publication. Of a sample of 318 biomedical journals, only ~20% had a data-sharing policy that mandated data sharing [ 10 ].

Several previous studies have examined the data availability statements of published articles [ 4 , 11 – 13 ], but to date, none have examined the statements accompanying preprinted manuscripts, including those hosted on medRxiv, the preprint repository for manuscripts in the medical, clinical, and related health sciences [ 14 ]. Given that preprints, particularly those on medRxiv, have impacted the academic discourse around the recent (and ongoing) COVID-19 pandemic to a similar, if not greater, extent than published manuscripts [ 15 ], assessing whether these studies make their underlying data available without restriction (i.e. “open”), and adequately describe how to access it in their data availability statements, is worthwhile. In addition, by comparing the preprint and published versions of the data availability statements for the same paper, the potential impact of different journal data-sharing policies on data availability can be examined. This study aimed to explore the distribution of data availability statements’ description of the underlying data across a number of categories of “openness” and to assess the change between preprint and journal-published data availability statements, stratified by journal data-sharing policy. We also intended to examine whether authors planning to make the data available upon publication actually do so, and whether data availability statements are sufficient to capture code availability declarations.

2 Methods

2.1 Protocol and ethics

A protocol for this analysis was registered in advance and followed at all stages of the study [ 16 ]. Any deviations from the protocol are described. Ethical approval was not required for this study.

2.2 Data extraction

The data availability statements of preprints posted on the medRxiv preprint repository between 25th June 2019 (the date of first publication of a preprint on medRxiv) and 1st May 2020 were extracted using the medrxivr and rvest R packages [ 17 , 18 ]. Completing a data availability statement is required as part of the medRxiv submission process, and so a statement was available for all eligible preprints. Information on the journal in which preprints were subsequently published was extracted using the published DOI provided by medRxiv and rcrossref [ 19 ]. Several other R packages were used for data cleaning and analysis [ 20 – 33 ].
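The study used R packages (medrxivr, rvest and rcrossref) for this step. The Python sketch below is a rough equivalent of the journal lookup only. It queries the works endpoint of the Crossref REST API, which rcrossref also wraps, and reads the journal title from the `container-title` field of the response. The function names are hypothetical. Fetching requires network access, so the usage example parses a trimmed, illustrative response instead.

```python
import json
import urllib.parse
import urllib.request

CROSSREF_WORKS = "https://api.crossref.org/works/"


def crossref_url(doi):
    """Crossref REST API URL for one DOI (slashes kept, other characters escaped)."""
    return CROSSREF_WORKS + urllib.parse.quote(doi, safe="/")


def journal_from_crossref(payload):
    """Journal title from a Crossref /works/{doi} response, or None if absent."""
    titles = payload.get("message", {}).get("container-title", [])
    return titles[0] if titles else None


def fetch_journal(doi, timeout=30):
    """Look up the journal a DOI was published in (needs network access)."""
    with urllib.request.urlopen(crossref_url(doi), timeout=timeout) as response:
        return journal_from_crossref(json.load(response))


# Offline example with a trimmed response; real responses carry many more fields.
sample = {"status": "ok",
          "message": {"DOI": "10.1093/brain/awaa386", "container-title": ["Brain"]}}
print(crossref_url("10.1093/brain/awaa386"))
print(journal_from_crossref(sample))
```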

To extract the data availability statements for published articles and the journals’ data-sharing policies, we browsed to the article or publication website and manually copied the relevant material (where available) into an Excel file. The extracted data are available for inspection (see Material availability section).

2.3 Categorization

A pre-specified classification system was developed to categorize each data availability statement as describing either open or closed data, with additional ordered sub-categories indicating the degree of openness (see Table 1). The system was based on the “Findability” and “Accessibility” elements of the FAIR framework [ 34 ], the categories used in previous efforts to categorize published data availability statements [ 4 , 11 ], our own experience of medRxiv data availability statements, and discussion with colleagues. Illustrative examples of each category were taken from preprints included in our sample [ 35 – 43 ].


The data availability statement for each preprinted record was categorized by two independent researchers using the groups presented in Table 1, while the statements for published articles were categorized using all groups except Categories 3 and 4 (“Available in the future”). Records for which the data availability statement was categorized as “Not applicable” (Category 0 in Table 1) at either the preprint or published stage were excluded from further analyses. Researchers were provided only with the data availability statement and were therefore blind to the associated preprint metadata (e.g. title, authors, corresponding author institution), in case this could affect their assessments. Any disagreements were resolved through discussion.

Due to our large sample, if authors claimed that all data were available in the manuscript or as a S1 File , or that their study did not make use of any data, we took them at their word. Where a data availability statement met multiple categories or contained multiple data sources with varying levels of openness, we took a conservative approach and categorized it on the basis of the most restrictive aspect (see S1 File for some illustrative examples). We plotted the distribution of preprint and published data availability statements across the nine categories presented in Table 1 .
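The conservative rule for mixed statements, and the tally behind the plotted distribution, can be sketched in Python as follows. Table 1 is not reproduced here. The sketch therefore assumes that categories are integers from 0 to 8, where a lower number means a more restrictive description. The example statements are hypothetical.

```python
from collections import Counter

N_CATEGORIES = 9  # assumed numbering 0..8, lower = more restrictive


def categorize_statement(aspect_categories):
    """Conservative category for a statement covering several data sources.

    Each aspect of the statement is first given its own category; the
    statement as a whole takes the most restrictive one (the lowest number,
    under the ordering assumed here).
    """
    if not aspect_categories:
        raise ValueError("a statement needs at least one categorized aspect")
    return min(aspect_categories)


def category_distribution(statements):
    """Number of statements in each category, indexed 0..N_CATEGORIES-1."""
    counts = Counter(categorize_statement(aspects) for aspects in statements)
    return [counts.get(c, 0) for c in range(N_CATEGORIES)]


# Hypothetical statements: one single-source statement, then two mixed ones.
statements = [[8], [2, 8], [5, 7]]
print(category_distribution(statements))  # [0, 0, 1, 0, 0, 1, 0, 0, 1]
```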

Similarly, the extracted data-sharing policies were classified by two independent reviewers according to whether the journal mandated data sharing (1) or not (0). Where the journal had no obvious data sharing policy, these were classified as not mandating data sharing.

2.4 Changes between preprinted and published statements

To assess if data availability statements change between preprint and published articles, we examined whether a discrepancy existed between the categories assigned to the preprinted and published statements, and the direction of the discrepancy (“more closed” or “more open”). Records were deemed to become “more open” if their data availability statement was categorized as “closed” at the preprint stage and “open” at the published stage. Conversely, records described as “more closed” were those moving from “open” at preprint to “closed” on publication.

We declare a minor deviation from our protocol for this analysis [ 16 ]. Rather than investigating the data-sharing policy only for journals with the largest change in openness as intended, which would have involved setting an arbitrary cut-off when defining “largest change”, we systematically extracted and categorized the data-sharing policies for all journals in which preprints had subsequently been published using two categories (1: “requiring/mandating data sharing” and 2: “not requiring/mandating data sharing”), and compared the change in openness between these two categories. Note that Category 2 includes journals that encourage data sharing, but do not make it a condition of publication.
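The comparison can be sketched as follows. Each record's open/closed status at the two stages gives a direction of change, and the directions are then tallied by journal policy. This Python sketch is illustrative only. It tracks the open/closed status, not every category-level discrepancy, and the example records are hypothetical.

```python
from collections import Counter


def change_direction(open_at_preprint, open_at_publication):
    """Direction of change in openness between preprint and publication."""
    if open_at_preprint == open_at_publication:
        return "no change"
    return "more open" if open_at_publication else "more closed"


def tally_by_policy(records):
    """Count change directions per journal policy.

    `records` holds (journal_mandates_sharing, open_at_preprint,
    open_at_publication) tuples of booleans.
    """
    tally = Counter()
    for mandates, open_pre, open_pub in records:
        policy = "mandates sharing" if mandates else "does not mandate sharing"
        tally[(policy, change_direction(open_pre, open_pub))] += 1
    return tally


# Hypothetical records, for illustration only.
example = [(True, False, True), (True, True, True), (False, True, False)]
for (policy, direction), n in sorted(tally_by_policy(example).items()):
    print(f"{policy:<25} {direction:<12} {n}")
```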

To assess claims that data will be provided on publication, the data availability statements accompanying the published articles for all records in Category 3 (“Data available on publication (link provided)”) or Category 4 (“Data available on publication (no link provided)”) from Table 1 were assessed, and any difference between the two categories examined.

2.5 Code availability

Finally, to assess whether data availability statements also capture the availability of programming code, such as STATA do files or R scripts, the data availability statement and full text PDF for a random sample of 400 preprinted records were assessed for code availability (1: “code availability described” and 2: “code availability not described”).

3 Results

The data availability statements accompanying 4101 preprints registered between 25th June 2019 and 1st May 2020 were extracted from the medRxiv preprint repository on the 26th May 2020 and were coded by two independent researchers according to the categories in Table 1 . During this process, agreement between the raters was high (Cohen’s Kappa = 0.98; “almost perfect agreement”) [ 44 ].
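Cohen's kappa compares the observed agreement between two raters, p_o, with the agreement expected by chance from each rater's category frequencies, p_e: kappa = (p_o - p_e) / (1 - p_e). The Python sketch below implements this formula. It maps the result onto the Landis and Koch descriptors, in which values above 0.80 count as "almost perfect"; these are assumed to be the bands behind reference [ 44 ]. The toy ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal frequency per category.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        return 1.0  # both raters used a single, identical category throughout
    return (p_o - p_e) / (1 - p_e)


def landis_koch(kappa):
    """Descriptive label for a kappa value (Landis and Koch bands)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical ratings of four statements (category numbers) by two raters.
kappa = cohens_kappa([7, 2, 2, 5], [7, 2, 5, 5])
print(round(kappa, 2), landis_koch(kappa))
```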

Of the 4101 preprints, 163 (4.0%) in Category 0 (“Not applicable”) were excluded following coding, leaving 3938 remaining records. Of these, 911 (23.1%) had made their data open as per the criteria in Table 1 . The distribution of data availability statements across the categories can be seen in Fig 1 . A total of 379 (9.6%) preprints had been subsequently published, and of these, only 159 (42.0%) had data availability statements that we could categorize. 4 (2.5%) records in Category 0 (“Not applicable”) were excluded, and of the 155 remaining, 59 (38.1%) had made their data open as per our criteria.

For the comparison of preprinted data availability statements with their published counterparts, we excluded records that were not published, that did not have a published data availability statement or that were labeled as “Not applicable” at either the preprint or published stage, leaving 151 records (3.7% of the total sample of 4101 records).

Data availability statements more frequently described open data on publication, compared with the preprinted record, when the journal mandated data sharing (Table 2). In contrast, the data availability statements for 8 articles published in journals that did not mandate open data sharing became less open on publication. The change in openness for preprints grouped by category and stratified by journal policy is shown in S1 Table in S1 File, while the change for each individual journal included in our analysis is shown in S2 Table in S1 File.
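One way to produce a comparison of this kind is to classify each record’s change in openness and count the results by journal policy. The sketch below assumes, based on the category descriptions elsewhere in the paper, that Categories 7 (data in the manuscript or supplementary file) and 8 (linked repository) count as “open”; Table 1 remains the authoritative definition. The helper names and example records are hypothetical, and a move between two closed categories (for example, from 1 to 2) is counted as no change in open/closed status.

```python
from collections import Counter

# Assumption: Categories 7 (manuscript/supplementary file) and 8 (linked
# repository) are the "open" categories; Table 1 is the authoritative definition.
OPEN_CATEGORIES = {7, 8}

def openness_change(pre: int, post: int) -> str:
    """Classify how a statement's open/closed status changed on publication."""
    was_open, is_open = pre in OPEN_CATEGORIES, post in OPEN_CATEGORIES
    if is_open and not was_open:
        return "more open"
    if was_open and not is_open:
        return "less open"
    return "same status"

def tabulate(pairs):
    """Count openness changes per journal policy.

    Each item is (journal_mandates_sharing, preprint_category, published_category).
    """
    table = Counter()
    for mandates, pre, post in pairs:
        policy = "mandated" if mandates else "not mandated"
        table[(policy, openness_change(pre, post))] += 1
    return table

# Hypothetical records, not data from this study.
example = [(True, 2, 8), (True, 1, 7), (True, 5, 5), (False, 8, 2), (False, 2, 2)]
for (policy, change), n in sorted(tabulate(example).items()):
    print(policy, change, n)
```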

Interestingly, 22 records published in a journal mandating open data sharing did not have an open data availability statement. The majority of these records described data that was available from a central access-controlled repository (Category 5 or 6), while others cited legal restrictions as the reason for not sharing data. However, in some cases, data was either insufficiently described or only available on request (S3 Table in S1 File), indicating that journal policies which mandate data sharing may not always be applied consistently, allowing some records to slip through the gaps.

In total, 161 (4.1%) preprints stated that data would be available on publication, but only 10 of these had subsequently been published (Table 3). The number describing open data on publication did not seem to vary based on whether the preprinted data availability statement included a link to an embargoed repository, though the sample size is small.

Of the 400 records for which code availability was assessed, 75 mentioned code availability in the preprinted full-text manuscript. However, only 22 (29.3%) of these also described code availability in the corresponding data availability statement (S4 Table in S1 File ).

4 Discussion

4.1 Principal findings and comparison with other studies

We have reviewed 4101 preprinted and 159 published data availability statements, coding them as “open” or “closed” according to a predefined classification system. During this labor-intensive process, we appreciated statements that reflected the authors’ enthusiasm for data sharing (“YES”) [ 45 ], their bluntness (“Data is not available on request.”) [ 46 ], and their efforts to endear themselves to the reader (“I promise all data referred to in the manuscript are available.”) [ 47 ]. Of the preprinted statements, more than three-quarters were categorized as “closed”, with the largest individual category being “available on request”. In light of the substantial impact that studies published as preprints on medRxiv have had on real-time decision making during the current COVID-19 pandemic [ 15 ], it is concerning that data for these preprints is so infrequently readily available for inspection.

A minority of published records we examined contained a data availability statement (n = 159 (42.0%)). The absence of a statement at publication results in a loss of useful information: for at least one published article, we identified relevant information in the preprinted statement that did not appear anywhere in the published article, because the published version lacked a data availability statement [ 48 , 49 ].

We provide initial descriptive evidence that strict data-sharing policies, which mandate that data be made openly available (where appropriate) as a condition of publication, appear to succeed in making research data more open than those that do not. Our findings, though based on a relatively small number of observations, agree with other studies on the effect of journal policies on author behavior. Recent work has shown that “requiring” a data availability statement was effective in ensuring that this element was completed [ 4 ], while “encouraging” authors to follow a reporting checklist (the ARRIVE checklist) had no effect on compliance [ 50 , 51 ].

Finally, we also provide evidence that data availability statements alone are insufficient to capture code availability declarations. Even when researchers wish to share their code, as evidenced by a description of code availability in the main paper, they frequently do not include this information in the data availability statement. Code sharing has been advocated strongly elsewhere [ 52 – 54 ], as it provides an insight into the analytic decisions made by the research team, and there are few, if any, circumstances in which it is not possible to share the analytic code underpinning an analysis. Similar to data availability statements, a dedicated code availability statement which is critically assessed against a clear code-sharing policy as part of the editorial and peer review processes will help researchers to appraise published results.

4.2 Strengths and limitations

A particular strength of this analysis is that the design allows us to compare what is essentially the same paper (same design, findings and authorship team) under two different data-sharing policies, and assess the change in the openness of the statement between them. To our knowledge this is the first study to use this approach to examine the potential impact of journal editorial policies. This approach also allows us to address the issue of self-selection: when looking at published articles alone, it is not possible to tell whether authors always intended to make their data available and chose a given journal due to its reputation for data sharing. In addition, we have examined all available preprints within our study period and all corresponding published articles, rather than taking a sub-sample. Finally, categorization of the statements was carried out by two independent researchers using predefined categories, reducing the risk of misclassification.

However, our analysis is subject to a number of potential limitations. The primary one is that manuscripts (at both the preprint and published stages) may have included links to the data, or more information that uniquely identifies the dataset from a data portal, within the text (for example, in the Methods section). While this might be the case, if readers are expected to piece together the relevant information from different locations in the manuscript, it calls into question what having a dedicated data availability statement adds. A second limitation is that we do not assess the veracity of any data availability statements, which may introduce some misclassification bias into our categorization. For example, we do not check whether all relevant data can actually be found in the manuscript/S1 File (Category 7) or the linked repository (Category 8), meaning our results provide a conservative estimate of the scale of the issue, as previous work has suggested that this is unlikely to be the case [ 12 ]. A further consideration is that for Categories 1 (“No data available”) and 2 (“Available on request”), there will be situations where making research data available is not feasible, for example, due to cost or concerns about patient re-identifiability [ 55 , 56 ]. This situation is perfectly reasonable, as long as statements are explicit in justifying the lack of open data.

4.3 Implications for policy

Data availability statements are an important tool in the fight to make studies more reproducible. However, without critical review of these statements in line with strict data-sharing policies, authors default to not sharing their data or making it “available on request”. Based on our analysis, there is a greater change towards describing open data between preprinted and published data availability statements in journals that mandate data sharing as a condition of publication. This would suggest that data sharing could be immediately improved by journals becoming more stringent in their data availability policies. Similarly, introduction of a related code availability section (or composite “material” availability section) will aid in reproducibility by capturing whether analytic code is available in a standardized manuscript section.

It would be unfair to expect all editors and reviewers to be able to effectively review the code and data provided with a submission. As proposed elsewhere [ 57 ], a possible solution is to assign an editor or reviewer whose sole responsibility in the review process is to examine the data and code provided. They would also be responsible for judging, when data and code are absent, whether the argument presented by the authors for not sharing these materials is valid.

However, while this study focuses primarily on the role of journals, some responsibility for enacting change rests with the research community at large. If researchers regularly shared their data, strict journal data-sharing policies would not be needed. As such, we would encourage authors to consider sharing the data underlying future publications, regardless of whether the journal actually mandates it.

5 Conclusion

Requiring that authors submit a data availability statement is a good first step, but is insufficient to ensure data availability, as our work shows that authors most commonly use them to state that data is only available on request. However, strict editorial policies that mandate data sharing (where appropriate) as a condition of publication appear to be effective in making research data available. In addition to the introduction of a dedicated code availability statement, a move towards mandated data sharing will help to ensure that future research is readily reproducible. We would strongly encourage all journal editors to examine whether their data availability policies are sufficiently stringent and consistently enforced.

Supporting information

Acknowledgments

We must acknowledge the input of several people, without whom the quality of this work would have been diminished: Matthew Grainger and Neal Haddaway for their insightful comments on the subject of data availability statements; Phil Gooch and Sarah Nevitt for their skill in identifying missing published papers based on the vaguest of descriptions; Antica Culina, Julian Higgins and Alfredo Sánchez-Tójar for their comments on the preprinted version of this article; and Ciara Gardiner, for proof-reading this manuscript.

Funding Statement

LAM is supported by a National Institute for Health Research (NIHR; https://www.nihr.ac.uk/ ) Doctoral Research Fellowship (DRF-2018-11-ST2-048). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. The views expressed in this article are those of the authors and do not necessarily represent those of the NHS, the NIHR, MRC, or the Department of Health and Social Care.

Data Availability

- PLoS One. 2021; 16(5): e0250887.

Decision Letter 0

PONE-D-20-29718

Dear Dr. McGuinness,

Thank you for submitting your manuscript to PLOS ONE. After careful consideration, we feel that it has merit but does not fully meet PLOS ONE’s publication criteria as it currently stands. Therefore, we invite you to submit a revised version of the manuscript that addresses the points raised during the review process.

The reviewers suggested minor revisions. Please review them carefully.

Please submit your revised manuscript by Mar 20 2021 11:59PM. If you will need more time than this to complete your revisions, please reply to this message or contact the journal office at plosone@plos.org. When you're ready to submit your revision, log on to https://www.editorialmanager.com/pone/ and select the 'Submissions Needing Revision' folder to locate your manuscript file.

Please include the following items when submitting your revised manuscript:

- A rebuttal letter that responds to each point raised by the academic editor and reviewer(s). You should upload this letter as a separate file labeled 'Response to Reviewers'.

- A marked-up copy of your manuscript that highlights changes made to the original version. You should upload this as a separate file labeled 'Revised Manuscript with Track Changes'.

- An unmarked version of your revised paper without tracked changes. You should upload this as a separate file labeled 'Manuscript'.

If you would like to make changes to your financial disclosure, please include your updated statement in your cover letter. Guidelines for resubmitting your figure files are available below the reviewer comments at the end of this letter.

If applicable, we recommend that you deposit your laboratory protocols in protocols.io to enhance the reproducibility of your results. Protocols.io assigns your protocol its own identifier (DOI) so that it can be cited independently in the future. For instructions see: http://journals.plos.org/plosone/s/submission-guidelines#loc-laboratory-protocols

We look forward to receiving your revised manuscript.

Kind regards,

Rafael Sarkis-Onofre

Academic Editor

Journal Requirements:

When submitting your revision, we need you to address these additional requirements.

1. Please ensure that your manuscript meets PLOS ONE's style requirements, including those for file naming. The PLOS ONE style templates can be found at

https://journals.plos.org/plosone/s/file?id=wjVg/PLOSOne_formatting_sample_main_body.pdf and

https://journals.plos.org/plosone/s/file?id=ba62/PLOSOne_formatting_sample_title_authors_affiliations.pdf