How to write a hypothesis for marketing experimentation

Creating your strongest marketing hypothesis

The potential for your marketing improvement depends on the strength of your testing hypotheses.

But where are you getting your test ideas from? Have you been scouring competitor sites, or perhaps pulling from previous designs on your site? The web is full of ideas and you’re full of ideas – there is no shortage of inspiration, that’s for sure.

Coming up with something you want to test isn’t hard to do. Coming up with something you should test can be hard to do.

Hard – yes. Impossible? No. Which is good news, because if you can’t create hypotheses for things that should be tested, your test results won’t mean much, and you probably shouldn’t be spending your time testing.

Taking the time to write your hypotheses correctly will help you structure your ideas, get better results, and avoid wasting traffic on poor test designs.

With this post, we’re getting advanced with marketing hypotheses, showing you how to write and structure your hypotheses to gain both business results and marketing insights!

By the time you finish reading, you’ll be able to:

- Distinguish a solid hypothesis from a time-waster, and

- Structure your solid hypothesis to get results and insights

To make this whole experience a bit more tangible, let’s track a sample idea from…well…idea to hypothesis.

Let’s say you identified a call-to-action (CTA)* while browsing the web, and you were inspired to test something similar on your own lead generation landing page. You think it might work for your users! Your idea is:

“My page needs a new CTA.”

*A call-to-action is the point where you, as a marketer, ask your prospect to do something on your page. It often includes a button or link to an action like “Buy”, “Sign up”, or “Request a quote”.

The basics: The correct marketing hypothesis format

A well-structured hypothesis provides insights whether it is proved, disproved, or results are inconclusive.

You should never phrase a marketing hypothesis as a question. It should be written as a statement that can be rejected or confirmed.

Further, it should be a statement geared toward revealing insights – with this in mind, it helps to imagine each statement followed by a reason:

- Changing _______ into ______ will increase [conversion goal], because:

- Changing _______ into ______ will decrease [conversion goal], because:

- Changing _______ into ______ will not affect [conversion goal], because:

Each of the above sentences ends with ‘because’ to set the expectation that there will be an explanation behind the results of whatever you’re testing.
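As a rough illustration, the template above can be sketched as a small Python helper. The function name `write_hypothesis`, the `Direction` enum, and the example values are assumptions made for this sketch, not part of any tool mentioned in the post. The helper refuses to produce a statement without a reason, which mirrors the "because" rule.

```python
from enum import Enum


class Direction(Enum):
    """The three outcomes the template allows (hypothetical naming)."""
    INCREASE = "increase"
    DECREASE = "decrease"
    NO_EFFECT = "not affect"


def write_hypothesis(current, proposed, goal, direction, reason):
    """Fill in the 'Changing ___ into ___ will ... because' template."""
    if not reason.strip():
        # A statement without a reason cannot produce an insight.
        raise ValueError("A hypothesis needs a 'because': state the expected reason.")
    return (f"Changing {current} into {proposed} will "
            f"{direction.value} {goal}, because {reason}.")


statement = write_hypothesis(
    current='the CTA copy "Submit"',
    proposed='"Send demo request"',
    goal="form submissions",
    direction=Direction.INCREASE,
    reason="users want to know what happens to their information",
)
print(statement)
```

Writing the reason down before the test runs makes it easier to compare what you expected with what actually happened.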

It’s important to remember to plan ahead when you create a test, and think about explaining why the test turned out the way it did when the results come in.

Level up: Moving from a good to great hypothesis

Understanding what makes an idea worth testing is necessary for your optimization team.

If your tests are based on random ideas you googled or were suggested by a consultant, your testing process still has its training wheels on. Great hypotheses aren’t random. They’re based on rationale and aim for learning.

Hypotheses should be based on themes and analysis that show potential conversion barriers.

At Conversion, we call this investigation phase the “Explore Phase”, where we use frameworks like the LIFT Model to understand the prospect’s unique perspective.

A well-founded marketing hypothesis should also provide you with new, testable clues about your users regardless of whether or not the test wins, loses or yields inconclusive results.

These new insights should inform future testing: a solid hypothesis can help you quickly separate worthwhile ideas from the rest when planning follow-up tests.

“Ultimately, what matters most is that you have a hypothesis going into each experiment and you design each experiment to address that hypothesis.” – Nick So, VP of Delivery

Here’s a quick tip:

If you’re about to run a test that isn’t going to tell you anything new about your users and their motivations, it’s probably not worth investing your time in.

Let’s take this opportunity to refer back to your original idea:

“My page needs a new CTA.”

Ok, but what now? To get actionable insights from ‘a new CTA’, you need to know why it behaved the way it did. You need to ask the right question.

To test the waters, maybe you changed the copy of the CTA button on your lead generation form from “Submit” to “Send demo request”. If this change leads to an increase in conversions, it could mean that your users require more clarity about what their information is being used for.

That’s a potential insight.

Based on this insight, you could follow up with another test that adds copy around the CTA about next steps: what the user should anticipate after they have submitted their information.

For example, will they be speaking to a specialist via email? Will something be waiting for them the next time they visit your site? You can test providing more information, and see if your users are interested in knowing it!

That’s the cool thing about a good hypothesis: the results of the test, while important (of course) aren’t the only component driving your future test ideas. The insights gleaned lead to further hypotheses and insights in a virtuous cycle.

It’s based on a science

The term “hypothesis” probably isn’t foreign to you. In fact, it may bring up memories of grade-school science class; it’s a critical part of the scientific method.

The scientific method in testing follows a systematic routine that sets ideation up to predict the results of experiments via:

- Collecting data and information through observation

- Creating tentative descriptions of what is being observed

- Forming hypotheses that predict different outcomes based on these observations

- Testing your hypotheses

- Analyzing the data, drawing conclusions and insights from the results

Don’t worry! Hypothesizing may seem ‘sciency’, but it doesn’t have to be complicated in practice.

Hypothesizing simply helps ensure the results from your tests are quantifiable, and is necessary if you want to understand how the results reflect the change made in your test.

A strong marketing hypothesis allows testers to use a structured approach in order to discover what works, why it works, how it works, where it works, and who it works on.

“My page needs a new CTA.” Is this idea in its current state clear enough to help you understand what works? Maybe. Why it works? No. Where it works? Maybe. Who it works on? No.

Your idea needs refining.

Let’s pull back and take a broader look at the lead generation landing page we want to test.

Imagine the situation: you’ve been diligent in your data collection and you notice several recurrences of Clarity pain points – meaning that there are many unclear instances throughout the page’s messaging.

Rather than focusing on the CTA right off the bat, it may be more beneficial to deal with the bigger clarity issue.

Now you’re starting to think about solving your prospects’ conversion barriers rather than just testing random ideas!

If you believe the overall page is unclear, your overarching theme of inquiry might be positioned as:

- “Improving the clarity of the page will reduce confusion and improve [conversion goal].”

By testing a hypothesis that supports this clarity theme, you can gain confidence in the validity of it as an actionable marketing insight over time.

If the test results are negative: It may not be worth investigating this motivational barrier any further on this page. In this case, you could return to the data and look at the other motivational barriers that might be affecting user behavior.

If the test results are positive: You might want to continue to refine the clarity of the page’s message with further testing.

Typically, a test will start with a broad idea — you identify the changes to make, predict how those changes will impact your conversion goal, and write it out as a broad theme as shown above. Then, repeated tests aimed at that theme will confirm or undermine the strength of the underlying insight.

Building marketing hypotheses to create insights

You believe you’ve identified an overall problem on your landing page (there’s a problem with clarity). Now you want to understand how individual elements contribute to the problem, and the effect these individual elements have on your users.

It’s game time – now you can start designing a hypothesis that will generate insights.

You believe your users need more clarity. You’re ready to dig deeper to find out if that’s true!

If a specific question needs answering, you should structure your test to make a single change. This isolation might ask: “What element are users most sensitive to when it comes to the lack of clarity?” and “What changes do I believe will support increasing clarity?”

At this point, you’ll want to boil down your overarching theme…

- Improving the clarity of the page will reduce confusion and improve [conversion goal].

…into a quantifiable hypothesis that isolates key sections:

- Changing the wording of this CTA to set expectations for users (from “submit” to “send demo request”) will reduce confusion about the next steps in the funnel and improve order completions.

Does this answer what works? Yes: changing the wording on your CTA.

Does this answer why it works? Yes: reducing confusion about the next steps in the funnel.

Does this answer where it works? Yes: on this page, before the user enters this theoretical funnel.

Does this answer who it works on? No, this question demands another isolation. You might structure your hypothesis more like this:

- Changing the wording of the CTA to set expectations for users (from “submit” to “send demo request”) will reduce confusion for visitors coming from my email campaign about the next steps in the funnel and improve order completions.

Now we’ve got a clear hypothesis. And one worth testing!
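The four questions (what, why, where, who) work as a checklist, and a minimal Python sketch makes that concrete. The class `HypothesisDraft` and its field names are hypothetical, chosen only to mirror the questions in this post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HypothesisDraft:
    what: Optional[str] = None   # the change being made
    why: Optional[str] = None    # the expected reason it works
    where: Optional[str] = None  # the page or funnel step
    who: Optional[str] = None    # the audience segment

    def unanswered(self):
        """Return the questions this draft still leaves open."""
        return [name for name in ("what", "why", "where", "who")
                if not getattr(self, name)]


idea = HypothesisDraft(what="a new CTA")
refined = HypothesisDraft(
    what='CTA wording from "submit" to "send demo request"',
    why="reduces confusion about the next steps in the funnel",
    where="lead generation landing page",
    who="visitors coming from the email campaign",
)
print(idea.unanswered())     # ['why', 'where', 'who']
print(refined.unanswered())  # []
```

An empty list does not make a hypothesis true. It only means the test is set up to teach you something whichever way it turns out.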

What makes a great hypothesis?

1. It’s testable.

2. It addresses conversion barriers.

3. It aims at gaining marketing insights.

Let’s compare:

The original idea: “My page needs a new CTA.”

Following the hypothesis structure: “A new CTA on my page will increase [conversion goal]”

The first test implied a problem with clarity, which provides a potential theme: “Improving the clarity of the page will reduce confusion and improve [conversion goal].”

The potential clarity theme leads to a new hypothesis: “Changing the wording of the CTA to set expectations for users (from “submit” to “send demo request”) will reduce confusion about the next steps in the funnel and improve order completions.”

Final refined hypothesis: “Changing the wording of the CTA to set expectations for users (from “submit” to “send demo request”) will reduce confusion for visitors coming from my email campaign about the next steps in the funnel and improve order completions.”

Which test would you rather your team invest in?

Before you start your next test, take the time to do a proper analysis of the page you want to focus on. Do preliminary testing to define bigger issues, and use that information to refine and pinpoint your marketing hypothesis to give you forward-looking insights.

Doing this will help you avoid time-wasting tests, and enable you to start getting some insights for your team to keep testing!


Business Insights

Harvard Business School Online's Business Insights Blog provides the career insights you need to achieve your goals and gain confidence in your business skills.


A Beginner’s Guide to Hypothesis Testing in Business

- 30 Mar 2021

Becoming a more data-driven decision-maker can bring several benefits to your organization, enabling you to identify new opportunities to pursue and threats to abate. Rather than allowing subjective thinking to guide your business strategy, backing your decisions with data can empower your company to become more innovative and, ultimately, profitable.

If you’re new to data-driven decision-making, you might be wondering how data translates into business strategy. The answer lies in generating a hypothesis and verifying or rejecting it based on what various forms of data tell you.

Below is a look at hypothesis testing and the role it plays in helping businesses become more data-driven.


What Is Hypothesis Testing?

To understand what hypothesis testing is, it’s important first to understand what a hypothesis is.

A hypothesis or hypothesis statement seeks to explain why something has happened, or what might happen, under certain conditions. It can also be used to understand how different variables relate to each other. Hypotheses are often written as if-then statements; for example, “If this happens, then this will happen.”

Hypothesis testing, then, is a statistical means of testing an assumption stated in a hypothesis. While the specific methodology leveraged depends on the nature of the hypothesis and data available, hypothesis testing typically uses sample data to extrapolate insights about a larger population.

Hypothesis Testing in Business

When it comes to data-driven decision-making, there’s a certain amount of risk that can mislead a professional. This could be due to flawed thinking or observations, incomplete or inaccurate data, or the presence of unknown variables. The danger in this is that, if major strategic decisions are made based on flawed insights, it can lead to wasted resources, missed opportunities, and catastrophic outcomes.

The real value of hypothesis testing in business is that it allows professionals to test their theories and assumptions before putting them into action. This essentially allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.

As one example, consider a company that wishes to launch a new marketing campaign to revitalize sales during a slow period. Doing so could be an incredibly expensive endeavor, depending on the campaign’s size and complexity. The company, therefore, may wish to test the campaign on a smaller scale to understand how it will perform.

In this example, the hypothesis that’s being tested would fall along the lines of: “If the company launches a new marketing campaign, then it will translate into an increase in sales.” It may even be possible to quantify how much of a lift in sales the company expects to see from the effort. Pending the results of the pilot campaign, the business would then know whether it makes sense to roll it out more broadly.


Key Considerations for Hypothesis Testing

1. Alternative Hypothesis and Null Hypothesis

In hypothesis testing, the hypothesis that’s being tested is known as the alternative hypothesis. Often, it’s expressed as a correlation or statistical relationship between variables. The null hypothesis, on the other hand, is a statement that’s meant to show there’s no statistical relationship between the variables being tested. It’s typically the exact opposite of whatever is stated in the alternative hypothesis.

For example, consider a company’s leadership team that historically and reliably sees $12 million in monthly revenue. They want to understand if reducing the price of their services will attract more customers and, in turn, increase revenue.

In this case, the alternative hypothesis may take the form of a statement such as: “If we reduce the price of our flagship service by five percent, then we’ll see an increase in sales and realize revenues greater than $12 million in the next month.”

The null hypothesis, on the other hand, would indicate that revenues wouldn’t increase from the base of $12 million, or might even decrease.
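In notation, the pair of statements from this example could be written as follows. The symbol \mu is an assumption introduced here: it stands for the expected monthly revenue, in millions of dollars, after the price reduction.

```latex
H_0:\ \mu \le 12 \qquad \text{versus} \qquad H_1:\ \mu > 12
```

The two statements cover every possible outcome between them and cannot both be true, which is what lets a test reject one in favor of the other.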


2. Significance Level and P-Value

Statistically speaking, if you were to run the same scenario 100 times, you’d likely receive somewhat different results each time. If you were to plot these results in a distribution plot, you’d see the most likely outcome is at the tallest point in the graph, with less likely outcomes falling to the right and left of that point.

With this in mind, imagine you’ve completed your hypothesis test and have your results, which indicate there may be a correlation between the variables you were testing. To understand your results' significance, you’ll need to identify a p-value for the test, which indicates how likely results like yours would be if chance alone were at work.

In statistics, the p-value is the probability that, assuming the null hypothesis is correct, you would still observe results at least as extreme as the results of your hypothesis test. The smaller the p-value, the stronger the evidence against the null hypothesis. You compare it with a significance level chosen before the test (0.05 is a common choice): when the p-value falls below that level, the result is called statistically significant.
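To make the p-value less abstract, here is a minimal sketch of one common way to compute it for an A/B test: a pooled two-proportion z-test using only Python's standard library. The visitor and conversion counts are invented for illustration, and the function name `two_proportion_p_value` is hypothetical. Other tests may suit your data better.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for H0: both variants share one conversion rate."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Under H0 the best estimate of the shared rate pools both groups.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Probability of a z statistic at least this extreme in either direction.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 200 of 5,000 control visitors convert,
# versus 250 of 5,000 treatment visitors.
z, p = two_proportion_p_value(200, 5000, 250, 5000)
alpha = 0.05  # significance level, chosen before the test
print(f"z = {z:.2f}, p = {p:.4f}, reject H0 at {alpha}: {p < alpha}")
```

With these made-up numbers the p-value falls below 0.05, so the null hypothesis of "no difference" would be rejected at that level.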

3. One-Sided vs. Two-Sided Testing

When it’s time to test your hypothesis, it’s important to leverage the correct testing method. The two most common hypothesis testing methods are one-sided and two-sided tests, or one-tailed and two-tailed tests, respectively.

Typically, you’d leverage a one-sided test when you have a strong conviction about the direction of change you expect to see due to your hypothesis test. You’d leverage a two-sided test when you’re less confident in the direction of change.
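The practical difference shows up in the p-value. The sketch below assumes a z statistic has already been computed (the value 1.8 is hypothetical) and converts it into both kinds of p-value. The function name `p_values_from_z` is made up for this example.

```python
from statistics import NormalDist


def p_values_from_z(z):
    """Return (one-sided, two-sided) p-values for a z statistic.

    The one-sided value tests for an increase only (H1: treatment > control).
    """
    normal = NormalDist()
    one_sided = 1 - normal.cdf(z)
    two_sided = 2 * (1 - normal.cdf(abs(z)))
    return one_sided, two_sided


one_sided, two_sided = p_values_from_z(1.8)  # hypothetical z statistic
print(f"one-sided: {one_sided:.3f}, two-sided: {two_sided:.3f}")
```

Here the same data clears a 0.05 significance level under the one-sided test but not under the two-sided one. For that reason the choice between them should be made before looking at the results.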

4. Sampling

To perform hypothesis testing in the first place, you need to collect a sample of data to be analyzed. Depending on the question you’re seeking to answer or investigate, you might collect samples through surveys, observational studies, or experiments.

A survey involves asking a series of questions to a random population sample and recording self-reported responses.

Observational studies involve a researcher observing a sample population and collecting data as it occurs naturally, without intervention.

Finally, an experiment involves dividing a sample into multiple groups, one of which acts as the control group. For each non-control group, the variable being studied is manipulated to determine how the data collected differs from that of the control group.
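In online experiments, dividing a sample into groups is often done by hashing a visitor identifier. A minimal sketch follows, assuming integer user IDs and a hypothetical experiment name. The function `assign_variant` is illustrative and not taken from any specific testing platform.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "treatment")):
    """Deterministically assign a user to one variant, roughly evenly."""
    # Hashing the experiment name with the ID gives each experiment
    # its own independent split of the same users.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


groups = {"control": 0, "treatment": 0}
for user_id in range(10_000):
    groups[assign_variant(user_id, "cta-copy-test")] += 1
print(groups)
```

Because the assignment depends only on the ID and the experiment name, a returning visitor always sees the same variant, which keeps the control group clean.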

Learn How to Perform Hypothesis Testing

Hypothesis testing is a complex process involving different moving pieces that can allow an organization to effectively leverage its data and inform strategic decisions.

If you’re interested in better understanding hypothesis testing and the role it can play within your organization, one option is to complete a course that focuses on the process. Doing so can lay the statistical and analytical foundation you need to succeed.


A/B Testing in Digital Marketing: Example of four-step hypothesis framework

by Daniel Burstein, Senior Director, Content & Marketing, MarketingSherpa and MECLABS Institute

This article was originally published in the MarketingSherpa email newsletter.

If you are a marketing expert — whether in a brand’s marketing department or at an advertising agency — you may feel the need to be absolutely sure in an unsure world.

What should the headline be? What images should we use? Is this strategy correct? Will customers value this promo?

This is the stuff you’re paid to know. So you may feel like you must boldly proclaim your confident opinion.

But you can’t predict the future with 100% accuracy. You can’t know with absolute certainty how humans will behave. And let’s face it, even as marketing experts we’re occasionally wrong.

It’s not bad, it’s healthy. And the most effective way to overcome that doubt is by testing our marketing creative to see what really works.

Developing a hypothesis

After we published Value Sequencing: A step-by-step examination of a landing page that generated 638% more conversions, a MarketingSherpa reader emailed us and asked …

Great stuff Daniel. Much appreciated. I can see you addressing all the issues there.

I thought I saw one more opportunity to expand on what you made. Would you consider adding the IF, BY, WILL, BECAUSE to the control/treatment sections so we can see what psychology you were addressing so we know how to create the hypothesis to learn from what the customer is currently doing and why and then form a test to address that? The video today on customer theory was great (Editor’s Note: Part of the MarketingExperiments YouTube Live series). I think there is a way to incorporate that customer theory thinking into this article to take it even further.

Developing a hypothesis is an essential part of marketing experimentation. Qualitative-based research should inform hypotheses that you test with real-world behavior.

The hypotheses help you discover how accurate those insights from qualitative research are. If you engage in hypothesis-driven testing, then you ensure your tests are strategic (not just based on a random idea) and built in a way that enables you to learn more and more about the customer with each test.

And that methodology will ultimately lead to greater and greater lifts over time, instead of a scattershot approach where sometimes you get a lift and sometimes you don’t, but you never really know why.

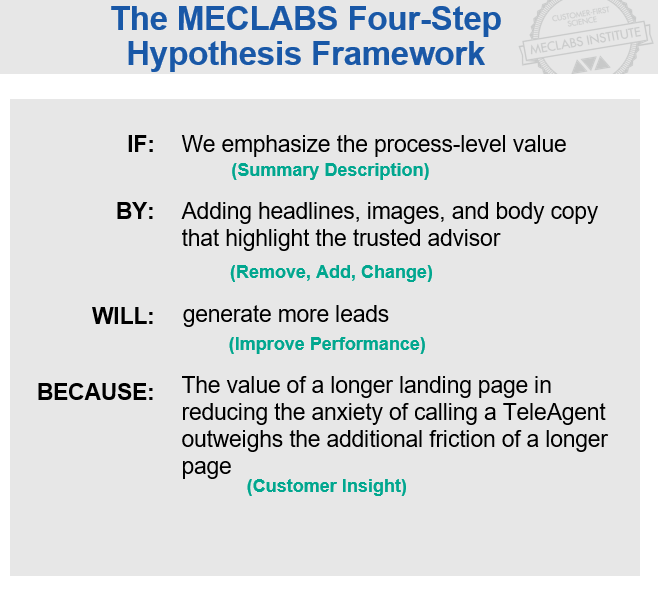

Here is a handy tool to help you in developing hypotheses — the MECLABS Four-Step Hypothesis Framework.

As the reader suggests, I will use the landing page test referenced in the previous article as an example. (Please note: While the experiment in that article was created with a hypothesis-driven approach, this specific four-step framework is fairly new and was not in common use by the MECLABS team at that time, so I have created this specific example after the test was developed based on what I see in the test).

Here is what the hypothesis would look like for that test, and then we’ll break down each part individually:

If we emphasize the process-level value by adding headlines, images and body copy, we will generate more leads because the value of a longer landing page in reducing the anxiety of calling a TeleAgent outweighs the additional friction of a longer page.

IF: Summary description

The hypothesis begins with an overall statement about what you are trying to do in the experiment. In this case, the experiment is trying to emphasize the process-level value proposition (one of the four essential levels of value proposition) of having a phone call with a TeleAgent.

The control landing page was emphasizing the primary value proposition of the brand itself.

The treatment landing page is essentially trying to answer this value proposition question: If I am your ideal customer, why should I call a TeleAgent rather than take any other action to learn more about my Medicare options?

The control landing page was asking a much bigger question that customers weren’t ready to say “yes” to yet, and it was overlooking the anxiety inherent in getting on a phone call with someone who might try to sell you something: If I am your ideal customer, why should I buy from your company instead of any other company?

This step answers WHAT you are trying to do.

BY: Remove, add, change

The next step answers HOW you are going to do it.

As Flint McGlaughlin, CEO and Managing Director of MECLABS Institute, teaches, there are only three ways to improve performance: removing, adding or changing.

In this case, the team focused mostly on adding — adding headlines, images and body copy that highlighted the TeleAgents as trusted advisors.

“Adding” can be counterintuitive for many marketers. The team’s original landing page was short. Conventional wisdom says customers won’t read long landing pages. When I’m presenting to a group of marketers, I’ll put a short and long landing page on a slide and ask which page they think achieved better results.

Invariably I will hear, “Oh, the shorter page. I would never read something that long.”

That first-person statement is a mistake. Your marketing creative should not be based on “I” — the marketer. It should be based on “they” — the customer.

Most importantly, you need to focus on the customer at a specific point in time: when he or she is considering an action such as purchasing a product, or needs more information before deciding to download a whitepaper. And sometimes in these situations, longer landing pages perform better.

In the case of this landing page, even the customer may not necessarily favor a long landing page all the time. But in the real-world situation when they are considering whether to call a TeleAgent or not, the added value helps more customers decide to take the action.

WILL: Improve performance

This is your KPI (key performance indicator). This step answers another HOW question: How do you know your hypothesis has been supported or refuted?

You can choose secondary metrics to monitor during your test as well. This might help you interpret the customer behavior observed in the test.

But ultimately, the hypothesis should rest on a single metric.

For this test, the goal was to generate more leads. And the treatment did — 638% more leads.

BECAUSE: Customer insight

This last step answers a WHY question — why did the customers act this way?

This helps you determine what you can learn about customers based on the actions observed in the experiment.

This is ultimately why you test. To learn about the customer and continually refine your company’s customer theory.

In this case, the team theorized that the value of a longer landing page in reducing the anxiety of calling a TeleAgent outweighs the additional friction of a longer landing page.

And the test results support that hypothesis.
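The four parts of the framework can also be kept as separate fields, so that each one is filled in deliberately. This is a sketch only: the class `FourStepHypothesis` and its field names are hypothetical and are not a MECLABS tool. The example values come from the hypothesis quoted earlier in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FourStepHypothesis:
    if_summary: str       # IF: what you are trying to do
    by_changes: str       # BY: how (remove, add, change)
    will_metric: str      # WILL: the single KPI the hypothesis rests on
    because_insight: str  # BECAUSE: the customer insight being tested

    def statement(self):
        """Assemble the four parts into one hypothesis sentence."""
        return (f"If we {self.if_summary} by {self.by_changes}, "
                f"we will {self.will_metric} because {self.because_insight}.")


hypothesis = FourStepHypothesis(
    if_summary="emphasize the process-level value",
    by_changes="adding headlines, images and body copy",
    will_metric="generate more leads",
    because_insight=("the value of a longer landing page in reducing the anxiety "
                     "of calling a TeleAgent outweighs the additional friction "
                     "of a longer page"),
)
print(hypothesis.statement())
```

Keeping the KPI in its own field is a small reminder that the hypothesis should rest on a single metric.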



11 A/B Testing Examples From Real Businesses

Published: April 21, 2023

Whether you're looking to increase revenue, sign-ups, social shares, or engagement, A/B testing and optimization can help you get there.

But for many marketers out there, the tough part about A/B testing is often finding the right test to drive the biggest impact — especially when you're just getting started. So, what's the recipe for high-impact success?

Truthfully, there is no one-size-fits-all recipe. What works for one business won't work for another — and finding the right metrics and timing to test can be a tough problem to solve. That’s why you need inspiration from A/B testing examples.

In this post, let's review how a hypothesis will get you started with your testing, and check out excellent examples from real businesses using A/B testing. While the same tests may not get you the same results, they can help you run creative tests of your own. And before you check out these examples, be sure to review key concepts of A/B testing.

A/B Testing Hypothesis Examples

A hypothesis can make or break your experiment, especially when it comes to A/B testing. When creating your hypothesis, you want to make sure that it’s:

- Focused on one specific problem you want to solve or understand

- Able to be proven or disproven

- Focused on making an impact (bringing higher conversion rates, lower bounce rate, etc.)

When creating a hypothesis, following the "If, then" structure can be helpful, where if you changed a specific variable, then a particular result would happen.

Here are some examples of what that would look like in an A/B testing hypothesis:

- Shortening contact submission forms to only contain required fields would increase the number of sign-ups.

- Changing the call-to-action text from "Download now" to "Download this free guide" would increase the number of downloads.

- Reducing the frequency of mobile app notifications from five times per day to two times per day will increase mobile app retention rates.

- Using featured images that are more contextually related to our blog posts will contribute to a lower bounce rate.

- Greeting customers by name in emails will increase the total number of clicks.

Let’s go over some real-life examples of A/B testing to prepare you for your own.

A/B Testing Examples

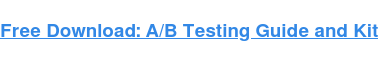

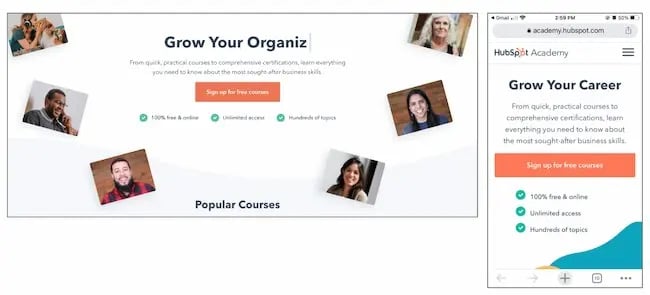

Website A/B Testing Examples

1. HubSpot Academy's Homepage Hero Image

Most websites have a homepage hero image that inspires users to engage and spend more time on the site. This A/B testing example shows how hero image changes can impact user behavior and conversions.

Based on previous data, HubSpot Academy found that out of more than 55,000 page views, only 0.9% of those users were watching the video on the homepage. Of those viewers, almost 50% watched the full video.

Chat transcripts also highlighted the need for clearer messaging for this useful and free resource.

That's why the HubSpot team decided to test how clear value propositions could improve user engagement and delight.

A/B Test Method

HubSpot used three variants for this test, using HubSpot Academy conversion rate (CVR) as the primary metric. Secondary metrics included CTA clicks and engagement.

Variant A was the control.

For variant B, the team added more vibrant images and colorful text and shapes. It also included an animated "typing" headline.

Variant C also added color and movement, as well as animated images on the right-hand side of the page.

As a result, HubSpot found that variant B outperformed the control by 6%. In contrast, variant C underperformed the control by 1%. From those numbers, HubSpot was able to project that using variant B would lead to about 375 more sign ups each month.

2. FSAstore.com’s Site Navigation

Every marketer will have to focus on conversion at some point. But building a website that converts is tough.

FSAstore.com is an ecommerce company supplying home goods for Americans with a flexible spending account.

This useful site could help the 35 million+ customers that have an FSA. But the website funnel was overwhelming. It had too many options, especially on category pages. The team felt that customers weren't making purchases because of that issue.

To figure out how to appeal to its customers, this company tested a simplified version of its website. The current site included an information-packed subheader in the site navigation.

To test the hypothesis, this A/B testing example compared the current site to an update without the subheader.

This update showed a clear boost in conversions and FSAstore.com saw a 53.8% increase in revenue per visitor.

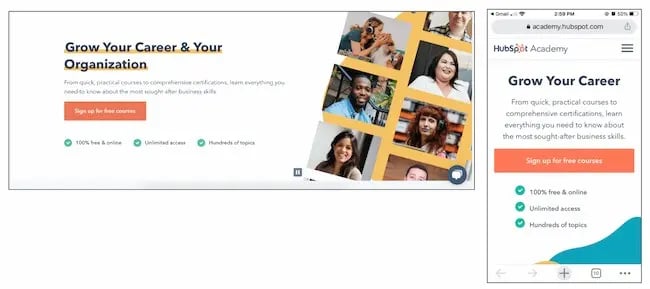

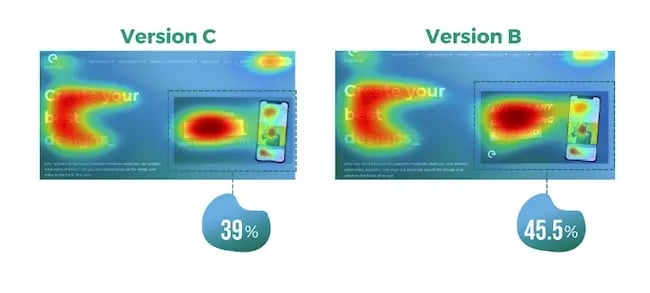

3. Expoze’s Web Page Background

The visuals on your web page are important because they help users decide whether they want to spend more time on your site.

In this A/B testing example, Expoze.io decided to test the background on its homepage.

The website home page was difficult for some users to read because of low contrast. The team also needed to figure out how to improve page navigation while still representing the brand.

First, the team did some research and created several different designs. The goals of the redesign were to improve the visuals and increase attention to specific sections of the home page, like the video thumbnail.

They used AI-generated eye tracking as they designed to find the best designs before A/B testing. Then they ran an A/B heatmap test to see whether the new or current design got the most attention from visitors.

The new design showed a big increase in attention, with version B bringing over 40% more attention to the desired sections of the home page.

This design change also brought a 25% increase in CTA clicks. The team believes this is due to the added contrast on the page bringing more attention to the CTA button, which was not changed.

4. Thrive Themes’ Sales Page Optimization

Many landing pages showcase testimonials. That's valuable content and it can boost conversion.

That's why Thrive Themes decided to test a new feature on its landing pages: customer testimonials.

In the control, Thrive Themes had been using a banner that highlighted product features, but not how customers felt about the product.

The team decided to test whether adding testimonials to a sales landing page could improve conversion rates.

In this A/B test example, the team ran a 6-week test with the control against an updated landing page with testimonials.

This change netted a 13% increase in sales. The control page had a 2.2% conversion rate, but the new variant showed a 2.75% conversion rate.
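The two conversion rates quoted above can be compared as a relative lift. The sketch below is only that arithmetic; `relative_lift` is a hypothetical helper name, not something from the case study. Note that the relative difference between the two conversion rates is not the same number as the 13% sales increase, and the source does not explain how the two figures relate.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Return the variant's lift over the control as a fraction of the control rate."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Conversion rates quoted in the text: 2.2% for the control, 2.75% for the variant.
lift = relative_lift(0.022, 0.0275)
print(f"Relative lift in conversion rate: {lift:.1%}")
```

Reporting both the absolute rates and the relative lift makes it easier for readers to see which number a headline result refers to.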

Email A/B Testing Examples

5. HubSpot's Email Subscriber Experience

Getting users to engage with email isn't an easy task. That's why HubSpot decided to A/B test how alignment impacts CTA clicks.

HubSpot decided to change text alignment in the weekly emails for subscribers to improve the user experience. Ideally, this improved experience would result in a higher click rate.

For the control, HubSpot sent centered email text to users.

For variant B, HubSpot sent emails with left-justified text.

HubSpot found that emails with left-aligned text got fewer clicks than the control. And of the total left-justified emails sent, less than 25% got more clicks than the control.

6. Neurogan’s Deal Promotion

Making the most of email promotion is important for any company, especially those in competitive industries.

This example uses the power of current customers for increasing email engagement.

Neurogan wasn't always offering the right content to its audience and it was having a hard time competing with a flood of other new brands.

An email agency audited this brand's email marketing, then focused efforts on segmentation. This A/B testing example starts with creating product-specific offers. Then, this team used testing to figure out which deals were best for each audience.

These changes brought higher revenue for promotions and higher click rates. It also led to a new workflow with a 37% average open rate and a click rate of 3.85%.

For more on how to run A/B testing for your campaigns, check out this free A/B testing kit.

Social Media A/B Testing Examples

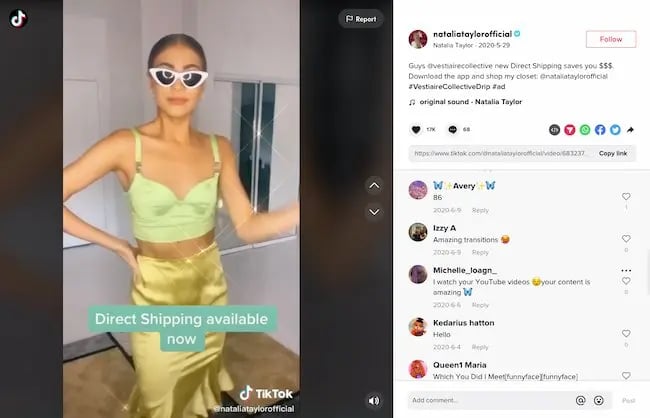

7. Vestiaire's TikTok Awareness Campaign

A/B testing examples like the one below can help you think creatively about what to test and when. This is extra helpful if your business is working with influencers and doesn't want to impact their process while working toward business goals.

Fashion brand Vestiaire wanted help growing the brand on TikTok. It was also hoping to increase awareness with Gen Z audiences for its new direct shopping feature.

Vestiaire's influencer marketing agency asked eight influencers to create content with specific CTAs to meet the brand's goals. Each influencer had extensive creative freedom and created a range of different social media posts.

Then, the agency used A/B testing to choose the best-performing content and promoted this content with paid advertising.

This testing example generated over 4,000 installs. It also decreased the cost per install by 50% compared to the brand's existing presence on Instagram and YouTube.

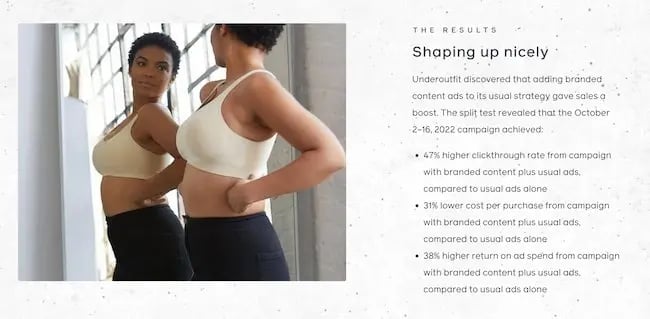

8. Underoutfit’s Promotion of User-Generated Content on Facebook

Paid advertising is getting more expensive, and clickthrough rates decreased through the end of 2022.

To make the most of social ad spend, marketers are using A/B testing to improve ad performance. This approach helps them test creative content before launching paid ad campaigns, like in the examples below.

Underoutfit wanted to increase brand awareness on Facebook.

To meet this goal, it decided to try adding branded user-generated content. This brand worked with an agency and several creators to create branded content to drive conversion.

Then, Underoutfit ran split testing between product ads and the same ads combined with the new branded content ads. Both groups in the split test contained key marketing messages and clear CTA copy.

The brand and agency also worked with Meta Creative Shop to make sure the videos met best practice standards.

The test showed impressive results for the branded content variant, including a 47% higher clickthrough rate and 28% higher return on ad spend.

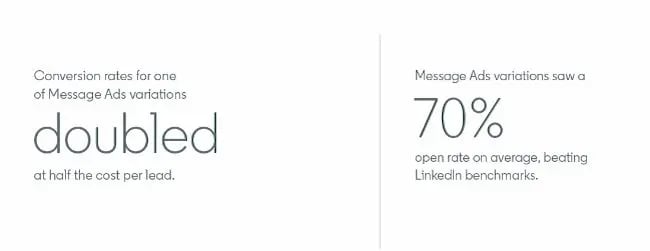

9. Databricks’ Ad Performance on LinkedIn

Pivoting to a new strategy quickly can be difficult for organizations. This A/B testing example shows how you can use split testing to figure out the best new approach to a problem.

Databricks, a cloud software tool, needed to raise awareness for an event that was shifting from in-person to online.

To connect with a large group of new people in a personalized way, the team decided to create a LinkedIn Message Ads campaign. To make sure the messages were effective, it used A/B testing to tweak the subject line and message copy.

The third variant of the copy featured a hyperlink in the first sentence of the invitation. Compared to the other two variants, this version got nearly twice as many clicks and conversions.

Mobile A/B Testing Examples

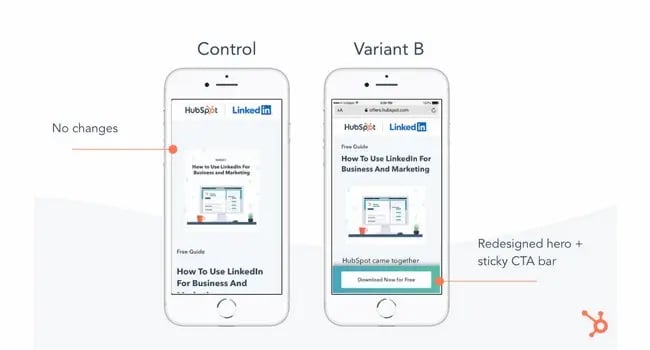

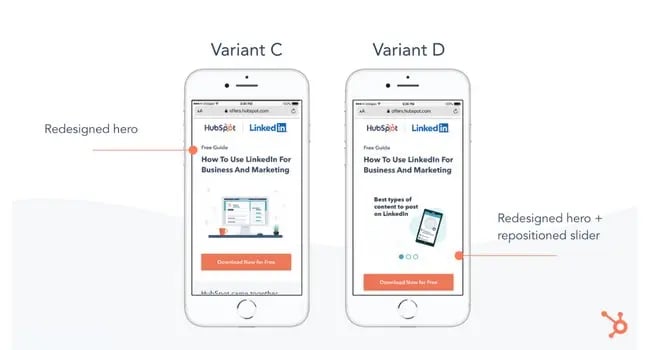

10. HubSpot's Mobile Calls-to-Action

On this blog, you'll notice anchor text in the introduction, a graphic CTA at the bottom, and a slide-in CTA when you scroll through the post. Once you click on one of these offers, you'll land on a content offer page.

While many users access these offers from a desktop or laptop computer, many others plan to download these offers to mobile devices.

But on mobile, users weren't finding the CTA buttons as quickly as they could on a computer. That's why HubSpot tested mobile design changes to improve the user experience.

Previous A/B tests revealed that HubSpot's mobile audience was 27% less likely to click through to download an offer. Also, less than 75% of mobile users were scrolling down far enough to see the CTA button.

So, HubSpot decided to test different versions of the offer page CTA, using conversion rate (CVR) as the primary metric. For secondary metrics, the team measured CTA clicks for each CTA, as well as engagement.

HubSpot used four variants for this test.

For variant A, the control, the traditional placement of CTAs remained unchanged.

For variant B, the team redesigned the hero image and added a sticky CTA bar.

For variant C, the redesigned hero was the only change.

For variant D, the team redesigned the hero image and repositioned the slider.

All variants outperformed the control for the primary metric, CVR. Variant C saw a 10% increase, variant B saw a 9% increase, and variant D saw an 8% increase.

From those numbers, HubSpot was able to project that using variant C on mobile would lead to about 1,400 more content leads and almost 5,700 more form submissions each month.

11. Hospitality.net’s Mobile Booking

Businesses need to keep up with quick shifts in mobile devices to create a consistently strong customer experience.

A/B testing examples like the one below can help your business streamline this process.

Hospitality.net offered both simplified and dynamic mobile booking experiences. The simplified experience was designed for smaller screens and showed a limited number of available dates. The dynamic experience was designed for larger mobile screens and showed a wider range of dates and prices.

But the brand wasn’t sure which mobile optimization strategy would be better for conversion.

This brand believed that customers would prefer the dynamic experience and that it would get more conversions. But it chose to test these ideas with a simple A/B test. Over 34 days, it sent half of the mobile visitors to the simplified mobile experience, and half to the dynamic experience, with over 100,000 visitors total.

This A/B testing example showed a 33% improvement in conversion. It also helped confirm the brand's educated guesses about mobile booking preferences.
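The source does not say how the traffic was divided between the two experiences. As a minimal sketch of one common approach, the snippet below hashes a visitor ID so that each visitor lands in the same group on every visit and the groups come out close to 50/50. The function name, the experiment label, and the visitor ID format are all assumptions made for illustration.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "mobile-booking") -> str:
    """Deterministically assign a visitor to one of two experiences.

    Hashing the experiment name together with the visitor ID keeps the
    assignment stable across visits and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 2  # 0 or 1, roughly equally likely
    return "simplified" if bucket == 0 else "dynamic"


# Hypothetical visitor IDs, used only to show that the split is close to even.
counts = {"simplified": 0, "dynamic": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```

A hash-based split avoids storing an assignment table, at the cost of needing a stable visitor identifier.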

A/B Testing Takeaways for Marketers

A lot of different factors can go into A/B testing, depending on your business needs. But there are a few key things to keep in mind:

- Every A/B test should start with a hypothesis focused on one specific problem that you can test.

- Make sure you’re testing a control variable (your original version) and a treatment variable (a new version that you think will perform better).

- You can test various things, like landing pages, CTAs, emails, or mobile app designs.

- The best way to understand if your results mean something is to figure out the statistical significance of your test.

- There are a variety of goals to focus on for A/B testing (increased site traffic, lower bounce rates, etc.), but you should be able to test, support, prove, and disprove your hypothesis.

- When testing, make sure you’re splitting your sample groups equally and randomly, so that any difference in results comes from the change you made and not from how visitors were assigned.

- Take action based on the results you observe.
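To make the statistical significance takeaway concrete, here is a minimal sketch of a two-sided, two-proportion z-test using only the Python standard library. It is one common way to compare two conversion rates, not necessarily the method used by any of the businesses above, and the visitor and conversion counts in the usage example are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value) for the difference between two conversion rates.

    conv_a / n_a is the control, conv_b / n_b is the treatment.
    Uses the pooled standard error and a two-sided normal approximation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 10,000 visitors per group, 220 vs. 275 conversions.
z, p = two_proportion_z_test(220, 10_000, 275, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 5%" if p < 0.05 else "not significant at 5%")
```

The normal approximation works best with large samples; for small tests, an exact test or a dedicated statistics library is a safer choice.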

Start Your Next A/B Test Today

You can see amazing results from the A/B testing examples above. These businesses were able to take action on goals because they started testing. If you want to get great results, you've got to get started, too.

Editor's note: This post was originally published in October 2014 and has been updated for comprehensiveness.


Expert Advice on Developing a Hypothesis for Marketing Experimentation


Simbar Dube

Every marketing experimentation process has to have a solid hypothesis.

That’s a must – unless you want to be roaming in the dark and heading towards a dead-end in your experimentation program.

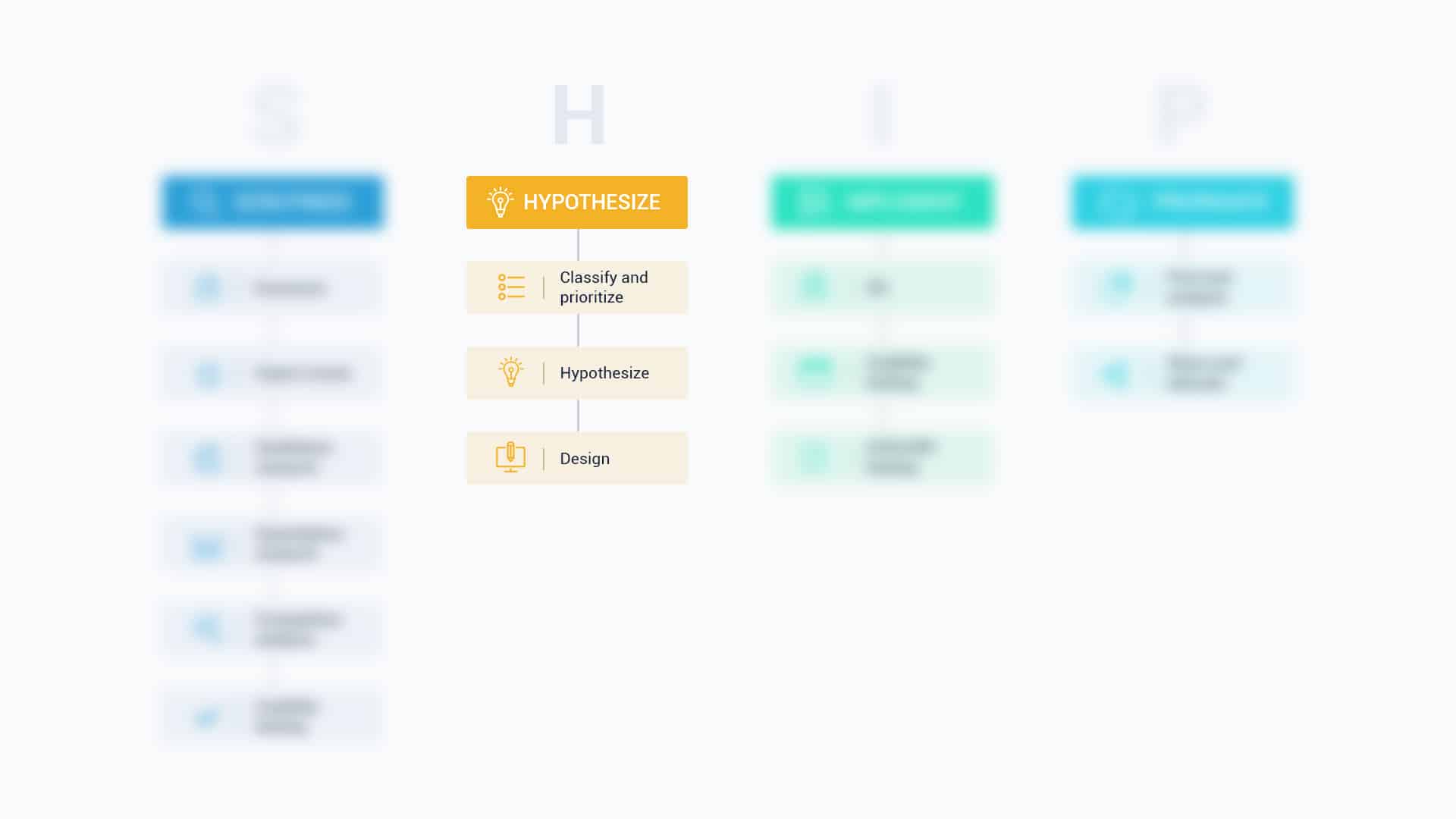

Hypothesizing is the second phase of our SHIP optimization process here at Invesp.

It comes after we have completed the research phase.

This is an indication that we don’t just pull a hypothesis out of thin air. We always make sure that it is based on research data.

But having a research-backed hypothesis doesn’t mean that the hypothesis will always be correct. In fact, tons of hypotheses bear inconclusive results or get disproved.

The main idea of having a hypothesis in marketing experimentation is to help you gain insights – regardless of the testing outcome.

By the time you finish reading this article, you’ll know:

- The essential tips on what to do when crafting a hypothesis for marketing experiments

- How a marketing experiment hypothesis works

- How experts develop a solid hypothesis

The basics: marketing experimentation hypothesis

A hypothesis is a research-based statement that aims to explain an observed trend and create a solution that will improve the result. This statement is an educated, testable prediction about what will happen.

It has to be stated in declarative form and not as a question.

“If we add magnification info, product video and virtual mirror buttons, will that improve engagement?” is not declarative, but “Improving the experience of product pages by adding magnification info, product video and virtual mirror buttons will increase engagement” is.

Here’s a quick example of how a hypothesis should be phrased:

- Replacing ___ with __ will increase [conversion goal] by [%], because:

- Removing ___ and __ will decrease [conversion goal] by [%], because:

- Changing ___ into __ will not affect [conversion goal], because:

- Improving ___ by ___ will increase [conversion goal], because:

As you can see from the above sentences, a good hypothesis is written in clear and simple language. Reading your hypothesis should tell your team members exactly what you thought was going to happen in an experiment.
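The fill-in-the-blank phrasing above can be sketched as a small helper that builds a declarative statement and rejects questions. The function name, its parameters, and the example values (including the 5% figure and the stated reason) are hypothetical and only illustrate the template.

```python
from typing import Optional

ALLOWED_DIRECTIONS = {"increase", "decrease", "not affect"}


def phrase_hypothesis(change: str, goal: str, direction: str,
                      reason: str, percent: Optional[float] = None) -> str:
    """Build a declarative hypothesis: '<change> will <direction> <goal> [by N%], because <reason>.'"""
    if direction not in ALLOWED_DIRECTIONS:
        raise ValueError(f"direction must be one of {sorted(ALLOWED_DIRECTIONS)}")
    if change.strip().endswith("?"):
        raise ValueError("state the hypothesis as a declaration, not a question")
    effect = f"will {direction} {goal}"
    # A percentage only makes sense when a change is predicted.
    if percent is not None and direction != "not affect":
        effect += f" by {percent:g}%"
    return f"{change.strip()} {effect}, because {reason.strip()}."


# Hypothetical example values.
print(phrase_hypothesis(
    change='Replacing "Download now" with "Download this free guide"',
    goal="downloads",
    direction="increase",
    reason="the new text states what the visitor gets",
    percent=5,
))
```

Keeping the reason as a required argument nudges the writer to record why the change should work, which is what makes the result informative whether the test wins or loses.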

Another important element of a good hypothesis is that it defines the variables in easy-to-measure terms, like who the participants are, what changes during the testing, and what the effect of the changes will be:

Example: Let’s say this is our hypothesis:

Displaying full look items on every “continue shopping & view your bag” pop-up and highlighting the value of having a full look will improve the visibility of a full look, encourage visitors to add multiple items from the same look and that will increase the average order value, quantity with cross-selling by 3%.

Who are the participants:

Visitors.

What changes during the testing:

Displaying full look items on every “continue shopping & view your bag” pop-up and highlighting the value of having a full look…

What the effect of the changes will be:

Will improve the visibility of a full look, encourage visitors to add multiple items from the same look and that will increase the average order value, quantity with cross-selling by 3%.

Don’t bite off more than you can chew! Answering some scientific questions can involve more than one experiment, each with its own hypothesis. So, you have to make sure your hypothesis is a specific statement relating to a single experiment.

How a Marketing Experimentation Hypothesis Works

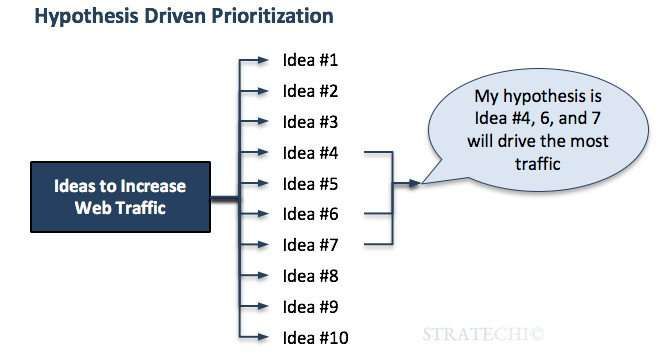

Assume that you have done conversion research and identified a list of issues (UX or conversion-related problems) and potential revenue opportunities on the site. The next thing you’d want to do is to prioritize the issues and determine which ones will most impact the bottom line.

Having ranked the issues, you need to test them to determine which solution works best. At this point, you don’t have a clear solution for the problems identified. So, to get better results and avoid wasting traffic on poor test designs, you need to make sure that your testing plan is guided.

This is where a hypothesis comes into play.

For each and every problem you’re aiming to address, you need to craft a hypothesis for it – unless the problem is a technical issue that can be solved right away without the need to hypothesize or test.

One important thing you should note about an experimentation hypothesis is that it can be implemented in different ways.

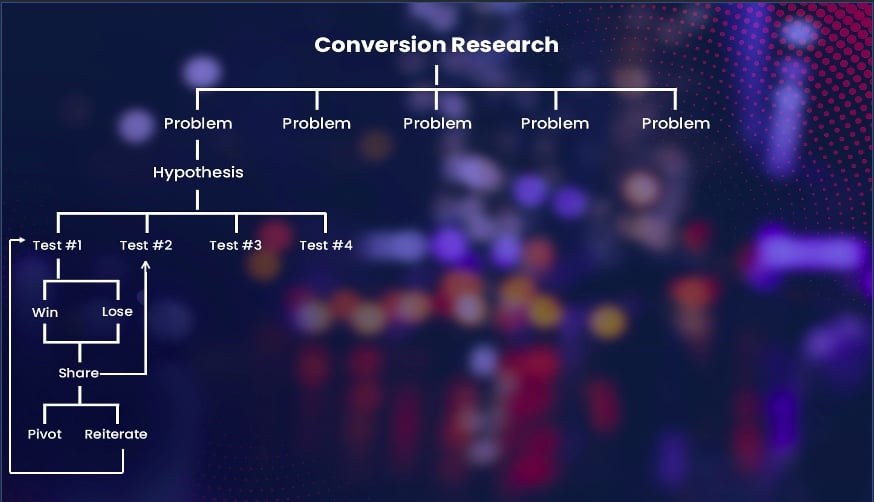

This means that one hypothesis can have four or five different tests as illustrated in the image above. Khalid Saleh, the Invesp CEO, explains:

“There are several ways that can be used to support one single hypothesis. Each and every way is a possible test scenario. And that means you also have to prioritize the test design you want to start with. Ultimately the name of the game is you want to find the idea that has the biggest possible impact on the bottom line with the least amount of effort. We use almost 18 different metrics to score all of those.”
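The idea of ranking test designs by impact against effort can be sketched in a few lines. The article does not list the roughly 18 metrics Invesp uses, so the example below relies on just two hypothetical inputs, each scored from 1 to 5, and made-up design names. It is an illustration of the principle, not Invesp's scoring model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DesignIdea:
    name: str
    impact: int  # expected effect on the bottom line, 1 (low) to 5 (high)
    effort: int  # cost to design and build, 1 (low) to 5 (high)

    @property
    def score(self) -> float:
        # Higher impact and lower effort both raise the score.
        return self.impact / self.effort


def prioritize(ideas: List[DesignIdea]) -> List[DesignIdea]:
    """Return ideas ordered from highest to lowest impact-per-effort score."""
    return sorted(ideas, key=lambda idea: idea.score, reverse=True)


# Hypothetical test designs, all supporting the same hypothesis.
ideas = [
    DesignIdea("Move the free offer above the fold", impact=4, effort=2),
    DesignIdea("Rebuild the page layout", impact=5, effort=5),
    DesignIdea("Rewrite the CTA label", impact=2, effort=1),
]
for idea in prioritize(ideas):
    print(f"{idea.score:.2f}  {idea.name}")
```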

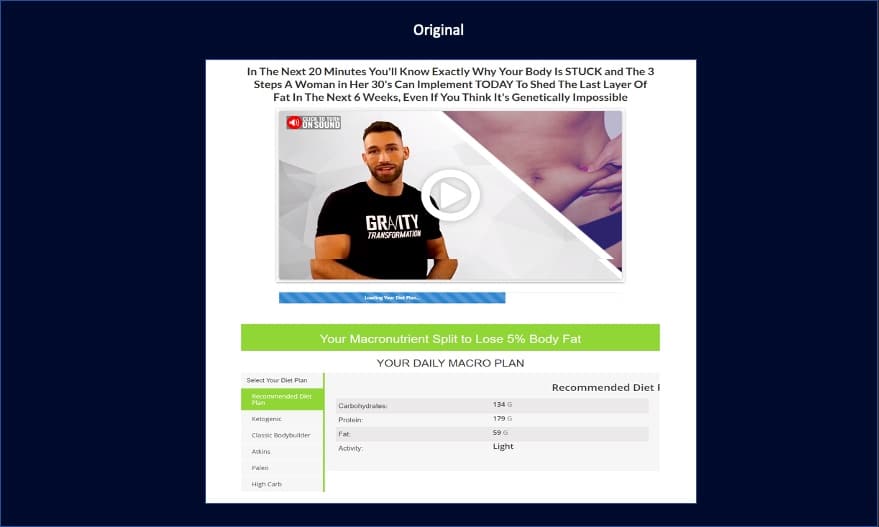

In one of the recent tests we launched after watching video recordings, viewing heatmaps, and conducting expert reviews, we noticed that:

- Visitors were scrolling to the bottom of the page to fill out a calculator in order to get a free diet plan.

- The brand was missing.

- There were too many free diet plans, which made it hard for visitors to choose and understand.

- There was no value proposition on the page.

- The copy didn’t mention the benefits of the paid program.

- There was no clear CTA for the next action.

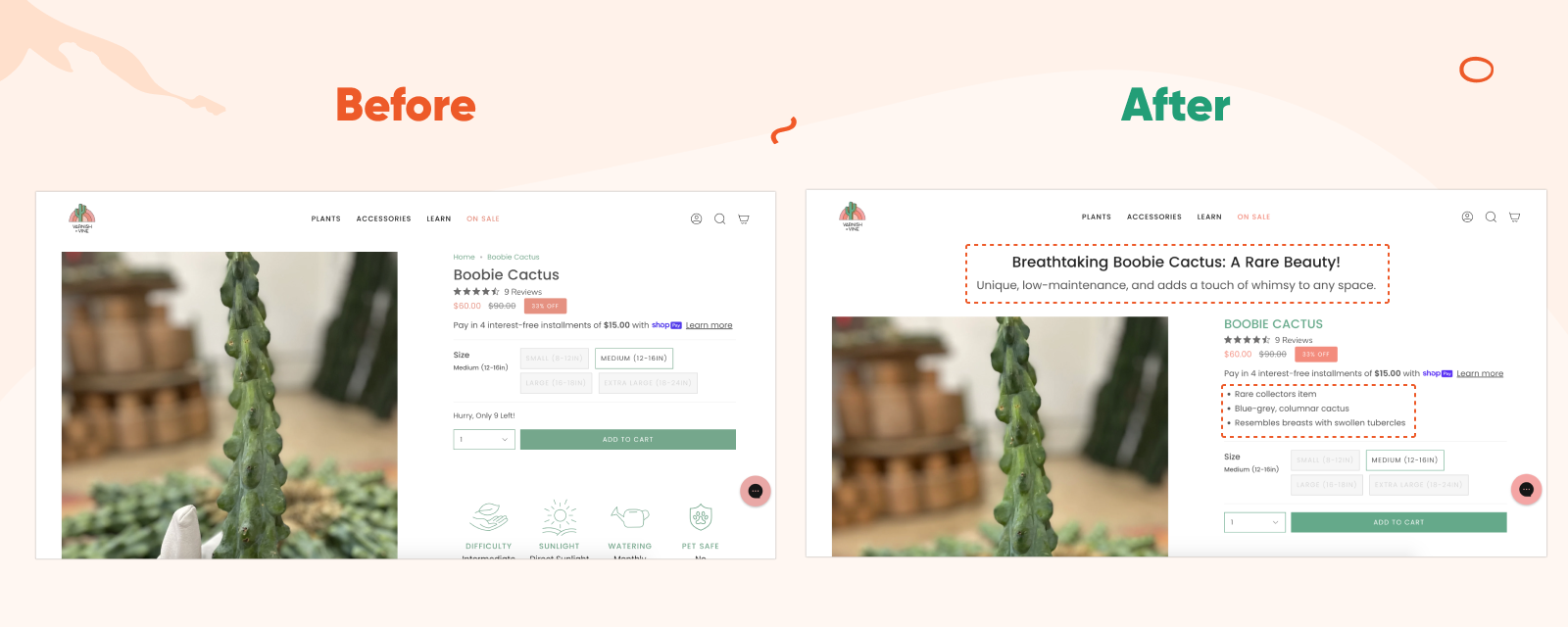

To help you understand, let’s have a look at what the original page looked like before we worked on it:

So our aim was to make the shopping experience seamless for visitors and to make the page more appealing and less confusing. In order to do that, here is how we phrased the hypothesis for the page above:

Improving the experience of opt-in landing pages by making the free offer accessible above the fold and highlighting the next action with a clear CTA will increase the engagement on the offer and increase the conversion rate by 1%.

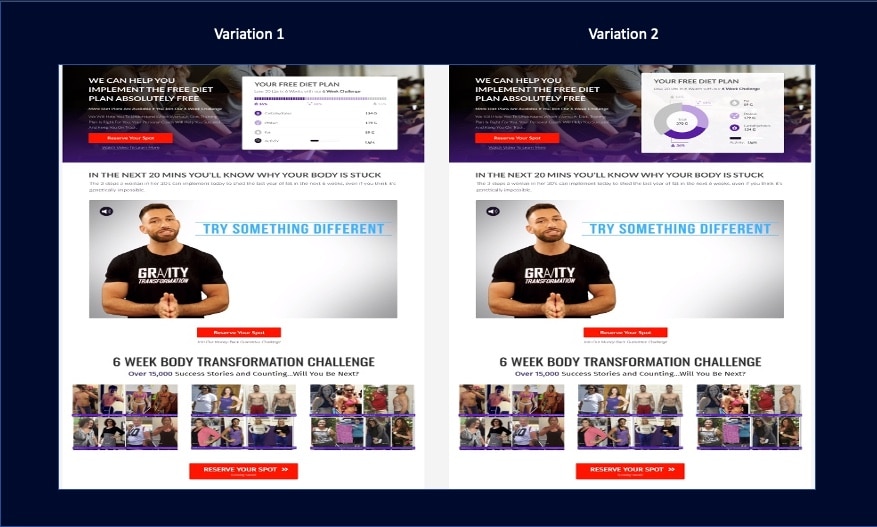

For this particular hypothesis, we had two design variations aligned to it:

The two designs above are different, but they are aligned to one hypothesis. This shows how one hypothesis can be implemented in different ways. Looking at the two variations above, which one do you think won?

Yes, you’re right, V2 was the winner.

Because there are many ways to implement one hypothesis, a failed test doesn’t necessarily mean that the hypothesis was wrong. Khalid adds:

“A single failure of a test doesn’t mean that the hypothesis is incorrect. Nine times out of ten it’s because of the way you’ve implemented the hypothesis. Look at the way you’ve coded and look at the copy you’ve used – you are more likely going to find something wrong with it. Always be open.”

So there are three things you should keep in mind when it comes to marketing experimentation hypotheses:

- It takes a while to fully test a hypothesis.

- A single failure doesn’t necessarily mean that the hypothesis is incorrect.

- Whether a hypothesis is proved or disproved, you can still learn something about your users.

I know it’s never easy to develop a hypothesis that informs future testing – I mean it takes a lot of intense research behind the scenes, and tons of ideas to begin with. So, I reached out to six CRO experts for tips and advice to help you understand more about developing a solid hypothesis and what to include in it.

Maurice says that a solid hypothesis should have no more than one goal:

Maurice Beerthuyzen – CRO/CXO Lead at ClickValue

“Creating a hypothesis doesn’t begin at the hypothesis itself. It starts with research. What do you notice in your data, customer surveys, and other sources? Do you understand what happens on your website?

When you notice an opportunity it is tempting to base one single A/B test on one hypothesis. Create hypothesis A and run a single test, and then move forward to the next test. With another hypothesis. But it is very rare that you solve your problem with only one hypothesis. Often a test provides several other questions. Questions which you can solve with running other tests. But based on that same hypothesis! We should not come up with a new hypothesis for every test.

Another mistake that often happens is that we fill the hypothesis with multiple goals. Then we expect that the hypothesis will work on conversion rate, average order value, and/or Click Through Ratio. Of course, this is possible, but when you run your test, your hypothesis can only have one goal at once.

And what if you have two goals? Just split the hypothesis then create a secondary hypothesis for your second goal. Every test has one primary goal. What if you find a winner on your secondary hypothesis? Rerun the test with the second hypothesis as the primary one.”

Jon believes that a strong hypothesis is built upon three pillars:

Jon MacDonald – President and Founder of The Good

“

- Respond to an established challenge – The challenge must have a strong background based on data, and the background should state an established challenge that the test is looking to address. Example: “Sign up form lacks proof of value, incorrectly assuming if users are on the page, they already want the product.”

- Propose a specific solution – What is the one, the single thing that is believed will address the stated challenge? Example: “Adding an image of the dashboard as a background to the signup form…”.

- State the assumed impact – The assumed impact should reference one specific, measurable optimization goal that was established prior to forming a hypothesis. Example: “…will increase signups.”

So, if your hypothesis doesn’t have a specific, measurable goal like “will increase signups,” you’re not really stating a test hypothesis!”

Matt uses his own hypothesis builder to collate important data points into a single hypothesis.

Matt Beischel – Founder of Corvus CRO

Like Jon, Matt also breaks down his hypothesis writing process into three sections. Unlike Jon, Matt’s sections are:

- Comprehension

- Response

- Outcome

“I set it up so that the names neatly match the “CRO.” It’s a sort of “mad-libs” style fill-in-the-blank where each input is an important piece of information for building out a robust hypothesis. I consider these the minimum required data points for a good hypothesis; if you can’t completely fill out the form, then you don’t have a good hypothesis.

Here’s a breakdown of each data point:

Comprehension – Identifying something that can be improved upon

- Problem: “What is a problem we have?”

- Observation Method: “How did we identify the problem?”

Response – Change that can cause improvement

- Variation: “What change do we think could solve the problem?”

- Location: “Where should the change occur?”

- Scope: “What are the conditions for the change?”

- Audience: “Who should the change affect?”

Outcome – Measurable result of the change that determines the success

- Behavior Change: “What change in behavior are we trying to affect?”

- Primary KPI: “What is the important metric that determines business impact?”

- Secondary KPIs: “Other metrics that will help reinforce/refute the Primary KPI”

Something else to consider is that I have a “user first” approach to formulating hypotheses. My process above is always considered within the context of how it would first benefit the user. Now, I do feel that a successful experiment should satisfy the needs of BOTH users and businesses, but always be in favor of the user. Notice that “Behavior Change” is the first thing listed in Outcome, not primary business KPI. Sure, at the end of the day you are working for the business’s best interests (both strategically and financially), but placing the user first will better inform your decision making and prioritization; there’s a reason that things like personas, user stories, surveys, session replays, reviews, etc. exist after all. A business-first ideology is how you end up with dark patterns and damaging brand credibility.”
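Matt's rule that an incomplete form means an incomplete hypothesis can be sketched as a simple record with a completeness check. The field names follow the data points he lists; the class name, method names, and example values are hypothetical, and this is not Matt's actual hypothesis builder.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class CroHypothesis:
    # Comprehension
    problem: str = ""
    observation_method: str = ""
    # Response
    variation: str = ""
    location: str = ""
    scope: str = ""
    audience: str = ""
    # Outcome
    behavior_change: str = ""
    primary_kpi: str = ""
    secondary_kpis: str = ""

    def missing_fields(self) -> List[str]:
        """Names of data points that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def is_complete(self) -> bool:
        return not self.missing_fields()


# Hypothetical, partially filled-in hypothesis.
draft = CroHypothesis(
    problem="Visitors do not notice the free offer",
    observation_method="Session recordings and heatmaps",
    variation="Move the offer above the fold",
)
print(draft.is_complete())
print(draft.missing_fields())
```

Listing what is missing, and not just returning true or false, gives the team a checklist of research still to do before the test is designed.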

One of the many mistakes that CROs make when writing a hypothesis is that they are focused on wins and not on insights. Shiva advises against this mindset:

Shiva Manjunath – Marketing Manager and CRO at Gartner

“Test to learn, not test to win. It’s a very simple reframe of hypotheses but can have a magnitude of difference. Here’s an example:

- Test to Win Hypothesis: If I put a product video in the middle of the product page, I will improve add to cart rates and improve CVR.

- Test to Learn Hypothesis: If I put a product video on the product page, there will be high engagement with the video and it will positively influence traffic.

What you’re doing is framing your hypothesis, and test, in a particular way to learn as much as you can. That is where you gain marketing insights. The more you run ‘marketing insight’ tests, the more you will win. Why? The more you compound marketing insight learnings, your win velocity will start to increase as a proxy of the learnings you’ve achieved. Then, you’ll have a higher chance of winning in your tests – and the more you’ll be able to drive business results.”

Lorenzo says it’s okay to focus on achieving a certain result as long as you are also getting an answer to: “Why is this event happening or not happening?”

Lorenzo Carreri – CRO Consultant

“When I come up with a hypothesis for a new or iterative experiment, I always try to find an answer to a question. It could be something related to a problem people have or an opportunity to achieve a result or a way to learn something. The main question I want to answer is “Why is this event happening or not happening?” The question is driven by data, both qualitative and quantitative.

The structure I use for stating my hypothesis is:

From [data source], I noticed [this problem/opportunity] among [this audience of users] on [this page or multiple pages]. So I believe that by [offering this experiment solution], [this KPI] will [increase/decrease/stay the same].”

Jakub Linowski says that hypotheses are meant to hold researchers accountable:

Jakub Linowski – Chief Editor of GoodUI

“They do this by making your change and prediction more explicit. A typical hypothesis may be expressed as: If we change (X), then it will have some measurable effect (A).

Unfortunately, this oversimplified format can also become a heavy burden to your experiment design with its extreme reductionism. However you decide to format your hypotheses, here are three suggestions for more flexibility to avoid limiting yourself.

One Or More Changes

To break out of the first limitation, we have to admit that our experiments may contain a single or multiple changes. Whereas the classic hypothesis encourages a single change or isolated variable, it’s not the only way we can run experiments. In the real world, it’s quite normal to see multiple design changes inside a single variation. One valid reason for doing this is when wishing to optimize a section of a website while aiming for a greater effect. As more positive changes compound together, there are times when teams decide to run bigger experiments. An experiment design (along with your hypotheses) therefore should allow for both single or multiple changes.

One Or More Metrics

A second limitation of many hypotheses is that they often ask us to only make a single prediction at a time. There are times when we might like to make multiple guesses or predictions to a set of metrics. A simple example of this might be a trade-off experiment with a guess of increased sales but decreased trial signups. Being able to express single or multiple metrics in our experimental designs should therefore be possible.

Estimates, Directional Predictions, Or Unknowns

Finally, traditional hypotheses also tend to force very simple directional predictions by asking us to guess whether something will increase or decrease. In reality, however, the fidelity of predictions can be higher or lower. On one hand, I’ve seen and made experiment estimations that contain specific numbers from prior data (ex: increase sales by 14%). While at other times it should also be acceptable to admit the unknown and leave the prediction blank. One example of this is when we are testing a completely novel idea without any prior data in a highly exploratory type of experiment. In such cases, it might be dishonest to make any sort of predictions and we should allow ourselves to express the unknown comfortably.”

Conclusion

So there you have it! Before you jump into launching a test, start by making sure that your hypothesis is solid and backed by research. Ask yourself the questions below when crafting a hypothesis for marketing experimentation:

- Is the hypothesis backed by research?

- Can the hypothesis be tested?

- Does the hypothesis provide insights?

- Does the hypothesis set the expectation that there will be an explanation behind the results of whatever you’re testing?

Don’t worry! Hypothesizing may seem like a very complicated process, but it’s not complicated in practice, especially when you have done proper research.

Designing Hypotheses that Win: A four-step framework for gaining customer wisdom and generating marketing results

There are smart marketers everywhere testing many smart ideas, and bad ones. The problem with ideas is that they are unreliable and unpredictable. Knowing how to test is only half of the equation. As marketing tools and technology evolve rapidly, offering new, more powerful ways to measure consumer behavior and conduct more sophisticated testing, it is becoming more important than ever to have a reliable system for deciding what to test.

Without a guiding framework, we are left to draw ideas almost arbitrarily from competitors, brainstorms, colleagues, books and any other sources without truly understanding what makes them good, bad or successful. Ideas are unpredictable because, until you can articulate a forceful “because” statement for why they will work, they are nothing more than a guess, however good they seem. The guess may be educated, but most often it is not educated by the customer.

More than 20 years of in-depth research, testing and optimization, and more than 20,000 sales path experiments, have taught us that there is an answer to this problem, and that answer involves rethinking how we view testing and optimization. This short article touches on the keynote message MECLABS Institute’s founder Flint McGlaughlin will give at the upcoming 2018 A/B Testing Summit virtual conference on December 12-13. Registration is free.

Marketers don’t need better ideas; they need a better understanding of their customer.

So if understanding your customer is the key to efficient and effective optimization and ideas aren’t reliable or predictable, what then? We begin with the process of intensively analyzing existing data, metrics, reports and research to construct our best Customer Theory, which is the articulation of our understanding of our customer and their behavior toward our offer.

Then, as we identify problems/focus areas for higher performance in our funnel, we transform our ideas for solving them into a hypothesis containing four key parts:

- If [we achieve this in the mind of the consumer]

- By [adding, subtracting or changing these elements]

- Then [this result will occur]

- Because [that will confirm or deny this belief/hypothesis about the customer]

By transforming ideas into hypotheses, we orient our test to learn about our customer rather than merely trying out an idea. The hypothesis grounds our thinking in the psychology of the customer by providing a framework that forces the right questions into the equation of what to test. “The goal of a test is not to get a lift, but to get a learning,” says Flint McGlaughlin, “and learning compounds over time.”
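As a rough illustration of the four-part structure, the Python sketch below stores each part separately and refuses to render a sentence until all four are filled in. The class name `FourPartHypothesis` and its methods are hypothetical names chosen for this example, not part of any MECLABS tool. The sample text is taken from the rotating-banner hypothesis and the hero-image idea quoted in this article.

```python
from dataclasses import dataclass


@dataclass
class FourPartHypothesis:
    """If / By / Then / Because, mirroring the four parts of the framework."""
    if_we: str    # what we achieve in the mind of the consumer
    by: str       # elements added, subtracted or changed
    then: str     # the result we expect
    because: str  # the belief about the customer being tested

    def missing_parts(self) -> list:
        """Return the names of any parts left empty, in declaration order."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def sentence(self) -> str:
        missing = self.missing_parts()
        if missing:
            raise ValueError(f"not yet a hypothesis, missing: {', '.join(missing)}")
        return (f"If we {self.if_we} by {self.by}, "
                f"we will {self.then} because {self.because}.")


banner = FourPartHypothesis(
    if_we="emphasize and sample the diversity of our product line",
    by="grouping our top products from various categories in a slowly rotating banner",
    then="increase clickthrough and engagement from the homepage",
    because="customers want to understand the range of what we have to offer",
)
print(banner.sentence())

# An idea that only names a change is flagged rather than rendered.
idea = FourPartHypothesis(
    if_we="", by="placing a big hero image as our banner", then="", because=""
)
print(idea.missing_parts())  # ['if_we', 'then', 'because']
```

Keeping the “because” as a required field is one way to make sure every test is tied to a belief about the customer before it launches.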

Let’s look at three examples of what to avoid in your testing, followed by three good examples of hypotheses that rework the same ideas.

“Let’s advertise our top products in our rotating banner — that’s what Competitor X is doing.”

“We need more attractive imagery … Let’s place a big, powerful hero image as our banner. Everyone is doing it.”

“We should go minimalist … It’s modern, sleek and sexy, and customers love it. It’ll be good for our brand. Less is more.”

“If we emphasize and sample the diversity of our product line by grouping our top products from various categories in a slowly rotating banner, we will increase clickthrough and engagement from the homepage because customers want to understand the range of what we have to offer (versus some other value, e.g., quality, style, efficacy, affordability, etc.).”

“If we reinforce the clarity of the value proposition by using more relevant imagery to draw attention to the most important information, we will increase clickthrough and ultimately conversion because the customer wants to quickly understand why we’re different in such a competitive space.”

“If we better emphasize the primary message by reducing unnecessary, less-relevant page elements and changing to a simpler, clearer, more readable design, we will increase clickthrough and engagement on the homepage because customers are currently overwhelmed by too much friction on this page.”

The golden rule of optimization is “Specificity converts.” The more specific and relevant you can be to the individual wants and needs of your ideal customer, the higher the probability of conversion. To be as specific and relevant as possible to a consumer, we use testing not merely as an idea trial in the hope of positive results, but as a mechanism to fill the gaps in our understanding that existing data can’t answer. Our understanding of the customer is what powers the efficiency and efficacy of our testing.

In Summary …

Smart ideas only work sometimes, but a framework based on understanding your customer will yield more consistent, more rewarding results that only improve over time. The first key to rethinking your approach to optimization is to construct a robust customer theory articulating your best understanding of your customer. From this, you can transform your ideas into hypotheses that will begin producing invaluable insights to lay the groundwork for how you communicate with your customer.

Looking for ideas to inform your hypotheses? We have created and compiled a 60-page guide that contains 21 crafted tools and concepts, and outlines the unique methodology we have used and tested with our partners for 20+ years. You can download the guide for free here: A Model of Your Customer’s Mind

Quin McGlaughlin is currently a Senior Optimization Analyst at MECLABS Institute and full-time distance student at Harvard University, studying psychology and business. He has worked on projects for some of MECLABS’ largest clients ranging from Fortune 50 companies to defense contractors, not-for-profits, major ecommerce organizations and others. He has also served as Vice Chair of Digital Strategy for the Harvard Extension Student Association and provided marketing consulting for small and mid-size businesses.

How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes. Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.

Table of contents

- What is a hypothesis

- Developing a hypothesis (with example)

- Hypothesis examples

- Other interesting articles

- Frequently asked questions about writing hypotheses

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to jot those down as you go to minimize the chances that research bias will affect your results.

In this example, the independent variable is exposure to the sun – the assumed cause . The dependent variable is the level of happiness – the assumed effect .

Here's why students love Scribbr's proofreading services

Discover proofreading & editing

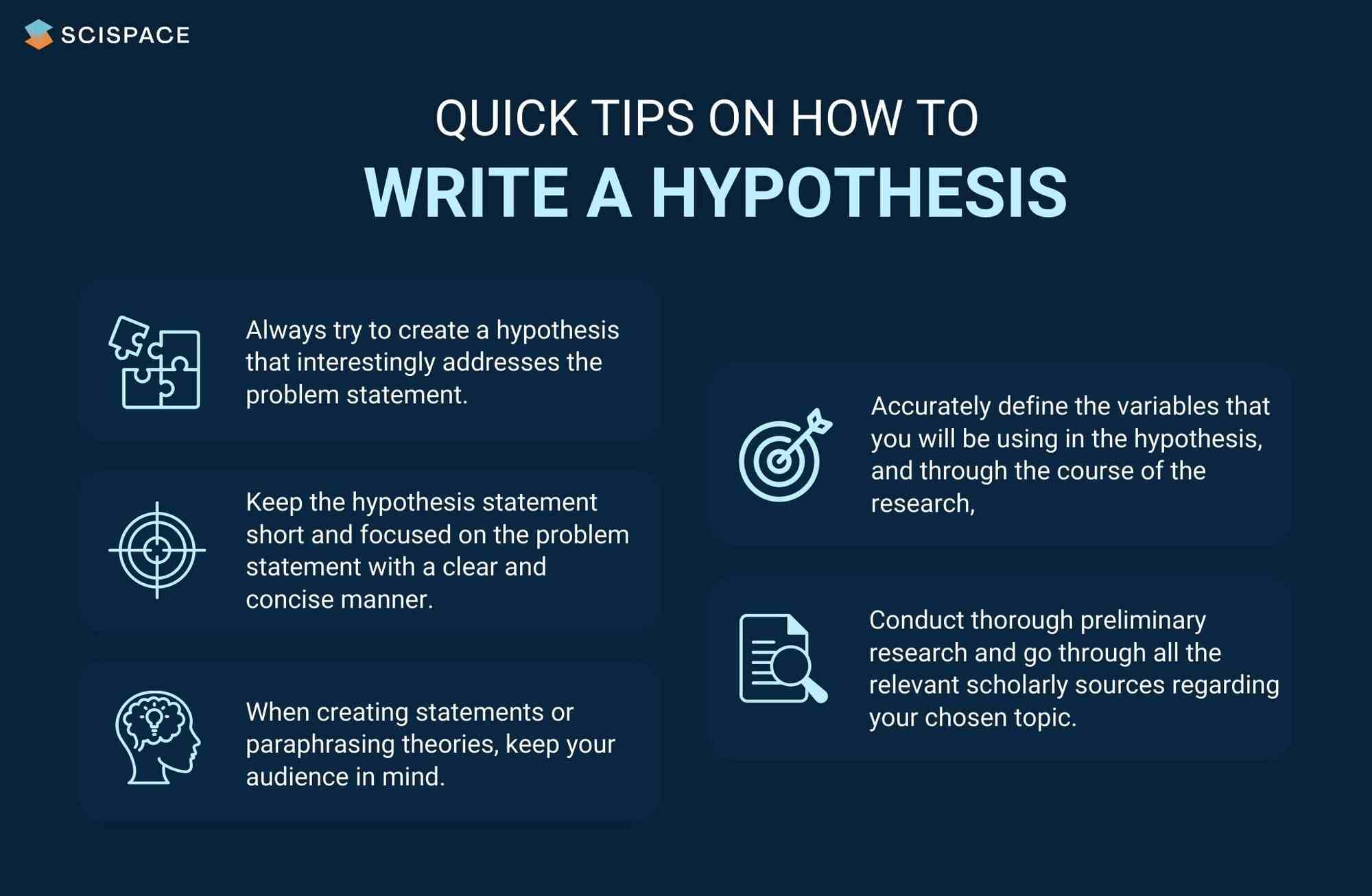

Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis
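The three required elements above can be treated as a simple completeness check. The Python sketch below is a minimal illustration, assuming a hypothetical `RefinedHypothesis` record and `gaps` helper invented for this example. The variables come from the sun and happiness example in this article, while the predicted-outcome wording is made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RefinedHypothesis:
    variables: List[str]    # the relevant variables
    group: str              # the specific group being studied
    predicted_outcome: str  # the predicted outcome of the experiment or analysis


def gaps(h: RefinedHypothesis) -> List[str]:
    """List which of the three required elements are still missing."""
    problems = []
    if len(h.variables) < 2:
        problems.append("needs at least two variables to relate")
    if not h.group.strip():
        problems.append("no specific group named")
    if not h.predicted_outcome.strip():
        problems.append("no predicted outcome")
    return problems


draft = RefinedHypothesis(
    variables=["exposure to the sun", "level of happiness"],
    group="",  # not specified yet
    predicted_outcome="more sun exposure goes with higher reported happiness",
)
print(gaps(draft))  # ['no specific group named']
```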

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.
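The if…then form is easy to sketch in code. The hypothetical `if_then` helper below simply joins a statement about the independent variable to a statement about the dependent variable. The sample wording is an assumed rephrasing of the apple example given earlier in this article, not text from the original.

```python
def if_then(independent_change: str, dependent_effect: str) -> str:
    """Join a change in the independent variable to the expected effect."""
    return f"If {independent_change}, then {dependent_effect}."


print(if_then(
    "a person eats an apple every day",      # independent variable (assumed cause)
    "they will make fewer doctor's visits",  # dependent variable (assumed effect)
))
```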