Literature Review Example 3 offers an excellent example of a comparative review (“Language and gender”). It was written by Alastair Pennycook for his undergraduate students as a model of, among other things, how to structure a review of the literature.

- Have a questions? [email protected]

- Latest Projects

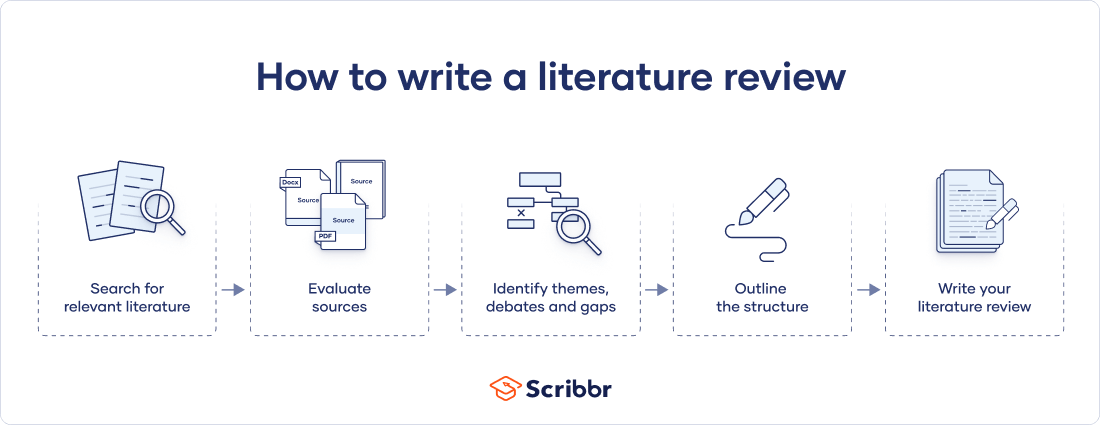

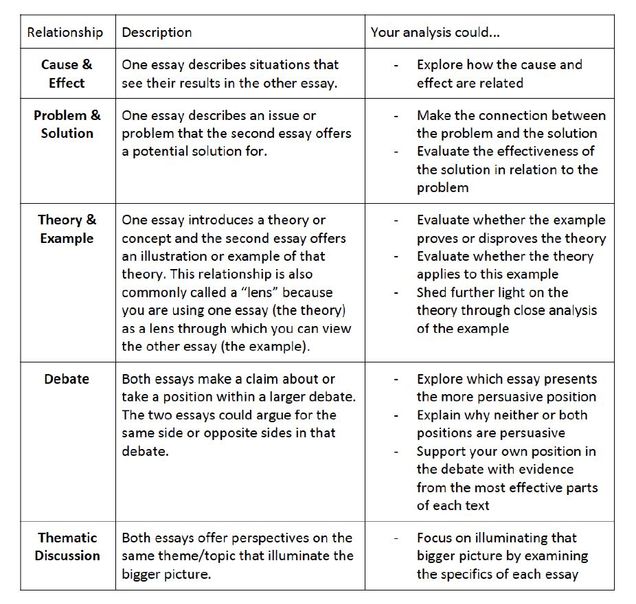

A step-by-step guide to writing a comparative analysis

How to write a comparative analysis

Writing a comparative review in a research paper is not as difficult as many people think. With a few tips, and by following the steps detailed in this article, it is possible to write an outstanding comparative review.

In the literary, academic, and journalistic worlds, analysis lets a writer set out ideas and arguments against a context, which makes it important material for discussion in professional work. The comparative analysis is one form of this genre. Some authors define the comparative essay as a text in which two opposing positions are set out or two theses are tested. Through this comparison, the author aims to make the reader reflect on a specific topic: the essay gives a written opinion on two positions, compares them, and draws a conclusion.

Do you know how to write a comparative essay? In this article, we explain how to do it step by step, so let’s look at the guidelines you should follow to produce a good comparative analysis.

How to write a good comparative analysis

The structure

In general, the approach is set out in the first paragraph, at the beginning of the work. Its objective is to state the author’s position on a specific subject, and it generally specifies the objective to be achieved. You must be clear about what topic you are going to deal with, what you want to explain, and which perspectives you will use in your comparative analysis; you must also define whom you are writing for. Because it is a comparative text, it begins with a general observation that can serve as context for both approaches and then sets out the arguments in each of the two cases.
Do not forget to compare both objects of study on each argument or idea you develop. Let readers find or define their own position in the essay and choose one of the two alternatives.

In the introduction, there are two possible approaches: deductive and inductive. The deductive method raises the issue first and then uses your analysis of the variables to guide readers toward their own conclusions or position on the issue. The inductive method starts with the arguments, developing each of the variables until it arrives at the topic or problem. Both ways of approaching the subject are viable; choose the one that is easiest for you to work with.

At the end of this section, your audience should:

- First of all, have a clear understanding of what topics you will cover in your essay, what you want to explain, and from which positions or perspectives you will do it. The section begins with a general observation that establishes the similarity between the two subjects and then moves the essay’s focus to the concrete.

- Understand which points will and will not be examined in the comparison. At the end of the introduction, state your preference or describe what the two subjects mean.

- Be able to describe the ideas you are going to treat. Give a detailed account of each position’s characteristics, history, consequences, and development, as you consider appropriate, and describe the second position in as much detail as the first.

Development of the body

Generally, in the body of the essay, the author presents all the arguments that support the thesis, which gives the initial statement a reflective and justifying body. Depending on the length of the work, which can range from two to 15 pages, each paragraph, or each section under its own title, develops one argument.

After covering the subject, the author must close the essay and present the findings or conclusions reached. Write a final closing paragraph, as a conclusion, in which you set the two positions against each other. Try to stage a contest between them so that the reader gets involved. The conclusion should give a brief, general summary of the most important similarities and differences. It should end with a personal statement, an opinion, and the “so what?”: what is important about the two things being compared. Readers should feel that all the threads of the essay have been tied together coherently, that they have learned something, and that this is clearly the end, so that they do not look around for missing pages. Finally, your assessment must explain which position you side with and why you prefer it to the other.

Examples of how to write a comparative analysis

One paragraph per point, covering both subjects:

- Paragraph 1: Messi’s preferred position / Ronaldo’s preferred position.
- Paragraph 2: Messi’s play style / Ronaldo’s play style.
- Paragraph 3: Messi’s aerial game / Ronaldo’s aerial game.

Separate, alternating paragraphs for each subject:

- Paragraph 1: Messi’s teamwork.
- Paragraph 2: Ronaldo’s teamwork.
- Paragraph 3: Messi’s set pieces.
- Paragraph 4: Ronaldo’s set pieces.
- Paragraph 5: Messi’s achievements.
- Paragraph 6: Ronaldo’s achievements.

A few important rules for comparative analysis

Even if the exercise sounds simple, you should follow a few rules to help your audience make the best possible decision.

1. Clearly state your position

The first question is: why are you doing a comparative analysis? Is it to highlight your own view or idea over another, or simply to compare two (or more) solutions that are not your own? You must state your position clearly to your reader, because your credibility depends on it. Be honest and state, for example:

- The idea you are trying to espouse

- The framework you are using

- The reason you are doing this comparison (your objective)

In addition, you must be consistent in how you present your ideas.

2. Stay objective

Even if you include your personal ideology in your comparison, stay as objective as possible. Your readers will not appreciate it if you point out all the disadvantages of one idea while displaying only the advantages of the other, because your comparison will turn into advertising. Raise weak points and strong points on both sides. Such analyses are always subjective to some extent, so make clear which position convinces you most.

3. Think about your audience’s expectations

The research paper is intended for your readers, so you must take their expectations into account when writing your review. Put aside your desire to sell your preferred idea, and take your readers’ perspective:

- What information are they interested in?

- What are their criteria?

- What do they want to know?

- What do they want from the product or service?

Again, it is about being objective in all your statements.

4. Organize the information

For readers to want to read your comparative analysis, it is important to structure your comments. The idea is to make your paper easy to navigate so that readers can quickly find the information that interests them.

5. End with a conclusion

You have tried to be as objective as possible throughout your comparison, and now it is time to let go. In your conclusion, you can address your readers directly and give your opinion. With a few tips, you can also encourage them toward one idea or the other.

Note: If time is not an issue, the best way to review the essay is to leave it for a day. Go for a walk, eat something, have fun, and forget about it. Then go back to the text, find the problems, and fix them. Do these two steps separately: first find all the problems you can without correcting them, and then correct them. Although doing both at once is tempting, it is smarter to keep them separate, and it is effective and fast.

Tips on comparative analysis

Be concise and accurate in your analysis and discussion of the topic. Some authors believe that the more elaborate the language and the longer the text, the better the writer or essayist. On the contrary, a good essay analyzes a topic precisely, so that the reader can move through the work easily and understand the author’s position. Use only the arguments needed to explain the topic, and do not say too much. Otherwise you risk becoming redundant or repetitive, which makes the text heavy both to read and to understand.

Write in Short Sentences

Just as we recommend avoiding redundancy in your texts, we also encourage you to write short sentences. They give the text momentum and make communication direct, so the reader moves through the text faster and understands much more.
Include Reflections in Your Text

Supporting your approach with reflections or quotes from other authors strengthens your essay. Above all, use arguments that justify or reinforce your position on one thesis or the other.

Text Revision

Because a comparative analysis tends to be subjective, let it “sit” for a day or a few hours and then read it again. This lets you make corrections. Modify any aspects that are not clear enough and, in short, improve the text. Once you have done this, you can submit it.

- Open access

- Published: 21 August 2024

Evaluating the effectiveness of large language models in abstract screening: a comparative analysis

- Michael Li¹
- Jianping Sun²
- Xianming Tan¹,³ (ORCID: orcid.org/0000-0002-5478-2269)

Systematic Reviews volume 13, Article number: 219 (2024)

This study aimed to evaluate the performance of large language models (LLMs) in abstract screening for systematic review and meta-analysis studies, exploring their effectiveness, efficiency, and potential integration into existing human expert-based workflows. We developed automation scripts in Python to interact with the APIs of several LLM tools, including ChatGPT v4.0, ChatGPT v3.5, Google PaLM 2, and Meta Llama 2, as well as newer tools including ChatGPT v4.0 turbo, ChatGPT v3.5 turbo, Google Gemini 1.0 pro, Meta Llama 3, and Claude 3. This study used three databases of abstracts as benchmarks to evaluate the performance of these LLM tools in terms of sensitivity, specificity, and overall accuracy. The results of the LLM tools were compared with human-curated inclusion decisions, the gold standard for systematic review and meta-analysis studies.

Different LLM tools had varying abilities in abstract screening. ChatGPT v4.0 demonstrated remarkable performance, with balanced sensitivity and specificity and overall accuracy consistently reaching or exceeding 90%, indicating a high potential for LLMs in abstract screening tasks. The study found that LLMs could provide reliable results with minimal human effort and could therefore serve as a cost-effective and efficient alternative to traditional abstract screening methods.

While LLM tools are not yet ready to completely replace human experts in abstract screening, they show great promise in revolutionizing the process. They can serve as autonomous AI reviewers, contribute to collaborative workflows with human experts, and integrate with hybrid approaches to develop custom tools for increased efficiency. As technology continues to advance, LLMs are poised to play an increasingly important role in abstract screening, reshaping the workflow of systematic review and meta-analysis studies.
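The three metrics named above compare each tool’s include/exclude calls with the human gold standard. The following minimal sketch applies the standard confusion-matrix definitions in Python. It is not the authors’ published script. It assumes that decisions are encoded as booleans (True = include), and the toy decisions are illustrative, not data from the study.

```python
from dataclasses import dataclass
import math


@dataclass
class ScreeningMetrics:
    sensitivity: float  # share of truly relevant abstracts the tool included
    specificity: float  # share of truly irrelevant abstracts the tool excluded
    accuracy: float     # share of all abstracts where tool and humans agree


def screening_metrics(predicted: list[bool], gold: list[bool]) -> ScreeningMetrics:
    """Score tool decisions against human-curated decisions (True = include)."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    pairs = list(zip(predicted, gold))
    tp = sum(1 for p, g in pairs if p and g)
    tn = sum(1 for p, g in pairs if not p and not g)
    fp = sum(1 for p, g in pairs if p and not g)
    fn = sum(1 for p, g in pairs if not p and g)
    # Return NaN instead of dividing by zero when a class is absent.
    sensitivity = tp / (tp + fn) if tp + fn else math.nan
    specificity = tn / (tn + fp) if tn + fp else math.nan
    accuracy = (tp + tn) / len(pairs) if pairs else math.nan
    return ScreeningMetrics(sensitivity, specificity, accuracy)


# Toy decisions for six abstracts (hypothetical, for illustration only).
llm_calls = [True, True, False, False, True, False]
human_calls = [True, False, False, False, True, True]
print(screening_metrics(llm_calls, human_calls))
```

In this toy example the tool has 2 true positives, 2 true negatives, 1 false positive, and 1 false negative, so all three metrics equal 2/3.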
Introduction

A systematic review is a rigorous form of research that collates and synthesizes all existing evidence on a specific research question [1]. It is a cornerstone not only of medical research but of many academic disciplines. Unlike traditional literature reviews, systematic reviews follow a comprehensive, standardized process, such as the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guideline [2], which is designed to minimize bias and ensure reproducibility. These reviews are therefore recognized as one of the highest levels of evidence in evidence-based research [3]. They also play a pivotal role in shaping clinical guidelines and healthcare policies and in informing medical decisions [4].

A typical systematic review starts from a well-articulated research question and runs an exhaustive search of databases such as PubMed and Embase. This search is supplemented by additional sources such as clinical trial registries and the reference lists of pertinent articles, with the aim of capturing all relevant studies and mitigating bias. Predetermined inclusion and exclusion criteria, covering factors such as study design, patient demographics, and intervention type, guide the subsequent screening and selection of studies. Reviewers working independently appraise each study’s eligibility and reconcile disagreements through discussion or a third-party review. Data extraction and synthesis follow, through either meta-analysis or narrative synthesis, depending on the heterogeneity of the selected studies.

Systematic reviews have become substantially more popular, and demand for them within the academic community is considerable. One notable study [5] found that approximately 75 trials and potentially 11 systematic reviews were published daily, based on data from around 2010.
Moreover, a query for “systematic review” in Google Scholar yields approximately 17,000 entries published in 2023 alone, which translates into about 51 systematic reviews per day, as observed on November 26, 2023. This expanding volume of literature underscores the critical role that systematic reviews play in consolidating research findings across fields.

Despite their pivotal role, systematic reviews remain formidable to execute, because abstract screening is a key phase that can be overwhelmingly time-consuming given the volume of records. For example, Polanin et al. [6] reported that research staff screened 29,846 abstracts independently (14,923 unique citations were double screened) over the course of 189 days. In addition, variability in reporting standards, the use of jargon, and diverse study designs can further complicate abstract screening [7]. Moreover, the repetitive nature of the task, combined with cognitive fatigue, can lead to human errors [8, 9].

Recent advances in machine learning (ML) and deep learning offer possible solutions to these challenges. However, traditional ML models, while promising, require domain-specific training, a time-consuming process that often demands manual labeling of datasets [10]. Advances in natural language processing (NLP) and artificial intelligence (AI) are opening new ways to address these challenges. Large language models (LLMs) such as ChatGPT [11], PaLM [12], Llama [13], and Claude [14] are capturing the research community’s attention. Their collective potential, especially their ability to operate without exhaustive domain-specific training, makes them prime candidates for revolutionizing the systematic review process.
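The per-day figures above are simple rates. The following sketch reproduces the arithmetic. It assumes that the 2023 Google Scholar count covers January 1 through November 26, 2023, inclusive. The per-day rate for Polanin et al. is a derived figure for illustration and is not reported in that study.

```python
from datetime import date

# Figures quoted in the text: ~17,000 Google Scholar hits for "systematic review"
# published in 2023, counted on November 26, 2023.
hits_2023 = 17_000
days_elapsed = (date(2023, 11, 26) - date(2023, 1, 1)).days + 1  # inclusive count
reviews_per_day = hits_2023 / days_elapsed

# Polanin et al.: 14,923 unique citations, each screened twice, over 189 days.
unique_citations = 14_923
screenings = 2 * unique_citations  # double screening
screenings_per_day = screenings / 189

print(f"{days_elapsed} days -> {reviews_per_day:.1f} reviews per day")
print(f"{screenings} screenings -> {screenings_per_day:.0f} screenings per day")
```

This gives 330 days and roughly 51.5 reviews per day, consistent with the text’s “about 51.” It also confirms that 2 × 14,923 = 29,846 screenings, or roughly 158 per day.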
While each of the aforementioned AI tools brings unique capabilities, the fundamental question remains: how do they compare, individually and collectively, with the human expert-based process of abstract screening? To answer this question, this research investigates the potential of ChatGPT, Google PaLM, Llama, and Claude to automate abstract screening, a crucial phase of systematic reviews. Our goal is to rigorously compare the performance of these AI-driven methods with existing ML-based approaches. In doing so, we aim to develop AI strategies that balance efficiency and accuracy with minimal human intervention, ultimately transforming systematic review practice across disciplines.

The use of NLP for abstract screening is well established [10, 15]. However, the application of LLMs specifically to this task is a nascent field [16, 17] with significant potential to improve efficiency and accuracy. Our study aims to fill this gap with a comprehensive analysis of LLM capabilities in abstract screening, laying the groundwork for future research and application. This is particularly relevant given how rapidly the technology is evolving and its potential to streamline systematic reviews now and in the future.

The remainder of this paper is structured as follows. We begin with an in-depth examination of existing methods for abstract screening, including both manual and NLP-based approaches, to establish the current state of the field. We then introduce the use of LLM tools for abstract screening and describe our experimental design for evaluating their performance.
Subsequent sections present our empirical findings, shedding light on the capabilities and limitations of the AI tools in question. Finally, we discuss the implications of our findings and the future trajectory of abstract screening in systematic reviews.

Existing approaches to abstract screening in systematic reviews

In systematic reviews, abstract screening is a foundational step in curating the highest-quality evidence [2]. However, this process often presents significant challenges because it involves sifting through large volumes of literature to identify studies that meet predefined criteria. Over time, various methodologies, ranging from manual evaluation to sophisticated AI-driven techniques, have been proposed to address the complexity of this task. In this section, we describe these approaches, how they operate, and their advantages and disadvantages.

Manual approach

Historically, abstract screening was firmly rooted in manual evaluation. In this conventional approach, individual reviewers scrutinize each abstract against predefined criteria [1]. To ensure reliability and reduce bias, multiple experts evaluate the literature independently [8]. While human expertise brings nuanced understanding, the manual nature of this method makes it time-consuming and, at times, prone to human error [6, 9].

NLP-based approach

As technology evolved, the field incorporated natural language processing (NLP) to automate abstract screening [10]. In this framework, abstract text undergoes preprocessing and vectorization.
Supervised machine learning models, notably the support vector machine (SVM) and the random forest (RF), are then trained on this vectorized data to classify literature according to specific criteria [15]. The strength of this approach lies in its potential for efficiency. However, its efficacy and accuracy hinge heavily on the availability of a well-curated, labeled training set.

Zero-shot classification

A more recent approach is zero-shot classification, notably highlighted by Xian et al. [18]. Because it does not need an extensive labeled training dataset, zero-shot classification can categorize abstracts without prior domain-specific training. By calculating a probability score for each abstract, researchers obtain a continuous measure of its alignment with predefined categories. Although no model training is required, the tradeoff is a loss of sensitivity and the potential omission of pertinent studies [19]. In this study, for a given set of abstracts, we first obtain embeddings (using OpenAI’s text embedding API, the babbage model) for the abstracts and for a pre-specified description of an ideal study to include. Specifically, we use the inclusion/exclusion criteria (see the “Examples of abstract screening by using LLMs,” “Automated workflow for streamlining abstract screening via ChatGPT and other tools,” and “Study design” sections) as the description of an ideal study. We then compute the cosine similarity between each abstract’s embedding and the embedding of the pre-specified description. We label the top 10% of abstracts by similarity as positive (i.e., studies to include).

Hybrid approach

To reconcile the strengths and limitations of these models, a hybrid methodology has emerged [18]. It combines the rapid categorization of zero-shot classification with the precision of traditional machine learning models such as SVM and RF.
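The zero-shot step described above also supplies the initial labels for the hybrid approach. It reduces to ranking abstracts by cosine similarity to the criteria text and flagging the top 10%. The following sketch assumes that the embeddings have already been computed. The study used OpenAI’s babbage embedding model, but any embedding model could stand in here. The tiny hand-made vectors are purely illustrative, and the function name `zero_shot_screen` is hypothetical. The authors’ own zero-shot and hybrid code is written in R and is available in the GitHub repository cited in the text.

```python
import numpy as np


def zero_shot_screen(abstract_embs: np.ndarray, criteria_emb: np.ndarray,
                     top_frac: float = 0.10) -> tuple[np.ndarray, np.ndarray]:
    """Label the top `top_frac` of abstracts by cosine similarity as 'include'."""
    # Normalize so that a dot product equals cosine similarity.
    a = abstract_embs / np.linalg.norm(abstract_embs, axis=1, keepdims=True)
    c = criteria_emb / np.linalg.norm(criteria_emb)
    scores = a @ c
    # Number of abstracts to flag as positive (at least one).
    n_pos = max(1, int(round(top_frac * len(scores))))
    top_idx = np.argsort(scores)[::-1][:n_pos]  # indices of the highest scores
    labels = np.zeros(len(scores), dtype=bool)
    labels[top_idx] = True
    return scores, labels


# Hypothetical 3-dimensional "embeddings" for 20 abstracts.
abstracts = np.tile([0.0, 1.0, 0.0], (20, 1))
abstracts[[3, 7]] = [1.0, 0.1, 0.0]  # two abstracts close to the criteria
criteria = np.array([1.0, 0.0, 0.0])

scores, labels = zero_shot_screen(abstracts, criteria)
print(np.flatnonzero(labels))
```

With 20 abstracts, the top 10% is two abstracts, so only the two vectors aligned with the criteria (indices 3 and 7) are flagged. In the hybrid approach, these flagged abstracts would then be reviewed manually before a classifier is trained on their corrected labels.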
Here, zero-shot classification provides an initial curation of all abstracts in the training set. Abstracts whose classification score exceeds a pre-specified threshold then undergo manual review to correct the zero-shot labels. The corrected labels serve as the foundation for training traditional models, which are then used to screen the broader dataset (e.g., the testing set). The hybrid approach balances the speed of zero-shot classification with the precision of traditional ML and potentially offers better accuracy with less human effort. However, it combines multiple methodologies and still relies on well-curated, labeled training data (in this case, a subset of the whole training set). In this study, for a given set of abstracts, we manually correct the “positive” abstracts (i.e., the top 10%) identified by zero-shot classification. We then fit an SVM classification model to the text embeddings of those abstracts, using their corrected labels. This learned model is then used to predict the class of the remaining abstracts. We added the R code for the zero-shot and hybrid approaches to the GitHub repository https://github.com/mikeli380/LLMAbstractScreening.

Active learning approach

Active learning [20, 21, 22] is a machine learning approach that optimizes training by letting the model selectively query a human annotator for labels on the most informative data points. It is particularly advantageous when labeled data are scarce or expensive to obtain. Active learning models can start with minimal datasets, often requiring only one relevant and one irrelevant abstract. This makes them well suited to tasks such as abstract screening, where acquiring large labeled datasets can be prohibitive. The main advantage of active learning is its efficiency.
By focusing on the most informative samples, it reduces the amount of data that needs to be labeled while still training the model effectively, which can significantly cut the time and resources required for annotation. However, the effectiveness of active learning depends heavily on the initial selection of samples and on the criteria used to judge the informativeness of subsequent data points. If these are poorly calibrated, the model may request labels for data that do not significantly improve its performance, wasting resources. In addition, the iterative cycle of querying and updating the model with new labels can complicate training, potentially requiring more sophisticated infrastructure and oversight than traditional supervised learning. This study did not test active learning approaches for abstract screening. Readers interested in this methodology are referred to [23] for detailed information on its application to abstract screening.

Amid the evolving methodologies described in the “Existing approaches to abstract screening in systematic reviews” section, modern AI tools based on large language models (LLMs), such as ChatGPT, PaLM, Llama, and Claude, are emerging as potential game-changers. Grounded in advanced language processing capabilities, these tools can be tailored to evaluate abstracts against nuanced criteria and to offer detailed assessments and classifications, which signals transformative potential for abstract screening. In this section, we first present two examples that illustrate the potential of LLMs for abstract screening. We then proceed to an in-depth discussion of the workflow and design of our study, which aims to critically investigate the performance of LLMs in this domain.
Examples of abstract screening by using LLMs

In this subsection, we demonstrate LLM-based abstract screening using ChatGPT on two abstracts: (1) Millard et al. [24] and (2) Zawia et al. [25]. Using other LLM tools such as Llama, Google PaLM, or Claude for abstract screening is similar and is omitted for brevity.

In constructing the prompts, we adopted a standardized approach that mimics a typical interaction between a senior researcher and a research assistant. Each prompt includes three critical elements: a brief statement of the topic under review, the exact eligibility criteria as specified in the study guidelines, and the text of the abstract to be reviewed. This approach simulates a realistic, practical scenario that reflects common academic practice. In addition, to ensure consistent and objective responses, we required the LLM to answer in a binary “yes” or “no” format, directly indicating whether each abstract met the inclusion criteria. This format was chosen to eliminate ambiguity in the model’s decisions and to facilitate straightforward analysis of its accuracy.

Abstract (1)

ChatGPT prompt: “I would like you to help me with conducting a systematic review on animal depression. I will provide the title and abstract for one journal article and would like you to screen the paper for inclusion. Here are the inclusion criteria: Does the study provide primary data of an animal model of depression or depressive-like phenotype with an appropriate control group? Here is the abstract: Wistar-Kyoto (WKY) rats show endogenous depressive behavior that can be reversed by antidepressants. Given that WKYs exhibit decreased sensitivity to some antidepressants and treatment-resistant depressed patients often show hypothalamic-pituitary-thyroid (HPT) dysregulation, we examined the behavioral and HPT hormonal responses of WKYs to altered thyroid status.
“Euthyroid” WKYs had elevated basal plasma TSH and T(3) levels as compared to Wistars. Hypothyroidism increased TSH levels more in WKYs than in Wistars and increased response latency in the open field test (OFT) of WKYs only. Administration of T(4) and T(3) suppressed plasma TSH equally in both strains. Wistars responded to increased T(3) levels with decreased response latency and increased activity in the OFT, but increased immobility in the forced swim test. In contrast, WKYs responded only to the high T(3) levels with decreased response latency in the OFT. These results suggest the existence of a decreased central nervous system sensitivity to thyroid hormones in WKYs that could be related to their depressive behavior.”

ChatGPT v4 response (accessed on 10/15/2023):

Given the abstract and the inclusion criteria, this study appears to meet the inclusion criteria for your systematic review on animal models of depression.

Consideration for inclusion:

1. Animal model and depressive-like phenotype:
   - The study utilizes Wistar-Kyoto (WKY) rats which are mentioned to show endogenous depressive behavior.
   - This study investigates behaviors and hormonal responses associated with depression in this particular animal model, with a focus on the role of thyroid hormones.
2. Primary data and appropriate control group:
   - The study provides primary data on behavioral and hormonal responses of WKY rats, comparing them to Wistars (likely serving as the control group), under altered thyroid status, allowing for comparisons between the depressive-like phenotype and controls.
   - The utilization of both “Euthyroid” WKYs and Wistars as controls helps in effectively investigating the behavioral changes and responses in WKY rats due to alterations in thyroid status.
3. Behavioral test indicating depression:
   - Depressive behavior and responses are assessed using standardized tests like the open field test (OFT) and the forced swim test, which are conventional methods to investigate depressive-like behaviors in animal models.
   - The mention of antidepressant’s impact on WKY rats indicates a focus on depressive-like states.
4. Relevance to human condition:
   - Insights gained from this study on decreased central nervous system sensitivity to thyroid hormones and its possible relation to depressive behavior in WKYs can have implications in understanding treatment-resistant depression in humans, making it valuable for the review.

Conclusion: Given the provided abstract, this study does seem to fit the inclusion criteria for a systematic review on animal depression, as it provides primary data on an animal model demonstrating depressive-like behavior, includes an appropriate control group, and employs standardized tests to study the depressive-like states and their relation to thyroid hormones. The detailed exploration of depressive behaviors and hormonal imbalances in relation to altered thyroid status can offer valuable insights for understanding and treating depression.

Abstract (2)

“The induction of the activity of cerebral ornithine decarboxylase (EC 4.1.1.17) and mRNA by electrical stimulation exhibits regional differences. The effects of the enzyme inhibitor difluoromethylornithine on these regional variations was examined. Administration of this inhibitor resulted in pronounced depression of both basal and induced activity of ornithine decarboxylase in the hippocampus. Basal activity of the enzyme in the neocortex and the cerebellum appeared to be resistant to difluoromethylornithine but the induced enzyme activity was sensitive to the effects of this inhibitor. Susceptibility to difluoromethylornithine may be directly correlated with a slower turnover rate for ornithine decarboxylase. These results suggest that ornithine decarboxylase in the hippocampus may possess a longer half-life than its counterparts in other regions of the brain. Pretreatment with difluoromethylornithine had no effect on the induced ornithine decarboxylase mRNA in the neocortex. Thus, elevated activity of ornithine decarboxylase enzyme, due to electrical stimulation, appears to not have any effect on either the transcription or the decay rate of the induced ornithine decarboxylase mRNA. These findings support the concept of region-specific regulation of cerebral ornithine decarboxylase.”

Given the provided abstract and the inclusion criteria, this study does not appear to meet the criteria for inclusion in a systematic review on animal models of depression or depressive-like phenotypes with an appropriate control group.

Consideration for inclusion:

1. Depression or depressive-like phenotype:
   - The abstract does not provide any information or data related to depression or depressive-like behavior or phenotypes in animals. It is mainly focused on the activity of cerebral ornithine decarboxylase and its regional differences in response to electrical stimulation and inhibitor administration.
2. Animal model:
   - There is no mention of the use of any specific animal model or any behavioral assays to assess depressive-like symptoms or states in the provided abstract.
3. Behavioral assessment and primary data:
   - The abstract does not contain any details about behavioral assessments related to depression or depressive-like states. It primarily provides data on the effects of enzyme inhibitor difluoromethylornithine on cerebral ornithine decarboxylase activity and mRNA, but does not relate these findings to depression or depressive-like behaviors.
4. Relevance to depression:
   - While the study explores biochemical aspects in brain regions like the hippocampus, which is implicated in mood disorders like depression, the connection between the findings and depressive symptoms or states is not established in the provided abstract.

Conclusion: This article does not seem to fit the inclusion criteria for a systematic review on animal models of depression or depressive-like phenotypes as it lacks primary data on depressive-like behaviors or phenotypes in animals, and there is no mention of the use of animal models representative of depression or an appropriate control group for such a comparison.

The two examples above show that ChatGPT adeptly comprehends the queries posed and efficiently extracts the inclusion and exclusion criteria. It not only makes clear decisions regarding study inclusion but also articulates its rationale by cross-referencing each criterion. The same prompt may produce slightly different responses for different users because of variability in ChatGPT's response generation, but the eligibility conclusion for a given abstract is typically consistent. In addition, making the criteria in the prompt more specific can further refine the screening process. To illustrate, consider the following enhanced prompts:

Enhanced ChatGPT prompt for abstract (1): “I seek assistance with a systematic review focused on animal depression. I’ll provide the abstract of a particular journal article and would appreciate an assessment for its inclusion based on the following criteria: The study employs an animal model reflecting depression or a depressive-like phenotype. It incorporates an appropriate control group. Primary data is presented within the study. and here are the exclusion criteria: The study bypasses the use of an animal model. The utilized animal model doesn’t characterize depression or a depressive-like phenotype. Absence of a control group within the study. The study is primarily a review, devoid of primary data. Here is the abstract: …”

Let’s assess the provided abstract based on the inclusion and exclusion criteria:

Inclusion criteria:
1. Animal model reflecting depression: The study uses Wistar-Kyoto (WKY) rats, which are described as showing “endogenous depressive behavior.” Thus, this criterion is met.
2. Appropriate control group: The study compares WKY rats to Wistars (another strain), which serves as a comparison or control group. This criterion is met.
3. Primary data: The abstract mentions specific results such as the elevated basal plasma TSH and T(3) levels in “Euthyroid” WKYs compared to Wistars and the different responses in the open field test (OFT). This suggests the presence of primary data. Thus, this criterion is met.

Exclusion criteria:
1. Bypasses the use of an animal model: The study uses WKY rats as an animal model. Thus, this criterion is not met, which is good for inclusion.
2. Animal model doesn’t characterize depression: The WKY rats show “endogenous depressive behavior.” Thus, this criterion is not met, which again supports inclusion.
3. Absence of a control group: The study does use a control group (Wistars). Thus, this criterion is not met, supporting inclusion.
4. Study is primarily a review: The abstract presents experimental data and does not indicate that the study is a review. Thus, this criterion is not met, which is favorable for inclusion.

Conclusion: Given that the abstract meets all the inclusion criteria and none of the exclusion criteria, it should be considered in your systematic review on animal depression.
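The screening logic in the enhanced response reduces to a simple rule: an abstract is included only if it meets every inclusion criterion and none of the exclusion criteria. The following is a minimal Python sketch of that rule, together with a helper that assembles an enhanced prompt from lists of criteria. `build_prompt` and `decide` are hypothetical names, and the numbered layout of the criteria is an assumption rather than the exact prompt text used here. The sketch does not call ChatGPT or any API. It assumes that the per-criterion yes/no judgments have already been read off the model's response, either by a reviewer or by a separate parser that is not shown.

```python
# Criteria adapted from the enhanced prompt for abstract (1).
INCLUSION = [
    "The study employs an animal model reflecting depression or a depressive-like phenotype.",
    "It incorporates an appropriate control group.",
    "Primary data is presented within the study.",
]
EXCLUSION = [
    "The study bypasses the use of an animal model.",
    "The utilized animal model doesn't characterize depression or a depressive-like phenotype.",
    "Absence of a control group within the study.",
    "The study is primarily a review, devoid of primary data.",
]


def build_prompt(abstract: str, inclusion: list[str], exclusion: list[str]) -> str:
    """Hypothetical helper: assemble a screening prompt with numbered criteria."""
    lines = [
        "I seek assistance with a systematic review focused on animal depression. "
        "I'll provide the abstract of a particular journal article and would appreciate "
        "an assessment for its inclusion based on the following criteria:"
    ]
    lines += [f"{i}. {c}" for i, c in enumerate(inclusion, start=1)]
    lines.append("Here are the exclusion criteria:")
    lines += [f"{i}. {c}" for i, c in enumerate(exclusion, start=1)]
    lines.append(f"Here is the abstract: {abstract}")
    return "\n".join(lines)


def decide(inclusion_met: list[bool], exclusion_met: list[bool]) -> str:
    """Hypothetical helper: include only if all inclusion and no exclusion criteria hold."""
    if all(inclusion_met) and not any(exclusion_met):
        return "include"
    return "exclude"


if __name__ == "__main__":
    # Judgments as reported in the response for abstract (1):
    # all three inclusion criteria met, none of the four exclusion criteria met.
    print(decide([True, True, True], [False, False, False, False]))  # → include
```

Because a single failed inclusion criterion or a single met exclusion criterion changes the result to "exclude", the per-criterion breakdown in the response is what makes each decision auditable.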