- Open access

- Published: 08 November 2016

A systematic literature review of open source software quality assessment models

- Adewole Adewumi¹,

- Sanjay Misra¹,² (ORCID: orcid.org/0000-0002-3556-9331),

- Nicholas Omoregbe¹,

- Broderick Crawford³ &

- Ricardo Soto³

SpringerPlus volume 5, Article number: 1936 (2016)

Many open source software (OSS) quality assessment models have been proposed and are available in the literature. However, there is little or no adoption of these models in practice. To guide the formulation of newer models that practitioners will find acceptable, the existing models need to be clearly discriminated by their specific properties. Based on this, the aim of this study is to perform a systematic literature review to investigate the properties of the existing OSS quality assessment models by classifying them with respect to their quality characteristics, the methodology they use for assessment, and their domain of application, so as to guide the formulation and development of newer models. Searches in IEEE Xplore, ACM, Science Direct, Springer and Google Search were performed to retrieve all relevant primary studies. Journal and conference papers published between 2003 and 2015 were considered, since the first known OSS quality model emerged in 2003.

A total of 19 OSS quality assessment model papers were selected. To select these models, we developed assessment criteria to evaluate the quality of the existing studies. The quality assessment models are classified into five categories based on the quality characteristics they possess: single-attribute, rounded category, community-only attribute, non-community attribute and non-quality in use models. Our study shows that selection based on hierarchical structures is the most popular selection method in the existing OSS quality assessment models. Furthermore, we found that nearly half (47%) of the existing models do not specify any domain of application.

Conclusions

In conclusion, our study should be a valuable contribution to the community: it can help quality assessment model developers formulate newer models and help practitioners (software evaluators) select suitable OSS from among alternatives.

Background

Before OSS quality models emerged, the McCall, Dromey and ISO 9126 models were already in existence (Miguel et al. 2014). These models, however, did not consider some quality attributes unique to OSS, such as community: a body of users and developers formed around an OSS project who contribute to the software and popularize it (Haaland et al. 2010). This gap led to the evolution of OSS quality models. The majority of the OSS quality models that exist today are derived from the ISO 9126 quality model (Miguel et al. 2014; Adewumi et al. 2013), which defines six internal and external quality characteristics: functionality, reliability, usability, efficiency, maintainability and portability. ISO 25010 replaced ISO 9126 in 2011 (ISO/IEC 9126 2001); it has the following product quality attributes (ISO/IEC 25010 2001): functional suitability, reliability, performance efficiency, operability, security, compatibility, maintainability and transferability. The ISO 25010 quality in use attributes include effectiveness, efficiency, satisfaction, safety and usability.

It is important to note that ISO 25010 can serve as a standard for OSS only in terms of product quality and quality in use. It does not address characteristics unique to OSS, such as the community. A key distinguishing feature of OSS is that it is built and maintained by a community (Haaland et al. 2010), and the quality of this community also determines the quality of the OSS (Samoladas et al. 2008). In the literature, community-related quality characteristics include maintenance capacity, sustainability and process maturity (Soto and Ciolkowski 2009). Maintenance capacity refers to the number of contributors to an OSS project and the amount of time they are willing and able to contribute to the development effort, as observed from versioning logs, mailing lists, discussion forums and bug report systems. Sustainability refers to the ability of the community to grow in terms of new contributors and to regenerate by attracting and engaging new members to take the place of those leaving the community. Process maturity refers to the adoption and use of standard practices in the development process, such as submission and peer review of changes, provision of a test suite and planned releases.

Since the first OSS quality model appeared in 2003 (Adewumi et al. 2013), a number of other models have been derived, leading to a growing collection of OSS quality models. Quality models in general can be classified into three broad categories: definition, assessment and prediction models (Ouhbi et al. 2014, 2015; Deissenboeck et al. 2009). OSS quality assessment models generally outline specific attributes that guide the selection of OSS. They are significant because they can help software evaluators select suitable OSS from among alternatives (Kuwata et al. 2014). However, despite the numerous quality assessment models proposed, there is still little or no adoption of these models in practice (Hauge et al. 2009; Ali Babar 2010). To guide the formulation of newer models, there is a need to understand the nature of the existing OSS quality assessment models. The aim of this study is therefore to investigate the nature of the existing OSS quality assessment models by classifying them with respect to their quality characteristics, the methodology they use for assessment, and their domain of application, so as to guide the formulation and development of newer models. Existing studies on OSS quality assessment models (Miguel et al. 2014; Adewumi et al. 2013) are largely descriptive reviews that did not seek to classify OSS quality assessment models along specific dimensions or to answer specific research questions. In contrast, this paper employs a methodical, structured and rigorous analysis of the existing literature in order to classify existing OSS quality assessment models and to establish a template guide for model developers when they come up with new models. This study is thus a systematic literature review that investigates three research questions: (1) What are the key quality characteristics possessed by the OSS assessment models? (2) What selection methods are employed in these assessment models? (3) What is their domain of application? To conduct this systematic review, the original guidelines proposed by Kitchenham (2004) have been followed.

The rest of this paper is structured as follows. The “Methods” section describes how the existing OSS quality models were obtained. The “Results” section presents the results of the study, while the “Summary and discussion” section discusses its findings. The “Conclusion and future work” section concludes the paper with a brief note.

Methods

This section outlines the research questions posed in this study and explains the rationale behind each question. It then discusses the search strategy for retrieving the relevant papers, the criteria for including a given paper in the study, the quality assessment of the retrieved papers, and how relevant information was extracted from each selected paper.

Research questions

This study aims to gain insight into the existing OSS quality models and addresses three research questions. The three research questions, alongside the rationale motivating each, are presented in Table 1. These form the basis for defining the search strategy.

Search strategy

A search string was defined based on keywords derived from the research questions as follows: “(Open Source Software OR libre OR OSS or FLOSS or FOSS) AND (model OR quality model OR measurement model OR evaluation model)”.

To retrieve the primary studies containing OSS quality models, we used the Scopus digital library, which indexes several renowned scientific journals, books and conference proceedings (e.g. IEEE, ACM, Science Direct and Springer). We considered only papers from 2003 to 2015, since the first OSS quality model emerged in 2003 (Haaland et al. 2010; Adewumi et al. 2013). We also focused on journal papers and conference proceedings in the subject area of Computer Science that were written in English. A total of 3198 primary studies were initially retrieved. After screening their titles and abstracts, the number was reduced to 209. To make sure that no paper had been left out, we also performed a search in IEEE Xplore, ACM and Springer using the same search string; this search retrieved no papers that had not already been found in Scopus. Furthermore, a search was performed using Google Search, and two relevant articles were retrieved (Duijnhouwer and Widdows 2003; Atos 2006) and added, making a total of 211 retrieved papers. These papers were read in detail to determine their suitability for inclusion.

Inclusion criteria

Papers proposing cost models and conceptual models were removed, as were position papers and papers that did not present a model for assessing OSS quality to guide selection among alternatives. A crosscheck was conducted through the reference lists of candidate studies to ensure that no model had been left out. As a result, 19 primary studies were selected; they are discussed further in the rest of this section.
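The screening arithmetic reported above can be checked with a short Python sketch. The constants are the counts stated in this section; the constant and function names are hypothetical, and the sketch does not model the reference-list crosscheck, which the text does not quantify.

```python
# Counts reported in the search and inclusion steps; the names are illustrative.
SCOPUS_HITS = 3198        # initial Scopus results for the search string
AFTER_SCREENING = 209     # kept after reading titles and abstracts
GOOGLE_ADDITIONS = 2      # Duijnhouwer and Widdows 2003; Atos 2006
SELECTED = 19             # primary studies retained after full-text reading


def full_text_pool(after_screening: int, additions: int) -> int:
    """Papers read in full: Scopus survivors plus the extra Google Search finds."""
    return after_screening + additions


pool = full_text_pool(AFTER_SCREENING, GOOGLE_ADDITIONS)
yield_rate = SELECTED / SCOPUS_HITS  # share of initial hits that became primary studies
print(pool, f"{yield_rate:.2%}")  # 211 0.59%
```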

Quality assessment

Each primary study was evaluated using the criteria defined in Adewumi et al. (2013). The criteria are based on four quality assessment (QA) questions:

Are the model’s attributes derived from a known standard (this can be ISO 9126, ISO 25010 or CMMI)?

Is the evaluation procedure of the model adequately described?

Does a tool support the evaluation process?

Is a demonstration of quality assessment using the model provided?

The questions were scored as follows:

Y (yes), most of the model’s attributes are derived from a known standard; P (partly), only a few of the model’s attributes are derived from a known standard; N (no), none of the model’s attributes are derived from a known standard.

Y, the evaluation procedure of the model is adequately described; P, the evaluation procedure is described inadequately; N, the evaluation procedure of the model is not described at all.

Y, the evaluation process is fully supported by a tool; P, the evaluation process is partially supported by a tool; N, no tool support is provided for the evaluation process.

Y, a complete demonstration of quality assessment using the model is provided; P, only a partial demonstration of quality assessment using the model is provided; N, no demonstration of quality assessment using the model is provided.

Scores were assigned as Y = 1, P = 0.5 and N = 0, so each study could score between 0 and 4. The first author coordinated the quality evaluation process and assessed every paper; he also assigned 5 papers each to the second, third and fourth authors and 4 papers to the fifth author so that they could assess them independently. When there was a disagreement, we discussed the issues until we reached agreement.
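The scoring scheme can be sketched as a small Python function. It is an illustration under stated assumptions: the answers for a study are given in the order of the four QA questions above, `qa_score` and `allocation` are hypothetical names, and the example answers are invented rather than taken from Table 4.

```python
from typing import Sequence

# Y/P/N scale used by the QA criteria: Y = 1, P = 0.5, N = 0.
SCALE = {"Y": 1.0, "P": 0.5, "N": 0.0}


def qa_score(answers: Sequence[str]) -> float:
    """Total QA score over the four questions (standard, procedure, tool, demonstration)."""
    if len(answers) != 4:
        raise ValueError("expected exactly one answer per QA question (4 in total)")
    return sum(SCALE[answer.upper()] for answer in answers)


# Hypothetical study: attributes from a standard (Y), procedure partly described (P),
# no tool support (N), complete demonstration (Y).
print(qa_score(["Y", "P", "N", "Y"]))  # 2.5

# Papers assigned for independent assessment, as described above (hypothetical mapping).
allocation = {"second author": 5, "third author": 5, "fourth author": 5, "fifth author": 4}
print(sum(allocation.values()))  # 19
```

Because every answer contributes 0, 0.5 or 1, totals move in half-point steps from 0 to 4, which is the scale used when the scores are reported.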

Data extraction strategy

In this phase, the first author extracted the data while the other four authors checked the extraction. Although this approach is inconsistent with the medical standards summarized in Kitchenham’s guidelines (2004), it has been found useful in practice (Brereton et al. 2007). The first author coordinated the data extraction and checking tasks, which involved all of the authors of this paper. Allocation was not randomized; rather, it was based on the time availability of the individual researchers. When there was a disagreement, we discussed the issues until we reached agreement.

The selected studies were examined to collect the data needed to answer the research questions. Table 2 shows the data extraction form, which was created as an Excel sheet and filled in by the first author for each selected paper.

From Table 2 it can be observed that the information extracted includes: the Study Ref., title, and classification [publication outlet, publication year and research questions (RQ) 1, 2 and 3].

The quality characteristics that the models in the selected studies can possess are the ISO 25010 product quality and quality in use characteristics, namely: functional suitability, reliability, performance efficiency, operability, security, compatibility, maintainability, transferability, effectiveness, efficiency, satisfaction, safety and usability. We also include the community-related quality characteristics described in the literature (Soto and Ciolkowski 2009): maintenance capacity, sustainability and process maturity.

The methods used by assessment models for selection can be classified as follows (Petersen et al. 2008; Wen et al. 2012):

Data mining: techniques such as Artificial Neural Networks, Case-Based Reasoning, Data Envelopment Analysis (DEA) and Fuzzy Logic

Process: A series of actions or functions that operate on data and lead to a selection result

Tool-based technique: A technique that relies heavily on software tools to accomplish the selection task

Model: A system representation that allows for selection based on investigation through a hierarchical structure

Framework: A real or conceptual structure intended to serve as support or guide for selection process

Other, e.g. guidelines

The domain of application can be classified as follows (Forward and Lethbridge 2008):

Data dominant software—i.e. consumer-oriented software, business-oriented software, design and engineering software as well as information display and transaction entry

Systems software—i.e. operating systems, networking/communications, device/peripheral drivers, support utilities, middleware and system components, software backplanes (e.g. Eclipse), servers and malware

Control-dominant software—i.e. hardware control, embedded software, real time control software, process control software (e.g. air traffic control, industrial process, nuclear plants)

Computation-dominant software—i.e. operations research, information management and manipulation, artistic creativity, scientific software and artificial intelligence

No domain specified

Synthesis method

The synthesis method was based on:

Counting the number of papers per publication outlet and the number of papers found on a year-wise basis,

Counting the primary studies that are classified in response to each research question,

Presenting charts and frequency tables for the classification results which have been used in the analysis,

Presenting in the discussion a narrative summary with which to recount the key findings of this study.

Results

This section presents the results obtained in response to the research questions posed in this study. Table 3 summarizes the OSS quality assessment models used in this study, their sources and their year of publication. The first column of the table (Study Ref.) gives the reference number of each quality assessment model in ascending order. The table shows that 2009 has the largest number of published papers, with three publications in total. The years 2003, 2004, 2005 and 2012 have the lowest number of publications, with one paper each. The remaining years (2007, 2008, 2011, 2013, 2014 and 2015) have two published papers each.
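As a quick consistency check on the year-wise counts (the first item of the synthesis method), the Python sketch below tallies them with `collections.Counter`. The counts are those stated in the paragraph above; years with no papers are simply absent from the mapping, and the variable names are illustrative.

```python
from collections import Counter

# Papers per publication year as reported for Table 3 (years with no papers omitted).
papers_per_year = Counter({
    2003: 1, 2004: 1, 2005: 1, 2007: 2, 2008: 2, 2009: 3,
    2011: 2, 2012: 1, 2013: 2, 2014: 2, 2015: 2,
})

total = sum(papers_per_year.values())                     # should equal the 19 selected studies
peak_year, peak_count = papers_per_year.most_common(1)[0]  # year with the most papers
print(total, peak_year, peak_count)  # 19 2009 3
```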

The studies were assessed for quality using the criteria described in the “Quality assessment” section, and the score for each study is shown in Table 4. All studies scored above 1 on the quality assessment scale, and only one study scored less than 2 (i.e. 1.5, given the half-point steps of the scale). One study scored 4, five studies scored 3.5, five scored 3, five scored 2.5 and two scored 2.
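The reported score distribution can likewise be checked in Python. The counts come from the paragraph above; the single score of 1.5 is an inference (one study scored between 1 and 2, and the scale moves in half-point steps), and the mean is a derived figure that the study itself does not report.

```python
# Score -> number of studies, from the Table 4 summary in the text.
# The 1.5 entry is inferred: one study scored above 1 but below 2.
distribution = {4.0: 1, 3.5: 5, 3.0: 5, 2.5: 5, 2.0: 2, 1.5: 1}

n_studies = sum(distribution.values())
mean_score = sum(score * count for score, count in distribution.items()) / n_studies
print(n_studies, round(mean_score, 2))
```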

Table 5 summarizes the responses to the research questions from each of the selected articles. The table shows that an assessment model can belong to more than one category for RQ1; for example, the assessment model in Study Ref. 8 is a single-attribute model, a non-community attribute model and a non-quality in use model.

RQ1. What are the key quality characteristics possessed by the models?

To address RQ1, we compared each identified model against ISO 25010 as well as the community-related quality characteristics described in the “Background” section. Based on this comparison, which is presented in Table 6, we classify the quality assessment models into five categories, discussed as follows:

Single-attribute models: models that measure only one quality characteristic. The Qualification and Selection of Open Source software (QSOS) model (Atos 2006; Deprez and Alexandre 2008), the Mathieu and Wray model (2007), the Sudhaman and Thangavel model (2015) and the Open Source Usability Maturity Model (OS-UMM) (Raza et al. 2012) fall into this category. QSOS possesses maintainability as its only quality characteristic. The Mathieu and Wray and the Sudhaman and Thangavel models both possess efficiency as their only quality characteristic, while OS-UMM possesses usability as its only quality characteristic.

Rounded category models: models that possess at least one quality characteristic in each of the three categories used for comparison (i.e. product quality, quality in use and community-related characteristics). The Open Source Maturity Model (OSMM) (Duijnhouwer and Widdows 2003), the Open Business Readiness Rating (Open BRR) model (Wasserman et al. 2006), the Source Quality Observatory for Open Source Software (SQO-OSS) model (Samoladas et al. 2008; Spinellis et al. 2009), the Evaluation Framework for Free/Open souRce projecTs (EFFORT) model (Aversano and Tortorella 2013), the Muller model (2011) and the Sohn et al. model (2015) fall into this category. OSMM possesses all the quality characteristics in the product quality category as well as in the community-related category, but only usability in the quality in use category. Open BRR and EFFORT both possess all the community-related quality characteristics, some of the product quality characteristics and usability from the quality in use category. SQO-OSS possesses all the community-related quality characteristics, three of the product quality characteristics and effectiveness from the quality in use category. The Muller model possesses one characteristic each from the product quality and community-related categories, as well as efficiency and usability from the quality in use category. The Sohn et al. model possesses two quality characteristics from the product quality category and one quality characteristic each from the quality in use and community-related categories.

Community-only attribute model: a model that measures only community-related quality characteristics. The only model that fits this description is the Kuwata et al. model (2014), as seen in Table 6; it possesses no quality characteristic from the product quality or quality in use categories.

Non-community attribute models: models that do not measure any community-related quality characteristics. The QSOS (Atos 2006), Sung et al. (2007), Raffoul et al. (2008), Alfonzo et al. (2008), Mathieu and Wray, Chirila et al. (Del Bianco et al. 2010a), OS-UMM (Raza et al. 2012), Sudhaman and Thangavel, and Sarrab and Rehman (Sarrab and Rehman 2014) models fall into this category.

Non-quality in use models: models that do not include any quality in use characteristics in their structure. The QSOS (Atos 2006; Deprez and Alexandre 2008), QualOSS (Soto and Ciolkowski 2009), OMM (Petrinja et al. 2009; Del Bianco et al. 2010b, 2011), Chirila et al. (2011), Adewumi et al. (2013) and Kuwata et al. models fall into this category.

From our classification, a particular model can belong to more than one category. QSOS, for instance, belongs to three of the categories (it is a single-attribute, non-community attribute and non-quality in use model). The Mathieu and Wray model (2007), the Chirila et al. model (2011), OS-UMM (Raza et al. 2012), the Sudhaman and Thangavel model (2015) and the Kuwata et al. model (2014) each belong to two categories. Specifically, the Mathieu and Wray model is a single-attribute and non-community attribute model; the Chirila et al. model is a non-community attribute and non-quality in use model; OS-UMM is a single-attribute and non-community attribute model; the Sudhaman and Thangavel model is a single-attribute and non-community attribute model; and the Kuwata et al. model is a community-only attribute and non-quality in use model. All the other models belong to a single category: OSMM (Duijnhouwer and Widdows 2003), Open BRR (Wasserman et al. 2006), Sung et al. (2007), QualOSS (Soto and Ciolkowski 2009), OMM (Petrinja et al. 2009), SQO-OSS (Samoladas et al. 2008), EFFORT (Aversano and Tortorella 2013), Raffoul et al. (2008), Alfonzo et al. (2008), Muller (2011), Adewumi et al. (2013), Sohn et al., and Sarrab and Rehman (2014).
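The five category definitions can be expressed as set predicates over the characteristics marked for a model in Table 6. The Python sketch below is our reading of those definitions, not code from the study: in particular, it assumes that "single-attribute" counts characteristics across all three groups, and the set and function names are hypothetical. It reproduces the three-way membership noted for QSOS.

```python
from typing import FrozenSet, List

# Characteristic groups used for comparison (ISO 25010 terms as listed in this paper).
PRODUCT_QUALITY = frozenset({
    "functional suitability", "reliability", "performance efficiency", "operability",
    "security", "compatibility", "maintainability", "transferability",
})
QUALITY_IN_USE = frozenset({"effectiveness", "efficiency", "satisfaction", "safety", "usability"})
COMMUNITY = frozenset({"maintenance capacity", "sustainability", "process maturity"})


def classify(characteristics: FrozenSet[str]) -> List[str]:
    """Return every RQ1 category a model matches; a model may match several."""
    unknown = characteristics - (PRODUCT_QUALITY | QUALITY_IN_USE | COMMUNITY)
    if unknown:
        raise ValueError(f"unrecognised characteristics: {sorted(unknown)}")
    has_pq = bool(characteristics & PRODUCT_QUALITY)
    has_qiu = bool(characteristics & QUALITY_IN_USE)
    has_com = bool(characteristics & COMMUNITY)

    categories = []
    if len(characteristics) == 1:
        categories.append("single-attribute")
    if has_pq and has_qiu and has_com:
        categories.append("rounded category")
    if has_com and not (has_pq or has_qiu):
        categories.append("community-only attribute")
    if not has_com:
        categories.append("non-community attribute")
    if not has_qiu:
        categories.append("non-quality in use")
    return categories


# QSOS as described above: maintainability is its only characteristic.
print(classify(frozenset({"maintainability"})))
```

The categories are deliberately not mutually exclusive, which is why the function returns a list rather than a single label.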

Table 6 compares the OSS quality models presented in Table 3 with the ISO 25010 model, and also shows how they compare on community-related characteristics. A cell marked with ‘x’ indicates that the OSS quality model possesses the corresponding characteristic; an empty cell means that it does not.
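The percentages in Figs. 1, 2 and 3 are shares of the models marked ‘x’ for a characteristic in Table 6. A minimal Python sketch of that computation follows; the three-row table and the `frequency` name are invented for illustration and are not an excerpt of Table 6.

```python
from typing import Dict, Set


def frequency(table: Dict[str, Set[str]], characteristic: str) -> float:
    """Percentage of models whose row is marked 'x' for the given characteristic."""
    if not table:
        raise ValueError("table must contain at least one model")
    hits = sum(1 for marks in table.values() if characteristic in marks)
    return 100.0 * hits / len(table)


# Invented three-row table: model name -> characteristics marked 'x'.
toy_table = {
    "Model A": {"maintainability", "usability"},
    "Model B": {"maintainability", "maintenance capacity"},
    "Model C": {"functional suitability"},
}
for name in ("maintainability", "usability", "safety"):
    print(name, round(frequency(toy_table, name), 1))
```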

Figure 1 shows the frequency distribution of the ISO 25010 product quality characteristics in the OSS quality models we considered. Maintainability is measured by 55% of the existing OSS quality models, making it the most common product quality characteristic. It is followed by functional suitability, which is measured by 50% of the existing quality models. The least measured are operability, compatibility and transferability, each measured by 30% of the existing quality models. From Fig. 1, it can be inferred that model developers consider the maintainability of a given OSS more important than the functionality it possesses. Because the source code of OSS is accessible, missing features can be incorporated; however, such features can be difficult to implement if the code is not well documented, readable and understandable, all of which are attributes of maintainable code. Similar inferences can be made regarding the other quality characteristics: for instance, the reliability and security of an OSS can be improved if the code is maintainable, and so can its performance efficiency, operability, compatibility and transferability.

Fig. 1 Frequency distribution of ISO 25010 product quality characteristics in OSS quality models

Figure 2 shows the frequency distribution of the ISO 25010 quality in use characteristics in the OSS quality models we considered. Usability is measured by 50% of the existing OSS quality models, making it the most commonly measured characteristic in this category. It is followed by effectiveness and efficiency, each considered by 15% of the existing OSS quality models. Satisfaction and safety, on the other hand, are not considered in any of the existing OSS quality models. From Fig. 2, we infer that usability is the most significant attribute in the quality in use category and that the other attributes in this category contribute to defining it. In other words, a usable OSS is one that is effective in accomplishing specific tasks, efficient in managing system resources, safe for the environment and satisfying to its end users.

Fig. 2 Frequency distribution of ISO 25010 quality in use characteristics in OSS quality models

Figure 3 shows the frequency distribution of community-related quality characteristics in the OSS quality models we considered. Maintenance capacity is measured in 45% of the existing OSS quality models, making it the most commonly measured attribute in this category. It is closely followed by sustainability, which is measured by 40% of the models. Process maturity is the least measured attribute in this category and is considered in 35% of the models. It can be inferred from Fig. 3 that evaluators who assess OSS quality via its community are mostly interested in the community’s maintenance capacity, then in its sustainability, and least in the maturity of its processes.

Fig. 3 Frequency distribution of community-related quality characteristics in OSS quality models

RQ2. What are the methods applied for reaching selection decisions?

Figure 4 depicts the selection methods adopted in the existing OSS quality models for reaching a decision among alternatives. The model approach, which uses a system representation that allows for selection based on investigation through a hierarchical structure, is the most common selection method in the existing literature and is used by six (32%) of the existing models. It is followed by the process approach, used by four (21%) of the existing models. In the “other” category, three (16%) of the models use a form of guidelines in the selection process. The framework approach accounts for 11% of the existing OSS quality models, while the data mining and tool-based approaches account for 10% each. In general, more emphasis is placed on non-automated approaches in the existing quality models, so applying these models in real-life selection scenarios is usually time-consuming and requires expertise (Hauge et al. 2009; Ali Babar 2010).
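To make the model approach concrete, the Python sketch below scores alternatives through a two-level hierarchy of weighted sums and ranks them. It is a generic illustration, not the aggregation rule of any particular reviewed model: the hierarchy, weights, sub-attributes, 0 to 5 ratings and candidate names are all hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical hierarchy: characteristic -> (weight, {sub-attribute: weight within it}).
HIERARCHY: Dict[str, Tuple[float, Dict[str, float]]] = {
    "maintainability": (0.5, {"documentation": 0.6, "code readability": 0.4}),
    "usability": (0.3, {"learnability": 1.0}),
    "maintenance capacity": (0.2, {"active contributors": 0.7, "bug response": 0.3}),
}


def hierarchical_score(leaf_scores: Dict[str, float]) -> float:
    """Aggregate leaf ratings bottom-up: weighted sum per characteristic, then overall."""
    total = 0.0
    for weight, sub_attributes in HIERARCHY.values():
        characteristic_score = sum(w * leaf_scores[name] for name, w in sub_attributes.items())
        total += weight * characteristic_score
    return total


# Hypothetical ratings for two OSS alternatives.
candidates = {
    "OSS-1": {"documentation": 4, "code readability": 3, "learnability": 5,
              "active contributors": 2, "bug response": 4},
    "OSS-2": {"documentation": 3, "code readability": 4, "learnability": 3,
              "active contributors": 5, "bug response": 5},
}
ranking = sorted(candidates, key=lambda name: hierarchical_score(candidates[name]), reverse=True)
print(ranking)  # ['OSS-1', 'OSS-2']
```

The weighted-sum rule is only one possible choice; a reviewed model may instead use thresholds, maturity levels or qualitative ratings at each level of its hierarchy.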

Fig. 4 Selection methods used in OSS quality models

RQ3. What is the domain of application?

Figure 5 depicts the domains of application of the existing OSS quality assessment models. Nearly half of the models (47%) do not specify a domain of application. Among those that do, the largest group focuses on measuring quality in data-dominant software, which includes business-oriented software such as Enterprise Resource Planning and Customer Relationship Management solutions, design and engineering software, and information display and transaction systems such as issue tracking systems. System software evaluation accounts for 16% of the models, while computation-dominant software accounts for 11%.

Fig. 5 Domains in which OSS quality models have been applied

Summary and discussion

Principal findings.

Of the existing OSS quality models considered in this study, 20% measure only a single quality attribute. Models in this category include QSOS (maintainability) (Atos 2006), the Wray and Mathieu model (efficiency) (Mathieu and Wray 2007), OS-UMM (usability) (Raza et al. 2012) and the Sudhaman and Thangavel model (efficiency) (Sudhaman and Thangavel 2015). Furthermore, 50% of the existing models do not measure community-related quality characteristics, even though community is what distinguishes OSS from its proprietary counterparts. Models in this category include QSOS (Atos 2006), the Sung et al. model (2007), the Raffoul et al. model (2008), the Alfonzo et al. model (2008), the Wray and Mathieu model (Mathieu and Wray 2007), the Chirila et al. model (2011), OS-UMM (Raza et al. 2012), the Sudhaman and Thangavel model (2015) and the Sarrab and Rehman model (2014). In addition, 35% of the models touch on all categories: OSMM (Duijnhouwer and Widdows 2003), Open BRR (Wasserman et al. 2006), SQO-OSS (Spinellis et al. 2009), EFFORT (Aversano and Tortorella 2013), the Müller model (2011) and the Sohn et al. model (2015). Several of these models have been applied to selection scenarios reported in the literature. A notable example is EFFORT, which has been applied to evaluate OSS in the customer relationship management (CRM) domain (Aversano and Tortorella 2011) and in the enterprise resource planning (ERP) domain (Aversano and Tortorella 2013).

Among the existing OSS quality models, maintainability is the most significant product quality characteristic as defined by ISO 25010, usability is the most significant quality in use characteristic, and maintenance capacity is the most significant community-related characteristic. Also worthy of note is that the satisfaction and safety attributes of quality in use are never considered in the OSS quality models.

The model approach is the most widely adopted selection method in the existing OSS quality models, while the tool-based and data mining approaches are the least considered. However, as newer publications emerge, we expect other approaches, data mining in particular, to gain more ground.

Nearly half (47%) of the existing models do not specify any domain of application. Of those with a specific domain of application, the largest share focuses on data-dominant software, especially enterprise resource planning software. Computation-dominant software is the least considered in this regard; software in this category includes operations research, information management and manipulation, artistic creativity, scientific software and artificial intelligence software.

From this study, we also observed that none of the existing models evaluates all the criteria we laid out, that is, every quality characteristic under product quality, quality in use and community-related quality characteristics.

Implications of the results

Based on the comparison of the existing quality assessment models, no single model is clearly suitable: each model has its own limitations. The findings of this analysis therefore have implications especially for practitioners who work towards developing new assessment models. They should note the following points, in line with the research questions posed in this study:

Emphasis should shift from trying to build comprehensive models (containing all possible software characteristics) to building models that include only essential quality characteristics. This study has shown that these essential quality characteristics include maintainability, usability and the maintenance capacity of the software community. By narrowing down to these three essential quality characteristics, model developers would help reduce the burden of OSS evaluation via existing quality assessment models, which has largely been described as laborious and time-consuming (Hauge et al. 2009; Ali Babar 2010).

Newer models should incorporate selection methods that are amenable to automation, as this is not the case in most of the existing OSS quality assessment models reviewed in this study. The most widely adopted selection methods are the model (32%), process (21%) and other (16%) approaches, such as guidelines, which are not easily amenable to automation (Fahmy et al. 2012). Model developers should thus turn their focus to data mining techniques (Leopairote et al. 2013) and framework or tool-based selection methods, which are currently among the least considered options. This would help quicken the evaluation process, resulting in faster decision-making, and could also increase the adoption of the models in practice (Wang et al. 2013). In addition, model developers can consider modeling quality assessment as a multi-criteria decision-making (MCDM) problem so as to facilitate automation, as seen in recent studies (Fakir and Canbolat 2008; Cavus 2010, 2011). An MCDM problem in this context can be regarded as a process of choosing among available alternatives (i.e. different OSS alternatives) based on a number of attributes (quality criteria). This option opens the model developer to several well-known MCDM methods that are amenable to automation, such as DEA, the Analytic Hierarchy Process (AHP) and the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS), to mention a few (Zavadskas et al. 2014).
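As an example of an automatable MCDM method, the sketch below implements the standard TOPSIS steps (vector normalization, weighting, positive- and negative-ideal solutions, relative closeness) in plain Python. It assumes that every quality criterion is a benefit criterion (larger ratings are better); the alternatives, ratings and weights are hypothetical and not drawn from any reviewed model.

```python
import math
from typing import List, Sequence


def topsis(matrix: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Return a TOPSIS closeness coefficient per alternative (higher is better).

    Rows are OSS alternatives and columns are quality criteria; every criterion
    is treated as a benefit criterion.
    """
    n_criteria = len(weights)
    # Step 1: vector-normalize each column (guarding all-zero columns) and apply its weight.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) or 1.0 for j in range(n_criteria)]
    weighted = [[weights[j] * row[j] / norms[j] for j in range(n_criteria)] for row in matrix]
    # Step 2: positive-ideal (column max) and negative-ideal (column min) solutions.
    ideal = [max(column) for column in zip(*weighted)]
    anti_ideal = [min(column) for column in zip(*weighted)]
    # Step 3: Euclidean distances to both solutions and relative closeness d- / (d+ + d-).
    scores = []
    for row in weighted:
        d_plus = math.dist(row, ideal)
        d_minus = math.dist(row, anti_ideal)
        total = d_plus + d_minus
        scores.append(d_minus / total if total > 0 else 0.0)  # identical rows: no preference
    return scores


# Hypothetical 1-5 ratings of three OSS alternatives on maintainability,
# usability and maintenance capacity, with illustrative weights.
alternatives = ["OSS-A", "OSS-B", "OSS-C"]
ratings = [[4, 3, 5], [3, 5, 2], [5, 4, 4]]
closeness = topsis(ratings, [0.5, 0.3, 0.2])
for name, score in sorted(zip(alternatives, closeness), key=lambda pair: pair[1], reverse=True):
    print(f"{name}: {score:.3f}")
```

Cost criteria (where smaller values are better) would swap `max` and `min` for those columns when building the ideal and anti-ideal solutions. `math.dist` requires Python 3.8 or later.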

Fig. 5 shows that 47% of the quality assessment models considered do not mention a domain of application. This suggests that most of the models were intended to be domain-independent, so domain independence should be a focus for model developers (Wagner et al. 2015). A domain-independent model can assess quality in various categories of OSS with little or no customization, including:

- data-dominant software;
- system software;
- control-dominant software;
- computation-dominant software.

Models built with this property in mind are more likely to be widely adopted and possibly standardized.

Threats to validity

Construct threats to validity in this type of study are related to the identification of primary studies. To include as many relevant primary studies as possible, different synonyms for ‘open source software’ and ‘quality model’ were used in the search string. The first and second authors conducted the automatic search for relevant literature independently. The results obtained were harmonized using a spreadsheet application, and duplicates were removed. The reference sections of the selected papers were also scanned to ensure that all relevant references had been included. The final decision to include a study for further consideration depended on the agreement of all the authors. If a disagreement arose, a discussion took place until consensus was reached.

Internal validity has to do with data extraction and analysis. As previously mentioned, the first author carried out the data extraction of the primary studies and assigned them to the other authors for assessment. The first author also assessed all the primary studies and compared his results with those of the other authors. Discrepancies were discussed until an agreement was reached. The assignment of primary studies to the other authors was not randomized for two reasons: the sample size (number of primary studies) was relatively small, and the time availability of each researcher needed to be considered. To classify the primary studies properly according to the quality characteristics they possessed, the authors adopted the ISO/IEC 25010 model (ISO/IEC 25010 2010) as a benchmark. All the authors were fully involved in classifying the primary studies, and all disagreements were discussed until a consensus was reached.

Incorrect data extraction can affect conclusion validity. To mitigate this risk, the steps in the selection and data extraction activities were clearly described, as discussed in the previous paragraphs. Charts and frequency tables were generated directly from the data with a statistical package, which strengthened traceability between the extracted data and the conclusions. In our opinion, slight differences arising from publication selection bias and misclassification would not alter the main conclusions drawn from the papers identified in this study.
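This paragraph describes generating frequency tables directly from the data. In that spirit, the percentages reported in this article can be mapped back to paper counts as a small traceability check. The check assumes that each percentage is a share of the 19 selected papers, rounded to the nearest whole percent. The counts are derived arithmetic, not values taken from the authors' tables, and the helper name is hypothetical.

```python
def counts_for_percentage(pct, n=19):
    """Return every count k in 0..n whose share k/n rounds to pct percent."""
    return [k for k in range(n + 1) if round(100 * k / n) == pct]


# Percentages as reported in the discussion and abstract of this article.
reported = {
    "model selection method": 32,
    "process selection method": 21,
    "other selection methods (e.g. guidelines)": 16,
    "no application domain stated": 47,
}
for label, pct in reported.items():
    print(f"{label}: {pct}% of 19 -> {counts_for_percentage(pct)} paper(s)")
```

Each reported percentage corresponds to exactly one whole number of papers out of 19. This is consistent with the percentages having been computed over the full set of selected studies.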

As regards external validity, the results obtained apply specifically to quality assessment models within the OSS domain. Models that evaluate quality in proprietary software are not covered. Because the inferences in this paper concern only OSS quality assessment models, this limitation does not threaten the validity of the conclusions drawn here. The results of this study may serve as a starting point for OSS quality researchers to identify and classify newer models in this domain.

Conclusion and future work

The overall goal of this study is to analyze and classify the existing knowledge on OSS quality assessment models. Papers dealing with these models published between 2003 and 2015 were identified, and 19 of them were selected. The main publication outlets of the papers identified were journals and conference proceedings. The main results are:

- maintainability is the most significant and ubiquitous product quality characteristic considered in the literature;
- usability is the most significant attribute in the quality-in-use category;
- the maintenance capacity of an OSS community is a crucial characteristic among the community-related quality characteristics;
- the most commonly used selection method is the model approach, and the least considered are the tool-based and data mining approaches;
- nearly half (47%) of the selected papers do not mention an application domain for their models.

More attention should be paid to building models that incorporate only essential quality characteristics. Framework, tool-based and data mining selection methods should also receive more attention in future model proposals.

This study could help researchers identify essential quality attributes with which to develop more robust quality models that are applicable across software domains. Researchers can also compare the existing selection methods to determine which is most effective. As future work, we intend to model OSS quality assessment as an MCDM problem. This will allow us to choose, from the range of available MCDM methods, one or more that can be used to evaluate OSS quality across multiple domains.

Abbreviations

CRM: customer relationship management

DEA: Data Envelopment Analysis

EFFORT: Evaluation Framework for Free/Open souRce projecTs

ERP: enterprise resource planning

MCDM: multi-criteria decision making

OpenBRR: Open Business Readiness Rating

OSMM: Open Source Maturity Model

OS-UMM: Open Source Usability Maturity Model

OSS: open source software

QA: quality assessment

QSOS: Qualification and Selection of Open Source software

RQ: research question

SQO-OSS: Source Quality Observatory for Open Source Software

TOPSIS: Technique for Order of Preference by Similarity to Ideal Solution

References

Adewumi A, Misra S, Omoregbe N (2013a) A review of models for evaluating quality in open source software. IERI Proc 4(1):88–92


Adewumi A, Omoregbe N, Misra S (2013) Quantitative quality model for evaluating open source web applications: case study of repository software. In: 16th International conference on computational science and engineering (CSE), Dec 3 2013

Alfonzo O, Domínguez K, Rivas L, Perez M, Mendoza L, Ortega M (2008) Quality measurement model for analysis and design tools based on FLOSS. In: 19th Australian conference on software engineering, Perth, Australia, 26–28 March 2008

Atos (2006) Method for qualification and selection of open source software (QSOS) version 2.0. http://backend.qsos.org/download/qsos-2.0_en.pdf . Accessed 5 Jan 2015

Aversano L, Tortorella M (2011) Applying EFFORT for evaluating CRM open source systems. In: International conference on product-focused software process improvement, Springer, Heidelberg, pp 202–216

Aversano L, Tortorella M (2013) Quality evaluation of FLOSS projects: application to ERP systems. Inf Softw Technol 55(7):1260–1276

Brereton OP, Kitchenham BA, Budgen DT, Khalil M (2007) Lessons from applying the systematic literature review process within the software engineering domain. J Syst Softw 80:571–583

Cavus N (2010) The evaluation of learning management systems using an artificial intelligence fuzzy logic algorithm. Adv Eng Softw 41:248–254


Cavus N (2011) The application of a multi-attribute decision-making algorithm to learning management systems evaluation. Br J Edu Technol 42:19–30

Chirila C, Juratoni D, Tudor D, Cretu V (2011) Towards a software quality assessment model based on open-source statical code analyzers. In: 6th IEEE international conference on computational intelligence and informatics (SACI), May 19 2011

Deissenboeck F, Juergens E, Lochmann K, Wagner S (2009) Software quality models: purposes, usage scenarios and requirements. In: ICSE workshop on software quality, May 16 2009

Del Bianco V, Lavazza L, Morasca S, Taibi D, Tosi D (2010a) The QualiSPo approach to OSS product quality evaluation. In: 3rd International workshop on emerging trends in free/libre/open source software research and development, New York

Del Bianco V, Lavazza L, Morasca S, Taibi D, Tosi D (2010b) An investigation of the users’ perception of OSS quality. In: 6th International conference on open source systems, Springer Verlag, pp 15–28

Del Bianco V, Lavazza L, Morasca S, Taibi D (2011) A survey on open source software trustworthiness. IEEE Softw 28(5):67–75

Deprez JC, Alexandre S (2008) Comparing assessment methodologies for free/open source software: OpenBRR and QSOS. In: 9th international conference on product-focused software process improvement (PROFES‘08), Springer, Heidelberg, pp 189–203

Duijnhouwer F, Widdows C (2003) Open source maturity model. http://jose-manuel.me/thesis/references/GB_Expert_Letter_Open_Source_Maturity_Model_1.5.3.pdf . Accessed 5 Jan 2015

Fahmy S, Haslinda N, Roslina W, Fariha Z (2012) Evaluating the quality of software in e-book using the ISO 9126 model. Int J Control Autom 5:115–122


Fakir O, Canbolat MS (2008) A web-based decision support system for multi-criteria inventory classification using fuzzy AHP methodology. Expert Syst Appl 35:1367–1378

Forward A, Lethbridge TC (2008) A taxonomy of software types to facilitate search and evidence-based software engineering. In: Proceedings of the 2008 conference of the centre for advanced studies on collaborative research, Oct 27 2008

Haaland K, Groven AK, Regnesentral N, Glott R, Tannenberg A, FreeCode AS (2010) Free/libre open source quality models—a comparison between two approaches. In: 4th FLOS international workshop on Free/Libre/Open Source Software, July 2010

Hauge Ø, Østerlie T, Sørensen CF, Gerea M (2009) An empirical study on selection of open source software—preliminary results. In: ICSE workshop on emerging trends in free/libre/open source software research and development, May 18 2009

ISO/IEC 9126 (2001) Software engineering—product quality—part 1: quality model. http://www.iso.org/iso/catalogue_detail.htm?csnumber=22749 Accessed 14 Nov 2015

ISO/IEC 25010 (2010) Systems and software engineering—systems and software product quality requirements and evaluation (SQuaRE)—system and software quality models. http://www.iso.org/iso/catalogue_detail.htm?csnumber=35733 Accessed 14 Oct 2016

Kitchenham BA (2004) Procedures for undertaking systematic reviews. http://csnotes.upm.edu.my/kelasmaya/pgkm20910.nsf/0/715071a8011d4c2f482577a700386d3a/$FILE/10.1.1.122.3308[1].pdf . Accessed 14 Oct 2016

Kuwata Y, Takeda K, Miura H (2014) A study on maturity model of open source software community to estimate the quality of products. Proc Comput Sci 35:1711–1717

Leopairote W, Surarerks A, Prompoon N (2013) Evaluating software quality in use using user reviews mining. In: 10th International joint conference on computer science and software engineering, May 29 2013

Mathieu R, Wray B (2007) The application of DEA to measure the efficiency of open source security tool production. In: AMCIS 2007 proceedings, Dec 31 2007

Miguel JP, Mauricio D, Rodríguez G (2014) A review of software quality models for the evaluation of software products. Int J Soft Eng Appl 5(6):31–53

Müller T (2011) How to choose a free and open source integrated library system. Int Digi Lib Perspect 27(1):57–78

Ouhbi S, Idri A, Fernández-Alemán JL, Toval A (2014) Evaluating software product quality: a systematic mapping study. In: International conference on software process and product measurement, Oct 6 2014

Ouhbi S, Idri A, Fernández-Alemán JL, Toval A (2015) Predicting software product quality: a systematic mapping study. Computación y Sistemas 19(3):547–562

Petersen K, Feldt R, Mujtaba S, Mattsson M (2008) Systematic mapping studies in software engineering. In: 12th International conference on evaluation and assessment in software engineering, Blekinge Institute of Technology, Italy, Jun 26 2008

Petrinja E, Nambakam R, Sillitti A (2009) Introducing the open source maturity model. In: Proceedings of the 2009 ICSE workshop on emerging trends in free/libre/open source software research and development, May 18 2009

Raffoul E, Domínguez K, Perez M, Mendoza LE, Griman AC (2008) Quality model for the selection of FLOSS-based Issue tracking system. In: Proceedings of the IASTED international conference on software engineering, Innsbruck, Austria, 12 Feb 2008

Raza A, Capretz LF, Ahmed F (2012) An open source usability maturity model (OS-UMM). Comput Hum Behav 28(4):1109–1121

Samoladas I, Gousios G, Spinellis D, Stamelos I (2008) The SQO-OSS quality model: measurement based open source software evaluation. In: IFIP International Conference on Open Source Systems. Springer, Milano, pp 237–248

Sarrab M, Rehman OMH (2014) Empirical study of open source software selection for adoption, based on software quality characteristics. Adv Eng Softw 69:1–11

Sohn H, Lee M, Seong B, Kim J (2015) Quality evaluation criteria based on open source mobile HTML5 UI framework for development of cross-platform. Int J Soft Eng Appl 9(6):1–12

Soto M, Ciolkowski M (2009) The QualOSS open source assessment model measuring the performance of open source communities. In: Proceedings of the 3rd international symposium on empirical software engineering and measurement, 15 Oct 2009

Spinellis D, Gousios G, Karakoidas V, Louridas P, Adams PJ, Samoladas I, Stamelos I (2009) Evaluating the quality of open source software. Elect Notes Theor Comp Sci 233:5–28

Stol KJ, Ali Babar M (2010) Challenges in using open source software in product development: a review of the literature. In: Proceedings of the 3rd international workshop on emerging trends in free/libre/open source software research and development, May 8 2010

Sudhaman P, Thangavel C (2015) Efficiency analysis of ERP projects—software quality perspective. Int J of Proj Manag 33:961–970

Sung WJ, Kim JH, Rhew SY (2007) A quality model for open source software selection. In: Sixth international conference on advanced language processing and web information technology, 22 Aug 2007

Wagner S, Goeb A, Heinemann L, Kläs M, Lampasona C, Lochmann K, Mayr A, Plösch R, Seidl A, Streit J, Trendowicz A (2015) Operationalised product quality models and assessment: the Quamoco approach. Inf Softw Technol 62:101–123

Wang D, Zhu S, Li T (2013) SumView: a web-based engine for summarizing product reviews and customer opinions. Expert Syst Appl 40:27–33

Wasserman AI, Pal M, Chan C (2006) Business readiness rating for open source. In: Proceedings of the EFOSS Workshop, Como, Italy, 8 Jun 2006

Wen J, Li S, Lin Z, Hu Y, Huang C (2012) Systematic literature review of machine learning based software development effort estimation models. Inf Softw Technol 54(1):41–59

Zavadskas EK, Turskis Z, Kildienė S (2014) State of art surveys of overviews on MCDM/MADM methods. Technol Econ Dev Econ 20:165–179


Authors’ contributions

AA is a Ph.D. student and did a significant part of the work under the supervision of SM. SM is the main supervisor of AA and has worked with him on this work for the last 4 years. NO is the co-supervisor of AA and provided continuous guidance towards the completion of the work. BC and RS are co-researchers in our software engineering cluster at CU. Both contributed substantially to improving the manuscript, reviewing it and adding valuable contributions from the beginning of the work. All authors read and approved the final manuscript.

Acknowledgements

We are thankful to Dr. Olawande Daramola of the Computer and Information Science Department for his valuable suggestions and comments on improving the work.

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

The dataset(s) supporting the conclusions of this article are included within the article in Tables 3, 5 and 6.

Author information

Authors and affiliations.

Covenant University, Ota, Nigeria

Adewole Adewumi, Sanjay Misra & Nicholas Omoregbe

Atilim University, Ankara, Turkey

Sanjay Misra

Pontificia Universidad Católica de Valparaíso, Valparaiso, Chile

Broderick Crawford & Ricardo Soto


Corresponding author

Correspondence to Sanjay Misra .

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.


About this article

Cite this article.

Adewumi, A., Misra, S., Omoregbe, N. et al. A systematic literature review of open source software quality assessment models. SpringerPlus 5 , 1936 (2016). https://doi.org/10.1186/s40064-016-3612-4


Received : 17 May 2016

Accepted : 27 October 2016

Published : 08 November 2016

DOI : https://doi.org/10.1186/s40064-016-3612-4


- Open source software

- Quality assessment models


- Open access

- Published: 01 February 2021

An open source machine learning framework for efficient and transparent systematic reviews

- Rens van de Schoot ORCID: orcid.org/0000-0001-7736-2091 1 ,

- Jonathan de Bruin ORCID: orcid.org/0000-0002-4297-0502 2 ,

- Raoul Schram 2 ,

- Parisa Zahedi ORCID: orcid.org/0000-0002-1610-3149 2 ,

- Jan de Boer ORCID: orcid.org/0000-0002-0531-3888 3 ,

- Felix Weijdema ORCID: orcid.org/0000-0001-5150-1102 3 ,

- Bianca Kramer ORCID: orcid.org/0000-0002-5965-6560 3 ,

- Martijn Huijts ORCID: orcid.org/0000-0002-8353-0853 4 ,

- Maarten Hoogerwerf ORCID: orcid.org/0000-0003-1498-2052 2 ,

- Gerbrich Ferdinands ORCID: orcid.org/0000-0002-4998-3293 1 ,

- Albert Harkema ORCID: orcid.org/0000-0002-7091-1147 1 ,

- Joukje Willemsen ORCID: orcid.org/0000-0002-7260-0828 1 ,

- Yongchao Ma ORCID: orcid.org/0000-0003-4100-5468 1 ,

- Qixiang Fang ORCID: orcid.org/0000-0003-2689-6653 1 ,

- Sybren Hindriks 1 ,

- Lars Tummers ORCID: orcid.org/0000-0001-9940-9874 5 &

- Daniel L. Oberski ORCID: orcid.org/0000-0001-7467-2297 1 , 6

Nature Machine Intelligence volume 3 , pages 125–133 ( 2021 ) Cite this article

71k Accesses

207 Citations

162 Altmetric


- Computational biology and bioinformatics

- Computer science

- Medical research

A preprint version of the article is available at arXiv.

To help researchers conduct a systematic review or meta-analysis as efficiently and transparently as possible, we designed a tool to accelerate the step of screening titles and abstracts. For many tasks—including but not limited to systematic reviews and meta-analyses—the scientific literature needs to be checked systematically. Scholars and practitioners currently screen thousands of studies by hand to determine which studies to include in their review or meta-analysis. This is error prone and inefficient because of extremely imbalanced data: only a fraction of the screened studies is relevant. The future of systematic reviewing will be an interaction with machine learning algorithms to deal with the enormous increase of available text. We therefore developed an open source machine learning-aided pipeline applying active learning: ASReview. We demonstrate by means of simulation studies that active learning can yield far more efficient reviewing than manual reviewing while providing high quality. Furthermore, we describe the options of the free and open source research software and present the results from user experience tests. We invite the community to contribute to open source projects such as our own that provide measurable and reproducible improvements over current practice.


With the emergence of online publishing, the number of scientific manuscripts on many topics is skyrocketing 1 . All of these textual data present opportunities to scholars and practitioners while simultaneously confronting them with new challenges. Scholars often conduct systematic reviews and meta-analyses to develop comprehensive overviews of the relevant topics 2 . The process entails several explicit and, ideally, reproducible steps. These include identifying all likely relevant publications in a standardized way, extracting data from eligible studies and synthesizing the results. Systematic reviews differ from traditional literature reviews in that they are more replicable and transparent 3 , 4 . Such systematic overviews of the literature on a specific topic are pivotal for scholars, clinicians, policy-makers, journalists and, ultimately, the general public 5 , 6 , 7 .

Given that screening the entire research literature on a given topic is too labour intensive, scholars often develop quite narrow searches. Developing a search strategy for a systematic review is an iterative process aimed at balancing recall and precision 8 , 9 ; that is, including as many potentially relevant studies as possible while simultaneously limiting the total number of studies retrieved. The vast number of publications in the field of study often leads to a relatively precise search, with the risk of missing relevant studies. The process of systematic reviewing is error prone and extremely time intensive 10 . In fact, if the literature of a field is growing faster than the amount of time available for systematic reviews, adequate manual review of this field then becomes impossible 11 .

The rapidly evolving field of machine learning has aided researchers by enabling software tools that assist in developing systematic reviews 11 , 12 , 13 , 14 . Machine learning can overcome the manual and time-consuming screening of large numbers of studies by prioritizing relevant studies via active learning 15 . Active learning is a type of machine learning in which a model can choose the data points it would like to learn from (for example, records obtained from a systematic search). It can thereby drastically reduce the total number of records that require manual screening 16 , 17 , 18 .

In most so-called human-in-the-loop 19 machine-learning applications, the interaction between the machine-learning algorithm and the human is used to train a model with a minimum number of labelling tasks. Systematic reviewing differs in two ways. All relevant records (that is, titles and abstracts) need to be seen by a researcher. In addition, an extremely diverse range of concepts needs to be learned, which requires flexibility in the modelling approach as well as careful error evaluation 11 . In systematic reviewing, the algorithm(s) are therefore interactively optimized for finding the most relevant records rather than for finding the most accurate model.

The term researcher-in-the-loop was introduced 20 as a special case of human-in-the-loop with three unique components:

(1) the primary output of the process is a selection of the records, not a trained machine learning model;
(2) all records in the relevant selection are seen by a human at the end of the process 21 ;
(3) the use case requires a reproducible workflow and complete transparency 22 .

Existing tools that implement such an active learning cycle for systematic reviewing are described in Table 1 ; see the Supplementary Information for an overview of all of the software that we considered (note that this list was based on a review of software tools 12 ). However, existing tools have two main drawbacks. First, many are closed source applications with black box algorithms, which is problematic as transparency and data ownership are essential in the era of open science 22 . Second, to our knowledge, existing tools lack the necessary flexibility to deal with the large range of possible concepts to be learned by a screening machine. For example, in systematic reviews, the optimal type of classifier will depend on variable parameters, such as the proportion of relevant publications in the initial search and the complexity of the inclusion criteria used by the researcher 23 . For this reason, any successful system must allow for a wide range of classifier types. Benchmark testing is crucial to understand the real-world performance of any machine learning-aided system, but such benchmark options are currently mostly lacking.

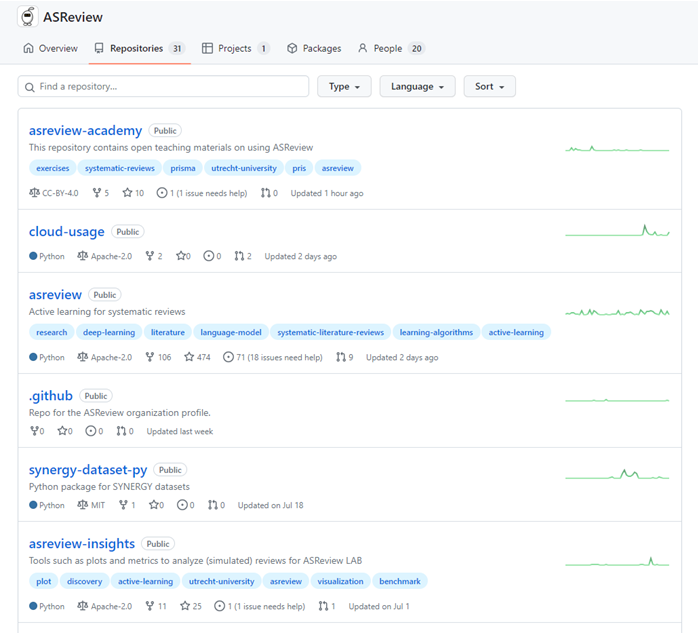

In this paper we present ASReview, an open source machine learning-aided pipeline with active learning for systematic reviews. The goal of ASReview is to help scholars and practitioners get an overview of the most relevant records for their work as efficiently and transparently as possible. The open, free and ready-to-use software addresses all of the concerns mentioned above: it is open source, uses active learning and allows multiple machine learning models. It also has a benchmark mode, which is especially useful for comparing and designing algorithms. Furthermore, it is intended to be easily extensible, allowing third parties to add modules that enhance the pipeline. Although this paper focuses on systematic reviews, ASReview can handle any text source.

In what follows, we first present the pipeline for manual versus machine learning-aided systematic reviews. We then show how ASReview has been set up and how ASReview can be used in different workflows by presenting several real-world use cases. We subsequently demonstrate the results of simulations that benchmark performance and present the results of a series of user-experience tests. Finally, we discuss future directions.

Pipeline for manual and machine learning-aided systematic reviews

Without active learning, a systematic review traditionally starts with researchers doing a comprehensive search in multiple databases 24 , using free text words as well as controlled vocabulary to retrieve potentially relevant references. The researcher then typically verifies that the key papers they expect to find are indeed included in the search results. Next, the researcher downloads a file of records containing the text to be screened and imports it into a reference manager. In systematic reviewing, these records contain the titles and abstracts of potentially relevant references, and potentially other metadata such as the authors’ names, journal name and DOI. Ideally, two or more researchers then screen the records’ titles and abstracts against the eligibility criteria established beforehand 4 . After all records have been screened, the full texts of the potentially relevant records are read to determine which of them will ultimately be included in the review.

Most records are excluded in the title and abstract phase. Typically, only a small fraction of the records belong to the relevant class, which makes title and abstract screening an important bottleneck in the systematic reviewing process 25 . For instance, a recent study analysed 10,115 records and excluded 9,847 after title and abstract screening, a drop of more than 95% 26 . ASReview therefore focuses on this labour-intensive step.

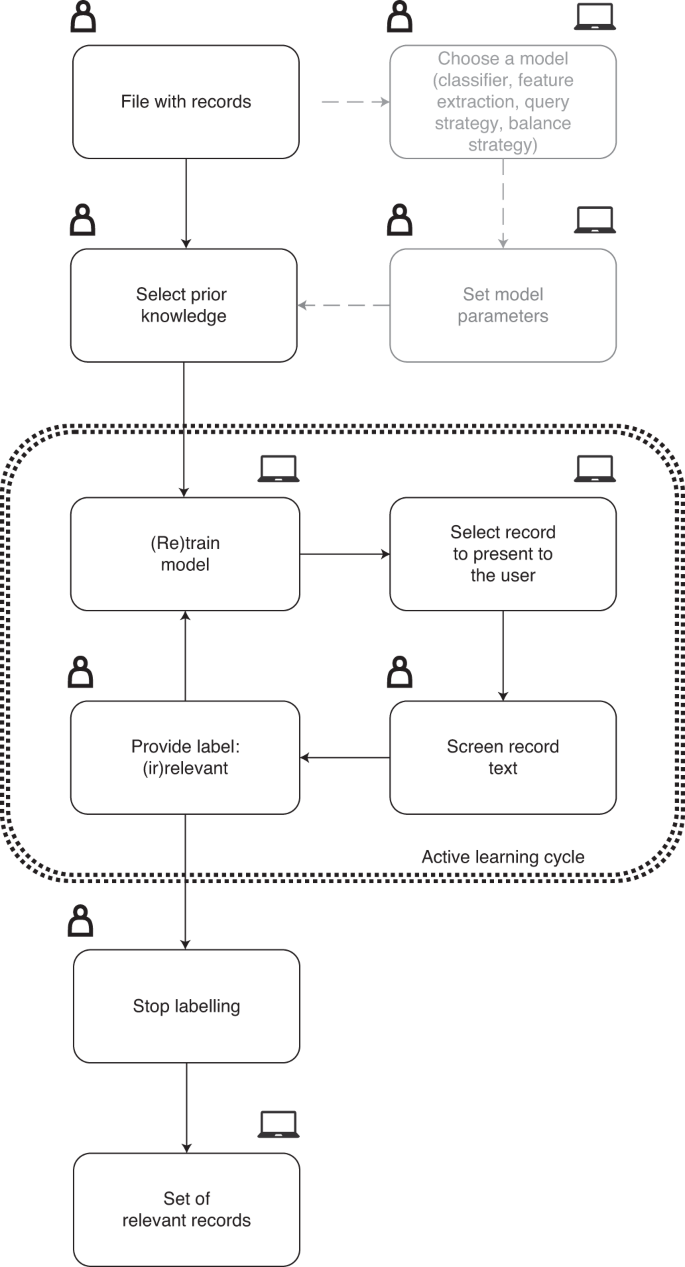

The research pipeline of ASReview is depicted in Fig. 1 . The researcher starts with a search exactly as described above. They then upload into the software a file containing the records (that is, metadata containing the text of the titles and abstracts). Prior knowledge is then selected and used to train the first model, which determines the first record presented to the researcher. Because screening is a binary classification problem, the reviewer must select, on the basis of background knowledge, at least one key record to include and one to exclude. More prior knowledge may improve the efficiency of the active learning process.

In Fig. 1, the symbols indicate whether an action is taken by a human, by a computer, or by either.

A machine learning classifier is trained on the prior knowledge to predict study relevance (labels) from a representation of each record's text (feature space). We purposefully chose not to include an author name or citation network representation in the feature space, to prevent authority bias in the inclusions. The active learning cycle then proceeds as follows:

1. The software presents one new record to be screened and labelled by the user.
2. The user gives a binary label (1 for relevant, 0 for irrelevant).
3. The label is used to train a new model, after which the next record is presented.

This cycle continues until a user-specified stopping criterion is reached. The user then has a file with two parts:

(1) records labelled as either relevant or irrelevant;
(2) unlabelled records ordered from most to least likely to be relevant, as predicted by the current model.

This set-up helps the user move through a large database much more quickly than in the manual process, while keeping the decision process transparent.
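The cycle described above can be sketched in plain Python. The sketch runs in a simulation-style setting, where the researcher's decisions are known in advance. It is a minimal illustration, not ASReview's implementation:

- the word-count naive Bayes stands in for the default TF–IDF plus naive Bayes model;
- the fixed screening `budget` stands in for a user-specified stopping criterion;
- all names (`NaiveBayes`, `screen`, `oracle`, `budget`) are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    # Whitespace tokenisation; a simplification for this sketch.
    return text.lower().split()


class NaiveBayes:
    """Tiny multinomial naive Bayes on raw word counts (stand-in classifier)."""

    def fit(self, docs, labels):
        self.counts = {0: Counter(), 1: Counter()}
        self.n_docs = Counter(labels)  # needs at least one 0 and one 1
        for doc, y in zip(docs, labels):
            self.counts[y].update(tokenize(doc))
        self.vocab = set(self.counts[0]) | set(self.counts[1])
        self.totals = {y: sum(c.values()) for y, c in self.counts.items()}
        return self

    def prob_relevant(self, doc):
        n = sum(self.n_docs.values())
        log_p = {}
        for y in (0, 1):
            lp = math.log(self.n_docs[y] / n)
            for w in tokenize(doc):
                # Laplace (add-one) smoothing over the training vocabulary.
                lp += math.log((self.counts[y][w] + 1) / (self.totals[y] + len(self.vocab)))
            log_p[y] = lp
        m = max(log_p.values())  # subtract the max for numerical stability
        e0, e1 = math.exp(log_p[0] - m), math.exp(log_p[1] - m)
        return e1 / (e0 + e1)


def screen(records, oracle, prior, budget):
    """Train, show the most likely relevant unlabelled record, store the label, repeat."""
    labelled = dict(prior)  # index -> 0/1; prior must hold at least one of each
    while True:
        pool = [i for i in range(len(records)) if i not in labelled]
        model = NaiveBayes().fit([records[i] for i in labelled], list(labelled.values()))
        ranked = sorted(pool, key=lambda i: model.prob_relevant(records[i]), reverse=True)
        if not ranked or len(labelled) - len(prior) >= budget:
            # Labelled records plus unlabelled ones, most to least probably relevant.
            return labelled, ranked
        nxt = ranked[0]  # certainty-based ("max") query
        labelled[nxt] = oracle(nxt)  # the researcher's binary decision


records = [
    "active learning for systematic review screening",
    "deep learning for protein folding",
    "machine learning aided title abstract screening",
    "crop yields under drought conditions",
    "active learning reduces screening workload",
    "history of medieval trade routes",
]
truth = [1, 0, 1, 0, 1, 0]  # known decisions, as in a simulation
labelled, rest = screen(records, oracle=lambda i: truth[i], prior={0: 1, 1: 0}, budget=2)
print(labelled, rest)  # → {0: 1, 1: 0, 4: 1, 2: 1} [3, 5]
```

The budget allows two screened records. With it, the sketch finds both remaining relevant records (4, then 2) before showing any irrelevant one, and leaves records 3 and 5 at the bottom of the ranking.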

Software implementation for ASReview

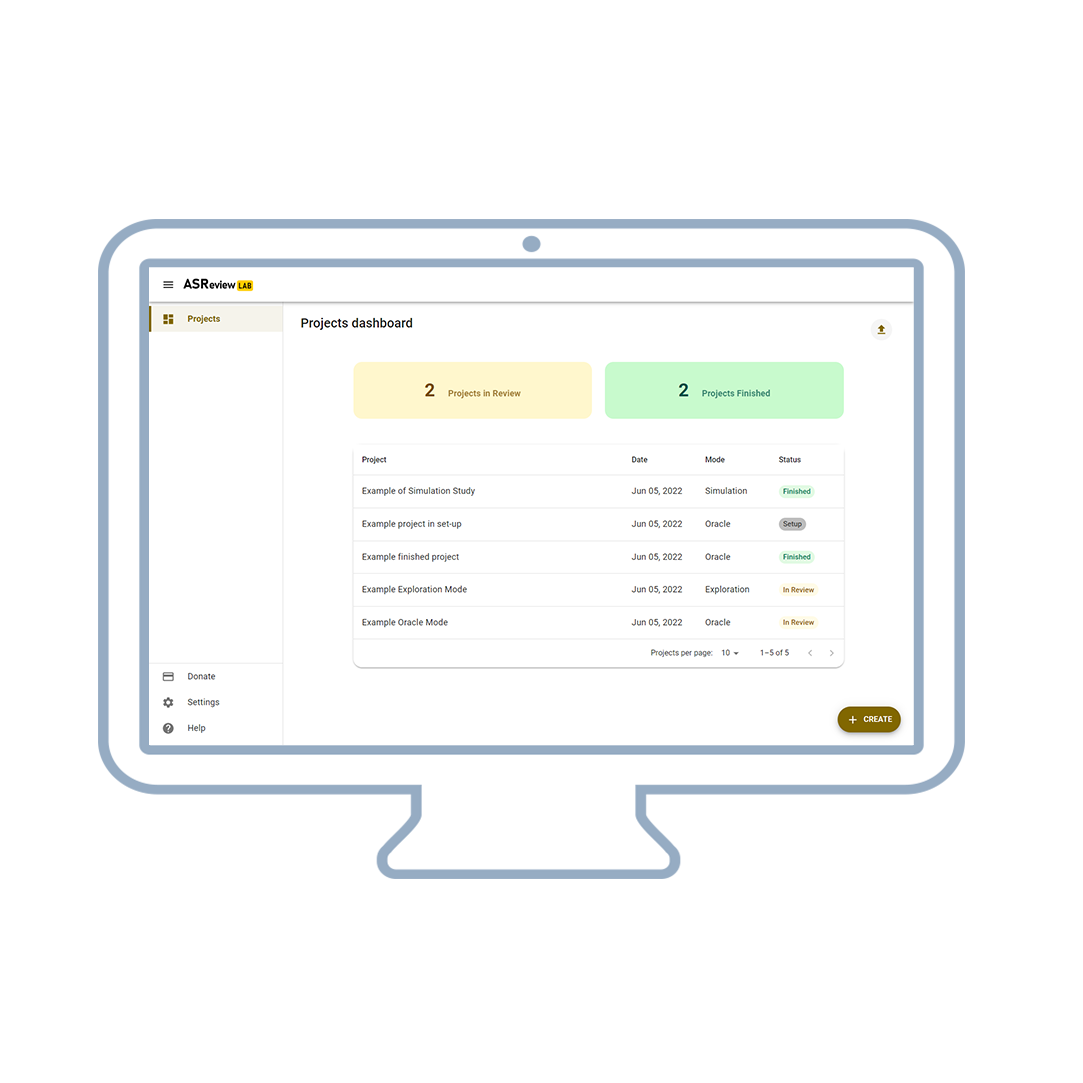

The source code 27 of ASReview is available open source under an Apache 2.0 license, including documentation 28 . Compiled and packaged versions of the software are available on the Python Package Index 29 or Docker Hub 30 . The free and ready-to-use software ASReview implements oracle, simulation and exploration modes. The oracle mode is used to perform a systematic review with interaction by the user, the simulation mode is used for simulation of the ASReview performance on existing datasets, and the exploration mode can be used for teaching purposes and includes several preloaded labelled datasets.

The oracle mode presents records to the researcher, who classifies them. Two kinds of file format are supported:

(1) RIS files, which are used by digital libraries such as IEEE Xplore, Scopus and ScienceDirect and are also supported by the citation managers Mendeley, RefWorks, Zotero and EndNote;
(2) tabular datasets with the .csv, .xlsx and .xls file extensions. CSV files should be comma separated and UTF-8 encoded. For CSV files, the software accepts a set of predetermined column labels in line with the ones used in RIS files.

Each record in the dataset should hold the metadata on, for example, a scientific publication. The mandatory metadata is text, for example the titles or abstracts of scientific papers. If both are available, both are used to train the model, but at least one is needed. An advanced option splits the title and abstract in the feature-extraction step and weights the two feature matrices independently (for TF–IDF only). Other metadata such as author, date, DOI and keywords are optional and are not used for training the models.

When ASReview is used in the simulation or exploration mode, an additional binary variable is required to indicate historical labelling decisions. This column is automatically detected. In the oracle mode, it can also supply background knowledge on previously selected relevant papers before the active learning cycle starts. If it is unavailable, the user has to select at least one relevant record, which can be identified by searching the pool of records. At least one irrelevant record should also be identified. The software lets the user search for specific records, or it presents random records; given the extremely imbalanced data, these are most likely to be irrelevant.
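The tabular-input rules above can be sketched with Python's standard `csv` module. The rules are: a title and/or abstract per record, an optional binary label column, and at least one relevant and one irrelevant record before active learning starts. The column names in `TITLE_COLS`, `ABSTRACT_COLS` and `LABEL_COLS` are assumptions for illustration, not ASReview's documented list, and both helper functions are hypothetical.

```python
import csv
import io

# Hypothetical column names for this sketch; ASReview's documentation lists
# the labels it actually recognises.
TITLE_COLS = ("title",)
ABSTRACT_COLS = ("abstract",)
LABEL_COLS = ("included", "label_included")


def _find(fieldnames, candidates):
    lookup = {name.strip().lower(): name for name in fieldnames}
    return next((lookup[c] for c in candidates if c in lookup), None)


def load_records(text):
    """Parse comma-separated CSV text into (text, label) pairs; label is 1, 0 or None."""
    reader = csv.DictReader(io.StringIO(text))
    fieldnames = reader.fieldnames or []
    title_col = _find(fieldnames, TITLE_COLS)
    abstract_col = _find(fieldnames, ABSTRACT_COLS)
    label_col = _find(fieldnames, LABEL_COLS)
    if title_col is None and abstract_col is None:
        raise ValueError("need a title and/or an abstract column")
    records = []
    for row in reader:
        parts = [(row.get(c) or "").strip() for c in (title_col, abstract_col) if c]
        body = " ".join(p for p in parts if p)
        if not body:
            raise ValueError("every record needs a title or an abstract")
        raw = (row.get(label_col) or "").strip() if label_col else ""
        label = int(raw) if raw in ("0", "1") else None  # None = not yet screened
        records.append((body, label))
    return records


def has_prior_knowledge(records):
    """True if at least one relevant (1) and one irrelevant (0) record is labelled."""
    labels = {label for _, label in records}
    return {0, 1} <= labels


sample = (
    "title,abstract,included\n"
    "Active learning for screening,Uses naive Bayes.,1\n"
    "Unrelated paper,,0\n"
    "Another paper,Some abstract,\n"
)
records = load_records(sample)
print(records)
print(has_prior_knowledge(records))
```

For a file on disk, open it with `open(path, newline="", encoding="utf-8-sig")` and pass its contents to `load_records`. The `utf-8-sig` codec also strips a byte-order mark if one is present.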

The software has a simple yet extensible default model: a naive Bayes classifier, TF–IDF feature extraction, a dynamic resampling balance strategy 31 and certainty-based sampling 17 , 32 for the query strategy. These defaults were chosen on the basis of their consistently high performance in benchmark experiments across several datasets 31 . Moreover, the low computation time of these default settings makes them attractive in applications, given that the software should be able to run locally. Users can change the settings, shown in Table 2 , and technical details are described in our documentation 28 . Users can also add their own classifiers, feature extraction techniques, query strategies and balance strategies.
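The default configuration can be illustrated with a minimal, dependency-free sketch of one active learning step: lowercased unigram TF–IDF features, a multinomial naive Bayes classifier and certainty-based (max) sampling. This is not ASReview's implementation, which builds on scikit-learn; the function names are hypothetical, the smoothed IDF only mimics scikit-learn's default, and the balance strategy is left out for brevity. It assumes at least one relevant and one irrelevant record have been labelled, as the software requires.

```python
import math
from collections import Counter


def tfidf(docs):
    """Lowercased unigram TF-IDF vectors (no stop-word removal) as dicts."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed IDF, similar in spirit to scikit-learn's default.
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}
    vectors = []
    for tokens in tokenized:
        weights = {t: c * idf[t] for t, c in Counter(tokens).items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({t: w / norm for t, w in weights.items()})
    return vectors


def train_nb(vectors, labels, alpha=1.0):
    """Multinomial naive Bayes on TF-IDF weights with Laplace smoothing."""
    vocab = {t for vec in vectors for t in vec}
    model = {}
    for cls in (0, 1):
        rows = [vec for vec, y in zip(vectors, labels) if y == cls]
        totals = Counter()
        for vec in rows:
            totals.update(vec)  # adds the float weights term by term
        denom = sum(totals.values()) + alpha * len(vocab)
        log_theta = {t: math.log((totals[t] + alpha) / denom) for t in vocab}
        unseen = math.log(alpha / denom)  # terms absent from training data
        model[cls] = (math.log(len(rows) / len(labels)), log_theta, unseen)
    return model


def prob_relevant(model, vec):
    """Posterior probability that a record is relevant (class 1)."""
    scores = {
        cls: prior + sum(w * log_theta.get(t, unseen) for t, w in vec.items())
        for cls, (prior, log_theta, unseen) in model.items()
    }
    top = max(scores.values())  # subtract the max for numerical stability
    exp = {cls: math.exp(s - top) for cls, s in scores.items()}
    return exp[1] / (exp[0] + exp[1])


def query_max(docs, labels):
    """Fit on labelled records (1/0) and return the index of the unlabelled
    record (None) with the highest predicted relevance."""
    vectors = tfidf(docs)
    train = [i for i, y in enumerate(labels) if y is not None]
    model = train_nb([vectors[i] for i in train], [labels[i] for i in train])
    pool = [i for i, y in enumerate(labels) if y is None]
    return max(pool, key=lambda i: prob_relevant(model, vectors[i]))
```

For example, with one relevant record about active learning for systematic reviews and one irrelevant soup recipe, `query_max` ranks an unlabelled record mentioning "learning", "systematic" and "review" above the others. Naive Bayes keeps each iteration cheap, which matches the stated motivation of running the software locally.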

ASReview has a number of implemented features (see Table 2 ). First, several classifiers are available: (1) naive Bayes; (2) support vector machines; (3) logistic regression; (4) neural networks; (5) random forests; (6) LSTM-base, which consists of an embedding layer, an LSTM layer with one output, a dense layer and a single sigmoid output node; and (7) LSTM-pool, which consists of an embedding layer, an LSTM layer with many outputs, a max pooling layer and a single sigmoid output node. The available feature extraction techniques are Doc2Vec 33 , embedding LSTM, embedding with IDF or TF–IDF 34 (the default is unigrams, with the option to use n-grams, while other parameters are set to the scikit-learn defaults 35 ) and sBERT 36 . The available query strategies for the active learning part are (1) random selection, which ignores model-assigned probabilities; (2) uncertainty-based sampling, which chooses the record the model is most uncertain about (that is, closest to 0.5 probability); (3) certainty-based sampling (max in ASReview), which chooses the record most likely to be included according to the model; and (4) mixed sampling, which uses a combination of random and certainty-based sampling.
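The four query strategies differ only in how they pick the next record from the model's predicted probabilities. A minimal sketch, assuming those probabilities are already computed; the function `query` and its `mix_ratio` parameter (the chance that mixed sampling takes the certainty-based pick rather than a random one) are hypothetical, and ASReview's own mixed strategy may combine the two differently.

```python
import random


def query(probabilities, pool, strategy="max", rng=None, mix_ratio=0.95):
    """Pick the next record to screen from the unlabelled pool.

    probabilities: mapping record index -> predicted probability of relevance.
    mix_ratio: hypothetical chance that 'mixed' uses certainty-based selection.
    """
    rng = rng or random.Random()
    if strategy == "random":
        # Ignore the model entirely.
        return rng.choice(pool)
    if strategy == "uncertainty":
        # Record whose probability is closest to 0.5.
        return min(pool, key=lambda i: abs(probabilities[i] - 0.5))
    if strategy == "max":
        # Certainty-based: record most likely to be relevant.
        return max(pool, key=lambda i: probabilities[i])
    if strategy == "mixed":
        inner = "max" if rng.random() < mix_ratio else "random"
        return query(probabilities, pool, inner, rng, mix_ratio)
    raise ValueError(f"unknown strategy: {strategy}")
```

Certainty-based sampling suits screening because the goal is to surface relevant records early, whereas uncertainty-based sampling targets the records that would most improve the classifier.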

Several balance strategies rebalance and reorder the training data. This is necessary because the data are typically extremely imbalanced. We have implemented the following balance strategies: (1) full sampling, which uses all of the labelled records; (2) undersampling of the irrelevant records so that the included and excluded records are in a particular ratio (closer to one); and (3) dynamic resampling, a novel method similar to undersampling in that it decreases the imbalance of the training data 31 . However, in dynamic resampling, the number of irrelevant records is decreased, whereas the number of relevant records is increased by duplication, such that the total number of records in the training data remains the same. The ratio between relevant and irrelevant records is not fixed over iterations but is dynamically updated depending on the number of labelled records, the total number of records and the ratio between relevant and irrelevant records. Details on all of the described algorithms can be found in the code and documentation referred to above.
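The size-preserving idea behind dynamic resampling can be sketched as follows. This is an illustration under a simplifying assumption, not ASReview's algorithm: the hypothetical `target_fraction` argument fixes the desired share of relevant records, whereas ASReview recomputes the ratio at every iteration from the quantities listed above.

```python
import random


def dynamic_resample(relevant, irrelevant, target_fraction, rng=None):
    """Rebalance the labelled training data without changing its size.

    target_fraction is a hypothetical, fixed desired share of relevant
    records; ASReview instead updates the ratio at every iteration from the
    number of labelled records, the total number of records and the current
    relevant/irrelevant ratio.
    """
    if not relevant or not irrelevant:
        raise ValueError("need at least one relevant and one irrelevant record")
    rng = rng or random.Random()
    total = len(relevant) + len(irrelevant)
    # Never drop relevant records, and keep at least one irrelevant record.
    n_rel = min(max(len(relevant), round(target_fraction * total)), total - 1)
    n_irr = total - n_rel
    # Relevant records are duplicated (sampled with replacement) ...
    extra = [rng.choice(relevant) for _ in range(n_rel - len(relevant))]
    # ... while irrelevant records are undersampled without replacement.
    kept_irrelevant = rng.sample(irrelevant, n_irr)
    return relevant + extra, kept_irrelevant
```

With 2 relevant and 18 irrelevant records and a target share of 0.5, the result holds 10 relevant records (the 2 originals plus 8 duplicates) and 10 of the irrelevant ones, so the training set still contains 20 records.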

By default, ASReview converts the records' texts into a document-term matrix; terms are converted to lowercase and no stop words are removed (this can be changed). As the document-term matrix is identical in each iteration of the active learning cycle, it is generated in advance of model training and stored in the (active learning) state file. Each row of the document-term matrix can easily be requested from the state file. Records are internally identified by their row number in the input dataset. In oracle mode, the record selected for classification is retrieved from the state file, and the record text and other metadata (such as title and abstract) are retrieved from the original dataset (from the file or the computer's memory). ASReview can run on your local computer or on a (self-hosted) local or remote server. Data (all records and their labels) remain on the user's computer. Data ownership and confidentiality are crucial, and no data are processed or used in any way by third parties. This distinguishes ASReview from some of the existing systems, as shown in the last column of Table 1 .
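Precomputing the matrix once and then addressing rows by record number can be sketched with the standard library. The sketch assumes a simple `\w+` tokenizer and keeps the "state" as an in-memory dict; the function names are hypothetical, and writing the state to disk, as ASReview does with its state file, is left out.

```python
import re
from collections import Counter


def build_state(texts, stop_words=frozenset()):
    """Build the document-term matrix once, before the active learning loop.

    Terms are lowercased; stop words are removed only if the caller passes
    them (none by default). Each row is a sparse term -> count mapping, and a
    record's identifier is its row number in the input dataset.
    """
    rows = []
    for text in texts:
        tokens = re.findall(r"\w+", text.lower())
        rows.append(Counter(t for t in tokens if t not in stop_words))
    vocabulary = sorted({t for row in rows for t in row})
    return {"vocabulary": vocabulary, "matrix": rows}


def get_row(state, row_number):
    """Request one row of the precomputed matrix by record row number."""
    return state["matrix"][row_number]
```

Because every iteration reuses the same matrix, only the classifier is refitted as labels accumulate; the record text itself is looked up in the original dataset when it is shown to the user.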

Real-world use cases and high-level function descriptions

Below we highlight a number of real-world use cases and high-level function descriptions for using the pipeline of ASReview.

ASReview can be integrated into classic systematic reviews or meta-analyses. Such reviews or meta-analyses entail several explicit and reproducible steps, as outlined in the PRISMA guidelines 4 . Scholars identify all likely relevant publications in a standardized way, screen the retrieved publications to select eligible studies on the basis of defined eligibility criteria, extract data from eligible studies and synthesize the results. ASReview fits into this process particularly in the abstract screening phase. It does not replace the initial step of collecting all potentially relevant studies. As such, results from ASReview depend on the quality of the initial search process, including the selection of databases 24 and the construction of comprehensive searches using keywords and controlled vocabulary. However, ASReview can be used to broaden the scope of the search (by expanding keywords or omitting limitations in the search query), which yields a larger initial set of papers and thereby limits the risk of missing relevant papers during the search phase (that is, it places more focus on recall than on precision).

Furthermore, many reviewers nowadays move towards meta-reviews when analysing very large literature streams, that is, systematic reviews of systematic reviews 37 . This can be problematic because the included reviews may use different eligibility criteria and are therefore not always directly comparable. Owing to ASReview's efficiency, scholars using the tool could instead analyse the primary papers directly rather than relying on existing systematic reviews. ASReview also supports the rapid update of a systematic review: the papers included in the initial review are used to train the machine learning model before screening of the updated set of papers starts. This allows the researcher to screen the updated set quickly on the basis of decisions made in the initial run.

As an example case, consider the current literature on COVID-19 and the coronavirus. An enormous number of papers are being published on COVID-19, and finding relevant papers manually (for example, to develop treatment guidelines) is very time consuming. This is especially problematic because overviews are urgently required. Medical guidelines rely on comprehensive systematic reviews, but the medical literature is growing at breakneck pace and the quality of the research is not universally adequate for summarization into policy 38 . Such reviews must follow adequate protocols with explicit and reproducible steps, including identifying all potentially relevant papers, extracting data from eligible studies, assessing the potential for bias and synthesizing the results into medical guidelines. Researchers need to screen (tens of) thousands of COVID-19-related studies by hand to find relevant papers to include in their overview. Using ASReview, this can be done far more efficiently: researchers select key papers that match their (COVID-19) research question in the first step, which starts the active learning cycle so that the most relevant COVID-19 papers for their research question are presented next. A plug-in was therefore developed for ASReview 39 that contains three databases, which are updated automatically whenever the owners of the data release a new version: (1) the CORD-19 database, developed by the Allen Institute for AI, covering publications on COVID-19 and other coronavirus research (for example, SARS and MERS) from PubMed Central, the WHO COVID-19 database of publications, the preprint servers bioRxiv and medRxiv, and papers contributed by specific publishers 40 . The CORD-19 dataset is updated daily by the Allen Institute for AI and is also updated daily in the plug-in. (2) In addition to the full dataset, we automatically construct a daily subset of the database with studies published after December 1st, 2019, to search for relevant papers published during the COVID-19 crisis. (3) A separate dataset of COVID-19-related preprints, containing metadata of preprints from over 15 preprint servers across disciplines, published since January 1st, 2020 41 . The preprint dataset is updated weekly by its maintainers and then automatically updated in ASReview as well. As this dataset is not readily available to researchers through regular search engines (for example, PubMed), its inclusion in ASReview provides added value to researchers interested in COVID-19 research, especially if they want a quick way to screen preprints specifically.
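Constructing the date-restricted subset in (2) amounts to filtering records by publication date. A minimal sketch, assuming each record carries a `publish_time` string in `YYYY-MM-DD` form; both the field name and the decision to include December 1st itself are assumptions, and records with missing or partial dates (for example, a year only) are simply dropped here.

```python
from datetime import date


def covid_era_subset(records, cutoff=date(2019, 12, 1)):
    """Keep records whose publication date is on or after the cutoff.

    Assumes a hypothetical 'publish_time' field holding a YYYY-MM-DD string;
    records with a missing or unparseable date are skipped.
    """
    subset = []
    for rec in records:
        try:
            published = date.fromisoformat(rec.get("publish_time") or "")
        except ValueError:
            continue  # missing or partial date
        if published >= cutoff:
            subset.append(rec)
    return subset
```

Rerunning such a filter after each upstream release keeps the subset in step with the full dataset.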

Simulation study

To evaluate the performance of ASReview on a labelled dataset, users can employ the simulation mode. As an example, we ran simulations based on four labelled datasets with version 0.7.2 of ASReview. All scripts to reproduce the results in this paper can be found on Zenodo ( https://doi.org/10.5281/zenodo.4024122 ) 42 , whereas the results are available at OSF ( https://doi.org/10.17605/OSF.IO/2JKD6 ) 43 .
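In simulation mode, the researcher's decisions are replaced by the labels already present in the dataset. A minimal sketch of that loop, assuming a hypothetical scoring function in place of ASReview's classifier (here a crude word-overlap heuristic, not naive Bayes) together with certainty-based selection. It returns the labels in screening order, which is the input needed for metrics such as WSS.

```python
def simulate(texts, labels, prior, score):
    """Replay a labelled dataset: the 'oracle' answers come from `labels`.

    prior: indices of records treated as already labelled (prior knowledge).
    score: hypothetical model function mapping (texts, labelled) to a
           relevance score for every unlabelled record index.
    Returns the labels of the remaining records in screening order.
    """
    labelled = {i: labels[i] for i in prior}
    order = []
    while len(labelled) < len(texts):
        scores = score(texts, labelled)  # "retrain" on current labels
        nxt = max(scores, key=scores.get)  # certainty-based (max) query
        labelled[nxt] = labels[nxt]  # oracle answer read from the dataset
        order.append(labels[nxt])
    return order


def overlap_score(texts, labelled):
    """Stand-in model: word overlap with relevant minus irrelevant records."""
    words = [set(t.lower().split()) for t in texts]
    rel = set().union(*(words[i] for i, y in labelled.items() if y == 1))
    irr = set().union(*(words[i] for i, y in labelled.items() if y == 0))
    return {i: len(words[i] & rel) - len(words[i] & irr)
            for i in range(len(texts)) if i not in labelled}
```

A good model front-loads the 1s in the returned order; a random ordering spreads them evenly.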

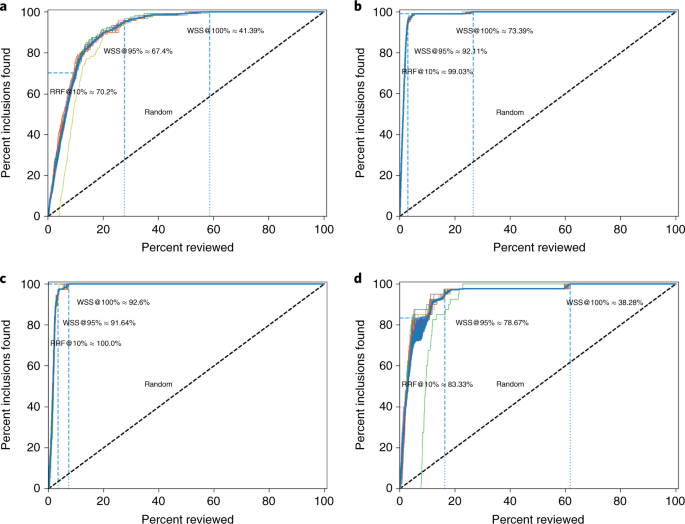

First, we analysed the performance on a systematic review of studies that performed viral metagenomic next-generation sequencing in common livestock such as cattle, small ruminants, poultry and pigs 44 . Studies were retrieved from Embase (n = 1,806), Medline (n = 1,384), Cochrane Central (n = 1), Web of Science (n = 977) and Google Scholar (n = 200, the top relevant references). After deduplication, the initial search yielded 2,481 studies, of which 120 were inclusions (4.84%).

A second simulation study was performed on the results of a systematic review of studies on fault prediction in software engineering 45 . Studies were obtained from the ACM Digital Library, IEEE Xplore and the ISI Web of Science. In addition, a snowballing strategy and a manual search were conducted, yielding 8,911 publications in total, of which 104 were included in the systematic review (1.2%).

A third simulation study was performed on a review of longitudinal studies that applied unsupervised machine learning techniques to longitudinal data on self-reported symptoms of post-traumatic stress assessed after trauma exposure 46 , 47 . In total, 5,782 studies were obtained by searching PubMed, Embase, PsycINFO and Scopus and through a snowballing strategy in which both the references and the citations of the included papers were screened. Thirty-eight studies were included in the review (0.66%).

A fourth simulation study was performed on the results for a systematic review on the efficacy of angiotensin-converting enzyme inhibitors, from a study collecting various systematic review datasets from the medical sciences 15 . The collection is a subset of 2,544 publications from the TREC 2004 Genomics Track document corpus 48 . This is a static subset from all MEDLINE records from 1994 through 2003, which allows for replicability of results. Forty-one publications were included in the review (1.6%).
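The inclusion rates quoted for the four datasets follow directly from the reported counts. The short check below recomputes them (the dataset labels are descriptive shorthand, not names used by the authors): it gives roughly 4.84%, 1.17%, 0.66% and 1.61%, the second and fourth of which the text rounds to 1.2% and 1.6%. It also sums the per-database hits of the first dataset before deduplication.

```python
# (records in the dataset, included records), as reported above.
datasets = {
    "viral metagenomics in livestock": (2481, 120),
    "software fault prediction": (8911, 104),
    "post-traumatic stress symptoms": (5782, 38),
    "ACE inhibitors": (2544, 41),
}

# Inclusion rate = included records / all records, in percent.
rates = {name: 100 * inc / total for name, (total, inc) in datasets.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.2f}%")

# First dataset: hits per database (Embase, Medline, Cochrane Central,
# Web of Science, Google Scholar) before deduplication.
hits = 1806 + 1384 + 1 + 977 + 200
print(f"hits before deduplication: {hits}")
```

So deduplication reduced 4,368 hits to 2,481 unique studies in the first dataset. All four rates are below 5%, which is the extreme imbalance that motivates the balance strategies.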

Performance metrics

We evaluated the four datasets using three performance metrics. We first assessed the work saved over sampling (WSS), which is the percentage reduction in the number of records that need to be screened when using active learning instead of screening records in random order. WSS is measured at a given level of recall of relevant records, for example 95%, and indicates the reduction in screening effort at the cost of failing to detect 5% of the relevant records. For some researchers it is essential that all relevant literature on the topic is retrieved; this requires a recall of 100% (that is, WSS@100%). We also propose the number of relevant references found after screening the first 10% of the records (RRF10%). This metric is useful for getting a quick overview of the relevant literature.
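Both metrics can be computed from the labels of the records in the order they were screened. The sketch below uses the widely used definition WSS@r = (N − n_r)/N − (1 − r), where N is the number of records and n_r the number screened to reach recall r. It expresses RRF as the share of all relevant records found, and rounding the 10% cut-off up to a whole record is an assumption. The function names are hypothetical.

```python
import math


def records_to_recall(ranked_labels, recall):
    """Records screened, in ranked order, until `recall` of the relevant
    records has been found (labels: 1 = relevant, 0 = irrelevant)."""
    n_relevant = sum(ranked_labels)
    # round() guards against floating-point artefacts in recall * n_relevant.
    needed = math.ceil(round(recall * n_relevant, 9))
    found = 0
    for screened, label in enumerate(ranked_labels, start=1):
        found += label
        if found >= needed:
            return screened
    raise ValueError("recall level not reachable")


def wss(ranked_labels, recall=0.95):
    """Work saved over sampling at the given recall level (as a fraction)."""
    n = len(ranked_labels)
    return (n - records_to_recall(ranked_labels, recall)) / n - (1 - recall)


def rrf(ranked_labels, fraction=0.10):
    """Share of all relevant records found after screening `fraction` of the
    records (rounding the number of screened records up is an assumption)."""
    n_screened = math.ceil(round(fraction * len(ranked_labels), 9))
    return sum(ranked_labels[:n_screened]) / sum(ranked_labels)
```

For instance, if 95% of the relevant records are found after screening 40% of a dataset, WSS@95% = 0.60 − 0.05 = 0.55. Random screening would need about 95% of the records for the same recall, which gives a WSS of roughly zero.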