An Introduction to Web Scraping for Research

Like web archiving, web scraping is a process by which you can collect data from websites and save it for further research or preserve it over time. Also like web archiving, web scraping can be done through manual selection or it can involve the automated crawling of web pages using pre-programmed scraping applications.

Unlike web archiving, which is designed to preserve the look and feel of websites, web scraping is mostly used for gathering textual data. Most web scraping tools also allow you to structure the data as you collect it. So, instead of massive unstructured text files, you can transform your scraped data into spreadsheet, CSV, or database formats that allow you to analyze and use it in your research.
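As a minimal sketch of what "structuring" scraped data can look like, the Python snippet below writes a few records to CSV using only the standard library. The records, the field names, and the `rows_to_csv` helper are hypothetical and stand in for whatever a scraper has actually collected.

```python
import csv
import io

# Hypothetical records, standing in for text a scraper has already collected.
records = [
    {"title": "Post one", "author": "alice", "date": "2024-01-05"},
    {"title": "Post two", "author": "bob", "date": "2024-01-06"},
]

def rows_to_csv(rows, fieldnames):
    """Return the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

csv_text = rows_to_csv(records, ["title", "author", "date"])
print(csv_text)
```

The same text could be written to a file instead of a string buffer and then opened in a spreadsheet program or loaded into a database.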

There are many applications for web scraping. Companies use it for market and pricing research, weather services use it to track weather information, and real estate companies harvest data on properties. But researchers also use web scraping to perform research on web forums or social media such as Twitter and Facebook, large collections of data or documents published on the web, and for monitoring changes to web pages over time. If you are interested in identifying, collecting, and preserving textual data that exists online, there is almost certainly a scraping tool that can fit your research needs.

Please be advised that if you are collecting data from web pages, forums, social media, or other web materials for research purposes and it may constitute human subjects research, you must consult with and follow the appropriate UW-Madison Institutional Review Board process as well as follow their guidelines on “Technology & New Media Research”.

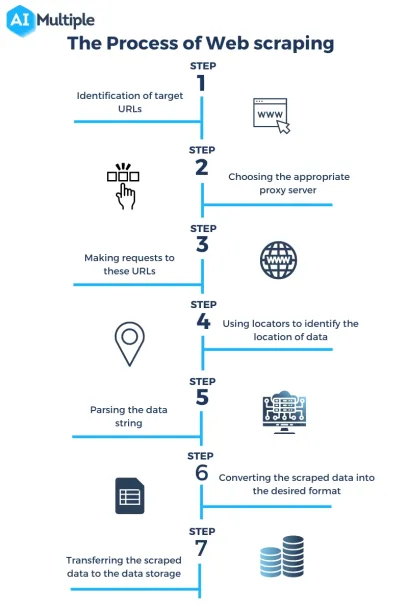

How it Works

The web is filled with text. Some of that text is organized in tables, populated from databases, altogether unstructured, or trapped in PDFs. Most text, though, is structured according to HTML or XHTML markup tags, which instruct browsers how to display it. These tags are designed to help text appear in readable ways on the web, and, like web browsers, web scraping tools can interpret these tags and follow instructions on how to collect the text they contain.
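To make this concrete, here is a small sketch that uses Python's built-in `html.parser` module to collect the text inside paragraph tags. The `ParagraphCollector` class and the sample page are invented for illustration; a real project would first download the page and would likely need to handle messier markup.

```python
from html.parser import HTMLParser

class ParagraphCollector(HTMLParser):
    """Collect the text found inside <p> tags."""

    def __init__(self):
        super().__init__()
        self.in_paragraph = False
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self.in_paragraph = True
            self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag == "p":
            self.in_paragraph = False

    def handle_data(self, data):
        # Text outside <p> tags (headings, whitespace) is ignored.
        if self.in_paragraph:
            self.paragraphs[-1] += data

# Hypothetical page source; a real project would download this first.
sample_html = """
<html><body>
  <h1>Forum thread</h1>
  <p>First <b>reply</b> in the thread.</p>
  <p>Second reply.</p>
</body></html>
"""

collector = ParagraphCollector()
collector.feed(sample_html)
print(collector.paragraphs)
```

The parser follows the tags much as a browser would, but instead of rendering the text it keeps only the parts it was told to collect.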

Web Scraping Tools

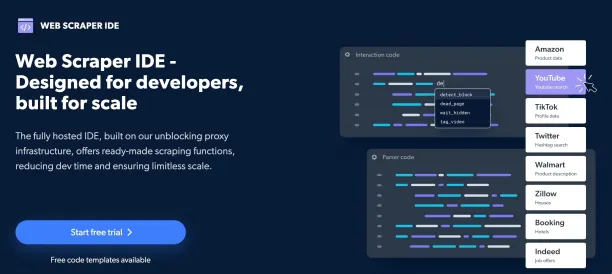

The most crucial step for initiating a web scraping project is to select a tool to fit your research needs. Web scraping tools can range from manual browser plug-ins, to desktop applications, to purpose-built libraries within popular programming languages. The features and capabilities of web scraping tools can vary widely and require different investments of time and learning. Some tools require subscription fees, but many are free and open access.

Browser Plug-in Tools: these tools allow you to install a plugin to your Chrome or Firefox browser. Plug-ins often require more manual work in that you, the user, are going through the pages and selecting what you want to collect. Popular options include:

- Scraper: a Chrome plugin

- Web Scraper.io: available for Chrome and Firefox

Programming Languages: For large-scale, complex scraping projects, sometimes the best option is to use specific libraries within popular programming languages. These tools require more up-front learning, but once set up and running, they are largely automated. It’s important to remember that you don’t always need to be a programming expert to set up and use these tools, and there are often tutorials that can help you get started. Some popular tools designed for web scraping include:

- Scrapy and Beautiful Soup: Python libraries [see tutorial here and here]

- rvest: a package in R [see tutorial here]

- Apache Nutch: a Java library [see tutorial here]

Desktop Applications: Downloading one of these tools to your computer can often provide familiar interface features and generally easy to learn workflows. These tools are often quite powerful, but are designed for enterprise contexts and sometimes come with data storage or subscription fees. Some examples include:

- Parsehub: initially free, but includes data limits and subscription storage past those limits

- Mozenda: powerful subscription-based tool

Application Programming Interface (API): Loosely speaking, a web scraping tool works like an Application Programming Interface (API) in that it helps the client (you, the user) interact with data stored on a server (the text). If you’re gathering data from a large company like Google, Amazon, Facebook, or Twitter, it’s helpful to know that they often have their own APIs that can help you gather the data. Using these ready-made tools can sometimes save time and effort and may be worth investigating before you initiate a project.

The Ethics of Web Scraping

Included in their introduction to web scraping (using Python), Library Carpentry has produced a detailed set of resources on the ethics of web scraping. These include explicit delineations of what is and is not legal as well as helpful guidelines and best practices for collecting data produced by others. On the page they also include a Web Scraping Code of Conduct that provides quick advice about the most responsible ways to approach projects of this kind.

Overall, it’s important to remember that because web scraping involves the collection of data produced by others, it’s necessary to consider all the potential privacy and security implications involved in a project. Prior to your project, ensure you understand what constitutes sensitive data on campus and reach out to both your IT and IRB about your project so you have a data management plan prior to collecting any websites.

Role of Web Scraping in Modern Research – A Practical Guide for Researchers

Bhagyashree

- January 23, 2024

Imagine you’re deep into research when a game-changing tool arrives: web scraping. It’s not just a regular data collector; think of it as an automated assistant that helps researchers efficiently gather online information. Picture this: data on websites that is a bit tricky to download in structured formats. Web scraping steps in to simplify the process.

Techniques range from basic scripts in languages like Python to advanced operations with dedicated web scraping software. Researchers must navigate legal and ethical considerations, adhering to copyright laws and respecting website terms of use. It’s like embarking on a digital quest armed not only with coding skills but also a sense of responsibility in the vast online realm.

Understanding Legal and Ethical Considerations

When engaging in web scraping for research, it’s important to know about certain laws, like the Computer Fraud and Abuse Act (CFAA) in the United States and the General Data Protection Regulation (GDPR) in the European Union. These rules deal with unauthorized access to data and protecting people’s privacy. Researchers must ensure they:

- Obtain data from websites with public access or with explicit permission.

- Respect the terms of service provided by the website.

- Avoid scraping personal data without consent in compliance with international privacy laws.

- Implement ethical considerations, such as not harming the website’s functionality or overloading servers.

Neglecting these aspects can lead to legal consequences and damage the researcher’s reputation.

Choosing the Right Web Scraping Tool

When selecting a web scraping tool, researchers should consider several key factors:

- Complexity of Tasks

- Ease of Use

- Customization

- Data Export Options

- Support and Documentation

By carefully evaluating these aspects, researchers can identify the web scraping tool that best aligns with their project requirements.

Data Collection Methods: API vs. HTML Scraping

When researchers gather data from web sources, they primarily employ two methods: API (Application Programming Interface) pulling and HTML scraping.

APIs serve as interfaces offered by websites, enabling the systematic retrieval of structured data, commonly formatted as JSON or XML. They are designed to be accessed programmatically and can provide a stable and efficient means of data collection, while typically respecting the website’s terms of service.

- Often provides structured data

- Designed for programmatic access

- Generally more stable and reliable

- May require authentication

- Sometimes limited by rate limits or data caps

- Potentially restricted access to certain data
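To illustrate why structured API output is comparatively easy to work with, the sketch below parses a JSON payload with Python's standard `json` module. The payload shape and field names (`results`, `next_page`, and so on) are invented for illustration and do not correspond to any real service.

```python
import json

# Hypothetical API response body; no real service returns exactly this shape.
response_body = """
{
  "results": [
    {"id": 1, "user": "alice", "text": "Great product"},
    {"id": 2, "user": "bob", "text": "Arrived late"}
  ],
  "next_page": null
}
"""

payload = json.loads(response_body)

# Each record already has named fields, so no markup parsing is needed.
rows = [(item["id"], item["user"], item["text"]) for item in payload["results"]]

# Many APIs signal further pages of results; here a null value means "no more".
has_more = payload["next_page"] is not None

print(rows)
print(has_more)
```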

HTML scraping, in contrast, involves extracting data directly from a website’s HTML code. This method can be used when no API is available, or when the API does not provide the required data.

- Can access any data displayed on a webpage

- No API keys or authentication are necessary

- More susceptible to breakage if website layout changes

- Data extracted is unstructured

- Legal and ethical factors need to be considered

Researchers must choose the method that aligns with their data needs, technical capabilities, and compliance with legal frameworks.

Best Practices in Web Scraping for Research

- Respect Legal Boundaries : Confirm the legality of scraping a website and comply with Terms of Service.

- Use APIs When Available : Prefer officially provided APIs as they are more stable and legal.

- Limit Request Rate : To avoid server overload, throttle your scraping speed and automate polite waiting periods between requests.

- Identify Yourself : Through your User-Agent string, be transparent about your scraping bot’s purpose and your contact information.

- Cache Data : Save data locally to minimize repeat requests thus reducing the load on the target server.

- Handle Data Ethically : Protect private information and ensure data usage complies with privacy regulations and ethical guidelines.

- Cite Sources : Properly attribute the source of scraped data in your scholarly work, giving credit to original data owners.

- Use Robust Code : Anticipate and handle potential errors or changes in website structure gracefully to maintain research integrity.
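Several of the practices above (limiting the request rate, identifying yourself, and caching) can be combined in a small wrapper. The following is a sketch under stated assumptions rather than a definitive implementation: the `PoliteFetcher` class, the `http_get` helper, the User-Agent string, the contact address, and the two-second default delay are all hypothetical choices. The demonstration at the end uses a stand-in download function so that it runs without network access.

```python
import time
import urllib.request

# Hypothetical identification string; replace with your own project and contact.
USER_AGENT = "research-scraper-example/0.1 (contact: researcher@example.org)"

def http_get(url):
    """Download a URL, identifying the scraper through its User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")

class PoliteFetcher:
    """Wrap a download function with a minimum delay and an in-memory cache."""

    def __init__(self, fetch=http_get, min_delay=2.0,
                 sleep=time.sleep, clock=time.monotonic):
        self.fetch = fetch
        self.min_delay = min_delay
        self.sleep = sleep
        self.clock = clock
        self.cache = {}
        self.last_request = None

    def get(self, url):
        if url in self.cache:
            # Cached: no request reaches the server.
            return self.cache[url]
        if self.last_request is not None:
            wait = self.min_delay - (self.clock() - self.last_request)
            if wait > 0:
                # Throttle before contacting the server again.
                self.sleep(wait)
        body = self.fetch(url)
        self.last_request = self.clock()
        self.cache[url] = body
        return body

# Demonstration with a stand-in download function (no network access).
calls = []

def fake_fetch(url):
    calls.append(url)
    return "<html>" + url + "</html>"

fetcher = PoliteFetcher(fetch=fake_fetch, min_delay=0.01)
fetcher.get("https://example.org/a")
fetcher.get("https://example.org/a")  # served from the cache
fetcher.get("https://example.org/b")
print(calls)
```

Passing the download, sleep, and clock functions in as parameters is a design choice that keeps the throttling logic easy to test; a long-running project would probably also want to persist the cache to disk.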

Use Cases: How Researchers Are Leveraging Web Scraping

Researchers are applying web scraping to diverse fields:

- Market Research : Extracting product prices, reviews, and descriptions to analyze market trends and consumer behavior.

- Social Science : Scraping social media platforms for public sentiment analysis and to study communication patterns.

- Academic Research : Collecting large datasets from scientific journals for meta-analysis and literature review.

- Healthcare Data Analysis : Aggregating patient data from various health forums and websites to study disease patterns.

- Competitive Analysis : Monitoring competitor websites for changes in pricing, products, or content strategy.

Web Scraping in Modern Research

A recent article by Forbes explores the impact of web scraping on modern research, emphasizing the digital revolution’s transformation of traditional methodologies. Integration of tools like data analysis software and web scraping has shortened the journey from curiosity to discovery, allowing researchers to rapidly test and refine hypotheses. Web scraping plays a pivotal role in transforming the chaotic internet into a structured information repository, providing a multi-dimensional view of the information landscape.

The potential of web scraping in research is vast, catalyzing innovation and redefining disciplines, but researchers must navigate challenges related to data privacy, ethical information sharing, and maintaining methodological integrity for credible work in this new era of exploration.

Overcoming Common Challenges in Web Scraping

Researchers often encounter multiple hurdles while web scraping. To bypass website structures that complicate data extraction, consider employing advanced parsing techniques. When websites limit access, proxy servers can simulate various user locations, reducing the likelihood of getting blocked.

Overcome anti-scraping technologies by mimicking human behavior: adjust scraping speeds and patterns. Moreover, regularly update your scraping tools to adapt to web technologies’ rapid evolution. Finally, ensure legal and ethical scraping by adhering to the website’s terms of service and robots.txt protocols.
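Checking robots.txt can be automated. As a minimal sketch, Python's standard `urllib.robotparser` module can read a site's rules and report whether a given path may be fetched. The robots.txt content and the bot name below are hypothetical; a real scraper would load the file from the target site (for example with the parser's `set_url` and `read` methods).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one is served from the site's root.
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

agent = "research-scraper-example"  # hypothetical bot name

# Paths outside /private/ are allowed; paths inside it are not.
print(parser.can_fetch(agent, "https://example.org/articles/1"))
print(parser.can_fetch(agent, "https://example.org/private/data"))

# The requested delay between requests, in seconds, if the site states one.
print(parser.crawl_delay(agent))
```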

Web scraping, when conducted ethically, can be a potent tool for researchers. To harness its power:

- Understand and comply with legal frameworks and website terms of service.

- Implement robust data handling protocols to respect privacy and data protection.

- Use scraping judiciously, avoiding overloading servers.

Responsible web scraping for research balances information gathering with respect for the digital ecosystem. The power of web scraping must be wielded thoughtfully, ensuring it remains a valuable aid to research, not a disruptive force.

Is web scraping detectable?

Yes, websites can detect web scraping using measures like CAPTCHA or IP blocking, designed to identify automated scraping activities. Being aware of these detection methods and adhering to a website’s rules is crucial for individuals engaged in web scraping to avoid detection and potential legal consequences.

What is web scraping as a research method?

Web scraping is a technique researchers use to automatically collect data from websites. By employing specialized tools, they can efficiently organize information from the internet, enabling a quicker analysis of trends and patterns. This not only streamlines the research process but also provides valuable insights, contributing to faster decision-making compared to manual methods.

Is it legal to use web scraped data for research?

The legality of using data obtained through web scraping for research depends on the rules set by the website and prevailing privacy laws. Researchers need to conduct web scraping in a manner that aligns with the website’s guidelines and respects individuals’ privacy. This ethical approach ensures that the research is not only legal but also maintains its credibility and reliability.

Do data scientists use web scraping?

Absolutely, data scientists frequently rely on web scraping as a valuable tool in their toolkit. This technique enables them to gather a substantial volume of data from various internet sources, facilitating the analysis of trends and patterns. While web scraping is advantageous, data scientists must exercise caution, ensuring that their practices align with ethical guidelines and the rules governing web scraping to maintain responsible and legal usage.

Scraping Web Data for Marketing Insights

Learn how to use web scraping and APIs to build valid web data sets for academic research.

Journal of Marketing (Vol. 86, Issue 5, 2022)

Learn how to scrape⚡️

Follow technical tutorials on using web scraping and APIs for data retrieval from the web.

Discover datasets and APIs

Browse our directory of public web datasets and APIs for use in academic research projects.

Seek inspiration from 400+ published papers

Explore the database of published papers in marketing using web data (2001-2022).

- CAREER COLUMN

- 08 September 2020

How we learnt to stop worrying and love web scraping

- Nicholas J. DeVito,

- Georgia C. Richards &

- Peter Inglesby

Nicholas J. DeVito is a doctoral candidate and researcher at the EBM DataLab at the University of Oxford, UK.

Georgia C. Richards is a doctoral candidate and researcher at the EBM DataLab at the University of Oxford, UK.

Peter Inglesby is a software engineer at the EBM DataLab at the University of Oxford, UK.

In research, time and resources are precious. Automating common tasks, such as data collection, can make a project efficient and repeatable, leading in turn to increased productivity and output. You will end up with a shareable and reproducible method for data collection that can be verified, used and expanded on by others: in other words, a computationally reproducible data-collection workflow.

Nature 585, 621-622 (2020)

doi: https://doi.org/10.1038/d41586-020-02558-0

This is an article from the Nature Careers Community, a place for Nature readers to share their professional experiences and advice. Guest posts are encouraged.

How to use web scraping for online research

Research takes time. Whether it is academic research or market research, speeding up the data extraction process can save you days of work and eliminate the possibility of human error. Manual research is alright when copying and pasting information from a few websites. But what about more extensive projects where you need to collect and sort data from hundreds of web pages?

Enter web scraping. Also referred to as data scraping, data mining, data extraction, screen scraping, or web harvesting, web scraping is an automated solution for all your research needs. You tell the scraper what information you are looking for, and it gets you the data, already neatly organized and structured, so you won’t even have to sort it.

What is online research?

Online research refers to using the internet to retrieve data. This includes any type of website through any type of device. It's hard to find a good word to describe the amount of data on the internet: huge? Astronomical? Infinite?

Not to mention that to extract data manually, you would have to read entire web pages to determine whether they are relevant to your research or not, select relevant information, think of a structure and parameters to catalog it…

How can you use web scraping for online research?

Web scraping is the automated process of extracting structured information from a website. Simply put, your scraper will export data from a website and insert it tidily into a spreadsheet or a file. If you need to extract data for your research, this might just be the shortcut you have been looking for. Here are some examples of how you can use web scraping for online research:

Medical research: Monitoring clinical trial results, patient status, and disease incidence and detection (think of the COVID-19 pandemic) are only a few of the possible uses of web scraping in the healthcare and pharmaceutical field.

Academic research: Scholars search for data and facts for analysis, which leads to a greater body of knowledge. Whatever their field of research may be, web scraping certainly speeds up the data collection process and saves energy for analysis, at the university, think tank, and even individual student level.

News research: We have mentioned a few instances in which web scraping was instrumental to investigative journalism. But you don’t need to be a journalist to use a news web scraper! You might just want to stay up to speed with all the new developments in your professional field (like web design, for example) or receive updates every time your soccer team scores a goal. A web scraper can do that for you and store the results neatly so that you can use them in reports, databases, or just keep them handy for later reference.

Market research: Market research is fundamental to the success of every business. You need to know what your competitors are doing and what consumers want in order to sell. As the saying goes, “keep your friends close and your enemies closer.” And thanks to web scraping, your enemies will be closer than ever. Review websites are great places to find customer feedback on your competitors’ performance and activities. Check out these actors to scrape Yelp or Yellow Pages and make web scraping part of your market research.

Advantages of using web scraping for online research

There are several advantages to using web scraping for research. Research can be a tedious, time-consuming, and expensive business. Sometimes, human or financial resources are scarce, resulting in poor and inaccurate results. Here are the most significant advantages of web scraping in research:

Speed: once you have set the parameters for your research, the web scraper sends your request to the website(s), brings you back the answer, and logs it into a file. It needs far less time than you would to read through the whole web page, select the data you want, copy and paste it into a separate file, and then tidy it all up. If the research project is highly ambitious, the scraper might take a few hours or days, which is still much less than it would take you manually.

Convenience: you can export all the extracted data to a spreadsheet or file on your device. You won’t have to waste extra time sorting it because the scraper has already done that for you, as any well-designed scraper will extract structured data. The file will also be available offline when you don’t have internet access.

Cost: research is a lot of work. That is why universities, governments, and organizations usually need to hire a research department or consultants. Web scraping drastically reduces the workforce needed for such projects, therefore cutting costs.

Also, data itself is expensive. If you need to find influencers from a target audience to promote a product, you may not have the capital to buy a database or a directory from a third party. Web scraping allows you to retrieve that data on your own.

Are there risks or downsides to web scraping?

A word of caution to web scraping beginners. The usual rules related to copyright and especially personal data apply to data extracted through web scraping, plus a few more. Web scraping is generally legal, but you need to respect international regulations and the target website's terms of service.

Here are the main things to keep in mind when web scraping:

Getting blocked: Whenever you send a request to a website, the website has to send an answer back to you. This happens very fast, but it still takes time and energy. If you send too many requests too fast, you risk significantly slowing down the whole website or even bringing it down. Alternatively, the website could recognize that the requests are automated and block your IP address (temporarily or permanently). To minimize this risk, consider setting a pause for the program between each request and, more importantly, use a proxy server. Apify Proxy was designed with web scraping in mind, while also respecting the websites scraped.

Sharing: You can’t always share the data you extracted from a website. Some content might be licensed or copyrighted. However, you can always share your web scraping code on platforms such as GitHub so that others might use it.

But I don’t know how to program

You might lack the technical competence needed to build a web scraper yourself. Most people do. But don’t despair: Apify’s no-code tools for extracting data are user-friendly and accessible.

You don’t need to know how to code to use an Apify scraper. The actor scrapes the website in HTML and extracts the data directly in an easily-readable spreadsheet or text format. You can then access the file any time you want for your research, even offline.

Check whether someone in the Apify community has already created the scraper you need in Apify Store or request a custom scraper for your project.

Applications of web scraping in economics and finance.

- Piotr Śpiewanowski, Institute of Economics, Polish Academy of Sciences

- Oleksandr Talavera, Department of Economics, University of Birmingham

- and Linh Vi, Department of Economics, University of Birmingham

- https://doi.org/10.1093/acrefore/9780190625979.013.652

- Published online: 18 July 2022

The 21st-century economy is increasingly built around data. Firms and individuals upload and store enormous amounts of data. Most of the produced data is stored on private servers, but a considerable part is made publicly available across the 1.83 billion websites available online. These data can be accessed by researchers using web-scraping techniques.

Web scraping refers to the process of collecting data from web pages either manually or using automation tools or specialized software. Web scraping is possible and relatively simple thanks to the regular structure of the code used for websites designed to be displayed in web browsers. Websites built with HTML can be scraped using standard text-mining tools, either scripts in popular (statistical) programming languages such as Python, Stata, or R, or stand-alone dedicated web-scraping tools. Some of those tools do not even require any prior programming skills.

Since about 2010, with the omnipresence of social and economic activities on the Internet, web scraping has become increasingly popular among academic researchers. In contrast to proprietary data, which might not be feasible to obtain due to substantial costs, web scraping can make interesting data sources accessible to everyone.

Thanks to web scraping, the data are now available in real time and with significantly more details than what has been traditionally offered by statistical offices or commercial data vendors. In fact, many statistical offices have started using web-scraped data, for example, for calculating price indices. Data collected through web scraping has been used in numerous economic and finance projects and can easily complement traditional data sources.

- web scraping

- online prices

- online vacancies

- web-crawler

Web Scraping and the Digital Economy

Today’s economy is increasingly built around data. Firms and individuals upload and store enormous amounts of data. This has been made possible thanks to the ongoing digital revolution. The price of data storage and data transfer has decreased to the point where the marginal (incremental) cost of storing and transmitting increasing volumes of data has fallen to virtually zero. The total volume of data created and stored rose from a mere 0.8 zettabytes (ZB), or trillion gigabytes, in 2009 to 33 ZB in 2018 and is expected to reach 175 ZB in 2025 (Reinsel et al., 2017). The stored data are shared at an unprecedented speed; in 2017 more than 46,000 GB of data (or four times the size of the entire U.S. Library of Congress) was transferred every second (United Nations Conference on Trade and Development (UNCTAD), 2019).

The digital revolution has produced a wealth of novel data that allows not only testing well-established economic hypotheses but also addressing questions on human interaction that have not been tested outside of the lab. Those new sources of data include social media, various crowd-sourcing projects (e.g., Wikipedia), GPS tracking apps, static location data, or satellite imagery. With the Internet of Things emerging, the scope of data available for scraping is set to increase in the future.

The growth of available data coincides with enormous progress in technology and software used to analyze it. Artificial intelligence (AI) techniques enable researchers to find meaningful patterns in large quantities of data of any type, allowing them to find useful information not only in data tables but also in unstructured text or even in pictures, voice recordings, and videos. The use of this new data, previously not available for quantitative analysis, enables researchers to ask new questions and helps avoid omitted variable bias by including information that has been known to others but not included in quantitative research data sets.

Natural language processing (NLP) techniques have been used for decades to convert unstructured text into structured data that machine-learning tools can analyze to uncover hidden connections. But the digital revolution expands the set of data sources useful to researchers to other media. For example, Gorodnichenko et al. (2021) have recently studied emotions embedded in the voice of Federal Reserve Board governors during press conferences. Using deep learning algorithms, they examined quantitative measures of vocal features of voice recordings such as voice pitch (indicating the level of highness/lowness of a tone) or frequency (indicating the variation in the pitch) to determine the mood/emotion of a speaker. The study shows that the tone of the voice reveals information that has been used by market participants. It is only a matter of time until conceptually similar tools are used to read emotions from facial expressions.

Most of the produced data is stored on private servers, but a considerable part is made publicly available across the 1.83 billion websites available online that are now up for grabs for researchers equipped with basic web-scraping skills. 1

Many online services used in daily life, including search engines and price and news aggregators, would not be possible without web scraping. In fact, even Google, the most popular search engine as of 2021, is just a large-scale web crawler. Automated data collection has also been used in business, for example, for market research and lead generation. Thus, unsurprisingly, this data collection method has also received growing attention in social research.

While new data types and data analysis tools offer new opportunities and one can expect a significant increase in use of video, voice, or picture analysis in the future, web scraping is used predominantly to collect text from websites. Using web scraping, researchers can extract data from various sources to build customized data sets to fit individual research needs.

The information available online on, inter alia, prices (e.g., Cavallo, 2017; Cavallo & Rigobon, 2016; Gorodnichenko & Talavera, 2017), auctions (e.g., Gnutzmann, 2014; Takahashi, 2018), job vacancies (e.g., Hershbein & Kahn, 2018; Kroft & Pope, 2014), or real estate and housing rental information (e.g., Halket & di Custoza, 2015; Piazzesi et al., 2020) has allowed researchers to refine answers to well-known economic questions. 2

Thanks to web scraping, the data that until recently have been available only with a delay and in an aggregated form are now available in real time and with significantly more details than what has been traditionally offered by statistical offices or commercial data vendors. For example, in studies of prices, web scraping performed over extended periods of time allows the collection of price data from all vendors in a given area, with product details (including product identifier) in the desired granularity. Studies of the labor market or real estate markets benefit from extracting information from detailed ad descriptions.

The advantages of web scraping have also been noticed by statistical offices and central banks around the world. In fact, many statistical offices have started using web-scraped data, for example, for calculating price indices. However, data quality, sampling, and representativeness are major challenges and so is legal uncertainty around data privacy and confidentiality (Doerr et al., 2021). Although this is true for all data types, big data exacerbates the problem: most big data produced are not the final product, but rather a by-product of other applications.

What makes web scraping possible and relatively simple is the regular structure of the code used for websites designed to be displayed in web browsers. Websites built with HTML can be scraped using standard text-mining tools, either scripts in popular (statistical) programming languages such as Python or R or stand-alone dedicated web-scraping tools. Some of those tools do not even require any prior programming skills.

Web scraping allows collecting novel data that are unavailable in traditional data sets assembled by public institutions or commercial providers. Given the wealth of data available online, only researchers’ determination and coding skills determine the final scope of the data set to be explored. With web scraping, data can be collected at a very low cost per observation, compared to other methods. Taking control of the data-collecting process allows data to be gathered at the desired frequency from multiple sources in real time, while the entire process can be performed remotely. Although the set of available data is quickly growing, not all data can be collected online. For example, while price data are easily available, information on quantities sold is typically missing. 3

The rest of this article is organized as follows: “Web Scraping Demystified” presents the mechanics of web scraping and introduces some common web-scraping tools, including code snippets that can be reused by readers in their own web-scraping exercises. “Applications in Economics and Finance Research” shows how those tools have been applied in economic research. Finally, “Concluding Remarks” wraps up the subject of web scraping, and suggestions for further reading are offered to help readers master web-scraping skills.

Web Scraping Demystified

This section will present a brief overview of the most common web-scraping concepts and techniques. Knowing these fundamentals, researchers can decide which methods are most relevant and suitable for their projects.

Introduction to HTML

Before starting a web-scraping project, one should be familiar with Hypertext Markup Language (HTML). HTML is a global language used to create web pages and has been developed so that all kinds of computers/devices (e.g., PCs, handheld devices) should be able to understand it. HTML allows users to publish online documents with different contents (e.g., headings, text, tables, images, and hyperlinks), incorporate video clips or sounds, and display forms for searching information, ordering products, and so forth. An HTML document is composed of a series of elements, which serve as labels of different contents and tell the web browser how to display them correctly. For example, the <title> element contains the title of the HTML page, the <body> element accommodates all the content of the page, the <img> element contains images, and the <a> element enables creating links to other content. A website with the underlying HTML code can be found here. To view HTML in any browser, one can just right click and choose the Inspect (or Inspect Element) option.

The code here is taken from an HTML document and contains information on the book’s name, author, description, and price. 4

Each book’s information is enclosed in the <div> tag with attribute class and value “c-book__body”. The book title is contained in the <a> tag with attribute class and value “c-book__title”. Information on author names is embedded in the <span> tag with attribute class and value “c-book__by”. The description of the book is enclosed within the <p> tag with attribute class=“c-book__description”. Finally, the price information can be found in the <span> tag with attribute class=“c-book__price c-price”. Information on all other books listed on this website is coded in the same way. These regularities allow efficient scraping, which is described in the section “Responsible Web Scraping.”
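To make the structure just described more concrete, the following minimal sketch embeds a hypothetical HTML fragment in a Python string and reads it with Python's built-in html.parser module. Only the tag and class names come from the description above; the book details, the SAMPLE_HTML fragment, and the BookParser class are assumptions made for illustration and do not reproduce the actual page source.

```python
from html.parser import HTMLParser

# Hypothetical fragment: only the tag and class names follow the text above;
# the book itself is invented for illustration.
SAMPLE_HTML = """
<div class="c-book__body">
  <a class="c-book__title" href="/example-book">An Example Book</a>
  <span class="c-book__by">Jane Doe</span>
  <p class="c-book__description">A short description.</p>
  <span class="c-book__price c-price">9.99</span>
</div>
"""

# Map each class name mentioned in the text to an output field.
FIELD_BY_CLASS = {
    "c-book__title": "title",
    "c-book__by": "author",
    "c-book__description": "description",
    "c-book__price": "price",
}


class BookParser(HTMLParser):
    """Collect one dict per <div class="c-book__body"> element."""

    def __init__(self):
        super().__init__()
        self.books = []
        self._field = None  # field whose text is expected next

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "c-book__body" in classes:
            self.books.append({})  # a new book record starts here
        for name in classes:
            if name in FIELD_BY_CLASS and self.books:
                self._field = FIELD_BY_CLASS[name]

    def handle_data(self, data):
        # Store the first non-empty text that follows a recognized tag.
        if self._field and data.strip():
            self.books[-1][self._field] = data.strip()
            self._field = None
```

Feeding the fragment to the parser with BookParser().feed(SAMPLE_HTML) fills the books list with one dictionary per book. Because every book on a page is assumed to share the same class names, the same few lines would handle a page with many books.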

To access an HTML page, one should identify its Uniform Resource Locator (URL), which is the web address specifying its location on the Internet. A dynamic URL is usually made up of two main parts, with the first one being the base URL, which lets the web browser know how to access the information specified in the server. The next part is the query string that usually follows a question mark. An example of a URL is https://wordery.com/educational-art-design-YQA?viewBy=grid&resultsPerPage=20&page=1, where the base part is https://wordery.com/educational-art-design-YQA, and the query string part is viewBy=grid&resultsPerPage=20&page=1, which consists of specific queries to the website: displaying products in a grid view (viewBy=grid), showing 20 products in each page (resultsPerPage=20), and loading the first page (page=1) of the Educational Art Design books category. The regular structure of URLs is another feature that makes web scraping easy. Changing the page number (the last digit in the preceding URL) from 1 to 2 will display the subsequent 20 results of the query. This process can continue until the last query result is reached. Thus, it is easy to extract all query results with a simple loop.
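The loop over result pages can be sketched as follows. The snippet only builds the URLs and sends no requests. The helper name page_url and the choice of three pages are assumptions for illustration; in practice the loop would stop when a page returns no further results.

```python
from urllib.parse import urlencode

BASE_URL = "https://wordery.com/educational-art-design-YQA"


def page_url(page, results_per_page=20, view_by="grid"):
    """Return the category URL for one page of query results."""
    query = urlencode(
        {"viewBy": view_by, "resultsPerPage": results_per_page, "page": page}
    )
    return f"{BASE_URL}?{query}"


# Build (but do not request) the URLs of the first three result pages.
urls = [page_url(page) for page in range(1, 4)]
```

Keeping the query parameters in a dictionary, rather than pasting strings together by hand, makes it easy to change the page size or the view without touching the rest of the scraper.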

Responsible Web Scraping

Data displayed by most websites is for public consumption. Recent court rulings indicate that, since publicly available sites cannot require a user to agree to any Terms of Service before accessing data, users are free to use web crawlers to collect data from the sites. 5 Many websites, however, provide (more detailed) data to registered users only, with the registration being conditional on accepting a ban on automated web scraping.

Constraints on web scraping also arise from the potentially excessive use of the website owners’ infrastructure, which can impair service quality. The process of web scraping involves making requests to the host, which in turn has to process each request and send a response back. A data-scraping project usually consists of repeatedly querying and loading a large number of web pages in a short period of time, which might lead to excessive traffic, system overload, and potential damage to the server and its users. With this in mind, a few rules have to be followed when building web scrapers to avoid damage such as website crashes.

Before scraping a website’s data, one should check whether there are any restrictions specified by the target website for scraping activities. Most websites provide a Robots Exclusion Protocol file (also known as the robots.txt file), which tells web crawlers the type/name of pages or files they can or cannot request from the website. This file can usually be found at the top-level directory of a website. For example, the robots.txt file of the Wordery website, which is available at https://wordery.com/robots.txt, states that:

The User-agent line specifies the name of the bot, and the accompanying rules are what the bot should adhere to. User-agent is a text string in the header of a request that identifies the type of device, operating system, and browser that are used to access a web page. Normally, if a User-agent is not provided when web scraping, the rules stated for all bots under the User-agent: * section should be followed. Allow lists the URLs that bots are permitted to request, and, conversely, Disallow lists the URLs that they must not request.

In the prior example, Wordery does not allow a web scraper to access and scrape pages containing keywords such as “basket,” “checkout,” “settings,” and “newsletter.” The restrictions stated in robots.txt are not legally binding; however, they are a standard that most web users adhere to. Furthermore, if access to data is conditional on accepting some terms of service of the website, it is highly recommended that all the references to web-scraping activities be checked to make sure the data are obtained legally. Moreover, it is strongly recommended that contact details are included in the User-agent part of the requests.
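A robots.txt check of this kind can be automated with Python's built-in urllib.robotparser module, as in the minimal sketch below. The rules in SAMPLE_ROBOTS_TXT are hypothetical and only loosely modelled on the keywords mentioned above; they are not the actual content of Wordery's file. The User-agent string and the helper is_allowed are likewise assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules, loosely modelled on the keywords named in the text;
# this is not the actual content of Wordery's robots.txt file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /basket
Disallow: /checkout
Disallow: /settings
Disallow: /newsletter
"""

# Assumed User-agent string; replace the contact details with your own.
USER_AGENT = "research-scraper (contact: researcher@example.org)"

rules = RobotFileParser()
rules.parse(SAMPLE_ROBOTS_TXT.splitlines())


def is_allowed(url):
    """Return True if the parsed rules permit USER_AGENT to request url."""
    return rules.can_fetch(USER_AGENT, url)
```

To check a live site instead of a local string, one would point the parser at the file with set_url() and download it with read() before calling can_fetch().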

Web crawlers process text at a substantially faster pace than humans, which, in principle, allows sending many more requests (downloading more web pages) than a human user would send. Thus, one should make sure that the web-scraping process does not affect the performance or bandwidth of the web server in any way. Most web servers will automatically block an IP address, preventing further access to their pages, if they receive too many requests from it. To limit the risk of being blocked, the program should take temporary pauses between subsequent requests. For more bandwidth-intensive crawlers, scraping should be planned for the time when the targeted websites experience the least traffic, for example, nighttime.
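The pauses between requests can be sketched as a small helper. The function name fetch_politely, the two-second default, and the injected fetch and sleep callables are assumptions for illustration; a suitable delay depends on the website and on any crawl rules it publishes.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=2.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests.

    fetch is any callable that downloads one page; it is passed in so that
    the pacing logic can be tried out without touching a real server.
    """
    pages = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # pause before every request except the first
        pages.append(fetch(url))
    return pages
```

Passing the download function as an argument keeps the pacing separate from the choice of HTTP library, so the same loop works with any of the tools discussed in this article.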

Furthermore, depending on the scale of each project, it might be worthwhile informing the website’s owners of the project or asking whether they have the data available in a structured format. Contacting data providers directly may save on coding efforts, though the data owner’s consent is far from guaranteed.

Typically, the researchers’ data needs are not very disruptive to the websites being scraped and the data are not being used for commercial purposes or re-sold, thus the harm made to the data owners is negligible. Nonetheless, the authors are aware of legal cases filed against researchers using web-scraped data by companies that owned the data and whose interests were affected by publication of research results based on data scraped from those sources. Retaining anonymity of the data sources and the identities of the firms involved in most cases protects the researchers against such risks.

Web-Scraping Tools

There is a wide variety of tools and techniques that can be used for web scraping and data retrieval. Some web-scraping tools require programming knowledge, such as the Requests and BeautifulSoup libraries in Python, the rvest package in R, or Scrapy, while others are more ready to use and require little to no technical expertise, such as iMacros or Visual Web Ripper. Many of the tools have been around for quite a while and have a large community of users (e.g., on stackoverflow.com).

Python Requests and BeautifulSoup

One of the most popular web-scraping techniques is using the Requests and BeautifulSoup libraries in Python, which are available for both Python 2.x and 3.x. 6 In addition to having Python installed, one needs to install the necessary libraries, such as bs4 and requests. The next step is to build a web scraper that sends a request to the website’s server asking the server to return the content of a specific page as an HTML file. The requests module in Python performs this task. For example, one can retrieve the HTML file from the Wordery online bookshop with the following code:

Using the BeautifulSoup library could help to transform the complex HTML file into an object with nested data structure—the parse tree—which is easier to navigate and search. To do so, the HTML file has to be passed to the BeautifulSoup constructor to get the object soup as follows:

This example uses the HTML parser html.parser—a built-in parser in Python 3. From the object soup, one can parse all books’ name, author, price, and description from the web page as follows:
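The three steps described above (requesting the page, building the parse tree, and extracting the fields) can be sketched as follows. This is a minimal illustration rather than the original listing: the function names, the User-agent string, and the 30-second timeout are assumptions, and the class names are those described in the HTML section, which the live website may have changed since.

```python
import requests
from bs4 import BeautifulSoup

URL = "https://wordery.com/educational-art-design-YQA"
# Assumed User-agent string with contact details; replace with your own.
HEADERS = {"User-Agent": "research-scraper (contact: researcher@example.org)"}


def fetch_html(url):
    """Request a page and return its HTML source as text."""
    response = requests.get(url, headers=HEADERS, timeout=30)
    response.raise_for_status()  # raise an exception on HTTP error codes
    return response.text


def text_of(parent, tag, class_name):
    """Return the stripped text of the first matching descendant, or None."""
    node = parent.find(tag, class_=class_name)
    return node.get_text(strip=True) if node else None


def parse_books(html):
    """Build the parse tree and extract one record per book."""
    soup = BeautifulSoup(html, "html.parser")
    return [
        {
            "title": text_of(book, "a", "c-book__title"),
            "author": text_of(book, "span", "c-book__by"),
            "description": text_of(book, "p", "c-book__description"),
            "price": text_of(book, "span", "c-book__price"),
        }
        for book in soup.find_all("div", class_="c-book__body")
    ]
```

Calling parse_books(fetch_html(URL)) would then return a list of dictionaries, one per book, which can be written to a CSV file or loaded into a data frame. Keeping the download and the parsing in separate functions allows the parsing step to be tested on a saved copy of the page without sending new requests.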

Scrapy is a popular open-source application framework written in Python for scraping websites and extracting structured data. 7 This is a programming-oriented method and requires coding skills. One of the main benefits of Scrapy is that it schedules and processes requests asynchronously, hence it can perform very quickly. This means that Scrapy does not need to wait for a request to be finished and processed; it can send another request or do other things in the meantime. Even if some request fails or an error occurs, other requests can keep going. Scrapy is extensible, as it allows users to plug in their own functionality, and it works on different operating systems.

Scrapy can be run in two ways: from the command line or from a Python script using the Scrapy API. Due to space limitations, this article offers only a brief introduction on how to run Scrapy from the command line. Scrapy is controlled through the Scrapy command-line tool (aka Scrapy tool) and its sub-commands (aka Scrapy commands). There are two types of Scrapy commands: global commands, which can work without a Scrapy project, and project-only commands, which work only from inside a Scrapy project. Some examples of global commands are startproject, which creates a new Scrapy project, and view, which shows the content of the given URL in the browser as Scrapy would “see” it.

To start a Scrapy project, a researcher would need to install the latest version of Python and pip, since Scrapy supports only Python 3.6+. First, one can create a new Scrapy project using the global command startproject as follows:

This command will create a Scrapy project named wordery under the your_project_dir directory. Specifying your_project_dir is optional; if it is omitted, the project directory takes the same name as the project (here, wordery).

After creating the project, one needs to go inside the project’s directory by running the following command:

The next task is to write a Python script to create the spider and save it in the file named wordery_spider.py under the wordery/spiders directory. Although adjustable, all Scrapy projects have the same directory structure by default. A Scrapy spider is a class that defines how a certain web page (or a group of pages) will be scraped, including how to perform the crawl (i.e., follow links) and how to extract structured data from the pages (i.e., scraping items). An example of a spider named WorderySpider that scrapes and parses all books’ name, price, and description from the page https://wordery.com/educational-art-design-YQA is:

After creating the spider, the next step involves changing the directory to the top-level directory of the project and using the command crawl to run such a spider:

Finally, the output that looks like the following example can be easily converted into a tabular form:

Another useful web-scraping tool is iMacros, a web browser–based application for web automation that has been popular since 2001. 8 It is provided as a web browser extension (available for Mozilla Firefox, Google Chrome, and Internet Explorer) or a stand-alone application. iMacros is easy to start with and requires little to no programming skills. It allows users to record repetitious tasks once and to automatically replay such tasks when needed. Furthermore, there is also an iMacros API that enables users to write scripts in various Windows programming languages.

After installation, the iMacros add-on can be found on the top bar of the browser. An iMacros web-scraping project normally starts by recording a macro—a set of commands for the browser to perform—in the iMacros panel. iMacros can record mouse clicks on various elements of a web page and translate them into TAG commands. Although simple tasks (e.g., navigating to a URL) can be easily recorded, more complicated tasks (e.g., looping through all items) might require some modifications of the recorded code.

As an illustration, the macro shown here asks the browser to access and extract information from the page https://wordery.com/educational-art-design-YQA :

Stata is a popular statistical software package that enables researchers to carry out simple web-scraping tasks. First, one can use the readfile command to load the HTML file content from the website into a string. The next step is to extract product information, which requires intensive work with string variables. A wide range of Stata string functions can be applied, such as splitting strings, extracting substrings, and searching strings (e.g., with regular expressions). Alternatively, one might rely on user-written packages such as readhtml, although its functions are limited to reading tables and lists from web pages. However, text manipulation operations are still limited in Stata, and it is recommended to combine Stata with Python.

The following Stata code can be used to parse books’ information from the Wordery website:

Other Tools

Visual scraper.

Besides browser extensions, there are several ready-to-use data extraction desktop applications. For instance, iMacros has a Professional Edition, which includes a stand-alone browser. This application is very easy to use because it allows scripting, recording, and data extraction. Similar applications are Visual Web Ripper, Helium Scraper, ScrapeStorm, and WebHarvy.

Cloud-Based Solutions

Cloud-based services are among the most flexible web-scraping tools since they are not operating system–dependent (they are accessible from the web browser and hence do not require installation), the extracted data is stored in the cloud, and their processing power is unrivaled by most systems. Most cloud-based services provide IP rotation to avoid getting blocked by the websites. Some cloud-based web-scraping providers are Octoparse, ParseHub, Diffbot, and Mozenda. These solutions have numerous advantages but might come with a higher cost than other web-scraping methods.

Recognizing the need for users to collect information, many websites also make their data available and directly retrievable through an open Application Programming Interface (API). While a user interface is designed for use by humans, APIs are designed for use by a computer or application. Web APIs act as intermediaries or means of communication between websites and users. Specifically, they determine the types of requests that can be made, how to make them, the data formats that should be used, and the rules to adhere to. APIs enable users to obtain data quickly and flexibly by requesting it directly from the database of the website, using their own programming language of choice.

A large number of companies and organizations offer free public APIs. Examples of open APIs are those of social media networks such as Facebook and Twitter, governments/international organizations such as the United States, France, Canada, and the World Bank, as well as companies such as Google and Skyscanner. For example, Reed.co.uk, a leading U.K. job portal, offers an open job seeker API, which allows job seekers to search all the jobs posted on this site. To get the API key, one can access the web page and sign up with an email address; then, an API key will be sent to the registered mailbox. This jobseeker API provides detailed job information, such as the ID of the employer, profile ID of the employer, location of the job, type of job, posted wage (if available), and so forth.

If APIs are available, it is usually much easier to collect data through an API than through web scraping. Furthermore, collecting data through an API is completely legal. The data copyrights remain with the data provider, but this is not a limitation for use of data in research.

However, there are several challenges in collecting data using an API. First of all, the types and amount of data that are freely available through an API might be very limited. Data owners often set rate limits, based on the number of queries per time period, the time between two consecutive queries, the number of concurrent queries, or the number of records returned per query, which can significantly slow down collection of large data sets. Also, the scope of data available through free APIs may be limited. Those restrictions sometimes are lifted in return for a fee. An example is Google’s Cloud Translation API for language detection and text translation. The first 500,000 characters of text per month will be free of charge, but then fees will be charged for any over-the-limit characters. Finally, some programming skills are required to use an API, though for major data providers one can easily find API wrappers written by users of major statistical software.
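The combined effect of a cap on records per query and a minimum time between queries can be sketched as a paging loop. Everything specific here is an assumption for illustration: the fetch_page callable stands in for whatever request a given API requires, the response is assumed to be a JSON array of records, and the page size and pause are placeholders for the limits published by the data provider.

```python
import json
import time


def collect_records(fetch_page, page_size=100, pause_seconds=1.0, sleep=time.sleep):
    """Page through an API until a short (or empty) page signals the end.

    fetch_page(offset, limit) must return the JSON text of one response,
    assumed here to be a JSON array of records.
    """
    records = []
    offset = 0
    while True:
        batch = json.loads(fetch_page(offset, page_size))
        records.extend(batch)
        if len(batch) < page_size:
            return records  # fewer records than requested: last page reached
        offset += page_size
        sleep(pause_seconds)  # wait before the next query to respect rate limits
```

With a limit of 100 records per query and a one-second pause, collecting one million records would take about 10,000 queries and therefore close to three hours, which illustrates how rate limits slow down the collection of large data sets.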

Applications in Economics and Finance Research

The use of web scraping in economic research started nearly as soon as the first data were published on the Web (see Edelman, 2012 for a review of early web-scraping research in economics). In the early days of the Internet (and web scraping), data sets of a few hundred observations were considered rich enough to give insights sufficient for publication in a top journal (e.g., Roth & Ockenfels, 2002). As the technology matures and the amount of information available online increases, expectations on the size and quality of the scraped data are also getting higher. Low computation and storage costs allow the processing of data sets with billions of scraped observations (e.g., the Billion Prices Project, Cavallo & Rigobon, 2016). Web-scraping practice is present in a wide range of economic and finance research areas, including online prices, job vacancies, peer-to-peer lending, and house-sharing markets.

Online Prices

Together with the tremendous rise of e-commerce, online price data have received growing interest from economic researchers as an alternative to traditional data sources. The Billion Prices Project (BPP), created at MIT by Cavallo and Rigobon in 2008, seeks to gather a massive amount of prices every day from hundreds of online retailers in the world. While the U.S. Bureau of Labor Statistics can gather only 80,000 prices on a monthly or bimonthly basis, the BPP can reach half a million price quotes in the United States each day. Collecting conventional-store prices is usually expensive and complicated, whereas retrieving online prices can come at a much lower cost (Cavallo & Rigobon, 2016). Moreover, detailed information for each product can be collected at a higher frequency (e.g., hourly, daily). Web scraping also allows researchers to quickly track the exit of products and the introduction of new products. Furthermore, Cavallo (2018) points out that the use of Internet price data can help mitigate measurement biases (i.e., time averaging and imputation of missing prices) in traditionally collected data.

Several works in the online price literature focus on very narrow market segments. For example, in the context of online book retailing, Boivin et al. (2012) collected more than 141,000 price quotes of 213 books sold on major online book websites in the United States (Amazon.com and BN.com) and Canada (Amazon.ca and Chapters.ca) every Monday, Wednesday, and Friday from June 2008 to June 2009. They documented extensive international market segmentation and pervasive deviations from the law of one price. In a study on air ticket prices, Dudás et al. (2017) gathered more than 31,000 flight fares over a period of 182 days from three online travel agencies (Expedia, Orbitz, Cheaptickets) and two metasearch sites (Kayak, Skyscanner) for flights from Budapest to three popular European travel destinations (i.e., London, Paris, and Barcelona) using iMacros. They found that metasearch engines outperform online travel agencies in offering lower ticket prices and that no website consistently offers the lowest airfares.

As price-comparison websites (PCWs) have gained popularity among consumers looking for the cheapest prices, these shopping platforms have become a promising data source for various studies. For instance, Baye and Morgan (2009) used a program written in Perl to download almost 300,000 price quotes for 90 best-selling products sold at Shopper.com, the top PCW for consumer electronics goods, during 7 months between 2000 and 2001. Their study aimed to document the effect of brand advertising activities on price dispersion, which refers to the case when identical products are priced differently across sellers. In the same vein, employing nearly 16,000 prices of six product categories (i.e., Hard Drives, Software, GPS, TV, Projector Accessories, and DVDs) scraped from the leading PCW BizRate, Liu et al. (2012) examined the pricing strategies of sellers with different reputation levels. They showed that, on average, low-rated sellers charge considerably higher prices than high-rated sellers and that this negative price premium effect is even larger when market competition increases.

Lünnemann and Wintr (2011) conducted a comparative analysis with a much broader product category coverage on online price stickiness in the United States and four large European countries (France, Germany, Italy, and the United Kingdom). They collected more than 5 million price quotes from leading PCWs at daily frequencies during a 1-year period between December 2004 and December 2005. Their data set contains common product categories, including consumer electronics, entertainment, information technology, small household appliances, consumer durables, and services from more than 1,000 sellers. Their finding is that prices adjust more often in European online markets than in the U.S. online markets. However, data sets used in these studies cover only a short duration of time (i.e., not exceeding a year). In a more recent study, Gorodnichenko and Talavera (2017) collected 20 million price quotes for more than 115,000 goods covering 55 good types in four main categories (i.e., computers, electronics, software, and cameras) from a PCW for 5 years on U.S. and Canadian online markets. They showed that online prices tend to be more flexible than conventional retail prices: price adjustments occur more often in online stores than in offline stores, but the size of price changes in online stores is less than one-half of that in brick-and-mortar stores.

Due to different competitive environments, prices collected from PCWs might not be representative, as sellers who participate in these platforms tend to raise the frequency and lower the size of price changes (Ellison & Ellison, 2009). Alternatively, researchers seek to expand data coverage by scraping a larger number of websites at a high frequency or to focus on specific types of websites, especially in studies on the consumer price index (CPI). The construction of the CPI requires price data that are representative of retail transactions. Hence, instead of gathering data from online-only retailers that might sell many products, which, however, account for only a small proportion of retail transactions, Cavallo (2018) collected daily prices from large multichannel retailers (e.g., Walmart) that sell goods both online and offline. Moreover, researchers may focus their data collection on product categories that are present in the official statistics, for which consumer expenditure weights are available. An example is the study of Faryna et al. (2018), who scraped online prices to compare online and official price statistics. Their data can cover up to 46% of the Consumer Price Inflation basket with more than 328 CPI sub-components. The data set includes 3 million price observations of more than 75,000 product categories of food, beverages, alcohol, and tobacco.

Despite the increasing efforts to scrape at a larger scale and for a longer duration to widen data coverage, there is only a limited number of studies featuring data on the quantity of goods sold (Gorodnichenko & Talavera, 2017). In order to derive the quantity proxy, Chevalier and Goolsbee (2003) employed the observed sales ranking of approximately 20,000 books listed on Amazon.com and BarnesandNoble.com to estimate elasticities of demand and compute a price index for online books. Using a similar approach, a project of the U.K. Office for National Statistics estimated sales quantities using products’ popularity rankings (i.e., the order that products are displayed on a web page when sorted by popularity) to approximate expenditure weights of goods in consumer price statistics. 9

Online Job Vacancies

Since about 2010, with the growing number of employers and job seekers relying on online job portals to advertise and find jobs, researchers have increasingly identified the online job market as a new data source for analyzing labor market dynamics and trends. In comparison with more traditional approaches, scraping job vacancies has the advantage of time and cost effectiveness (Kureková et al., 2015). Specifically, while results of official labor market surveys might take up to a year to become available, online vacancies are real-time data that can be collected in a much shorter time at a low cost. Another key advantage is that the content of online job ads usually provides more detailed information than that provided by traditional newspaper sources.

Various research papers have focused their data collection on a single online job board. For instance, to examine gender discrimination, Kuhn and Shen (2013) collected more than a million vacancies posted on Zhaopin.com, the third-largest Chinese online job portal. Over one tenth of the job ads express a gender preference (male or female), which is more common in jobs requiring lower levels of skill. Some studies have employed APIs provided by online job portals to collect vacancies data. An example is the work of Capiluppi and Baravalle (2010), who developed a web spider to download job postings from monster.co.uk, a leading online job board, via its API to investigate demand for IT skills in the United Kingdom. Their data set covers more than 48,000 vacancies in the IT category during the 9-month period from September 2009 to May 2010.

However, data collected from a single website might not be representative of the overall job market. As an alternative, a large number of research papers rely on vacancy data scraped by a third party. The most well-known provider is Burning Glass Technologies (BGT), an analytics software company that scrapes, parses, and cleans vacancies from over 40,000 online job boards and company websites to create a near-universe of U.S. online job ads. Using a data set of almost 45 million online job postings from 2010 to 2015 collected by BGT, Deming and Kahn (2018) identified 10 commonly observed and recognizable skill groups to document the wage return of cognitive and social skills. Other studies used BGT data to address various labor market research issues, such as changes in skills demand and the nature of work (see, e.g., Adams et al., 2020; Djumalieva & Sleeman, 2018; Hershbein & Kahn, 2018); responses of the labor market to exogenous shocks (see, e.g., Forsythe et al., 2020; Javorcik et al., 2019); and responses of the labor market to technological developments (see, e.g., Acemoglu et al., 2020; Alekseeva et al., 2020; Deming & Noray, 2018).

Other Applications

Internet data give scholars more insight into peer-to-peer (P2P) economies, including online marketplaces (e.g., eBay, Amazon), housing and rental markets (e.g., Airbnb, Zoopla), and P2P lending platforms (e.g., Prosper, Renrendai). For the latter, thanks to the availability of an API, numerous studies employ data provided by Prosper.com, a U.S.-based P2P lending website (see, e.g., Bertsch et al., 2017; Krumme & Herrero, 2009; Lin et al., 2013). For instance, using the Prosper API, Lin et al. (2013) obtained information on borrowers’ credit history, friendships, and the outcome of loan requests for more than 56,000 loan listings between January 2007 and May 2008 to study information asymmetry in the P2P lending market. Specifically, they focused their analysis on borrowers’ friendship networks and credit quality and showed that friendships increase the likelihood of successful funding, lower interest rates on funded loans, and lower default rates. Later, Bertsch et al. (2017) scraped more than 326,000 loan-hour observations of loan funding progress, together with borrower and loan listing characteristics, from Prosper between November 2015 and January 2016 to examine the impact of the monetary normalization process on online lending markets.

In the context of online marketplaces, using a data set of 20,000 coin auctions scraped directly from eBay, Lucking-Reiley et al. (2007) documented the effect of sellers’ feedback ratings on auction prices. They found that negative feedback ratings have a much larger effect on auction prices than positive feedback ratings. With regard to housing markets, Yilmaz et al. (2020) scraped more than 300,000 rental listings in 13 U.K. cities between 2015 and 2017 through the Zoopla API to explore seasonality in the rental market. Such seasonal patterns can be explained by students’ higher renting demand around the start of an academic year; this effect becomes stronger when the distance to the university campus is controlled for. Wang and Nicolau (2017) investigated accommodation prices with Airbnb listings data from a third-party website, Insideairbnb.com, which provides data sourced from publicly available information on Airbnb.com. Similarly, Horn and Merante (2017) obtained data on Airbnb listings in Boston from a third-party provider, Rainmaker Insights, Inc., which collects listings of property for rent, and examined the impact of home sharing on the housing market. Their findings suggest that the increasing presence of Airbnb leads to a decrease in the supply of housing offered for rent, thus increasing asking rents.

Concluding Remarks

The enormous amount of available data on almost every topic online should attract the interest of all empirical researchers. As an increasingly large part of our everyday activities moves online—the process speeding up due to the COVID-19 pandemic—scraping the Internet will become the only way to find information about a large part of human activities.

Data collected through web scraping have been used in thousands of projects and have led to a better understanding of price formation, auction mechanisms, labor markets, social interactions, and many other important topics. With new data regularly uploaded to various websites, old answers can be verified in different settings and new research questions can be posed.

However, the exclusivity of the data retrieved with web scraping often means that researchers are left alone to identify the potential pitfalls of the data. For large public databases, there is broad knowledge in the public domain about database-specific issues typical of empirical research, such as sample selection, endogeneity, omitted variables, and errors-in-variables. In contrast, with novel data sets, the entire burden rests on the web-scraping researchers. The growing strand of methodological research highlighting those pitfalls and suggesting steps to adjust the collected sample for representativeness helps to overcome those difficulties (e.g., Konny et al., 2022; Kureková et al., 2015).
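
One simple way of adjusting a scraped sample for representativeness is post-stratification: each observation is reweighted so that the weighted composition of the sample matches known population shares, for example from an official survey. The Python sketch below illustrates the calculation. It is a generic textbook adjustment rather than the specific procedure proposed in the studies cited above, and the sector labels and population shares are invented for illustration.

```python
from collections import Counter
from typing import Dict, List, Sequence


def poststratification_weights(
    sample_strata: Sequence[str],
    population_shares: Dict[str, float],
) -> List[float]:
    """Return one weight per observation: population share / sample share.

    sample_strata holds the stratum (e.g., sector) of each scraped record;
    population_shares holds the benchmark share of each stratum, assumed
    to be known from an external source.
    """
    if not sample_strata:
        raise ValueError("sample_strata must not be empty")
    counts = Counter(sample_strata)
    missing = set(counts) - set(population_shares)
    if missing:
        raise ValueError(f"no population share for strata: {sorted(missing)}")

    n = len(sample_strata)
    # Over-represented strata get weights below 1, under-represented above 1.
    return [population_shares[s] / (counts[s] / n) for s in sample_strata]


if __name__ == "__main__":
    # Hypothetical scraped vacancies: IT is heavily over-represented online.
    scraped = ["IT"] * 6 + ["Health"] * 3 + ["Retail"] * 1
    # Hypothetical benchmark shares, e.g., from an official vacancy survey.
    benchmark = {"IT": 0.2, "Health": 0.3, "Retail": 0.5}

    weights = poststratification_weights(scraped, benchmark)
    total = sum(weights)
    for sector in benchmark:
        share = sum(w for s, w in zip(scraped, weights) if s == sector) / total
        print(f"{sector}: weighted share {share:.2f}")
```

Strata that appear in the population but not in the scraped sample cannot be recovered by reweighting, which is one reason why coverage checks remain necessary alongside such adjustments.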

In contrast to proprietary data or sensitive registry data, which require written agreements and substantial funds and are thus off-limits to most researchers, especially in their early career stages, web scraping is available to everyone, and online data can be tapped with moderate ease. Abundant online resources and an active community willing to answer even the most complex technical questions make the learning curve considerably less steep. Thus, the return on investment in web-scraping skills is remarkably high, and not only for empirical researchers.

Further Reading

- Bolton, P., Holmström, B., Maskin, E., Pissarides, C., Spence, M., Sun, T., Sun, T., Xiong, W., Yang, L., Chen, L., & Huang, Y. (2021). Understanding big data: Data calculus in the digital era. Luohan Academy Report.

- Jarmin, R. S. (2019). Evolving measurement for an evolving economy: Thoughts on 21st century US economic statistics. Journal of Economic Perspectives, 33(1), 165–184.

References

- Acemoglu, D., Autor, D., Hazell, J., & Restrepo, P. (2020). AI and jobs: Evidence from online vacancies (National Bureau of Economic Research No. w28257).

- Adams, A., Balgova, M., & Qian, M. (2020). Flexible work arrangements in low wage jobs: Evidence from job vacancy data (IZA Discussion Paper No. 13691).

- Alekseeva, L., Azar, J., Gine, M., Samila, S., & Taska, B. (2020). The demand for AI skills in the labor market (CEPR Discussion Paper No. DP14320).

- Baye, M. R., & Morgan, J. (2009). Brand and price advertising in online markets. Management Science, 55(7), 1139–1151.

- Bertsch, C., Hull, I., & Zhang, X. (2017). Monetary normalizations and consumer credit: Evidence from Fed liftoff and online lending (Sveriges Riksbank Working Paper No. 319).

- Boivin, J., Clark, R., & Vincent, N. (2012). Virtual borders. Journal of International Economics, 86(2), 327–335.

- Capiluppi, A., & Baravalle, A. (2010). Matching demand and offer in on-line provision: A longitudinal study of monster.com. In 12th IEEE International Symposium on Web Systems Evolution (WSE) (pp. 13–21). IEEE.

- Cavallo, A. (2017). Are online and offline prices similar? Evidence from large multi-channel retailers. American Economic Review, 107(1), 283–303.

- Cavallo, A. (2018). Scraped data and sticky prices. Review of Economics and Statistics, 100(1), 105–119.

- Cavallo, A., & Rigobon, R. (2016). The billion prices project: Using online prices for measurement and research. Journal of Economic Perspectives, 30(2), 151–178.

- Chevalier, J., & Goolsbee, A. (2003). Measuring prices and price competition online: Amazon.com and BarnesandNoble.com. Quantitative Marketing and Economics, 1(2), 203–222.

- Deming, D., & Kahn, L. B. (2018). Skill requirements across firms and labor markets: Evidence from job postings for professionals. Journal of Labor Economics, 36(S1), S337–S369.

- Deming, D. J., & Noray, K. L. (2018). STEM careers and technological change (National Bureau of Economic Research No. w25065).

- Djumalieva, J., & Sleeman, C. (2018). An open and data-driven taxonomy of skills extracted from online job adverts (ESCoE Discussion Paper No. 2018–13).

- Doerr, S., Gambacorta, L., & Garralda, J. M. S. (2021). Big data and machine learning in central banking (BIS Working Papers No. 930).

- Dudás, G., Boros, L., & Vida, G. (2017). Comparing the temporal changes of airfares on online travel agency websites and metasearch engines. Tourism: An International Interdisciplinary Journal, 65(2), 187–203.

- Edelman, B. (2012). Using Internet data for economic research. Journal of Economic Perspectives, 26(2), 189–206.

- Ellison, G., & Ellison, S. F. (2009). Search, obfuscation, and price elasticities on the internet. Econometrica, 77(2), 427–452.

- Faryna, O., Talavera, O., & Yukhymenko, T. (2018). What drives the difference between online and official price indexes? Visnyk of the National Bank of Ukraine, 243, 21–32.

- Forsythe, E., Kahn, L. B., Lange, F., & Wiczer, D. (2020). Labor demand in the time of COVID-19: Evidence from vacancy postings and UI claims. Journal of Public Economics, 189, 104238.

- Gnutzmann, H. (2014). Of pennies and prospects: Understanding behaviour in penny auctions. SSRN Electronic Journal, 2492108.

- Gorodnichenko, Y., Pham, T., & Talavera, O. (2021). The voice of monetary policy (National Bureau of Economic Research No. w28592).

- Gorodnichenko, Y., & Talavera, O. (2017). Price setting in online markets: Basic facts, international comparisons, and cross-border integration. American Economic Review, 107(1), 249–282.

- Halket, J., & di Custoza, M. P. M. (2015). Homeownership and the scarcity of rentals. Journal of Monetary Economics, 76, 107–123.

- Hershbein, B., & Kahn, L. B. (2018). Do recessions accelerate routine-biased technological change? Evidence from vacancy postings. American Economic Review, 108(7), 1737–1772.

- Horn, K., & Merante, M. (2017). Is home sharing driving up rents? Evidence from Airbnb in Boston. Journal of Housing Economics, 38, 14–24.

- Javorcik, B., Kett, B., Stapleton, K., & O’Kane, L. (2019). The Brexit vote and labour demand: Evidence from online job postings (Economics Series Working Papers No. 878). Department of Economics, University of Oxford.

- Konny, C. G., Williams, B. K., & Friedman, D. M. (2022). Big data in the US consumer price index: Experiences and plans. In K. Abraham, R. Jarmin, B. Moyer, & M. Shapiro (Eds.), Big data for 21st century economic statistics. University of Chicago Press.

- Kroft, K., & Pope, D. G. (2014). Does online search crowd out traditional search and improve matching efficiency? Evidence from Craigslist. Journal of Labor Economics, 32(2), 259–303.

- Krumme, K. A., & Herrero, S. (2009). Lending behavior and community structure in an online peer-to-peer economic network. In 2009 International Conference on Computational Science and Engineering (Vol. 4, pp. 613–618). IEEE.

- Kuhn, P., & Shen, K. (2013). Gender discrimination in job ads: Evidence from China. The Quarterly Journal of Economics, 128(1), 287–336.

- Kureková, L. M., Beblavý, M., & Thum-Thysen, A. (2015). Using online vacancies and web surveys to analyse the labour market: A methodological inquiry. IZA Journal of Labor Economics, 4(1), 1–20.

- Lin, M., Prabhala, N. R., & Viswanathan, S. (2013). Judging borrowers by the company they keep: Friendship networks and information asymmetry in online peer-to-peer lending. Management Science, 59(1), 17–35.

- Liu, Y., Feng, J., & Wei, K. K. (2012). Negative price premium effect in online market: The impact of competition and buyer informativeness on the pricing strategies of sellers with different reputation levels. Decision Support Systems, 54(1), 681–690.

- Lucking-Reiley, D., Bryan, D., Prasad, N., & Reeves, D. (2007). Pennies from eBay: The determinants of price in online auctions. The Journal of Industrial Economics, 55(2), 223–233.

- Lünnemann, P., & Wintr, L. (2011). Price stickiness in the US and Europe revisited: Evidence from Internet prices. Oxford Bulletin of Economics and Statistics, 73(5), 593–621.

- Piazzesi, M., Schneider, M., & Stroebel, J. (2020). Segmented housing search. American Economic Review, 110(3), 720–759.

- Reinsel, D., Gantz, J., & Rydning, J. (2017). Data age 2025: The evolution of data to life-critical: Don’t focus on big data; focus on the data that’s big (IDC Whitepaper).

- Roth, A. E., & Ockenfels, A. (2002). Last-minute bidding and the rules for ending second-price auctions: Evidence from eBay and Amazon auctions on the Internet. American Economic Review, 92(4), 1093–1103.