The legal profession in 2024: AI

Harvard Law School’s David Wilkins says that generative artificial intelligence has the potential to transform the practice of law

Last June, an attorney filed a legal brief he had written with the help of the generative AI platform, ChatGPT. The document included citations to a series of legal cases that seemingly offered precedents that supported his client’s position. There was only one problem. As the judge in the case discovered, six of those cases didn’t exist. Instead, they’d been dreamed up by the online tool. This was only one of several high-profile incidents in which new technology has stymied — and sometimes embarrassed — the lawyers using it (just Google “I am not a cat.”). Yet, many legal experts believe generative AI will also change the legal profession in ways that will aid lawyers and their clients.

To learn more about how AI is expected to transform the legal profession, as well as other important industry trends in the year ahead, Harvard Law Today recently spoke with Professor David Wilkins ’80, director of the Center on the Legal Profession at Harvard Law School. In the first installment of a two-part interview, Wilkins briefly summarizes legal industry trends he will be following this year and outlines both the opportunities and challenges AI will likely present. In part two, Wilkins will discuss a range of other questions, from lawyering in an election year, to salary trends, to the changing work and priorities of general counsels, and what they mean for the future of legal education.

Harvard Law Today: What do you see as some of the biggest trends in the legal profession this year?

David Wilkins: This has really been a very eventful year for the legal profession, with many important changes and potential disruptions, all of which are interrelated. Let me touch briefly on a few. Leading the list, of course, is artificial intelligence. Every other conversation I have is about ChatGPT and how it will impact the practice of law. Those conversations often lead quickly to talking about legal careers and AI’s potential employment effects. The IMF has said that 40% of all jobs in the world could be affected by AI and that it’s mostly going to be felt in the white collar and professional ranks. That clearly has implications for lawyers.

Another important trend is that, according to 2024 NALP data, the majority of associates at law firms are now women, which has led to a whole series of questions about how diversity will be treated in the legal profession moving forward, particularly in light of the fact that a majority of minority law students are female.

There are also discussions around the role of the legal profession in a time of global unrest. For example, what will be the effects of rising geopolitical tensions — the war in Ukraine, the conflict in the Middle East, competition with China — on markets? Two years ago, ESG [environmental, social, and corporate governance] was the global zeitgeist. And while it is still a powerful organizing force in Europe, it has become a highly contentious issue here in the United States. And, of course, we are entering an election year here in the United States. Lawyers are going to have critical roles to play in helping America and the world protect democracy in a time of increasing polarization and conflict.

Last — but here at HLS, certainly not least — we need to think about what all this means for legal education. How can we best prepare students for the new realities of legal careers in the middle decades of the 21st century? And just as important, how will legal education itself be disrupted by technology and other emerging trends?

HLT: Let’s start with AI. We’ve seen some high-profile instances of AI gone wrong, when lawyers have used it to write legal briefs, only for the court to discover that the AI made up cases that didn’t exist. What role is AI playing in the legal profession today, where do you see it going in the next year or so, and what checks should we be thinking about putting on its use?

Wilkins: We are just at the very tip of the iceberg in thinking about the implications of AI. When we spoke at this time last year, nobody but perhaps our friends at the Berkman Klein Center had ever heard of generative AI, let alone used ChatGPT. Now, it’s everywhere. In my conversations with lawyers, people started out very skeptical that you could use it for anything useful in the day-to-day work of a practicing lawyer. And now, increasingly, people are more comfortable using it in a wide range of settings.

AI is also getting much better and hallucinating less [a term experts use to refer to inaccurate information generated by AI]. The industry is moving from non-specialized AI to AI trained on legal materials, designed to tackle specific, complex legal problems. Most lawyers I’ve talked to say that if you ask ChatGPT, let alone a more sophisticated version, to write a memo about a legal question, you will get something approximately as good as what a first-year law firm associate would produce. Of course, a lawyer will still have to review it — just as any good senior lawyer will review the work of their juniors before sending it out into the world — but when you think about the relative cost of AI versus a first-year associate, you can begin to see the transformative potential.

HLT: Can you envision a time when clients in need of legal assistance can turn to AI, instead of lawyers, for help, at least in more routine private law matters like divorces or smaller lawsuits? Maybe the plaintiff’s AI talks to the respondent’s AI and to the judge’s AI and the various AIs sort everything out without the humans needing to get too deeply involved. I’m half joking, but then again, did the horse and buggy manufacturers immediately understand the implications of the automobile?

Wilkins: It sounds very futuristic, except we know that things like this are already happening in all sorts of fields. For example, when I get sick, I go to WebMD to see what I might have and what I might be able to do about it, including questions that I should ask my doctor. There are so many sites now where you could get access to information and generative AI is allowing that information to become more interactive. To answer your question, if the issue is access to basic legal information, that is going to be more and more accessible through technology to more and more people. The problem is that access to basic legal information is just one step in the process of legal services. It is already possible to have an AI that fills out basic legal forms; think LegalZoom. It’s clear that the bar is not going to be able to stop this sort of access. More sophisticated, interactive analysis with an AI lawyer? That may now be on the horizon, and it is more controversial. One of the key challenges in all of this is going to be that access to the kinds of legal tools that AI can provide is not going to be equally available. When I talk to lawyers in the legal services community, they see the potential for AI to help their clients, but they worry that the most sophisticated tools will be in the hands of the most sophisticated, already well-resourced parties who will be able to leverage that technology to gain even further advantage.

HLT: Doesn’t that asymmetry in access to justice exist today? Some people can hire top law firms to help with their legal problems, but many others can barely get access to any lawyer at all.

Wilkins: Of course, you’re absolutely right that some people and organizations have always had more access to legal services than others. As Marc Galanter wrote in his pathbreaking article “Why the ‘haves’ come out ahead,” published half a century ago this year, “repeat players,” including big corporations, who engage in a lot of litigation are always able to invest in a way that gives them an advantage over smaller “one shot” litigants, by, for example, hiring experienced lawyers and playing for the rules by deciding which cases to appeal or investing in lobbying. In one sense, therefore, technology is just another advantage that the privileged will be able to pay for.

But we also know that technology often produces exponential change in ways that create the potential for magnifying existing inequalities exponentially. Thus, it’s not just that some litigants can hire a better lawyer to draft or analyze a contract than others. In the world of AI, these better resourced parties can deploy a tool that can read every relevant contract in ways that no human could ever do. And yet, it is not a simple one-way street, since we have seen how technology also disrupts the powerful. Take the music business. It used to be that the music business was completely controlled by the big record companies, which decided who got to make music and how you bought it — an album, or later CDs (remember those?!) complete with 16 songs and beautiful cover art. And then technology allowed people to order any one song and put them together in any way they wanted. And the record business has been decimated. Now, there are still big players in the record business who figured out how to make money on the top artists. But it’s a whole different business than it was 25 or 30 years ago because of technology. It’s hard to predict exactly how this is going to play out in the legal business, but you are likely to see both an accentuation of the inequality as people at the top are able to access the best technology and therefore get the best results, and the disruption of existing hierarchies as new players enter the legal marketplace offering new kinds of services, leveraging technology in a way that was not possible before.

HLT: I went to school before the age of personal computing, but I’m sure I must have wondered why I needed to learn math when calculators existed to do that work for me. Do we lose something if we become too highly reliant on technology?

Wilkins: Yes, there’s no question. But here’s the challenge. There are two reductive schools of thought, neither of which can be true. One is that every advanced technology is unequivocally good, and the less repetitive labor that humans have to do, the better. The problem with that is that one day we might end up on Barcaloungers like the people in the movie WALL-E. We also know that there’s a huge amount of valuable development that goes into learning the basics, even if, once you’ve learned them, you don’t do these elemental tasks anymore. We now let kids use calculators in school once they’ve learned the basics of addition, subtraction, multiplication, and division. Law firms and lawyers need to ask themselves the same question. If we want well-trained mid-level and senior lawyers, those mid-level and senior lawyers need to do some amount of junior lawyer work so that they understand what it is to do in-the-weeds legal research or to craft a well-written brief. But the question is, how much of this type of training is needed? If the point is just memorization or information recall, machines will always do it better. Maybe you need to do at least enough to understand what the information is. But you certainly don’t need to do seven or eight years of it just to be a competent partner or a senior-level lawyer.

Artificial Intelligence and the Law

Legal scholars on the potential for innovation and upheaval.

- December 5, 2023

- Tomas Weber

- Illustrations by Joan Wong | Photography by Timothy Archibald

- Fall 2023 – Issue 109

- Cover Story

Earlier this year, in Belgium, a young father of two ended his life after a conversation with an AI-powered chatbot. He had, apparently, been talking to the large language model regularly and had become emotionally dependent on it. When the system encouraged him to commit suicide, he did. “Without these conversations with the chatbot,” his widow told a Brussels newspaper, “my husband would still be here.”

A devastating tragedy, but one that experts predict could become a lot more common.

As the use of generative AI expands, so does the capacity of large language models to cause serious harm. Mark Lemley (BA ’88), the William H. Neukom Professor of Law, worries about a future in which AI provides advice on committing acts of terrorism, recipes for poisons or explosives, or disinformation that can ruin reputations or incite violence.

The question is who, if anybody, will be held accountable for these harms?

“We don’t have case law yet,” Lemley says. “The company that runs the AI is not doing anything deliberate. They don’t necessarily know what the AI is going to say in response to any given prompt.” So, who’s liable? “The correct answer, right now, might be nobody. And that’s something we will probably want to change.”

Generative AI is developing at a stunning speed, creating new and thorny problems in well-established legal areas, disrupting long-standing regimes of civil liability—and outpacing the necessary frameworks, both legal and regulatory, that can ensure the risks are anticipated and accounted for.

To keep up with the flood of new, large language models like ChatGPT, judges and lawmakers will need to grapple, for the first time, with a host of complex questions. For starters, how should the law govern harmful speech that is not created by human beings with rights under the First Amendment? How must criminal statutes and prosecutions change to address the role of bots in the commission of crimes? As growing numbers of people seek legal advice from chatbots, what does that mean for the regulation of legal services? With large language models capable of authoring novels and AI video generators churning out movies, how can existing copyright law be made current?

Hanging over this urgent list of questions is yet another: Are politicians, administrators, judges, and lawyers ready for the upheaval AI has triggered?

ARTIFICIAL AGENTS, CRIMINAL INTENT

Did ChatGPT defame Professor Lemley?

In 2023, when Lemley asked the chatbot GPT-4 to provide information about himself, it said he had been accused of a crime: namely, the misappropriation of trade secrets. Director of the Stanford Program in Law, Science and Technology, Lemley had done no such thing. His area of research, it seems, had caused the chatbot to hallucinate criminal offenses.

More recently, while researching a paper on AI and liability, Lemley and his team asked Google for information on how to prevent seizures. The search engine responded with a link titled “Had a seizure, now what?” and Lemley clicked. Among the answers: “put something in someone’s mouth” and “hold the person down.” Something was very wrong. Google’s algorithm, it turned out, had sourced content from a webpage explaining precisely what not to do. The error could have caused serious injury. (This advice is no longer included in search results.)

Lemley says it is not clear AI companies will be held liable for errors like these. The law, he says, needs to evolve to plug the gaps. But Lemley is also concerned about an even broader problem: how to deal with AI models that cause harm but that have impenetrable technical details locked inside a black box.

Take defamation. Establishing liability, Lemley explains, requires a plaintiff to prove mens rea: an intent to deceive. When the author of an allegedly defamatory statement is a chatbot, though, the question of intent becomes murky and will likely turn on the model’s technical details: how exactly it was trained and optimized.

To guard against possible exposure, Lemley fears, developers will make their models less transparent. Turning an AI into a black box, after all, makes it harder for plaintiffs to argue that it had the requisite “intent.” At the same time, it makes models more difficult to regulate.

How, then, should we change the law? What’s needed, says Lemley, is a legal framework that gives developers an incentive to focus less on avoiding liability and more on creating systems that reflect our preferences. We’d like systems to be open and comprehensible, he says. We’d prefer AIs that do not lie and do not cause harm. But that doesn’t mean they should only say nice things about people simply to avoid liability. We expect them to be genuinely informative.

In light of these competing interests, judges and policymakers should take a fine-grained approach to AI cases, asking what, exactly, we should be seeking to incentivize. As a starting point, suggests Lemley, we should dump the mens rea requirement in AI defamation cases now that we’ve entered an era when dangerous content can so easily be generated by machines that lack intent.

Lemley’s point extends to AI speech that contributes to criminal conduct. Imagine, he says, a chatbot generating a list of instructions for becoming a hit man or making a deadly toxin. There is precedent for finding human beings liable for these things. But when it comes to AI, once again accountability is made difficult by the machine’s lack of intent.

“We want AI to avoid persuading people to hurt themselves, facilitating crimes, and telling falsehoods about people,” Lemley writes in “Where’s the Liability in Harmful AI Speech?” So instead of liability resting on intent, which AIs lack, Lemley suggests an AI company should be held liable for harms in cases where its system was designed without taking standard actions to mitigate risk.

At the same time, Lemley worries that holding AI companies liable when ordinary humans wouldn’t be may inappropriately discourage development of the technology. He and his co-authors argue that we need a set of best practices for safe AI. Companies that follow the best practices would be immune from suit for harms that result from their technology, while companies that ignore best practices would be held responsible when their AIs are found to have contributed to a resulting harm.

HELPING TO CLOSE THE ACCESS TO JUSTICE GAP

As AI threatens to disrupt criminal law, lawyers themselves are facing major disruptions. The technology has empowered individuals who cannot find or pay an attorney to turn to AI-powered legal help. In a civil justice system awash in unmet legal need, that could be a game changer.

“It’s hard to believe,” says David Freeman Engstrom, JD ’02, Stanford’s LSVF Professor in Law and co-director of the Deborah L. Rhode Center on the Legal Profession, “but the majority of civil cases in the American legal system (that’s millions of cases each year) are debt collections, evictions, or family law matters.” Most pit a represented institutional plaintiff (a bank, landlord, or government agency) against an unrepresented individual. AI-powered legal help could profoundly shift the legal services marketplace while opening courthouse doors wider for all.

“Up until now,” says Engstrom, “my view was that AI wasn’t powerful enough to move the dial on access to justice.” That view was front and center in a book Engstrom published earlier this year, Legal Tech and the Future of Civil Justice. Then ChatGPT roared onto the scene, a “lightning-bolt moment,” as he puts it. The technology has advanced so fast that Engstrom now sees rich potential for large language models to translate back and forth between plain language and legalese, parsing an individual’s description of a problem and responding with clear legal options and actions.

“We need to make more room for new tools to serve people who currently don’t have lawyers,” says Engstrom, whose Rhode Center has worked with multiple state supreme courts on how to responsibly relax their unauthorized practice of law and related rules. As part of that work, a groundbreaking Rhode Center study offered the first rigorous evidence on legal innovation in Utah and Arizona, the first two states to implement significant reforms.

But there are signs of trouble on the horizon. This summer, a New York judge sanctioned an attorney for filing a motion that cited phantom precedents. The lawyer, it turns out, relied on ChatGPT for legal research, never imagining the chatbot might hallucinate fake law.

How worried should we be about AI-powered legal tech leading lay people, or even attorneys, astray? Margaret Hagan, JD ’13, lecturer in law, is trying to walk a fine line between techno-optimism and pessimism.

“I can see the point of view of both camps,” says Hagan, who is also the executive director of the Legal Design Lab, which is researching how AI can increase access to justice, as well as designing and evaluating new tools. “The lab tries to steer between those two viewpoints and not be guided by either optimistic anecdotes or scary stories.”

To that end, Hagan is studying how individuals are using AI tools to solve legal problems. Beginning in June, she gave volunteers fictional legal scenarios, such as receiving an eviction notice, and watched as they consulted Google Bard. “People were asking, ‘Do I have any rights if my landlord sends me a notice?’ and ‘Can I really be evicted if I pay my rent on time?’” says Hagan.

Bard “provided them with very clear and seemingly authoritative information,” she says, including correct statutes and ordinances. It also offered up imaginary case law and phone numbers of nonexistent legal aid groups.

In her policy lab class, AI for Legal Help, which began last autumn, Hagan’s students are continuing that work by interviewing members of the public about how they might use AI to help them with legal problems. As a future lawyer, Jessica Shin, JD ’25, a participant in Hagan’s class, is concerned about vulnerable people placing too much faith in these tools.

“I’m worried that if a chatbot isn’t dotting the i’s and crossing the t’s, key things can and will be missed—like statute of limitation deadlines or other procedural steps that will make or break their cases,” she says.

Given all this promise and peril, courts need guidance, and SLS is providing it. Engstrom was just tapped by the American Law Institute to lead a multiyear project to advise courts on “high-volume” dockets, including debt, eviction, and family cases. Technology will be a pivotal part, as will examining how courts can leverage AI. Two years ago, Engstrom and Hagan teamed up with Mark Chandler, JD ’81, former Cisco chief legal officer now at the Rhode Center, to launch the Filing Fairness Project. They’ve partnered with courts in seven states, from Alaska to Texas, to make it easier for tech providers to serve litigants using AI-based tools. Their latest collaboration will work with the Los Angeles Superior Court, the nation’s largest, to design new digital pathways that better serve court users.

CAN MACHINES PROMOTE COMPLIANCE WITH THE LAW?

The hope that AI can be harnessed to help foster fairness and efficiency extends to the work of government too. Take criminal justice. It’s supposed to be blind, but the system all too often can be discriminatory—especially when it comes to race. When deciding whether to charge or dismiss a case, a prosecutor is prohibited by the Constitution from taking a suspect’s race into account. There is real concern, though, that these decisions might be shaped by racial bias—whether implicit or explicit.

Enter AI. Julian Nyarko, associate professor of law, has developed an algorithm to mask race-related information from felony reports. He then implemented the algorithm in a district attorney’s office, erasing racially identifying details before the reports reached the prosecutor’s desk. Nyarko believes his algorithm will help ensure lawful prosecutorial decisions.

“The work uses AI tools to increase compliance with the law,” he says. “It is deploying AI to help prosecutors make decisions that are not conditioned on race. Because that’s what the law requires.”

GOVERNING AI

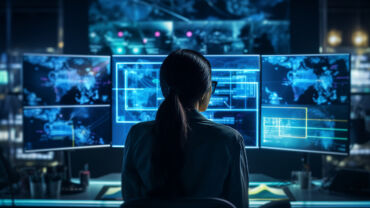

While the legal profession evaluates how it might integrate this new technology, the government has been catching up on how to grapple with the AI revolution. According to Daniel Ho, the William Benjamin Scott and Luna M. Scott Professor of Law and a senior fellow at Stanford’s Institute for Human-Centered AI, one of the core challenges for the public sector is a dearth of expertise.

Very few specialists in AI choose to work in the public sector. According to a recent survey, less than 1 percent of recent AI PhD graduates took positions in government—compared with some 60 percent who chose industry jobs. A lack of the right people, and an ailing government digital infrastructure, means the public sector is missing the expertise to craft law and policy and effectively use these tools to improve governance. “Government cannot govern AI,” says Ho, “if government doesn’t understand AI.”

Ho, who also advises the White House as an appointed member of the National AI Advisory Committee (NAIAC), is concerned policymakers and administrators lack sufficient knowledge to separate speculative from concrete risks posed by the technology.

Evelyn Douek, a Stanford Law assistant professor, agrees. There is a lack of available information about how commonly used AI tools work, information the government could use to guide its regulatory approach, she says. The outcome? An epidemic of what Douek calls “magical thinking” on the part of the public sector about what is possible.

The information gap between the public and private sectors motivated a large research team from Stanford Law School’s Regulation, Evaluation, and Governance Lab (RegLab) to assess the feasibility of recent proposals for AI regulation. The team, which included Tino Cuéllar (MA ’96, PhD ’00), former SLS professor and president of the Carnegie Endowment for International Peace; Colleen Honigsberg, professor of law; and Ho, concluded that one important step is for the government to collect and investigate events in which AI systems seriously malfunction or cause harm, such as with bioweapons risk.

“If you look at other complex products, like cars and pharmaceuticals, the government has a database of information that details the factors that led to accidents and harms,” says Neel Guha, JD/PhD ’24 (BA ’18), a PhD student in computer science and co-author of a forthcoming paper that explores this topic. The NAIAC formally adopted this recommendation for such a reporting system in November.

“Our full understanding of how these systems are being used and where they might fail is still in flux,” says Guha. “An adverse-event-reporting system is a necessary prerequisite for more effective governance.”

MODERNIZING GOVERNMENT

While the latest AI models demand new regulatory tools and frameworks, they also require that we rethink existing ones—a challenge when the various stakeholders often operate in separate silos.

“Policymakers might propose something that is technically impossible. Engineers might propose a technical solution that is flatly illegal,” Ho says. “What you need are people with an understanding of both dimensions.”

Last year, Ho, Christie Lawrence, JD ’24, and Isaac Cui, JD ’25, documented in an article the extensive challenges the federal government faced in implementing AI legal requirements. This led Ho to testify before the U.S. Senate on a range of reforms. And this work is driving change. The landmark White House executive order on AI adopted these recommendations, and the proposed AI Leadership to Enable Accountable Deployment (AI LEAD) Act would further codify recommendations, such as the creation of a chief AI officer, agency AI governance boards, and agency strategic planning. These requirements would help ensure the government is able to properly use and govern the technology.

Ho, as faculty director of RegLab, is also building bridges with local and federal agencies to develop high-impact demonstration projects of machine learning and data science in the public sector.

The RegLab is working with the Internal Revenue Service to modernize the tax-collection system with AI. It is collaborating with the Environmental Protection Agency to develop machine-learning technology to improve environmental compliance. And during the pandemic, it partnered with Santa Clara County to improve the public health department’s wide range of pandemic response programs.

“AI has real potential to transform parts of the public sector,” says Ho. “Our demonstration projects with government agencies help to envision an affirmative view of responsible technology to serve Americans.”

In a sign of an encouraging shift, Ho has observed an increasing number of computer scientists gravitating toward public policy, eager to participate in shaping laws and policy to respond to rapidly advancing AI, as well as law students with deep interests in technology. Alumni of the RegLab have been snapped up to serve in the IRS and the U.S. Digital Service, the technical arm of the executive branch. Ho himself serves as senior advisor on responsible AI to the U.S. Department of Labor. And the law school and the RegLab are front and center in training a new generation of lawyers and technologists to shape this future.

AI GOES TO HOLLYWOOD

Swaths of books and movies have been made about humans threatened by artificial intelligence, but what happens when the technology becomes a menace to the entertainment industry itself? It’s still early days for generative AI-created novels, films, and other content, but it’s beginning to look like Hollywood has been cast in its own science fiction tale—and the law has a role to play.

“If generative AI technologies continue on their present trajectory,” says the Stella W. and Ira S. Lillick Professor of Law Paul Goldstein, “it seems likely that they will upend many of our assumptions about a copyright system.”

There are two main assumptions behind intellectual property law that AI is on track to disrupt. From feature films and video games with multimillion-dollar budgets to a book whose author took five years to complete, the presumption has been that copyright law is necessary to incentivize costly investments. Now AI has upended that logic.

“When a video game that today requires a $100 million investment can be produced by generative AI at a cost that is one or two orders of magnitude lower,” says Goldstein, “the argument for copyright as an incentive to investment will weaken significantly across popular culture.”

The second assumption, resting on the consumer side of the equation, is no more stable. Copyright, a system designed in part to protect the creators of original works, has also long been justified as maximizing consumer choice. However, in an era of AI-powered recommendation engines, individual choice becomes less and less important, and the argument will only weaken as streaming services “get a lot better at figuring out what suits your tastes and making decisions for you,” says Goldstein.

If these bedrock assumptions behind copyright are both going to be rendered “increasingly irrelevant” by AI, what then is the necessary response? Goldstein says we need to find legal frameworks that will better safeguard human authors.

“I believe that authorship and autonomy are independent values that deserve to be protected,” he says. Goldstein foresees a framework in which AI-produced works are clearly labeled as such to guarantee consumers have accurate information.

The labeling approach may have the advantage of simplicity, but on its own it is not enough. At a moment of unprecedented disruption, Goldstein argues, lawmakers should be looking for additional ways to support human creators who will find themselves competing with AIs that can generate works faster and for a fraction of the cost. The solution, he suggests, might involve looking to practices in countries that have traditionally given greater thought to supporting artists, such as those in Europe.

“There will always be an appetite for authenticity, a taste for the real thing,” Goldstein says. “How else do you explain why someone will pay $2,000 to watch Taylor Swift from a distant balcony, when they could stream the same songs in their living room for pennies?” In the case of intellectual property law, catching up with the technology may mean heeding our human impulse and taking the necessary steps to facilitate the deeply rooted urge to make and share authentic works of art.

Law professor outlines risks, encourages best practices to use AI for legal, academic writing

LAWRENCE — One of the biggest concerns regarding artificial intelligence is that people will use it as a writing tool, then pass off the results as their own work. But when Andrew Torrance and Bill Tomlinson tried to list AI as a co-author on a law review article, journals didn’t like that either.

Torrance, the Paul E. Wilson Distinguished Professor of Law at KU, and Tomlinson of the University of California-Irvine have been longtime collaborators. Their early work using AI in scholarly writing has developed into several papers. “ChatGPT and Works Scholarly: Best Practices and Legal Pitfalls in Writing with AI,” written with Rebecca Black of the University of California-Irvine, was published in the SMU Law Review.

“We wrote a bunch of papers using AI and got them accepted. And along the way we learned a lot about what worked and what didn’t when using AI,” Torrance said. “It’s enhanced productivity a lot. Before, one paper a year or so would be good. Now you can do so much more. We edit ourselves to make sure those pitfalls don’t happen. In some cases, we consider AI to be a co-author. That’s one of the things we learned right away, is be explicit. We celebrate that we use it.”

The paper provides guidelines for those curious about using either of the leading AI engines in their academic writing. They largely apply to any kind of writing, but the authors found while AI can be a useful tool, a human touch is still necessary to avoid faulty work. The guidelines include:

- Using standardized approaches.

- Having AI form multiple outlines and drafts.

- Using plagiarism filters.

- Ensuring arguments make sense.

- Avoiding AI "hallucinations," in which the tools simply make things up.

- Watching for repetition, which the models tend to use.

Torrance is also an intellectual property scholar, so violating others’ copyrights would look especially bad, he said. Making sure citations of others’ work are accurate is also vital.

The researchers provide step-by-step guidelines on usage as well as information about the ethics of AI in writing and its place in legal scholarship.

“It gives you a huge head start when using these tools,” Torrance said. “Remember, these are the absolute worst versions of these tools we’ll see in our lifetimes. We’re on the Model T now, but even the Model T is amazing. But you need to be sure you don’t drive it into a ditch.”

Tomlinson and Torrance also noted using AI allows for “late-finding scholarship.” In traditional publishing, if the science or scholarship changed, that information would have to wait for a new edition. Now, as understanding evolves, writing can continuously be updated. That opens the door for publications that can be “dynamically definitive instead of statically definitive,” Torrance said, while simultaneously making knowledge more accessible.

Torrance, Tomlinson and collaborators Black and Don Patterson of UC-Irvine wrote that, regardless of what one thinks about AI, it can play an incredibly useful role in academic writing and that those who use it properly can have a decided advantage in productivity.

“We hope this paper allows or helps people to shift some of the mentality around AI. I’m sure we haven’t identified all the possible pitfalls,” Torrance said. “Frankly, a lot of these are mistakes you need to avoid, period. I think a lot of the same principles apply between a human writing and using AI. We thought, as a public service, we should put this out there.”

Regardless of how AI evolves, the authors have laid a foundation for how scholars could use the tool in legal and responsible ways. And a piece of advice Torrance offers students in his legal analytics class can apply to all, even if they’re not in the field of law.

“The tagline for the class is, ‘Be the lawyer who masters AI, not the one who is run over by it,’” he said.

Artificial Intelligence and Law

This journal seeks papers that address the development of formal or computational models of legal knowledge, reasoning, and decision making. It also includes in-depth studies of innovative artificial intelligence systems that are being used in the legal domain, and gives space to studies addressing the legal, ethical and social implications of the use of artificial intelligence in law. It welcomes interdisciplinary approaches including not only artificial intelligence and jurisprudence, but also logic, machine learning, cognitive psychology, linguistics, or philosophy.

In addition to original research contributions, this journal welcomes book reviews as well as research notes posing interesting and timely research challenges.

- Kevin D. Ashley,

- Giovanni Sartor,

- Matthias Grabmair,

- Katie Atkinson

Societies and partnerships

- International Association for Artificial Intelligence and Law

Latest issue

Volume 32, Issue 1

Latest articles

Unfair clause detection in terms of service across multiple languages

- Andrea Galassi

- Francesca Lagioia

- Marco Lippi

Re-evaluating GPT-4’s bar exam performance

- Eric Martínez

Boosting court judgment prediction and explanation using legal entities

- Irene Benedetto

- Alkis Koudounas

- Elena Baralis

A comparative user study of human predictions in algorithm-supported recidivism risk assessment

- Manuel Portela

- Carlos Castillo

- Antonio Andres Pueyo

Legal sentence boundary detection using hybrid deep learning and statistical models

- Reshma Sheik

- Sneha Rao Ganta

- S. Jaya Nirmala

Journal information

- ACM Digital Library

- Current Contents/Engineering, Computing and Technology

- Current Contents/Social & Behavioral Sciences

- EI Compendex

- Google Scholar

- Japanese Science and Technology Agency (JST)

- Norwegian Register for Scientific Journals and Series

- OCLC WorldCat Discovery Service

- Science Citation Index Expanded (SCIE)

- Social Science Citation Index

- TD Net Discovery Service

- The Philosopher’s Index

- UGC-CARE List (India)

© Springer Nature B.V.

As much as AI can help businesses and departments do beneficial work, there are ethical considerations to take into account. AI can help fraudsters conduct their activities more efficiently and accurately. It is also important to always remember that AI generates responses based on algorithms created by humans and information provided by humans. As such, humans should be held accountable for verifying and fact-checking what AI generates.

The eagerness to leverage AI has created some situations that have highlighted the necessity of consent and accountability, including cases where AI has “hallucinated” an answer – meaning it improvised a response based on the information it had – which led to legal and ethical consequences. Since AI does not provide any data on the source of the responses, a human validation process should be part of AI policies. AI is also subject to bias risk, in which the data used to train a model may produce errors favoring one outcome over another. This can result in a lack of trust and fairness.

Using AI responsibly

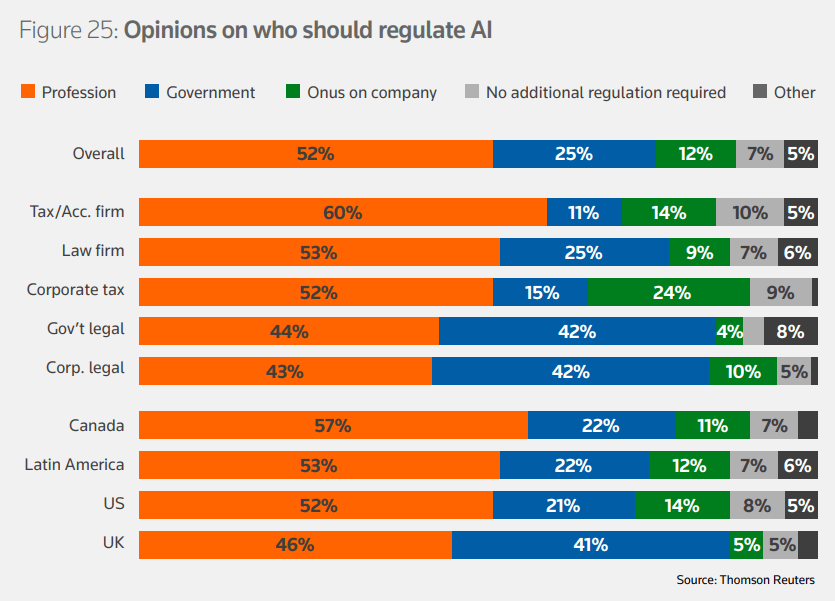

The United States has issued an Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence that establishes principles and guidelines for federal agencies to follow when implementing an AI system. It also offers a framework for working with various stakeholders. The Future of Professionals report shows that 52% of professionals believe that regulations governing the professional ethics of AI are a necessity, and 25% believe that governments should be designing and overseeing professional ethics regulations.

Key areas of AI governance frameworks should cover encryption and authentication protocols, frequent auditing and testing procedures, traceability, educating employees on the proper and ethical use of AI, and best practices for securing and protecting confidential data. Ultimately, businesses and departments should be able to understand how an algorithm arrives at its output and where the data comes from. Because AI pulls data from large data sets, those data sets should be legitimate and qualified to get the most accurate and relevant output.

If AI is to be incorporated into your workflow, a human-centric design should be at the core of the initiative. Transparency and accountability are both crucial to maintaining trust with clients, users, and employees. Biases should be avoided, fairness should be promoted, and security should be the top priority.

Given the private and confidential nature of the information that professionals are working with, we believe that developing internal AI regulations and governance at the firm level is critical to establishing trust, accountability, and transparency around the consent and privacy of client information. It’s important to understand the principles behind AI ethics and make sure your organization has a framework that is tailored to the business and your customers.

For all stakeholders, confidence in your adoption of AI rests on professionals’ ability to provide high levels of service, offer access to information on the use of AI, and foster a culture of risk mitigation and awareness. For more information, download the Future of Professionals report.

AI Chatbot Owners Argue ‘Fair Use’ in Copyright Suits: QuickTake

By Ethan M Steinberg

How do ChatGPT and other chatbots generate written works, images and music to rival the output of a talented human? By ingesting content that people already created and identifying patterns in the material so they can produce something new. These generative artificial intelligence platforms have hoovered up 19th century novels, beat poetry, draft contracts, movie scripts, photo essays, millions of songs and everything in between on the way to becoming the most disruptive technological force since the invention of the internet.

It turns out this vast trawling of mankind’s past endeavors doesn’t come for free. News organizations, novelists, music publishers ...

AI Providers Should Not Be Liable for Users’ Securities Violations

Jack Solowey

Policymakers are considering a liability regime that is bad economics and bad law.

If you are a policymaker looking to assert jurisdiction over a hot new sector, you might try to put deep-pocketed players on the hook for harms within your purview.

This strategy could explain the Financial Artificial Intelligence Risk Reduction (FAIRR) Act, a bill introduced in the U.S. Senate Banking Committee that would make artificial intelligence (AI) providers liable for uses of their tools that violate securities laws, unless those providers take reasonable preventive steps.

This liability regime might be understandable given policymakers’ incentives. Nonetheless, it would produce poor public policy by clumsily assigning blame in a way that clashes with standard economic and legal frameworks for determining when producers should be liable for harms associated with the use of their products.

Ideally, liability rules would discourage violations of individuals’ rights without discouraging the productive enjoyment thereof. Economists try to strike this balance by assigning liability to the “least cost avoider”—the party for whom preventing or ameliorating rights-infringing activity is the cheapest.

It appears unlikely that AI tool providers are the least cost avoiders of securities law violations. If, for example, a registered securities firm, such as a broker-dealer, investment adviser, or investment company, uses a generic AI model, it is far likelier that the firm, not the model developer, would have an advantage in mitigating securities law risks—including through a dedicated compliance team.

It may be more tempting to view the AI provider as the least cost avoider when an individual, not a registered securities firm, uses the tool. But even when that person is no securities law expert, it is still probably cheaper for the individual to handle the legal issues than it would be for the AI provider. Paying for legal advice about a known and specific activity is usually going to be less expensive than devising a compliance program to guard against every possible permutation of securities violation.

This is not to say that policymakers cutting the Gordian knot of AI liability by putting model providers on the hook have nothing going for them. For instance, identifying a clearly liable party could present lower administrative costs for the legal system. Richard Epstein, professor at the New York University School of Law, writes of the “twin objectives” of legal rules: “reducing administrative costs” and “setting desirable incentives.” When these aims are in tension, though, Epstein observes that the question becomes “whether the savings in administrative costs is dissipated by the creation of inferior incentive structures.”

Making AI providers liable for securities violations generally would produce inferior incentives. Tyler Cowen, economist and professor at George Mason University, argues that “placing full liability on AI providers for all their different kinds of output, and the consequences of those outputs, would probably bankrupt them.” That would reduce valuable AI innovations that benefit securities market participants.

In addition, in situations where an AI user is directly responsible for a securities violation and the AI provider merely failed to prevent that violation, it still would be likely more enticing to launch enforcement and private actions against the AI provider wherever possible, as such a provider would tend to be a higher profile target with the seeming ability to pay a large judgment or settlement. For this reason, making AI firms liable for such securities violations would perversely have those companies regularly pick up the tab for the parties clearly and directly responsible for violations.

AI provider liability for securities violations does not look any better through the lenses of the common law, such as products liability or agency law. Neither doctrine suggests universal AI provider liability for resulting harm is appropriate.

For one, in most of the United States, provider liability for faulty products only covers physical injuries to people and property, not “purely economic losses,” which would typically characterize securities violations. Moreover, important (but not uniformly applied) product liability considerations ask whether the user modified the product or used it in an abnormal fashion, either of which could forestall the provider’s liability. When a user prompts an AI tool to produce a securities violation, there is a reasonable argument that the user engaged in modification or abnormal use. At the very least, this is the type of argument that courts should consider.

Agency law reveals similar issues. The typical rule is that principals (on whose behalf agents act) are liable for acts of their agents that are within the scope of the agents’ employment. In the AI context, even if we assume that the AI provider could be the principal to the digital AI agent, there remains the question of whether the AI agent acted within the scope of “employment.” Because these questions are often litigated with mixed results, a liability regime that ignores them would clash with the time-tested subtleties of the common law.

Key principles of U.S. securities law also weigh against uniform AI provider liability. Specifically, securities laws have varying state of mind requirements. Notably, private fraud actions under the Securities Exchange Act and its implementing regulations have been interpreted by the Supreme Court to require allegations of the defendant’s “intent to deceive, manipulate, or defraud.” The FAIRR Act would circumvent such requirements by deeming the AI provider to have the relevant state of mind. Notably, a recent speech by U.S. Securities and Exchange Commission Chair Gary Gensler interpreted the FAIRR Act as proposing to impose a strict liability standard.

Undermining intent requirements not only would clash with existing law, but it would also reveal the inefficiency of placing blame with AI providers. State-of-mind requirements can, in part, codify important intuitions about whether a party was in any good position to avoid harms. For example, in asking whether harm was foreseeable, the tort law’s negligence standard essentially asks whether it would have made any sense for the defendant to act in a way that would have averted the harm. In removing, or at least downgrading, AI provider state-of-mind requirements for securities violations, the FAIRR Act would allocate liability for conduct in ways that have not previously made sense to policymakers or courts.

Grappling with liability questions in the age of AI is critical. But taking the lazy way out by blaming those building AI tools that all of us stand to benefit from is not the answer, particularly when doing so clashes with both economics and longstanding legal principles.

Jack Solowey is a Policy Analyst at the Cato Institute’s Center for Monetary and Financial Alternatives, focusing on financial technology.
