
The Importance of Variance Analysis

This critical topic is too often taught to only a handful of students—or neglected in the b-school curriculum altogether.

Variance analysis is an essential tool for business graduates to have in their toolkits as they enter the workforce. Over our decades of experience in executive education, we’ve observed that managers across all industries and functions use variance analysis to measure the ability of their organizations to meet their commitments.

Because variance analysis is such a powerful risk management tool, there is a strong case for including it in the finance portion of any MBA curriculum. Yet fewer than half of finance professors believe they should be teaching this subject; they view it as a topic more typically taught in accounting classes. At the same time, in practice, variance analysis is such a cross-functional tool that it could be taught throughout the business school curriculum—but it’s not. We perceive a worrisome disconnect between the way variance analysis is taught and the way it is used in real life.

Variance Analysis and Its Applications

There are three periods in the life of a business plan: prior period to plan, plan to actual, and prior period to actual. For instance, if a business plan is being formulated for 2019, the “prior period” would be 2018, the “plan” would be the budget for 2019, and the “actual” would be what really happens in 2019. These three comparisons are also referred to as planning, meeting commitments, and growth.

For each of these periods, variance analysis looks at the deviations between the targeted objective and the actual outcome. The most common variances are found in price, volume, cost, and productivity. When executives conduct an operational review, they will need to explain why there were positive or negative variances in any of these areas. For instance, did the company miss a target because it lost an anticipated national account or failed to lock in a price contract due to competitive pressure?
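To make the price, volume, and cost variances concrete, here is a minimal Python sketch of a plan-to-actual operating income walk for a single hypothetical product. The `Scenario` class, the figures, and the convention of valuing price and unit-cost variances at actual volume while valuing the volume variance at the plan unit margin are all assumptions for illustration; companies use a variety of conventions, and productivity variances are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical single-product inputs for one period (plan or actual)."""
    price: float       # selling price per unit
    volume: float      # units sold
    unit_cost: float   # variable cost per unit
    fixed_cost: float  # fixed costs for the period

    def operating_income(self) -> float:
        return (self.price - self.unit_cost) * self.volume - self.fixed_cost


def plan_to_actual_variances(plan: Scenario, actual: Scenario) -> dict:
    """Split (actual - plan) operating income into price, volume, and cost pieces.

    Positive values are favorable. Price and unit-cost variances are valued at
    actual volume; the volume variance is valued at the plan unit margin, so
    the four pieces sum exactly to the total operating income variance.
    """
    plan_margin = plan.price - plan.unit_cost
    return {
        "price": (actual.price - plan.price) * actual.volume,
        "volume": (actual.volume - plan.volume) * plan_margin,
        "variable cost": -(actual.unit_cost - plan.unit_cost) * actual.volume,
        "fixed cost": -(actual.fixed_cost - plan.fixed_cost),
    }


# Made-up plan and actual figures for illustration only.
plan = Scenario(price=10.0, volume=1000, unit_cost=6.0, fixed_cost=2000.0)
actual = Scenario(price=9.5, volume=1100, unit_cost=6.2, fixed_cost=2100.0)

variances = plan_to_actual_variances(plan, actual)
total = actual.operating_income() - plan.operating_income()
for name, value in variances.items():
    print(f"{name:>13}: {value:+8.1f}")
print(f"{'total':>13}: {total:+8.1f}")
```

With these made-up figures, the lower price (−550) and higher unit cost (−220) more than offset the extra volume (+400), and the fixed-cost overrun (−100) brings the total miss to −470: the kind of explanation executives are asked to give in an operational review.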

Executives who understand variances will improve their risk management, make better decisions, and be more likely to meet commitments. In the process, they’ll produce outcomes that can give an organization a real competitive advantage and, ultimately, create shareholder value.

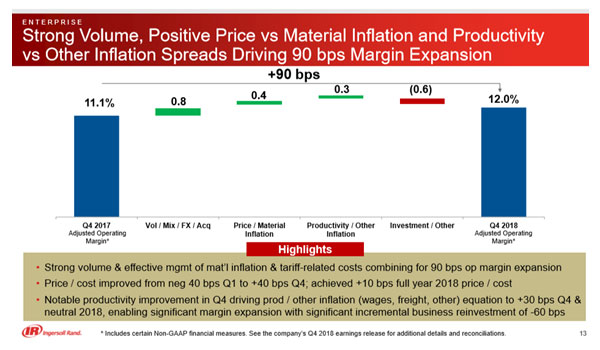

Most businesses apply variance analysis at the operating income level to compare what they projected with what they achieved. The variances are usually displayed as floating bar charts, also known as walk, bridge, or waterfall charts. These graphics are common in internal corporate documents as well as in investor-facing documents such as quarterly earnings presentations.
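A walk chart is essentially a running total drawn as floating bars. The minimal Python sketch below computes the bottom and top of each bar from a starting value and a list of signed variances; the function name, labels, and figures are hypothetical, and the sketch only computes bar positions rather than drawing anything.

```python
def walk_segments(start_label, start_value, steps, end_label):
    """Compute floating-bar (walk/bridge/waterfall) segments.

    Returns (label, bottom, top, kind) tuples. The first and last bars are
    totals anchored at zero; each step bar floats between the running level
    before and after that variance is applied.
    """
    segments = [(start_label, min(0, start_value), max(0, start_value), "total")]
    level = start_value
    for label, delta in steps:
        new_level = level + delta
        kind = "favorable" if delta >= 0 else "unfavorable"
        segments.append((label, min(level, new_level), max(level, new_level), kind))
        level = new_level
    segments.append((end_label, min(0, level), max(0, level), "total"))
    return segments


# Hypothetical operating income walk from plan to actual (figures are made up).
steps = [("Price", -550), ("Volume", 400), ("Variable cost", -220), ("Fixed cost", -100)]
for segment in walk_segments("Plan", 2000, steps, "Actual"):
    print(segment)
```

Each tuple maps directly onto a bar with a given `bottom` and height `top - bottom`; for example, matplotlib's `Axes.bar` accepts a `bottom` argument, so the chart itself is a short plotting loop.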

While variance analysis can be applied in many functional areas, it is used most often in finance-related fields. Yet, the majority of finance programs at both the graduate and undergraduate levels don’t cover it at all. We surveyed finance faculty in 2013 and accounting faculty in 2017 to determine how they teach and use variance analysis. Among other things, we learned that:

- More than 80 percent of accounting faculty believe that variance analysis is important to a finance career, and they are far more likely to teach it than their finance faculty colleagues.

- Only 59 percent of finance faculty and 48 percent of accounting faculty are familiar with examples of walk charts from real-world companies. Yet these visual portrayals of operating margin variances are commonplace in quarterly earnings presentations and readily found on investor relations websites.

Because universities mostly fail to teach this important topic, corporate educators have been left to fill the learning gap. Many global organizations, in fact, make variance analysis a key subject in their development programs for entry-level financial professionals.

The University Response

We believe it’s critical for universities to better align their curricula with the skills that today’s employers seek in the graduates they hire. Not only do we think variance analysis should be included in the business curriculum, but we could even make an argument for running it as a capstone business course. We offer these suggestions for ways that faculty could integrate this powerful tool across the business school program:

- Both accounting and finance faculty should, as much as is practical, incorporate variance analysis into their classes, particularly focusing on financial planning and analysis. We acknowledge that a dearth of corporate finance texts on the topic will make this a challenge for finance professors. The two of us employ teaching materials in our graduate business and undergraduate finance classes based on experience in the corporate world, and we would be glad to share them with others.

- Faculty who use case studies should always include a case specific to variance analysis tools. Students who pursue careers in corporate finance will almost certainly be required to use such tools, particularly as data and predictive analytics applications are enhanced to improve forecasting accuracy. Two sources of such case studies are TRI Corporation and Harvard Business Publishing.

- Professors can introduce students to real-world applications of variance analysis by showing how it is used in investor relations (IR) pitches. As instructors, the two of us routinely search IR sites for applications of variance analysis. We specifically look for operating margin variance walks (floating bars, brick charts) for visual applications that can make the topic come to life for students. Here’s an example from Ingersoll Rand:

- Faculty from accounting and finance programs should collaborate on when, where, and how to teach variance analysis. At the very least, this will ensure that students gain an understanding of the topic from either a finance or an accounting perspective, but the ideal would be for them to benefit from both perspectives for a holistic understanding. At Fairfield University, accounting programs introduce students to the theory of variance analysis. Then finance programs take an operational and cross-functional approach that addresses planning, meeting commitments, and growth.

- Both accounting and finance faculty should help finance majors understand variance analysis from a practitioner’s standpoint. Discussions about pricing, supply chain, manufacturing costs, risk management, and inflation and deflation around cost inputs can help students grasp the necessity of making trade-offs and balancing short-term and long-term business goals. To make sure students understand the practitioner’s viewpoint, we use corporate business simulations that are more operationally focused, as opposed to being academic in tone.

- To extend the topic to all majors, not just finance and accounting students, faculty from disciplines such as strategy and operations could also incorporate variance analysis into their classes. For instance, if they use business simulations for their capstone courses, they could add a component that covers variance analysis. At Fairfield, we use a variety of competitive business simulations from the corporate world.

- Finally, professors can bring in guest speakers from almost any business functional area and ask them to explain, as part of their presentations, how variance analysis is relevant in their fields. As an example, we often have senior finance executives from Stanley Black & Decker, a company well known for its ability to grow and meet its commitments via variance analysis, present to our graduate program. We tap other companies from Fairfield County as well.

In the graduate classes we teach at Fairfield University, we have always tried to connect theory with practice. And we’ve long believed that creating a culture of meeting and exceeding commitments requires aligning interaction across functions in the workplace. With this article, we hope that, at the very least, we can start a larger discussion about the need for cross-disciplinary teaching of variance analysis.

For more about variance analysis materials, contact us at [email protected] or [email protected].

Estimation and statistical inferences of variance components in the analysis of single-case experimental design using multilevel modeling

- Published: 10 September 2021

- Volume 54, pages 1559–1579 (2022)


- Haoran Li (ORCID: orcid.org/0000-0003-0886-4172)

- Wen Luo

- Eunkyeng Baek

- Christopher G. Thompson

- Kwok Hap Lam


Multilevel models (MLMs) can be used to examine treatment heterogeneity in single-case experimental designs (SCEDs). With small sample sizes, common issues in estimating between-case variance components in MLMs include nonpositive definite matrices, biased estimates, misspecified covariance structures, and invalid Wald tests for variance components with bounded distributions. To address these issues, unconstrained optimization, a model selection procedure based on the parametric bootstrap, and a procedure based on the restricted likelihood ratio test (RLRT) are introduced. Using simulation studies, we compared the performance of two types of optimization methods (constrained vs. unconstrained) when the covariance structures were correctly specified or misspecified. We also examined the performance of a model selection procedure for obtaining the optimal covariance structure. The results showed that unconstrained optimization can largely avoid nonpositive definiteness without compromising model convergence. Misspecifying the covariance structure caused biased estimates, especially when between-case variance components were small; however, the model selection procedure attenuated the magnitude of this bias. A practical guideline was generated for empirical researchers using SCEDs, identifying the conditions under which trustworthy point and interval estimates can be obtained for between-case variance components in MLMs, as well as the conditions under which the RLRT-based procedure yields acceptable empirical type I error rates and power.


Single-case experimental designs (SCEDs) are commonly used to evaluate the effectiveness of an intervention by repeatedly measuring an outcome for a small number of cases over time. SCEDs include, but are not limited to, AB, ABAB, multiple baseline across cases, multiple baseline across behaviors or settings, alternating treatments, changing criterion, multiple probe across cases, and multiple probe across behaviors or settings designs (Pustejovsky et al., 2019; Shadish & Sullivan, 2011). Among these, the multiple baseline design (MBD) is the most commonly adopted (Pustejovsky et al., 2019). An MBD consists of interrupted time series data from multiple cases, settings, or behaviors, in which an intervention is introduced sequentially across the different time series (Baek & Ferron, 2013; Ferron et al., 2010; Moeyaert et al., 2017; Pustejovsky et al., 2019; Shadish & Sullivan, 2011). This design enables researchers to attribute changes in level and trend to the intervention rather than to external events, history, or maturation, especially when randomization is introduced into the design (Kratochwill & Levin, 2010; Levin et al., 2018), which also makes it possible to use a randomization test for inferential purposes (Michiels et al., 2020).

Combining research findings from multiple cases within an SCED study can provide strong evidence for the average treatment effect across cases (Moeyaert et al., 2017; Shadish & Rindskopf, 2007). In addition to the average treatment effect, it is informative to estimate how much the intervention effect varies across cases. A treatment effect may be positive on average yet vary so widely that the treatment is ineffective, or even harmful, for some cases. In other words, a large variation in the treatment effect indicates that the treatment is not equally effective for all cases, and further analyses may be needed to identify individual characteristics that explain this variation. Thus, the average effect should be interpreted together with the associated variation in effects across cases (Barlow et al., 2009; Kratochwill & Levin, 2014; Moeyaert et al., 2017).
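The point that a positive average effect can coexist with harmful effects for some cases can be quantified. Assuming, as the multilevel models discussed below do, that case-specific treatment effects are normally distributed around the average effect \( \gamma_{10} \) with variance \( {\sigma}_{u1}^2 \), the expected share of cases with a negative effect is \( \Phi(-\gamma_{10}/\sigma_{u1}) \). The Python sketch below computes this with the standard library only; the function name and parameter values are hypothetical.

```python
import math


def share_with_negative_effect(mean_effect: float, effect_variance: float) -> float:
    """P(effect < 0) when case-specific effects ~ Normal(mean_effect, effect_variance).

    Uses Phi(-m / s) = 0.5 * erfc(m / (s * sqrt(2))), where Phi is the
    standard normal CDF.
    """
    if effect_variance <= 0:
        # No between-case variation: every case has exactly the mean effect.
        return 1.0 if mean_effect < 0 else 0.0
    s = math.sqrt(effect_variance)
    return 0.5 * math.erfc(mean_effect / (s * math.sqrt(2)))


# Hypothetical values: average effect of 1.0 with between-case variance 2.0.
print(round(share_with_negative_effect(1.0, 2.0), 3))  # about 0.24
```

Under these made-up values, roughly a quarter of cases would be expected to show a negative effect even though the average effect is clearly positive, which is why the average should be reported alongside its between-case variance.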

A wide range of techniques has been developed for analyzing single-case data, including visual analysis, nonoverlap indices, descriptive indices quantifying changes in level and slope, standardized mean difference indices, procedures based on regression analysis, simulation-based procedures, and randomization tests (Manolov & Moeyaert, 2017). As a very flexible statistical approach, multilevel modeling (MLM) has been proposed for analyzing SCED data (Ferron et al., 2009; Moeyaert et al., 2014a; Shadish et al., 2013a; Van den Noortgate & Onghena, 2003a, 2003b). Within a single study, a two-level model can capture both the average treatment effect (through the estimated fixed effect) and the between-case variability in treatment effects (through the estimated variance-covariance components). Extant studies show that, when SCED data are analyzed with MLMs, the treatment effect can be estimated accurately, and statistical inferences based on adjusted degrees of freedom have good properties, i.e., type I error rates and coverage rates close to the nominal level (Baek et al., 2020; Baek & Ferron, 2013; Ferron et al., 2009; Moeyaert et al., 2017).

For the estimation and statistical inference of between-case variation in treatment effects, however, researchers using MLMs face several challenges. First, they may encounter nonpositive definiteness when using restricted maximum likelihood (REML) estimation with constrained optimization, leading to inadmissible estimates of variance components. Second, estimates of between-case variance components tend to be biased under either REML or Bayesian approaches (Baek et al., 2020; Baek & Ferron, 2020; Ferron et al., 2009; Hembry et al., 2015; Moeyaert et al., 2017; Moeyaert et al., 2013a, 2013c). Although the bias is expected to diminish with more cases and/or larger between-case variance components, it is unknown how many cases, and how large the between-case variance components, are needed to obtain accurate estimates. Third, although misspecifying the covariance structure (i.e., constraining a nonzero covariance to be zero) may have little impact on the accuracy of estimates of large between-case variance components (Moeyaert et al., 2017), it is unknown whether the estimation remains accurate when the between-case variance is small or moderate. Last, the commonly used Wald test is not appropriate for testing between-case variance components in SCEDs because it requires large sample sizes. It also has unfavorable properties when the null value of the tested variance component is zero or close to zero (West et al., 2014). A more sophisticated procedure based on the restricted likelihood ratio test (RLRT) is needed for significance testing, and the performance of such tests (i.e., type I error rate and power) has not been systematically examined (Shadish et al., 2013a).

To fill these gaps in the literature, our study serves four purposes. First, we addressed the issue of nonpositive definiteness by using unconstrained optimization through matrix parameterization and compared its performance with that of the traditional constrained optimization method. Second, we examined the impact of misspecified covariance structures and explored the feasibility of using a “post hoc” model selection procedure (testing the covariance component to determine whether it should be kept in the fitted model) to obtain the optimal covariance structure. Third, we introduced the RLRT-based procedure for testing the between-case variance components, provided a colloquial (nontechnical) illustration of it, and evaluated its empirical type I error rate and power. Last, we provided a guideline on the conditions for obtaining accurate estimation and statistical inferences for between-case variance components with MLMs in SCEDs.

The remainder of the paper is organized as follows. First, we review the basic MLM for MBD, the issue of nonpositive definiteness, and unconstrained optimization as a solution. Second, we review the performance of MLMs in estimating between-case variance components, the impact of misspecified covariance structures, and a model selection procedure for obtaining the optimal covariance structure. Next, we review RLRT-based procedures for testing between-case variance components. After this review of the literature, we present the methods for the current simulation study, followed by the results. Last, we discuss the findings, limitations, and future directions.

Multilevel modeling for MBD data

The basic expression of a multilevel model for MBD is

\[ Y_{ij}=\gamma_{00}+\gamma_{10}\,\mathrm{Phase}_{ij}+u_{0j}+u_{1j}\,\mathrm{Phase}_{ij}+e_{ij}, \]

where \( Y_{ij} \) is the outcome at measurement i for case j; i and j index measurements and cases, respectively; Phase is a binary variable (0 = baseline, 1 = treatment); \( \gamma_{00} \) is the average baseline level; \( \gamma_{10} \) is the average treatment effect (i.e., change in level); \( u_{0j} \) and \( u_{1j} \) are random effects associated with the baseline level and treatment effect for case j; and \( e_{ij} \) is the within-case error term. We assume that \( u_{0j} \) and \( u_{1j} \) follow a multivariate normal distribution with a mean vector of zeros, variances \( {\sigma}_{u0}^2 \) and \( {\sigma}_{u1}^2 \), respectively, and a single covariance term \( {\sigma}_{u0u1} \) (together forming the G matrix). Although the basic multilevel model assumes that the level-1 errors (\( e_{ij} \)) are independent and identically normally distributed (i.i.d.), more complex error structures that allow for autocorrelation, such as the first-order autoregressive structure, can be applied (Baek & Ferron, 2013; Ferron et al., 2009). We provide the matrix form of this model in Appendix A.
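To see what data from this model look like, the Python/NumPy sketch below simulates one multiple baseline data set from the basic two-level model with i.i.d. level-1 errors. The function name, the staggered start points, and all parameter values are hypothetical choices for illustration; the sketch only generates data and does not fit the model.

```python
import numpy as np


def simulate_mbd(n_obs, starts, gamma00, gamma10, G, sigma_e, rng):
    """Simulate one multiple baseline data set from the basic two-level model

        Y_ij = gamma00 + gamma10 * Phase_ij + u0j + u1j * Phase_ij + e_ij,

    with (u0j, u1j) ~ N(0, G) and i.i.d. level-1 errors e_ij ~ N(0, sigma_e**2).
    starts[j] is the (0-based) measurement at which case j enters treatment,
    so staggered starts give the multiple baseline structure.
    """
    starts = np.asarray(starts)
    n_cases = starts.size
    u = rng.multivariate_normal(np.zeros(2), G, size=n_cases)  # shape (n_cases, 2)
    time = np.arange(n_obs)
    phase = (time[None, :] >= starts[:, None]).astype(float)   # shape (n_cases, n_obs)
    e = rng.normal(0.0, sigma_e, size=(n_cases, n_obs))
    # u[:, [0]] and u[:, [1]] keep a column shape so they broadcast over time.
    y = gamma00 + u[:, [0]] + (gamma10 + u[:, [1]]) * phase + e
    return y, phase


rng = np.random.default_rng(2021)
G = np.array([[1.0, 0.3],
              [0.3, 0.5]])  # hypothetical between-case variances and covariance
y, phase = simulate_mbd(n_obs=20, starts=[5, 8, 11, 14], gamma00=0.0,
                        gamma10=2.0, G=G, sigma_e=1.0, rng=rng)
print(y.shape)  # (4, 20)
```

Fitting the model to such data by REML would require a mixed-model routine (for example, `lme4::lmer` or `nlme::lme` in R, or `MixedLM` in Python's statsmodels); that step is not shown here.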

Non-positive definiteness and unconstrained parameterization

For an estimated variance-covariance matrix G, we refer to G as positive semidefinite (or nonnegative definite) when all of its eigenvalues are ≥ 0, or equivalently, for the 2 × 2 case, \( {\sigma}_{u0}^2\ge 0 \), \( {\sigma}_{u1}^2\ge 0 \), and \( {\sigma}_{u0}^2{\sigma}_{u1}^2\ge {\sigma}_{u0u1}^2 \). Positive semidefinite G matrices include positive definite and singular matrices. When some of its eigenvalues equal 0, G is referred to as a singular or degenerate matrix, with linearly dependent columns (e.g., perfectly correlated random effects). When all eigenvalues of G are greater than 0, the nonredundant G matrix (i.e., the random effects are not perfectly correlated) is positive definite, which implies that \( {\sigma}_{u0}^2>0 \), \( {\sigma}_{u1}^2>0 \), and \( {\sigma}_{u0}^2{\sigma}_{u1}^2>{\sigma}_{u0u1}^2 \).
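These definitions can be checked numerically. The Python/NumPy sketch below classifies a symmetric G by its smallest eigenvalue (via `numpy.linalg.eigvalsh`) and also applies the equivalent scalar conditions for a 2 × 2 G. The tolerance used to treat tiny eigenvalues as zero is an assumption to absorb floating-point rounding, and the example matrices are made up.

```python
import numpy as np


def definiteness(G, tol=1e-10):
    """Classify a symmetric covariance matrix by its smallest eigenvalue."""
    eigenvalues = np.linalg.eigvalsh(np.asarray(G, dtype=float))  # ascending order
    smallest = eigenvalues[0]
    if smallest > tol:
        return "positive definite"
    if smallest >= -tol:
        return "positive semidefinite (singular)"
    return "not positive semidefinite"


def psd_2x2(var_u0, var_u1, cov_u0u1):
    """Equivalent scalar conditions for a 2 x 2 G matrix to be positive semidefinite."""
    return var_u0 >= 0 and var_u1 >= 0 and var_u0 * var_u1 >= cov_u0u1 ** 2


examples = {
    "unstructured": [[2.0, 1.0], [1.0, 2.0]],
    "perfectly correlated": [[1.0, 1.0], [1.0, 1.0]],
    "covariance too large": [[1.0, 2.0], [2.0, 1.0]],
}
for name, G in examples.items():
    print(name, "->", definiteness(G), "| 2x2 PSD:", psd_2x2(G[0][0], G[1][1], G[0][1]))
```

The eigenvalue route generalizes to any number of random effects, while the scalar conditions are convenient for the two-random-effect model used here.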

Some optimization algorithms used to implement REML estimation impose constraints on the parameters to ensure that estimated G matrices are positive definite during the iteration process (i.e., constrained optimization; West et al., 2014). However, such constraints are not imposed at the last iteration, when the estimation converges. Hence, it is possible to obtain nonpositive definite G matrices as the final estimates, especially when the cluster-level sample size is small (e.g., a small number of cases in SCEDs), because little information is available for estimating variance components (Chung et al., 2015).

Two primary scenarios lead to nonpositive definiteness. First, a variance component estimate might be close to 0 (given the finite numeric precision of a computer) or outside the parameter space (i.e., negative). This arises when there is little variation in the random effects after controlling for the fixed effects. In this situation, statistical software such as Proc MIXED in SAS and MIXED in SPSS issues a warning (e.g., ‘the estimated G matrix is not positive definite’ in SAS or ‘this covariance estimate is redundant’ in SPSS) and by default sets the variance estimate to 0 (West et al., 2014). Second, the estimates of between-case variance components may be clearly larger than 0 while the G matrix is still nonpositive definite. This occurs when the condition \( {\sigma}_{u0}^2{\sigma}_{u1}^2>{\sigma}_{u0u1}^2 \) is not satisfied, and similar warning messages are issued (e.g., ‘the estimated G matrix is not positive definite’ in SAS). In both scenarios, applied researchers often either ignore the warning messages and report the variance component estimates anyway or withhold the estimates because of concerns about nonpositive definiteness.
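The two scenarios can be told apart from the estimates themselves. The Python sketch below maps a 2 × 2 G estimate onto them and reports the implied correlation between random effects, \( r = {\sigma}_{u0u1}/\sqrt{{\sigma}_{u0}^2{\sigma}_{u1}^2} \), which falls outside [−1, 1] in the second scenario. The function name, labels, and the zero tolerance are hypothetical choices for illustration.

```python
import math


def diagnose_g(var_u0, var_u1, cov_u0u1, tol=1e-10):
    """Map a 2 x 2 G estimate onto the two nonpositive definite scenarios.

    Returns (label, implied correlation or None). The correlation is only
    defined when both variance estimates are positive.
    """
    if var_u0 <= tol or var_u1 <= tol:
        return "scenario 1: a variance estimate is zero or negative", None
    r = cov_u0u1 / math.sqrt(var_u0 * var_u1)
    if var_u0 * var_u1 <= cov_u0u1 ** 2:
        return "scenario 2: positive variances, but |r| >= 1", r
    return "positive definite", r


print(diagnose_g(0.0, 0.8, 0.0))  # no variation in baseline levels
print(diagnose_g(0.5, 1.0, 0.9))  # implied correlation of about 1.27
print(diagnose_g(2.0, 2.0, 1.0))  # admissible, r = 0.5
```

An implied |r| above 1, as in the second call, is the kind of inadmissible correlation estimate that constrained optimization can return when both variances look healthy.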

Nonpositive definiteness affects methodological investigations that rely on Monte Carlo simulation. If a large proportion of replications yield nonpositive definite matrices, results should be interpreted with extreme caution, and studies that evaluate estimators risk severe bias if their conclusions are based only on replications with positive definite matrices (Gill & King, 2004). Nonpositive definiteness also affects empirical studies. As Kiernan (2018) indicated, when the G matrix is nonpositive definite, parameter estimates may not be comparable across statistical programs. Moreover, nonpositive definiteness encountered at the last iteration in statistical packages could lead to inadmissible solutions (Demidenko, 2013; Stram & Lee, 1994).

Several approaches have been suggested to resolve the problem of nonpositive definiteness. The most intuitive practice is to remove random effects associated with zero variance estimates and rerun the analyses (Kiernan, 2018). This is acceptable in the first scenario, where there is little variation. Under the second scenario, however, it is difficult to determine whether the issue is caused by the variance components or by the covariance. Alternatively, if interest lies only in the fixed effects, one can remove the positive definiteness constraints on the G matrix by using the ‘nobound’ option in SAS (West et al., 2014). However, this commonly produces negative variance estimates (i.e., a G matrix that is not positive semidefinite), which are difficult to interpret. Lastly, a more promising approach to dealing with nonpositive definiteness is to employ a matrix parameterization in the iteration process (Pinheiro & Bates, 1996). Matrix parameterization can reduce a constrained optimization problem (i.e., one in which G matrices are forced to be positive definite during iteration) to an unconstrained problem and ensures that G matrices are at least positive semidefinite throughout the entire estimation process (Demidenko, 2013; West et al., 2014). More specifically, the upper-triangular elements of the G matrix can be reparameterized in such a way that the resulting estimate must be positive semidefinite (McNeish & Bauer, 2020).

Next, we briefly describe a commonly used parameterization, namely the log-Cholesky parameterization, for unstructured G matrices and for diagonal G matrices (where the random effects are assumed to be independent). Let G denote a q × q positive semidefinite variance-covariance matrix. Because G is symmetric, it has only \( q(q+1)/2 \) unconstrained parameters when it is unstructured, or q when it is diagonal. We denote a minimal set of unconstrained parameters by a vector θ. The G matrix is represented by \( G=L^{T}L \), where \( L=L(\theta) \) is a q × q matrix. Any G matrix defined by this decomposition is positive semidefinite (Pinheiro & Bates, 1996). In the log-Cholesky parameterization, L is an upper triangular matrix, and θ consists of the logarithms of the diagonal elements of L and the upper off-diagonal elements. For example, if \( G=\begin{bmatrix}2&1\\1&2\end{bmatrix} \), its Cholesky decomposition is \( G=\begin{bmatrix}1.414&0\\0.707&1.225\end{bmatrix}\begin{bmatrix}1.414&0.707\\0&1.225\end{bmatrix} \), and the log-Cholesky parameterization of G is \( \theta=(\log 1.414,\ 0.707,\ \log 1.225)^{T} \). In models where the covariance is constrained to be 0, G becomes a diagonal matrix that can be parameterized by fewer elements of θ, namely the logarithms of the diagonal elements of L. For example, if \( G=\begin{bmatrix}9&0\\0&4\end{bmatrix} \), its Cholesky decomposition is \( G=\begin{bmatrix}3&0\\0&2\end{bmatrix}\begin{bmatrix}3&0\\0&2\end{bmatrix} \), and the parameterization of G is \( \theta=(\log 3,\ \log 2)^{T} \).
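The two worked examples can be reproduced with a short Python/NumPy sketch of the log-Cholesky map and its inverse. The function names are hypothetical, and the sketch ignores details a real optimizer would need (gradients, the REML objective itself). Note that `numpy.linalg.cholesky` returns a lower-triangular factor C with G = C Cᵀ, so the upper-triangular L used in the text is Cᵀ. Because the diagonal of L is rebuilt as exp(θ), which is always positive, every real θ maps back to a valid G; this is what lets an optimizer search over θ without constraints.

```python
import numpy as np


def to_log_cholesky(G, diagonal=False):
    """Map a positive definite G to unconstrained log-Cholesky parameters theta."""
    G = np.asarray(G, dtype=float)
    # numpy gives lower-triangular C with G = C @ C.T; L = C.T is upper
    # triangular and satisfies G = L.T @ L, matching the text.
    L = np.linalg.cholesky(G).T
    if diagonal:
        return np.log(np.diag(L))
    rows, cols = np.triu_indices(G.shape[0])  # upper triangle, row by row
    theta = L[rows, cols].copy()
    on_diag = rows == cols
    theta[on_diag] = np.log(theta[on_diag])   # log only the diagonal elements
    return theta


def from_log_cholesky(theta, q, diagonal=False):
    """Rebuild G = L.T @ L from theta; any real theta yields a valid G."""
    theta = np.asarray(theta, dtype=float)
    L = np.zeros((q, q))
    if diagonal:
        L[np.diag_indices(q)] = np.exp(theta)
    else:
        rows, cols = np.triu_indices(q)
        on_diag = rows == cols
        L[rows[on_diag], cols[on_diag]] = np.exp(theta[on_diag])
        L[rows[~on_diag], cols[~on_diag]] = theta[~on_diag]
    return L.T @ L


theta = to_log_cholesky([[2.0, 1.0], [1.0, 2.0]])
print(np.round(np.exp(theta[[0, 2]]), 3), round(float(theta[1]), 3))  # [1.414 1.225] 0.707
print(np.round(from_log_cholesky(theta, q=2), 6))                      # recovers G
print(to_log_cholesky([[9.0, 0.0], [0.0, 4.0]], diagonal=True))       # log 3, log 2
```

R's nlme package implements this idea through its `pdLogChol` and `pdDiag` classes; the sketch above is an independent illustration, not that implementation.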

It should be noted that matrix parameterization with a diagonal G matrix in some software (e.g., the nlme package in R) ensures positive definiteness, which is a stronger requirement than positive semidefiniteness. Thus, maximum likelihood estimates may not exist when the true variance component is 0. Demidenko (2013) showed that the estimated model was more likely to fail to converge when matrices were forced to be positive definite using matrix parameterization and concluded that the best way to cope with this issue is not to cope at all. Put another way, this view follows the principle that any solution is better than no solution, as when the model fails to converge; even negative variance estimates are informative, because they may reveal which random effect is problematic and can therefore be omitted.

In summary, there is controversy over whether and how to deal with nonpositive definiteness. The impact of using constrained versus unconstrained optimization on the performance of MLMs for SCEDs should be systematically examined. We expect the impact of nonpositive definiteness on the estimates of variance components to be trivial in the first scenario (i.e., when there is a lack of variation in the random effects), because most programs that implement constrained optimization set the final estimates of the variance components to 0 when the G matrix is not positive definite. In the second scenario (i.e., when the condition \( {\sigma}_{u0}^2{\sigma}_{u1}^2>{\sigma}_{u0u1}^2 \) is not satisfied), we expect constrained optimization to yield inadmissible correlation estimates between random effects (i.e., r < −1.0 or r > 1.0).

Estimation of between-case variance components

Several simulation studies have examined the performance of MLMs with REML in estimating the variance components in MBDs. In general, the estimates of between-case variance components were biased; however, the direction of the bias was not consistent across studies. Some studies found moderate to large positive biases in the estimates of the variance of the treatment effect (e.g., Ferron et al., 2009; Moeyaert et al., 2013a, 2013c), especially when the true variance component was small (e.g., \( {\sigma}_{u1}^2<0.5 \)). Other studies found small to moderate negative biases (Baek et al., 2020; Joo & Ferron, 2019; Moeyaert et al., 2017) when the variance component was moderate (\( {\sigma}_{u1}^2=0.5 \) or 2.0). For estimates of the level-1 error variance, readers are referred to simulation studies that focus on level-1 error structures (see Baek & Ferron, 2013, 2020; Joo et al., 2019). In summary, across different magnitudes of between-case variance, the estimates of between-case variance components are generally biased, which makes it difficult to judge the degree of heterogeneity in treatment effects among cases.

Because previous findings are inconsistent, it is necessary to replicate these simulation studies and improve the generalizability of the findings by considering a wider range of magnitudes for the variance components. In addition, previous studies based on REML with constrained optimization (e.g., the default optimization method in SAS Proc MIXED) may have eliminated nonpositive definite solutions, so the observed bias could be partly due to high rates of nonpositive definite G matrices, especially when variance components are small. Hence, it is important to re-examine the estimates using both constrained and unconstrained optimization methods. Last, previous studies adopted a diagonal covariance structure, but in practice this can lead to underfitted covariance structures (Moeyaert et al., 2016), as we elaborate in the following section. Therefore, there is a need to revisit the estimation of variance components with the basic model and REML, considering a wider range of between-case variance components, different optimization methods, and different covariance structures. We hope that this can serve as a starting point for clarifying the direction of the bias and the conditions under which REML estimates of between-case variance components are trustworthy.

Specification of covariance structures

In addition to nonpositive definiteness and the general bias in variance component estimates due to small sample sizes, misspecification of the covariance structure could also affect the estimates of between-case variance components. A common type of misspecification is to ignore the covariance when the true covariance is nonzero, which leads to an underfitted model. Previous studies in the context of general MLMs showed that underfitting the covariance structure could result in biased variance estimates and inflated type I error rates for tests of fixed effects (Barr et al., 2013; Hoffman, 2015). Conversely, another type of misspecification is to assume a full covariance structure when the random effects are uncorrelated, leading to an overfitted model. This could result in a loss of power to detect significant fixed effects, especially when the sample size is small (Matuschek et al., 2017). In SCEDs, a nonzero covariance indicates that the treatment effect is correlated with the baseline level, which is a common phenomenon (Moeyaert et al., 2016). However, previous studies that combined SCED data across cases and studies using MLMs typically assumed a diagonal covariance structure (Denis et al., 2011; Moeyaert et al., 2013b, 2014b; Van den Noortgate & Onghena, 2003a, 2007), which could lead to an underfitted covariance structure. On the other hand, overfitting is possible when treatment effects are unrelated to baseline levels. Moeyaert et al. (2016) evaluated the consequences of misspecifying the covariance structure (i.e., overfitting and underfitting) and found that it had no effect on estimation and inference for the treatment effect, and that the between-case variance estimates were unbiased under both correctly specified and misspecified covariance structures. However, the authors only considered conditions where the true variance components were large (i.e., \( {\sigma}_{u0}^2={\sigma}_{u1}^2=2 \) or 8). It is unknown whether these findings generalize to conditions where the true between-case variance is small.

Without theory or prior knowledge about the relationship between baseline levels and treatment effects among cases, empirical researchers may turn to a post hoc model selection procedure based on model fit to determine an optimal covariance structure. Because of the small sample sizes in SCEDs, the RLRT statistic commonly used to compare model fit does not follow a chi-square distribution. Likewise, information criteria (e.g., AIC and BIC) are not appropriate because they are equivalent to RLRTs at particular alpha levels (e.g., AIC is equivalent to an RLRT at alpha = .157 for one additional parameter) and thus inherit the problem of the regular RLRT when the sample size is small. Hence, a parametric bootstrap (PB) approach (see Davison & Hinkley, 1997) based on the RLRT was proposed to determine whether the covariance is statistically significantly different from 0. PB simulates the null distribution of the RLRT statistic, from which an approximate p value can be calculated. Specifically, the first step is to simulate B (e.g., B = 500) bootstrap samples from the null model (i.e., a reduced model without the tested covariance). Then, the difference between the deviances of the reduced and the full model is computed for each simulated data set, giving \( T^{*}=\left\{{t}_1,\dots, {t}_B\right\} \), where each \( t \) is an RLRT statistic. Last, the observed deviance difference \( t_{obs} \) from the empirical data is compared against the simulated null distribution \( T^{*} \), and the approximate p value is calculated as follows:

where \( n_{extreme} \) is the number of values in \( T^{*} \) that are equal to or greater than \( t_{obs} \).
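The bootstrap steps above can be sketched in Python as follows. This is a minimal illustration, not the study's R code (which is in Appendix C). It assumes the Monte Carlo form \( p=\left({n}_{extreme}+1\right)/\left(B+1\right) \) given by Davison and Hinkley (1997); if \( {n}_{extreme}/B \) is used instead, only the return line of `pb_p_value` changes. The callable `simulate_null_stat` is hypothetical: in a real analysis it would simulate a data set from the fitted reduced model, refit both models by REML, and return the deviance difference. Here a toy stand-in replaces that refitting step.

```python
import numpy as np


def pb_p_value(t_obs, t_star):
    """Approximate PB p value, assuming the (n_extreme + 1) / (B + 1) form.

    n_extreme counts bootstrap statistics in t_star that are equal to or
    greater than the observed statistic t_obs.
    """
    t_star = np.asarray(t_star, dtype=float)
    n_extreme = int(np.sum(t_star >= t_obs))
    return (n_extreme + 1) / (t_star.size + 1)


def parametric_bootstrap(t_obs, simulate_null_stat, B=500, seed=None):
    """Simulate T* = {t_1, ..., t_B} under the reduced model; return (p, T*).

    simulate_null_stat is a hypothetical callable: given a numpy Generator, it
    should simulate one data set from the fitted reduced model, refit the
    reduced and full models by REML, and return their deviance difference.
    """
    rng = np.random.default_rng(seed)
    t_star = np.array([simulate_null_stat(rng) for _ in range(B)], dtype=float)
    return pb_p_value(t_obs, t_star), t_star


# Toy stand-in for the refitting step, for demonstration only: it draws from a
# 50:50 mixture of a point mass at 0 and a chi-square(1) distribution.
def toy_null_stat(rng):
    return 0.0 if rng.random() < 0.5 else rng.chisquare(1)


p, t_star = parametric_bootstrap(t_obs=2.5, simulate_null_stat=toy_null_stat, B=500, seed=1)
print(f"approximate PB p value: {p:.3f}")
```

Adding 1 to the numerator and denominator keeps the approximate p value strictly above 0, which matters when B is only a few hundred.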

The significance level for the PB test should be chosen with caution. Within the context of model selection, we should not simply assume alpha = .05. Indeed, the significance level should be considered as the relative weight of model complexity and goodness of fit (Matuschek et al., 2017). When alpha = 0, an infinite penalty on model complexity is implied and we always choose the reduced model, irrespective of the evidence provided by the data. Conversely, when alpha = 1, an infinite penalty on lack of fit is implied and we always choose the more complex model. From this perspective, choosing alpha = .05 implies an overly strong penalty for model complexity. Following the practice of Matuschek et al. (2017), we chose alpha = .20 for the PB test to select an optimal covariance structure.
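To make the link between alpha and the complexity penalty concrete, the sketch below (an illustration, not code from the study) computes the alpha implied by AIC for one extra covariance parameter, reproducing the .157 figure mentioned earlier, and applies an alpha = .20 selection rule to a PB p value. The function names are hypothetical, and the chi-square reference in `aic_implied_alpha` deliberately ignores the small-sample problem that motivates the PB test.

```python
from scipy.stats import chi2


def aic_implied_alpha(extra_params=1):
    """Alpha at which a likelihood ratio test agrees with AIC.

    AIC prefers the fuller model when the deviance difference exceeds
    2 * extra_params, so the implied alpha is P(chi2_k > 2k).
    """
    return chi2.sf(2 * extra_params, df=extra_params)


def select_g_structure(pb_p, alpha=0.20):
    """Keep the covariance (unstructured G) only if the PB test rejects at alpha."""
    return "unstructured" if pb_p < alpha else "diagonal"


print(round(aic_implied_alpha(1), 3))         # 0.157
print(select_g_structure(0.12))               # unstructured (alpha = .20)
print(select_g_structure(0.12, alpha=0.05))   # diagonal (alpha = .05)
```

The same PB p value can lead to different G structures depending on alpha, which is why the choice of .20 rather than .05 is a substantive modeling decision.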

Significance tests for variance components

There are two scenarios in which significance tests for variance components are conducted. In the first scenario, the diagonal G matrix is adopted (either assumed or chosen by the model selection procedure). In this case, the null and alternative hypotheses for the variance of the baseline level are

\[ {H}_0:{\sigma}_{u0}^2=0\quad \mathrm{vs}.\quad {H}_1:{\sigma}_{u0}^2>0, \]

and analogously for the variance of the treatment effect, \( {\sigma}_{u1}^2 \).

We obtain the difference in −2 log(restricted likelihood) between two nested models, that is, the constrained model in which the tested parameter is fixed at 0 and the reference model in which the tested parameter is freely estimated. Based on asymptotic theory, the regular restricted likelihood ratio test assumes that this difference follows a \( {\chi}^2 \) distribution with degrees of freedom equal to the difference in the number of covariance parameters. However, the test of a variance component is nonstandard because the parameter value under the null hypothesis lies on the boundary of the parameter space. Stram and Lee (1994) found that the regular likelihood ratio test is overly conservative when the null value of the between-case variance component is 0, so inference based on the regular restricted likelihood ratio test is not accurate in this case.

More accurate sampling distributions of the RLRT statistic have been proposed to deal with this boundary issue when testing variance components in MLMs. Self and Liang (1987) and Stram and Lee (1994) showed that the RLRT for the presence of a single variance component has an asymptotic 0.5 \( {\chi}_0^2 \) : 0.5 \( {\chi}_1^2 \) mixture distribution under i.i.d. assumptions. We denote this test as \( {\mathrm{RLRT}}_{SL} \), where \( {\chi}_0^2 \) denotes a probability mass at 0 and \( {\chi}_1^2 \) is the chi-square distribution with df = 1. The p value for the \( {\mathrm{RLRT}}_{SL} \) is calculated as

\[ p=0.5\,P\left({\chi}_1^2\ge {\chi}^2\right)\quad \left({\chi}^2>0\right), \]

where \( {\chi}^2 \) is the difference in −2 log(restricted likelihood) between the nested model and the reference model.
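The mixture p value can be sketched with SciPy as shown below; the function names are illustrative. Because the \( {\chi}_0^2 \) component is a point mass at 0, it contributes nothing for a positive statistic, so the mixture p value is exactly half the naive \( {\chi}_1^2 \) p value. This is why the regular test is overly conservative at the boundary.

```python
from scipy.stats import chi2


def rlrt_sl_p_one(stat):
    """p value for one variance component under a 0.5 chi2_0 : 0.5 chi2_1 mixture.

    The chi2_0 part is a point mass at 0, so for a positive statistic the
    p value is half the chi2_1 upper-tail area.
    """
    if stat <= 0:
        return 1.0
    return 0.5 * chi2.sf(stat, df=1)


def naive_p_one(stat):
    """Regular RLRT p value that (incorrectly) uses a chi2_1 reference."""
    return chi2.sf(max(stat, 0.0), df=1)


for stat in (1.0, 2.71, 3.84):
    print(f"stat={stat:5.2f}  naive p={naive_p_one(stat):.4f}  "
          f"RLRT_SL p={rlrt_sl_p_one(stat):.4f}")
```

For example, a deviance difference of 3.84 is borderline under the naive test (p near .05) but clearly significant under the mixture (p near .025).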

The second scenario for testing variance components arises when the unstructured G matrix is adopted (either assumed or chosen by the model selection procedure). In this case, to formally obtain the p value, we need to test the variance and covariance simultaneously (i.e., both the variance and the covariance equal 0 under the null hypothesis), because it is impossible to specify a reduced model in which the covariance is nonzero but the tested variance is 0. Similar to the case of testing a single parameter, the \( {\mathrm{RLRT}}_{SL} \) statistic has an asymptotic 0.5 \( {\chi}_1^2 \) : 0.5 \( {\chi}_2^2 \) mixture distribution under i.i.d. assumptions, where \( {\chi}_1^2 \) and \( {\chi}_2^2 \) are chi-square distributions with df = 1 and 2, respectively. We calculate the p value for the \( {\mathrm{RLRT}}_{SL} \) as

\[ p=0.5\,P\left({\chi}_1^2\ge {\chi}^2\right)+0.5\,P\left({\chi}_2^2\ge {\chi}^2\right), \]

where \( {\chi}^2 \) is the difference in −2 log(restricted likelihood) between the nested model and the reference model. In addition, when the i.i.d. assumption is violated, the generalized least squares (GLS) transformation (Wiencierz et al., 2011) can be applied to the \( {\mathrm{RLRT}}_{SL} \) to account for autocorrelated errors in the context of SCEDs. Details of the transformation are presented in Appendix B.
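A sketch of the two-parameter mixture p value follows; the function name is illustrative. It assumes i.i.d. errors and does not implement the GLS transformation described in Appendix B. For comparison, the naive \( {\chi}_2^2 \) p value is also printed.

```python
from scipy.stats import chi2


def rlrt_sl_p_two(stat):
    """p value for jointly testing a variance and its covariance.

    Reference distribution: 0.5 chi2_1 + 0.5 chi2_2 mixture.
    """
    if stat <= 0:
        return 1.0
    return 0.5 * chi2.sf(stat, df=1) + 0.5 * chi2.sf(stat, df=2)


stat = 5.14
print(f"mixture p = {rlrt_sl_p_two(stat):.4f}")       # about 0.0500
print(f"naive chi2_2 p = {chi2.sf(stat, df=2):.4f}")  # about 0.0765
```

A statistic of about 5.14 sits near the .05 critical value of this mixture, whereas the naive \( {\chi}_2^2 \) reference would not reject at .05.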

Confidence intervals for variance components

It is well known that the Wald-type confidence interval (CI) performs poorly for small data sets and for parameters such as variance components, which have skewed or bounded sampling distributions (SAS Institute, 2017). One alternative that can be applied in the MLM framework is the Satterthwaite approximation (Satterthwaite, 1946), which constructs confidence intervals for parameters with a lower bound of 0. The bounds of the CI are calculated as

\[ \frac{v{\hat{\sigma}}^2}{\chi_{v,1-\alpha /2}^2}\le {\sigma}^2\le \frac{v{\hat{\sigma}}^2}{\chi_{v,\alpha /2}^2}, \]

where the degrees of freedom are \( v=2{Z}^2 \), Z is the Wald statistic \( {\hat{\sigma}}^2/ SE\left({\hat{\sigma}}^2\right) \), and the denominators are the 1 − α/2 and α/2 (α = .05 by default) quantiles of the \( {\chi}^2 \) distribution with v degrees of freedom. The small-sample properties of this approximation in the context of SCEDs with autocorrelated errors have not been examined so far.
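The Satterthwaite interval above can be sketched as follows. The function name is illustrative, and the inputs are an estimated variance and its standard error as reported by the fitting software. The interval is asymmetric and always stays above 0, unlike a Wald interval.

```python
from scipy.stats import chi2


def satterthwaite_ci(sigma2_hat, se, alpha=0.05):
    """Satterthwaite-type CI for a variance component.

    v = 2 * Z**2 with Z = sigma2_hat / se; the bounds divide v * sigma2_hat
    by the upper (1 - alpha/2) and lower (alpha/2) chi-square(v) quantiles.
    """
    if sigma2_hat <= 0 or se <= 0:
        raise ValueError("requires a positive estimate and standard error")
    z = sigma2_hat / se
    v = 2.0 * z ** 2
    lower = v * sigma2_hat / chi2.ppf(1 - alpha / 2, df=v)
    upper = v * sigma2_hat / chi2.ppf(alpha / 2, df=v)
    return lower, upper


lo, hi = satterthwaite_ci(1.0, 0.5)  # Z = 2, so v = 8
print(f"95% CI: ({lo:.3f}, {hi:.3f})")
```

With an estimate of 1.0 and a standard error of 0.5, the interval is roughly (0.46, 3.67). The corresponding Wald interval, 1.0 ± 1.96 × 0.5, would reach nearly to 0 and be symmetric. An estimate exactly at the boundary (0) gives no usable interval here, which is one reason small variance components are hard to bracket.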

Simulation conditions

To examine the performance of MLMs with different optimization methods and covariance structures, as well as the performance of the RLRT-based procedure for the between-case variance components, we conducted two Monte Carlo simulation studies. In Simulation Study 1, we generated data using Eq. (1) with zero covariance between the baseline level and the treatment effect, and varied the following design factors: series length I, number of cases J, treatment effect (\( {\gamma}_{10} \)), between-case variances (\( {\sigma}_{u0}^2 \) and \( {\sigma}_{u1}^2 \)), and autocorrelation (ρ). In Simulation Study 2, an additional design factor was varied, namely, the correlation between the baseline level and the treatment effect (r).
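Eq. (1) is not reproduced in this section. The sketch below therefore assumes it is the basic two-level model for a multiple-baseline design, \( {y}_{ij}={\gamma}_{00}+{\gamma}_{10}{D}_{ij}+{u}_{0j}+{u}_{1j}{D}_{ij}+{e}_{ij} \), where \( {D}_{ij} \) is a phase dummy (0 = baseline, 1 = treatment) and the level 1 errors follow a first-order autoregressive process. Treating \( {\sigma}_e^2 \) as the stationary variance of that process is also an assumption. The helper `simulate_mbd` and the start points in the usage line are hypothetical (the study's start points are listed in Table 1).

```python
import numpy as np


def simulate_mbd(I, J, start_points, gamma00=0.0, gamma10=2.0,
                 var_u0=1.0, var_u1=1.0, r=0.0, rho=0.2, var_e=1.0, seed=None):
    """Simulate one multiple-baseline data set (hypothetical helper).

    start_points[j] is the 1-based session at which case j enters treatment.
    Returns a dict of flat arrays: case, time, phase, y.
    """
    rng = np.random.default_rng(seed)
    # Between-case covariance implied by the correlation r.
    cov = r * np.sqrt(var_u0 * var_u1)
    G = np.array([[var_u0, cov], [cov, var_u1]])
    u = rng.multivariate_normal(np.zeros(2), G, size=J)  # rows: (u0j, u1j)

    innov_sd = np.sqrt(var_e * (1.0 - rho ** 2))  # keeps Var(e_ij) = var_e
    case, time, phase, y = [], [], [], []
    for j in range(J):
        t = np.arange(1, I + 1)
        D = (t >= start_points[j]).astype(float)
        # AR(1) level 1 errors, started from the stationary distribution.
        e = np.empty(I)
        e[0] = rng.normal(0.0, np.sqrt(var_e))
        for i in range(1, I):
            e[i] = rho * e[i - 1] + rng.normal(0.0, innov_sd)
        y_j = gamma00 + u[j, 0] + (gamma10 + u[j, 1]) * D + e
        case.append(np.full(I, j))
        time.append(t)
        phase.append(D)
        y.append(y_j)
    columns = {"case": case, "time": time, "phase": phase, "y": y}
    return {name: np.concatenate(parts) for name, parts in columns.items()}


# Hypothetical staggered start points for I = 10 sessions and J = 4 cases.
data = simulate_mbd(I=10, J=4, start_points=[4, 5, 6, 7], r=-0.3, rho=0.4, seed=2024)
print(data["y"].shape)  # (40,)
```

Generating both random effects from one bivariate normal draw keeps the zero-covariance condition of Study 1 (r = 0) and the negative-correlation conditions of Study 2 in the same code path.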

The number of cases J took a value of 4 or 8, and the series length took a value of 10 or 20. These values reflect characteristics of SCED research based on reviews of single-case studies (Pustejovsky et al., 2019; Shadish & Sullivan, 2011) and are consistent with previous simulation studies (Baek et al., 2020; Ferron et al., 2009; Moeyaert et al., 2017). Because we focused on the MBD across cases, the start of the treatment was staggered across cases as shown in Table 1. These starting points were chosen so that both the baseline and treatment phases contained a sufficient number of measurements. For all conditions, the intercept \( {\gamma}_{00} \) was set to 0. To identify realistic values for treatment effects and variance-covariance components in SCED data, we reviewed five meta-analyses of single-case studies (Alen et al., 2009; Denis et al., 2011; Kokina & Kern, 2010; Shogren et al., 2004; Wang et al., 2011) and a reanalysis with MLMs that quantified the treatment effects and variance components based on the data from these five meta-analyses (Moeyaert et al., 2017). According to the reanalysis, the median standardized treatment effect size was 2.0. Hence, we set the treatment effect (\( {\gamma}_{10} \)) to either 2.0 or 0. The reanalysis results for the variance components of the baseline level (\( {\sigma}_{u0}^2 \)), treatment effect (\( {\sigma}_{u1}^2 \)), and within-case residuals (\( {\sigma}_e^2 \)) are provided in Table 2 (for full results, see Moeyaert et al., 2017). The between-case variance component estimates covered a wide range, from .27 to 7.96, across the five meta-analyses, which reviewed similar numbers of studies. The relatively large standard errors also indicate uncertainty in the point estimates of each meta-analysis.
Thus, to reflect the large range and uncertainty of the between-case variance component estimates, \( {\sigma}_{u0}^2 \) and \( {\sigma}_{u1}^2 \) were given a value of 0, .3, .5, 1.0, 2.0, 4.0, or 8.0 in Simulation Study 1 (zero covariance) and .3, .5, 1.0, 2.0, 4.0, or 8.0 in Simulation Study 2 (nonzero covariance). This setting also covers the range of adopted values for between-case variance components in previous simulation studies (Baek et al., 2020 ; Baek & Ferron, 2020 ; Ferron et al., 2009 ; Hembry et al., 2015 ; Moeyaert et al., 2017 ; Moeyaert et al., 2013a , 2013c , 2016 ).

In Simulation Study 1, the covariance \( {\sigma}_{u0u1} \) was set to 0. In Simulation Study 2, the covariance \( {\sigma}_{u0u1}=r\,{\sigma}_{u0}{\sigma}_{u1} \) was generated based on the negative correlations between the baseline level and treatment effect found in the above meta-analyses (Alen et al., 2009; Denis et al., 2011; Kokina & Kern, 2010; Shogren et al., 2004; Wang et al., 2011) and the MLM reanalysis (Moeyaert et al., 2016). Specifically, following Moeyaert et al. (2016), we used the lower and upper bounds of the correlation (i.e., r = −.3 or −.7).

For the within-case variance, the reanalysis estimates were quite similar to each other, ranging from 1.000 to 1.146 with small standard errors. Thus, the within-case error was generated with a variance of 1.0 and assumed homogeneous across phases in both simulation studies. Regarding autocorrelation among repeated measures in SCEDs, Shadish and Sullivan (2011) found that the average estimate for single-case studies with an MBD was .32. Considering the typical autocorrelation values found in behavioral data and used in previous simulation studies (Ferron et al., 2009; Shadish & Sullivan, 2011; Sideridis & Greenwood, 1997), the autocorrelation ρ was set to .2, .4, or .6 in both simulation studies. The design factors and conditions are summarized in Table 3. Crossing all the design factors yielded 168 conditions in Simulation Study 1 and 288 conditions in Simulation Study 2. In each condition, 2000 independent data sets (i.e., replications) were simulated.
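The condition counts follow from fully crossing the factor levels listed above. The sketch below checks this arithmetic, assuming (as the counts imply) that \( {\sigma}_{u0}^2 \) and \( {\sigma}_{u1}^2 \) always take the same value within a condition; the variable names are illustrative.

```python
from itertools import product

series_length = [10, 20]
n_cases = [4, 8]
treatment_effect = [0.0, 2.0]
autocorrelation = [0.2, 0.4, 0.6]
# One shared value for the baseline-level and treatment-effect variances.
var_study1 = [0.0, 0.3, 0.5, 1.0, 2.0, 4.0, 8.0]
var_study2 = [0.3, 0.5, 1.0, 2.0, 4.0, 8.0]
correlation = [-0.3, -0.7]

study1 = list(product(series_length, n_cases, treatment_effect,
                      var_study1, autocorrelation))
study2 = list(product(series_length, n_cases, treatment_effect,
                      var_study2, autocorrelation, correlation))
print(len(study1), len(study2))  # 168 288
```

Each tuple corresponds to one condition, which would then be replicated 2000 times.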

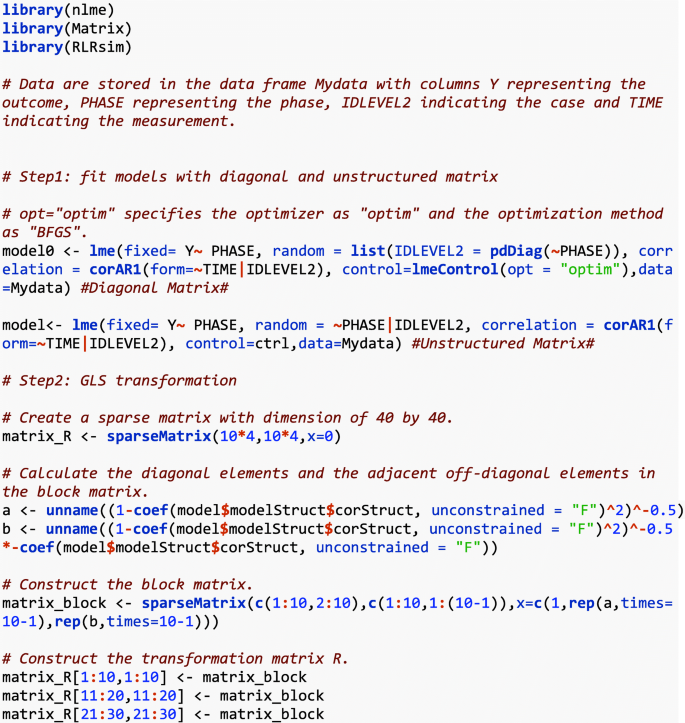

In both simulation studies, we analyzed the data with Eq. (1) using various G matrices and REML estimation with either constrained or unconstrained optimization. Specifically, we considered five combinations: unconstrained optimization with a diagonal matrix (UD), constrained optimization with a diagonal matrix (CD), unconstrained optimization with an unstructured matrix (UU), constrained optimization with an unstructured matrix (CU), and unconstrained optimization with the model selection procedure (UM). The UM was implemented through the parametric bootstrap with the GLS transformation. Constrained optimization with the model selection procedure was not considered because of its low convergence rate with a small number of cases (J = 4). The unconstrained optimization was implemented with the lme() function in the nlme package in R 4.0.3 (Pinheiro et al., 2019), and the constrained optimization was implemented in SAS 9.4 using Proc MIXED. The Kenward-Roger method was used to estimate the degrees of freedom and standard error for the treatment effect (Kenward & Roger, 1997). R code for the estimation with the model selection procedure, GLS transformation, parametric bootstrap, and \( {\mathrm{RLRT}}_{SL} \) is provided in Appendix C. Both the R and the SAS code for the simulation are available from the Open Science Framework project (see the link in the open practices statement).

Performance measures

Outcomes examined included the convergence rate and the positive definiteness rate, as well as the relative bias, mean squared error (MSE), and coverage rate of the 95% confidence intervals for the estimated parameters. Outcomes were calculated based on converged replications, except that inadmissible correlation estimates caused by nonpositive definiteness were also excluded when computing the bias of correlation estimates. The relative bias is the raw (signed) bias, that is, the mean estimate minus the population true value, divided by the population true value Footnote 1. Bias in a parameter estimate was considered acceptable if the absolute relative bias was less than 5% (Carsey & Harden, 2013). The MSE was calculated as the expected value of the squared differences between the estimates and the population parameter. The coverage rate was calculated as the proportion of 95% confidence intervals that contained the true population value. To account for simulation error caused by the finite number of replications, we computed bounds around the coverage rate, which follows a binomial distribution: \( p\pm 1.96\times \sqrt{p\left(1-p\right)/r} \), where p is the expected probability (0.95) and r is the number of replications (2000). Therefore, we considered coverage rates between 94.0% and 96.0% (\( 0.95\pm 1.96\times \sqrt{0.95\left(1-0.95\right)/2000} \)) to be acceptable.
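The performance measures above can be sketched as small NumPy helpers. They are illustrative and not taken from the study's code. Relative bias is computed as the signed bias over the true value, so it is undefined when the true value is 0. The last line reproduces the 94.0% to 96.0% acceptance band for 2000 replications.

```python
import numpy as np


def relative_bias(estimates, true_value):
    """Signed bias divided by the true value (undefined when true_value == 0)."""
    return (np.mean(estimates) - true_value) / true_value


def mse(estimates, true_value):
    """Mean squared difference between the estimates and the true value."""
    return np.mean((np.asarray(estimates, dtype=float) - true_value) ** 2)


def coverage(lower, upper, true_value):
    """Proportion of intervals [lower, upper] that contain the true value."""
    lower, upper = np.asarray(lower), np.asarray(upper)
    return np.mean((lower <= true_value) & (true_value <= upper))


def mc_bounds(p=0.95, reps=2000, z=1.96):
    """Monte Carlo acceptance band p +/- z * sqrt(p * (1 - p) / reps)."""
    half = z * np.sqrt(p * (1 - p) / reps)
    return p - half, p + half


print([round(float(b), 4) for b in mc_bounds()])  # [0.9404, 0.9596]
```

An estimator with |relative_bias| < 0.05 would count as acceptable under the 5% criterion.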

To evaluate the performance of the \( {\mathrm{RLRT}}_{SL} \), empirical type I error rates and power were calculated. The empirical type I error rate was computed as the proportion of replications in which the true variance component was 0 but the p value of the test was less than .05 (i.e., the nominal α level). Empirical power was computed as the proportion of replications in which the true variance component was nonzero and the p value of the test was less than .05. With 2000 simulated data sets, we expected the empirical type I error rate to range from .04 to .06 (\( .05\pm 1.96\times \sqrt{0.05\left(1-0.05\right)/2000} \)).
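Both quantities are rejection rates over replications; only the true value of the variance component differs. The illustrative sketch below computes that rate and checks a type I error rate against the .05 ± 1.96 × √(.05 × .95 / 2000) band. The demonstration uses uniform p values, which is what a valid test produces under the null hypothesis, rather than output from the study.

```python
import numpy as np


def rejection_rate(p_values, alpha=0.05):
    """Share of replications whose p value falls below alpha.

    With a true variance of 0 this is the empirical type I error rate;
    with a nonzero true variance it is the empirical power.
    """
    return float(np.mean(np.asarray(p_values) < alpha))


def within_band(rate, nominal=0.05, reps=2000, z=1.96):
    """Check a rate against nominal +/- z * sqrt(nominal * (1 - nominal) / reps)."""
    half = z * np.sqrt(nominal * (1 - nominal) / reps)
    return bool(nominal - half <= rate <= nominal + half)


rng = np.random.default_rng(0)
null_p = rng.uniform(size=2000)  # p values of a valid test under H0 are Uniform(0, 1)
rate = rejection_rate(null_p)
print(rate, within_band(rate))
```

An overly conservative test would push the rate below the band, and an inflated one above it.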

We expected the positive definiteness rate for unconstrained optimization to be significantly higher than that for constrained optimization in both studies. When the diagonal matrix is correctly specified (i.e., models specified with a diagonal matrix in Study 1), only the first scenario of nonpositive definiteness can occur, so we expected differences in the variance component estimates between constrained and unconstrained optimization to be small. With an unstructured matrix, however, correlation estimates between random effects are likely to be inadmissible (|r| > 1) under constrained optimization, leading to more nonpositive definiteness than under unconstrained optimization in both studies. We also expected that misspecification of the covariance structure would bias the estimates of between-case variance components and that the model selection procedure would mitigate these biases.
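A hypothetical helper for flagging nonpositive definite estimates of a 2 × 2 G matrix is sketched below. It encodes the two routes to nonpositive definiteness as we read them from this section. The first is a variance estimate at (or below) 0, which is the only route with a diagonal G. The second is an inadmissible correlation with |r| > 1, which is possible with an unstructured G. A Cholesky factorization serves as the final numerical check.

```python
import numpy as np


def classify_g(var_u0, var_u1, cov_u01=0.0, tol=1e-10):
    """Classify an estimated 2x2 G matrix (illustrative helper).

    Returns "zero variance", "inadmissible correlation" (|r| > 1),
    "not positive definite" (e.g., |r| exactly 1), or "positive definite".
    """
    if var_u0 <= tol or var_u1 <= tol:
        return "zero variance"
    r = cov_u01 / np.sqrt(var_u0 * var_u1)
    if abs(r) > 1:
        return "inadmissible correlation"
    G = np.array([[var_u0, cov_u01], [cov_u01, var_u1]])
    # Cholesky succeeds only for a (numerically) positive definite matrix.
    try:
        np.linalg.cholesky(G)
    except np.linalg.LinAlgError:
        return "not positive definite"
    return "positive definite"


print(classify_g(1.0, 2.0, 0.5))  # positive definite
print(classify_g(0.0, 1.0))       # zero variance
print(classify_g(1.0, 1.0, 1.2))  # inadmissible correlation
```

Counting the share of replications labelled "positive definite" gives the positive definiteness rate used as an outcome.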

In each simulation study, we first compared the combinations of covariance structures and optimization methods (i.e., the five model specifications UD, CD, UU, CU, and UM) in terms of the model convergence rate and the positive definiteness rate of the estimated G matrix. We then analyzed relative bias, coverage rate, and MSE for the estimates of between-case variance components. We further investigated the bias/relative bias of the estimated covariance and correlation between random effects, and the impact of nonpositive definiteness on these estimates. Last, we examined the type I error rate and power of the RLRT-based procedure for testing between-case variance components. The results for the average baseline level, average treatment effect, level 1 residual variance, and autocorrelation are not the primary focus of this study and are thus provided in the supplemental material (see Table S1 for Study 1 and Table S2 for Study 2).

To study the impact of the design factors on the above performance outcomes, we conducted a series of ANOVAs. For the positive definiteness rate and the performance outcomes for the estimates of between-case variances and covariances, we conducted a mixed ANOVA with the between-subject design factors of series length, number of cases, treatment effect, between-case variance, correlation (Study 2 only), and autocorrelation, and the within-subject factor of model specification. For the type I error rate and power in Study 1, we investigated whether they depended on the design factors. Because the covariances generated in Study 2 were nonzero, which implies nonzero variances, we investigated only whether power depended on the design factors for each procedure in Study 2. Main effects and two-way interaction effects were estimated. We also calculated η² to determine whether effect sizes were small (.01), medium (.06), or large (.14) based on the benchmarks given by Cohen (1988). An overview of η² for the between-case variance components is shown in supplemental Tables S3 to S6, and for the covariance and correlation in supplemental Table S7. In the following sections, we focus on the most meaningful findings, namely, the impact of model specification on the relative bias of between-case variance components and the type I error rate and power of statistical inferences. To avoid discussing trivial effects, we primarily consider design factors whose main effects and/or associated interaction effects have large effect sizes (η² ≥ .14). The results presented in the following tables and figures are broken down by these high-impact factors. All results highlighted in the results section were statistically significant at the .05 alpha level (in fact, p < .001).
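The effect-size screening can be sketched as follows. This assumes classical η² (the effect's sum of squares divided by the total sum of squares), since this section does not say whether classical or partial η² was used. The sums of squares in the usage line are hypothetical.

```python
def eta_squared(ss_effect, ss_total):
    """Classical eta squared: share of the total sum of squares due to an effect."""
    return ss_effect / ss_total


def cohen_label(eta2):
    """Cohen's (1988) benchmarks: .01 small, .06 medium, .14 large."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "trivial"


print(cohen_label(eta_squared(36.0, 100.0)))  # large
```

Under this rule, only effects labelled "large" (η² ≥ .14) would be discussed in detail.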

Convergence rates

For the convergence rate, most of the variability was associated with the model specification (Study 1: F(4, 624) = 646.71, η² = .36; Study 2: F(4, 1104) = 1126.55, η² = .36) and its interaction with the number of cases (Study 1: F(4, 624) = 489.84, η² = .27; Study 2: F(4, 1104) = 936.44, η² = .30). As shown in Table 4, convergence rates were high (above 98%) under all conditions except when the unstructured matrix was estimated with constrained optimization (CU) with four cases (convergence rate = 81.12% and 83.30% for Studies 1 and 2, respectively).

Positive definiteness

For the positive definiteness rate, most of the variability was associated with the model specification (Study 1: F(4, 624) = 5246.23, η² = .46; Study 2: F(4, 1104) = 10330.90, η² = .46), the between-case variance (Study 1: F(6, 117) = 4429.57, η² = .18; Study 2: F(5, 232) = 2755.53, η² = .16), and their interaction (Study 1: F(24, 624) = 488.21, η² = .26; Study 2: F(20, 1104) = 935.01, η² = .21). As shown in Table 5, unconstrained optimization with a diagonal matrix (UD) had 100% positive definiteness rates across all conditions. For unconstrained optimization with an unstructured matrix (UU) or the model selection procedure (UM), positive definiteness rates were consistently high (above 95%) across levels of the between-case variance. In contrast, with constrained optimization (i.e., CD and CU), positive definiteness rates decreased dramatically as the between-case variance became small; on average, the rate was less than 40% when the variance was small (< .3).

Between-case variance components

Relative bias.

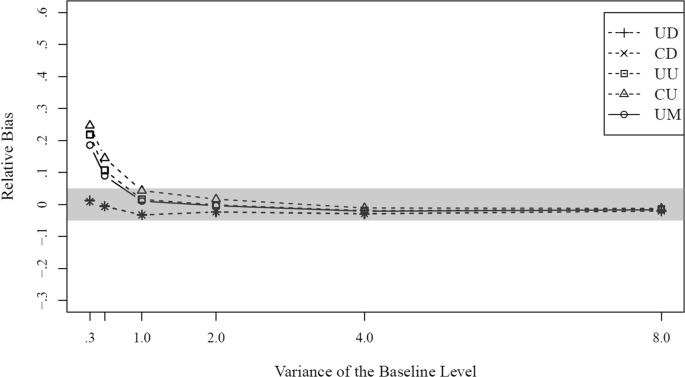

In Study 1 with zero covariance, the relative bias of the variance in the baseline level was mostly associated with the size of the variance in the baseline level (F(5, 99) = 120.94, η² = .32), model specification (F(4, 532) = 359.86, η² = .13), and their interaction (F(20, 532) = 69.77, η² = .13). As illustrated in Fig. 1, overfitting the G matrix (i.e., UU and CU) overestimated the variance of the baseline level when the variance component was small (i.e., < 0.5). Although UD and CD performed better than the other specifications when the variance was small, the differences diminished as the variance component increased. As expected, the model selection procedure (UM) slightly mitigated the bias caused by misspecification.

Relative bias in the baseline level variation for different model specifications as a function of variance in simulation Study 1 (zero covariance). Note. UD = unconstrained and diagonal; CD = constrained and diagonal; UU = unconstrained and unstructured; CU = constrained and unstructured; UM = unconstrained and model selection

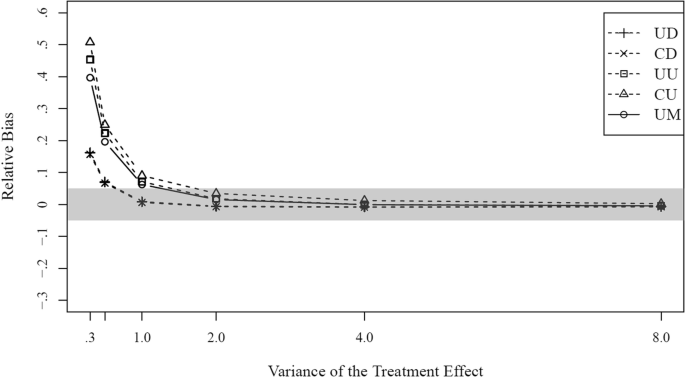

Similar results were found for the variance of the treatment effect. The size of the variance in the treatment effect (F(5, 99) = 572.82, η² = .46) had a statistically significant and large impact on the relative bias, whereas model specification (F(4, 532) = 216.58, η² = .07) and its interaction with the variance (F(20, 532) = 51.21, η² = .08) had only statistically significant medium effects. Although the pattern was similar to that for the variance of the baseline level, one main difference was that when the variance of the treatment effect was small (< 0.5), it was overestimated regardless of model specification (Fig. 2).

Relative bias in the treatment effect variation for different model specifications as a function of variance in simulation Study 1 (zero covariance). Note. UD = unconstrained and diagonal; CD = constrained and diagonal; UU = unconstrained and unstructured; CU = constrained and unstructured; UM = unconstrained and model selection

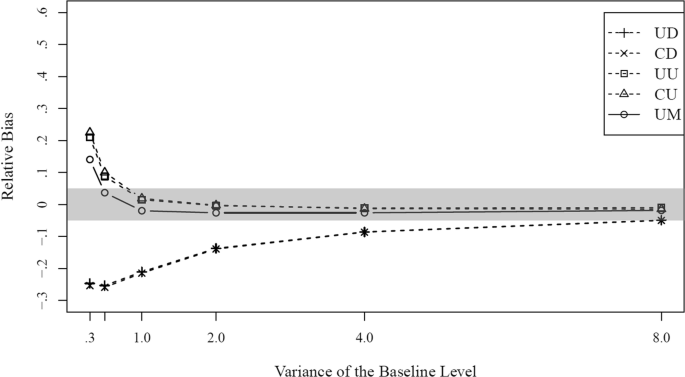

In Study 2 with nonzero covariance, the relative bias of the variance in the baseline level was largely associated with the model specification (F(4, 1104) = 4211.35, η² = .45) and its interaction with the variance in the baseline level (F(20, 1104) = 424.35, η² = .23). As illustrated in Fig. 3, ignoring the covariance produced negatively biased estimates of the intercept variance when the variance was 4 or less, whereas specifying an unstructured covariance matrix (i.e., UU and CU) produced positively biased estimates of the intercept variance when the variance was 0.5 or less. As expected, the model selection procedure (UM) performed best, with acceptable relative biases for all sizes of variance except the smallest (0.3).

Relative bias in the baseline level variation for different model specifications as a function of variance in simulation Study 2 (nonzero covariance). Note. UD = unconstrained and diagonal; CD = constrained and diagonal; UU = unconstrained and unstructured; CU = constrained and unstructured; UM = unconstrained and model selection

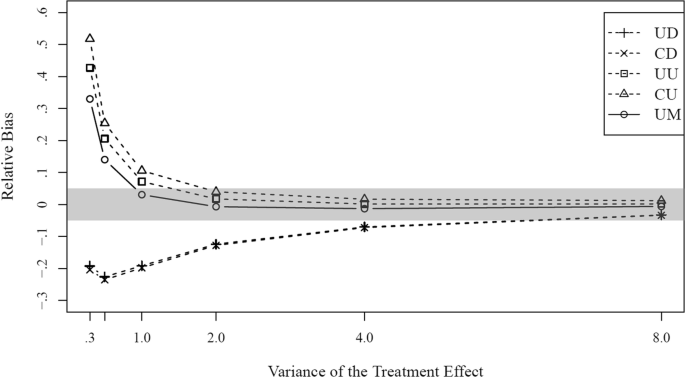

Similar patterns were found for the variance of the treatment effect in Study 2. The model specification (F(4, 1104) = 2248.08, η² = .33) and its interaction with the variance in the treatment effect (F(20, 1104) = 283.58, η² = .21) had statistically significant and large effects on the relative bias. However, accurate estimates appeared harder to obtain for the variance of the treatment effect than for that of the baseline level. As illustrated in Fig. 4, the variance of the treatment effect had to be at least 1.0 for the relative biases to fall within the acceptable level, even with the best-performing method (i.e., the model selection procedure, UM). Table 6 shows the relative biases under various conditions based on the model selection procedure Footnote 2.

Relative bias in the treatment effect variation for different model specifications as a function of variance in simulation Study 2 (nonzero covariance). Note. UD = unconstrained and diagonal; CD = constrained and diagonal; UU = unconstrained and unstructured; CU = constrained and unstructured; UM = unconstrained and model selection

Coverage rates and MSE

The coverage rate for the variance of the baseline level in both studies was largely associated with the amount of variance (Study 1: F(5, 99) = 496.51, η² = .60; Study 2: F(5, 232) = 701.78, η² = .42). As the variance increased, the average coverage rates approached the nominal level of .95. Model specification had a small to moderate effect (Study 1: F(4, 532) = 263.43, η² = .02; Study 2: F(4, 1104) = 1319.49, η² = .12). The average coverage rates for all model specifications were close to the nominal level (Study 1: range of .943 to .954; Study 2: range of .945 to .9). For the model selection procedure, the coverage rate was close to the nominal level when the variance in the baseline level was larger than 1.0.

Similar patterns were found for the coverage rate of the variance of the treatment effect in both studies. The most influential factor was the size of the variance (Study 1: F(5, 99) = 1183.88, η² = .74; Study 2: F(5, 232) = 2086.93, η² = .56). As the variance increased, coverage rates also approached the nominal level of .95. The effect of model specification was small to moderate (Study 1: F(4, 532) = 177.16, η² = .01; Study 2: F(4, 1104) = 1115.49, η² = .09). The average coverage rates for the different model specifications were slightly below or close to the nominal level (Study 1: range of .926 to .941; Study 2: range of .926 to .959). For the model selection procedure (UM), the coverage rate was close to the nominal level when the variance in the treatment effect was larger than 1.0.

MSE is useful for comparing the efficiency of estimators across methods. In both studies, MSE was mostly associated with the between-case variance (η² in the range of .7931 to .8117), because MSE inherently depends on the magnitude of the true between-case variance. Model specification had statistically significant but trivial effects on MSE (η² in the range of .0002 to .0005), although constrained optimization with an unstructured matrix (CU) showed a slight disadvantage. The average MSE for each specification is provided in Table 7.

Covariance and correlation

The bias of covariance estimates was largely associated with the number of cases (Study 1: F(1, 117) = 100.60, η² = .24; Study 2: F(1, 232) = 99.47, η² = .19). In addition, the between-case variance had a medium to large effect on the biases (Study 1: F(6, 117) = 11.89, η² = .17; Study 2: F(5, 232) = 100.60, η² = .09). Overall, covariance estimates tended to be negatively biased in Study 1 (average bias = −.052) and in Study 2 (average bias = −.032; average relative bias = −.151). As shown in Table 8, the magnitude of the biases/relative biases decreased as the number of cases and the size of the between-case variance increased.

As expected, correlation estimates under constrained optimization were out of bounds (i.e., inadmissible) whenever nonpositive definiteness was encountered in both studies. Consequently, constrained optimization yielded large proportions of inadmissible correlation estimates (equal to the nonpositive definiteness rates), especially when the between-case variance was small. In contrast, all correlation estimates were admissible under unconstrained optimization. We therefore evaluated the bias/relative bias of the correlation estimates only for unconstrained optimization and found that they tended to be underestimated in Study 1 (average bias = −.031) and overestimated in Study 2 (average bias = .099; average relative bias = .216). As shown in Table 8, the ANOVA results indicated that the magnitude of bias in correlation estimates decreased with larger between-case variance (Study 1: F(6, 117) = 1781.92, η² = .90; Study 2: F(5, 232) = 166.29, η² = .35). In addition, the interaction between the variance and the correlation was significant and large in Study 2 (F(5, 232) = 73.27, p < .001, η² = .15): the larger the magnitude of the correlation, the more rapidly the bias decreased as the between-case variance increased.

Empirical type I error rates

The empirical type I error rates of the \( {\mathrm{RLRT}}_{SL} \) using unconstrained optimization Footnote 3 were examined based on Study 1. For both variance components, the empirical type I error rates were mostly associated with series length (baseline level: F(1, 9) = 468.62, η² = .39; treatment effect: F(1, 9) = 145.30, η² = .40), autocorrelation (baseline level: F(2, 9) = 209.46, η² = .35; treatment effect: F(2, 9) = 25.13, η² = .14), and model specification (baseline level: F(2, 36) = 511.71, η² = .15; treatment effect: F(2, 36) = 380.75, η² = .37). The empirical type I error rates of the \( {\mathrm{RLRT}}_{SL} \) by series length, autocorrelation, and model specification are shown in Table 9. For the model selection procedure with the typical autocorrelation (ρ = .32) found in MBDs (see Shadish & Sullivan, 2011), the type I error rates for the variances of the baseline level and treatment effect were close to the nominal level when the series length was 20 and were inflated when the series length was 10, regardless of the number of cases. For the model specifications with a diagonal or unstructured matrix, the type I error rates were even closer to the nominal level.

Empirical power

In Study 1, the empirical power of the \( {\mathrm{RLRT}}_{SL} \) for the variance components was mostly associated with the amount of variance (baseline level: F(5, 99) = 945.64, η² = .71; treatment effect: F(5, 99) = 3898.30, η² = .85). In addition, there were medium effects of series length (baseline level: F(1, 99) = 477.65, η² = .06; treatment effect: F(1, 99) = 592.36, η² = .03) and number of cases (baseline level: F(1, 99) = 945.64, η² = .13; treatment effect: F(1, 99) = 2014.59, η² = .09). Similar results were found for the empirical power in Study 2. Tables 10 and 11 show the empirical power under various combinations of variance size, number of cases, and series length for Studies 1 and 2, respectively. For the variance of the baseline level, power reached the commonly accepted .80 threshold without an inflated type I error rate when the series length was 20 and either the variance was ≥ 2.0 with 4 cases or the variance was ≥ 1.0 with 8 cases, in both studies. For the variance of the treatment effect, power reached .80 without an inflated type I error rate when the series length was 20 and either the variance was ≥ 4.0 with 4 cases or the variance was ≥ 2.0 with 8 cases, in both studies. Because model specification had little impact on the empirical power of the test (all η² < .01), the power for the model specifications with diagonal and unstructured matrices is provided in supplemental Tables S10 and S11.

The purpose of this study was threefold. The first was to evaluate the impact of optimization methods (i.e., constrained vs. unconstrained) and specifications of covariance structures (diagonal vs. unstructured vs model selection procedure) on between-case variance components using MLMs in the analysis of SCEDs. The second was to evaluate an RLRT-based procedure to make statistical inferences for between-case variance components. Lastly, based on the findings from the simulation studies, we aimed to provide guidelines to show empirical researchers the conditions under which the estimates of between-case variance components were reliable, and the test procedure had acceptable type I error rate and power.

The convergence rates were very high (≥ 98%) in all conditions except for the estimation of an unstructured matrix using constrained optimization with a small number of cases (J = 4). Unconstrained optimization had an advantage over constrained optimization: its positive definiteness rates were over 90% and consistent across levels of the between-case variance. In contrast, constrained optimization frequently produced non-positive definite estimates (proportion ≥ 60%) when the variance was small (< .3).
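Positive definiteness of the estimated 2 × 2 between-case covariance matrix (baseline-level variance, treatment-effect variance, and their covariance) can be checked with Sylvester's criterion: the first diagonal element and the determinant must both be strictly positive. The sketch below, with hypothetical function and argument names, also computes the rate across replications reported in the text. A variance estimate pinned at the zero boundary, as can happen under constrained optimization, makes the matrix singular and therefore not positive definite.

```python
from typing import Iterable, Tuple


def is_positive_definite_2x2(var_base: float, var_trt: float, cov: float = 0.0) -> bool:
    """Sylvester's criterion for the symmetric matrix [[var_base, cov], [cov, var_trt]]."""
    # Both leading principal minors must be strictly positive.
    return var_base > 0 and var_base * var_trt - cov ** 2 > 0


def positive_definite_rate(estimates: Iterable[Tuple[float, float, float]]) -> float:
    """Proportion of replications whose estimated covariance matrix is positive definite."""
    flags = [is_positive_definite_2x2(v_b, v_t, c) for v_b, v_t, c in estimates]
    return sum(flags) / len(flags)
```

For a diagonal specification the covariance argument is simply left at zero, so the check reduces to both variances being positive.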

Although unconstrained optimization was effective in reducing non-positive definite estimates, it did not affect the estimates of the between-case variance components. Regardless of the optimization method, positive biases in the estimated variance of the treatment effects were present when the true variance was small (i.e., 1.0 or below), even when the covariance structure was correctly specified. This is partly because the between-case variance is close to the boundary of its parameter space, and the asymptotic assumptions of REML are seriously violated with small samples. Another possible reason is the biased estimation of the autocorrelation of the level 1 errors. Although the autocorrelation estimates are not of primary interest in this study, we found negative biases in them, which is consistent with previous findings (Ferron et al., 2009).
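One way that proximity to the zero boundary can produce positive bias is shown by the toy simulation below. Assuming, purely for illustration, that an unbounded variance estimator is normally distributed around the true value, replacing negative draws with zero (as a bounded estimator would) pulls the average estimate upward when the true variance is small, while leaving it essentially unbiased when the true variance is large. This is not REML and not the authors' simulation; the normal sampling distribution and the sampling SD of 1.0 are arbitrary assumptions.

```python
import random


def mean_truncated_estimate(true_var: float, sampling_sd: float,
                            n_reps: int = 20000, seed: int = 1) -> float:
    """Average of estimates drawn from N(true_var, sampling_sd) and truncated at zero."""
    rng = random.Random(seed)
    # Negative draws are replaced by the boundary value 0, mimicking a bounded estimator.
    draws = [max(0.0, rng.gauss(true_var, sampling_sd)) for _ in range(n_reps)]
    return sum(draws) / n_reps


for true_var in (0.5, 8.0):
    print(true_var, round(mean_truncated_estimate(true_var, sampling_sd=1.0), 2))
```

Analytically, the expected truncated estimate for a true value of 0.5 under these assumptions is about 0.70, a relative bias of roughly 40%, whereas for a true value of 8.0 the truncation almost never binds.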

Overall, the bias of the between-case variance estimates decreased as the size of the between-case variance components increased, which is consistent with previous work (Ferron et al., 2009; Moeyaert et al., 2013a, 2013c). In general, overfitting the covariance structure tended to overestimate the between-case variance components, whereas underfitting it tended to underestimate them, regardless of the optimization method. However, the positive biases caused by overfitting fell to an acceptable level when the variance was 1.0 or above, whereas the negative biases caused by underfitting did not become acceptable until the variance was as large as 8.0. Hence, our findings partially supported the conclusion of Moeyaert et al. (2016) that a correctly specified model does not significantly outperform a misspecified model when the between-case variance is large.
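Bias in simulation studies of this kind is usually summarized as relative bias, (mean estimate − true value) / true value, with absolute bias used instead when the population value is zero, as the footnotes note. The sketch below implements that rule. The .10 cutoff for an "acceptable" relative bias is an assumed convention for illustration only, since the cutoff used in the study is not stated in this section, and the function names are hypothetical.

```python
from typing import Sequence, Tuple


def bias_summary(estimates: Sequence[float], true_value: float) -> Tuple[str, float]:
    """Return ('relative', RB) or, when the true value is zero, ('absolute', bias)."""
    mean_est = sum(estimates) / len(estimates)
    bias = mean_est - true_value
    if true_value == 0:
        # Relative bias is undefined for a zero population value.
        return "absolute", bias
    return "relative", bias / true_value


def is_acceptable_relative_bias(rb: float, cutoff: float = 0.10) -> bool:
    """Assumed convention: |relative bias| at or below the cutoff counts as acceptable."""
    return abs(rb) <= cutoff
```

Because relative bias divides by the true value, a fixed absolute overestimate looks far worse at a variance of 0.5 than at 8.0, which is one reason the small-variance conditions stand out.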

In practice, applied researchers seldom know the true covariance structure in the population or the true size of the variance; therefore, it is difficult to determine whether the covariance structure is correctly specified or to gauge the impact of misspecification. Our findings showed that the post hoc model selection procedure based on the parametric bootstrap could mitigate the bias caused by misspecification. Specifically, point estimates for the variance of the baseline level were accurate when the variance was ≥ 1.0 with four cases or ≥ 0.5 with eight cases. This was also true for the variance of the treatment effect when the variance was ≥ 2.0 with four cases or ≥ 1.0 with eight cases. Despite the differences in point estimates, the coverage rates of the confidence intervals were similar across the model specifications. For the model selection procedure, the coverage of the interval estimates for the between-case variance components was close to the nominal level when the variance was ≥ 1.0. The average MSEs of the between-case variance estimates for the five model specifications were also very similar, with a slight efficiency disadvantage for the estimator from constrained optimization with an unstructured matrix.
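Coverage rate and MSE, the two criteria compared across model specifications here, can be computed directly from simulation output. In the sketch below (function names hypothetical), coverage is the share of confidence intervals that contain the true variance, and MSE is returned together with its decomposition into squared bias plus the variance of the estimates.

```python
from typing import Sequence, Tuple


def coverage_rate(intervals: Sequence[Tuple[float, float]], true_value: float) -> float:
    """Share of (lower, upper) intervals that contain the true value."""
    hits = sum(1 for lower, upper in intervals if lower <= true_value <= upper)
    return hits / len(intervals)


def mse_decomposition(estimates: Sequence[float],
                      true_value: float) -> Tuple[float, float, float]:
    """Return (MSE, squared bias, variance), where MSE = squared bias + variance."""
    n = len(estimates)
    mean_est = sum(estimates) / n
    sq_bias = (mean_est - true_value) ** 2
    # Divisor n (not n - 1) so that the decomposition holds exactly.
    variance = sum((e - mean_est) ** 2 for e in estimates) / n
    mse = sum((e - true_value) ** 2 for e in estimates) / n
    return mse, sq_bias, variance
```

With 95% intervals, a coverage rate near .95 indicates that the interval estimates are close to the nominal level.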

Although the choice of optimization method did not affect the estimates of the variance components, it did affect the estimates of the correlation between the random effects. Constrained optimization resulted in a high rate of non-positive definiteness with inadmissible correlation estimates. On the other hand, although unconstrained optimization seldom encountered non-positive definiteness, the estimated correlation coefficient was likely to be biased. Hence, we urge caution when researchers interpret the estimated correlation between the random effects. For the covariance estimates, there were no significant differences between the constrained and unconstrained optimization methods. However, caution is also needed here, because the covariance estimates are biased under certain conditions.
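An estimated covariance converts to a correlation by dividing by the product of the two standard deviations. A value outside [−1, 1], or an undefined value when a variance estimate is zero or negative, signals the kind of inadmissible estimate that accompanies non-positive definiteness. The helpers below are hypothetical illustrations, not part of the study's code.

```python
import math
from typing import Optional


def correlation_from_covariance(var_a: float, var_b: float, cov: float) -> Optional[float]:
    """r = cov / sqrt(var_a * var_b); None when either variance is not positive."""
    if var_a <= 0 or var_b <= 0:
        return None
    return cov / math.sqrt(var_a * var_b)


def is_admissible(r: Optional[float]) -> bool:
    """A correlation is admissible only if it is defined and lies in [-1, 1]."""
    return r is not None and -1.0 <= r <= 1.0
```

An admissible value can still be biased, which is the concern raised for the unconstrained estimates.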

Last but not least, by examining the empirical type I error rate and power of the RLRT SL for testing between-case variance components, we found that at least 20 measurements are needed for the empirical type I error rate to be close to the nominal level. Because the GLS transformation applied to the RLRT SL is based on the estimated autocorrelations, a sufficient series length can prevent an inflated type I error rate by yielding more accurate autocorrelation estimates. For single-case studies, Ferron et al. (2010) found a median of 24 measurements and Shadish and Sullivan (2011) found a median of 20 measurements. Thus, the empirical type I error rate is expected to be close to the nominal level for at least half of single-case studies. On the other hand, although the estimates of between-case variance components have little bias when the variance is sufficiently large, studies with few cases might be underpowered. In the next section, we provide specific guidelines for the minimally detectable size of variance components under conditions commonly encountered in SCEDs.
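The GLS transformation itself is not spelled out in this section. A common form for AR(1) level-1 errors is the Prais–Winsten transformation sketched below; the AR(1) structure and this particular transformation are assumptions, and the authors' Appendix C code may differ. The first observation is rescaled by √(1 − ρ²), and each later observation has ρ times its predecessor subtracted, so that AR(1) errors become uncorrelated with constant variance.

```python
import math
from typing import List, Sequence


def ar1_gls_transform(series: Sequence[float], rho: float) -> List[float]:
    """Prais-Winsten style transformation of one case's series for AR(1) errors."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    # First observation: rescale so its error variance matches the innovations.
    transformed = [math.sqrt(1.0 - rho ** 2) * series[0]]
    # Later observations: remove the part predicted by the previous observation.
    for t in range(1, len(series)):
        transformed.append(series[t] - rho * series[t - 1])
    return transformed
```

In a GLS fit, the same transformation (with the same estimated ρ) is applied within each case to the outcome and to every column of the design matrix. If ρ is misestimated, as is more likely with short series, the transformed errors remain autocorrelated, which is one route by which short series can inflate the type I error rate.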

Recommendations

We summarize practical guidelines for empirical researchers on the estimation of, and statistical inference for, between-case variance in SCEDs. First, we recommend using unconstrained optimization if non-positive definiteness is encountered, especially when the correlation/covariance between random effects is of interest. Second, we recommend using the model selection procedure (see Appendix C for the R code) to determine the optimal covariance structure (i.e., either diagonal or unstructured) if there is no strong theoretical grounding or prior knowledge regarding the structure of the covariance matrix. Third, researchers should be aware that when the estimated variance components are small (0.5 or less), they are likely to be overestimated, indicating that the true parameter values are even smaller, especially when the number of cases is small (i.e., four cases). Fourth, when conducting null hypothesis significance tests for variance components, researchers should use the RLRT based on the asymptotic mixture distribution (i.e., RLRT SL) and apply the GLS transformation to account for autocorrelated errors (see Appendix C for the R code). Fifth, researchers should make sure that the power for testing variance components is sufficient. For a power of .80 without an inflated type I error rate (i.e., given a series length of 20), the minimally detectable variance of the baseline level is 2.0 with four cases or 1.0 with eight cases. For the variance of the treatment effect, the minimally detectable variance is 4.0 with four cases or 2.0 with eight cases. Last but not least, researchers should be cautious when interpreting the covariance between random effects, because the covariance estimates can be interpreted with confidence only when there are four cases and the estimated variance components are larger than 4.0, or when there are eight cases and the estimated variance components are larger than 1.0. Even more caution is warranted for the correlation estimates, as they were biased across all conditions.
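The fifth and final recommendations can be encoded as a small lookup, which makes explicit that they cover only the simulated conditions (a series length of 20 and four or eight cases). The numeric values are restated from the recommendations; the names are hypothetical, and conditions outside the grid raise an error rather than extrapolating.

```python
# Minimally detectable variance for power .80 at series length 20 (restated from the text).
MIN_DETECTABLE_VARIANCE = {
    ("baseline", 4): 2.0,
    ("baseline", 8): 1.0,
    ("treatment", 4): 4.0,
    ("treatment", 8): 2.0,
}

# Both estimated variances must exceed this value before a covariance is interpreted.
COVARIANCE_THRESHOLD = {4: 4.0, 8: 1.0}


def minimally_detectable_variance(component: str, n_cases: int) -> float:
    """Look up the guideline; fail loudly for conditions that were not simulated."""
    try:
        return MIN_DETECTABLE_VARIANCE[(component, n_cases)]
    except KeyError:
        raise ValueError(f"no guideline for {component!r} with {n_cases} cases") from None


def covariance_interpretable(n_cases: int, var_baseline: float, var_treatment: float) -> bool:
    """True only if both estimated variances exceed the threshold for this number of cases."""
    if n_cases not in COVARIANCE_THRESHOLD:
        raise ValueError(f"no guideline for {n_cases} cases")
    cutoff = COVARIANCE_THRESHOLD[n_cases]
    return var_baseline > cutoff and var_treatment > cutoff
```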

Limitations and future research

The results of this study are limited to the chosen conditions. Although we chose conditions typical of SCEDs, our conclusions should be further validated before they are generalized to other conditions. Specifically, we only simulated data based on the MBD across cases, which is the most common design in SCEDs. However, other designs, such as ABAB designs, MBDs across behaviors, and alternating treatments designs, are not rare in single-case studies. Under those designs, our conclusions should be carefully reexamined.

Given the limitations of REML, future studies should continue to explore Bayesian approaches. Bayesian approaches are promising because they do not rely on asymptotic assumptions. However, previous studies using Bayesian approaches have not produced consistent results across different choices of priors for the between-case variance components (Baek et al., 2020; Hembry et al., 2015; Joo & Ferron, 2019; Moeyaert et al., 2017). Future studies should consider constructing reasonable informative priors to improve variance estimation. Bayesian approaches may also provide better estimates of autocorrelations (Shadish et al., 2013b), which could be used in the GLS transformation for the RLRT. In addition, with a short series length (I = 10) and a small number of cases (J = 4), most of the conditions are underpowered with RLRT-based procedures. With reasonable informative priors for the between-case variance components (see Tsai & Hsiao, 2008), Bayesian inference based on the posterior distribution could be an alternative to the RLRT-based procedures that improves the power for detecting between-case variance components.

Absolute bias is calculated when the population value of a parameter is zero.

Relative biases based on the diagonal and unstructured covariance structure specifications can be found in supplemental Tables S8 and S9, respectively.

We did not evaluate the RLRT SL for the constrained optimization due to the high non-positive definiteness rate.

Alen, E., Grietens, H., & Van den Noortgate, W. (2009). Meta-analysis of single-case studies: An illustration for the treatment of anxiety disorders. Unpublished manuscript, Department of Educational Science and Psychology, University of Leuven.

Baek, E., Beretvas, S. N., Van den Noortgate, W., & Ferron, J. M. (2020). Brief research report: Bayesian versus REML estimations with noninformative priors in multilevel single-case data. The Journal of Experimental Education, 88(4), 698–710. https://doi.org/10.1080/00220973.2018.1527280

Baek, E. K., & Ferron, J. M. (2013). Multilevel models for multiple-baseline data: Modeling across-participant variation in autocorrelation and residual variance. Behavior Research Methods, 45(1), 65–74. https://doi.org/10.3758/s13428-012-0231-z

Baek, E., & Ferron, J. M. (2020). Modeling heterogeneity of the level-1 error covariance matrix in multilevel models for single-case data. Methodology, 16(2), 166–185. https://doi.org/10.5964/meth.2817

Barlow, D. H., Nock, M. K., & Hersen, M. (2009). Single case experimental designs: Strategies for studying behavior change (3rd ed.). Allyn & Bacon.

Carsey, T. M., & Harden, J. J. (2013). Monte Carlo simulation and resampling methods for social science. Sage Publications.

Chung, Y., Gelman, A., Rabe-Hesketh, S., Liu, J., & Dorie, V. (2015). Weakly informative prior for point estimation of covariance matrices in hierarchical models. Journal of Educational and Behavioral Statistics, 40(2), 136–157. https://doi.org/10.3102/1076998615570945

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Erlbaum.

Davison, A. C., & Hinkley, D. V. (1997). Bootstrap methods and their application. Cambridge University Press.

Demidenko, E. (2013). Mixed models: Theory and applications with R. John Wiley & Sons.

Denis, J., Van den Noortgate, W., & Maes, B. (2011). Self-injurious behavior in people with profound intellectual disabilities: A meta-analysis of single-case studies. Research in Developmental Disabilities, 32, 911–923. https://doi.org/10.1016/j.ridd.2011.01.014

Ferron, J. M., Bell, B. A., Hess, M. R., Rendina-Gobioff, G., & Hibbard, S. T. (2009). Making treatment effect inferences from multiple-baseline data: The utility of multilevel modeling approaches. Behavior Research Methods, 41(2), 372–384. https://doi.org/10.3758/BRM.41.2.372

Ferron, J. M., Farmer, J. L., & Owens, C. M. (2010). Estimating individual treatment effects from multiple-baseline data: A Monte Carlo study of multilevel-modeling approaches. Behavior Research Methods, 42(4), 930–943. https://doi.org/10.3758/BRM.42.4.930

Gill, J., & King, G. (2004). What to do when your Hessian is not invertible: Alternatives to model respecification in nonlinear estimation. Sociological Methods & Research, 33(1), 54–87. https://doi.org/10.1177/0049124103262681

Hembry, I., Bunuan, R., Beretvas, S. N., Ferron, J. M., & Van den Noortgate, W. (2015). Estimation of a nonlinear intervention phase trajectory for multiple-baseline design data. The Journal of Experimental Education, 83(4), 514–546. https://doi.org/10.1080/00220973.2014.907231

Hoffman, L. (2015). Longitudinal analysis: Modeling within-person fluctuation and change. Routledge.

Joo, S. H., & Ferron, J. M. (2019). Application of the within- and between-series estimators to non-normal multiple-baseline data: Maximum likelihood and Bayesian approaches. Multivariate Behavioral Research, 54(5), 666–689. https://doi.org/10.1080/00273171.2018.1564877

Joo, S. H., Ferron, J. M., Moeyaert, M., Beretvas, S. N., & Van den Noortgate, W. (2019). Approaches for specifying the level-1 error structure when synthesizing single-case data. The Journal of Experimental Education, 87(1), 55–74. https://doi.org/10.1080/00220973.2017.1409181

Kenward, M. G., & Roger, J. H. (1997). Small sample inference for fixed effects from restricted maximum likelihood. Biometrics, 53(3), 983–997. https://doi.org/10.2307/2533558

Kiernan, K. (2018). Insights into using the GLIMMIX procedure to model categorical outcomes with random effects (Paper SAS2179). Cary: SAS Institute Inc.

Kokina, A., & Kern, L. (2010). Social Story interventions for students with autism spectrum disorders: A meta-analysis. Journal of Autism and Developmental Disorders, 40, 812–826. https://doi.org/10.1007/s10803-009-0931-0

Kratochwill, T. R., & Levin, J. R. (2010). Enhancing the scientific credibility of single-case intervention research: Randomization to the rescue. Psychological Methods, 15(2), 124–144. https://doi.org/10.1037/14376-003

Kratochwill, T. R., & Levin, J. R. (2014). Single-case intervention research: Methodological and statistical advances. American Psychological Association.

Levin, J. R., Ferron, J. M., & Gafurov, B. S. (2018). Comparison of randomization-test procedures for single-case multiple-baseline designs. Developmental Neurorehabilitation, 21(5), 290–311. https://doi.org/10.1080/17518423.2016.1197708

Manolov, R., & Moeyaert, M. (2017). Recommendations for choosing single-case data analytical techniques. Behavior Therapy, 48(1), 97–114. https://doi.org/10.1016/j.beth.2016.04.008