Case Study – Methods, Examples and Guide

A case study is a research method that involves an in-depth examination and analysis of a particular phenomenon or case, such as an individual, organization, community, event, or situation.

It is a qualitative research approach that aims to provide a detailed and comprehensive understanding of the case being studied. Case studies typically involve multiple sources of data, including interviews, observations, documents, and artifacts, which are analyzed using various techniques, such as content analysis, thematic analysis, and grounded theory. The findings of a case study are often used to develop theories, inform policy or practice, or generate new research questions.

Types of Case Study

Types and Methods of Case Study are as follows:

Single-Case Study

A single-case study is an in-depth analysis of a single case. This type of case study is useful when the researcher wants to understand a specific phenomenon in detail.

For Example, a researcher might conduct a single-case study on a particular individual to understand their experiences with a particular health condition, or on a specific organization to explore its management practices. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as content analysis or thematic analysis. The findings of a single-case study are often used to generate new research questions, develop theories, or inform policy or practice.

Multiple-Case Study

A multiple-case study involves the analysis of several cases that are similar in nature. This type of case study is useful when the researcher wants to identify similarities and differences between the cases.

For Example, a researcher might conduct a multiple-case study on several companies to explore the factors that contribute to their success or failure. The researcher collects data from each case, compares and contrasts the findings, and uses various techniques to analyze the data, such as comparative analysis or pattern-matching. The findings of a multiple-case study can be used to develop theories, inform policy or practice, or generate new research questions.

Exploratory Case Study

An exploratory case study is used to explore a new or understudied phenomenon. This type of case study is useful when the researcher wants to generate hypotheses or theories about the phenomenon.

For Example, a researcher might conduct an exploratory case study on a new technology to understand its potential impact on society. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as grounded theory or content analysis. The findings of an exploratory case study can be used to generate new research questions, develop theories, or inform policy or practice.

Descriptive Case Study

A descriptive case study is used to describe a particular phenomenon in detail. This type of case study is useful when the researcher wants to provide a comprehensive account of the phenomenon.

For Example, a researcher might conduct a descriptive case study on a particular community to understand its social and economic characteristics. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as content analysis or thematic analysis. The findings of a descriptive case study can be used to inform policy or practice or generate new research questions.

Instrumental Case Study

An instrumental case study is used to understand a particular phenomenon that is instrumental in achieving a particular goal. This type of case study is useful when the researcher wants to understand the role of the phenomenon in achieving the goal.

For Example, a researcher might conduct an instrumental case study on a particular policy to understand its impact on achieving a particular goal, such as reducing poverty. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as content analysis or thematic analysis. The findings of an instrumental case study can be used to inform policy or practice or generate new research questions.

Case Study Data Collection Methods

Here are some common data collection methods for case studies:

Interviews

Interviews involve asking questions to individuals who have knowledge or experience relevant to the case study. Interviews can be structured (where the same questions are asked to all participants) or unstructured (where the interviewer follows up on the responses with further questions). Interviews can be conducted in person, over the phone, or through video conferencing.

Observations

Observations involve watching and recording the behavior and activities of individuals or groups relevant to the case study. Observations can be participant (where the researcher actively participates in the activities) or non-participant (where the researcher observes from a distance). Observations can be recorded using notes, audio or video recordings, or photographs.

Documents

Documents can be used as a source of information for case studies. Documents can include reports, memos, emails, letters, and other written materials related to the case study. Documents can be collected from the case study participants or from public sources.

Surveys

Surveys involve asking a set of questions to a sample of individuals relevant to the case study. Surveys can be administered in person, over the phone, through mail or email, or online. Surveys can be used to gather information on attitudes, opinions, or behaviors related to the case study.

Artifacts

Artifacts are physical objects relevant to the case study. Artifacts can include tools, equipment, products, or other objects that provide insights into the case study phenomenon.

How to conduct Case Study Research

Conducting case study research involves several steps that need to be followed to ensure the quality and rigor of the study. Here are the steps to conduct case study research:

- Define the research questions: The first step in conducting case study research is to define the research questions. The research questions should be specific, measurable, and relevant to the case study phenomenon under investigation.

- Select the case: The next step is to select the case or cases to be studied. The case should be relevant to the research questions and should provide rich and diverse data that can be used to answer the research questions.

- Collect data: Data can be collected using various methods, such as interviews, observations, documents, surveys, and artifacts. The data collection method should be selected based on the research questions and the nature of the case study phenomenon.

- Analyze the data: The data collected from the case study should be analyzed using various techniques, such as content analysis, thematic analysis, or grounded theory. The analysis should be guided by the research questions and should aim to provide insights and conclusions relevant to the research questions.

- Draw conclusions: The conclusions drawn from the case study should be based on the data analysis and should be relevant to the research questions. The conclusions should be supported by evidence and should be clearly stated.

- Validate the findings: The findings of the case study should be validated by reviewing the data and the analysis with participants or other experts in the field. This helps to ensure the validity and reliability of the findings.

- Write the report: The final step is to write the report of the case study research. The report should provide a clear description of the case study phenomenon, the research questions, the data collection methods, the data analysis, the findings, and the conclusions. The report should be written in a clear and concise manner and should follow the guidelines for academic writing.
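
The "Analyze the data" step above can be illustrated with a small sketch of keyword-based content analysis: counting how many interview excerpts touch each code in a coding frame. The excerpts, the coding frame, and the function name `code_frequencies` are hypothetical and chosen only for illustration. Real content analysis depends on a researcher's judgment when assigning codes, so this is a rough aid, not a substitute for it.

```python
import re
from collections import Counter


def code_frequencies(excerpts, coding_frame):
    """Count how many excerpts mention at least one keyword of each code.

    excerpts: list of strings (e.g. interview passages).
    coding_frame: dict mapping a code name to a list of lowercase keywords.
    """
    counts = Counter()
    for text in excerpts:
        # Lowercase and split the excerpt into a set of words.
        words = set(re.findall(r"[a-z']+", text.lower()))
        for code, keywords in coding_frame.items():
            if words & set(keywords):
                counts[code] += 1
    return counts


# Hypothetical data for illustration only.
excerpts = [
    "My manager never listens to the team.",
    "The workload is heavy but my manager helps.",
    "Pay is fine.",
]
coding_frame = {
    "leadership": ["manager", "supervisor"],
    "workload": ["workload", "overtime"],
    "compensation": ["pay", "salary"],
}
frequencies = code_frequencies(excerpts, coding_frame)
```

A table of such counts can point to the themes that deserve closer reading, which is where the thematic analysis or grounded theory work would begin.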

Examples of Case Study

Here are some examples of case study research:

- The Hawthorne Studies: Conducted between 1924 and 1932, the Hawthorne Studies were a series of case studies conducted by Elton Mayo and his colleagues to examine the impact of work environment on employee productivity. The studies were conducted at the Hawthorne Works plant of the Western Electric Company in Cicero, Illinois, near Chicago, and included interviews, observations, and experiments.

- The Stanford Prison Experiment: Conducted in 1971, the Stanford Prison Experiment was a case study conducted by Philip Zimbardo to examine the psychological effects of power and authority. The study involved simulating a prison environment and assigning participants to the role of guards or prisoners. The study was controversial due to the ethical issues it raised.

- The Challenger Disaster: The Space Shuttle Challenger explosion in 1986 has been the subject of case studies examining its causes. These studies drew on interviews, observations, and analysis of data to identify the technical, organizational, and cultural factors that contributed to the disaster.

- The Enron Scandal: The Enron Corporation’s bankruptcy in 2001 has been the subject of case studies examining its causes. These studies drew on interviews, analysis of financial data, and review of documents to identify the accounting practices, corporate culture, and ethical issues that led to the company’s downfall.

- The Fukushima Nuclear Disaster: The nuclear accident that occurred at the Fukushima Daiichi Nuclear Power Plant in Japan in 2011 has been the subject of case studies examining its causes. These studies drew on interviews, analysis of data, and review of documents to identify the technical, organizational, and cultural factors that contributed to the disaster.

Application of Case Study

Case studies have a wide range of applications across various fields and industries. Here are some examples:

Business and Management

Case studies are widely used in business and management to examine real-life situations and develop problem-solving skills. Case studies can help students and professionals to develop a deep understanding of business concepts, theories, and best practices.

Healthcare

Case studies are used in healthcare to examine patient care, treatment options, and outcomes. Case studies can help healthcare professionals to develop critical thinking skills, diagnose complex medical conditions, and develop effective treatment plans.

Education

Case studies are used in education to examine teaching and learning practices. Case studies can help educators to develop effective teaching strategies, evaluate student progress, and identify areas for improvement.

Social Sciences

Case studies are widely used in social sciences to examine human behavior, social phenomena, and cultural practices. Case studies can help researchers to develop theories, test hypotheses, and gain insights into complex social issues.

Law and Ethics

Case studies are used in law and ethics to examine legal and ethical dilemmas. Case studies can help lawyers, policymakers, and ethical professionals to develop critical thinking skills, analyze complex cases, and make informed decisions.

Purpose of Case Study

The purpose of a case study is to provide a detailed analysis of a specific phenomenon, issue, or problem in its real-life context. A case study is a qualitative research method that involves the in-depth exploration and analysis of a particular case, which can be an individual, group, organization, event, or community.

The primary purpose of a case study is to generate a comprehensive and nuanced understanding of the case, including its history, context, and dynamics. Case studies can help researchers to identify and examine the underlying factors, processes, and mechanisms that contribute to the case and its outcomes. This can help to develop a more accurate and detailed understanding of the case, which can inform future research, practice, or policy.

Case studies can also serve other purposes, including:

- Illustrating a theory or concept: Case studies can be used to illustrate and explain theoretical concepts and frameworks, providing concrete examples of how they can be applied in real-life situations.

- Developing hypotheses: Case studies can help to generate hypotheses about the causal relationships between different factors and outcomes, which can be tested through further research.

- Providing insight into complex issues: Case studies can provide insights into complex and multifaceted issues, which may be difficult to understand through other research methods.

- Informing practice or policy: Case studies can be used to inform practice or policy by identifying best practices, lessons learned, or areas for improvement.

Advantages of Case Study Research

There are several advantages of case study research, including:

- In-depth exploration: Case study research allows for a detailed exploration and analysis of a specific phenomenon, issue, or problem in its real-life context. This can provide a comprehensive understanding of the case and its dynamics, which may not be possible through other research methods.

- Rich data: Case study research can generate rich and detailed data, including qualitative data such as interviews, observations, and documents. This can provide a nuanced understanding of the case and its complexity.

- Holistic perspective: Case study research allows for a holistic perspective of the case, taking into account the various factors, processes, and mechanisms that contribute to the case and its outcomes. This can help to develop a more accurate and comprehensive understanding of the case.

- Theory development: Case study research can help to develop and refine theories and concepts by providing empirical evidence and concrete examples of how they can be applied in real-life situations.

- Practical application: Case study research can inform practice or policy by identifying best practices, lessons learned, or areas for improvement.

- Contextualization: Case study research takes into account the specific context in which the case is situated, which can help to understand how the case is influenced by the social, cultural, and historical factors of its environment.

Limitations of Case Study Research

There are several limitations of case study research, including:

- Limited generalizability: Case studies are typically focused on a single case or a small number of cases, which limits the generalizability of the findings. The unique characteristics of the case may not be applicable to other contexts or populations, which may limit the external validity of the research.

- Biased sampling: Case studies may rely on purposive or convenience sampling, which can introduce bias into the sample selection process. This may limit the representativeness of the sample and the generalizability of the findings.

- Subjectivity: Case studies rely on the interpretation of the researcher, which can introduce subjectivity into the analysis. The researcher’s own biases, assumptions, and perspectives may influence the findings, which may limit the objectivity of the research.

- Limited control: Case studies are typically conducted in naturalistic settings, which limits the control that the researcher has over the environment and the variables being studied. This may limit the ability to establish causal relationships between variables.

- Time-consuming: Case studies can be time-consuming to conduct, as they typically involve a detailed exploration and analysis of a specific case. This may limit the feasibility of conducting multiple case studies or conducting case studies in a timely manner.

- Resource-intensive: Case studies may require significant resources, including time, funding, and expertise. This may limit the ability of researchers to conduct case studies in resource-constrained settings.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

10 Real World Data Science Case Studies Projects with Example

Top 10 Data Science Case Studies Projects with Examples and Solutions in Python to inspire your data science learning in 2023.

Data science has been a trending buzzword in recent times. With wide applications in various sectors like healthcare, education, retail, transportation, media, and banking, data science applications are at the core of pretty much every industry out there. The possibilities are endless, from the analysis of fraud in the finance sector to the personalization of recommendations in eCommerce businesses. We have developed ten exciting data science case studies to explain how data science is leveraged across various industries to make smarter decisions and develop innovative personalized products tailored to specific customers.

Table of Contents

- Data Science Case Studies in Retail
- Data Science Case Study Examples in Entertainment Industry
- Data Analytics Case Study Examples in Travel Industry
- Case Studies for Data Analytics in Social Media
- Real World Data Science Projects in Healthcare
- Data Analytics Case Studies in Oil and Gas
- What is a Case Study in Data Science
- How Do You Prepare a Data Science Case Study
- 10 Most Interesting Data Science Case Studies with Examples

So, without much ado, let's get started with data science business case studies!

With humble beginnings as a simple discount retailer, Walmart today operates 10,500 stores and clubs in 24 countries, along with eCommerce websites, and employs around 2.2 million people around the globe. For the fiscal year ended January 31, 2021, Walmart's total revenue was $559 billion, showing a growth of $35 billion with the expansion of the eCommerce sector. Walmart is a data-driven company that works on the principle of 'Everyday low cost' for its consumers. To achieve this goal, it depends heavily on its data science and analytics department for research and development, also known as Walmart Labs. Walmart is home to the world's largest private cloud, which can manage 2.5 petabytes of data every hour! To analyze this humongous amount of data, Walmart has created 'Data Café,' a state-of-the-art analytics hub located within its Bentonville, Arkansas headquarters. The Walmart Labs team invests heavily in building and managing technologies like cloud, data, DevOps, infrastructure, and security.

Walmart is experiencing massive digital growth as the world's largest retailer. Walmart has been leveraging big data and advances in data science to build solutions to enhance, optimize, and customize the shopping experience and serve its customers in a better way. At Walmart Labs, data scientists are focused on creating data-driven solutions that power the efficiency and effectiveness of complex supply chain management processes. Here are some of the applications of data science at Walmart:

i) Personalized Customer Shopping Experience

Walmart analyses customer preferences and shopping patterns to optimize the stocking and displaying of merchandise in its stores. Analysis of big data also helps it understand new item sales, evaluate the performance of brands, and decide which products to discontinue.

ii) Order Sourcing and On-Time Delivery Promise

Millions of customers view items on Walmart.com, and Walmart provides each customer a real-time estimated delivery date for the items purchased. Walmart runs a backend algorithm that estimates this based on the distance between the customer and the fulfillment center, inventory levels, and shipping methods available. The supply chain management system determines the optimum fulfillment center based on distance and inventory levels for every order. It also has to decide on the shipping method to minimize transportation costs while meeting the promised delivery date.
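
The sourcing logic described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Walmart's actual algorithm: the `FulfillmentCenter` class, the function names, the straight-line (haversine) distance, and the `(name, km_per_day, cost)` shipping tuples are all hypothetical. The sketch picks the nearest center that has enough stock and then the cheapest shipping method that still meets the promised delivery date.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class FulfillmentCenter:
    name: str
    lat: float
    lon: float
    stock: int  # units of the ordered item on hand


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def choose_center(centers, cust_lat, cust_lon, quantity):
    """Return (center, distance_km) for the nearest center able to fill the order, or None."""
    in_stock = [c for c in centers if c.stock >= quantity]
    if not in_stock:
        return None
    best = min(in_stock, key=lambda c: haversine_km(c.lat, c.lon, cust_lat, cust_lon))
    return best, haversine_km(best.lat, best.lon, cust_lat, cust_lon)


def choose_shipping(distance_km, promised_days, methods):
    """Pick the cheapest method that meets the promise.

    methods: list of (name, km_per_day, cost) tuples (hypothetical figures).
    """
    feasible = [m for m in methods if distance_km / m[1] <= promised_days]
    return min(feasible, key=lambda m: m[2]) if feasible else None


# Hypothetical data for illustration only.
centers = [
    FulfillmentCenter("A", 0.0, 0.0, stock=0),
    FulfillmentCenter("B", 0.0, 1.0, stock=5),
    FulfillmentCenter("C", 0.0, 5.0, stock=5),
]
methods = [("ground", 500.0, 5.0), ("air", 3000.0, 20.0)]
center, distance = choose_center(centers, 0.0, 0.0, quantity=2)
method = choose_shipping(distance, promised_days=1, methods=methods)
```

A production system would weigh many more factors at once (carrier capacity, split shipments, road distance), which is why the real problem is usually framed as an optimization rather than two greedy steps.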

iii) Packing Optimization

Packing optimization, also known as box recommendation, is a daily occurrence in the shipping of items in the retail and eCommerce business. Whenever items of an order, or of multiple orders placed by the same customer, are picked from the shelf and are ready for packing, Walmart's recommender system picks the best-sized box that holds all the ordered items with the least in-box space wasted, within a fixed amount of time. This is the Bin Packing Problem, a classic NP-hard problem familiar to data scientists.
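
To make the box recommendation idea concrete, here is a deliberately simplified sketch. It is a heuristic under stated assumptions, not Walmart's system and not a true 3D packing solver: the function `recommend_box` and the box catalogue are hypothetical. It picks the smallest box whose volume covers the total item volume and in which every item fits on its own. Both checks are necessary but not sufficient for a real packing, which is exactly why the underlying problem is NP-hard.

```python
def recommend_box(items, boxes):
    """Pick the smallest box that passes two necessary fit checks.

    items: list of (length, width, height) tuples.
    boxes: dict mapping box name to (length, width, height).
    Returns the chosen box name, or None if no box passes.
    """
    total_volume = sum(l * w * h for l, w, h in items)

    def fits(box_dims):
        b1, b2, b3 = sorted(box_dims)
        # Check 1: the box must have at least the total item volume.
        if b1 * b2 * b3 < total_volume:
            return False
        # Check 2: each item alone must fit in some axis-aligned orientation.
        return all(
            a <= b1 and b <= b2 and c <= b3
            for a, b, c in (sorted(item) for item in items)
        )

    candidates = [(d[0] * d[1] * d[2], name) for name, d in boxes.items() if fits(d)]
    return min(candidates)[1] if candidates else None


# Hypothetical box catalogue for illustration only.
boxes = {"small": (10, 10, 10), "medium": (20, 20, 10), "large": (40, 30, 20)}
choice = recommend_box([(10, 10, 5), (10, 10, 5)], boxes)
```

Because the two checks can pass for item sets that still cannot be arranged inside the box, a practical system would follow this filter with an actual placement step and a time limit.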

A sales prediction data science case study can help you understand the applications of data science in the real world. The Walmart Sales Forecasting Project uses historical sales data for 45 Walmart stores located in different regions. Each store contains many departments, and you must build a model to project the sales for each department in each store. This data science case study aims to create a predictive model to predict the sales of each department. You can also try your hand at the Inventory Demand Forecasting Data Science Project to develop a machine learning model to forecast inventory demand accurately based on historical sales data.
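
Before training any model for per-store, per-department forecasts, it helps to have a simple baseline to beat. The sketch below is such a baseline, assuming rows of `(store, dept, week, sales)`; that row layout and the function name `moving_average_forecast` are assumptions made for illustration and do not describe the project's actual dataset schema. It forecasts the next week for each store and department pair as the average of the most recent weeks.

```python
from collections import defaultdict


def moving_average_forecast(rows, window=4):
    """Forecast next-week sales per (store, dept) as a trailing average.

    rows: iterable of (store, dept, week, sales) tuples, in any order.
    window: number of most recent weeks to average.
    """
    history = defaultdict(list)
    # Sort by week so each series is in chronological order.
    for store, dept, week, sales in sorted(rows, key=lambda r: r[2]):
        history[(store, dept)].append(sales)
    return {
        key: sum(series[-window:]) / len(series[-window:])
        for key, series in history.items()
    }


# Hypothetical data for illustration only.
rows = [
    (1, 1, 1, 100.0),
    (1, 1, 3, 300.0),
    (1, 1, 2, 200.0),
    (1, 2, 1, 50.0),
]
forecast = moving_average_forecast(rows, window=2)
```

Any predictive model built for the project should be compared against a baseline like this one, since a model that cannot beat a trailing average is not adding value.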

Amazon is an American multinational technology company based in Seattle, USA. It started as an online bookseller, but today it focuses on eCommerce, cloud computing, digital streaming, and artificial intelligence. It hosts an estimated 1,000,000,000 gigabytes of data across more than 1,400,000 servers. Through its constant innovation in data science and big data, Amazon is always ahead in understanding its customers. Here are a few data analytics case study examples at Amazon:

i) Recommendation Systems

Data science models help Amazon understand customers' needs and recommend products to them before they search for them; these models use collaborative filtering. Amazon uses data from 152 million customer purchases to help users decide which products to buy. The company generates 35% of its annual sales through its recommendation-based systems (RBS).

Here is a Recommender System Project to help you build a recommendation system using collaborative filtering.
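
As a rough illustration of collaborative filtering, the sketch below implements a small item-to-item variant based on co-purchase counts. It is an assumption-laden toy, not Amazon's production system: the basket data and the function names `build_cooccurrence` and `recommend` are hypothetical. Items are scored by how often they were bought together with items the customer already owns.

```python
from collections import defaultdict
from itertools import combinations


def build_cooccurrence(baskets):
    """Count how often each pair of items appears in the same customer's purchases.

    baskets: dict mapping customer id to a set of purchased items.
    """
    co = defaultdict(lambda: defaultdict(int))
    for items in baskets.values():
        for a, b in combinations(sorted(items), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(customer, baskets, co, k=3):
    """Return up to k items the customer does not own, ranked by co-purchase score."""
    owned = baskets[customer]
    scores = defaultdict(int)
    for item in owned:
        for other, count in co[item].items():
            if other not in owned:
                scores[other] += count
    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]


# Hypothetical purchase data for illustration only.
baskets = {
    "u1": {"book", "lamp"},
    "u2": {"book", "lamp", "pen"},
    "u3": {"book", "pen"},
    "u4": {"book"},
}
co = build_cooccurrence(baskets)
suggestions = recommend("u1", baskets, co)
```

Raw co-occurrence counts favor popular items, so practical systems usually normalize the scores (for example with cosine similarity) before ranking.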

ii) Retail Price Optimization

Amazon product prices are optimized based on a predictive model that determines the best price so that users do not refuse to buy the product based on price. The model determines the optimal price by considering the customer's likelihood of purchasing the product and how the price will affect the customer's future buying patterns. The price of a product is determined according to your activity on the website, competitors' pricing, product availability, item preferences, order history, expected profit margin, and other factors.

Check Out this Retail Price Optimization Project to build a Dynamic Pricing Model.
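
The trade-off between price and purchase likelihood can be sketched as an expected-profit calculation. Everything specific here is an assumption for illustration: the logistic demand curve, the `sensitivity` parameter, and the function names are hypothetical and are not taken from Amazon. The sketch evaluates a grid of candidate prices and keeps the one with the highest expected profit per visitor.

```python
from math import exp


def purchase_probability(price, reference_price, sensitivity=0.5):
    """Hypothetical logistic demand curve: 50% purchase chance at the reference price."""
    return 1.0 / (1.0 + exp(sensitivity * (price - reference_price)))


def best_price(candidate_prices, unit_cost, reference_price, sensitivity=0.5):
    """Return the candidate price with the highest expected profit per visitor."""

    def expected_profit(price):
        margin = price - unit_cost
        return margin * purchase_probability(price, reference_price, sensitivity)

    return max(candidate_prices, key=expected_profit)


# Hypothetical figures for illustration only.
chosen = best_price([8, 10, 12, 14, 16], unit_cost=6, reference_price=12)
```

In practice the purchase probability would come from a model fitted to the signals the text lists (browsing activity, competitor prices, availability, order history) rather than from a fixed curve.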

iii) Fraud Detection

Being a significant eCommerce business, Amazon remains at high risk of retail fraud. As a preemptive measure, the company collects historical and real-time data for every order. It uses Machine learning algorithms to find transactions with a higher probability of being fraudulent. This proactive measure has helped the company restrict clients with an excessive number of returns of products.

You can look at this Credit Card Fraud Detection Project to implement a fraud detection model to classify fraudulent credit card transactions.
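
The text mentions restricting clients with an excessive number of returns. One simple way to surface such accounts is a statistical rule rather than a trained model, shown below as a swapped-in illustration: it flags customers whose return rate lies far above the population mean, measured in standard deviations. The function name `flag_excessive_returners`, the threshold, and the data are hypothetical.

```python
from statistics import mean, pstdev


def flag_excessive_returners(orders, returns, z_threshold=2.0):
    """Flag customers whose return rate is unusually high.

    orders: dict mapping customer id to number of orders.
    returns: dict mapping customer id to number of returned orders.
    Returns a sorted list of flagged customer ids.
    """
    rates = {c: returns.get(c, 0) / n for c, n in orders.items() if n > 0}
    if not rates:
        return []
    mu = mean(rates.values())
    sigma = pstdev(rates.values())
    if sigma == 0:
        return []  # everyone behaves the same, nothing stands out
    return sorted(c for c, r in rates.items() if (r - mu) / sigma > z_threshold)


# Hypothetical data: nine typical customers and one heavy returner.
orders = {f"c{i}": 10 for i in range(10)}
returns = {f"c{i}": 1 for i in range(9)}
returns["c9"] = 9
flagged = flag_excessive_returners(orders, returns)
```

A rule like this only produces candidates for review; a machine learning classifier trained on labeled historical orders, as the text describes, would combine many more signals.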

Let us explore data analytics case study examples in the entertainment industry.

Netflix started as a DVD rental service in 1997 and has since expanded into the streaming business. Headquartered in Los Gatos, California, Netflix is the largest content streaming company in the world. Currently, Netflix has over 208 million paid subscribers worldwide, and with streaming supported on thousands of smart devices, around 3 billion hours are watched on Netflix every month. The secret to this massive growth and popularity of Netflix is its advanced use of data analytics and recommendation systems to provide personalized and relevant content recommendations to its users. Netflix collects data from over 100 billion events every day. Here are a few examples of data analysis case studies applied at Netflix:

i) Personalized Recommendation System

Netflix uses over 1300 recommendation clusters based on consumer viewing preferences to provide a personalized experience. The data that Netflix collects from its users includes viewing time, platform searches for keywords, and metadata related to content abandonment, such as content pause time, rewinds, and rewatches. Using this data, Netflix can predict what a viewer is likely to watch and give a personalized watchlist to a user. Some of the algorithms used by the Netflix recommendation system are Personalized Video Ranking, the Trending Now ranker, and the Continue Watching ranker.

ii) Content Development using Data Analytics

Netflix uses data science to analyze the behavior and patterns of its users to recognize themes and categories that the masses prefer to watch. This data is used to produce shows like The Umbrella Academy, Orange Is the New Black, and The Queen's Gambit. These shows may seem like a huge risk, but they are significantly based on data analytics, which assured Netflix that they would succeed with its audience. Data analytics is helping Netflix come up with content that its viewers want to watch even before they know they want to watch it.

iii) Marketing Analytics for Campaigns

Netflix uses data analytics to find the right time to launch shows and ad campaigns to have maximum impact on the target audience. Marketing analytics helps come up with different trailers and thumbnails for different groups of viewers. For example, the House of Cards Season 5 trailer with a giant American flag was launched during the American presidential elections, as it would resonate well with the audience.

Here is a Customer Segmentation Project using association rule mining to understand the primary grouping of customers based on various parameters.

In a world where purchasing music is a thing of the past and streaming music is the current trend, Spotify has emerged as one of the most popular streaming platforms. With 320 million monthly users, around 4 billion playlists, and approximately 2 million podcasts, Spotify leads the pack among well-known streaming platforms like Apple Music, Wynk, Songza, and Amazon Music. The success of Spotify has mainly depended on data analytics. By analyzing massive volumes of listener data, Spotify provides real-time and personalized services to its listeners. Most of Spotify's revenue comes from paid premium subscriptions. Here are some examples of data analytics case studies used by Spotify to provide enhanced services to its listeners:

i) Personalization of Content using Recommendation Systems

Spotify uses BaRT (Bandits for Recommendations as Treatments) to generate music recommendations for its listeners in real time. BaRT ignores any song a user listens to for less than 30 seconds. The model is retrained every day to provide updated recommendations. A patent granted to Spotify covers an AI application that identifies a user's musical tastes based on audio signals, gender, age, and accent to make better music recommendations.
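
The 30-second rule mentioned above is easy to picture as a preprocessing filter applied to listening logs before any model sees them. The sketch below assumes log rows of `(user, track, seconds_played)`; that layout and the function names are hypothetical and do not describe Spotify's internal data format.

```python
from collections import Counter

MIN_LISTEN_SECONDS = 30  # threshold quoted in the text


def positive_signals(listens):
    """Keep only plays of at least 30 seconds.

    listens: iterable of (user, track, seconds_played) tuples.
    Returns a list of (user, track) pairs treated as positive feedback.
    """
    return [(user, track) for user, track, seconds in listens if seconds >= MIN_LISTEN_SECONDS]


def play_counts(listens):
    """Count qualifying plays per (user, track) pair."""
    return Counter(positive_signals(listens))


# Hypothetical listening log for illustration only.
log = [
    ("ana", "song_a", 12),
    ("ana", "song_b", 215),
    ("ana", "song_b", 45),
    ("ben", "song_a", 30),
]
counts = play_counts(log)
```

Filtering short plays this way keeps accidental clicks and quick skips from being read as signs that the listener liked a track.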

Spotify creates daily playlists for its listeners called 'Daily Mixes,' based on their taste profiles. These contain songs the user has added to their playlists or songs by artists that the user has included in their playlists. They also include new artists and songs that the user might be unfamiliar with but that might improve the playlist. Similar to these are the weekly 'Release Radar' playlists, which contain newly released songs by artists that the listener follows or has liked before.

ii) Targeted Marketing through Customer Segmentation

Beyond enhancing personalized song recommendations, Spotify uses its massive dataset of user data for targeted ad campaigns and personalized service recommendations for its users. Spotify uses ML models to analyze listeners' behavior and group them based on music preferences, age, gender, ethnicity, etc. These insights help it create ad campaigns for a specific target audience. One of its well-known ad campaigns was the meme-inspired ads for potential target customers, which was a huge success globally.

iii) CNNs for Classification of Songs and Audio Tracks

Spotify builds audio models to evaluate songs and tracks, which helps develop better playlists and recommendations for its users. These models allow Spotify to filter new tracks based on their lyrics and rhythms and recommend them to users who like similar tracks (collaborative filtering). Spotify also uses NLP (natural language processing) to scan articles and blogs and analyze the words used to describe songs and artists. These analytical insights can help group and identify similar artists and songs and leverage them to build playlists.

Here is a Music Recommender System Project for you to start learning. We have listed another music recommendations dataset for you to use for your projects: Dataset1. You can use this dataset of Spotify metadata to classify songs based on artists, mood, and liveliness. Plot histograms and heatmaps to get a better understanding of the dataset. Use classification algorithms like logistic regression and SVM, together with dimensionality reduction techniques like principal component analysis, to generate valuable insights from the dataset.

Below you will find case studies for data analytics in the travel and tourism industry.

Airbnb was born in 2007 in San Francisco and has since grown to 4 million hosts and 5.6 million listings worldwide, welcoming more than 1 billion guest arrivals in almost every country across the globe. Airbnb is active in every country on the planet except for Iran, Sudan, Syria, and North Korea; that is around 97.95% of the world. Treating data as the voice of its customers, Airbnb uses the large volume of customer reviews and host inputs to understand trends across communities and rate user experiences, and it uses these analytics to make informed decisions and build a better business model. The data scientists at Airbnb are developing exciting new solutions to boost the business and find the best mapping between its customers and hosts. Airbnb data servers serve approximately 10 million requests a day and process around one million search queries. By matching guests with suitable hosts, Airbnb offers personalized services for a better customer experience.

i) Recommendation Systems and Search Ranking Algorithms

Airbnb helps people find 'local experiences' in a place with the help of search algorithms that make searches and listings precise. Airbnb uses a 'listing quality score' to find homes based on the proximity to the searched location and uses previous guest reviews. Airbnb uses deep neural networks to build models that take the guest's earlier stays into account and area information to find a perfect match. The search algorithms are optimized based on guest and host preferences, rankings, pricing, and availability to understand users’ needs and provide the best match possible.

ii) Natural Language Processing for Review Analysis

Airbnb characterizes data as the voice of its customers. Customer and host reviews give direct insight into the experience, and star ratings alone are not enough to understand it. Hence Airbnb uses natural language processing to understand reviews and the sentiments behind them. The NLP models are developed using convolutional neural networks.
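As a much simpler stand-in for the convolutional models mentioned above, the sketch below scores a review by counting words from two small hand-written word lists. The word lists and the `label` function are hypothetical, and a lexicon count like this only illustrates the input and output of review sentiment analysis, not how a trained model works.

```python
import re

# Hypothetical mini-lexicons; a real system would learn these signals from data.
POSITIVE = {"clean", "great", "friendly", "comfortable", "lovely"}
NEGATIVE = {"dirty", "noisy", "rude", "broken", "late"}


def sentiment_score(review: str) -> int:
    """Count positive words minus negative words in a review."""
    words = re.findall(r"[a-z']+", review.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def label(review: str) -> str:
    """Map the raw score to a coarse sentiment label."""
    score = sentiment_score(review)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For instance, `label("Great host, clean and comfortable room")` returns `"positive"` because three lexicon words match and none are negative.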

Practice this Sentiment Analysis Project for analyzing product reviews to understand the basic concepts of natural language processing.

iii) Smart Pricing using Predictive Analytics

The Airbnb hosts community uses the service as a supplementary income. The vacation homes and guest houses rented to customers provide for rising local community earnings as Airbnb guests stay 2.4 times longer and spend approximately 2.3 times the money compared to a hotel guest. The profits are a significant positive impact on the local neighborhood community. Airbnb uses predictive analytics to predict the prices of the listings and help the hosts set a competitive and optimal price. The overall profitability of the Airbnb host depends on factors like the time invested by the host and responsiveness to changing demands for different seasons. The factors that impact the real-time smart pricing are the location of the listing, proximity to transport options, season, and amenities available in the neighborhood of the listing.
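The pricing idea can be sketched with a one-feature least-squares fit. This is only an illustration under stated assumptions: the data points are made up, distance to transport is the only feature, and `season_factor` is a hypothetical multiplier standing in for seasonal demand. It is not Airbnb's Smart Pricing model.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def suggest_price(distance_km, slope, intercept, season_factor=1.0):
    """Predict a nightly price, then scale it by a seasonal multiplier."""
    return round((intercept + slope * distance_km) * season_factor, 2)


# Hypothetical training data: distance to the nearest station (km) vs. nightly price.
distances = [1.0, 2.0, 3.0, 4.0]
prices = [190.0, 180.0, 170.0, 160.0]
slope, intercept = fit_line(distances, prices)
```

With this toy data the fitted line drops 10 per kilometre from a base of 200, so a listing 5 km from transport is priced at 150 off season and 180 with `season_factor=1.2`.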

Here is a Price Prediction Project to help you understand the concept of predictive analysis which is widely common in case studies for data analytics.

6) Uber

Uber is the biggest global taxi service provider. As of December 2018, Uber had 91 million monthly active consumers and 3.8 million drivers, and completed 14 million trips each day. Uber uses data analytics and big data-driven technologies to optimize its business processes and provide enhanced customer service. The data science team at Uber constantly explores new technologies to provide better service. Machine learning and data analytics help Uber make data-driven decisions that enable benefits like ride-sharing, dynamic price surges, better customer support, and demand forecasting. Here are some of the real-world data science projects used by Uber:

i) Dynamic Pricing for Price Surges and Demand Forecasting

Uber prices change at peak hours based on demand. Uber uses surge pricing to encourage more drivers to get on the road and meet passenger demand. When prices increase, both the driver and the passenger are informed about the surge. Uber uses a patented predictive model for surge pricing called 'Geosurge'. It is based on the demand for rides and the location.
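The details of Geosurge are not public here, so the following is only a toy sketch of demand-based pricing per location. It assumes a multiplier equal to the ratio of open ride requests to available drivers in a zone, clamped between 1.0 and a hypothetical cap; the function and zone names are invented for illustration.

```python
def surge_multiplier(open_requests, available_drivers, cap=3.0):
    """Toy surge rule: price scales with the demand/supply ratio in one zone."""
    if open_requests == 0:
        return 1.0  # no demand, no surge
    if available_drivers == 0:
        return cap  # demand but no supply: charge the maximum multiplier
    ratio = open_requests / available_drivers
    return round(min(max(ratio, 1.0), cap), 2)


def zone_multipliers(zones):
    """Map each zone name to its multiplier, given (requests, drivers) pairs."""
    return {zone: surge_multiplier(r, d) for zone, (r, d) in zones.items()}
```

Calling `zone_multipliers({"airport": (30, 20), "suburb": (5, 25)})` gives a 1.5x multiplier at the airport and no surge in the suburb. A real system would forecast demand ahead of time rather than react to current counts.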

ii) One-Click Chat

Uber has developed a machine learning and natural language processing solution called one-click chat, or OCC, for coordination between drivers and users. This feature anticipates responses to commonly asked questions, making it easy for drivers to respond to customer messages. Drivers can reply with the click of just one button. One-click chat is developed on Uber's machine learning platform Michelangelo to perform NLP on rider chat messages and generate appropriate responses to them.

iii) Customer Retention

Failure to meet the customer demand for cabs could lead to users opting for other services. Uber uses machine learning models to bridge this demand-supply gap. By using prediction models to predict the demand in any location, Uber retains its customers. Uber also uses a tier-based reward system, which segments customers into different levels based on usage. The higher the level a user achieves, the better the perks. Uber also provides personalized destination suggestions based on the history of the user and their frequently traveled destinations.
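The two retention ideas above, usage-based tiers and destination suggestions from ride history, can be sketched in a few lines. The tier names and trip thresholds below are hypothetical and do not reflect Uber's actual reward program.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical tier boundaries: trips needed to reach the next tier.
THRESHOLDS = [10, 50]
TIER_NAMES = ["bronze", "silver", "gold"]


def tier(trip_count):
    """Segment a rider into a tier based on how many trips they have taken."""
    return TIER_NAMES[bisect_right(THRESHOLDS, trip_count)]


def suggest_destinations(history, k=2):
    """Suggest the k most frequently visited destinations from ride history."""
    return [place for place, _ in Counter(history).most_common(k)]
```

For example, a rider with 49 trips falls in the `"silver"` tier and moves to `"gold"` at 50, while a history dominated by office and home rides yields those two places as suggestions.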

You can take a look at this Python Chatbot Project and build a simple chatbot application to better understand the techniques used for natural language processing. You can also practice building a demand forecasting model with this project using time series analysis. You can look at this project, which uses time series forecasting and clustering on a dataset containing geospatial data, for forecasting customer demand for Ola rides.

7) LinkedIn

LinkedIn is the largest professional social networking site with nearly 800 million members in more than 200 countries worldwide. Almost 40% of the users access LinkedIn daily, clocking around 1 billion interactions per month. The data science team at LinkedIn works with this massive pool of data to generate insights to build strategies, apply algorithms and statistical inferences to optimize engineering solutions, and help the company achieve its goals. Here are some of the real world data science projects at LinkedIn:

i) LinkedIn Recruiter Implement Search Algorithms and Recommendation Systems

LinkedIn Recruiter helps recruiters build and manage a talent pool to optimize the chances of hiring candidates successfully. This sophisticated product works on search and recommendation engines. LinkedIn Recruiter handles complex queries and filters on a large, constantly growing dataset. The results delivered have to be relevant and specific. The initial search model was based on linear regression but was eventually upgraded to gradient boosted decision trees to capture non-linear correlations in the dataset. In addition to these models, LinkedIn Recruiter also uses a Generalized Linear Mixed model (GLMix) to improve the results of prediction problems and give personalized results.

ii) Recommendation Systems Personalized for News Feed

The LinkedIn news feed is the heart and soul of the professional community. A member's newsfeed is a place to discover conversations among connections, career news, posts, suggestions, photos, and videos. Every time a member visits LinkedIn, machine learning algorithms identify the best exchanges to be displayed on the feed by sorting through posts and ranking the most relevant results on top. The algorithms help LinkedIn understand member preferences and help provide personalized news feeds. The algorithms used include logistic regression, gradient boosted decision trees and neural networks for recommendation systems.

iii) CNNs to Detect Inappropriate Content

Providing a safe, professional space where people can trust and express themselves has been a critical goal at LinkedIn. LinkedIn has heavily invested in building solutions to detect fake accounts and abusive behavior on its platform. Any form of spam, harassment, or inappropriate content is immediately flagged and taken down. These can range from profanity to advertisements for illegal services. LinkedIn uses a machine learning model based on convolutional neural networks. This classifier trains on a dataset containing accounts labeled as either "inappropriate" or "appropriate." The inappropriate set consists of accounts whose content contains "blocklisted" phrases or words, plus a small portion of manually reviewed accounts reported by the user community.
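The labeling step described above, where content containing blocklisted phrases is marked as inappropriate to build a training set, might look roughly like the sketch below. The phrases in `BLOCKLIST` and the `weak_label` helper are hypothetical, and this covers only the creation of training labels, not the convolutional classifier trained on them.

```python
import re

# Hypothetical blocklist; a real list would be curated by a trust and safety team.
BLOCKLIST = {"free money", "click here"}


def weak_label(text, blocklist=BLOCKLIST):
    """Label text as 'inappropriate' if it contains any blocklisted phrase."""
    # Lowercase and strip punctuation so "Click HERE!!!" matches "click here".
    normalized = " ".join(re.findall(r"[a-z0-9']+", text.lower()))
    padded = f" {normalized} "  # pad so phrases only match on word boundaries
    if any(f" {phrase} " in padded for phrase in blocklist):
        return "inappropriate"
    return "appropriate"
```

Labels produced this way are noisy, which is one reason to combine them with manually reviewed examples, as the paragraph above notes.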

Here is a Text Classification Project to help you understand NLP basics for text classification. You can find a news recommendation system dataset to help you build a personalized news recommender system. You can also use this dataset to build a classifier using logistic regression, Naive Bayes, or Neural networks to classify toxic comments.

8) Pfizer

Pfizer is a multinational pharmaceutical company headquartered in New York, USA. It is one of the largest pharmaceutical companies globally, known for developing a wide range of medicines and vaccines in disciplines like immunology, oncology, cardiology, and neurology. Pfizer became a household name in 2020 when its COVID-19 vaccine was the first to receive emergency use authorization from the FDA. In early November 2021, the CDC recommended the Pfizer vaccine for kids aged 5 to 11. Pfizer has been using machine learning and artificial intelligence to develop drugs and streamline trials, which played a massive role in developing and deploying the COVID-19 vaccine. Here are a few data analytics case studies by Pfizer:

i) Identifying Patients for Clinical Trials

Artificial intelligence and machine learning are used to streamline and optimize clinical trials to increase their efficiency. Natural language processing and exploratory data analysis of patient records can help identify suitable patients for clinical trials, including patients with distinct symptoms. They can also help examine interactions of potential trial members' specific biomarkers and predict drug interactions and side effects, which can help avoid complications. Pfizer's AI implementation helped rapidly identify signals within the noise of millions of data points across their 44,000-candidate COVID-19 clinical trial.

ii) Supply Chain and Manufacturing

Data science and machine learning techniques help pharmaceutical companies better forecast demand for vaccines and drugs and distribute them efficiently. Machine learning models can help identify efficient supply systems by automating and optimizing the production steps. These will help supply drugs customized to small pools of patients in specific gene pools. Pfizer uses Machine learning to predict the maintenance cost of equipment used. Predictive maintenance using AI is the next big step for Pharmaceutical companies to reduce costs.

iii) Drug Development

Computer simulations of proteins, and tests of their interactions, and yield analysis help researchers develop and test drugs more efficiently. In 2016 Watson Health and Pfizer announced a collaboration to utilize IBM Watson for Drug Discovery to help accelerate Pfizer's research in immuno-oncology, an approach to cancer treatment that uses the body's immune system to help fight cancer. Deep learning models have been used recently for bioactivity and synthesis prediction for drugs and vaccines in addition to molecular design. Deep learning has been a revolutionary technique for drug discovery as it factors everything from new applications of medications to possible toxic reactions which can save millions in drug trials.

You can create a machine learning model to predict molecular activity to help design medicine using this dataset. You may build a CNN or a deep neural network for this data analyst case study project.

9) Shell Data Analyst Case Study Project

Shell is a global group of energy and petrochemical companies with over 80,000 employees in around 70 countries. Shell uses advanced technologies and innovations to help build a sustainable energy future. As the world needs more and cleaner energy solutions, Shell is going through a significant transition to become a clean energy company by 2050. This requires substantial changes in the way energy is used. Digital technologies, including AI and machine learning, play an essential role in this transformation. These include efficient exploration and energy production, more reliable manufacturing, more nimble trading, and a personalized customer experience. Using AI in various phases of the organization will help achieve this goal and stay competitive in the market. Here are a few data analytics case studies in the petrochemical industry:

i) Precision Drilling

Shell is involved in the entire oil and gas supply chain, ranging from mining hydrocarbons to refining the fuel to retailing it to customers. Recently Shell has adopted reinforcement learning to control the drilling equipment used in mining. Reinforcement learning works on a reward-based system based on the outcome of the AI model. The algorithm is designed to guide the drills as they move through the subsurface, based on the historical data from drilling records. It includes information such as the size of drill bits, temperatures, pressures, and knowledge of the seismic activity. This model helps the human operator understand the environment better, leading to better and faster results with minor damage to the machinery used.

ii) Efficient Charging Terminals

Due to climate changes, governments have encouraged people to switch to electric vehicles to reduce carbon dioxide emissions. However, the lack of public charging terminals has deterred people from switching to electric cars. Shell uses AI to monitor and predict the demand for terminals to provide efficient supply. Multiple vehicles charging from a single terminal may create a considerable grid load, and predictions on demand can help make this process more efficient.

iii) Monitoring Service and Charging Stations

Another Shell initiative, trialed in Thailand and Singapore, is the use of computer vision cameras that watch out for potentially hazardous activities, like lighting cigarettes in the vicinity of the pumps while refueling. The model is built to process the content of the captured images and label and classify it. The algorithm can then alert the staff and hence reduce the risk of fires. The model could be trained further to detect rash driving or thefts in the future.

Here is a project to help you understand multiclass image classification. You can use the Hourly Energy Consumption Dataset to build an energy consumption prediction model. You can use time series with XGBoost to develop your model.

10) Zomato Case Study on Data Analytics

Zomato was founded in 2010 and is currently one of the most well-known food tech companies. Zomato offers services like restaurant discovery, home delivery, online table reservation, online payments for dining, etc. Zomato partners with restaurants to provide tools to acquire more customers while also providing delivery services and easy procurement of ingredients and kitchen supplies. Currently, Zomato has over 2 lakh restaurant partners and around 1 lakh delivery partners. Zomato has closed over ten crore delivery orders to date. Zomato uses ML and AI to boost its business growth, drawing on the massive amount of data collected over the years from food orders and user consumption patterns. Here are a few examples of data analyst case study projects developed by the data scientists at Zomato:

i) Personalized Recommendation System for Homepage

Zomato uses data analytics to create personalized homepages for its users. Zomato uses data science to provide order personalization, like giving recommendations to the customers for specific cuisines, locations, prices, brands, etc. Restaurant recommendations are made based on a customer's past purchases, browsing history, and what other similar customers in the vicinity are ordering. This personalized recommendation system has led to a 15% improvement in order conversions and click-through rates for Zomato.

You can use the Restaurant Recommendation Dataset to build a restaurant recommendation system to predict what restaurants customers are most likely to order from, given the customer location, restaurant information, and customer order history.

ii) Analyzing Customer Sentiment

Zomato uses natural language processing and machine learning to understand customer sentiments using social media posts and customer reviews. These help the company gauge the inclination of its customer base towards the brand. Deep learning models analyze the sentiments of various brand mentions on social networking sites like Twitter, Instagram, LinkedIn, and Facebook. These analytics give insights to the company, which help build the brand and understand the target audience.

iii) Predicting Food Preparation Time (FPT)

Food preparation time is an essential variable in the estimated delivery time of the order placed by the customer using Zomato. The food preparation time depends on numerous factors like the number of dishes ordered, time of the day, footfall in the restaurant, day of the week, etc. Accurate prediction of the food preparation time can help make a better prediction of the estimated delivery time, which makes delivery partners less likely to breach it. Zomato uses a bidirectional LSTM-based deep learning model that considers all these features and provides the food preparation time for each order in real time.

Data scientists are companies' secret weapons when analyzing customer sentiments and behavior and leveraging them to drive conversion, loyalty, and profits. These 10 data science case study projects with examples and solutions show you how various organizations use data science technologies to succeed and be at the top of their field! To summarize, data science has not only accelerated the performance of companies but has also made it possible to manage and sustain their performance with ease.

FAQs on Data Analysis Case Studies

A case study in data science is an in-depth analysis of a real-world problem using data-driven approaches. It involves collecting, cleaning, and analyzing data to extract insights and solve challenges, offering practical insights into how data science techniques can address complex issues across various industries.

To create a data science case study, identify a relevant problem, define objectives, and gather suitable data. Clean and preprocess data, perform exploratory data analysis, and apply appropriate algorithms for analysis. Summarize findings, visualize results, and provide actionable recommendations, showcasing the problem-solving potential of data science techniques.

About the Author

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,

© 2024 Iconiq Inc.

Qualitative case study data analysis: an example from practice

Affiliation.

- 1 School of Nursing and Midwifery, National University of Ireland, Galway, Republic of Ireland.

- PMID: 25976531

- DOI: 10.7748/nr.22.5.8.e1307

Aim: To illustrate an approach to data analysis in qualitative case study methodology.

Background: There is often little detail in case study research about how data were analysed. However, it is important that comprehensive analysis procedures are used because there are often large sets of data from multiple sources of evidence. Furthermore, the ability to describe in detail how the analysis was conducted ensures rigour in reporting qualitative research.

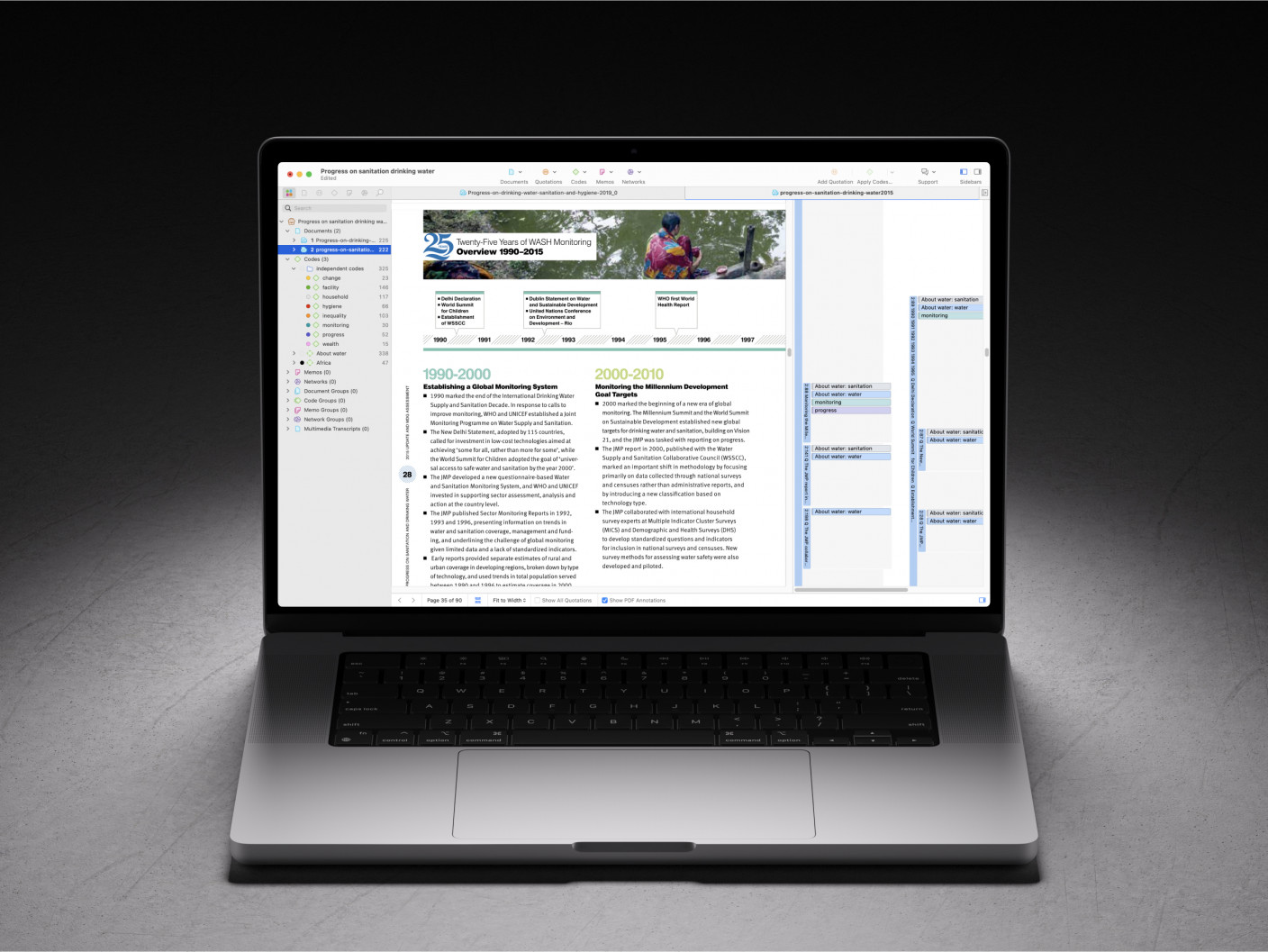

Data sources: The research example used is a multiple case study that explored the role of the clinical skills laboratory in preparing students for the real world of practice. Data analysis was conducted using a framework guided by the four stages of analysis outlined by Morse (1994): comprehending, synthesising, theorising and recontextualising. The specific strategies for analysis in these stages centred on the work of Miles and Huberman (1994), which has been successfully used in case study research. The data were managed using NVivo software.

Review methods: Literature examining qualitative data analysis was reviewed and strategies illustrated by the case study example provided.

Discussion: Each stage of the analysis framework is described with illustration from the research example for the purpose of highlighting the benefits of a systematic approach to handling large data sets from multiple sources.

Conclusion: By providing an example of how each stage of the analysis was conducted, it is hoped that researchers will be able to consider the benefits of such an approach to their own case study analysis.

Implications for research/practice: This paper illustrates specific strategies that can be employed when conducting data analysis in case study research and other qualitative research designs.

Keywords: Case study data analysis; case study research methodology; clinical skills research; qualitative case study methodology; qualitative data analysis; qualitative research.

- Case-Control Studies*

- Data Interpretation, Statistical*

- Nursing Research / methods*

- Qualitative Research*

- Research Design

Case Study Research in Software Engineering: Guidelines and Examples by Per Runeson, Martin Höst, Austen Rainer, Björn Regnell

DATA ANALYSIS AND INTERPRETATION

5.1 INTRODUCTION

Once data has been collected, the focus shifts to analysis of the data. In this phase, data is used to understand what actually has happened in the studied case, and the researcher works through the details of the case and seeks patterns in the data. Inevitably, some analysis also goes on in the data collection phase, where the data is studied, for example when data from an interview is transcribed. The understandings from the earlier phases are of course also valid and important, but this chapter focuses on the separate phase that starts after the data has been collected.

Data analysis is conducted differently for quantitative and qualitative data. Sections 5.2–5.5 describe how to analyze qualitative data and how to assess the validity of this type of analysis. In Section 5.6, a short introduction to quantitative analysis methods is given. Since quantitative analysis is covered extensively in textbooks on statistical analysis, and case study research to a large extent relies on qualitative data, this section is kept short.

5.2 ANALYSIS OF DATA IN FLEXIBLE RESEARCH

5.2.1 Introduction

As case study research is a flexible research method, qualitative data analysis methods are commonly used [176]. The basic objective of the analysis is, as in any other analysis, to derive conclusions from the data, keeping a clear chain of evidence. The chain of evidence means that a reader ...

Case Study | Definition, Examples & Methods

Published on 5 May 2022 by Shona McCombes. Revised on 30 January 2023.

A case study is a detailed study of a specific subject, such as a person, group, place, event, organisation, or phenomenon. Case studies are commonly used in social, educational, clinical, and business research.

A case study research design usually involves qualitative methods, but quantitative methods are sometimes also used. Case studies are good for describing, comparing, evaluating, and understanding different aspects of a research problem.

Table of contents

- When to do a case study

- Step 1: Select a case

- Step 2: Build a theoretical framework

- Step 3: Collect your data

- Step 4: Describe and analyse the case

A case study is an appropriate research design when you want to gain concrete, contextual, in-depth knowledge about a specific real-world subject. It allows you to explore the key characteristics, meanings, and implications of the case.

Case studies are often a good choice in a thesis or dissertation. They keep your project focused and manageable when you don’t have the time or resources to do large-scale research.

You might use just one complex case study where you explore a single subject in depth, or conduct multiple case studies to compare and illuminate different aspects of your research problem.

Once you have developed your problem statement and research questions, you should be ready to choose the specific case that you want to focus on. A good case study should have the potential to:

- Provide new or unexpected insights into the subject

- Challenge or complicate existing assumptions and theories

- Propose practical courses of action to resolve a problem

- Open up new directions for future research

Unlike quantitative or experimental research, a strong case study does not require a random or representative sample. In fact, case studies often deliberately focus on unusual, neglected, or outlying cases which may shed new light on the research problem.

If you find yourself aiming to simultaneously investigate and solve an issue, consider conducting action research. As its name suggests, action research conducts research and takes action at the same time, and is highly iterative and flexible.

However, you can also choose a more common or representative case to exemplify a particular category, experience, or phenomenon.

While case studies focus more on concrete details than general theories, they should usually have some connection with theory in the field. This way the case study is not just an isolated description, but is integrated into existing knowledge about the topic. It might aim to:

- Exemplify a theory by showing how it explains the case under investigation

- Expand on a theory by uncovering new concepts and ideas that need to be incorporated

- Challenge a theory by exploring an outlier case that doesn’t fit with established assumptions

To ensure that your analysis of the case has a solid academic grounding, you should conduct a literature review of sources related to the topic and develop a theoretical framework. This means identifying key concepts and theories to guide your analysis and interpretation.

There are many different research methods you can use to collect data on your subject. Case studies tend to focus on qualitative data using methods such as interviews, observations, and analysis of primary and secondary sources (e.g., newspaper articles, photographs, official records). Sometimes a case study will also collect quantitative data.

The aim is to gain as thorough an understanding as possible of the case and its context.

In writing up the case study, you need to bring together all the relevant aspects to give as complete a picture as possible of the subject.

How you report your findings depends on the type of research you are doing. Some case studies are structured like a standard scientific paper or thesis, with separate sections or chapters for the methods, results, and discussion.

Others are written in a more narrative style, aiming to explore the case from various angles and analyse its meanings and implications (for example, by using textual analysis or discourse analysis).

In all cases, though, make sure to give contextual details about the case, connect it back to the literature and theory, and discuss how it fits into wider patterns or debates.

McCombes, S. (2023, January 30). Case Study | Definition, Examples & Methods. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/case-studies/

The Convergence Blog

The Convergence - an online community space that's dedicated to empowering operators in the data industry by providing news and education about evergreen strategies, late-breaking data & AI developments, and free or low-cost upskilling resources that you need to thrive as a leader in the data & AI space.

Data Analysis Case Study: Learn From Humana’s Automated Data Analysis Project

Lillian Pierson, P.E.

Got data? Great! Looking for that perfect data analysis case study to help you get started using it? You’re in the right place.

If you’ve ever struggled to decide what to do next with your data projects, to actually find meaning in the data, or even to decide what kind of data to collect, then KEEP READING…

Deep down, you know what needs to happen. You need to initiate and execute a data strategy that really moves the needle for your organization. One that produces seriously awesome business results.

But how? You’re in the right place to find out.

As a data strategist who has worked with 10 percent of Fortune 100 companies, today I’m sharing with you a case study that demonstrates just how real businesses are making real wins with data analysis.

In the post below, we’ll look at:

- A shining data success story;

- What went on ‘under-the-hood’ to support that successful data project; and

- The exact data technologies used by the vendor, to take this project from pure strategy to pure success

If you prefer to watch this information rather than read it, it’s captured in the video below:

Here’s the url too: https://youtu.be/xMwZObIqvLQ

3 Action Items You Need To Take

To actually use the data analysis case study you’re about to get – you need to take 3 main steps. Those are:

- Reflect upon your organization as it is today (I left you some prompts below – to help you get started)

- Review winning data case collections (starting with the one I’m sharing here) and identify 5 that seem the most promising for your organization given its current set-up

- Assess your organization AND those 5 winning case collections. Based on that assessment, select the “QUICK WIN” data use case that offers your organization the most bang for its buck

Step 1: Reflect Upon Your Organization

Whenever you evaluate data case collections to decide if they’re a good fit for your organization, the first thing you need to do is organize your thoughts with respect to your organization as it is today.

Before moving into the data analysis case study, STOP and ANSWER THE FOLLOWING QUESTIONS – just to remind yourself:

- What is the business vision for our organization?

- What industries do we primarily support?

- What data technologies do we already have up and running, that we could use to generate even more value?

- What team members do we have to support a new data project? And what are their data skillsets like?

- What type of data are we mostly looking to generate value from? Structured? Semi-Structured? Un-structured? Real-time data? Huge data sets? What are our data resources like?

Jot down some notes while you’re here. Then keep them in mind as you read on to find out how one company, Humana, used its data to achieve a 28 percent increase in customer satisfaction, along with a 63 percent increase in employee engagement! (That’s such a seriously impressive outcome, right?!)

Step 2: Review Data Case Studies

Here we are, already at step 2. It’s time for you to start reviewing data analysis case studies (starting with the one I’m sharing below). Identify 5 that seem the most promising for your organization given its current set-up.

Humana’s Automated Data Analysis Case Study

The key thing to note here is that the approach to creating a successful data program varies from industry to industry.

Let’s start with one to demonstrate the kind of value you can glean from these kinds of success stories.

Humana has provided health insurance to Americans for over 50 years. It is a service company focused on fulfilling the needs of its customers. A great deal of Humana’s success as a company rides on customer satisfaction, and the frontline of that battle for customers’ hearts and minds is Humana’s customer service center.

Call centers are hard to get right. A lot of emotions can arise during a customer service call, especially one relating to health and health insurance. Sometimes people are frustrated. At times, they’re upset. Also, there are times the customer service representative becomes aggravated, and the overall tone and progression of the phone call goes downhill. This is of course very bad for customer satisfaction.

Humana wanted to find a way to use artificial intelligence to monitor their phone calls and help their agents do a better job connecting with their customers in order to improve customer satisfaction (and thus, customer retention rates & profits per customer).

In light of their business need, Humana worked with a company called Cogito, which specializes in voice analytics technology.

Cogito offers a piece of AI technology called Cogito Dialogue. It’s been trained to identify certain conversational cues as a way of helping call center representatives and supervisors stay actively engaged in a call with a customer.

The AI listens to cues like the customer’s voice pitch.

If it’s rising, or if the call representative and the customer talk over each other, then the dialogue tool will send out electronic alerts to the agent during the call.

Humana fed the dialogue tool customer service data from 10,000 calls and allowed it to analyze cues such as keywords, interruptions, and pauses. These cues were then linked with specific outcomes. For example, if the representative is receiving a particular type of cue, they are likely to get a specific customer satisfaction result.
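To make the idea of linking cues to outcomes a bit more concrete, here is a minimal Python sketch. It is not Cogito’s or Humana’s actual method. The `CallRecord` fields, the helper `mean_satisfaction_by_cue`, the threshold, and the four toy records are all hypothetical and invented purely for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    """Hypothetical per-call summary; the field names are assumptions."""
    interruptions: int   # times the two speakers talked over each other
    long_pauses: int     # silences longer than some chosen length
    satisfaction: int    # post-call survey score, 1 (low) to 5 (high)


def mean_satisfaction_by_cue(calls, cue, threshold):
    """Compare average satisfaction for calls at/above vs. below a cue threshold."""
    high = [c.satisfaction for c in calls if getattr(c, cue) >= threshold]
    low = [c.satisfaction for c in calls if getattr(c, cue) < threshold]
    return {
        "high_cue": mean(high) if high else None,
        "low_cue": mean(low) if low else None,
    }


# Toy records, invented for illustration only (not Humana data).
calls = [
    CallRecord(interruptions=0, long_pauses=1, satisfaction=5),
    CallRecord(interruptions=1, long_pauses=0, satisfaction=4),
    CallRecord(interruptions=4, long_pauses=2, satisfaction=2),
    CallRecord(interruptions=5, long_pauses=3, satisfaction=1),
]

result = mean_satisfaction_by_cue(calls, cue="interruptions", threshold=3)
print(result)
```

A real system would work with far more calls and far richer features, but the basic move is the same: group calls by a cue and compare the outcomes of each group.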

The Outcome

Customers were happier, and customer service representatives were more engaged.

This automated solution for data analysis has now been deployed in 200 Humana call centers and the company plans to roll it out to 100 percent of its centers in the future.

The initiative was so successful, Humana has been able to focus on next steps in its data program. The company now plans to begin predicting the type of calls that are likely to go unresolved, so they can send those calls over to management before they become frustrating to the customer and customer service representative alike.

What does this mean for you and your business?

Well, if you’re looking for new ways to generate value by improving the quantity and quality of the decision support that you’re providing to your customer service personnel, then this may be a perfect example of how you can do so.

Humana’s Business Use Cases

Humana’s data analysis case study includes two key business use cases:

- Analyzing customer sentiment; and

- Suggesting actions to customer service representatives.

Analyzing Customer Sentiment

First things first, before you go ahead and collect data, you need to ask yourself who and what is involved in making things happen within the business.

In the case of Humana, the actors were:

- The health insurance system itself

- The customer, and

- The customer service representative

As you can see in the use case diagram above, the relational aspect is pretty simple. You have a customer service representative and a customer. They are both producing audio data, and that audio data is being fed into the system.

Humana focused on collecting the key data points, shown in the image below, from their customer service operations.

By collecting data about speech style, pitch, silence, stress in customers’ voices, length of call, speed of customers’ speech, intonation, articulation, and representatives’ manner of speaking, Humana was able to analyze customer sentiment and introduce techniques for improved customer satisfaction.

Having strategically defined these data points, the Cogito technology was able to generate reports about customer sentiment during the calls.

Suggesting Actions to Customer Service Representatives

The second use case for the Humana data program follows on from the data gathered in the first case.

In Humana’s case, Cogito generated a host of call analyses and reports about key call issues.

In the second business use case, Cogito was able to suggest actions to customer service representatives, in real time, to make use of incoming data and help improve customer satisfaction on the spot.

The technology Humana used provided suggestions via text message to the customer service representative, offering the following types of feedback:

- The tone of voice is too tense

- The speed of speaking is high

- The customer representative and customer are speaking at the same time

These alerts allowed the Humana customer service representatives to alter their approach immediately, improving the quality of the interaction and, subsequently, the customer satisfaction.
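If you’re curious what alerts like these might look like in code, here’s a minimal rule-based sketch in Python. To be clear, this is an assumption-heavy illustration and not how Cogito’s technology works internally. The `CallSnapshot` fields, the thresholds, and the `suggest_actions` function are hypothetical names made up for this example.

```python
from dataclasses import dataclass


@dataclass
class CallSnapshot:
    """Hypothetical features measured over the last few seconds of a call."""
    agent_tension: float        # 0.0 (relaxed) to 1.0 (very tense), assumed scale
    agent_words_per_min: float  # agent speaking rate
    overlap_seconds: float      # time both parties spoke at once


# Illustrative thresholds; real values would need tuning on real calls.
MAX_TENSION = 0.7
MAX_WORDS_PER_MIN = 180.0
MAX_OVERLAP_SECONDS = 2.0


def suggest_actions(snapshot):
    """Return the alert messages that apply to this snapshot."""
    alerts = []
    if snapshot.agent_tension > MAX_TENSION:
        alerts.append("The tone of voice is too tense")
    if snapshot.agent_words_per_min > MAX_WORDS_PER_MIN:
        alerts.append("The speed of speaking is high")
    if snapshot.overlap_seconds > MAX_OVERLAP_SECONDS:
        alerts.append("You and the customer are speaking at the same time")
    return alerts


snapshot = CallSnapshot(agent_tension=0.8, agent_words_per_min=150.0, overlap_seconds=3.5)
alerts = suggest_actions(snapshot)
print(alerts)
```

In practice, the hard part is producing reliable features (tension, speaking rate, overlap) from live audio. Once those exist, turning them into short, actionable messages can be as simple as the rules above.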

The preconditions for success in this use case were:

- The call-related data must be collected and stored

- The AI models must be in place to generate analysis on the data points that are recorded during the calls

Evidence of success can subsequently be found in a system that offers real-time suggestions for courses of action that the customer service representative can take to improve customer satisfaction.

Thanks to this data-intensive business use case, Humana was able to increase customer satisfaction, improve customer retention rates, and drive profits per customer.

The Technology That Supports This Data Analysis Case Study

I promised to dip into the tech side of things. This is especially for those of you who are interested in the ins and outs of how projects like this one are actually rolled out.

Here’s a little rundown of the main technologies we discovered when we investigated how Cogito runs in support of its clients like Humana.

- For cloud data management, Cogito uses AWS, specifically the Athena product

- For on-premise big data management, the company uses Apache HDFS, the distributed file system for storing big data

- It uses MapReduce for processing its data

- Cogito also has traditional systems and relational database management systems such as PostgreSQL

- For analytics and data visualization, Cogito makes use of Tableau

- For machine learning, these use cases required people with knowledge of Python, R, and SQL, as well as deep learning (Cogito uses the PyTorch and TensorFlow libraries)

These data science skill sets support the effective computing, deep learning, and natural language processing applications employed by Humana for this use case.

If you’re looking to hire people to help with your own data initiative, then people with those skills listed above, and with experience in these specific technologies, would be a huge help.

Step 3: Select The “Quick Win” Data Use Case

Still there? Great!

It’s time to close the loop.

Remember those notes you took before you reviewed the study? I want you to STOP here and assess. Does this Humana case study seem applicable and promising as a solution, given your organization’s current set-up?

YES ▶ Excellent!

Earmark it and continue exploring other winning data use cases until you’ve identified 5 that seem like great fits for your business’s needs. Evaluate those against your organization’s needs, and select the very best fit to be your “quick win” data use case. Develop your data strategy around that.

NO, Lillian – It’s not applicable. ▶ No problem.

Discard the information and continue exploring the winning data use cases we’ve categorized for you according to business function and industry. Save time by dialing down into the business function you know your business really needs help with now. Identify 5 winning data use cases that seem like great fits for your business’s needs. Evaluate those against your organization’s needs, and select the very best fit to be your “quick win” data use case. Develop your data strategy around that data use case.
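One simple way to evaluate candidate use cases against your organization’s needs is a weighted scoring sheet. Here’s a minimal Python sketch of that idea. The criteria, the weights, the candidate names, and the ratings are all placeholders I’ve assumed for illustration, so swap in whatever came out of your Step 1 notes. Only two candidates are shown to keep it short.

```python
# Hypothetical criteria and weights; adjust them to your own Step 1 notes.
WEIGHTS = {
    "fits_existing_tech": 0.4,
    "team_skills_match": 0.3,
    "expected_value": 0.3,
}


def score_use_case(ratings, weights=WEIGHTS):
    """Weighted sum of 1-5 ratings across the criteria."""
    return sum(weights[name] * ratings[name] for name in weights)


def pick_quick_win(candidates):
    """Return the name of the highest-scoring candidate use case."""
    return max(candidates, key=lambda name: score_use_case(candidates[name]))


# Placeholder candidates with made-up ratings (1 = poor fit, 5 = strong fit).
candidates = {
    "call sentiment alerts": {
        "fits_existing_tech": 4, "team_skills_match": 3, "expected_value": 5,
    },
    "use case B (placeholder)": {
        "fits_existing_tech": 2, "team_skills_match": 4, "expected_value": 4,
    },
}

best = pick_quick_win(candidates)
print(best, round(score_use_case(candidates[best]), 2))
```

The scores themselves matter less than the conversation they force: writing down ratings makes it obvious where a use case depends on technology or skills you don’t have yet.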

The 7 Most Useful Data Analysis Methods and Techniques

Data analytics is the process of analyzing raw data to draw out meaningful insights. These insights are then used to determine the best course of action.

When is the best time to roll out that marketing campaign? Is the current team structure as effective as it could be? Which customer segments are most likely to purchase your new product?

Ultimately, data analytics is a crucial driver of any successful business strategy. But how do data analysts actually turn raw data into something useful? There are a range of methods and techniques that data analysts use depending on the type of data in question and the kinds of insights they want to uncover.

In this post, we’ll explore some of the most useful data analysis techniques. By the end, you’ll have a much clearer idea of how you can transform meaningless data into business intelligence. We’ll cover:

- What is data analysis and why is it important?

- What is the difference between qualitative and quantitative data?

- Regression analysis

- Monte Carlo simulation

- Factor analysis

- Cohort analysis

- Cluster analysis

- Time series analysis

- Sentiment analysis

- The data analysis process

- The best tools for data analysis

- Key takeaways

The first six methods listed are used for quantitative data, while the last technique applies to qualitative data. We briefly explain the difference between quantitative and qualitative data in section two.

1. What is data analysis and why is it important?

Data analysis is, put simply, the process of discovering useful information by evaluating data. This is done through a process of inspecting, cleaning, transforming, and modeling data using analytical and statistical tools, which we will explore in detail further along in this article.

Why is data analysis important? Analyzing data effectively helps organizations make business decisions. Nowadays, data is collected by businesses constantly: through surveys, online tracking, online marketing analytics, collected subscription and registration data (think newsletters), and social media monitoring, among other methods.

These data will appear as different structures, including—but not limited to—the following:

The concept of big data (data that is so large, fast, or complex that it is difficult or impossible to process using traditional methods) gained momentum in the early 2000s. Then, Doug Laney, an industry analyst, articulated what is now known as the mainstream definition of big data as the three Vs: volume, velocity, and variety.