Software testing is the process of evaluating and verifying that a software product or application does what it’s supposed to do. The benefits of good testing include preventing bugs and improving performance.

Software testing today is most effective when it is continuous, meaning that testing starts during design, continues as the software is built out, and even occurs after deployment into production. Continuous testing means that organizations don’t have to wait for all the pieces to be deployed before testing can start. Shift-left, which moves testing closer to design, and shift-right, where end users perform validation, are also testing philosophies that have recently gained traction in the software community. Once your test strategy and management plans are understood, automating all aspects of testing becomes essential to support the required speed of delivery.

There are many different types of software tests, each with specific objectives and strategies:

- Acceptance testing: Verifying whether the whole system works as intended.

- Code review: Confirming that new and modified software is following an organization’s coding standards and adheres to its best practices.

- Integration testing: Ensuring that software components or functions operate together.

- Unit testing: Validating that each software unit runs as expected. A unit is the smallest testable component of an application.

- Functional testing: Checking functions by emulating business scenarios, based on functional requirements. Black-box testing is a common way to verify functions.

- Performance testing: Testing how the software runs under different workloads. Load testing, for example, is used to evaluate performance under real-life load conditions.

- Regression testing: Checking whether new features break or degrade functionality. Sanity testing can be used to verify menus, functions and commands at the surface level, when there is no time for a full regression test.

- Security testing: Validating that your software is not open to hackers or other malicious actors who might exploit vulnerabilities to deny access to your services or cause them to perform incorrectly.

- Stress testing: Testing how much strain the system can take before it fails. Stress testing is considered to be a type of non-functional testing.

- Usability testing: Validating how well a customer can use a system or web application to complete a task.
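Here is a minimal sketch of unit testing using Python's standard `unittest` module. The function `apply_discount` and its rules are hypothetical. They exist only to give the tests something to check, and any small, self-contained function would serve the same purpose.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each test checks one behavior of the unit in isolation."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_out_of_range_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

If this is saved as, for example, `test_discount.py`, the tests run with `python -m unittest test_discount`. Each test targets one behavior, so a failure points directly at the case that broke. That property also makes such tests reusable later as regression tests.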

In each case, validating base requirements is a critical assessment. Just as important, exploratory testing helps a tester or testing team uncover hard-to-predict scenarios and situations that can lead to software errors.

Even a simple application can be subject to a large number and variety of tests. A test management plan helps to prioritize which types of testing provide the most value, given available time and resources. Testing effectiveness is optimized by running the fewest tests needed to find the largest number of defects.
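Greedy test-suite minimization is one common way to approximate "fewest tests, most value." The sketch below assumes that each test's coverage of requirements (or code areas) is already known. All test and requirement names are hypothetical. Greedy selection is a heuristic for the underlying set-cover problem: it keeps the suite small but does not guarantee the smallest possible suite.

```python
from typing import Dict, List, Set


def select_tests(coverage: Dict[str, Set[str]]) -> List[str]:
    """Greedily pick tests until every covered item is covered at least once.

    `coverage` maps a (hypothetical) test name to the requirements it exercises.
    """
    remaining = set().union(*coverage.values())
    selected: List[str] = []
    while remaining:
        # Choose the test covering the most still-uncovered items; iterating
        # over sorted names makes ties resolve alphabetically (max keeps the first).
        best = max(sorted(coverage), key=lambda t: len(coverage[t] & remaining))
        selected.append(best)
        remaining -= coverage[best]
    return selected


coverage = {
    "test_login": {"R1", "R2"},
    "test_checkout": {"R2", "R3", "R4"},
    "test_search": {"R4"},
    "test_profile": {"R1"},
}
print(select_tests(coverage))  # ['test_checkout', 'test_login']
```

In practice, coverage data would come from a coverage tool or a requirements-traceability matrix. Known defect history or risk can also be added to the ranking key.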

Software testing arrived alongside the development of software, which had its beginnings just after World War II. Computer scientist Tom Kilburn is credited with writing the first piece of software, which debuted on 21 June 1948 at the University of Manchester in England. It performed mathematical calculations by using machine code instructions.

Debugging was the main testing method at the time and remained so for the next two decades. By the 1980s, development teams looked beyond isolating and fixing software bugs to testing applications in real-world settings. It set the stage for a broader view of testing, which encompassed a quality assurance process that was part of the software development lifecycle.

Few can argue against the need for quality control when developing software. Late delivery or software defects can damage a brand’s reputation, which leads to frustrated and lost customers. In extreme cases, a bug or defect can degrade interconnected systems or cause serious malfunctions.

Consider Nissan having to recall over 1 million cars due to a software defect in the airbag sensor detectors, or a software bug that caused the failure of a USD 1.2 billion military satellite launch [1]. The numbers speak for themselves: software failures in the US cost the economy USD 1.1 trillion in assets in 2016 and affected 4.4 billion customers [2].

Though testing itself costs money, companies can save millions per year in development and support if they have a good testing technique and QA processes in place. Early software testing uncovers problems before a product goes to market. The sooner development teams receive test feedback, the sooner they can address issues such as:

- Architectural flaws

- Poor design decisions

- Invalid or incorrect functionality

- Security vulnerabilities

- Scalability issues

When development leaves ample room for testing, software reliability improves and high-quality applications are delivered with few errors. A system that meets or even exceeds customer expectations can lead to more sales and greater market share.

Software testing follows a common process. Tasks or steps include defining the test environment, developing test cases, writing scripts, analyzing test results and submitting defect reports.

Testing can be time-consuming. Manual testing or ad hoc testing might be enough for small builds. However, for larger systems, tools are frequently used to automate tasks. Automated testing helps teams implement different scenarios, test differentiators (such as moving components into a cloud environment), and quickly get feedback on what works and what doesn't.

A good testing approach encompasses the application programming interface (API), user interface and system levels. The more tests that are automated, and run early, the better. Some teams build in-house test automation tools. However, vendor solutions offer features that can streamline key test management tasks such as:

Continuous testing

Project teams test each build as it becomes available. This type of software testing relies on test automation that is integrated with the deployment process. It enables software to be validated in realistic test environments earlier in the process, which improves design and reduces risks.

Configuration management

Organizations centrally maintain test assets and track what software builds to test. Teams gain access to assets such as code, requirements, design documents, models, test scripts and test results. Good systems include user authentication and audit trails to help teams meet compliance requirements with minimal administrative effort.

Service virtualization

Testing environments might not be available, especially early in code development. Service virtualization simulates the services and systems that are missing or not yet completed, enabling teams to reduce dependencies and test sooner. They can reuse, deploy and change a configuration to test different scenarios without having to modify the original environment.
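Service virtualization products typically simulate dependencies at the network or protocol level. The sketch below is a rough, in-process analogue: it uses Python's `unittest.mock` to stand in for a payment service that is assumed not to exist yet. `PaymentGateway`, `place_order`, and the response format are all hypothetical.

```python
from unittest import mock


class PaymentGateway:
    """Hypothetical client for an external service that is not yet available."""

    def charge(self, account_id: str, amount: float) -> dict:
        raise NotImplementedError("real payment service is not reachable here")


def place_order(gateway: PaymentGateway, account_id: str, amount: float) -> str:
    """Code under test: its result depends on the payment service's reply."""
    response = gateway.charge(account_id, amount)
    return "confirmed" if response.get("status") == "approved" else "rejected"


def simulated_gateway(status: str) -> mock.Mock:
    """Build a stand-in that answers the way the real service is assumed to.

    spec=PaymentGateway makes the fake reject methods the real client lacks.
    """
    fake = mock.Mock(spec=PaymentGateway)
    fake.charge.return_value = {"status": status}
    return fake


# The same simulated service is reconfigured per scenario, without
# modifying any shared environment.
approved = simulated_gateway("approved")
declined = simulated_gateway("declined")
print(place_order(approved, "acct-1", 25.0))  # confirmed
print(place_order(declined, "acct-1", 25.0))  # rejected
approved.charge.assert_called_once_with("acct-1", 25.0)
```

Each scenario simply configures a different canned reply. The stand-in also records how it was called, so the test can check the interaction as well as the result.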

Defect or bug tracking

Monitoring defects is important to both testing and development teams for measuring and improving quality. Automated tools allow teams to track defects, measure their scope and impact, and uncover related issues.

Metrics and reporting

Reporting and analytics enable team members to share status, goals and test results. Advanced tools integrate project metrics and present results in a dashboard. Teams quickly see the overall health of a project and can monitor relationships between test, development and other project elements.

[1] Thomas Hamilton, "What is Software Testing?", guru99.com, updated 3 January 2024.

[2] Dalibor Siroky, "The glitch economy: Counting the cost of software failures", 30 October 2017.

Software Testing: Recently Published Documents

Combining Learning and Engagement Strategies in a Software Testing Learning Environment

There continues to be an increase in enrollments in computing programs at academic institutions due to the many job opportunities available in the information, communication, and technology sectors. This enrollment surge has presented several challenges in many Computer Science (CS), Information Technology (IT), and Software Engineering (SE) programs at universities and colleges. One such challenge is that many instructors in CS/IT/SE programs continue to use learning approaches that are not learner-centered and therefore do not adequately prepare students to be proficient in the ever-changing computing industry. To mitigate this challenge, instructors need to use evidence-based pedagogical approaches, e.g., active learning, to improve student learning and engagement in the classroom and equip students with the skills necessary to be lifelong learners. This article presents an approach that combines learning and engagement strategies (LESs) in learning environments using different teaching modalities to improve student learning and engagement. We describe how LESs are integrated into face-to-face (F2F) and online class activities. The LESs currently used are collaborative learning, gamification, problem-based learning, and social interaction. We describe an approach used to quantify each LES used during class activities based on a set of characteristics for LESs and the traditional lecture-style pedagogical approaches. To demonstrate the impact of using LESs in F2F class activities, we report on a study conducted over seven semesters in a software testing class at a large urban minority-serving institution. The study uses a posttest-only study design, the scores of two midterm exams, and approximate class times dedicated to each LES and traditional lecture style to quantify their usage in a face-to-face software testing class. The study results showed that increasing the time dedicated to collaborative learning, gamification, and social interaction and decreasing the traditional lecture-style approach resulted in a statistically significant improvement in student learning, as reflected in the exam scores.

Enhancing Search-based Testing with Testability Transformations for Existing APIs

Search-based software testing (SBST) has been shown to be an effective technique to generate test cases automatically. Its effectiveness strongly depends on the guidance of the fitness function. Unfortunately, a common issue in SBST is the so-called flag problem, where the fitness landscape presents a plateau that provides no guidance to the search. In this article, we provide a series of novel testability transformations aimed at providing guidance in the context of commonly used API calls (e.g., strings that need to be converted into valid date/time objects). We also provide specific transformations aimed at helping the testing of REST Web Services. We implemented our novel techniques as an extension to EvoMaster, an SBST tool that generates system-level test cases. Experiments on nine open-source REST web services, as well as an industrial web service, show that our novel techniques improve performance significantly.
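A toy search can illustrate the flag problem. The sketch below is a generic illustration, not the article's transformations and not EvoMaster's implementation. It compares two fitness functions: one that only sees a boolean flag, and one that uses a branch distance, a standard SBST guidance heuristic. `TARGET` and the step sizes are arbitrary assumptions.

```python
import random
from typing import Callable

TARGET = 4242  # hypothetical "magic" input that guards the branch to cover


def flag_fitness(x: int) -> float:
    """Fitness seen only through a boolean flag: 0 if the branch is taken, else 1.

    Every non-solution gets the same score, so the landscape is a plateau.
    """
    return 0.0 if x == TARGET else 1.0


def distance_fitness(x: int) -> float:
    """Branch distance |x - TARGET|: closer inputs score better (lower)."""
    return float(abs(x - TARGET))


def hill_climb(fitness: Callable[[int], float], start: int,
               steps: int = 20_000, seed: int = 0) -> int:
    """Minimise fitness by accepting random neighbours that are no worse."""
    rng = random.Random(seed)
    x = start
    for _ in range(steps):
        if fitness(x) == 0.0:
            break  # target branch covered
        candidate = x + rng.choice([-100, -10, -1, 1, 10, 100])
        if fitness(candidate) <= fitness(x):
            x = candidate
    return x


print(hill_climb(distance_fitness, start=0))      # 4242
print(flag_fitness(0), flag_fitness(TARGET - 1))  # 1.0 1.0 (no gradient)
```

Under `flag_fitness`, every neighbour of a non-solution scores the same. The climber therefore accepts moves blindly, and the search degenerates into a random walk. Replacing the flag with a distance, which is the essence of a testability transformation, restores a gradient toward the branch.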

A Survey of Flaky Tests

Tests that fail inconsistently, without changes to the code under test, are described as flaky. Flaky tests do not give a clear indication of the presence of software bugs and thus limit the reliability of the test suites that contain them. A recent survey of software developers found that 59% claimed to deal with flaky tests on a monthly, weekly, or daily basis. As well as being detrimental to developers, flaky tests have also been shown to limit the applicability of useful techniques in software testing research. In general, one can think of flaky tests as being a threat to the validity of any methodology that assumes the outcome of a test only depends on the source code it covers. In this article, we systematically survey the body of literature relevant to flaky test research, amounting to 76 papers. We split our analysis into four parts: addressing the causes of flaky tests, their costs and consequences, detection strategies, and approaches for their mitigation and repair. Our findings and their implications have consequences for how the software-testing community deals with test flakiness, pertinent to practitioners and of interest to those wanting to familiarize themselves with the research area.

Test Suite Optimization Using Firefly and Genetic Algorithm

Software testing is essential for providing error-free software. It is a well-known fact that software testing is responsible for at least 50% of the total development cost. Therefore, it is necessary to automate and optimize the testing processes. Search-based software engineering is a discipline mainly focused on the automation and optimization of various software engineering processes, including software testing. In this article, a novel hybrid of the firefly and genetic algorithms is applied to test data generation and selection in a regression testing environment. A case study is used along with an empirical evaluation of the proposed approach. Results show that the hybrid approach performs well on the various parameters selected in the experiments.

Machine Learning Model to Predict Automated Testing Adoption

Software testing is an activity conducted to evaluate the software under test. It has two approaches: manual testing and automated testing. Automated testing is an approach in which programming scripts are written to automate the testing process. Automated testing is suitable for some software projects in the development phase, while others require manual testing. Suitability depends on factors such as the nature of the project requirements, the team working on the project, the technology used to develop the software, and the intended audience. In this paper, we have developed a machine learning model for predicting automated testing adoption. We used the chi-square test to find correlations among factors and the PART classifier for model development. The accuracy of our proposed model is 93.1624%.

Metaheuristic Techniques for Test Case Generation

The primary objective of software testing is to locate as many bugs as possible in software by using an optimum set of test cases. An optimum set of test cases is obtained through a selection procedure that can be viewed as an optimization problem, so metaheuristic optimization (search) techniques have been used extensively to automate software testing tasks. The application of metaheuristic search techniques in software testing is termed search-based testing. Search-based testing can generate non-redundant, reliable, and optimized test cases with less effort and time. This article presents a systematic review of several metaheuristic techniques used for test case generation. These include Genetic Algorithms, Particle Swarm Optimization, Ant Colony Optimization, Bee Colony Optimization, Cuckoo Search, Tabu Search, and some modified versions of these algorithms. The authors also provide a framework showing the advantages, limitations, and future scope or gaps of these research works, which will help in further research.

Software Testing Under Agile, Scrum, and DevOps

The adoption of agility at a large scale often requires the integration of agile and non-agile development practices into hybrid software development and delivery environments. This chapter addresses software testing issues in Agile software application development. Currently, the umbrella of Agile methodologies (e.g., Scrum, Extreme Programming, and Development and Operations, i.e., DevOps) has become the preferred set of tools for modern software development. These methodologies emphasize iterative and incremental development, where both the requirements and solutions evolve through collaboration between cross-functional teams. The success of such practices relies on the quality of the result of each stage of development, obtained through rigorous testing. This chapter introduces the principles of software testing within the context of a Scrum/DevOps-based software development lifecycle.

Quality Assurance Issues for Big Data Applications in Supply Chain Management

Heterogeneous data types, widely distributed data sources, huge data volumes, and large-scale business-alliance partners describe typical global supply chain operational environments. Mobile and wireless technologies add an extra layer of data sources to this technology-enriched supply chain operation. This environment also needs to provide end users with access to data anywhere, anytime. This new type of data set originating from the global retail supply chain is commonly known as big data because of its huge volume, resulting from the velocity with which it arrives in the global retail business environment. Such environments empower, and require, decision makers to act or react more quickly on all decision tasks. Academics and practitioners are researching and building the next generation of big-data-based application software systems. This new generation of software applications is based on complex data analysis algorithms (i.e., on data that does not adhere to standard relational data models). Traditional software testing methods are insufficient for big-data-based applications. Testing big-data-based applications is one of the biggest challenges faced by modern software design and development communities because of a lack of knowledge about what to test and how much data to test. Developers of big-data-based applications face a daunting task in defining the best strategies for structured and unstructured data validation, setting up an optimal test environment, and applying testing approaches for non-relational databases. This chapter focuses on big-data-based software testing and quality-assurance-related issues in the context of Hadoop, an open source framework. It includes a discussion of several challenges with respect to massively parallel data generation from multiple sources, testing methods for validating pre-Hadoop processing, software application quality factors, and some of the software testing mechanisms for this new breed of applications.

Use of Qualitative Research to Generate a Function for Finding the Unit Cost of Software Test Cases

In this article, we demonstrate a novel use of case research to generate an empirical function through qualitative generalization. This innovative technique applies interpretive case analysis to the problem of defining and generalizing an empirical cost function for test cases through qualitative interaction with an industry cohort of subject matter experts involved in software testing at leading technology companies. While the technique is fully generalizable, this article demonstrates this technique with an example taken from the important field of software testing. The huge amount of software development conducted in today's world makes taking its cost into account imperative. While software testing is a critical aspect of the software development process, little attention has been paid to the cost of testing code, and specifically to the cost of test cases, in comparison to the cost of developing code. Our research fills the gap by providing a function for estimating the cost of test cases.

Framework for Reusable Test Case Generation in Software Systems Testing

Agile methodologies have become the preferred choice for modern software development. These methods focus on iterative and incremental development, where both requirements and solutions develop through collaboration among cross-functional software development teams. The success of a software system depends on the quality of the result of each stage of development, achieved with proper test practice. A software test ontology should represent the required software test knowledge in the context of the software tester. Reusing test cases is an effective way to improve the testing of software. Automating software testing can reduce a tester's workload for test-case generation, allow previous testing experience to be shared, and increase test efficiency. In this chapter, the authors introduce a software testing framework (STF) that uses rule-based reasoning (RBR), case-based reasoning (CBR), and ontology-based semantic similarity assessment to retrieve test cases from the case library. Finally, experimental results are used to illustrate some of the features of the framework.

International Conference on Computational Science

ICCS 2020: Computational Science – ICCS 2020, pp. 457–463

Testing Research Software: A Case Study

- Nasir U. Eisty,

- Danny Perez,

- Jeffrey C. Carver,

- J. David Moulton &

- Hai Ah Nam

- Conference paper

- First Online: 15 June 2020

Part of the book series: Lecture Notes in Computer Science (LNTCS, volume 12143)

Background: The increasing importance of software for the conduct of various types of research raises the necessity of proper testing to ensure correctness. The unique characteristics of research software produce challenges in the testing process that require attention. Aims: Therefore, the goal of this paper is to share the experience of implementing a testing framework using a statistical approach for a specific type of research software, i.e., non-deterministic software. Method: Using the ParSplice research software project as a case, we implemented a testing framework based on a statistical testing approach called the Multinomial Test. Results: Using the new framework, we were able to test the ParSplice project and demonstrate correctness in a situation where traditional methodical testing approaches were not feasible. Conclusions: This study opens up the possibility of using statistical testing approaches for research software that can overcome some of the inherent challenges involved in testing non-deterministic research software.

1 Introduction

Research software can enable mission-critical tasks, provide predictive capability to support decision making, and generate results for research publications. Faults in research software can produce erroneous results, which have significant impacts, including the retraction of publications [ 8 ]. There are at least two factors leading to faults in research software: (1) the complexity of the software (often including non-determinism) makes it difficult to implement a standard testing process, and (2) the background of people who develop research software differs from that of traditional software developers.

Research software often has complex, non-deterministic computational behavior, with many execution paths and requires many inputs. This complexity makes it difficult for developers to manually identify critical input domain boundaries and partition the input space to identify a small but sufficient set of test cases. In addition, some research software can produce complex outputs whose assessment might rely on the experience of domain experts rather than on an objective test oracle. Finally, the use of floating-point calculations can make it difficult to choose suitable tolerances for the correctness of outputs.

In addition, research software developers generally have a limited understanding of standard software engineering testing concepts [ 7 ]. Because research software projects often have difficulty obtaining adequate budget for testing activities [ 11 ], they prioritize producing results over ensuring the quality of the software that produces those results. This problem is exacerbated by the inherent exploratory nature of the software [ 5 ] and the constant focus on adding new features. Finally, researchers usually do not have training in software engineering [ 3 ], so the lack of recognition of the importance of the corresponding skills causes them to treat testing as a secondary activity [ 10 ].

To address some of the challenges of testing research software, we conducted a case study on the development of a testing infrastructure for the ParSplice research software project (Footnote 1). The goal of this paper is to demonstrate the use of a statistical method for testing research software. The key contributions of this paper are (1) an overview of available testing techniques for non-deterministic stochastic research software, (2) the implementation of a testing infrastructure for non-deterministic parallel research software, and (3) a demonstration of the use of a statistical testing method to test research software, which can serve as a model for other research software projects.

2 Background

In a non-deterministic system, there is often no direct way for the tester (or test oracle) to predetermine the expected behavior exactly. In ParSplice (described in Sect. 2.1), the non-determinism stems from (1) the use of stochastic differential equations to model the physics and (2) the order in which communication between the procedures occurs. Note, however, that even though the results of each execution depend upon message ordering, each valid order produces a statistically accurate result, which is the key requirement for the validity of ParSplice simulations.

In cases where development of test oracles is difficult due to the non-determinism, some potentially viable testing approaches include metamorphic testing, run-time assertions, and machine learning techniques [ 6 ]. After describing the ParSplice project, the remainder of this section explains these techniques along with their possible applicability to ParSplice .

2.1 ParSplice

ParSplice (Parallel Trajectory Splicing) [ 9 ] aims at overcoming the challenge of simulating the evolution of materials over long time scales through the timewise parallelization of long atomistic trajectories using multiple independent producers. The key idea is that statistically accurate long-time trajectories can be assembled by splicing together short, independently generated trajectory segments end to end. The trajectory can then grow by splicing a segment that begins in the state where the trajectory currently ends, where a state corresponds to a finite region of the configuration space of the problem. This procedure yields provably statistically accurate results, so long as the segments obey certain (relatively simple) conditions. Details can be found in the original publication [ 9 ].

The ParSplice code is a management layer that orchestrates a large number of calculations and does not perform the actual molecular dynamics itself. Instead, ParSplice uses external molecular dynamics engines. The simulations used in ParSplice rely on stochastic equations of motion to mimic the interaction of the system of interest with the wider environment, which introduces a first source of non-determinism.

A basic ParSplice implementation contains two types of processes: a splicer and producers. The splicer manages a database of segments, generates a trajectory by consuming segments from the database, and schedules execution of additional segments, each grouped by their respective initial state. Producers fulfill requests from the splicer and generate trajectory segments beginning in a given state; the results are then returned to the splicer. The number of segments to be scheduled for execution in any known state is determined through a predictor statistical model, built on-the-fly. Importantly, the quality of the predictor model only affects the efficiency of ParSplice and not the accuracy of the trajectory. This property is important because the predictor model will almost always be incomplete, as it is inferred from a finite number of simulations. The unavailability of the ground truth model (which is an extremely complex function of the underlying physical model) makes assessment of the results difficult. In addition, this type of stochastic simulation is not reproducible, adding to the difficulty of testing the code. Therefore, in this case study we create a basis for the ParSplice testing infrastructure using various methodical approaches and apply the test framework to the continuous integration process.
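The splicing idea can be sketched with a toy model. In this sketch, states are plain labels, and each segment is summarised only by its start state, end state, and duration. A dictionary that groups segments by initial state stands in for the splicer's database. This is an illustrative assumption, not ParSplice's implementation: there is no molecular dynamics, no producers, and no predictor model, and `Segment` and `splice` are hypothetical names.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Segment:
    """A short trajectory segment, summarised by where it starts and ends."""
    start_state: str
    end_state: str
    duration: float  # simulated time covered by the segment (arbitrary units)


def splice(initial_state: str, segments: List[Segment]) -> Tuple[List[str], float]:
    """Assemble a long trajectory by splicing segments end to end.

    Only a segment that begins in the state where the trajectory currently
    ends may be consumed; the others wait in the database.
    """
    database = defaultdict(deque)  # initial state -> queue of segments
    for seg in segments:
        database[seg.start_state].append(seg)

    current, path, total_time = initial_state, [initial_state], 0.0
    while database[current]:
        seg = database[current].popleft()
        path.append(seg.end_state)
        total_time += seg.duration
        current = seg.end_state
    return path, total_time


segments = [
    Segment("A", "A", 1.0),
    Segment("B", "A", 1.0),
    Segment("A", "B", 1.0),
    Segment("C", "C", 1.0),  # never usable: the trajectory never reaches C
]
path, total = splice("A", segments)
print(path, total)  # ['A', 'A', 'B', 'A'] 3.0
```

Segments for states the trajectory has not reached (here `C`) stay unused in the database. In the real code, the predictor model decides how many such segments are worth scheduling, which affects efficiency but, as noted above, not accuracy.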

2.2 Metamorphic Testing

Metamorphic testing operates by checking whether the program under test behaves according to a set of metamorphic relations. For example, a metamorphic relation $R$ expresses a relationship among multiple inputs $x_1, x_2, \ldots, x_N$ (for $N > 1$) to a function $f$ and their corresponding output values $f(x_1), f(x_2), \ldots, f(x_N)$ [ 2 ]. These relations specify how a change to an input affects the output, and they serve as a test oracle to determine whether a test case passes or fails. In the case of ParSplice, it is difficult to identify metamorphic relations because the outputs are non-deterministic. The relationship between the inputs $x_i$ and the outputs $f(x_i)$ is therefore not direct but statistical in nature.
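A deterministic toy example shows the basic mechanism. The program under test is a trapezoidal-rule integrator, chosen purely for illustration. The two relations are (1) scaling the integrand scales the result and (2) swapping the limits negates the result. Both follow from properties of integrals and also hold for the trapezoidal rule itself. `buggy_trapezoid` is a hypothetical faulty variant. For non-deterministic software such as ParSplice, these tolerance-based equality checks would have to become statistical comparisons.

```python
import math
from typing import Callable, List

Func = Callable[[float], float]


def trapezoid(f: Func, a: float, b: float, n: int = 1000) -> float:
    """Program under test: composite trapezoidal rule over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


def buggy_trapezoid(f: Func, a: float, b: float, n: int = 1000) -> float:
    """Hypothetical faulty variant: drops the sign of the step when b < a."""
    h = abs(b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


def check_metamorphic_relations(program, f: Func, a: float, b: float,
                                k: float = 3.0) -> List[str]:
    """Return the names of the metamorphic relations that `program` violates.

    No exact expected value is needed: each relation compares the outputs of
    related inputs, with a tolerance to absorb floating-point rounding.
    """
    base = program(f, a, b)
    violations = []
    # MR1: scaling the integrand by k scales the result by k.
    scaled = program(lambda x: k * f(x), a, b)
    if not math.isclose(scaled, k * base, rel_tol=1e-9, abs_tol=1e-12):
        violations.append("scaling")
    # MR2: swapping the limits of integration negates the result.
    if not math.isclose(program(f, b, a), -base, rel_tol=1e-9, abs_tol=1e-12):
        violations.append("reversal")
    return violations


print(check_metamorphic_relations(trapezoid, math.exp, 0.0, 1.0))        # []
print(check_metamorphic_relations(buggy_trapezoid, math.exp, 0.0, 1.0))  # ['reversal']
```

Neither check needs the true value of the integral. The fault is exposed only because two related executions disagree.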

2.3 Run-Time Assertion Checking

An assertion is a Boolean expression or constraint used to verify a necessary property of the program under test. Usually, testers embed assertions in the source code, and the assertions are evaluated when a test case is executed. Testers then use these assertions to verify whether the output is within an expected range or whether known relationships hold between program variables. In this way, a set of assertions can act as an oracle. In the context of ParSplice, assertions can be used to test specific functions but not the overall validity of the simulations; they would protect only against catastrophic failures, such as instabilities in the integration scheme.
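The sketch below illustrates run-time assertion checking on a toy integrator rather than on ParSplice itself. It advances a harmonic oscillator with the forward Euler method, which is known to gain energy for this system. The assertions cannot confirm that the trajectory is correct. They only catch catastrophic failures, such as non-finite values or runaway energy. The function name and the energy threshold `max_energy_ratio` are arbitrary assumptions.

```python
import math
from typing import Tuple


def simulate_oscillator(x: float, v: float, dt: float, steps: int,
                        max_energy_ratio: float = 10.0) -> Tuple[float, float]:
    """Toy harmonic oscillator (unit mass and stiffness) with run-time assertions."""
    e0 = 0.5 * (x * x + v * v)
    for step in range(steps):
        # Forward Euler update: each step multiplies the energy by (1 + dt**2).
        x, v = x + dt * v, v - dt * x
        energy = 0.5 * (x * x + v * v)
        # Assertions as a partial oracle: they flag blow-ups, not subtle errors.
        assert math.isfinite(x) and math.isfinite(v), f"non-finite state at step {step}"
        assert energy <= max_energy_ratio * e0, f"energy blew up at step {step}"
    return x, v


simulate_oscillator(1.0, 0.0, dt=0.01, steps=100)  # small step: checks pass
try:
    simulate_oscillator(1.0, 0.0, dt=0.5, steps=50)
except AssertionError as err:
    print(err)  # energy blew up at step 10
```

Python removes `assert` statements when run with `-O`. Checks that must stay active in production runs should therefore raise exceptions explicitly.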

2.4 Machine Learning Techniques

Machine learning is a useful approach for developing oracles for non-deterministic programs. Researchers have shown that both black-box features (derived only from the inputs and outputs of the program) and white-box features (derived from the internal structure of the program) can be used to train a classifier that serves as the oracle [1, 4]. It is possible to test ParSplice with machine learning techniques. For example, we could replace the molecular dynamics (MD) engine with our own model to produce output data for use as a training set, and use the actual output data as a testing set. However, because of the effort required to apply this approach to ParSplice, we determined that it was not feasible.
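The paper judged this approach infeasible for ParSplice, but the idea of a learned oracle can be sketched in a few lines of Python. Everything here is hypothetical:

- `run_program` is a toy stochastic program whose `bias` argument injects a fault.
- The black-box features are the sample mean and standard deviation of its output.
- A nearest-centroid rule stands in for the classifiers used in the cited work [1, 4].

```python
import math
import random
import statistics


def run_program(rng, bias=0.0, n=200):
    """Hypothetical stochastic program under test; a nonzero `bias` injects a fault."""
    return [rng.gauss(bias, 1.0) for _ in range(n)]


def features(samples):
    """Black-box features computed only from the program's outputs."""
    return (statistics.fmean(samples), statistics.pstdev(samples))


def train_centroids(labelled):
    """Nearest-centroid training: average feature vector for each label."""
    sums, counts = {}, {}
    for vec, label in labelled:
        total = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [value / counts[label] for value in total] for label, total in sums.items()}


def oracle(centroids, vec):
    """Label a new run with the label of the closest centroid ('pass' or 'fail')."""
    return min(centroids, key=lambda label: math.dist(vec, centroids[label]))
```

Train on 50 labelled runs of the correct program (`bias=0.0`) and 50 of a faulty one (`bias=1.0`). A fresh correct run should then land nearest the "pass" centroid, and a faulty run nearest the "fail" centroid. Real programs need far richer features and realistic labelled faults, which is where the effort mentioned above comes from.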

3 Case Study

To implement the testing framework, the first author spent a summer at Los Alamos National Laboratory working on the ParSplice project. The testing framework is based on the Multinomial testing approach (described in Sect. 3.2). It was implemented using a progress tracking card (PTC) from the Productivity and Sustainability Improvement Plan (PSIP) methodology (footnote 2). The testing approach is integrated with the CMake/CTest tool for use in the runtime environment and in continuous integration. In this section, we describe the PSIP methodology, the Multinomial test approach, and the results that verify the implementation of the testing framework.

The PSIP methodology provides a constructive approach to increase software quality. It helps decrease the cost, time, and effort required to develop and maintain software over its intended lifetime. The PSIP workflow is a lightweight, multi-step, iterative process that fits within a project’s standard planning and development process. The steps of PSIP are: a) Document Project Practices, b) Set Goals, c) Construct Progress Tracking Card, d) Record Current PTC Values, e) Create Plan for Increasing PTC values, f) Execute Plan, g) Assess Progress, h) Repeat.

We created and followed a PTC containing a list of practices we were working to improve, with qualitative descriptions and values that helped us set goals and track our progress. Our PTC defines six scores and a target completion date for developing the testing framework. The scores are:

Score 0 - No tests or approach exists

Score 1 - Requirement gathering and background research

Score 2 - Develop statistical test framework

Score 3 - Design code backend to integrate test

Score 4 - Test framework implemented into ParSplice infrastructure

Score 5 - Integrate into CI infrastructure

We were able to progress through these levels and obtain a score of 5 by the end of the case study.

3.2 Multinomial Test

The Multinomial test is a statistical test of the null hypothesis that the parameters of a multinomial distribution equal specified values. In a multinomial population, the data are categorical, and each observation belongs to one of a collection of discrete, non-overlapping classes. For instance, a multinomial distribution models the counts of each face when a k-sided die is rolled n times. The Multinomial test uses Pearson's \(\chi^2\) test to assess the null hypothesis that the observed counts are consistent with the given probabilities. Consider k categories with observed counts \(O_i\), total count n, and hypothesized probabilities \(p_i\). The expected counts are \(E_i = n p_i\), and the test statistic is \(\chi^2 = \sum_{i=1}^{k} \frac{(O_i - E_i)^2}{E_i}\). Under the null hypothesis, this statistic approximately follows a \(\chi^2\) distribution with \(k - 1\) degrees of freedom. The null hypothesis is rejected if the p-value of this statistic is less than a given significance level. This approach enables us to test whether the observed frequency of segments starting in i and ending in j is indeed consistent with the probabilities \(p_{ij}\) given as input to the Monte Carlo backend. Our Multinomial test script takes the ParSplice output file as input and executes the test. It then post-processes the results by applying Pearson's \(\chi^2\) test to decide whether to reject the null hypothesis.
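Here is a minimal sketch of the test described above, in Python using only the standard library. The function names are hypothetical and not taken from the ParSplice scripts. For integer degrees of freedom, the regularized upper incomplete gamma function has closed forms, and the \(\chi^2\) survival function below uses them, so no SciPy dependency is needed. Where SciPy is available, `scipy.stats.chisquare(f_obs, f_exp)` computes the same statistic and p-value.

```python
import math
from typing import Sequence, Tuple


def chi2_sf(x: float, df: int) -> float:
    """P(X >= x) for a chi-square variable with integer df >= 1."""
    if x <= 0.0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Even df = 2m: Q(m, y) = e^{-y} * sum_{j=0}^{m-1} y^j / j!
        total = sum(math.exp(j * math.log(y) - y - math.lgamma(j + 1)) for j in range(df // 2))
        return min(1.0, total)
    # Odd df = 2m + 1: Q(m + 1/2, y) = erfc(sqrt(y)) + sum_{j=1}^{m} y^{j-1/2} e^{-y} / Gamma(j + 1/2)
    total = math.erfc(math.sqrt(y))
    for j in range(1, (df - 1) // 2 + 1):
        total += math.exp((j - 0.5) * math.log(y) - y - math.lgamma(j + 0.5))
    return min(1.0, total)


def multinomial_chi2_test(observed: Sequence[int], probs: Sequence[float]) -> Tuple[float, float]:
    """Pearson chi-square test of H0: observed counts ~ Multinomial(n, probs).

    Returns (statistic, p-value) with k - 1 degrees of freedom.
    """
    if len(observed) != len(probs):
        raise ValueError("observed and probs must have the same length")
    if any(p <= 0.0 for p in probs) or not math.isclose(sum(probs), 1.0, rel_tol=1e-9):
        raise ValueError("probabilities must be positive and sum to 1")
    n = sum(observed)
    stat = sum((o - n * p) ** 2 / (n * p) for o, p in zip(observed, probs))
    return stat, chi2_sf(stat, len(observed) - 1)
```

As a worked example, suppose a six-sided die is rolled 100 times with counts `[16, 18, 16, 14, 12, 24]`. The statistic is 5.12 with 5 degrees of freedom, giving a p-value of about 0.40. The fair-die hypothesis is therefore not rejected at the 0.05 level.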

3.3 Results

A key insight from the theory that underpins ParSplice is that a random process that describes the splicing procedure should rigorously converge to a discrete time Markov chain in a discrete state space. In other words, the probability that a segment added to a trajectory currently ending in state i leaves the trajectory in state j should be a constant \(p_{ij}\) that is independent of the past history of the trajectory. One way to test ParSplice would be to verify that the splicing procedure is indeed Markovian (memory-less). However, taken alone, such a test would not guarantee that the splicing proceeds according to the proper Markov chain. A more powerful test would assess whether the spliced trajectory is consistent with the ground-truth Markov chain. A key obstacle to such a test is that this ground-truth model is, in practice, unknown and can only be statistically parameterized from simulation data.
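The property described above can be stated formally. Let \(X_n\) denote the state at the end of the n-th spliced segment (notation introduced here for illustration). The memorylessness check and the ground-truth comparison then concern two different parts of the same equation:

```latex
\Pr\left(X_{n+1} = j \,\middle|\, X_n = i,\; X_{n-1} = i_{n-1},\; \ldots,\; X_0 = i_0\right)
  = \Pr\left(X_{n+1} = j \,\middle|\, X_n = i\right)
  = p_{ij},
\qquad \sum_{j} p_{ij} = 1 .
```

A memorylessness test checks only the first equality. The stronger test also checks that the common value equals the ground-truth \(p_{ij}\).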

To address this issue, we replaced the molecular dynamics (MD) simulation backend with a simpler Monte Carlo implementation that samples from a pre-specified Markov chain. That is, we replaced the extremely complex model inherent to the MD backend with a known model of predefined probabilities. The task then becomes assessing whether the trajectory generated by ParSplice, run in parallel on large numbers of cores, reproduces the statistics of the ground-truth model. In this context, this technique is the ultimate test of correctness, as ParSplice is specifically designed to parallelize the generation of very long trajectories that are consistent with the underlying model. Statistical agreement between the trajectory and the model demonstrates that:

- the scheduling procedure is functional (otherwise, the splicing of the trajectory would halt);
- the task ordering procedure is correct;
- the tasks executed properly;
- the results were reduced correctly;
- the splicing algorithm is correct.
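The Monte Carlo backend can be sketched as follows, assuming a hypothetical 3-state ground-truth matrix `P` (not the one used in the study). Each "segment" simply draws its end state from row i of `P`. The helper `transition_counts` then builds the matrix of observed counts. Row i of that matrix, together with the probabilities \(p_{ij}\) in row i of `P`, is the input to a Multinomial test. For brevity the trajectory here is generated serially; in the actual test it would come from ParSplice running in parallel.

```python
import random

# Hypothetical ground-truth transition matrix: P[i][j] is the probability that a
# segment starting in state i ends in state j (each row sums to 1).
P = [
    [0.90, 0.08, 0.02],
    [0.10, 0.85, 0.05],
    [0.05, 0.15, 0.80],
]


def monte_carlo_segment(state, rng):
    """Stand-in for an MD segment: draw the end state from row `state` of P."""
    return rng.choices(range(len(P)), weights=P[state], k=1)[0]


def transition_counts(trajectory, n_states):
    """counts[i][j] = number of consecutive (i, j) pairs along a spliced trajectory."""
    counts = [[0] * n_states for _ in range(n_states)]
    for i, j in zip(trajectory, trajectory[1:]):
        counts[i][j] += 1
    return counts
```

Running 10,000 serial segments from state 0 and tallying them gives a row-0 frequency of 0-to-0 transitions close to 0.90. Each row of `counts`, paired with the matching row of `P`, is what a Multinomial test would examine.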

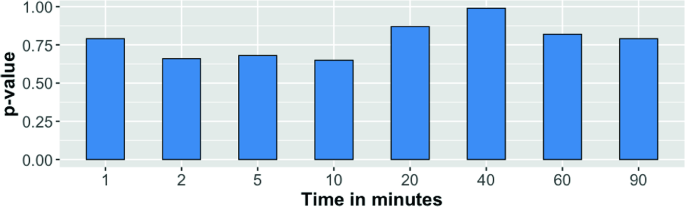

The statistical assessment of ParSplice can be conducted using the Multinomial test approach. Our null hypothesis was that the observed counts generated by ParSplice are consistent with the probabilities in the model. If the p-value from the Multinomial test is less than 0.05, we reject the null hypothesis and conclude that the observed counts differ from the expected ones. Conversely, if the p-value is greater than 0.05, we do not reject the null hypothesis and consider the test passed. To verify our Multinomial test, we ran ParSplice for different durations and examined the results. Figure 1 shows the p-values obtained from running ParSplice for 1, 2, 5, 10, 20, 40, 60, and 90 min. In all cases, the p-values are greater than 0.05, which indicates that the test passed for each of these runs.

Fig. 1. p-values obtained by executing ParSplice for different run times.
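Below is a sketch of how the pass/fail rule could be wired into CTest, which reports a test as failed when its command exits with a nonzero status. The function names and the command-line form (`check_pvalue.py <p-value>`) are hypothetical. The sketch also assumes the p-value has already been computed. The threshold 0.05 matches the significance level used above.

```python
ALPHA = 0.05  # significance level used in the text


def verdict(p_value, alpha=ALPHA):
    """Return 'pass' unless H0 (counts consistent with the model) is rejected.

    H0 is rejected when p < alpha, mirroring the rule described in the text.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p-value must lie in [0, 1]")
    return "fail" if p_value < alpha else "pass"


def main(argv):
    """Hypothetical CLI entry point: argv[1] is a p-value; returns a process exit code."""
    p_value = float(argv[1])
    result = verdict(p_value)
    print(f"p = {p_value:.4f}: test {result}")
    return 0 if result == "pass" else 1
```

A standalone script would end with `sys.exit(main(sys.argv))`. It could then be registered in CMake with a line such as `add_test(NAME multinomial_pvalue COMMAND python3 check_pvalue.py 0.37)`, where the test name is hypothetical.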

4 Conclusion

In this paper, we describe a case study of the ParSplice project in which we followed the PSIP methodology to develop a testing framework that addresses the difficulties of testing non-deterministic parallel research software. We first considered applying traditional industrial testing approaches; however, the non-determinism of ParSplice made these approaches unusable. We then identified testing techniques specifically designed for non-deterministic software, but those techniques did not fit ParSplice either. Finally, we identified a statistical testing approach, Multinomial Testing, that works for ParSplice.

The Multinomial Testing approach is ideal for ParSplice given its constraints, i.e., time, non-determinism, and the existing continuous integration system. The lessons learned from this case study can be valuable to the larger research software community because, like ParSplice, many research software projects have stochastic behavior that produces non-deterministic results. The approach we followed to develop the test framework can serve as a model for other research software projects. We plan to extend the testing infrastructure more methodically, incorporating as many applicable testing techniques as possible.

Footnote 1: https://gitlab.com/exaalt/parsplice

Footnote 2: https://betterscientificsoftware.github.io/PSIP-Tools/PSIP-Overview.html

References

1. Chan, W., Cheung, S., Ho, J.C., Tse, T.: PAT: a pattern classification approach to automatic reference oracles for the testing of mesh simplification programs. J. Syst. Softw. 82(3), 422–434 (2009)

2. Chen, T.Y., Tse, T.H., Zhou, Z.: Fault-based testing in the absence of an oracle. In: 25th Annual International Computer Software and Applications Conference, COMPSAC 2001, pp. 172–178, October 2001

3. Easterbrook, S.M., Johns, T.C.: Engineering the software for understanding climate change. Comput. Sci. Eng. 11(6), 65–74 (2009)

4. Frounchi, K., Briand, L.C., Grady, L., Labiche, Y., Subramanyan, R.: Automating image segmentation verification and validation by learning test oracles. Inf. Softw. Technol. 53(12), 1337–1348 (2011)

5. Heroux, M.A., Willenbring, J.M., Phenow, M.N.: Improving the development process for CSE software. In: 15th EUROMICRO International Conference on Parallel, Distributed and Network-Based Processing (PDP 2007), pp. 11–17, February 2007

6. Kanewala, U., Bieman, J.M.: Techniques for testing scientific programs without an oracle. In: Proceedings of the 5th International Workshop on Software Engineering for Computational Science and Engineering, SE-CSE 2013, pp. 48–57 (2013)

7. Kanewala, U., Bieman, J.M.: Testing scientific software: a systematic literature review. Inf. Softw. Technol. 56(10), 1219–1232 (2014)

8. Miller, G.: A scientist’s nightmare: software problem leads to five retractions. Science 314(5807), 1856–1857 (2006). https://doi.org/10.1126/science.314.5807.1856

9. Perez, D., Cubuk, E., Waterland, A., Kaxiras, E., Voter, A.: Long-time dynamics through parallel trajectory splicing. J. Chem. Theory Comput. 12, 18–28 (2015)

10. Segal, J.: Some problems of professional end user developers. In: IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), pp. 111–118 (2007)

11. Segal, J.: Software development cultures and cooperation problems: a field study of the early stages of development of software for a scientific community. Comput. Support. Coop. Work (CSCW) 18(5), 581 (2009). https://doi.org/10.1007/s10606-009-9096-9

Acknowledgement

This research was supported by the Exascale Computing Project (17-SC-20-SC), a collaborative effort of the U.S. Department of Energy Office of Science and the National Nuclear Security Administration (LA-UR-20-20082).

Author information

Authors and affiliations.

Department of Computer Science, University of Alabama, Tuscaloosa, AL, USA

Nasir U. Eisty & Jeffrey C. Carver

Los Alamos National Laboratory, Los Alamos, NM, USA

Danny Perez, J. David Moulton & Hai Ah Nam


Corresponding author

Correspondence to Nasir U. Eisty.

Editor information

Editors and affiliations.

University of Amsterdam, Amsterdam, The Netherlands

Valeria V. Krzhizhanovskaya

Gábor Závodszky

Michael H. Lees

University of Tennessee, Knoxville, TN, USA

Jack J. Dongarra

Peter M. A. Sloot

Intellegibilis, Setúbal, Portugal

Sérgio Brissos

João Teixeira


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper.

Eisty, N.U., Perez, D., Carver, J.C., Moulton, J.D., Nam, H.A. (2020). Testing Research Software: A Case Study. In: Krzhizhanovskaya, V., et al. Computational Science – ICCS 2020. ICCS 2020. Lecture Notes in Computer Science, vol 12143. Springer, Cham. https://doi.org/10.1007/978-3-030-50436-6_33


DOI: https://doi.org/10.1007/978-3-030-50436-6_33

Published: 15 June 2020

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50435-9

Online ISBN: 978-3-030-50436-6

eBook Packages: Computer Science, Computer Science (R0)


https://www.nist.gov/software-testing

Software testing

News and updates

- Spotlight: The Runaway Hit for Software Testing

- NIST Publishes Review of Digital Forensic Methods

- NIST Evaluates Face Recognition Software’s Accuracy for Flight Boarding

Projects and programs

- Combinatorial Testing

- Face Recognition Prize Challenge 2017

- Measurement, Metrics, and Assurance

- FRVT 1:N Identification

Publications

- Vulnerability Test Suite Generator (VTSG) Version 3

- SATE VI Report: Bug Injection and Collection

- Efficient Parameter Exploration of Simulation Studies

- Guidelines on Minimum Standards for Developer Verification of Software


Software Engineering (in partnership with KMITL) BSc/MSci

Software engineers develop and maintain large-scale complex software infrastructures. Our programme combines theoretical computing science with the principles and practices used in the modern software industry and gives you real-world experience. Good software engineers not only have solid computer science foundations but must also be skilful in the software development process and have good people skills.

- September start

- Software Engineering (in partnership with KMITL) BSc: 4-year degree

- Software Engineering (in partnership with KMITL) MSci: 5-year degree. You may apply for transfer to the MSci mid-BSc.

- Glasgow: Gilmorehill campus and King Mongkut's Institute of Technology Ladkrabang, Thailand (KMITL)

Programme structure

Years 1 and 2

Students follow years 1 and 2 of the KMITL programme: BEng Software Engineering (International Programme)

Years 3, 4 and 5

Year 3 covers a broad range of topics and emphasises the skills needed for team-based software development when working with real-world customers.

After year 3, BSc students spend their summer on a paid placement in industry. This placement lasts a full year for MSci with work placement students.

The final year (4 or 5) includes advanced courses on software engineering and a substantial individual project, frequently in collaboration with employers.

BSc students can extend their degree by an additional year and graduate with an MSci. A decision regarding whether a student can transfer to the MSci will be taken by the University at the end of Year 3.

Course details

Core courses:

- ALGORITHMICS I (H)

- DATA FUNDAMENTALS (H)

- HUMAN-CENTRED SYSTEMS DESIGN AND EVALUATION (H)

- SYSTEMS PROGRAMMING (H)

- PROFESSIONAL SOFTWARE DEVELOPMENT (H)

- TEAM PROJECT (H)

- SOFTWARE ENGINEERING SUMMER PLACEMENT (H)

- INDIVIDUAL PROJECT (H) (SINGLE)

- PROFESSIONAL SKILLS AND ISSUES (H)

- RESEARCH METHODS AND TECHNIQUES (M) FOR MSCI

- MSCI RESEARCH PROPOSAL AND PROJECT

- PROJECT RESEARCH READINGS IN COMPUTING SCIENCE (M)

As an Honours student (years 3 and 4), you will study courses which present a practical, design-oriented approach to computing, also covering topics such as databases, software project management and real-time systems. In year 3 you will take part in a software engineering team project.


Programme alteration or discontinuation: The University of Glasgow endeavours to run all programmes as advertised. In exceptional circumstances, however, the University may withdraw or alter a programme. For more information, please see the Student contract.

Entry requirements

For entry in 2024.

Students should consult the KMITL website for entry requirements to Year 1 of the programme.

For entry to the University of Glasgow at Level 3, KMITL students will need to obtain a GPA of 3.0 in their first two years of study at KMITL.

There is a maximum of 10 places guaranteed at the University of Glasgow for KMITL students on this programme.

English language

For applicants whose first language is not English, the University sets a minimum English Language proficiency level.

English language requirements

International English Language Testing System (IELTS) Academic module (not General Training)

- 6.5 with no sub-test under 6.0.

- Tests must have been taken within 2 years 5 months of start date. Applicants must meet the overall and subtest requirements using a single test.

Common equivalent English language qualifications

All stated English tests are acceptable for admission to this programme:

TOEFL (iBT, MyBest or Home Edition)

- 90 with minimum R 20, L 19, S 19, W 23.

- Tests must have been taken within 2 years 5 months of start date. Combined scores from two tests taken within 6 months of each other can be considered.

PTE (Academic)

- 60 with minimum 59 in all sub-tests.

Glasgow International College English Language (and other foundation providers)

- Tests are accepted for academic year following sitting.

University of Glasgow Pre-sessional courses

Cambridge English Qualifications

- Cambridge Advanced English (CAE): 176 overall, no subtest less than 169

- Cambridge Proficiency in English (CPE): 176 overall, no subtest less than 169

School Qualifications

- iGCSE English or ESOL 0522/0500, grade C

- International Baccalaureate English A SL5 or HL5

- International Baccalaureate English B SL6 or HL5

- SQA National 5 English or ESOL, grade B

- SQA Higher English or ESOL, grade C

- Hong Kong Diploma of Secondary Education, English Language grade 4

- West African Examination Council, Senior Secondary School Certificate, English grade C6

Alternatives to English Language qualification

- Undergraduate degree from English speaking country (including Canada if taught in English)

- Undergraduate 2+2 degree from English speaking country

- Undergraduate 2+2 TNE degree taught in English in non-English speaking country

- Masters degree from English speaking country

- Masters degree (equivalent on NARIC to UK masters degree) taught in English in non-English speaking country.

For international students, the Home Office has confirmed that the University can choose to use these tests to make its own assessment of English language ability for visa applications to degree level programmes. The University is also able to accept an IELTS test (Academic module) from any of the 1000 IELTS test centres from around the world and we do not require a specific UKVI IELTS test for degree level programmes. We therefore still accept any of the English tests listed for admission to this programme.

Pre-sessional courses

The University of Glasgow accepts evidence of the required language level from the English for Academic Study Unit Pre-sessional courses. We also consider other BALEAP accredited pre-sessional courses:

- School of Modern Languages & Cultures: English for Academic Study

- BALEAP guide to accredited courses

What do I do if...

my language qualifications are below the requirements?

The University's School of Modern Languages and Cultures offers a range of Pre-sessional courses to bring you up to entry level. The course is accredited by BALEAP, the UK professional association for academic English teaching.

my language qualifications are not listed here?

Please contact External Relations

If you require a Tier 4 student visa, your qualification must be one of the secure English language tests accepted by UK Border Agency:

- UK Border Agency Tier 4 English Language requirements

- UKBA list of approved English language tests

Visa requirements and proof of English language level

It is a visa requirement to provide information on your level of English based on an internationally recognised and secure English language test. All test reports must be no more than 2 years old. A list of these can be found on the UK Border Agency website. If you have never taken one of these tests before, you can get an initial idea of your level by using the Common European Framework self-assessment grid, which gives you a level for each skill (e.g. listening B1/writing B2 etc.). However, please note that this is not a secure English language test and it is not sufficient evidence of your level of English for visa requirements.

Further information about English language: School of Modern Languages & Cultures: English for Academic Study

Career prospects

Our graduates are employed by companies such as Codeplay, JP Morgan, Amazon and HP. We also actively support our graduates in creating their own startups.

Accreditation

Honours graduates are eligible for membership of the British Computer Society and, after relevant work experience, they can apply to become full Chartered IT Professionals (CITP) and partial Chartered Scientists (CSci)/Chartered Engineers (CEng). MSci graduates are eligible for full CITP and partial CSci. Honours degrees hold the Euro-Inf Bachelor Quality label; MSci degrees hold the Euro-Inf Master Quality Label.

Fees and funding

- Tuition fees

How and when you pay tuition fees depends on where you’re from: see Tuition fees for details.

In Years 1 and 2, students will pay their tuition fees to KMITL. Please consult the KMITL website for details on tuition fees for the first two years of the programme.

Fees for the University of Glasgow part of the programme (Year 3 onward):

Scholarships

The University is committed to supporting students and rewarding academic excellence. That's why we've invested more than £1m in additional scholarship funding in recent years.

- King Mongkut's Institute of Technology Ladkrabang (KMITL) Glasgow Undergraduate Scholarship

The School of Computing Science and James Watt School of Engineering are offering a discount to students from King Mongkut's Institute of Technology Ladkrabang wishing to complete their 3rd and 4th years of the BSc Software Engineering/BEng Biomedical Engineering at the University of Glasgow. Progression to the MSci/MEng may be available depending on the student's performance in years 3 and 4.

The scholarships above are specific to this programme. For more funding opportunities, search the scholarships database.

How to apply

Students should consult the KMITL web pages for applying to year 1 of the programme.

Students intending to transfer to this programme from KMITL at Level 3 may apply directly to the University of Glasgow.

You will require a copy of your transcript showing courses already taken and in progress in the first two years of your degree; a copy of your English language test result and a copy of the photo page of your passport.

We would encourage students to apply in January/February for September start.

- Application form: coming soon

- Application deadline: 30 June

Students who have yet to complete year 2 of their programme at KMITL, or to obtain the necessary English language qualification may receive an offer of a place conditional on meeting these two requirements. There is no need to wait to obtain these to submit an application.

Subject league tables

- Times & Sunday Times Good University Guide [Computer Science]

- Guardian University Guide [Computer Science & Information Systems]

- Complete University Guide [Computer Science]

Related programmes

Computing science.

- Computing Science [BSc/MA/MA(SocSci)/MSci]

- Computing Science (in partnership with BUiD) [BSc/MSci]

- Computing Science (in partnership with SIT)

- Digital Media & Information Studies [MA]

- Electronic & Software Engineering [BSc/BEng/MEng]


- Best overall: Claude 3

- Best for live data: Google Gemini

- Most creative: Microsoft Copilot

- Best for research: Perplexity

- Most personal: Inflection Pi

- Best for social: xAI Grok

- Best for open source: Meta Llama 2

Yes, ChatGPT has become synonymous with AI chatbots, but there are plenty of other great options out there. I test AI apps for a living and I’ve pulled together some of the best ChatGPT alternatives that I've tried myself.

Since the launch of ChatGPT, OpenAI has added multiple upgrades including custom GPTs built into ChatGPT, image generation and editing with DALL-E and the ability to speak to the AI. You can even use it without an account.

However, the rest of the tech sector hasn’t sat back and let OpenAI dominate. Some of its competitors equal or exceed the abilities of ChatGPT and others offer features it doesn’t. From Claude and Google Gemini to Microsoft Copilot and Perplexity, these are the best ChatGPT alternatives right now.

Best Overall: Anthropic Claude 3

Claude 3 is the most human chatbot I’ve ever interacted with. Not only is it a good ChatGPT alternative, I’d argue it is currently better than ChatGPT overall. It has better reasoning and persuasion and isn’t as lazy. It will create a full app or write an entire story.

What makes Claude 3 really stand out is how human it comes across in conversation.

The context window for Claude 3 is also one of the largest of any AI chatbot, with a default of about 200,000 tokens, rising to 1 million for certain use cases. This is particularly useful now that Claude 3 includes vision capabilities and can easily analyze images, photos and graphs.

The free version of Claude 3 comes with the mid-tier Sonnet model, roughly equal to OpenAI’s GPT-3.5 or Google’s Gemini Pro. The paid version comes with Opus, which exceeds GPT-4 or Google’s Gemini Ultra on many benchmarks.

Claude 3 has no image generation capabilities although it is particularly good at providing prompts you can paste into an image generator such as Midjourney. It is also better at coding than some of the other models.

Pricing: Claude Pro, which includes Opus, costs $20 a month. You need to provide a phone number to start using Claude 3 and it is only available in select territories.

Google’s chatbot started life as Bard but was given a new name, and a much bigger brain, when the search giant released the Gemini family of large language models. It is a good all-around chatbot with a friendly turn of phrase. It is also one of the most cautious and tightly moderated.

Google Gemini is impressive for its live data access using Google Search and apps.

Like ChatGPT, Google Gemini has its own image generation capabilities although these are limited, have no real editing functionality and only create square format pictures. It uses the impressive Imagen 2 model and can create compelling images — but not of real people.

Google has come under criticism for the overzealous guardrails placed on Gemini, which resulted in issues with race in pictures of people. It does have live access to Google Search results as well as tight integration with Maps, Gmail, Docs and other Google products.

The free version uses the Gemini Pro 1.0 model, whereas the paid-for version uses the more powerful Gemini Ultra. There is also a new Gemini 1.5 model, which can analyze video content, but there is no indication of when this might come to the chatbot.

Pricing: Gemini Advanced is the paid-for version and is available for $19.99 a month, bundled with the Google One AI Premium subscription. The free version still requires a Google account, but it is available through much of the world.

Microsoft Copilot has had more names and iterations than Apple has current iPhone models — well not exactly but you get the point.

Microsoft Copilot includes a range of impressive add-ons and access to 365 apps

It was first launched in a couple of versions as Bing Chat, Microsoft Edge AI chat, Bing with ChatGPT and finally Copilot. Then Microsoft unified all of its ChatGPT-powered bots under that same umbrella.

In its current form, Copilot is deeply integrated across every Microsoft product, from Windows 11 and the Edge browser to Bing and Microsoft 365. Copilot is also in enterprise tools. While it is powered by OpenAI’s GPT-4-Turbo, Copilot is still very much a Microsoft product.

Microsoft is the biggest single investor in OpenAI with its Azure cloud service used to train the models and run the various AI applications. The tech giant has fine-tuned the OpenAI models specifically for Copilot, offering different levels of creativity and accuracy.

Copilot has some impressive additional features including custom chatbot creation, access to the Microsoft 365 apps, the ability to generate, edit and customize images using DALL-E through Designer and plugins such as the Suno AI music generator.

Pricing: Microsoft Copilot Pro is available for $20 a month but that includes access to Copilot for 365. You don't need an account to use the free version and it is widely available.

While Perplexity is marketed more as an alternative to Google than as an AI chatbot, it lets you ask questions and follow-ups, and it responds conversationally. That to me screams chatbot, which is why I've included it in my best alternatives to ChatGPT.


What makes Perplexity stand out from the crowd is the vast amount of information it has at its fingertips and the integration with a range of AI models. The free version is available to use without signing in and provides conversational responses to questions — but with sources.

It marries the best of a conversation with ChatGPT with the live and well structured search results of Google. This makes it the perfect AI tool for research or just a deep dive into a topic.

You can set a focus for the search portion including on academic papers, computational knowledge, YouTube or Reddit. You can also disable web search and just use it like ChatGPT.

Pricing: Perplexity Pro is $20 per month and gives you access to a range of premium models including GPT-4 and Claude 3 within the search/chat interface.

Pi from Inflection AI is my favorite large language model to talk to. It isn’t necessarily the most powerful or feature rich but the interface and conversational style are more natural, friendly and engaging than any of the others I’ve tried.


Even the welcome message when you first open Pi is friendly, stating: “My goal is to be useful, friendly and fun. Ask me for advice, for answers, or let’s talk about whatever’s on your mind.” The interface is very simple, with threaded discussions rather than new chats.

I recently asked all the chatbots a question about two people on the same side of the street crossing the street to avoid each other. Pi was the only one to warn me about the potential hazards from traffic when crossing over and to urge caution.

Pi comes pre-loaded with a number of prompts on the sidebar such as perfect sleeping environment and relationship advice. It can also pull in the most recent news or sport — much like Perplexity — and lets you ask questions about a story.

Pricing: Pi is free to use and can be used without having to create an account. It also has a voice feature for reading messages out loud.

Elon Musk’s Grok is almost the anti-Pi. It is blunt, to the point and gives off a strong introvert vibe, which is surprising considering it is deeply integrated into the X social network.

Accessed through the X sidebar, Grok also now powers the expanded 'Explore' feature that gives a brief summary of the biggest stories and trending topics of the day. While making X more engaging seems to be its primary purpose, Grok is also a ChatGPT-style chatbot.

Unlike OpenAI's models, Grok is actually open, with xAI making the first version of the model available to download, train and fine-tune on your own hardware. The big differentiator for Grok is what Elon Musk calls “free speech”: its guardrails are less tightly wound than those of other chatbots.

I asked Grok the same question about crossing the street to avoid someone, and it was the only AI chatbot to pick up on the possibility that we might be avoiding each other for a negative reason, rather than suggesting it was simply to avoid a collision.

Pricing: Grok is now available with an X Premium account. It previously required Premium+. X Premium is available for $8 a month if you sign up on the web rather than through the iOS app.

Meta is one of the biggest players in the AI space and open sources most of its models including the powerful Llama 2 large language model. It will also open source Llama 3 when it comes online this summer. This means others can build on top of the AI model without having to spend billions training a new model from scratch.

Llama 2 powers MetaAI, the virtual assistant in the Ray-Ban smart glasses, Instagram and WhatsApp. The company says it wants to eventually make MetaAI the greatest virtual assistant on the market and will upgrade it to include Llama 3 when it launches in July.

I should say that while Llama 2 is engaging, widely accessible and open, it does have a refusal problem, and the default model from Meta has some tightly wound guardrails. The company says this will be solved with Llama 3.

Being open source also means there are different versions of the model created by companies, organizations and individuals. In terms of its use as a pure chatbot, it's a fun and engaging companion in both the open source and Meta-fied versions.

Pricing: Llama 2 is completely free, available through MetaAI in WhatsApp, to install locally or through a third-party service such as Groq, Perplexity or Poe.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?

Research on Software Testing Technology Under the Background of Big Data

AI Takes the Sky: DARPA's Autonomous F-16 Dogfights Human Pilot in Groundbreaking Air Force Test

The future of aerial combat is unfolding above us as artificial intelligence takes the stick in a jet-to-jet showdown. In a series of remarkable test flights, the Defense Advanced Research Projects Agency (DARPA), in collaboration with the U.S. Air Force Test Pilot School, has successfully demonstrated that AI software can take on a human pilot in dogfighting scenarios with an F-16 fighter jet.

The star of these tests, the X-62A VISTA (Variable In-flight Simulator Test Aircraft), is a modified F-16, built to trial cutting-edge flight technologies. “The incredible accomplishment of this year was to take these machine learning agents and place them into the X-62A in a real-world environment,” remarked Col. James Valpiani, highlighting the momentous transition from simulation to actual flight.

DARPA’s foray into AI-controlled dogfights began with the 2020 AlphaDogfight trials, where an AI opponent went head-to-head with human pilots in a flight simulator. The AI emerged victorious, outmaneuvering human competitors with speeds and maneuvers beyond human tolerances. As Secretary of the Air Force Frank Kendall noted, the machine learning approach is particularly suited to “environments and situations where conditions fluctuate dynamically,” such as the chaos of air-to-air combat.

Machine learning’s potential in aviation isn’t just about outmaneuvering a human opponent. It’s about reshaping the military’s approach to aerial encounters. “The potential for autonomous air-to-air combat has been imaginable for decades, but the reality has remained a distant dream up until now,” said Kendall, marking the recent feats as “transformational moments” in combat aviation.

These tests were not undertaken lightly: they involved over 100,000 lines of flight-critical software updates and rigorous safety evaluations. The X-62A flew a series of defensive and offensive maneuvers, culminating in high-speed, nose-to-nose engagements. All of these were performed while adhering to strict safety and ethical protocols, no easy feat when entrusting AI with the complexities of combat flight.

The implications of these tests are vast. DARPA has compared the X-62A’s performance to AlphaGo Zero’s impact on strategic games like Chess and Go, suggesting a similar validation for autonomous aviation. This progress is driving programs like the Air Force’s collaborative combat aircraft initiative, pushing the envelope on AI’s role in future counter-air operations and beyond.

Frank Kendall’s enthusiasm for AI’s military applications is palpable. He plans to demonstrate his confidence by flying aboard an AI-controlled F-16 later this year. “The critical problem on the battlefield is time. And AI will be able to do much more complicated things much more accurately and much faster than human beings can,” he asserts.

The journey of AI from virtual trials to actual cockpit control in the X-62A has been rapid and remarkable. As Bill Gray, Chief Test Pilot at the USAF Test Pilot School, puts it, “Dogfighting was the problem to solve so we could start testing autonomous artificial intelligence systems in the air.”

Software testing is an important stage to test the reliability of the software being developed. Software testing can be done for any software developed (Jamil et al., 2016; Lawana, 2014). For the ...

Research in software testing is growing and rapidly-evolving. Based on the keywords assigned to publications, we seek to identify predominant research topics and understand how they are connected and have evolved. We have applied co-word analysis to characterize the topology of software testing research over four decades of research publications.

Abstract: Software testing is a process that involves executing a software program or application and finding all of its errors or bugs, so that the result is defect-free software. The quality of any software can only be known through testing. With the advancement of technology around the world, the number of verification ...

It gets harder and harder to guarantee the quality of software systems due to their increasing complexity and fast development. Because it helps spot errors and gaps during the first phases of software development, software testing is one of the most crucial stages of software engineering. Software testing used to be done manually, which is a time-consuming, imprecise procedure that comes with ...

Software testing is a crucial component of software development. With the increasing complexity of software systems, traditional manual testing methods are becoming less feasible. Artificial Intelligence (AI) has emerged as a promising approach to software testing in recent years. This review paper aims to provide an in-depth understanding of the current state of software testing using AI. The ...

Evaluating software testing techniques: A systematic mapping study. Mitchell Mayeda, Anneliese Andrews, in Advances in Computers, 2021. Introduction: Software testing is a vital process for detecting faults in software and reducing the risk of using it. With a rapidly expanding software industry and a heavy reliance on increasingly prevalent software, there is a serious demand for employing ...

Context. Software testing is an important and costly software engineering activity in the industry. Despite the efforts of the software testing research community in the last several decades, various studies show that still many practitioners in the industry report challenges in their software testing tasks.