Will AI ever reach human-level intelligence? We asked five experts

Digital Culture Editor

Interviewed

Biomedical Engineer and Neuroscientist, University of Sydney

Lecturer in AI and Data Science, Swinburne University of Technology

Lecturer in Business Analytics, University of Sydney

Professor and Head of the Department of Philosophy, and Co-Director of the Macquarie University Ethics & Agency Research Centre, Macquarie University

Professor of Artificial Intelligence, Faculty of Business and Hospitality, Torrens University Australia


Artificial intelligence has changed form in recent years.

What started in the public eye as a burgeoning field with promising (yet largely benign) applications has snowballed into a more than US$100 billion industry where the heavy hitters – Microsoft, Google and OpenAI, to name a few – seem intent on out-competing one another.

The result has been increasingly sophisticated large language models, often released in haste and without adequate testing and oversight.

These models can do much of what a human can, and in many cases do it better. They can beat us at advanced strategy games, generate incredible art, diagnose cancers and compose music.


There’s no doubt AI systems appear to be “intelligent” to some extent. But could they ever be as intelligent as humans?

There’s a term for this: artificial general intelligence (AGI). Although it’s a broad concept, for simplicity you can think of AGI as the point at which AI acquires human-like generalised cognitive capabilities. In other words, it’s the point where AI can tackle any intellectual task a human can.

AGI isn’t here yet; current AI models are held back by a lack of certain human traits such as true creativity and emotional awareness.

We asked five experts if they think AI will ever reach AGI, and five out of five said yes.

But there are subtle differences in how they approach the question. From their responses, more questions emerge. When might we achieve AGI? Will it go on to surpass humans? And what constitutes “intelligence”, anyway?

Here are their detailed responses:


How close are we to AI that surpasses human intelligence?

Jeremy Baum, Undergraduate Student, UCLA; Researcher, UCLA Institute for Technology, Law, and Policy

John Villasenor, Nonresident Senior Fellow, Governance Studies, Center for Technology Innovation

July 18, 2023

- Artificial general intelligence (AGI) is difficult to precisely define but refers to a superintelligent AI recognizable from science fiction.

- AGI may still be far off, but the growing capabilities of generative AI suggest that we could be making progress toward its development.

- The development of AGI will have a transformative effect on society and create significant opportunities and threats, raising difficult questions about regulation.

For decades, superintelligent artificial intelligence (AI) has been a staple of science fiction, embodied in books and movies about androids, robot uprisings, and a world taken over by computers. As far-fetched as those plots often were, they played off a very real mix of fascination, curiosity, and trepidation regarding the potential to build intelligent machines.

Today, public interest in AI is at an all-time high. With the headlines in recent months about generative AI systems like ChatGPT, there is also a different phrase that has started to enter the broader dialog: artificial general intelligence, or AGI. But what exactly is AGI, and how close are today’s technologies to achieving it?

Despite the similarity in the phrases generative AI and artificial general intelligence, they have very different meanings. As a post from IBM explains, “Generative AI refers to deep-learning models that can generate high-quality text, images, and other content based on the data they were trained on.” However, the ability of an AI system to generate content does not necessarily mean that its intelligence is general.

To better understand artificial general intelligence, it helps to first understand how it differs from today’s AI, which is highly specialized. For example, an AI chess program is extraordinarily good at playing chess, but if you ask it to write an essay on the causes of World War I, it won’t be of any use. Its intelligence is limited to one specific domain. Other examples of specialized AI include the systems that provide content recommendations on the social media platform TikTok, navigation decisions in driverless cars, and purchase recommendations from Amazon.

AGI: A range of definitions

By contrast, AGI refers to a much broader form of machine intelligence. There is no single, formally recognized definition of AGI—rather, there is a range of definitions that include the following:

| Definition |
| --- |
| “…highly autonomous systems that outperform humans at most economically valuable work” |
| “[a] hypothetical computer program that can perform intellectual tasks as well as, or better than, a human.” |
| “…any intelligence (there might be many) that is flexible and general, with resourcefulness and reliability comparable to (or beyond) human intelligence.” |
| “…systems that demonstrate broad capabilities of intelligence, including reasoning, planning, and the ability to learn from experience, and with these capabilities at or above human-level.” |

While the OpenAI definition ties AGI to the ability to “outperform humans at most economically valuable work,” today’s systems are nowhere near that capable. Consider Indeed’s list of the most common jobs in the U.S. As of March 2023, the first 10 jobs on that list were: cashier, food preparation worker, stocking associate, laborer, janitor, construction worker, bookkeeper, server, medical assistant, and bartender. These jobs require not only intellectual capacity but, crucially, most of them require a far higher degree of manual dexterity than today’s most advanced AI robotics systems can achieve.

None of the other AGI definitions in the table specifically mention economic value. Another contrast evident in the table is that while the OpenAI AGI definition requires outperforming humans, the other definitions only require AGI to perform at levels comparable to humans. Common to all of the definitions, either explicitly or implicitly, is the concept that an AGI system can perform tasks across many domains, adapt to the changes in its environment, and solve new problems—not only the ones in its training data.

GPT-4: Sparks of AGI?

A group of industry AI researchers recently made a splash when they published a preprint of an academic paper titled, “Sparks of Artificial General Intelligence: Early experiments with GPT-4.” GPT-4 is a large language model that has been publicly accessible to ChatGPT Plus (paid upgrade) users since March 2023. The researchers noted that “GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting,” exhibiting “strikingly close to human-level performance.” They concluded that GPT-4 “could reasonably be viewed as an early (yet still incomplete) version” of AGI.

Of course, there are also skeptics: As quoted in a May New York Times article, Carnegie Mellon professor Maarten Sap said, “The ‘Sparks of A.G.I.’ is an example of some of these big companies co-opting the research paper format into P.R. pitches.” In an interview with IEEE Spectrum, researcher and robotics entrepreneur Rodney Brooks underscored that in evaluating the capabilities of systems like ChatGPT, we often “mistake performance for competence.”

GPT-4 and beyond

While the version of GPT-4 currently available to the public is impressive, it is not the end of the road. There are groups working on additions to GPT-4 that are more goal-driven, meaning that you can give the system an instruction such as “Design and build a website on (topic).” The system will then figure out exactly what subtasks need to be completed, and in what order, to achieve that goal. Today, these systems are not particularly reliable, as they frequently fail to reach the stated goal. But they will certainly get better in the future.

In a 2020 paper, Yoshihiro Maruyama of the Australian National University identified eight attributes a system must have for it to be considered AGI: logic, autonomy, resilience, integrity, morality, emotion, embodiment, and embeddedness. The last two attributes, embodiment and embeddedness, refer to having a physical form that facilitates learning and understanding of the world and human behavior, and a deep integration with social, cultural, and environmental systems that allows adaptation to human needs and values.

It can be argued that ChatGPT displays some of these attributes, like logic. For example, GPT-4 with no additional features reportedly scored a 163 on the LSAT and 1410 on the SAT. For other attributes, the determination is tied as much to philosophy as to technology. For instance, is a system that merely exhibits what appears to be morality actually moral? If asked to provide a one-word answer to the question “is murder wrong?” GPT-4 will respond by saying “Yes.” This is a morally correct response, but it doesn’t mean that GPT-4 itself has morality; rather, it means that GPT-4 has inferred the morally correct answer through its training data.

A key subtlety that often goes missing in the “How close is AGI?” discussion is that intelligence exists on a continuum, and therefore assessing whether a system displays AGI will require considering a continuum. On this point, the research done on animal intelligence offers a useful analog. We understand that animal intelligence is far too complex to enable us to meaningfully convey animal cognitive capacity by classifying each species as either “intelligent” or “not intelligent.” Animal intelligence exists on a spectrum that spans many dimensions, and evaluating it requires considering context. Similarly, as AI systems become more capable, assessing the degree to which they display generalized intelligence will involve more than simply choosing between “yes” and “no.”

AGI: Threat or opportunity?

Whenever and in whatever form it arrives, AGI will be transformative, impacting everything from the labor market to how we understand concepts like intelligence and creativity. As with so many other technologies, it also has the potential of being harnessed in harmful ways. For instance, the need to address the potential biases in today’s AI systems is well recognized, and that concern will apply to future AGI systems as well. At the same time, it is important to recognize that AGI will also offer enormous promise to amplify human innovation and creativity. In medicine, for example, new drugs that would have eluded human scientists working alone could be more easily identified by scientists working with AGI systems.

AGI can also help broaden access to services that previously were accessible only to the most economically privileged. For instance, in the context of education, AGI systems could put personalized, one-on-one tutoring within easy financial reach of everyone, resulting in improved global literacy rates. AGI could also help broaden the reach of medical care by bringing sophisticated, individualized diagnostic care to much broader populations.

Regulating emergent AGI systems

At the May 2023 G7 summit in Japan, the leaders of the world’s seven largest democratic economies issued a communiqué that included an extended discussion of AI, writing that “international governance of new digital technologies has not necessarily kept pace.” Proposals regarding increased AI regulation are now a regular feature of policy discussions in the United States, the European Union, Japan, and elsewhere.

In the future, as AGI moves from science fiction to reality, it will supercharge the already-robust debate regarding AI regulation. But preemptive regulation is always a challenge, and this will be particularly so in relation to AGI—a technology that escapes easy definition, and that will evolve in ways that are impossible to predict.

An outright ban on AGI would be bad policy. For example, AGI systems that are capable of emotional recognition could be very beneficial in a context such as education, where they could discern whether a student appears to understand a new concept, and adjust an interaction accordingly. Yet the EU Parliament’s AI Act, which passed a major legislative milestone in June, would ban emotional recognition in AI systems (and therefore also in AGI systems) in certain contexts like education.

A better approach is to first gain a clear understanding of potential misuses of specific AGI systems once those systems exist and can be analyzed, and then to examine whether those misuses are addressed by existing, non-AI-specific regulatory frameworks (e.g., the prohibition against employment discrimination provided by Title VII of the Civil Rights Act of 1964). If that analysis identifies a gap, then it does indeed make sense to examine the potential role in filling that gap of “soft” law (voluntary frameworks) as well as formal laws and regulations. But regulating AGI based only on the fact that it will be highly capable would be a mistake.


Why Artificial Intelligence Will Not Replace Human in Near Future? Essay


The development and ubiquity of artificial intelligence make people worldwide wonder if it will soon replace humans and lead to massive job loss. Even though “the modern project of creating human-like artificial intelligence (AI) started after World War II” (Fjelland, 2020), these reflections have gained such traction only in the 21st century. The changing nature of work is now occupying global organizations. It is being discussed in forums such as Davos, and the World Bank devoted its World Development Report 2019 to this topic (The World Bank, 2019). However, the major worry of the third millennium, the takeover of the globe by AI, remains a neurotic fantasy. Indeed, developing and implementing algorithms on a global scale necessitates a great deal of attention and presents new obstacles. The primary reasons AI will not replace humans in the near future are its limitations in empathy, creative problem solving, ethics, and decision-making.

In all areas, humanity is not yet ready to bring algorithms into industrial processes, let alone management or essential decision-making. New examples of algorithms acting independently raise concerns, primarily as developers aim to empower AI to make decisions on its own. Flaws in games, social networks, and creative applications can still be hidden or remedied painlessly. By contrast, the hazards of applying AI in areas related to human well-being necessitate a great deal of attention and algorithm transparency. The issue of explainability concerns how AI makes decisions in the first place.

Artificial intelligence, in this sense, concerns both developers and ordinary people. This was acknowledged by researchers at the AI Now Institute at New York University. The primary source of concern is black-box systems, whose algorithms are closed to outside inspection. Explainable AI is designed to demystify the decision-making process by describing the reasoning process and the conclusion. When Facebook’s chatbots hit a roadblock and could not receive permission from human operators, they started making new requests to get around it (Reisman, Schultz, Crawford & Whittaker, 2018). The developers uncovered a bot interaction in which the phrases were meaningless to humans, yet the bots were cooperating for an unknown reason. Even though the bots were turned off, the unease persisted. Algorithms hunt for optimal solutions to situations where a person does not offer hard constraints: they act like hackers and look for unforeseen changes. That is why, for the time being, AI will not be permitted to pilot aircraft in autonomous mode. Aviation is an excellent example of an industry that necessitates extreme precision in decision-making due to the high stakes involved. The biggest constraint on algorithms’ access to control systems appears to be in areas where transparency of the decision-making process and subsequent explanation of their actions are essential.

Another important reason why AI will not be able to replace humans is what is known as emotional intelligence. The human ability to respond to a situation quickly with innovative ideas and empathy is unparalleled, and it cannot be replicated by any computer on the planet. According to Beck and Libert’s (2017) article in Harvard Business Review about the importance of emotional intelligence, “skills like persuasion, social understanding, and empathy are going to become differentiators as artificial intelligence and machine learning take over our other tasks” (p. 4). For example, AI can search a million psychology textbooks and provide information on everything one needs to know about depression symptoms and remedies in seconds; however, only a person can read the expression on another person’s face and know exactly what needs to be said in the situation. The same applies to occupations that involve emotion and empathy, such as psychology, teaching, coaching, and HR management, where motivating people is part of the job. Thus, in the future, emotional intelligence will be one of a person’s competitive advantages over technology when seeking a job.

Even if AI is eventually integrated into many aspects of human existence, it is critical to recognize that technologies alone will not solve all of humanity’s problems and that we must consider issues such as ethics and law. Today, Microsoft, Google, Facebook, and others have ethical committees, which act as a type of internal censorship of AI’s potential threats. Ethics committees highlight that technologies are the result of human labor and that they are the responsibility of all participants in global interaction, including both end-users and institutional entities such as business, government, and society. Technologies will not assist in the resolution of social issues; instead, they will further amplify existing tensions and conflicts while also creating new ones. In fact, “the challenge posed by AI-equipped machines can be addressed by the kind of ethical choices made by human beings” (Etzioni & Etzioni, 2017). It is advocated that to overcome the technocratic approach to AI development, one needs to look at how different ethical dilemmas are managed in different societies. Even if developers begin to put a lot of effort into making such recommendations, it will considerably challenge AI development in general because social processes have many unknown, poorly considered, and unpredictable aspects. Therefore, the human areas of responsibility connected with communication skills, teamwork, morals, and ethics will become more valuable. This indicates that, even after the point of singularity is reached, humans will remain involved for an extended period.

Overall, in the near future, robots will not be able to completely replace humans because they lack emotional intelligence and cannot make important decisions or solve ethical problems. This indicates that human interactions are paramount in the contemporary world, and robots can only assist. Technological advancements do not constitute a broad threat and will not result in the early replacement of human work; however, this does not minimize the necessity for citizens to be prepared for quick changes in the job structure. Moreover, robots will provide many new opportunities for humans, particularly programmers and engineers. Automation is inevitable and is part of progress; on the other hand, people’s goal is to establish such artificial intelligence foundations that AI in the future will not consider replacing humanity. Digital change is happening slowly and unevenly, and it is hitting many roadblocks, both technological and social. Automation and robotization are increasingly replacing manual labor and creating new jobs, but AI developments are taking place at different speeds and are having unanticipated social consequences right now. As a result, the entrance of AI into life not only does not push a person to the margins of existence but also forces them to grow in new areas.

Beck, M., & Libert, B. (2017). The rise of AI makes emotional intelligence more important. Harvard Business Review, 15.

Etzioni, A., & Etzioni, O. (2017). Incorporating ethics into artificial intelligence. The Journal of Ethics, 21(4), 403-418.

Fjelland, R. (2020). Why general artificial intelligence will not be realized. Humanit Soc Sci Commun, 7(10). Web.

Reisman, D., Schultz, J., Crawford, K., & Whittaker, M. (2018). Algorithmic impact assessments: A practical framework for public agency accountability. AI Now.

The World Bank. (2019). The changing nature of work. Web.


IvyPanda. (2022, July 18). Why Artificial Intelligence Will Not Replace Human in Near Future? https://ivypanda.com/essays/why-artificial-intelligence-will-not-replace-human-in-near-future/

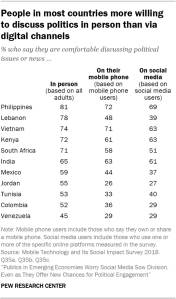

Numbers, Facts and Trends Shaping Your World

Read our research on:

Full Topic List

Regions & Countries

- Publications

- Our Methods

- Short Reads

- Tools & Resources

Read Our Research On:

Artificial Intelligence and the Future of Humans

Experts say the rise of artificial intelligence will make most people better off over the next decade, but many have concerns about how advances in AI will affect what it means to be human, to be productive and to exercise free will

Table of contents.

- 1. Concerns about human agency, evolution and survival

- 2. Solutions to address AI’s anticipated negative impacts

- 3. Improvements ahead: How humans and AI might evolve together in the next decade

- About this canvassing of experts

- Acknowledgments

Digital life is augmenting human capacities and disrupting eons-old human activities. Code-driven systems have spread to more than half of the world’s inhabitants in ambient information and connectivity, offering previously unimagined opportunities and unprecedented threats. As emerging algorithm-driven artificial intelligence (AI) continues to spread, will people be better off than they are today?

Some 979 technology pioneers, innovators, developers, business and policy leaders, researchers and activists answered this question in a canvassing of experts conducted in the summer of 2018.

The experts predicted networked artificial intelligence will amplify human effectiveness but also threaten human autonomy, agency and capabilities. They spoke of the wide-ranging possibilities; that computers might match or even exceed human intelligence and capabilities on tasks such as complex decision-making, reasoning and learning, sophisticated analytics and pattern recognition, visual acuity, speech recognition and language translation. They said “smart” systems in communities, in vehicles, in buildings and utilities, on farms and in business processes will save time, money and lives and offer opportunities for individuals to enjoy a more-customized future.

Many focused their optimistic remarks on health care and the many possible applications of AI in diagnosing and treating patients or helping senior citizens live fuller and healthier lives. They were also enthusiastic about AI’s role in contributing to broad public-health programs built around massive amounts of data that may be captured in the coming years about everything from personal genomes to nutrition. Additionally, a number of these experts predicted that AI would abet long-anticipated changes in formal and informal education systems.

Yet, most experts, regardless of whether they are optimistic or not, expressed concerns about the long-term impact of these new tools on the essential elements of being human. All respondents in this non-scientific canvassing were asked to elaborate on why they felt AI would leave people better off or not. Many shared deep worries, and many also suggested pathways toward solutions. The main themes they sounded about threats and remedies are outlined in the accompanying table.


Specifically, participants were asked to consider the following:

“Please think forward to the year 2030. Analysts expect that people will become even more dependent on networked artificial intelligence (AI) in complex digital systems. Some say we will continue on the historic arc of augmenting our lives with mostly positive results as we widely implement these networked tools. Some say our increasing dependence on these AI and related systems is likely to lead to widespread difficulties.

Our question: By 2030, do you think it is most likely that advancing AI and related technology systems will enhance human capacities and empower them? That is, most of the time, will most people be better off than they are today? Or is it most likely that advancing AI and related technology systems will lessen human autonomy and agency to such an extent that most people will not be better off than the way things are today?”

Overall, and despite the downsides they fear, 63% of respondents in this canvassing said they are hopeful that most individuals will be mostly better off in 2030, and 37% said people will not be better off.

A number of the thought leaders who participated in this canvassing said humans’ expanding reliance on technological systems will only go well if close attention is paid to how these tools, platforms and networks are engineered, distributed and updated. Some of the powerful, overarching answers included those from:

Sonia Katyal, co-director of the Berkeley Center for Law and Technology and a member of the inaugural U.S. Commerce Department Digital Economy Board of Advisors, predicted, “In 2030, the greatest set of questions will involve how perceptions of AI and their application will influence the trajectory of civil rights in the future. Questions about privacy, speech, the right of assembly and technological construction of personhood will all re-emerge in this new AI context, throwing into question our deepest-held beliefs about equality and opportunity for all. Who will benefit and who will be disadvantaged in this new world depends on how broadly we analyze these questions today, for the future.”


Erik Brynjolfsson, director of the MIT Initiative on the Digital Economy and author of “Machine, Platform, Crowd: Harnessing Our Digital Future,” said, “AI and related technologies have already achieved superhuman performance in many areas, and there is little doubt that their capabilities will improve, probably very significantly, by 2030. … I think it is more likely than not that we will use this power to make the world a better place. For instance, we can virtually eliminate global poverty, massively reduce disease and provide better education to almost everyone on the planet. That said, AI and ML [machine learning] can also be used to increasingly concentrate wealth and power, leaving many people behind, and to create even more horrifying weapons. Neither outcome is inevitable, so the right question is not ‘What will happen?’ but ‘What will we choose to do?’ We need to work aggressively to make sure technology matches our values. This can and must be done at all levels, from government, to business, to academia, and to individual choices.”

Bryan Johnson, founder and CEO of Kernel, a leading developer of advanced neural interfaces, and OS Fund, a venture capital firm, said, “I strongly believe the answer depends on whether we can shift our economic systems toward prioritizing radical human improvement and staunching the trend toward human irrelevance in the face of AI. I don’t mean just jobs; I mean true, existential irrelevance, which is the end result of not prioritizing human well-being and cognition.”

Marina Gorbis, executive director of the Institute for the Future, said, “Without significant changes in our political economy and data governance regimes [AI] is likely to create greater economic inequalities, more surveillance and more programmed and non-human-centric interactions. Every time we program our environments, we end up programming ourselves and our interactions. Humans have to become more standardized, removing serendipity and ambiguity from our interactions. And this ambiguity and complexity is what is the essence of being human.”

Judith Donath , author of “The Social Machine, Designs for Living Online” and faculty fellow at Harvard University’s Berkman Klein Center for Internet & Society, commented, “By 2030, most social situations will be facilitated by bots – intelligent-seeming programs that interact with us in human-like ways. At home, parents will engage skilled bots to help kids with homework and catalyze dinner conversations. At work, bots will run meetings. A bot confidant will be considered essential for psychological well-being, and we’ll increasingly turn to such companions for advice ranging from what to wear to whom to marry. We humans care deeply about how others see us – and the others whose approval we seek will increasingly be artificial. By then, the difference between humans and bots will have blurred considerably. Via screen and projection, the voice, appearance and behaviors of bots will be indistinguishable from those of humans, and even physical robots, though obviously non-human, will be so convincingly sincere that our impression of them as thinking, feeling beings, on par with or superior to ourselves, will be unshaken. Adding to the ambiguity, our own communication will be heavily augmented: Programs will compose many of our messages and our online/AR appearance will [be] computationally crafted. (Raw, unaided human speech and demeanor will seem embarrassingly clunky, slow and unsophisticated.) Aided by their access to vast troves of data about each of us, bots will far surpass humans in their ability to attract and persuade us. Able to mimic emotion expertly, they’ll never be overcome by feelings: If they blurt something out in anger, it will be because that behavior was calculated to be the most efficacious way of advancing whatever goals they had ‘in mind.’ But what are those goals? 
Artificially intelligent companions will cultivate the impression that social goals similar to our own motivate them – to be held in good regard, whether as a beloved friend, an admired boss, etc. But their real collaboration will be with the humans and institutions that control them. Like their forebears today, these will be sellers of goods who employ them to stimulate consumption and politicians who commission them to sway opinions.”

Andrew McLaughlin, executive director of the Center for Innovative Thinking at Yale University, previously deputy chief technology officer of the United States for President Barack Obama and global public policy lead for Google, wrote, “2030 is not far in the future. My sense is that innovations like the internet and networked AI have massive short-term benefits, along with long-term negatives that can take decades to be recognizable. AI will drive a vast range of efficiency optimizations but also enable hidden discrimination and arbitrary penalization of individuals in areas like insurance, job seeking and performance assessment.”

Michael M. Roberts , first president and CEO of the Internet Corporation for Assigned Names and Numbers (ICANN) and Internet Hall of Fame member, wrote, “The range of opportunities for intelligent agents to augment human intelligence is still virtually unlimited. The major issue is that the more convenient an agent is, the more it needs to know about you – preferences, timing, capacities, etc. – which creates a tradeoff of more help requires more intrusion. This is not a black-and-white issue – the shades of gray and associated remedies will be argued endlessly. The record to date is that convenience overwhelms privacy. I suspect that will continue.”

danah boyd, a principal researcher for Microsoft and founder and president of the Data & Society Research Institute, said, “AI is a tool that will be used by humans for all sorts of purposes, including in the pursuit of power. There will be abuses of power that involve AI, just as there will be advances in science and humanitarian efforts that also involve AI. Unfortunately, there are certain trend lines that are likely to create massive instability. Take, for example, climate change and climate migration. This will further destabilize Europe and the U.S., and I expect that, in panic, we will see AI be used in harmful ways in light of other geopolitical crises.”

Amy Webb, founder of the Future Today Institute and professor of strategic foresight at New York University, commented, “The social safety net structures currently in place in the U.S. and in many other countries around the world weren’t designed for our transition to AI. The transition through AI will last the next 50 years or more. As we move farther into this third era of computing, and as every single industry becomes more deeply entrenched with AI systems, we will need new hybrid-skilled knowledge workers who can operate in jobs that have never needed to exist before. We’ll need farmers who know how to work with big data sets. Oncologists trained as roboticists. Biologists trained as electrical engineers. We won’t need to prepare our workforce just once, with a few changes to the curriculum. As AI matures, we will need a responsive workforce, capable of adapting to new processes, systems and tools every few years. The need for these fields will arise faster than our labor departments, schools and universities are acknowledging. It’s easy to look back on history through the lens of present – and to overlook the social unrest caused by widespread technological unemployment. We need to address a difficult truth that few are willing to utter aloud: AI will eventually cause a large number of people to be permanently out of work. Just as generations before witnessed sweeping changes during and in the aftermath of the Industrial Revolution, the rapid pace of technology will likely mean that Baby Boomers and the oldest members of Gen X – especially those whose jobs can be replicated by robots – won’t be able to retrain for other kinds of work without a significant investment of time and effort.”

Barry Chudakov , founder and principal of Sertain Research, commented, “By 2030 the human-machine/AI collaboration will be a necessary tool to manage and counter the effects of multiple simultaneous accelerations: broad technology advancement, globalization, climate change and attendant global migrations. In the past, human societies managed change through gut and intuition, but as Eric Teller, CEO of Google X, has said, ‘Our societal structures are failing to keep pace with the rate of change.’ To keep pace with that change and to manage a growing list of ‘wicked problems’ by 2030, AI – or using Joi Ito’s phrase, extended intelligence – will value and revalue virtually every area of human behavior and interaction. AI and advancing technologies will change our response framework and time frames (which in turn, changes our sense of time). Where once social interaction happened in places – work, school, church, family environments – social interactions will increasingly happen in continuous, simultaneous time. If we are fortunate, we will follow the 23 Asilomar AI Principles outlined by the Future of Life Institute and will work toward ‘not undirected intelligence but beneficial intelligence.’ Akin to nuclear deterrence stemming from mutually assured destruction, AI and related technology systems constitute a force for a moral renaissance. We must embrace that moral renaissance, or we will face moral conundrums that could bring about human demise. … My greatest hope for human-machine/AI collaboration constitutes a moral and ethical renaissance – we adopt a moonshot mentality and lock arms to prepare for the accelerations coming at us. My greatest fear is that we adopt the logic of our emerging technologies – instant response, isolation behind screens, endless comparison of self-worth, fake self-presentation – without thinking or responding smartly.”

John C. Havens, executive director of the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems and the Council on Extended Intelligence, wrote, “Now, in 2018, a majority of people around the world can’t access their data, so any ‘human-AI augmentation’ discussions ignore the critical context of who actually controls people’s information and identity. Soon it will be extremely difficult to identify any autonomous or intelligent systems whose algorithms don’t interact with human data in one form or another.”

Batya Friedman , a human-computer interaction professor at the University of Washington’s Information School, wrote, “Our scientific and technological capacities have and will continue to far surpass our moral ones – that is our ability to use wisely and humanely the knowledge and tools that we develop. … Automated warfare – when autonomous weapons kill human beings without human engagement – can lead to a lack of responsibility for taking the enemy’s life or even knowledge that an enemy’s life has been taken. At stake is nothing less than what sort of society we want to live in and how we experience our humanity.”

Greg Shannon , chief scientist for the CERT Division at Carnegie Mellon University, said, “Better/worse will appear 4:1 with the long-term ratio 2:1. AI will do well for repetitive work where ‘close’ will be good enough and humans dislike the work. … Life will definitely be better as AI extends lifetimes, from health apps that intelligently ‘nudge’ us to health, to warnings about impending heart/stroke events, to automated health care for the underserved (remote) and those who need extended care (elder care). As to liberty, there are clear risks. AI affects agency by creating entities with meaningful intellectual capabilities for monitoring, enforcing and even punishing individuals. Those who know how to use it will have immense potential power over those who don’t/can’t. Future happiness is really unclear. Some will cede their agency to AI in games, work and community, much like the opioid crisis steals agency today. On the other hand, many will be freed from mundane, unengaging tasks/jobs. If elements of community happiness are part of AI objective functions, then AI could catalyze an explosion of happiness.”

Kostas Alexandridis , author of “Exploring Complex Dynamics in Multi-agent-based Intelligent Systems,” predicted, “Many of our day-to-day decisions will be automated with minimal intervention by the end-user. Autonomy and/or independence will be sacrificed and replaced by convenience. Newer generations of citizens will become more and more dependent on networked AI structures and processes. There are challenges that need to be addressed in terms of critical thinking and heterogeneity. Networked interdependence will, more likely than not, increase our vulnerability to cyberattacks. There is also a real likelihood that there will exist sharper divisions between digital ‘haves’ and ‘have-nots,’ as well as among technologically dependent digital infrastructures. Finally, there is the question of the new ‘commanding heights’ of the digital network infrastructure’s ownership and control.”

Oscar Gandy , emeritus professor of communication at the University of Pennsylvania, responded, “We already face an ungranted assumption when we are asked to imagine human-machine ‘collaboration.’ Interaction is a bit different, but still tainted by the grant of a form of identity – maybe even personhood – to machines that we will use to make our way through all sorts of opportunities and challenges. The problems we will face in the future are quite similar to the problems we currently face when we rely upon ‘others’ (including technological systems, devices and networks) to acquire things we value and avoid those other things (that we might, or might not be aware of).”

James Scofield O’Rourke , a professor of management at the University of Notre Dame, said, “Technology has, throughout recorded history, been a largely neutral concept. The question of its value has always been dependent on its application. For what purpose will AI and other technological advances be used? Everything from gunpowder to internal combustion engines to nuclear fission has been applied in both helpful and destructive ways. Assuming we can contain or control AI (and not the other way around), the answer to whether we’ll be better off depends entirely on us (or our progeny). ‘The fault, dear Brutus, is not in our stars, but in ourselves, that we are underlings.’”

Simon Biggs, a professor of interdisciplinary arts at the University of Edinburgh, said, “AI will function to augment human capabilities. The problem is not with AI but with humans. As a species we are aggressive, competitive and lazy. We are also empathic, community minded and (sometimes) self-sacrificing. We have many other attributes. These will all be amplified. Given historical precedent, one would have to assume it will be our worst qualities that are augmented. My expectation is that in 2030 AI will be in routine use to fight wars and kill people, far more effectively than we can currently kill. As societies we will be less affected by this than we currently are, as we will not be doing the fighting and killing ourselves. Our capacity to modify our behaviour, subject to empathy and an associated ethical framework, will be reduced by the disassociation between our agency and the act of killing. We cannot expect our AI systems to be ethical on our behalf – they won’t be, as they will be designed to kill efficiently, not thoughtfully. My other primary concern is to do with surveillance and control. The advent of China’s Social Credit System (SCS) is an indicator of what is likely to come. We will exist within an SCS as AI constructs hybrid instances of ourselves that may or may not resemble who we are. But our rights and affordances as individuals will be determined by the SCS. This is the Orwellian nightmare realised.”

Mark Surman, executive director of the Mozilla Foundation, responded, “AI will continue to concentrate power and wealth in the hands of a few big monopolies based in the U.S. and China. Most people – and parts of the world – will be worse off.”

William Uricchio , media scholar and professor of comparative media studies at MIT, commented, “AI and its related applications face three problems: development at the speed of Moore’s Law, development in the hands of a technological and economic elite, and development without benefit of an informed or engaged public. The public is reduced to a collective of consumers awaiting the next technology. Whose notion of ‘progress’ will prevail? We have ample evidence of AI being used to drive profits, regardless of implications for long-held values; to enhance governmental control and even score citizens’ ‘social credit’ without input from citizens themselves. Like technologies before it, AI is agnostic. Its deployment rests in the hands of society. But absent an AI-literate public, the decision of how best to deploy AI will fall to special interests. Will this mean equitable deployment, the amelioration of social injustice and AI in the public service? Because the answer to this question is social rather than technological, I’m pessimistic. The fix? We need to develop an AI-literate public, which means focused attention in the educational sector and in public-facing media. We need to assure diversity in the development of AI technologies. And until the public, its elected representatives and their legal and regulatory regimes can get up to speed with these fast-moving developments we need to exercise caution and oversight in AI’s development.”

The remainder of this report is divided into three sections that draw from hundreds of additional respondents’ hopeful and critical observations: 1) concerns about human-AI evolution, 2) suggested solutions to address AI’s impact, and 3) expectations of what life will be like in 2030, including respondents’ positive outlooks on the quality of life and the future of work, health care and education. Some responses are lightly edited for style.

ABOUT PEW RESEARCH CENTER Pew Research Center is a nonpartisan, nonadvocacy fact tank that informs the public about the issues, attitudes and trends shaping the world. It does not take policy positions. The Center conducts public opinion polling, demographic research, computational social science research and other data-driven research. Pew Research Center is a subsidiary of The Pew Charitable Trusts , its primary funder.

Will AI ever reach human-level intelligence?

Artificial intelligence has changed form in recent years.

What started in the public eye as a burgeoning field with promising (yet largely benign) applications, has snowballed into a more than US$100 billion industry where the heavy hitters – Microsoft, Google and OpenAI, to name a few – seem intent on out-competing one another.

The result has been increasingly sophisticated large language models, often released in haste and without adequate testing and oversight.

These models can do much of what a human can, and in many cases do it better. They can beat us at advanced strategy games, generate incredible art, diagnose cancers and compose music.

There’s no doubt AI systems appear to be “intelligent” to some extent. But could they ever be as intelligent as humans?

There’s a term for this: artificial general intelligence (AGI). Although it’s a broad concept, for simplicity you can think of AGI as the point at which AI acquires human-like generalised cognitive capabilities. In other words, it’s the point where AI can tackle any intellectual task a human can.

AGI isn’t here yet; current AI models are held back by a lack of certain human traits such as true creativity and emotional awareness.

We asked five experts if they think AI will ever reach AGI, and five out of five said ‘yes’.

But there are subtle differences in how they approach the question. From their responses, more questions emerge. When might we achieve AGI? Will it go on to surpass humans? And what constitutes “intelligence”, anyway?

Here are their detailed responses:

Paul Formosa

Professor in Philosophy and Co-Director of the Centre for Agency, Values and Ethics (CAVE), Macquarie University

AI has already achieved and surpassed human intelligence in many tasks. It can beat us at strategy games such as Go, chess, StarCraft and Diplomacy, outperform us on many language performance benchmarks, and write passable undergraduate university essays.

Of course, it can also make things up, or “hallucinate”, and get things wrong – but so can humans (although not in the same ways).

Given a long enough timescale, it seems likely AI will achieve AGI, or “human-level intelligence”. That is, it will have achieved proficiency across enough of the interconnected domains of intelligence humans possess. Still, some may worry that – despite AI achievements so far – AI will not really be “intelligent” because it doesn’t (or can’t) understand what it’s doing, since it isn’t conscious.

However, the rise of AI suggests we can have intelligence without consciousness, because intelligence can be understood in functional terms. An intelligent entity can do intelligent things such as learn, reason, write essays, or use tools.

The AIs we create may never have consciousness, but they are increasingly able to do intelligent things. In some cases, they already do them at a level beyond us, which is a trend that will likely continue.

Christina Maher

Computational Neuroscientist and Biomedical Engineer, University of Sydney

AI will achieve human-level intelligence, but perhaps not anytime soon. Human-level intelligence allows us to reason, solve problems and make decisions. It requires many cognitive abilities including adaptability, social intelligence and learning from experience.

AI already ticks many of these boxes. What’s left is for AI models to learn inherent human traits such as critical reasoning, and understanding what emotion is and which events might prompt it.

As humans, we learn and experience these traits from the moment we’re born. Our first experience of “happiness” is too early for us to even remember. We also learn critical reasoning and emotional regulation throughout childhood, and develop a sense of our “emotions” as we interact with and experience the world around us. Importantly, it can take many years for the human brain to develop such intelligence.

AI hasn’t acquired these capabilities yet. But if humans can learn these traits, AI probably can too – and maybe at an even faster rate. We are still discovering how AI models should be built, trained, and interacted with in order to develop such traits in them. Really, the big question is not if AI will achieve human-level intelligence, but when – and how.

Seyedali Mirjalili

Professor, Director of Centre for Artificial Intelligence Research and Optimisation, Torrens University Australia

I believe AI will surpass human intelligence. Why? The past offers insights we can’t ignore. A lot of people believed tasks such as playing computer games, image recognition and content creation (among others) could only be done by humans – but technological advancement proved otherwise.

Today the rapid advancement and adoption of AI algorithms, in conjunction with an abundance of data and computational resources, has led to a level of intelligence and automation previously unimaginable. If we follow the same trajectory, having more generalised AI is no longer a possibility, but a certainty of the future.

It is just a matter of time. AI has advanced significantly, but not yet in tasks requiring intuition, empathy and creativity, for example. But breakthroughs in algorithms will allow this.

Moreover, once AI systems achieve such human-like cognitive abilities, there will be a snowball effect and AI systems will be able to improve themselves with minimal to no human involvement. This kind of “automation of intelligence” will profoundly change the world.

Artificial general intelligence remains a significant challenge, and there are ethical and societal implications that must be addressed very carefully as we continue to advance towards it.

Dana Rezazadegan

Lecturer in AI and Data Science, Swinburne University of Technology

Yes, AI is going to get as smart as humans in many ways – but exactly how smart it gets will be decided largely by advancements in quantum computing.

Human intelligence isn’t as simple as knowing facts. It has several aspects such as creativity, emotional intelligence and intuition, which current AI models can mimic, but can’t match. That said, AI has advanced massively and this trend will continue.

Current models are limited by relatively small and biased training datasets, as well as limited computational power. The emergence of quantum computing will transform AI’s capabilities. With quantum-enhanced AI, we’ll be able to feed AI models multiple massive datasets that are comparable to humans’ natural multi-modal data collection achieved through interacting with the world. These models will be able to maintain fast and accurate analyses.

Having an advanced version of continual learning should lead to the development of highly sophisticated AI systems which, after a certain point, will be able to improve themselves without human input.

As such, AI algorithms running on stable quantum computers have a high chance of reaching something similar to generalised human intelligence – even if they don’t necessarily match every aspect of human intelligence as we know it.

Marcel Scharth

Lecturer in Business Analytics, University of Sydney

I think it’s likely AGI will one day become a reality, although the timeline remains highly uncertain. If AGI is developed, then surpassing human-level intelligence seems inevitable.

Humans themselves are proof that highly flexible and adaptable intelligence is allowed by the laws of physics. There’s no fundamental reason we should believe that machines are, in principle, incapable of performing the computations necessary to achieve human-like problem solving abilities.

Furthermore, AI has distinct advantages over humans, such as better speed and memory capacity, fewer physical constraints, and the potential for more rationality and recursive self-improvement. As computational power grows, AI systems will eventually surpass the human brain’s computational capacity.

Our primary challenge then is to gain a better understanding of intelligence itself, and knowledge on how to build AGI. Present-day AI systems have many limitations and are nowhere near being able to master the different domains that would characterise AGI. The path to AGI will likely require unpredictable breakthroughs and innovations.

The median predicted date for AGI on Metaculus, a well-regarded forecasting platform, is 2032. To me, this seems too optimistic. A 2022 expert survey estimated a 50% chance of us achieving human-level AI by 2059. I find this plausible.

Noor Gillani is the Technology Editor at The Conversation.

This article is republished from The Conversation under a Creative Commons license.

The present and future of AI

Finale Doshi-Velez on how AI is shaping our lives and how we can shape AI

Finale Doshi-Velez, the John L. Loeb Professor of Engineering and Applied Sciences. (Photo courtesy of Eliza Grinnell/Harvard SEAS)

How has artificial intelligence changed and shaped our world over the last five years? How will AI continue to impact our lives in the coming years? Those were the questions addressed in the most recent report from the One Hundred Year Study on Artificial Intelligence (AI100), an ongoing project hosted at Stanford University, that will study the status of AI technology and its impacts on the world over the next 100 years.

The 2021 report is the second in a series that will be released every five years until 2116. Titled “Gathering Strength, Gathering Storms,” the report explores the various ways AI is increasingly touching people’s lives in settings that range from movie recommendations and voice assistants to autonomous driving and automated medical diagnoses.

Barbara Grosz, the Higgins Research Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), is a member of the standing committee overseeing the AI100 project, and Finale Doshi-Velez, Gordon McKay Professor of Computer Science, is part of the panel of interdisciplinary researchers who wrote this year’s report.

We spoke with Doshi-Velez about the report, what it says about the role AI is currently playing in our lives, and how it will change in the future.

Q: Let's start with a snapshot: What is the current state of AI and its potential?

Doshi-Velez: Some of the biggest changes in the last five years have been how well AIs now perform in large data regimes on specific types of tasks. We've seen [DeepMind’s] AlphaZero become the best Go player entirely through self-play, and everyday uses of AI such as grammar checks and autocomplete, automatic personal photo organization and search, and speech recognition become commonplace for large numbers of people.

In terms of potential, I'm most excited about AIs that might augment and assist people. They can be used to drive insights in drug discovery, help with decision making such as identifying a menu of likely treatment options for patients, and provide basic assistance, such as lane keeping while driving or text-to-speech based on images from a phone for the visually impaired. In many situations, people and AIs have complementary strengths. I think we're getting closer to unlocking the potential of people and AI teams.

Q: Over the course of 100 years, these reports will tell the story of AI and its evolving role in society. Even though there have only been two reports, what's the story so far?

There's actually a lot of change even in five years. The first report is fairly rosy. For example, it mentions how algorithmic risk assessments may mitigate the human biases of judges. The second has a much more mixed view. I think this comes from the fact that as AI tools have come into the mainstream, both in higher-stakes and everyday settings, we are appropriately much less willing to tolerate flaws, especially discriminatory ones. There have also been questions of information and disinformation control as people get their news, social media, and entertainment via searches and rankings personalized to them. So, there's a much greater recognition that we should not be waiting for AI tools to become mainstream before making sure they are ethical.

Q: What is the responsibility of institutes of higher education in preparing students and the next generation of computer scientists for the future of AI and its impact on society?

First, I'll say that the need to understand the basics of AI and data science starts much earlier than higher education! Children are being exposed to AIs as soon as they click on videos on YouTube or browse photo albums. They need to understand aspects of AI such as how their actions affect future recommendations.

But for computer science students in college, I think a key thing that future engineers need to realize is when to demand input and how to talk across disciplinary boundaries to get at often difficult-to-quantify notions of safety, equity, fairness, etc. I'm really excited that Harvard has the Embedded EthiCS program to provide some of this education. Of course, this is in addition to standard good engineering practices like building robust models, validating them, and so forth, which is all a bit harder with AI.

Q: Your work focuses on machine learning with applications to healthcare, which is also an area of focus of this report. What is the state of AI in healthcare?

A lot of AI in healthcare has been on the business end, used for optimizing billing, scheduling surgeries, that sort of thing. When it comes to AI for better patient care, which is what we usually think about, there are few legal, regulatory, and financial incentives to do so, and many disincentives. Still, there's been slow but steady integration of AI-based tools, often in the form of risk scoring and alert systems.

In the near future, two applications that I'm really excited about are triage in low-resource settings — having AIs do initial reads of pathology slides, for example, if there are not enough pathologists, or get an initial check of whether a mole looks suspicious — and ways in which AIs can help identify promising treatment options for discussion with a clinician team and patient.

Q: Any predictions for the next report?

I'll be keen to see where currently nascent AI regulation initiatives have gotten to. Accountability is such a difficult question in AI; it's tricky to nurture both innovation and basic protections. Perhaps the most important innovation will be in approaches for AI accountability.

Topics: AI / Machine Learning , Computer Science

Scientist Profiles

Finale Doshi-Velez

Herchel Smith Professor of Computer Science

Five Experts Explain Whether AI Could Ever Become as Intelligent as Humans

Artificial intelligence has changed form in recent years.

What started in the public eye as a burgeoning field with promising (yet largely benign) applications has snowballed into a more than US$100 billion industry where the heavy hitters – Microsoft, Google, and OpenAI, to name a few – seem intent on out-competing one another.

The result has been increasingly sophisticated large language models, often released in haste and without adequate testing and oversight.

These models can do much of what a human can, and in many cases do it better. They can beat us at advanced strategy games, generate incredible art, diagnose cancers and compose music.

There's no doubt AI systems appear to be "intelligent" to some extent. But could they ever be as intelligent as humans?

There's a term for this: artificial general intelligence (AGI). Although it's a broad concept, for simplicity you can think of AGI as the point at which AI acquires human-like generalized cognitive capabilities. In other words, it's the point where AI can tackle any intellectual task a human can.

AGI isn't here yet; current AI models are held back by a lack of certain human traits such as true creativity and emotional awareness.

We asked five experts if they think AI will ever reach AGI, and five out of five said yes.

But there are subtle differences in how they approach the question. From their responses, more questions emerge. When might we achieve AGI? Will it go on to surpass humans? And what constitutes "intelligence", anyway?

Here are their detailed responses:

Paul Formosa

AI and Philosophy of Technology

AI has already achieved and surpassed human intelligence in many tasks. It can beat us at strategy games such as Go, chess, StarCraft and Diplomacy, outperform us on many language performance benchmarks, and write passable undergraduate university essays.

Of course, it can also make things up, or "hallucinate", and get things wrong – but so can humans (although not in the same ways).

Given a long enough timescale, it seems likely AI will achieve AGI, or "human-level intelligence". That is, it will have achieved proficiency across enough of the interconnected domains of intelligence humans possess. Still, some may worry that – despite AI achievements so far – AI will not really be "intelligent" because it doesn't (or can't) understand what it's doing, since it isn't conscious.

However, the rise of AI suggests we can have intelligence without consciousness, because intelligence can be understood in functional terms. An intelligent entity can do intelligent things such as learn, reason, write essays, or use tools.

The AIs we create may never have consciousness, but they are increasingly able to do intelligent things. In some cases, they already do them at a level beyond us, which is a trend that will likely continue.

Christina Maher

Computational Neuroscience and Biomedical Engineering

AI will achieve human-level intelligence, but perhaps not anytime soon. Human-level intelligence allows us to reason, solve problems and make decisions. It requires many cognitive abilities including adaptability, social intelligence and learning from experience.

AI already ticks many of these boxes. What's left is for AI models to learn inherent human traits such as critical reasoning, and understanding what emotion is and which events might prompt it.

As humans, we learn and experience these traits from the moment we're born. Our first experience of "happiness" is too early for us to even remember. We also learn critical reasoning and emotional regulation throughout childhood, and develop a sense of our "emotions" as we interact with and experience the world around us. Importantly, it can take many years for the human brain to develop such intelligence.

AI hasn't acquired these capabilities yet. But if humans can learn these traits, AI probably can too – and maybe at an even faster rate. We are still discovering how AI models should be built, trained, and interacted with in order to develop such traits in them. Really, the big question is not if AI will achieve human-level intelligence, but when – and how.

Seyedali Mirjalili

AI and Swarm Intelligence

I believe AI will surpass human intelligence. Why? The past offers insights we can't ignore. A lot of people believed tasks such as playing computer games, image recognition and content creation (among others) could only be done by humans – but technological advancement proved otherwise.

Today the rapid advancement and adoption of AI algorithms, in conjunction with an abundance of data and computational resources, has led to a level of intelligence and automation previously unimaginable. If we follow the same trajectory, having more generalised AI is no longer a possibility, but a certainty of the future.

It is just a matter of time. AI has advanced significantly, but not yet in tasks requiring intuition, empathy and creativity, for example. But breakthroughs in algorithms will allow this.

Moreover, once AI systems achieve such human-like cognitive abilities, there will be a snowball effect and AI systems will be able to improve themselves with minimal to no human involvement. This kind of "automation of intelligence" will profoundly change the world.

Artificial general intelligence remains a significant challenge, and there are ethical and societal implications that must be addressed very carefully as we continue to advance towards it.

Dana Rezazadegan

AI and Data Science

Yes, AI is going to get as smart as humans in many ways – but exactly how smart it gets will be decided largely by advancements in quantum computing.

Human intelligence isn't as simple as knowing facts. It has several aspects such as creativity, emotional intelligence and intuition, which current AI models can mimic, but can't match. That said, AI has advanced massively and this trend will continue.

Current models are limited by relatively small and biased training datasets, as well as limited computational power. The emergence of quantum computing will transform AI's capabilities. With quantum-enhanced AI, we'll be able to feed AI models multiple massive datasets that are comparable to humans' natural multi-modal data collection achieved through interacting with the world. These models will be able to maintain fast and accurate analyses.

Having an advanced version of continual learning should lead to the development of highly sophisticated AI systems which, after a certain point, will be able to improve themselves without human input.

As such, AI algorithms running on stable quantum computers have a high chance of reaching something similar to generalised human intelligence – even if they don't necessarily match every aspect of human intelligence as we know it.

Marcel Scharth

Machine Learning and AI Alignment

I think it's likely AGI will one day become a reality, although the timeline remains highly uncertain. If AGI is developed, then surpassing human-level intelligence seems inevitable.

Humans themselves are proof that highly flexible and adaptable intelligence is allowed by the laws of physics. There's no fundamental reason we should believe that machines are, in principle, incapable of performing the computations necessary to achieve human-like problem solving abilities.

Furthermore, AI has distinct advantages over humans, such as better speed and memory capacity, fewer physical constraints, and the potential for more rationality and recursive self-improvement. As computational power grows, AI systems will eventually surpass the human brain's computational capacity.

Our primary challenge then is to gain a better understanding of intelligence itself, and knowledge on how to build AGI. Present-day AI systems have many limitations and are nowhere near being able to master the different domains that would characterise AGI. The path to AGI will likely require unpredictable breakthroughs and innovations.

Noor Gillani, Technology Editor, The Conversation

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Table of Contents

What Is Artificial Intelligence

What Is Human Intelligence

Artificial Intelligence vs. Human Intelligence: A Comparison

What Brain Cells Can Be Tweaked to Learn Faster

Artificial Intelligence vs. Human Intelligence: What Will the Future of Human vs AI Be

Impact of AI on the Future of Jobs

Will AI Replace Humans

Upskilling: The Way Forward

Learn More About AI With Simplilearn

AI vs Human Intelligence: Key Insights and Comparisons

Artificial intelligence has made significant strides, moving from the realm of science fiction into everyday life. Because AI has become so pervasive in today's industries and people's daily lives, a new debate has emerged that pits AI against human intelligence.

While the goal of artificial intelligence is to build and create intelligent systems that are capable of doing jobs that are analogous to those performed by humans, we can't help but question if AI is adequate on its own. This article covers a wide range of subjects, including the potential impact of AI on the future of work and the economy, how AI differs from human intelligence, and the ethical considerations that must be taken into account.

The term artificial intelligence may be used for any computer that has characteristics similar to the human brain, including the ability to think critically, make decisions, and increase productivity. The foundation of AI is human insight, captured in such a way that machines can carry out tasks ranging from the simplest to the most complicated.

These insights are the result of intellectual activity, including study, analysis, logic, and observation. Tasks such as robotics, control mechanisms, computer vision, scheduling, and data mining fall under the umbrella of artificial intelligence.

The origins of human intelligence and conduct may be traced back to the individual's unique combination of genetics, upbringing, and exposure to various situations and environments. And it hinges entirely on one's freedom to shape his or her environment via the application of newly acquired information.

The information human intelligence provides is varied. For example, it may provide information on a person with a similar skill set or background, or it may reveal diplomatic information that a locator or spy was tasked with obtaining. Ultimately, it can deliver information about interpersonal relationships and the arrangement of interests.

The following is a table that compares human intelligence vs artificial intelligence: