- Library databases

- Library website

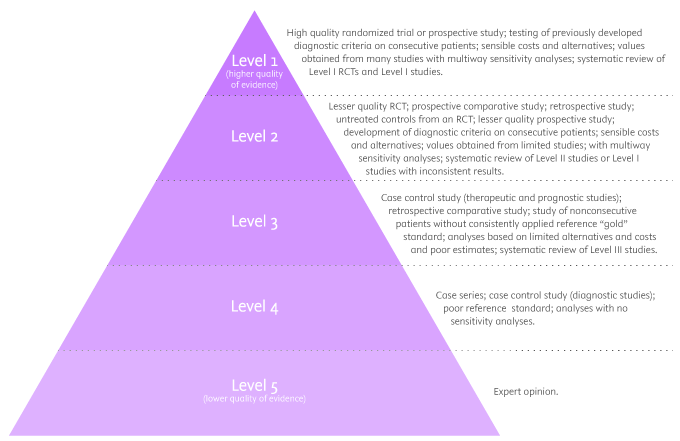

Evidence-Based Research: Levels of Evidence Pyramid

Introduction

One way to organize the different types of evidence involved in evidence-based practice research is the levels of evidence pyramid. The pyramid includes a variety of evidence types and levels.

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

- randomized controlled trials

- cohort studies

- case-control studies, case series, and case reports

- background information, expert opinion

Levels of evidence pyramid

The levels of evidence pyramid provides a way to visualize both the quality of evidence and the amount of evidence available. For example, systematic reviews are at the top of the pyramid, meaning they are both the highest level of evidence and the least common. As you go down the pyramid, the amount of evidence will increase as the quality of the evidence decreases.

EBM Pyramid and EBM Page Generator, copyright 2006 Trustees of Dartmouth College and Yale University. All Rights Reserved. Produced by Jan Glover, David Izzo, Karen Odato and Lei Wang.

Filtered Resources

Filtered resources appraise the quality of studies and often make recommendations for practice. The main types of filtered resources in evidence-based practice are:

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

See the Systematic reviews, Critically-appraised topics, and Critically-appraised individual articles sections below for resources where you can find each of these types of filtered information.

Systematic reviews

Authors of a systematic review ask a specific clinical question, perform a comprehensive literature review, eliminate the poorly done studies, and attempt to make practice recommendations based on the well-done studies. Systematic reviews include only experimental, or quantitative, studies, and often include only randomized controlled trials.

You can find systematic reviews in these filtered databases:

- Cochrane Database of Systematic Reviews: Cochrane systematic reviews are considered the gold standard for systematic reviews. This database contains both systematic reviews and review protocols. To find only systematic reviews, select Cochrane Reviews in the Document Type box.

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database): This database includes systematic reviews, evidence summaries, and best practice information sheets. To find only systematic reviews, click on Limits and then select Systematic Reviews in the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

You can also find systematic reviews in this unfiltered database :

To learn more about finding systematic reviews, please see our guide:

- Filtered Resources: Systematic Reviews

Critically-appraised topics

Authors of critically-appraised topics evaluate and synthesize multiple research studies. Critically-appraised topics are like short systematic reviews focused on a particular topic.

You can find critically-appraised topics in these resources:

- Annual Reviews: This collection offers comprehensive, timely critical reviews written by leading scientists. To find reviews on your topic, use the search box in the upper-right corner.

- Guideline Central: This free database offers quick-reference guideline summaries. It is organized by a new non-profit initiative that aims to fill the gap left by the sudden closure of AHRQ’s National Guideline Clearinghouse (NGC).

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database): To find critically-appraised topics in JBI, click on Limits and then select Evidence Summaries from the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

- National Institute for Health and Care Excellence (NICE): Evidence-based recommendations for health and care in England.

- Filtered Resources: Critically-Appraised Topics

Critically-appraised individual articles

Authors of critically-appraised individual articles evaluate and synopsize individual research studies.

You can find critically-appraised individual articles in these resources:

- EvidenceAlerts: Quality articles from over 120 clinical journals are selected by research staff and then rated for clinical relevance and interest by an international group of physicians. Note: You must create a free account to search EvidenceAlerts.

- ACP Journal Club: This journal publishes reviews of research on the care of adults and adolescents. You can either browse this journal or use the Search within this publication feature.

- Evidence-Based Nursing: This journal reviews research studies that are relevant to best nursing practice. You can either browse individual issues or use the search box in the upper-right corner.

To learn more about finding critically-appraised individual articles, please see our guide:

- Filtered Resources: Critically-Appraised Individual Articles

Unfiltered resources

You may not always be able to find information on your topic in the filtered literature. When this happens, you'll need to search the primary or unfiltered literature. Keep in mind that with unfiltered resources, you take on the role of reviewing what you find to make sure it is valid and reliable.

Note: You can also find systematic reviews and other filtered resources in these unfiltered databases.

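The Levels of Evidence Pyramid includes unfiltered study types in this order of evidence from higher to lower:

- randomized controlled trials

- cohort studies

- case-control studies, case series, and case reports

You can search for each of these types of evidence in the following databases:

- TRIP database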

Background information & expert opinion

Background information and expert opinions are not necessarily backed by research studies. They include point-of-care resources, textbooks, conference proceedings, etc.

- Family Physicians Inquiries Network: Clinical Inquiries. Clinical Inquiries provide the ideal answers to clinical questions using a structured search, critical appraisal, authoritative recommendations, clinical perspective, and rigorous peer review, and deliver best evidence for point-of-care use.

- Harrison, T. R., & Fauci, A. S. (2009). Harrison's Manual of Medicine . New York: McGraw-Hill Professional. Contains the clinical portions of Harrison's Principles of Internal Medicine .

- Lippincott manual of nursing practice (8th ed.). (2006). Philadelphia, PA: Lippincott Williams & Wilkins. Provides background information on clinical nursing practice.

- Medscape: Drugs & Diseases. An open-access, point-of-care medical reference that includes clinical information from top physicians and pharmacists in the United States and worldwide.

- Virginia Henderson Global Nursing e-Repository. An open-access repository that contains works by nurses and is sponsored by Sigma Theta Tau International, the Honor Society of Nursing. Note: This resource contains both expert opinion and evidence-based practice articles.


New evidence pyramid

Volume 21, Issue 4


M Hassan Murad, Mouaz Alsawas, Fares Alahdab (http://orcid.org/0000-0001-5481-696X)

Rochester, Minnesota, USA

Correspondence to: Dr M Hassan Murad, Evidence-based Practice Center, Mayo Clinic, Rochester, MN 55905, USA; murad.mohammad{at}mayo.edu

https://doi.org/10.1136/ebmed-2016-110401

The first and earliest principle of evidence-based medicine indicated that a hierarchy of evidence exists. Not all evidence is the same. This principle became well known in the early 1990s as practising physicians learnt basic clinical epidemiology skills and started to appraise and apply evidence to their practice. Since evidence was described as a hierarchy, a compelling rationale for a pyramid was made. Evidence-based healthcare practitioners became familiar with this pyramid when reading the literature, applying evidence or teaching students.

Various versions of the evidence pyramid have been described, but all of them focused on showing weaker study designs at the bottom (basic science and case series), followed by case–control and cohort studies in the middle, then randomised controlled trials (RCTs), and at the very top, systematic reviews and meta-analyses. This description is intuitive and likely correct in many instances. The placement of systematic reviews at the top had undergone several alterations in interpretation, but was still thought of as an item in a hierarchy. 1 Most versions of the pyramid clearly represented a hierarchy of internal validity (risk of bias). Some versions incorporated external validity (applicability) in the pyramid by either placing N-of-1 trials above RCTs (because their results are most applicable to individual patients 2 ) or by separating internal and external validity. 3

Another version (the 6S pyramid) was also developed to describe the sources of evidence that can be used by evidence-based medicine (EBM) practitioners for answering foreground questions, showing a hierarchy ranging from studies, synopses, syntheses, synopses of syntheses and summaries to systems. 4 This hierarchy may imply some sort of increasing validity and applicability, although its main purpose is to emphasise that the lower sources of evidence in the hierarchy are least preferred in practice because they require more expertise and time to identify, appraise and apply.

The traditional pyramid was deemed too simplistic at times, thus the importance of leaving room for argument and counterargument for the methodological merit of different designs has been emphasised. 5 Other barriers challenged the placement of systematic reviews and meta-analyses at the top of the pyramid. For instance, heterogeneity (clinical, methodological or statistical) is an inherent limitation of meta-analyses that can be minimised or explained but never eliminated. 6 The methodological intricacies and dilemmas of systematic reviews could potentially result in uncertainty and error. 7 One evaluation of 163 meta-analyses demonstrated that the estimation of treatment outcomes differed substantially depending on the analytical strategy being used. 7 Therefore, we suggest, in this perspective, two visual modifications to the pyramid to illustrate two contemporary methodological principles ( figure 1 ). We provide the rationale and an example for each modification.

The proposed new evidence-based medicine pyramid. (A) The traditional pyramid. (B) Revising the pyramid: (1) lines separating the study designs become wavy (Grading of Recommendations Assessment, Development and Evaluation), (2) systematic reviews are ‘chopped off’ the pyramid. (C) The revised pyramid: systematic reviews are a lens through which evidence is viewed (applied).

Rationale for modification 1

In the early 2000s, the Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group developed a framework in which the certainty in evidence is based on numerous factors and not solely on study design, which challenges the pyramid concept. 8 Study design alone appears to be insufficient as a surrogate for risk of bias. Methodological limitations of a study, imprecision, inconsistency and indirectness are factors independent of study design and can affect the quality of evidence derived from any study design. For example, a meta-analysis of RCTs evaluating intensive glycaemic control in non-critically ill hospitalised patients showed a non-significant reduction in mortality (relative risk 0.95, 95% CI 0.72 to 1.25). 9 Allocation concealment and blinding were not adequate in most trials. The quality of this evidence is rated down due to the methodological limitations of the trials and imprecision (a wide CI that includes substantial benefit and harm). Hence, despite there being five RCTs, such evidence should not be rated high in any pyramid. The quality of evidence can also be rated up. For example, we are quite certain about the benefits of hip replacement in a patient with disabling hip osteoarthritis. Although these benefits have not been tested in RCTs, the quality of this evidence is rated up despite the study design (non-randomised observational studies). 10
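The rating logic in this section can be sketched in a few lines of Python. This is a simplified illustration and not the GRADE Working Group's procedure: the names `is_imprecise` and `rate_certainty`, the rule of moving one step per concern, the relative-risk thresholds of 0.75 and 1.25 for "substantial" benefit and harm, and the single-step upgrade for the hip replacement example are all assumptions made for this example.

```python
# Illustrative only: a simplified certainty rating loosely modelled on GRADE.
LEVELS = ["very low", "low", "moderate", "high"]

# Assumed thresholds for "substantial" benefit and harm on the relative-risk scale.
BENEFIT_THRESHOLD = 0.75
HARM_THRESHOLD = 1.25


def is_imprecise(ci_lower, ci_upper):
    """True if the interval spans both substantial benefit and substantial harm."""
    return ci_lower <= BENEFIT_THRESHOLD and ci_upper >= HARM_THRESHOLD


def rate_certainty(design, concerns=(), upgrades=0):
    """Start from the study design, then move one step per concern or upgrade."""
    start = {"rct": 3, "observational": 1}[design]
    score = start - len(concerns) + upgrades
    score = max(0, min(score, len(LEVELS) - 1))  # clamp to the valid range
    return LEVELS[score]


# Glycaemic control example: RCTs, rated down for risk of bias and imprecision.
concerns = ["risk of bias"]
if is_imprecise(0.72, 1.25):
    concerns.append("imprecision")
glycaemic = rate_certainty("rct", concerns)

# Hip replacement example: observational evidence, rated up (one step assumed).
hip = rate_certainty("observational", upgrades=1)

print(glycaemic)  # low
print(hip)        # moderate
```

The point of the sketch is that the final rating depends on the concerns and upgrades as well as the design, so a body of RCT evidence can end up rated below a body of observational evidence.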

Rationale for modification 2

Another challenge to the notion of having systematic reviews at the top of the evidence pyramid relates to the framework presented in the Journal of the American Medical Association User's Guide on systematic reviews and meta-analysis. The Guide presented a two-step approach in which the credibility of the process of a systematic review is evaluated first (comprehensive literature search, rigorous study selection process, etc). If the systematic review is deemed sufficiently credible, then a second step takes place in which we evaluate the certainty in evidence based on the GRADE approach. 11 In other words, a meta-analysis of well-conducted RCTs at low risk of bias cannot be equated with a meta-analysis of observational studies at higher risk of bias. For example, a meta-analysis of 112 surgical case series showed that in patients with thoracic aortic transection, the mortality rate was significantly lower in patients who underwent endovascular repair, followed by open repair and non-operative management (9%, 19% and 46%, respectively, p<0.01). Clearly, this meta-analysis should not sit at the top of the pyramid alongside a meta-analysis of RCTs. After all, the evidence still consists of non-randomised studies and is likely subject to numerous confounders.

Therefore, the second modification to the pyramid is to remove systematic reviews from the top of the pyramid and use them as a lens through which other types of studies should be seen (ie, appraised and applied). The systematic review (the process of selecting the studies) and meta-analysis (the statistical aggregation that produces a single effect size) are tools to consume and apply the evidence by stakeholders.
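The two-step approach can be made concrete with a short sketch. It is only an illustration under stated assumptions: the class `SystematicReview`, its field names, the function `appraise`, and the certainty values assigned to the two example reviews are hypothetical and do not come from the User's Guide.

```python
from dataclasses import dataclass


@dataclass
class SystematicReview:
    comprehensive_search: bool
    rigorous_selection: bool
    certainty_of_evidence: str  # result of a separate certainty assessment


def appraise(review):
    """Step 1: judge the review process. Step 2: report certainty in the evidence."""
    if not (review.comprehensive_search and review.rigorous_selection):
        return "not credible"
    return review.certainty_of_evidence


# Two equally credible reviews whose included studies differ (values assumed).
rct_review = SystematicReview(True, True, "high")
case_series_review = SystematicReview(True, True, "low")

print(appraise(rct_review))          # high
print(appraise(case_series_review))  # low
```

Both reviews pass the first step, yet they report different certainty. The review acts as a lens on the underlying studies and does not raise their rank.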

Implications and limitations

Changing how systematic reviews and meta-analyses are perceived by stakeholders (patients, clinicians and other stakeholders) has important implications. For example, the American Heart Association considers evidence derived from meta-analyses to have a level ‘A’ (ie, warrants the most confidence). Re-evaluation of evidence using GRADE shows that level ‘A’ evidence could have been of high, moderate, low or very low quality. 12 The quality of evidence drives the strength of recommendation, which is one of the last translational steps of research, most proximal to patient care.

One of the limitations of all ‘pyramids’ and depictions of evidence hierarchy relates to the underpinning of such schemas. The construct of internal validity may have varying definitions, or be understood differently among evidence consumers. In addition, considering systematic reviews and meta-analyses as tools to consume evidence may undermine their role in new discovery (eg, identifying a new side effect that was not demonstrated in individual studies 13 ).

This pyramid can also be used as a teaching tool. EBM teachers can compare it to the existing pyramids to explain how certainty in the evidence (also called quality of evidence) is evaluated. It can be used to teach how evidence-based practitioners can appraise and apply systematic reviews in practice, and to demonstrate the evolution in EBM thinking and the modern understanding of certainty in evidence.

- Leibovici L

- Agoritsas T ,

- Vandvik P ,

- Neumann I , et al

- ↵ Resources for Evidence-Based Practice: The 6S Pyramid. Secondary Resources for Evidence-Based Practice: The 6S Pyramid Feb 18, 2016 4:58 PM. http://hsl.mcmaster.libguides.com/ebm

- Vandenbroucke JP

- Berlin JA ,

- Dechartres A ,

- Altman DG ,

- Trinquart L , et al

- Guyatt GH ,

- Vist GE , et al

- Coburn JA ,

- Coto-Yglesias F , et al

- Sultan S , et al

- Montori VM ,

- Ioannidis JP , et al

- Altayar O ,

- Bennett M , et al

- Nissen SE ,

Contributors MHM conceived the idea and drafted the manuscript. FA helped draft the manuscript and designed the new pyramid. MA and NA helped draft the manuscript.

Competing interests None declared.

Provenance and peer review Not commissioned; externally peer reviewed.

Linked Articles

- Editorial: Pyramids are guides not rules: the evolution of the evidence pyramid. Terrence Shaneyfelt. BMJ Evidence-Based Medicine 2016; 21: 121-122. Published Online First: 12 Jul 2016. doi: 10.1136/ebmed-2016-110498

- Perspective: EBHC pyramid 5.0 for accessing preappraised evidence and guidance. Brian S Alper, R Brian Haynes. BMJ Evidence-Based Medicine 2016; 21: 123-125. Published Online First: 20 Jun 2016. doi: 10.1136/ebmed-2016-110447

Evidence Based Practice

Levels of Evidence

- Study Design Brief comparison of the advantages and disadvantages of different types of studies.

- Clinical Study Design and Methods Terminology

Types of Evidence

- Meta-Analysis: A systematic review that uses quantitative methods to summarize the results.

- Systematic Review: An article in which the authors have systematically searched for, appraised, and summarized all of the medical literature for a specific topic.

- Critically Appraised Topic: Authors of critically-appraised topics evaluate and synthesize multiple research studies.

- Critically Appraised Articles: Authors of critically-appraised individual articles evaluate and synopsize individual research studies.

- Randomized Controlled Trials: RCTs randomly assign patients to an experimental group or a control group. These groups are followed up for the variables/outcomes of interest.

- Cohort Study: Identifies two groups (cohorts) of patients, one which did receive the exposure of interest and one which did not, and follows these cohorts forward for the outcome of interest.

- Case-Control Study: Involves identifying patients who have the outcome of interest (cases) and control patients without the same outcome, and looking back to see if they had the exposure of interest.

- Background Information / Expert Opinion: Handbooks, encyclopedias, and textbooks often provide a good foundation or introduction and often include generalized information about a condition. While background information presents a convenient summary, it often takes about three years for this type of literature to be published.

- Animal Research / Lab Studies: Information begins at the bottom of the pyramid: this is where ideas and laboratory research take place. Ideas turn into therapies and diagnostic tools, which are then tested with lab models and animals.

Rating System for the Hierarchy of Evidence: Quantitative Questions

Level I: Evidence from a systematic review of all relevant randomized controlled trials (RCTs), or evidence-based clinical practice guidelines based on systematic reviews of RCTs

Level II: Evidence obtained from at least one well-designed randomized controlled trial (RCT)

Level III: Evidence obtained from well-designed controlled trials without randomization (quasi-experimental studies)

Level IV: Evidence from well-designed case-control and cohort studies

Level V: Evidence from systematic reviews of descriptive and qualitative studies

Level VI: Evidence from a single descriptive or qualitative study

Level VII: Evidence from the opinion of authorities and/or reports of expert committees

Melnyk, B., & Fineout-Overholt, E. (2011). Evidence-based practice in nursing & healthcare: A guide to best practice (2nd ed.). Philadelphia: Wolters Kluwer/Lippincott Williams & Wilkins, p. 10.
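As a rough illustration, the rating system above can be treated as a lookup from study design to level. The dictionary keys, the name `level_of`, and the "unclassified" fallback are hypothetical choices for this sketch. Real studies still need to be judged on how well they were designed, which a lookup cannot capture.

```python
# Illustrative lookup table based on the seven levels listed above.
EVIDENCE_LEVELS = {
    "systematic review of rcts": "I",
    "guideline based on systematic reviews of rcts": "I",
    "randomized controlled trial": "II",
    "controlled trial without randomization": "III",
    "case-control study": "IV",
    "cohort study": "IV",
    "systematic review of descriptive or qualitative studies": "V",
    "single descriptive or qualitative study": "VI",
    "expert opinion": "VII",
}


def level_of(design):
    """Return the level for a study design, or 'unclassified' if it is not listed."""
    return EVIDENCE_LEVELS.get(design.strip().lower(), "unclassified")


print(level_of("Cohort study"))    # IV
print(level_of("Expert opinion"))  # VII
print(level_of("case report"))     # unclassified
```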

Evidence-Based Practice: Study Design. Medical Center Library and Archives, Duke University

Source: NRS 5231 - Research in Advanced Nursing Practice

- Last Updated: Jan 24, 2024 3:00 PM

- URL: https://library.spalding.edu/EBP

Levels of Evidence and Study Design: Levels of Evidence

Levels of evidence

This is a general set of levels to aid in critically evaluating evidence. It was adapted from the model presented in the book, Evidence-Based Practice in Nursing and Healthcare: A Guide to Best Practice (Melnyk & Fineout-Overholt, 2019). Some specialties may have adopted a slightly different and/or smaller set of levels.

Level I: Evidence from a clinical practice guideline based on systematic reviews or meta-analyses of randomized controlled trials. If this is not available, then evidence from a systematic review or meta-analysis of randomized controlled trials.

Level II: Evidence from randomized controlled studies with good design.

Level III: Evidence from controlled trials that have good design but are not randomized.

Level IV: Evidence from case-control and cohort studies with good design.

Level V: Evidence from systematic reviews of qualitative and descriptive studies.

Level VI: Evidence from qualitative and descriptive studies.

Level VII: Evidence from the opinion of authorities and/or the reports of expert committees.

Evidence Pyramid

The pyramid below is a hierarchy of evidence for quantitative studies. It shows the hierarchy of studies by study design, starting with secondary and reappraised studies, then primary studies, and finally reports and opinions, which have no study design. This pyramid is a simplified amalgamation of information presented in the book chapter “Evidence-based decision making” (Forrest et al., 2019) and the book Evidence-Based Practice in Nursing and Healthcare: A Guide to Best Practice (Melnyk & Fineout-Overholt, 2019).

Evidence Table for Nursing

Advocate Health - Midwest provides system-wide evidence-based practice resources. The Nursing Hub* has an Evidence-Based Quality Improvement (EBQI) Evidence Table within the Evidence-Based Practice (EBP) Resource. It also includes information on evidence type and a literature synthesis table.

*The Nursing Hub requires access to the Advocate Health - Midwest SharePoint platform.

Forrest, J. L., Miller, S. A., Miller, G. W., Elangovan, S., & Newman, M. G. (2019). Evidence-based decision making. In M. G. Newman, H. H. Takei, P. R. Klokkevold, & F. A. Carranza (Eds.), Newman and Carranza's clinical periodontology (13th ed., pp. 1-9.e1). Elsevier.

- Melnyk, B. M., & Fineout-Overholt, E. (2019). Evidence-based practice in nursing and healthcare: A guide to best practice (4th ed.). Wolters Kluwer.

- Last Updated: Dec 29, 2023 2:03 PM

- URL: https://library.aah.org/guides/levelsofevidence

Evidence-Based Practice in Health

The Evidence Hierarchy: What is the "Best Evidence"?

What is "the best available evidence"? The hierarchy of evidence is a core principle of Evidence-Based Practice (EBP) and attempts to address this question. The evidence hierarchy allows you to take a top-down approach to locating the best evidence: you first search for a recent, well-conducted systematic review and, if that is not available, move down to the next level of evidence to answer your question.

EBP hierarchies rank study types based on the rigour (strength and precision) of their research methods. Different hierarchies exist for different question types, and even experts may disagree on the exact rank of information in the evidence hierarchies. The following image represents the hierarchy of evidence provided by the National Health and Medical Research Council (NHMRC). 1

Most experts agree that the higher up the hierarchy the study design is positioned, the more rigorous the methodology and hence the more likely it is that the study design can minimise the effect of bias on the results of the study. In most evidence hierarchies, current, well-designed systematic reviews and meta-analyses are at the top of the pyramid, and expert opinion and anecdotal experience are at the bottom. 2
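The top-down approach can be sketched as a simple search through an ordered list. This is a minimal illustration: the name `best_available` and the sample search results are hypothetical, and the ordering of the middle levels in `HIERARCHY` is an assumption, since hierarchies differ by question type.

```python
# Assumed ordering from the top of the hierarchy to the bottom.
HIERARCHY = [
    "systematic review",
    "randomised controlled trial",
    "cohort study",
    "case-control study",
    "expert opinion",
]


def best_available(found):
    """Return the highest-ranked study type that has at least one result.

    `found` maps a study type to the list of items located for it.
    """
    for study_type in HIERARCHY:
        if found.get(study_type):
            return study_type, found[study_type]
    return None, []


# Hypothetical search results: no systematic review or RCT was located.
results = {
    "systematic review": [],
    "cohort study": ["Study A"],
    "expert opinion": ["Textbook chapter"],
}
print(best_available(results))  # ('cohort study', ['Study A'])
```

The function stops at the first level that has results, which mirrors moving down the hierarchy only when nothing is found at a higher level.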

Systematic Reviews and Meta Analyses

Well done systematic reviews, with or without an included meta-analysis, are generally considered to provide the best evidence for all question types as they are based on the findings of multiple studies that were identified in comprehensive, systematic literature searches. However, the position of systematic reviews at the top of the evidence hierarchy is not an absolute. For example:

- The process of a rigorous systematic review can take years to complete and findings can therefore be superseded by more recent evidence.

- The methodological rigor and strength of findings must be appraised by the reader before being applied to patients.

- A large, well conducted Randomised Controlled Trial (RCT) may provide more convincing evidence than a systematic review of smaller RCTs. 4

Primary Studies

If a current, well-designed systematic review is not available, go to primary studies to answer your question. The best research design for a primary study varies depending on the question type. The table below lists optimal study methodologies for the main types of questions.

Note that the Clinical Queries filter available in some databases such as PubMed and CINAHL matches the question type to studies with appropriate research designs. When searching primary literature, look first for reports of clinical trials that used the best research designs. Remember as you search, though, that the best available evidence may not come from the optimal study type. For example, if treatment effects found in well designed cohort studies are sufficiently large and consistent, those cohort studies may provide more convincing evidence than the findings of a weaker RCT.

What is a Systematic Review?

A systematic review synthesises the results from all available studies in a particular area, and provides a thorough analysis of the results, strengths and weaknesses of the collated studies. A systematic review has several qualities:

- It addresses a focused, clearly formulated question.

- It uses systematic and explicit methods:

a. to identify, select and critically appraise relevant research, and

b. to collect and analyse data from the studies that are included in the review

Systematic reviews may or may not include a meta-analysis used to summarise and analyse the statistical results of included studies. This requires the studies to have the same outcome measure.
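To show what statistical aggregation can look like, here is a sketch of one common technique, fixed-effect inverse-variance pooling. The guide does not name a specific method, so this choice is an assumption, as are the function name `pool_fixed_effect` and the example numbers. It presumes every study reports the same outcome measure on the same scale, for example a log relative risk with its standard error.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Pool study estimates, weighting each by the inverse of its variance."""
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical log relative risks from two equally precise studies.
pooled, pooled_se = pool_fixed_effect([-0.2, 0.0], [0.1, 0.1])
print(round(pooled, 3), round(pooled_se, 3))
```

More precise studies (smaller standard errors) receive larger weights. With two equally precise studies, as here, the pooled estimate falls midway between them.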

What is a Narrative Review?

Narrative reviews (often just called Reviews) are opinion with selective illustrations from the literature. They do not qualify as adequate evidence to answer clinical questions. Rather than answering a specific clinical question, they provide an overview of the research landscape on a given topic and so may be useful for background information. Narrative reviews usually lack systematic search protocols or explicit criteria for selecting and appraising evidence and are therefore very prone to bias. 5

Filtered versus Unfiltered Information

Filtered information appraises the quality of a study and recommends its application in practice. The critical appraisal of the individual articles has already been done for you, which is a great time saver. Because the critical appraisal has been completed, filtered literature is appropriate to use for clinical decision-making at the point of care. In addition to saving time, filtered literature will often provide a more definitive answer than individual research reports. Examples of filtered resources include Cochrane Database of Systematic Reviews, BMJ Clinical Evidence, and ACP Journal Club.

Unfiltered information consists of original research studies that have not yet been synthesized or aggregated. As such, these studies are more difficult to read, interpret, and apply to practice. Examples of unfiltered resources include CINAHL, EMBASE, Medline, and PubMed. 3

The Cochrane Library

The Cochrane Collaboration is an international voluntary organization that prepares, maintains and promotes the accessibility of systematic reviews of the effects of healthcare.

The Cochrane Library is a database from the Cochrane Collaboration that allows simultaneous searching of six EBP databases. Cochrane Reviews are systematic reviews authored by members of the Cochrane Collaboration and available via The Cochrane Database of Systematic Reviews . They are widely recognised as the gold standard in systematic reviews due to the rigorous methodology used.

Abstracts of completed Cochrane Reviews are freely available through PubMed and Meta-Search engines such as TRIP database.

National access to the Cochrane Library is provided by the Australian Government via the National Health and Medical Research Council (NHMRC).

1. National Health and Medical Research Council. (2009). [Hierarchy of Evidence] . Retrieved 2 July, 2014 from: https://www.nhmrc.gov.au/

2. Hoffman, T., Bennett, S., & Del Mar, C. (2013). Evidence-Based Practice: Across the Health Professions (2nd ed.). Chatswood, NSW: Elsevier.

3. Kendall, S. (2008). Evidence-based resources simplified. Canadian Family Physician , 54, 241-243

4. Davidson, M., & Iles, R. (2013). Evidence-based practice in therapeutic health care. In, Liamputtong, P. (ed.). Research Methods in Health: Foundations for Evidence-Based Practice (2nd ed.). South Melbourne: Oxford University Press.

5. Cook, D., Mulrow, C., & Haynes, R. (1997). Systematic reviews: synthesis of best evidence for clinical decisions. Annals of Internal Medicine , 126, 376–80.

- Last Updated: Jul 24, 2023 4:08 PM

- URL: https://canberra.libguides.com/evidence

Systematic Reviews

- Levels of Evidence

- Evidence Pyramid

- Joanna Briggs Institute

The evidence pyramid is often used to illustrate the development of evidence. At the base of the pyramid is animal research and laboratory studies – this is where ideas are first developed. As you progress up the pyramid the amount of information available decreases in volume, but increases in relevance to the clinical setting.

Meta Analysis – systematic review that uses quantitative methods to synthesize and summarize the results.

Systematic Review – summary of the medical literature that uses explicit methods to perform a comprehensive literature search and critical appraisal of individual studies and that uses appropriate statistical techniques to combine these valid studies.

Randomized Controlled Trial – Participants are randomly allocated into an experimental group or a control group and followed over time for the variables/outcomes of interest.

Cohort Study – Involves identification of two groups (cohorts) of patients, one which received the exposure of interest, and one which did not, and following these cohorts forward for the outcome of interest.

Case Control Study – study which involves identifying patients who have the outcome of interest (cases) and patients without the same outcome (controls), and looking back to see if they had the exposure of interest.

Case Series – report on a series of patients with an outcome of interest. No control group is involved.

- Levels of Evidence from The Centre for Evidence-Based Medicine

- The JBI Model of Evidence Based Healthcare

- How to Use the Evidence: Assessment and Application of Scientific Evidence From the National Health and Medical Research Council (NHMRC) of Australia. Book must be downloaded; not available to read online.

When searching for evidence to answer clinical questions, aim to identify the highest level of available evidence. Evidence hierarchies can help you strategically identify which resources to use for finding evidence, as well as which search results are most likely to be "best".

Image source: Evidence-Based Practice: Study Design from Duke University Medical Center Library & Archives. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

The hierarchy of evidence (also known as the evidence-based pyramid) is depicted as a triangular representation of the levels of evidence with the strongest evidence at the top which progresses down through evidence with decreasing strength. At the top of the pyramid are research syntheses, such as Meta-Analyses and Systematic Reviews, the strongest forms of evidence. Below research syntheses are primary research studies progressing from experimental studies, such as Randomized Controlled Trials, to observational studies, such as Cohort Studies, Case-Control Studies, Cross-Sectional Studies, Case Series, and Case Reports. Non-Human Animal Studies and Laboratory Studies occupy the lowest level of evidence at the base of the pyramid.

- Finding Evidence-Based Answers to Clinical Questions – Quickly & Effectively A tip sheet from the health sciences librarians at UC Davis Libraries to help you get started with selecting resources for finding evidence, based on type of question.

- Last Updated: Apr 8, 2024 3:33 PM

- URL: https://guides.library.ucdavis.edu/systematic-reviews

Levels of evidence in research

Level of evidence hierarchy

When carrying out a project, you might have noticed that while searching for information, there seem to be different levels of credibility given to different types of scientific results. For example, it is not the same to use a systematic review or an expert opinion as a basis for an argument. It’s almost common sense that the former will demonstrate more accurate results than the latter, which ultimately derives from a personal opinion.

In the medical and health care area, for example, it is very important that professionals not only have access to information but also have instruments to determine which evidence is stronger and more trustworthy, building up the confidence to diagnose and treat their patients.

5 levels of evidence

With the increasing need of physicians, as well as scientists in other fields of study, to know which kind of research offers the best clinical evidence, experts decided to rank this evidence to help them identify the best sources of information to answer their questions. The criteria for ranking evidence are based on the design, methodology, validity and applicability of the different types of studies. The outcome is called “levels of evidence” or “levels of evidence hierarchy”. By organizing a well-defined hierarchy of evidence, academic experts aimed to help scientists feel confident in using findings from high-ranked evidence in their own work or practice. For physicians, whose daily activity depends on available clinical evidence to support decision-making, this helps them know which evidence to trust the most.

So, by now you know that research can be graded according to the evidential strength determined by different study designs. But how many grades are there? Which evidence should be high-ranked and low-ranked?

There are five levels of evidence in the hierarchy of evidence – being 1 (or in some cases A) for strong and high-quality evidence and 5 (or E) for evidence with effectiveness not established, as you can see in the pyramidal scheme below:

Level 1: (higher quality of evidence) – High-quality randomized trial or prospective study; testing of previously developed diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from many studies with multiway sensitivity analyses; systematic review of Level I RCTs and Level I studies.

Level 2: Lesser quality RCT; prospective comparative study; retrospective study; untreated controls from an RCT; lesser quality prospective study; development of diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from limited studies with multiway sensitivity analyses; systematic review of Level II studies or Level I studies with inconsistent results.

Level 3: Case-control study (therapeutic and prognostic studies); retrospective comparative study; study of nonconsecutive patients without consistently applied reference “gold” standard; analyses based on limited alternatives and costs and poor estimates; systematic review of Level III studies.

Level 4: Case series; case-control study (diagnostic studies); poor reference standard; analyses with no sensitivity analyses.

Level 5: (lower quality of evidence) – Expert opinion.

By looking at the pyramid, you can roughly distinguish what type of research gives you the highest quality of evidence and which gives you the lowest. Basically, level 1 and level 2 are filtered information – that means an author has gathered evidence from well-designed studies, with credible results, and has produced findings and conclusions appraised by renowned experts, who consider them valid and strong enough to serve researchers and scientists. Levels 3, 4 and 5 include evidence coming from unfiltered information. Because this evidence hasn’t been appraised by experts, it might be questionable, but not necessarily false or wrong.

Examples of levels of evidence

As you move up the pyramid, you will surely find higher-quality evidence. However, you will notice there is also less research available. So, if there are no resources for you available at the top, you may have to start moving down in order to find the answers you are looking for.

- Systematic Reviews: Exhaustive summaries of all the existing literature about a certain topic. When drafting a systematic review, authors are expected to deliver a critical assessment and evaluation of all this literature rather than a simple list. Researchers who produce systematic reviews have their own criteria to locate, assemble and evaluate a body of literature.

- Meta-Analysis: Uses quantitative methods to synthesize a combination of results from independent studies. Normally, they function as an overview of clinical trials. Read more: Systematic review vs meta-analysis .

- Critically Appraised Topic: Evaluation of several research studies.

- Critically Appraised Article: Evaluation of individual research studies.

- Randomized Controlled Trial: a clinical trial in which participants or subjects (people that agree to participate in the trial) are randomly divided into groups. Placebo (control) is given to one of the groups whereas the other is treated with medication. This kind of research is key to learning about a treatment’s effectiveness.

- Cohort studies: A longitudinal study design, in which one or more samples called cohorts (individuals sharing a defining characteristic, like a disease) are exposed to an event and monitored prospectively and evaluated in predefined time intervals. They are commonly used to correlate diseases with risk factors and health outcomes.

- Case-Control Study: Selects patients with an outcome of interest (cases) and looks for an exposure factor of interest.

- Background Information/Expert Opinion: Information you can find in encyclopedias, textbooks and handbooks. This kind of evidence just serves as a good foundation for further research – or clinical practice – for it is usually too generalized.

Of course, it is recommended to use level A and/or 1 evidence for more accurate results but that doesn’t mean that all other study designs are unhelpful or useless. It all depends on your research question. Focusing once more on the healthcare and medical field, see how different study designs fit into particular questions, that are not necessarily located at the tip of the pyramid:

- Questions concerning therapy: “Which is the most efficient treatment for my patient?” >> RCT | Cohort studies | Case-Control | Case Studies

- Questions concerning diagnosis: “Which diagnostic method should I use?” >> Prospective blind comparison

- Questions concerning prognosis: “How will the patient’s disease develop over time?” >> Cohort Studies | Case Studies

- Questions concerning etiology: “What are the causes for this disease?” >> RCT | Cohort Studies | Case Studies

- Questions concerning costs: “What is the most cost-effective but safe option for my patient?” >> Economic evaluation

- Questions concerning meaning/quality of life: “What’s the quality of life of my patient going to be like?” >> Qualitative study
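The pairing of question types with study designs in the list above can be written down as a simple lookup table. The following is a minimal Python sketch for illustration only: the names `DESIGNS_BY_QUESTION` and `suggest_designs` are hypothetical, and the entries do nothing more than restate the list above.

```python
# Illustrative lookup only: it restates the question-to-design list above.
# DESIGNS_BY_QUESTION and suggest_designs are hypothetical names.
DESIGNS_BY_QUESTION = {
    "therapy": ["RCT", "Cohort study", "Case-control study", "Case study"],
    "diagnosis": ["Prospective blind comparison"],
    "prognosis": ["Cohort study", "Case study"],
    "etiology": ["RCT", "Cohort study", "Case study"],
    "costs": ["Economic evaluation"],
    "meaning/quality of life": ["Qualitative study"],
}


def suggest_designs(question_type):
    """Return the study designs listed for a question type, in listed order."""
    key = question_type.strip().lower()
    if key not in DESIGNS_BY_QUESTION:
        raise KeyError(f"Unknown question type: {question_type!r}")
    # Return a copy so callers cannot modify the table by accident.
    return list(DESIGNS_BY_QUESTION[key])
```

For instance, `suggest_designs("Prognosis")` returns the cohort and case study designs, which shows that a useful answer does not always sit at the tip of the pyramid.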

Basic Principles

5 The Hierarchy of Evidence

The hierarchy of evidence provides a useful framework for understanding different kinds of quantitative research designs. As shown in Figure 2.1, studies at the base of the pyramid involving laboratory and animal research are at the lowest level of evidence because they tend to be focused on understanding how things work at the cellular level and it is difficult to establish a direct link between the research findings and implications for practice.

This type of research is still valuable because it provides the researcher with a very high level of control, which allows them to study things that they can’t study in humans. For example, you could breed genetically modified mice and compare them to regular mice in order to examine the influence of specific genes on behavior. Obviously this type of experiment would be unethical to do with humans, but it can provide initial evidence to help us better understand a phenomenon (in this case, the influence of genes on behavior), an intervention, or a drug.

The next level includes research with no design, such as case reports or case series reports, which are commonly used in novel or rare situations (for example, a patient with a rare disease). Expert opinions, narratives, and editorials also fall into this category because they rely on an individual’s expertise, knowledge, and experience, which is not necessarily objective.

Above this are retrospective observational studies such as case-control studies or chart reviews that seek to find patterns in data that has already been collected. One downside of this type of research is that the researcher has no control over the variables that were collected or the information that is available.

Next in the hierarchy are prospective observational studies which include cohort studies as well as non-experimental research designs such as surveys. Here the researcher does have control over what variables are measured as well as how and when they are measured. If done well, this approach can strengthen the findings because it provides the researcher with the opportunity to control for confounding variables and bias, take measures to improve response rates, and select their sample.

Randomized controlled trials (RCTs) are often hailed as “the gold standard” for quantitative research studies in health care because they allow the researcher to control the experiment and isolate the effect of an intervention by comparing it to a control group. However, the inclusion and exclusion criteria for participation can be quite strict and the high level of control is not consistent with real-world conditions, which can reduce the generalizability of findings to the population of interest. Pragmatic RCTs (PRCTs) have begun to gain more popularity for this reason. The goal of a PRCT is to keep the treatment that the control group receives consistent with usual care and the treatment that the intervention group receives consistent with what is practical in the context of real life. While PRCTs don’t provide the same degree of control and standardization as an RCT, the idea is that they provide more realistic evidence about how effective an intervention will be in real life.

Meta-analysis and systematic reviews come next on the hierarchy. The main benefit of systematic reviews and meta-analyses is that they include findings from a number of different studies, and thus, provide more robust evidence about the phenomenon of interest. Whenever possible, this type of evidence should be used to inform decisions about health policy and practice (rather than that from a single study).

It is important to note here that meta-analysis generally occurs as part of a systematic review and combining the data from several studies is not always appropriate. If the designs, methods, and/or measures used in different studies vary considerably then the researcher should not combine the data and analyze it as a group. It is also important not to include multiple studies that use the same dataset because the same sample gets used more than once which will skew the results.

Lastly, at the top of the hierarchy of evidence are clinical practice guidelines. These are at the very top because they are created by a team or panel of experts using a very rigorous process and include a variety of evidence ranging from quantitative and qualitative research studies, white papers and grey literature. Clinical practice guidelines also examine the quality of the evidence and interpret it in order to provide clear recommendations for practice (and often, research and policy as well).

Figure of The hierarchy of evidence (image available to use as per Creative Commons license – https://commons.wikimedia.org/wiki/File:Research_design_and_evidence.svg )

Applied Statistics in Healthcare Research Copyright © 2020 by William J. Montelpare, Ph.D., Emily Read, Ph.D., Teri McComber, Alyson Mahar, Ph.D., and Krista Ritchie, Ph.D. is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License , except where otherwise noted.

The UK Faculty of Public Health has recently taken ownership of the Health Knowledge resource. This new, advert-free website is still under development and there may be some issues accessing content. Additionally, the content has not been audited or verified by the Faculty of Public Health as part of an ongoing quality assurance process and as such certain material included may be out of date. If you have any concerns regarding content you should seek to independently verify this.

The hierarchy of research evidence - from well conducted meta-analysis down to small case series

PLEASE NOTE:

We are currently in the process of updating this chapter and we appreciate your patience whilst this is being completed.

Evidence-based medicine has been described as ‘the conscientious, explicit and judicious use of current best evidence in making decisions about the care of individual patients.’ 1 This involves evaluating the quality of the best available clinical research, by critically assessing techniques reported by researchers in their publications, and integrating this with clinical expertise. Although it has provoked controversy, the hierarchy of evidence lies at the heart of the appraisal process.

Ranking of trial designs

The hierarchy indicates the relative weight that can be attributed to a particular study design. Generally, the higher up a methodology is ranked, the more robust it is assumed to be. At the top end lies the meta-analysis – synthesising the results of a number of similar trials to produce a result of higher statistical power. At the other end of the spectrum lie individual case reports, thought to provide the weakest level of evidence.

Several possible methods for ranking study designs have been proposed, but one of the most widely accepted is listed below. 2 Information about the individual study designs can be found elsewhere in Section 1A .

- Systematic reviews and meta-analyses

- Randomised controlled trials

- Cohort studies

- Case-control studies

- Cross-sectional surveys

- Case series and case reports
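As a rough illustration of how the ranking above might be applied when triaging search results, the sketch below sorts studies by the rank of their design. The names `DESIGN_RANK` and `sort_by_hierarchy` are hypothetical, the rank numbers simply follow the order of the list, and a design's rank says nothing about how well an individual study was conducted.

```python
# Ranks restate the list above (1 = top of the hierarchy).
# DESIGN_RANK and sort_by_hierarchy are hypothetical names for illustration.
DESIGN_RANK = {
    "systematic review": 1,
    "meta-analysis": 1,
    "randomised controlled trial": 2,
    "cohort study": 3,
    "case-control study": 4,
    "cross-sectional survey": 5,
    "case series": 6,
    "case report": 6,
}


def sort_by_hierarchy(studies):
    """Sort (title, design) pairs from highest- to lowest-ranked design.

    Designs missing from DESIGN_RANK are placed last. sorted() is stable,
    so studies with the same rank keep their original relative order.
    """
    lowest = max(DESIGN_RANK.values()) + 1
    return sorted(studies, key=lambda s: DESIGN_RANK.get(s[1].lower(), lowest))
```

Calling `sort_by_hierarchy([("A", "Case report"), ("B", "Cohort study"), ("C", "Meta-analysis")])` would place study C first and study A last; the titles here are placeholders, not real studies.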

Concerns and caveats

The hierarchy is widely accepted in the medical literature, but concerns have been raised about the ranking of evidence, versus that which is most relevant to practice. Particular concerns are highlighted below.

- Techniques lower down the ranking are not always superfluous. For example, the link between smoking and lung cancer was initially discovered via case-control studies carried out in the 1950s. 3 Although randomised controlled trials (RCTs) are considered more robust, it would in many cases be unethical to perform an RCT. For example, if studying a risk factor exposure, you would need a cohort exposed to the risk factor by chance or personal choice.

- The hierarchy is also not absolute. A well-conducted observational study may provide more compelling evidence about a treatment than a poorly conducted RCT.

- The hierarchy focuses largely on quantitative methodologies. However, it is again important to choose the most appropriate study design to answer the question. For example, it is often not possible to establish why individuals choose to pursue a course of action without using a qualitative technique, such as interviewing.

Alternatives to the traditional hierarchy of evidence have been suggested. For example, the GRADE system (Grades of Recommendation, Assessment, Development and Evaluation) classifies the quality of evidence not only based on the study design, but also the potential limitations and, conversely, the positive effects found. For example, observational studies start off as low-quality evidence. However, they can be downgraded to “very low” quality if there are clear limitations in the study design, or can be upgraded to “moderate” or “high” quality if they show a large magnitude of effect or a dose-response gradient.
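The downgrading and upgrading described above for observational studies can be sketched in a few lines of Python. This is a simplified illustration, not the full GRADE procedure: the function name `grade_observational` and its parameters are hypothetical, and the rule that each factor moves the rating by exactly one level is an assumption made for the sketch.

```python
# GRADE quality levels, ordered from lowest to highest.
GRADE_LEVELS = ["very low", "low", "moderate", "high"]


def grade_observational(serious_limitations=False,
                        large_effect=False,
                        dose_response=False):
    """Rate an observational study along the lines described above.

    Assumption (not from the text): each upgrading factor raises the
    rating by one level, and clear limitations lower it by one level.
    """
    index = GRADE_LEVELS.index("low")  # observational studies start at "low"
    if serious_limitations:
        index -= 1
    index += int(large_effect) + int(dose_response)
    # Clamp to the valid range of levels.
    index = max(0, min(index, len(GRADE_LEVELS) - 1))
    return GRADE_LEVELS[index]
```

Under these assumptions, an observational study with a large effect and no clear design limitations would be rated "moderate", while one with clear limitations and no upgrading factors would be rated "very low".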

The GRADE system is summarised in the following table (reproduced from 4 ):

The Oxford Centre for Evidence-Based Medicine have also developed individual levels of evidence depending on the type of clinical question which needs to be answered. For example, to answer questions on how common a problem is, they define the best level of evidence to be a local and current random sample survey, with a systematic review being the second best level of evidence. The complete table of clinical question types considered, and the levels of evidence for each, can be found here . 5

1. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. BMJ 1996;312(7023):71-2.

2. Guyatt GH, Sackett DL, Sinclair JC, Hayward R, Cook DJ, Cook RJ. Users' guides to the medical literature. IX. A method for grading health care recommendations. JAMA 1995;274:1800-4.

3. Doll R and Hill AB. Smoking and carcinoma of the lung. BMJ 1950;2:739.

4. Takada T, Strasberg S, Solomkin J et al. Updated Tokyo Guidelines for the management of acute cholangitis and cholecystitis. Journal of Hepato-Biliary-Pancreatic Sciences 2013;20:1-7.

5. Oxford Centre for Evidence-Based Medicine. Levels of evidence, 2011. http://www.cebm.net/wp-content/uploads/2014/06/CEBM-Levels-of-Evidence-2.1.pdf - Accessed 8/04/17

Further reading

- Greenhalgh T. How to Read a Paper: The Basics of Evidence Based Medicine. London: BMJ, 2001

- Guyatt G, Rennie D et al. Users' Guides to the Medical Literature: A Manual for Evidence-Based Clinical Practice. McGraw-Hill Medical, 2008.

© Helen Barratt 2009, Saran Shantikumar 2018

OHSU Evidence-Based Practice Course for Interprofessional Clinical Teams

- Hierarchy of Evidence and Study Design

Session 2: Pre-Session Work

Hierarchy of evidence, is it a good fit for my PICO, types of study designs.

Please watch the 3 videos below for more information on study design. This should take about 10 minutes.

Overview of Research Studies - The 5 C's

Randomized Controlled Trials (RCTs)

Systematic review & Meta-analysis

Randomized Controlled Trial is a prospective, analytical, experimental study using primary data generated in the clinical environment. Individuals similar at the beginning are randomly allocated to two or more groups (treatment and control) then followed to determine the outcome of the intervention.

Cohort Study (prospective) is a study of a group of individuals, some of whom are exposed to a variable of interest (e.g., drug or environmental exposure), in which participants are followed up over time to determine who develops the outcome of interest and whether the outcome is associated with the exposure.

Cohort Study (retrospective) is a study in which data are gathered for a cohort that was formed sometime in the past. Exposures and outcomes have already occurred at the start of the study. The researcher studies the risk factor to see whether a disease can be associated with it. Individuals are split by exposure.

Case Control Study is a study in which patients who already have a specific condition or outcome are compared with people who do not. Researchers look back in time (retrospective) to identify possible exposures. They often rely on medical records and patient recall for data collection. Individuals are split by disease.

Survey Study is an epidemiologic study that produces survey results and consists of simultaneous assessments of the health outcome, primary risk exposure and potential confounders and effect modifiers. Two types of survey research are cross-sectional and longitudinal studies.

Cross-Sectional Study is the observation of a defined population at a single point in time or during a specific time interval to examine associations between the outcomes and exposure to interventions. Exposure and outcome are determined simultaneously. These studies often rely on data originally collected for other purposes.

Longitudinal Study follows subjects over time with continuous or repeated monitoring of risk factors or health outcomes, or both. Researchers conduct several observations of the same subjects over a period of time, sometimes lasting many years.

Before and After Study is a study in which observations are made before (pre) and after (post) the implementation of an intervention, both in a group that receives the intervention and in a control group that does not.

Case Series and Case Reports are descriptive study/studies that consist of collections of reports on the treatment of individual patients or a report on a single patient.

Systematic Review usually focuses on a specific clinical question and conducts an extensive literature search to identify studies with sound methodology. The studies are reviewed, assessed, and the results summarized according to pre-determined criteria of the review question.

Meta-Analysis takes a systematic review one step further by combining all the results using accepted statistical methodology.
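To make "combining all the results" more concrete, the sketch below shows one common way of pooling study results: a fixed-effect, inverse-variance weighted average. The text above does not name a specific method, so this choice is an assumption made for illustration, and the function name `pool_fixed_effect` is hypothetical. Real meta-analyses also assess heterogeneity between studies and may use other models.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Inverse-variance fixed-effect pooled estimate and its standard error.

    Each study is weighted by 1 / SE^2, so more precise studies count more.
    """
    if not estimates or len(estimates) != len(standard_errors):
        raise ValueError("Need at least one study and one standard error per estimate")
    if any(se <= 0 for se in standard_errors):
        raise ValueError("Standard errors must be positive")
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se
```

As a worked example with made-up numbers, two studies with effect estimates 0.2 and 0.4 and standard errors 0.1 and 0.2 receive weights of 100 and 25, so the pooled estimate is (100 × 0.2 + 25 × 0.4) / 125 = 0.24, which lies closer to the more precise study.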

- Last Updated: Feb 7, 2023 12:40 PM

- URL: https://libguides.ohsu.edu/EBP4ClinicalTeams

Evidence-Based Practice for Nursing: Evaluating the Evidence

Evaluating Evidence: Questions to Ask When Reading a Research Article or Report

For guidance on the process of reading a research book or an article, look at Paul N. Edwards's paper, How to Read a Book (2014). When reading an article, report, or other summary of a research study, there are two principal questions to keep in mind:

1. Is this relevant to my patient or the problem?

- Once you begin reading an article, you may find that the study population isn't representative of the patient or problem you are treating or addressing. Research abstracts alone do not always make this apparent.

- You may also find that while a study population or problem matches that of your patient, the study did not focus on an aspect of the problem you are interested in. E.g. You may find that a study looks at oral administration of an antibiotic before a surgical procedure, but doesn't address the timing of the administration of the antibiotic.

- The question of relevance is primary when assessing an article--if the article or report is not relevant, then the validity of the article won't matter (Slawson & Shaughnessy, 1997).

2. Is the evidence in this study valid?

- Validity is the extent to which the methods and conclusions of a study accurately reflect or represent the truth. Validity in a research article or report has two parts: 1) Internal validity, i.e. do the results of the study mean what they are presented as meaning (e.g. were bias and/or confounding factors present?); and 2) External validity, i.e. are the study results generalizable (e.g. can the results be applied outside of the study setting and population(s)?).

- Determining validity can be a complex and nuanced task, but there are a few criteria and questions that can be used to assist in determining research validity. The set of questions, as well as an overview of levels of evidence, are below.

For a checklist that can help you evaluate a research article or report, use our checklist for Critically Evaluating a Research Article

- How to Critically Evaluate a Research Article

How to Read a Paper--Assessing the Value of Medical Research

Evaluating the evidence from medical studies can be a complex process, involving an understanding of study methodologies, reliability and validity, as well as how these apply to specific study types. While this can seem daunting, in a series of articles by Trisha Greenhalgh from BMJ, the author introduces the methods of evaluating the evidence from medical studies, in language that is understandable even for non-experts. Although these articles date from 1997, the methods the author describes remain relevant. Use the links below to access the articles.

- How to read a paper: Getting your bearings (deciding what the paper is about) Not all published research is worth considering. This provides an outline of how to decide whether or not you should consider a research paper. more... less... Greenhalgh, T. (1997b). How to read a paper. Getting your bearings (deciding what the paper is about). BMJ (Clinical Research Ed.), 315(7102), 243–246.

- Assessing the methodological quality of published papers This article discusses how to assess the methodological validity of recent research, using five questions that should be addressed before applying recent research findings to your practice. more... less... Greenhalgh, T. (1997a). Assessing the methodological quality of published papers. BMJ (Clinical Research Ed.), 315(7103), 305–308.

- How to read a paper. Statistics for the non-statistician. I: Different types of data need different statistical tests This article and the next present the basics for assessing the statistical validity of medical research. The two articles are intended for readers who struggle with statistics more... less... Greenhalgh, T. (1997f). How to read a paper. Statistics for the non-statistician. I: Different types of data need different statistical tests. BMJ (Clinical Research Ed.), 315(7104), 364–366.

- How to read a paper: Statistics for the non-statistician II: "Significant" relations and their pitfalls The second article on evaluating the statistical validity of a research article. more... less... Greenhalgh, T. (1997). Education and debate. how to read a paper: Statistics for the non-statistician. II: "significant" relations and their pitfalls. BMJ: British Medical Journal (International Edition), 315(7105), 422-425. doi: 10.1136/bmj.315.7105.422

- How to read a paper. Papers that report drug trials more... less... Greenhalgh, T. (1997d). How to read a paper. Papers that report drug trials. BMJ (Clinical Research Ed.), 315(7106), 480–483.

- How to read a paper. Papers that report diagnostic or screening tests more... less... Greenhalgh, T. (1997c). How to read a paper. Papers that report diagnostic or screening tests. BMJ (Clinical Research Ed.), 315(7107), 540–543.

- How to read a paper. Papers that tell you what things cost (economic analyses) more... less... Greenhalgh, T. (1997e). How to read a paper. Papers that tell you what things cost (economic analyses). BMJ (Clinical Research Ed.), 315(7108), 596–599.

- Papers that summarise other papers (systematic reviews and meta-analyses). Greenhalgh, T. (1997i). Papers that summarise other papers (systematic reviews and meta-analyses). BMJ (Clinical Research Ed.), 315(7109), 672–675.

- How to read a paper: Papers that go beyond numbers (qualitative research). A set of questions that could be used to analyze the validity of qualitative research. Greenhalgh, T., & Taylor, R. (1997). Papers that go beyond numbers (qualitative research). BMJ (Clinical Research Ed.), 315(7110), 740–743.

Levels of Evidence

In some journals, you will see a 'level of evidence' assigned to a research article. Levels of evidence are assigned to studies based on the methodological quality of their design, validity, and applicability to patient care. The combination of these attributes gives the level of evidence for a study. Many systems for assigning levels of evidence exist. A frequently used system in medicine is from the Oxford Centre for Evidence-Based Medicine. In nursing, the system for assigning levels of evidence is often taken from Melnyk & Fineout-Overholt's 2011 book, Evidence-based Practice in Nursing and Healthcare: A Guide to Best Practice. The Levels of Evidence below are adapted from Melnyk & Fineout-Overholt's (2011) model.

Uses of Levels of Evidence : Levels of evidence from one or more studies provide the "grade (or strength) of recommendation" for a particular treatment, test, or practice. Levels of evidence are reported for studies published in some medical and nursing journals. Levels of Evidence are most visible in Practice Guidelines, where the level of evidence is used to indicate how strong a recommendation for a particular practice is. This allows health care professionals to quickly ascertain the weight or importance of the recommendation in any given guideline. In some cases, levels of evidence in guidelines are accompanied by a Strength of Recommendation.

About Levels of Evidence and the Hierarchy of Evidence: While Levels of Evidence correlate roughly with the hierarchy of evidence (discussed elsewhere on this page), levels of evidence don't always match the categories from the Hierarchy of Evidence, reflecting the fact that study design alone doesn't guarantee good evidence. For example, systematic reviews and meta-analyses of randomized controlled trials (RCTs) are at the top of the evidence pyramid and are typically assigned the highest level of evidence, because the study design reduces the probability of bias (Melnyk, 2011), whereas the weakest level of evidence is the opinion of authorities and/or reports of expert committees. However, a systematic review may report very weak evidence for a particular practice, and therefore the level of evidence behind a recommendation may be lower than the position of the study type on the Pyramid/Hierarchy of Evidence.

About Levels of Evidence and Strength of Recommendation : The fact that a study is located lower on the Hierarchy of Evidence does not necessarily mean that the strength of recommendation made from that and other studies is low--if evidence is consistent across studies on a topic and/or very compelling, strong recommendations can be made from evidence found in studies with lower levels of evidence, and study types located at the bottom of the Hierarchy of Evidence. In other words, strong recommendations can be made from lower levels of evidence.

For example, a case series from 1961, in which two physicians noted a high incidence (approximately 20%) of birth defects among children born to mothers taking thalidomide, resulted in very strong recommendations against the prescription, and eventually the manufacture and marketing, of thalidomide. In other words, a strong recommendation was made on the basis of a study type that sits in one of the lowest positions on the hierarchy of evidence.

Hierarchy of Evidence for Quantitative Questions

The pyramid below represents the hierarchy of evidence, which illustrates the strength of study types; the higher the study type on the pyramid, the more likely it is that the research is valid. The pyramid is meant to assist researchers in prioritizing studies they have located to answer a clinical or practice question.

For clinical questions, you should try to find articles with the highest quality of evidence. Systematic Reviews and Meta-Analyses are considered the highest quality of evidence for clinical decision-making and should be used above other study types, whenever available, provided the Systematic Review or Meta-Analysis is fairly recent.

As you move up the pyramid, fewer studies are available, because the study designs become increasingly expensive for researchers to perform. It is important to recognize that high levels of evidence may not exist for your clinical question, due to both the cost of the research and the type of question you have. If the highest levels of study design from the evidence pyramid are unavailable for your question, you'll need to move down the pyramid.

While the pyramid of evidence can be helpful, individual studies--no matter the study type--must be assessed to determine their validity.

Hierarchy of Evidence for Qualitative Studies

Qualitative studies are not included in the Hierarchy of Evidence above. Since qualitative studies provide valuable evidence about patients' experiences and values, qualitative studies are important--even critically necessary--for Evidence-Based Nursing. Just like quantitative studies, qualitative studies are not all created equal. The pyramid below shows a hierarchy of evidence for qualitative studies.

Adapted from Daly et al. (2007)

Help with Research Terms & Study Types: Cut through the Jargon!

- CEBM Glossary

- Centre for Evidence-Based Medicine|Toronto

- Cochrane Collaboration Glossary

- Qualitative Research Terms (NHS Trust)


- Last Updated: Jan 12, 2024 10:03 AM

- URL: https://libguides.ecu.edu/ebn

Library Research Guides - University of Wisconsin Ebling Library


Nursing Resources : Levels of Evidence (I-VII)


Levels of Evidence

Rating System for the Hierarchy of Evidence: Quantitative Questions

- Level I: Evidence from a systematic review of all relevant randomized controlled trials (RCTs), or evidence-based clinical practice guidelines based on systematic reviews of RCTs

- Level II: Evidence obtained from at least one well-designed randomized controlled trial (RCT)

- Level III: Evidence obtained from well-designed controlled trials without randomization, quasi-experimental

- Level IV: Evidence from well-designed case-control and cohort studies

- Level V: Evidence from systematic reviews of descriptive and qualitative studies

- Level VI: Evidence from a single descriptive or qualitative study

- Level VII: Evidence from the opinion of authorities and/or reports of expert committees

Above information from "Evidence-based practice in nursing & healthcare: a guide to best practice" by Bernadette M. Melnyk and Ellen Fineout-Overholt. 2005, page 10.

Additional information can be found at: www.tnaonline.org/Media/pdf/present/conv-10-l-thompson.pdf
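The rating system above can be read as a simple lookup from study design to level. The following is a minimal Python sketch of that idea, not part of the Melnyk and Fineout-Overholt text: the design labels and function names are hypothetical shorthand chosen for illustration, and the level assigned here reflects study design only, not the quality of any individual study.

```python
# Hypothetical shorthand labels for the study designs named in Levels I-VII.
# These identifiers are assumptions for illustration, not a standard vocabulary.
LEVEL_BY_DESIGN = {
    "systematic_review_of_rcts": 1,
    "guideline_from_systematic_reviews": 1,
    "rct": 2,
    "controlled_trial_no_randomization": 3,
    "quasi_experimental": 3,
    "case_control": 4,
    "cohort": 4,
    "systematic_review_descriptive_qualitative": 5,
    "single_descriptive_study": 6,
    "single_qualitative_study": 6,
    "expert_opinion": 7,
}

ROMAN = {1: "I", 2: "II", 3: "III", 4: "IV", 5: "V", 6: "VI", 7: "VII"}


def evidence_level(design: str) -> str:
    """Return the level (I-VII) for a design label; raises KeyError if unknown."""
    return ROMAN[LEVEL_BY_DESIGN[design]]


def rank_studies(studies):
    """Sort (title, design) pairs so the highest level (Level I) comes first.

    sorted() is stable, so studies at the same level keep their input order.
    """
    return sorted(studies, key=lambda study: LEVEL_BY_DESIGN[study[1]])


# Made-up example titles, used only to show the ordering.
studies = [
    ("Expert panel statement", "expert_opinion"),
    ("Trial A", "rct"),
    ("Cohort B", "cohort"),
]

for title, design in rank_studies(studies):
    print(f"Level {evidence_level(design)}: {title}")
```

A ranking like this only helps prioritize what to read first; each study still needs to be appraised individually.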

Where to Find the Evidence?

Systematic research review

- Where are they found? Cochrane Library, PubMed, Joanna Briggs Institute

Clinical practice guidelines

- Where are they found? Many places! Don't get resources like MDConsult.

- National Guideline Clearinghouse (NGC) http://www.guideline.gov or choose "guideline" or "Practice Guidelines" within the Publication Type limit in PubMed or CINAHL.

- Current Practice Guidelines in Primary Care (AccessMedicine): This handy guide draws information from many sources of the latest guidelines for preventive services, screening methods, and treatment approaches commonly encountered in the outpatient setting.

- ClinicalKey also has a number of Guidelines: https://www-clinicalkey-com.ezproxy.library.wisc.edu/#!/browse/guidelines

Original research articles

- Where are they found? CINAHL, MEDLINE, Proquest Nursing & Allied Health, PsycINFO, PubMed
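The Publication Type limit mentioned above for PubMed can also be written directly into a search string. The sketch below builds, but does not send, a search URL for NCBI's E-utilities `esearch` endpoint. The guide itself describes using the limit in the PubMed interface, so the scripted approach, the function name, and the example topic are assumptions added for illustration.

```python
from urllib.parse import urlencode

# NCBI E-utilities search endpoint for Entrez databases such as PubMed.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def guideline_search_url(topic: str, max_results: int = 20) -> str:
    """Build a PubMed search URL restricted to guideline publication types.

    `topic` is free text supplied by the searcher (hypothetical example below).
    """
    term = (
        f"({topic}) AND "
        '("practice guideline"[Publication Type] OR "guideline"[Publication Type])'
    )
    params = {
        "db": "pubmed",         # search the PubMed database
        "term": term,           # query with the publication type filter
        "retmax": max_results,  # maximum number of record IDs to return
        "retmode": "json",      # ask for a JSON response
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


# Example topic chosen for illustration only.
print(guideline_search_url("pressure ulcer prevention"))
```

The same `term` string can be pasted into the PubMed search box, which is closer to the workflow the guide describes.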

Steps In Analyzing A Research Article

When choosing sources it is important for you to evaluate each one to ensure that you have the best quality source for your project. Here are common categories and questions for you to consider:

ABSTRACT

· Does the first sentence contain a clear statement of the purpose of the article (without starting with "The purpose of this article is to...")?

· Is the test population briefly described?

· Does it conclude with a statement of the experiment’s conclusions?

INTRODUCTION

· Does it properly introduce the subject?

· Does it clearly state the purpose of what is to follow?

· Does it briefly state why this report is different from previous publications?

METHODS AND MATERIALS

· Is the test population clearly stated? Is it appropriate for the experiment? Should it be larger? More comprehensive?

· Is the control population clearly stated? Are all variables controlled? Should it be larger? More comprehensive?

· Are methods clearly described or referenced so the experiment could be repeated?

· Are materials clearly described and when appropriate, manufacturers footnoted?

· Are all statements and descriptions concerning design of test and control populations and materials and methods included in this section?

RESULTS

· Are results for all parts of the experimental design provided?

· Are they clearly presented with supporting statistical analyses and/or charts and graphs when appropriate?

· Are results straightforwardly presented without a discussion of why they occurred?

· Are all statistical analyses appropriate for the situation and accurately performed?

DISCUSSION

· Are all results discussed?

· Are all conclusions based on sufficient data?

· Are appropriate previous studies integrated into the discussion section?

Northern Arizona University http://jan.ucc.nau.edu/pe/exs514web/How2Evalarticles.htm


- Last Updated: Mar 19, 2024 10:39 AM

- URL: https://researchguides.library.wisc.edu/nursing


- BMJ Glob Health

- v.4(Suppl 1); 2019

Synthesising quantitative and qualitative evidence to inform guidelines on complex interventions: clarifying the purposes, designs and outlining some methods

1 School of Social Sciences, Bangor University, Wales, UK

Andrew Booth

2 School of Health and Related Research (ScHARR), University of Sheffield, Sheffield, UK

Graham Moore